Gfs google-file-system-13331

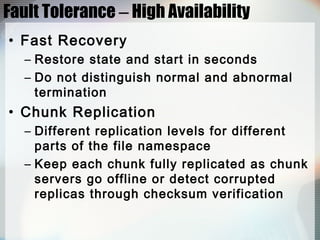

The Google File System (GFS) is a distributed file system designed by Google to provide reliable, scalable storage and high performance for large datasets and workloads. It runs on low-cost commodity hardware and is optimized for large files, streaming reads and writes, and high throughput. Key aspects of GFS include a single master node that manages metadata, files split into 64MB chunks that are distributed across multiple chunk servers, chunk replication for reliability, and optimization for large sequential reads and appends. GFS provides high availability, fault tolerance, and data integrity through replication, fast recovery, and checksum verification.
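As a rough illustration of the chunking and checksum ideas in the summary above, the sketch below maps a byte range of a file onto fixed-size 64MB chunks and checks a block of data against a stored checksum. It is a minimal sketch, not GFS code: the function names `chunk_index`, `split_range`, and `verify_block` are hypothetical, and the use of CRC-32 from Python's `zlib` is an assumption made only for the example.

```python
import zlib

# 64 MB chunk size, as stated in the summary above.
CHUNK_SIZE = 64 * 1024 * 1024


def chunk_index(offset: int) -> int:
    """Return the index of the chunk that holds the given byte offset."""
    return offset // CHUNK_SIZE


def split_range(offset: int, length: int) -> list[tuple[int, int, int]]:
    """Split a byte range into (chunk_index, offset_in_chunk, byte_count) pieces.

    A read or write that crosses a chunk boundary becomes one piece per chunk.
    """
    pieces = []
    end = offset + length
    while offset < end:
        within = offset % CHUNK_SIZE
        count = min(CHUNK_SIZE - within, end - offset)
        pieces.append((offset // CHUNK_SIZE, within, count))
        offset += count
    return pieces


def verify_block(data: bytes, expected_checksum: int) -> bool:
    """Check a data block against a stored checksum (CRC-32 is an assumption)."""
    return zlib.crc32(data) == expected_checksum


if __name__ == "__main__":
    # A 30-byte read starting 10 bytes before the end of chunk 0 spans two chunks.
    print(split_range(CHUNK_SIZE - 10, 30))
    block = b"example block"
    print(verify_block(block, zlib.crc32(block)))
```

In this sketch a client-side library would ask the master only for the location of each chunk index and then contact the servers holding those chunks directly, which is the division of labor the summary describes.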

Recommended

Cloud infrastructure. Google File System and MapReduce - Andrii Vozniuk

Andrii Vozniuk: My presentation for the Cloud Data Management course at EPFL by Anastasia Ailamaki and Christoph Koch.

It is mainly based on the following two papers:

1) S. Ghemawat, H. Gobioff, S. Leung. The Google File System. SOSP, 2003

2) J. Dean, S. Ghemawat. MapReduce: Simplified Data Processing on Large Clusters. OSDI, 2004

Seminar Report on Google File System

Vishal Polley: Google has designed and implemented a scalable distributed file system for its large, distributed, data-intensive applications. It is named the Google File System (GFS).

Google File System

Amir Payberah: The document describes the Google File System (GFS). GFS is a distributed file system that runs on top of commodity hardware. It addresses problems with scaling to very large datasets and files by splitting files into large chunks (64MB or 128MB) and replicating chunks across multiple machines. The key components of GFS are the master, which manages metadata and chunk placement, chunkservers, which store chunks, and clients, which access chunks. The master handles operations like namespace management, replica placement, garbage collection and stale replica detection to provide a fault-tolerant filesystem.

GOOGLE FILE SYSTEM

JYoTHiSH o.s: Designed by Sanjay Ghemawat, Howard Gobioff and Shun-Tak Leung of Google in 2002-03.

Provides fault tolerance while serving a large number of clients with high aggregate performance.

Google's business extends beyond search.

Google stores its data on more than 15 thousand commodity machines.

Handles exceptional conditions and other Google-specific challenges in its distributed file system.

GFS

Suman Karumuri: Google File System (GFS) is a distributed file system designed for large streaming reads and appends on inexpensive commodity hardware. It uses a master-chunk server architecture to manage the placement of large files across multiple machines, provides fault tolerance through replication and versioning, and aims to balance high throughput and availability even in the presence of frequent failures. The consistency model allows for defined and undefined regions to support the needs of batch-oriented, data-intensive applications like MapReduce.

gfs-sosp2003

Hiroshi Ono: The Google File System is a scalable distributed file system designed to meet the rapidly growing data storage needs of Google. It provides fault tolerance on inexpensive commodity hardware and high aggregate performance to large numbers of clients. Key aspects of its design include handling frequent component failures as the norm, managing huge files up to multiple gigabytes in size containing many objects, optimizing for file appending and sequential reads of appended data, and co-designing the file system interface to increase flexibility for applications. The largest deployment to date includes over 1,000 storage nodes providing hundreds of terabytes of storage.

Advance google file system

Lalit Rastogi: This document summarizes the Google File System (GFS). It describes the key components of GFS including data flow, master operation for namespace management and locking, metadata management, enhanced operations like atomic record append and snapshots, garbage collection, and conclusions. The master manages metadata and locks for operations while data blocks are stored across chunkservers. GFS provides an incremental, fault-tolerant storage solution to meet Google's requirements.

Google file system

Lalit Rastogi: The document describes Google File System (GFS), which was designed by Google to store and manage large amounts of data across thousands of commodity servers. GFS consists of a master server that manages metadata and namespace, and chunkservers that store file data blocks. The master monitors chunkservers and maintains replication of data blocks for fault tolerance. GFS uses a simple design to allow it to scale incrementally with growth while providing high reliability and availability through replication and fast recovery from failures.

Cluster based storage - Nasd and Google file system - advanced operating syst...

Antonio Cesarano: A seminar for the Advanced Operating Systems course at the University of Salerno, covering the first cluster-based storage technology (NASD) and its evolution up to the development of the Google File System.

Google File System

guest2cb4689: This document provides an overview of the Google File System (GFS). It describes the key components of GFS including the master server, chunkservers, and clients. The master manages metadata like file namespaces and chunk mappings. Chunkservers store file data in 64MB chunks that are replicated across servers. Clients read and write chunks through the master and chunkservers. GFS provides high throughput and fault tolerance for Google's massive data storage and analysis needs.

Google File System

Junyoung Jung: The document summarizes the Google File System (GFS). It discusses the key points of GFS's design including:

- Files are divided into fixed-size 64MB chunks for efficiency.

- Metadata is stored on a master server while data chunks are stored on chunkservers.

- The master manages file system metadata and chunk locations while clients communicate with both the master and chunkservers.

- GFS provides features like leases to coordinate updates, atomic appends, and snapshots for consistency and fault tolerance.

Google File System

Amgad Muhammad: GFS is a distributed file system designed by Google to store and manage large files on commodity hardware. It is optimized for large streaming reads and writes, with files divided into 64MB chunks that are replicated across multiple servers. The master node manages metadata like file mappings and chunk locations, while chunk servers store and serve data to clients. The system is designed to be fault-tolerant by detecting and recovering from frequent hardware failures.

The Google file system

Sergio Shevchenko: The Google File System was designed by Google to store and manage large files across thousands of commodity servers. It uses a single master to manage metadata and track file locations across chunkservers. Chunks are replicated for reliability and placed across racks to improve bandwidth utilization. The system provides high throughput for concurrent reads and writes through leases to maintain consistency and pipelining of data flows. Logs and replication are used to provide fault tolerance against server failures.

Replication, Durability, and Disaster Recovery

Steven Francia: This session introduces the basic components of high availability before going into a deep dive on MongoDB replication. We'll explore some of the advanced capabilities with MongoDB replication and best practices to ensure data durability and redundancy. We'll also look at various deployment scenarios and disaster recovery configurations.

The Google File System (GFS)

Romain Jacotin: This document summarizes a lecture on the Google File System (GFS). Some key points:

1. GFS was designed for large files and high scalability across thousands of servers. It uses a single master and multiple chunkservers to store and retrieve large file chunks.

2. Files are divided into 64MB chunks which are replicated across servers for reliability. The master manages metadata and chunk locations while clients access chunkservers directly for reads/writes.

3. Atomic record appends allow efficient concurrent writes. Snapshots create instantly consistent copies of files. Leases and replication order ensure consistency across servers.

advanced Google file System

diptipan: The Google File System (GFS) is a distributed file system designed to provide efficient, reliable access to data stored on commodity hardware. It consists of a single master node that manages metadata and chunk replication, and multiple chunkserver nodes that store file data in chunks. The master maintains metadata mapping files to variable-sized chunks, which are replicated across servers for fault tolerance. It performs tasks like chunk creation, rebalancing, and garbage collection to optimize storage usage and availability. Data flows linearly through servers to minimize latency. The system provides high throughput, incremental growth, and fault tolerance for Google's large-scale computing needs.

Google File Systems

Azeem Mumtaz: The Google File System is a distributed file system designed by Google to provide scalability, fault tolerance, and high performance on commodity hardware. It uses a master-slave architecture with one master and multiple chunkservers. Files are divided into 64MB chunks which are replicated across servers. The master maintains metadata and controls operations like replication and load balancing. Writes are replicated to replicas in order by the primary chunkserver holding the lease. The system provides high availability and reliability through replication and fast recovery from failures. It has been shown to achieve high throughput for Google's large-scale data processing workloads.

Google file system

Dhan V Sagar: The document summarizes Google File System (GFS), which was designed to provide reliable, scalable storage for Google's large data processing needs. GFS uses a master server to manage metadata and chunk servers to store file data in large chunks (64MB). It replicates chunks across multiple servers for reliability. The architecture supports high throughput by minimizing interaction between clients and the master, and allowing clients to read from the closest replica of a chunk.

google file system

diptipan: The document describes Google File System (GFS), which was designed by Google to store and manage large amounts of data across thousands of commodity servers. GFS consists of a master server that manages metadata and namespace, and chunkservers that store file data blocks. The master monitors chunkservers and maintains replication of data blocks for fault tolerance. GFS uses a simple design to allow it to scale incrementally with growth while providing high reliability and availability through replication and fast recovery from failures.

Google file system

Ankit Thiranh: A brief description of the Google File System, presented as a summary of the research paper written about it.

Google

rpaikrao: The Google File System (GFS) is designed for large datasets and frequent component failures. It uses a single master node to track metadata for files broken into large chunks and stored across multiple chunkservers. The design prioritizes high throughput for large streaming reads and writes over small random access. Fault tolerance is achieved through replicating chunks across servers and recovering lost data from logs.

Google file system

Roopesh Jhurani: The document describes the Google File System (GFS), which was developed by Google to handle its large-scale distributed data and storage needs. GFS uses a master-slave architecture with the master managing metadata and chunk servers storing file data in 64MB chunks that are replicated across machines. It is designed for high reliability and scalability, handling failures through replication and fast recovery. Measurements show it can deliver high throughput to many concurrent readers and writers.

Gfs介绍 (Introduction to GFS)

yiditushe: GFS is a file system designed by Google to share data across large clusters of commodity servers and PCs. It uses a master server to manage metadata and chunk servers to store and serve file data in 64MB chunks. The design aims to detect and recover from failures automatically while supporting large files, streaming reads and writes, and concurrent appends across multiple clients. The client API mimics UNIX but provides only append semantics without consistency guarantees between clients.

The Chubby lock service for loosely-coupled distributed systems

Ioanna Tsalouchidou: The document describes Chubby, a lock service developed by Google for loosely coupled distributed systems. Chubby uses a consensus protocol to elect a master from replicas and provides coarse-grained locking. It interfaces like a file system but stores ephemeral and permanent nodes. Clients cache data and use handles to subscribe to events. Chubby scales through techniques like reducing communication and partitioning while its usage includes locking, naming, and master election in distributed systems like GFS and Bigtable.

Google File System

DreamJobs1: The document discusses the Google File System (GFS), which was developed by Google to handle large files across thousands of commodity servers. It provides three main functions: (1) dividing files into chunks and replicating chunks for fault tolerance, (2) using a master server to manage metadata and coordinate clients and chunkservers, and (3) prioritizing high throughput over low latency. The system is designed to reliably store very large files and enable high-speed streaming reads and writes.

Google file system GFS

zihad164: Google File System is a distributed file system developed by Google to provide efficient and reliable access to large amounts of data across clusters of commodity hardware. It organizes clusters into clients that interface with the system, master servers that manage metadata, and chunkservers that store and serve file data replicated across multiple machines. Updates are replicated for fault tolerance, while the master and chunkservers work together for high performance streaming and random reads and writes of large files.

Google File System

nadikari123: The Google File System (GFS) is a distributed file system designed to provide efficient, reliable access to data for Google's applications processing large datasets. GFS uses a master-slave architecture, with the master managing metadata and chunk servers storing file data in 64MB chunks replicated across machines. The system provides fault tolerance through replication, fast recovery of failed components, and logging of metadata operations. Performance testing showed it could support write rates of 30MB/s and recovery of 600GB of data from a failed chunk server in under 25 minutes. GFS delivers high throughput to concurrent users through its distributed architecture and replication of data.

Unit 2.pptx

PriyankaAher11: This document discusses different types of data centers and virtualization technology. It defines a data center as a facility used to house computer systems and components. There are several types of data centers including colocation, managed, enterprise, and cloud. Colocation centers rent rack space, managed centers are fully maintained by a provider, enterprise centers are private facilities for a single company, and cloud centers are infrastructure owned by cloud providers. The document also explains that virtualization allows sharing physical resources among multiple organizations through techniques like OS, server, hardware, and storage virtualization.

GFS - Google File System

tutchiio: The Google File System (GFS) is designed to provide reliable, scalable storage for large files on commodity hardware. It uses a single master server to manage metadata and coordinate replication across multiple chunk servers. Files are split into 64MB chunks which are replicated across servers and stored as regular files. The system prioritizes high throughput over low latency and provides fault tolerance through replication and checksumming to detect data corruption.

HDFS_architecture.ppt

vijayapraba1: Hadoop is a framework for distributed storage and processing of large datasets across clusters of commodity hardware. It includes HDFS, a distributed file system, and MapReduce, a programming model for large-scale data processing. HDFS stores data reliably across clusters and allows computations to be processed in parallel near the data. The key components are the NameNode, DataNodes, JobTracker and TaskTrackers. HDFS provides high throughput access to application data and is suitable for applications handling large datasets.

More Related Content

Similar to Gfs google-file-system-13331 (20)

Cloud computing UNIT 2.1 presentation in

RahulBhole12: Cloud storage allows users to store files online through cloud storage providers like Apple iCloud, Dropbox, Google Drive, Amazon Cloud Drive, and Microsoft SkyDrive. These providers offer various amounts of free storage and options to purchase additional storage. They allow files to be securely uploaded, accessed, and synced across devices. The best cloud storage provider depends on individual needs and preferences regarding storage space requirements and features offered.

(ATS6-PLAT06) Maximizing AEP Performance

BIOVIA: How to get the maximum performance from your AEP server. This talk discusses ways to improve the execution time of short-running jobs and how to properly configure the server depending on the expected number of users as well as the average size and duration of individual jobs. It includes examples of job pooling, database connection sharing, and parallel subprotocol tuning. It also covers when to use cluster, grid, or load-balanced configurations, along with memory and CPU sizing guidelines.

(ATS4-PLAT08) Server Pool Management

BIOVIA: To obtain the best possible performance from your AEP server, the core architecture provides methods to reuse job processes multiple times. This talk covers how the mechanism functions, what performance improvements you might expect, what potential problems you might encounter, how to use pooling in protocols and applications, and how administrators or package developers can configure and debug specialized job pools for their particular applications.

Ch8 main memory

Ch8 main memoryWelly Dian Astika This document provides an overview of memory management techniques in operating systems. It discusses contiguous memory allocation, segmentation, paging, and swapping. Contiguous allocation allocates processes to contiguous sections of memory which can lead to fragmentation issues. Segmentation divides memory into logical segments defined by segment tables. Paging divides memory into fixed-size pages and uses page tables to map virtual to physical addresses, avoiding external fragmentation. Swapping moves processes between main memory and disk to allow more processes to reside in memory than will physically fit. The document describes the hardware and data structures used to implement these techniques.

Gfs final

Gfs finalAmitSaha123 Google is a multi-billion dollar company. It's one of the big power players on the World Wide Web and beyond. The company relies on a distributed computing system to provide users with the infrastructure they need to access, create and alter data.

Surely Google buys state-of-the-art computers and servers to keep things running smoothly, right?

Wrong. The machines that power Google's operations aren't cutting-edge power computers with lots of bells and whistles. In fact, they're relatively inexpensive machines running on Linux operating systems. How can one of the most influential companies on the Web rely on cheap hardware? It's due to the Google File System (GFS), which capitalizes on the strengths of off-the-shelf servers while compensating for any hardware weaknesses. It's all in the design.

Google uses the GFS to organize and manipulate huge files and to allow application developers the research and development resources they require. The GFS is unique to Google and isn't for sale. But it could serve as a model for file systems for organizations with similar needs.

Toronto High Scalability meetup - Scaling ELK

Toronto High Scalability meetup - Scaling ELKAndrew Trossman The document discusses scaling logging and monitoring infrastructure at IBM. It describes:

1) User scenarios that generate varying amounts of log data, from small internal groups generating 3-5 TB/day to many external users generating kilobytes to gigabytes per day.

2) The architecture uses technologies like OpenStack, Docker, Kafka, Logstash, Elasticsearch, Grafana to process and analyze logs and metrics.

3) Key aspects of scaling include automating deployments with Heat and Ansible, optimizing components like Logstash and Elasticsearch, and techniques like sharding indexes across multiple nodes.

The Google Chubby lock service for loosely-coupled distributed systems

The Google Chubby lock service for loosely-coupled distributed systemsRomain Jacotin The Google Chubby lock service presented in 2006 is the inspiration for Apache ZooKeeper: let's take a deep dive into Chubby to better understand ZooKeeper and distributed consensus.

Lecture-7 Main Memroy.pptx

Lecture-7 Main Memroy.pptxAmanuelmergia Operating systems use main memory management techniques like paging and segmentation to allocate memory to processes efficiently. Paging divides both logical and physical memory into fixed-size pages. It uses a page table to map logical page numbers to physical frame numbers. This allows processes to be allocated non-contiguous physical frames. A translation lookaside buffer (TLB) caches recent page translations to improve performance by avoiding slow accesses to the page table in memory. Protection bits and valid/invalid bits ensure processes only access their allocated memory regions.

Big Data for QAs

Big Data for QAsAhmed Misbah This presentation introduces the Big Data topic to Software Quality Assurance Engineers. It can also be useful for Software Developers and other software professionals.

Operating systems- Main Memory Management

Operating systems- Main Memory ManagementDr. Chandrakant Divate Operating systems- Main Memory Management

08 operating system support

08 operating system supportSher Shah Merkhel This chapter discusses operating system support and functions including program creation, execution, I/O access, file access, system access, error handling, and accounting. It covers the evolution of operating systems from early single-program systems with no OS to modern time-sharing systems. Key topics include memory management techniques like paging, segmentation, and virtual memory which allow more efficient use of system resources through processes and virtual address translation.

Memory Management.pdf

Memory Management.pdfSujanTimalsina5 This chapter discusses various memory management techniques used in computer systems, including segmentation, paging, and swapping. Segmentation divides memory into variable-length logical segments, while paging divides it into fixed-size pages that can be mapped to non-contiguous physical frames. Paging requires a page table to map virtual to physical addresses and allows processes to exceed physical memory by swapping pages to disk. The chapter describes address translation hardware like TLBs, protection mechanisms, and issues like fragmentation.

Tuning Linux for MongoDB

Tuning Linux for MongoDBTim Vaillancourt This document discusses how to tune Linux for optimal MongoDB performance. Key points include setting ulimits to allow for many processes and open files, disabling transparent huge pages, using the deadline IO scheduler, setting the dirty ratio and swappiness low, and ensuring consistent clocks with NTP. Monitoring tools like Percona PMM or Prometheus with Grafana dashboards can help analyze MongoDB and system metrics.

Introduction to distributed file systems

Introduction to distributed file systemsViet-Trung TRAN This document discusses distributed file systems. It begins by defining key terms like filenames, directories, and metadata. It then describes the goals of distributed file systems, including network transparency, availability, and access transparency. The document outlines common distributed file system architectures like client-server and peer-to-peer. It also discusses specific distributed file systems like NFS, focusing on their protocols, caching, replication, and security considerations.

Exchange Server 2013 : les mécanismes de haute disponibilité et la redondance...

Exchange Server 2013 : les mécanismes de haute disponibilité et la redondance...Microsoft Technet France La nouvelle version d'Exchange Server 2013 intègre une foule de nouveautés lui permettant d'être aujourd'hui le serveur de messagerie le plus sécurisé et le plus fiable sur le marché. L'expérience acquise par la gestion des solutions de messagerie Cloud par les équipes Microsoft a été directement intégrée dans cette nouvelle version du produit ce qui va vous permettre la mise en place d'un système de messagerie ultra résilient. Scott Schnoll, Principal Technical Writer dans l'équipe Exchange à Microsoft Corp va vous expliquer de manière didactique l'ensemble des mécanismes de haute disponibilité et les solutions de resilience inter sites dans les plus petits détails. Venez apprendre directement par l'expert qui a travaillé sur ces sujets chez Microsoft ! Attention, session très technique, en anglais.

Hadoop

HadoopGirish Khanzode Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It implements Google's MapReduce programming model and the Hadoop Distributed File System (HDFS) for reliable data storage. Key components include a JobTracker that coordinates jobs, TaskTrackers that run tasks on worker nodes, and a NameNode that manages the HDFS namespace and DataNodes that store application data. The framework provides fault tolerance, parallelization, and scalability.

08 operating system support

08 operating system supportAnwal Mirza The document summarizes key concepts from Chapter 8 of William Stallings' Computer Organization and Architecture textbook. It discusses the objectives and functions of operating systems, including convenience, efficiency, and acting as a resource manager. It describes different types of operating systems and early batch processing systems. It also provides overviews of memory management techniques like paging, segmentation, virtual memory, and demand paging. Process scheduling and different approaches to memory allocation are summarized as well.

Exchange Server 2013 : les mécanismes de haute disponibilité et la redondance...

Exchange Server 2013 : les mécanismes de haute disponibilité et la redondance...Microsoft Technet France

Ad

Gfs google-file-system-13331

- 1. The Google File System. Tut Chi Io (modified by Fengchang)

- 2. What Is GFS • Google File System (GFS) • Scalable distributed file system (DFS) • Fault tolerance • Reliability • Scalability • Availability and performance for large networks of connected nodes

- 3. What Is GFS • Built from low-cost commodity hardware components • Optimized to accommodate Google's different data use and storage needs • Capitalizes on the strengths of off-the-shelf servers while minimizing hardware weaknesses

- 4. Design Overview – Assumptions • Inexpensive commodity hardware • Large files: multi-GB • Workloads – Large streaming reads – Small random reads – Large, sequential appends • Concurrent appends to the same file • High sustained throughput matters more than low latency

- 5. Design Overview – Interface • Create • Delete • Open • Close • Read • Write • Snapshot • Record Append

- 6. What does it look like

- 7. Design Overview – Architecture • Single master, multiple chunk servers, multiple clients – Each is a user-level process running on a commodity Linux machine – GFS client code is linked into each client application to communicate with the master and chunk servers • File -> 64MB chunks -> Linux files – stored on local disks of chunk servers – replicated on multiple chunk servers (3 replicas) • Clients cache metadata but not chunk data
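The "File -> 64MB chunks" mapping above can be sketched in a few lines. This is an illustrative sketch only: the function names `locate` and `chunks_for_range` are hypothetical and not part of any GFS API; the only value taken from the slide is the 64MB chunk size.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB, the chunk size stated on the slide


def locate(offset: int) -> tuple[int, int]:
    """Map a byte offset in a file to (chunk index, offset inside that chunk)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return divmod(offset, CHUNK_SIZE)


def chunks_for_range(offset: int, length: int) -> list[int]:
    """Return the index of every chunk touched by a read of `length` bytes."""
    if length <= 0:
        return []
    first, _ = locate(offset)
    last, _ = locate(offset + length - 1)
    return list(range(first, last + 1))
```

A client would use the chunk index (together with the file name) when asking the master where the chunk's replicas live, which is why a large chunk size means fewer such requests.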

- 8. Design Overview – Single Master • Why centralization? Simplicity! • Global knowledge is needed for – Chunk placement – Replication decisions

- 9. Design Overview – Chunk Size • 64MB – Much larger than typical file system block sizes. Why? – Advantages • Reduces client-master interaction • Reduces network overhead • Reduces the size of the metadata – Disadvantages • Internal fragmentation – Solution: lazy space allocation • Hot spots – many clients accessing a 1-chunk file, e.g. executables – Solutions: – Higher replication factor – Stagger application start times – Client-to-client communication

- 10. Design Overview – Metadata • File & chunk namespaces – In master’s memory – In master’s and chunk servers’ storage • File-chunk mapping – In master’s memory – In master’s and chunk servers’ storage • Location of chunk replicas – In master’s memory only (not persisted) – Master asks chunk servers when • Master starts • Chunk server joins the cluster – If locations were persistent, master and chunk servers would have to be kept in sync

- 11. Design Overview – Metadata – In-Memory Data Structures • Why in-memory data structures for the master? – Fast, which helps the periodic scans for garbage collection (GC) and load balancing (LB) • Will it limit the number of chunks and therefore total capacity? – No, a 64MB chunk needs less than 64B of metadata (640TB needs less than 640MB) • Most chunks are full • Prefix compression on file names
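The capacity claim on this slide is simple arithmetic and can be checked directly. The sketch below assumes binary units (1 MB = 2^20 bytes) and treats 64 bytes per chunk as the upper bound quoted on the slide; the function name is hypothetical.

```python
MB = 1024 ** 2
TB = 1024 ** 4

CHUNK_SIZE = 64 * MB        # chunk size from the slide
METADATA_PER_CHUNK = 64     # bytes per chunk; the slide's upper bound


def master_metadata_bytes(total_data_bytes: int) -> int:
    """Upper bound on master memory needed to track `total_data_bytes` of data."""
    chunks = -(-total_data_bytes // CHUNK_SIZE)  # ceiling division
    return chunks * METADATA_PER_CHUNK


# 640 TB of file data is about 10 million chunks, so at most 640 MB of metadata.
print(master_metadata_bytes(640 * TB) // MB, "MB")
```

The ratio is fixed at one million to one (64MB of data per 64B of metadata), so master memory grows slowly compared with cluster capacity.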

- 12. Design Overview – Metadata – Log • The only persistent record of metadata • Defines the order of concurrent operations • Critical – Replicated on multiple remote machines – Respond to the client only after the log record is flushed locally and remotely • Fast recovery by using checkpoints – Checkpoints use a compact B-tree-like form that maps directly into memory – Switch to a new log and create the new checkpoint in a separate thread

- 13. Design Overview – Consistency Model • Consistent – All clients will see the same data, regardless of which replicas they read from • Defined – Consistent, and clients will see what the mutation writes in its entirety

- 14. Design Overview – Consistency Model • After a sequence of successful mutations, the mutated region is guaranteed to be defined – Same mutation order on all replicas – Chunk version numbers detect stale replicas • What if a client caches stale chunk locations? – The window is limited by the cache entry’s timeout – Most files are append-only • A stale replica usually returns a premature end of chunk rather than outdated data

- 15. System Interactions – Lease • Minimizes management overhead at the master • Granted by the master to one of the replicas, which becomes the primary • Primary picks a serial order for mutations and all replicas follow it • 60-second timeout, can be extended • Can be revoked
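The lease rules on this slide (one primary per chunk, a 60-second timeout, extension, revocation) can be modeled with a small table. This is a minimal sketch under stated assumptions: `LeaseTable` and its methods are hypothetical names, and time is passed in explicitly as `now` so the behavior is easy to follow.

```python
from dataclasses import dataclass

LEASE_SECONDS = 60.0  # timeout from the slide


@dataclass
class Lease:
    primary: str
    expires_at: float


class LeaseTable:
    """Hypothetical master-side bookkeeping: at most one live lease per chunk."""

    def __init__(self):
        self._leases = {}

    def grant(self, chunk: str, replica: str, now: float) -> Lease:
        current = self._leases.get(chunk)
        if current is not None and current.expires_at > now:
            return current  # an unexpired lease already names the primary
        lease = Lease(replica, now + LEASE_SECONDS)
        self._leases[chunk] = lease
        return lease

    def extend(self, chunk: str, replica: str, now: float) -> bool:
        lease = self._leases.get(chunk)
        if lease is None or lease.primary != replica or lease.expires_at <= now:
            return False
        lease.expires_at = now + LEASE_SECONDS
        return True

    def revoke(self, chunk: str) -> None:
        self._leases.pop(chunk, None)
```

Because a lease expires on its own, the master can safely pick a new primary after the timeout even if it has lost contact with the old one.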

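- 16. System Interactions – Mutation Order (diagram) • 1. Client asks the master for the current lease holder • 2. Master replies with the identity of the primary and the locations of the replicas (cached by the client) • 3a, 3b, 3c. Client pushes the data to all replicas • 4. Client sends the write request to the primary; the primary assigns serial numbers to mutations and applies them • 5. Primary forwards the write request to the secondaries • 6. Secondaries reply to the primary: operation completed • 7. Primary replies to the client: operation completed, or an error report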

- 17. System Interactions – Data Flow • Decouple data flow and control flow • Control flow – Master -> Primary -> Secondaries • Data flow – Carefully picked chain of chunk servers • Forward to the closest first • Distances estimated from IP addresses – Linear (not tree), to fully utilize outbound bandwidth (not divided among recipients) – Pipelining, to exploit full-duplex links • Time to transfer B bytes to R replicas = B/T + RL • T: network throughput, L: latency
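The formula B/T + RL above is easy to evaluate. The sketch below takes T in bytes per second so that it matches the slide term by term. The store-and-forward function is an added comparison, not something from the slides; it assumes each hop waits for the whole payload before forwarding.

```python
def pipelined_transfer_seconds(size_bytes: float, throughput: float,
                               replicas: int, latency: float) -> float:
    """Ideal time B/T + R*L for a linear, pipelined push to `replicas` replicas.

    throughput is in bytes per second, latency in seconds per hop.
    """
    return size_bytes / throughput + replicas * latency


def store_and_forward_seconds(size_bytes: float, throughput: float,
                              replicas: int, latency: float) -> float:
    """Comparison case (an assumption, not from the slides): no pipelining,
    so every hop pays the full B/T before the next hop starts."""
    return replicas * (size_bytes / throughput + latency)


# Example values: 1 MB over 100 Mbps links (12.5e6 bytes/s), 3 replicas,
# 1 ms latency per hop.
fast = pipelined_transfer_seconds(1_000_000, 12_500_000, 3, 0.001)
slow = store_and_forward_seconds(1_000_000, 12_500_000, 3, 0.001)
print(f"pipelined: {fast * 1000:.0f} ms, store-and-forward: {slow * 1000:.0f} ms")
```

With pipelining, the B/T term is paid once regardless of the number of replicas; only the small latency term scales with R.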

- 18. System Interactions – Atomic Record Append • Concurrent appends are serializable – Client specifies only the data, not the offset – GFS appends the data at least once atomically – Returns the offset to the client – Heavily used by Google to use files as • multiple-producer/single-consumer queues • Merged results from many different clients – On failures, the client retries the operation – Successfully appended data are defined; intervening regions are inconsistent • A reader can identify and discard extra padding and record fragments using the checksums

- 19. System Interactions – Snapshot • Makes a copy of a file or a directory tree almost instantaneously • Uses copy-on-write • Steps – Revokes outstanding leases – Logs the operation to disk – Duplicates metadata, pointing to the same chunks • On the first write after the snapshot, the chunk server creates a real duplicate locally – Disks are 3 times as fast as 100 Mb Ethernet links

- 20. Master Operation – Namespace Management • No per-directory data structure • No support for aliases • Locks over regions of the namespace ensure proper serialization • Lookup table mapping full pathnames to metadata – Prefix compression -> fits in memory

- 21. Master Operation – Namespace Locking • Each node (file/directory) has a read-write lock • Scenario: prevent /home/user/foo from being created while /home/user is being snapshotted to /save/user – Snapshot • Read locks on /home, /save • Write locks on /home/user, /save/user – Create • Read locks on /home, /home/user • Write lock on /home/user/foo
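The scenario on this slide can be reproduced by computing the lock set each operation needs and checking where the two sets clash. This is an illustrative sketch: `lock_set`, `merge`, and `conflicts` are hypothetical helpers, and locks are modeled as plain `"r"` / `"w"` labels rather than real lock objects.

```python
def lock_set(path: str, leaf_mode: str = "w") -> dict[str, str]:
    """Read locks on every ancestor directory, `leaf_mode` on the path itself."""
    parts = path.strip("/").split("/")
    locks = {}
    for i in range(1, len(parts)):
        locks["/" + "/".join(parts[:i])] = "r"
    locks["/" + "/".join(parts)] = leaf_mode
    return locks


def merge(*lock_sets: dict[str, str]) -> dict[str, str]:
    """Combine lock sets for one operation; a write lock wins over a read lock."""
    merged = {}
    for locks in lock_sets:
        for name, mode in locks.items():
            if merged.get(name) != "w":
                merged[name] = mode
    return merged


def conflicts(a: dict[str, str], b: dict[str, str]) -> list[str]:
    """Names locked by both operations where at least one side wants to write."""
    return sorted(n for n in a.keys() & b.keys() if "w" in (a[n], b[n]))


snapshot = merge(lock_set("/home/user"), lock_set("/save/user"))
create = lock_set("/home/user/foo")
print(conflicts(snapshot, create))  # the two operations clash on /home/user
```

The snapshot holds a write lock on /home/user while the create needs a read lock on it, so the two are serialized. Two creates in the same directory only take read locks on the directory, so in this model they do not conflict.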

- 22. Master Operation – Policies • New chunk creation policy – Place new replicas on chunk servers with below-average disk utilization – Limit the number of “recent” creations on each chunk server – Spread replicas of a chunk across racks • Re-replication priority – Chunks furthest from their replication goal first – Chunks that are blocking a client first – Live files first (rather than deleted ones) • Rebalance replicas periodically
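The three re-replication factors above can be expressed as a sort key. The slide lists the factors but not how they are combined, so the ordering used here (blocking chunks first, then live files before deleted ones, then largest replica deficit) is an assumption, as are the names `ChunkState` and `rereplication_order`.

```python
from dataclasses import dataclass


@dataclass
class ChunkState:
    handle: str
    goal: int                    # desired number of replicas
    live_replicas: int           # replicas currently available
    blocking_client: bool = False
    file_deleted: bool = False


def rereplication_order(chunks: list[ChunkState]) -> list[str]:
    """Return handles of under-replicated chunks, most urgent first."""
    needy = [c for c in chunks if c.live_replicas < c.goal]
    needy.sort(key=lambda c: (
        not c.blocking_client,            # chunks blocking a client come first
        c.file_deleted,                   # live files before deleted files
        -(c.goal - c.live_replicas),      # larger deficit before smaller
    ))
    return [c.handle for c in needy]
```

Chunks that already meet their replication goal are filtered out before sorting, since they need no work.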

- 23. Master Operation – Garbage Collection • Lazy reclamation – Logs deletion immediately – Rename to a hidden name • Remove 3 days later • Undelete by renaming back • Regular scan for orphaned chunks – Not garbage: • All references to chunks: file-chunk mapping • All chunk replicas: Linux files under designated directory on each chunk server – Erase metadata – HeartBeat message to tell chunk servers to delete chunks
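Lazy reclamation as described above can be sketched with two tables: visible files and hidden (deleted) files. The class and method names are hypothetical, hidden files are keyed by their original name instead of a real hidden filename, and timestamps are passed in explicitly; only the 3-day grace period comes from the slide.

```python
GRACE_SECONDS = 3 * 24 * 3600  # "Remove 3 days later"


class MasterNamespace:
    """Toy model of lazy deletion and orphaned-chunk detection."""

    def __init__(self):
        self.files = {}    # visible name -> set of chunk handles
        self.hidden = {}   # deleted name -> (deletion time, chunk handles)

    def create(self, name, chunks):
        self.files[name] = set(chunks)

    def delete(self, name, now):
        # Deletion only hides the file; its chunks stay referenced.
        self.hidden[name] = (now, self.files.pop(name))

    def undelete(self, name):
        _, chunks = self.hidden.pop(name)
        self.files[name] = chunks

    def scan_namespace(self, now):
        """Erase metadata of hidden files older than the grace period."""
        expired = [n for n, (t, _) in self.hidden.items()
                   if now - t >= GRACE_SECONDS]
        for name in expired:
            del self.hidden[name]
        return expired

    def orphans(self, reported):
        """Chunks a chunk server reports that no file references any more."""
        referenced = set()
        for chunks in self.files.values():
            referenced |= chunks
        for _, chunks in self.hidden.values():
            referenced |= chunks
        return set(reported) - referenced
```

In this model the result of `orphans` is what the master would tell a chunk server it may delete in reply to a HeartBeat message.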

- 24. Master Operation – Garbage Collection • Advantages – Simple & reliable • Chunk creation may fail • Deletion messages may be lost – Uniform and dependable way to clean up unneeded replicas – Done in batches, so the cost is amortized – Done when the master is relatively free – Safety net against accidental, irreversible deletion

- 25. Master Operation – Garbage Collection • Disadvantage – Hard to fine tune when storage is tight • Solution – Delete twice explicitly -> expedite storage reclamation – Different policies for different parts of the namespace • Stale Replica Detection – Master maintains a chunk version number
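Stale replica detection with a chunk version number can be sketched as follows. The slide only says that the master maintains the version number; the rule that the version is bumped when a new lease is granted, and that only reachable replicas record the new value, is taken here as an assumption. All names are hypothetical.

```python
class ChunkVersions:
    """Toy model of one chunk: master's version versus each replica's version."""

    def __init__(self, replicas):
        self.master_version = 1
        self.replica_version = {r: 1 for r in replicas}

    def grant_lease(self, reachable):
        # Assumption: the version is bumped on every new lease, and only
        # replicas that are currently reachable learn the new version.
        self.master_version += 1
        for r in reachable:
            self.replica_version[r] = self.master_version

    def stale(self):
        """Replicas whose version lags the master's missed a mutation round."""
        return sorted(r for r, v in self.replica_version.items()
                      if v < self.master_version)


cv = ChunkVersions(["A", "B", "C"])
cv.grant_lease(["A", "B"])   # C is down and misses the bump
print(cv.stale())
```

A replica flagged as stale is then treated like garbage: it is excluded from client replies and removed during regular garbage collection.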

- 26. Fault Tolerance – High Availability • Fast Recovery – Restore state and start in seconds – Do not distinguish normal and abnormal termination • Chunk Replication – Different replication levels for different parts of the file namespace – Keep each chunk fully replicated as chunk servers go offline or detect corrupted replicas through checksum verification

- 27. Fault Tolerance – High Availability • Master Replication – Log & checkpoints are replicated – Master failures? • Monitoring infrastructure outside GFS starts a new master process – “Shadow” masters • Read-only access to the file system when the primary master is down • Enhance read availability • Reads a replica of the growing operation log

- 28. Fault Tolerance – Data Integrity • Use checksums to detect data corruption • A chunk (64MB) is broken up into 64KB blocks, each with a 32-bit checksum • Chunk server verifies the checksum before returning data, so errors do not propagate • Record append – Incrementally update the checksum for the last block; any error will be detected when the block is read • Random write – Read and verify the first and last blocks of the range first – Perform the write, then compute the new checksums
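Per-block checksumming as described above can be sketched with the standard library. The slide specifies 64KB blocks and a 32-bit checksum but not the algorithm, so the use of CRC-32 via `zlib.crc32` is an assumption, and the function names are hypothetical.

```python
import zlib

BLOCK_SIZE = 64 * 1024  # 64 KB blocks, as on the slide


def block_checksums(chunk: bytes) -> list[int]:
    """One 32-bit checksum per 64 KB block (CRC-32 is an assumed choice)."""
    return [zlib.crc32(chunk[i:i + BLOCK_SIZE])
            for i in range(0, len(chunk), BLOCK_SIZE)]


def verified_read(chunk: bytes, checksums: list[int],
                  offset: int, length: int) -> bytes:
    """Verify every block that overlaps the requested range, then return it."""
    if length <= 0:
        return b""
    first = offset // BLOCK_SIZE
    last = (offset + length - 1) // BLOCK_SIZE
    for b in range(first, last + 1):
        block = chunk[b * BLOCK_SIZE:(b + 1) * BLOCK_SIZE]
        if zlib.crc32(block) != checksums[b]:
            raise IOError(f"checksum mismatch in block {b}")
    return chunk[offset:offset + length]
```

Only the blocks that overlap the read are verified, so a corrupted block elsewhere in the chunk does not fail an unrelated read, and a corrupted block is never returned to the requester.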

- 29. Conclusion • GFS supports large-scale data processing using commodity hardware • Reexamine traditional file system assumptions – based on application workload and technological environment – Treat component failures as the norm rather than the exception – Optimize for huge files that are mostly appended to – Relax the standard file system interface

- 30. Conclusion • Fault tolerance – Constant monitoring – Replicating crucial data – Fast and automatic recovery – Checksumming to detect data corruption at the disk or IDE subsystem level • High aggregate throughput – Decouple control and data transfer – Minimize master operations through large chunk size and chunk leases

- 31. Reference • Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung, “The Google File System”, SOSP, 2003