Git mercurial - Git basics, features and commands


Introduction to Directed Acyclic Graphs.pptxDivyanshGupta922023 This document provides an introduction to using directed acyclic graphs (DAGs) for confounder selection in nonexperimental studies. It discusses what DAGs are, their benefits over traditional covariate selection approaches, limitations, key terminology, and examples of how DAGs can identify when adjustment is needed or could induce bias. The document also introduces d-separation criteria for assessing open and closed paths in DAGs and overviews software tools for applying these rules to select minimum adjustment sets from complex causal diagrams.

ReactJS presentation.pptx

ReactJS presentation.pptxDivyanshGupta922023 React JS is a JavaScript library for building user interfaces. It uses virtual DOM and one-way data binding to render components efficiently. Everything in React is a component - they accept custom inputs called props and control the output display through rendering. Components can manage private state and update due to props or state changes. The lifecycle of a React component involves initialization, updating due to state/prop changes, and unmounting from the DOM. React promotes unidirectional data flow and single source of truth to make views more predictable and easier to debug.

Nextjs13.pptx

Nextjs13.pptxDivyanshGupta922023 Next.js introduces a new App Directory structure that routes pages based on files, separates components by whether data is fetched on the server or client, and introduces a new way to fetch data using React Suspense. It also supports server-side rendering, static site generation, incremental static regeneration, internationalization, theming, accessibility and alternative patterns to Context API.

Git mercurial - Git basics , features and commands

- 1. Beyond code: Versioning data with Git and Mercurial. Stephanie Collett and Martin Haye, California Digital Library, University of California

- 6. Agenda • Background • Case Study #1: eScholarship Backup • Case Study #2: Zephir Metadata • Summary

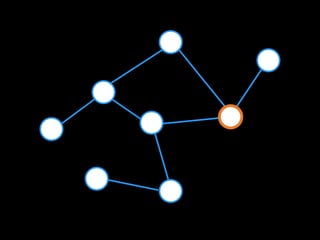

- 15. Why distributed?

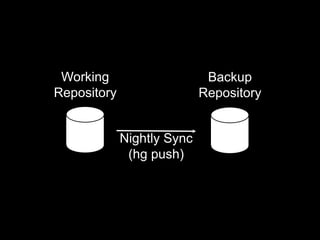

- 17. Case #1: eScholarship Data/Metadata Backup

- 18. eScholarship

- 21. XML Metadata: 10 files per work

- 29. 30-60 minutes for the batch job

- 31. Case #2: Zephir Metadata Management System

- 34. Zephir

- 40. Individually

- 41. Versioning + Audit Trail + Diffing + Debugging

- 42. Collectively

- 43. record/ marc.xml

- 44. 1 file, ~4k

- 46. 4 files, ~36k

- 48. record/ + record/.git 43 files, ~132k

- 49. record/ + record/.git ~132k x 10 million

- 50. record/ + record/.git 43 files x 10 million

- 53. Grit Gem (Git) vs. Rugged Gem (Libgit2)

- 54. Grit Gem (Git)

- 60. Grit vs. Rugged • Grit: add files, commit • Rugged: add files, determine changes, determine parent, commit, replace HEAD

- 62. Summary

- 64. vs.

- 67. texty data, small files 100-10,000 files per repository

- 68. If it looks like code, even if it's data, it will probably work
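The per-record figures on slides 44 to 50 can be multiplied out to see what one Git repository per record costs across the whole collection. This is a minimal sketch rather than anything from the talk: it assumes "k" means 1,000 bytes (the slides do not say), and the constant and function names are hypothetical.

```python
# Back-of-the-envelope totals for keeping one Git repository per record.
# The numbers come from the slides; reading "k" as 1,000 bytes is an assumption.

RECORDS = 10_000_000              # slide 49: "x 10 million"
BARE_RECORD_BYTES = 4_000         # slide 44: 1 file, ~4k
VERSIONED_RECORD_BYTES = 132_000  # slide 48: record/ + record/.git, ~132k
VERSIONED_RECORD_FILES = 43       # slide 48: 43 files


def collection_totals(records: int = RECORDS) -> dict:
    """Scale the per-record figures up to the whole collection."""
    return {
        "bare_bytes": BARE_RECORD_BYTES * records,
        "versioned_bytes": VERSIONED_RECORD_BYTES * records,
        "versioned_files": VERSIONED_RECORD_FILES * records,
        # How many times more space a versioned record takes than a bare one.
        "space_factor": VERSIONED_RECORD_BYTES / BARE_RECORD_BYTES,
    }


if __name__ == "__main__":
    totals = collection_totals()
    print(f"bare records:      {totals['bare_bytes'] / 1e9:.0f} GB")
    print(f"versioned records: {totals['versioned_bytes'] / 1e12:.2f} TB")
    print(f"files on disk:     {totals['versioned_files']:,}")
    print(f"space factor:      {totals['space_factor']:.0f}x")
```

Under that assumption the space factor comes out at 33, and the file count, not only the byte count, grows by a factor of 43 per record.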

Editor's Notes

- #4: Not a tutorial

- #5: http://www.flickr.com/photos/maisonbisson/201844037/ Not an explanation of how Mercurial and Git work under the hood

- #6: Not an epic fight to determine which is better, Git or Mercurial

- #10: http://www.flickr.com/photos/apenguincalledelvis/4262022435/

- #11: http://www.flickr.com/photos/aiwells/4672742619/ versioning, author, annotate, compare, manage (we just scratch the surface)

- #17: lightweight, file-based, compressed, reliable, ubiquitous

- #26: http://www.flickr.com/photos/bycp/5033418814/

- #28: 30-60 minutes

- #30: http://www.flickr.com/photos/ckaiserca/434018871/in/photostream/

- #33: The mission of HathiTrust is to contribute to the common good by collecting, organizing, preserving, communicating, and sharing the record of human knowledge.

- #34: http://www.flickr.com/photos/zenobia_joy/5393914694/

- #35: http://www.flickr.com/photos/ppl_ri_images/4019188259/

- #41: versioning worked great, debugging fantastic, audit trail, see what files changed, and what changed in the files.

- #48: 38 files

- #50: 33 times the space needed

- #56: Not a tutorial

- #57: http://www.flickr.com/photos/12495774@N02/2405297371/ committing in Grit: ~.2, committing in Rugged: ~.004

- #59: http://www.flickr.com/photos/kingway-school/5876407905/in/photostream/

- #60: http://www.flickr.com/photos/maisonbisson/201844037/

- #62: http://www.flickr.com/photos/boris/2345300428/sizes/z/in/photostream/ 50x faster (.2, .004)

- #65: Some implementations easy, others hard. Depends on complexity and API support.

- #66: Certain uses are going to be very slow: lots of files, binary files, or command-line use.

- #67: Real differences between the implementations and in API support across languages

- #68: http://www.flickr.com/photos/pewari/3609957389/
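The per-commit costs in notes #57 and #62 (~.2 with Grit, ~.004 with Rugged) can be scaled to a batch to show why the 50x difference matters. The sketch below is illustrative only: the notes give no unit, so it assumes seconds, and it assumes one commit per record across the 10 million records mentioned on slide 49. The names are hypothetical.

```python
# Rough batch-time comparison for the two commit costs quoted in the notes.
# Assumptions (not stated in the source): costs are in seconds, and the batch
# makes exactly one commit per record.

GRIT_COST = 0.2       # note #57: committing in Grit, ~.2
RUGGED_COST = 0.004   # note #57: committing in Rugged, ~.004
RECORDS = 10_000_000  # slide 49: 10 million records


def speedup(slow_cost: float, fast_cost: float) -> float:
    """How many times faster the cheaper commit path is."""
    return slow_cost / fast_cost


def batch_hours(cost_per_commit: float, commits: int = RECORDS) -> float:
    """Total time in hours for `commits` commits at a fixed per-commit cost."""
    return cost_per_commit * commits / 3600


if __name__ == "__main__":
    print(f"speedup: {speedup(GRIT_COST, RUGGED_COST):.0f}x")
    print(f"Grit:    {batch_hours(GRIT_COST):.1f} hours")
    print(f"Rugged:  {batch_hours(RUGGED_COST):.1f} hours")
```

If the unit really is seconds, the Grit path would take weeks for a full pass while the Rugged path would finish in under a day, which is the practical meaning of the 50x figure.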