Gluent Extending Enterprise Applications with Hadoop


This presentation shows how to transparently extend enterprise applications with the power of modern data platforms such as Hadoop. Applications do not need to be rewritten, and virtualizing data with Gluent requires no downtime.




Recommended

Offload, Transform, and Present - The New World of Data Integration

By gluent. This session explores how one organization built an integrated analytics platform by implementing Gluent to offload its Oracle enterprise data warehouse (EDW) data to Hadoop, and to transparently present native Hadoop data back to its EDW. As a result of its efforts, the company is now able to support operational reporting, OLAP, data discovery, predictive analytics, and machine learning from a single scalable platform that combines the benefits of an enterprise data warehouse with those of a data lake. This session includes a brief overview of the platform and use cases to demonstrate how the company has utilized the solution to provide business value.

The Enterprise and Connected Data, Trends in the Apache Hadoop Ecosystem by A...

By Big Data Spain. https://www.bigdataspain.org/2016/program/thu-enterprise-connected-data-trends-apache-hadoop-ecosystem.html

https://www.youtube.com/watch?v=WArxOEmtv1s&index=5&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik&t=52s

Rapid Data Analytics @ Netflix

By Data Con LA. At Netflix, we've spent a lot of time thinking about how we can make our analytics group move quickly. Netflix's Data Engineering & Analytics organization embraces the company's culture of "Freedom & Responsibility".

How does a company with a $40 billion market cap and $6 billion in annual revenue keep its data teams moving with the agility of a tiny company?

How do hundreds of data engineers and scientists make the best decisions for their projects independently, without the analytics environment devolving into chaos?

We'll talk about how Netflix equips its business intelligence and data engineers with:

- the freedom to leverage cloud-based data tools (Spark, Presto, Redshift, Tableau and others) in ways that solve our most difficult data problems
- the freedom to find and introduce the right software for the job, even if it isn't used anywhere else in-house
- the freedom to create and drop new tables in production without approval
- the freedom to choose when a question is a one-off, and when it is asked often enough to require a self-service tool
- the freedom to retire analytics and data processes whose value doesn't justify their support costs

Speaker Bios

Monisha Kanoth is a Senior Data Architect at Netflix, and was one of the founding members of the current streaming Content Analytics team. She previously worked as a big data lead at Convertro (acquired by AOL) and as a data warehouse lead at MySpace.

Jason Flittner is a Senior Business Intelligence Engineer at Netflix, focusing on data transformation, analysis, and visualization as part of the Content Data Engineering & Analytics team. He previously led the EC2 Business Intelligence team at Amazon Web Services and was a business intelligence engineer with Cisco.

Chris Stephens is a Senior Data Engineer at Netflix. He previously served as the CTO at Deep 6 Analytics, a machine learning & content analytics company in Los Angeles, and on the data warehouse teams at the FOX Audience Network and Anheuser-Busch.

Lambda architecture for real time big data

By Trieu Nguyen.

- The document discusses the Lambda Architecture, a system design by Nathan Marz for building real-time big data applications. It is based on three principles: human fault-tolerance, data immutability, and recomputation.

- The document provides two case studies of applying the Lambda Architecture: at Greengar Studios for API monitoring and statistics, and at eClick for real-time analytics on streaming user event data.

- Key lessons discussed are keeping solutions simple, asking the right questions to enable deep analytics and profit, using reactive and functional approaches, and turning data into useful insights.
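The immutability and recomputation principles can be illustrated with a minimal Python sketch. It is not taken from the presentation: the `Event` schema and the per-user action counts are hypothetical, chosen only to show that a batch view is a pure function of an append-only master dataset.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Event:
    """One immutable fact; the fields are a hypothetical example schema."""
    user_id: str
    action: str


class MasterDataset:
    """Append-only store: facts are added but never updated or deleted."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, event: Event) -> None:
        self._events.append(event)

    def snapshot(self) -> Tuple[Event, ...]:
        # Hand out an immutable copy so readers cannot rewrite history.
        return tuple(self._events)


def compute_batch_view(events: Iterable[Event]) -> Counter:
    """Recompute the view from scratch: occurrences of each (user, action)."""
    return Counter((e.user_id, e.action) for e in events)


master = MasterDataset()
for event in [Event("u1", "play"), Event("u1", "play"), Event("u2", "pause")]:
    master.append(event)

view = compute_batch_view(master.snapshot())
print(view[("u1", "play")])  # 2
```

Because the master dataset is never mutated, a bug in the view logic is fixed by correcting `compute_batch_view` and rerunning it over the full history, rather than by repairing stored results; this is what the human fault-tolerance principle relies on.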

Realtime streaming architecture in INFINARIO

By Jozo Kovac. About our experience with real-time analyses on a never-ending stream of user events. We discuss the Lambda architecture, Kappa, Apache Kafka and our own approach.

Getting It Right Exactly Once: Principles for Streaming Architectures

By SingleStore. Darryl Smith, Chief Data Platform Architect and Distinguished Engineer, Dell Technologies. September 2016, Strata+Hadoop World, NY.

How to design and implement a data ops architecture with sdc and gcp

By Joseph Arriola. Do you know how to use StreamSets Data Collector with Google Cloud Platform (GCP)? In this session we'll explain how YaloChat designed and implemented a streaming architecture that is sustainable, operable and scalable. Discover how we deployed Data Collector to integrate GCP components such as Pub/Sub and BigQuery to achieve DataOps in the cloud.

Big Data Computing Architecture

By Gang Tao. The document discusses the evolution of big data architectures from Hadoop and MapReduce to Lambda architecture and stream processing frameworks. It notes the limitations of early frameworks in terms of latency, scalability, and fault tolerance. Modern architectures aim to unify batch and stream processing for low latency queries over both historical and new data.

Building a Graph Database in Neo4j with Spark & Spark SQL to gain new insight...

By DataWorks Summit/Hadoop Summit. This document discusses how TiVo used a graph database and Spark to gain insights from its log data. TiVo collects log data from its set-top boxes but faced challenges analyzing it to optimize the user experience. Its solution was to build a graph of user sessions in Neo4j, connected by edges representing user actions. This allowed the team to analyze the paths users take and understand how users discover and access content. They demonstrated an analysis of the most popular paths and apps. The graph provided advantages over SQL for understanding interconnected user behavior.
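The path analysis described above can be approximated without a graph database. The following sketch uses plain Python rather than Neo4j or Spark, and the session data and screen names are hypothetical; it counts directed screen-to-screen transitions (the graph edges) and the most common fixed-length paths.

```python
from collections import Counter
from typing import Dict, List, Tuple

Sessions = Dict[str, List[str]]  # session id -> ordered screens (hypothetical)


def build_transition_graph(sessions: Sessions) -> Counter:
    """Weighted directed edges: how often users moved from one screen to the next."""
    edges: Counter = Counter()
    for screens in sessions.values():
        # zip pairs each screen with the screen visited immediately after it
        for src, dst in zip(screens, screens[1:]):
            edges[(src, dst)] += 1
    return edges


def most_popular_paths(
    sessions: Sessions, length: int, top: int = 3
) -> List[Tuple[Tuple[str, ...], int]]:
    """Count every contiguous path of `length` screens and return the most frequent."""
    paths: Counter = Counter()
    for screens in sessions.values():
        for i in range(len(screens) - length + 1):
            paths[tuple(screens[i:i + length])] += 1
    return paths.most_common(top)


sessions = {
    "s1": ["home", "search", "show", "play"],
    "s2": ["home", "guide", "show", "play"],
    "s3": ["home", "search", "show"],
}
edges = build_transition_graph(sessions)
print(edges[("show", "play")])                 # 2
print(most_popular_paths(sessions, 3, top=1))  # [(('home', 'search', 'show'), 2)]
```

Fixed-length path counts fit in a counter; a graph store pays off when queries traverse variable-length or multi-hop paths across many sessions.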

Shortening the Feedback Loop: How Spotify’s Big Data Ecosystem has evolved to...

By Big Data Spain. https://www.bigdataspain.org/2016/program/thu-shortening-feedback-loop-how-spotify-big-data-ecosystem-has-evolved-produce-real-time-insights.html

https://www.youtube.com/watch?v=qNuy3JZsFPM&index=18&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik&t=28s

Using Hadoop to build a Data Quality Service for both real-time and batch data

By DataWorks Summit/Hadoop Summit. Griffin is a data quality platform built by eBay on Hadoop and Spark to provide a unified process for detecting data quality issues in both real-time and batch data across multiple systems. It defines common data quality dimensions and metrics, calculates measurement values and quality scores, stores the results, and generates trending reports. Griffin provides a centralized data quality service for eBay and has been deployed processing over 1.2PB of data and 800M daily records using 100+ metrics. It is open source and contributions are welcome.
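Two common data quality dimensions, completeness and accuracy, reduce to simple ratios. The sketch below is not Griffin's API; the record layout, the `key` field, and the rule that a source record counts as accurate only when the target holds an identical copy are assumptions for illustration.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[object]]


def completeness(records: List[Record], field: str) -> float:
    """Share of records in which `field` is present and not None."""
    if not records:
        return 1.0  # an empty set has nothing missing
    filled = sum(1 for r in records if r.get(field) is not None)
    return filled / len(records)


def accuracy(source: List[Record], target: List[Record], key: str) -> float:
    """Share of source records that appear unchanged in the target, matched on `key`."""
    if not source:
        return 1.0
    target_by_key = {r[key]: r for r in target}
    matched = sum(1 for r in source if target_by_key.get(r[key]) == r)
    return matched / len(source)


source = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": 20},
    {"id": 3, "amount": None},
    {"id": 4, "amount": 40},
]
target = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}, {"id": 3, "amount": None}]

print(completeness(source, "amount"))  # 0.75
print(accuracy(source, target, "id"))  # 0.5
```

Recording these ratios on every run is what makes trend reports possible: a sudden drop in accuracy points at the pipeline stage that changed.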

Data Apps with the Lambda Architecture - with Real Work Examples on Merging B...

By Altan Khendup. The document discusses the Lambda architecture, which provides a common pattern for integrating real-time and batch processing systems. It describes the key components of Lambda: the batch layer, the speed layer, and the serving layer. The main challenge of implementing Lambda is that it requires multiple systems and technologies to be coordinated, and real-world examples are needed to guide practical application. The document also provides examples of medical and customer analytics use cases that could benefit from a Lambda approach.
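The division of labor between the three layers can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the author's implementation: events are hypothetical `(timestamp, page)` pairs, the batch layer covers everything before a `cutoff`, and the speed layer counts only what arrived after it.

```python
from collections import Counter
from typing import Iterable, Tuple

Event = Tuple[int, str]  # (timestamp, page); hypothetical schema


def batch_layer(events: Iterable[Event], cutoff: int) -> Counter:
    """Recompute page views from all events older than the last batch cutoff."""
    return Counter(page for ts, page in events if ts < cutoff)


class SpeedLayer:
    """Incrementally counts events that the last batch run has not absorbed yet."""

    def __init__(self, cutoff: int) -> None:
        self.cutoff = cutoff
        self.view: Counter = Counter()

    def on_event(self, event: Event) -> None:
        ts, page = event
        if ts >= self.cutoff:
            self.view[page] += 1


def serving_layer(batch_view: Counter, realtime_view: Counter, page: str) -> int:
    """Answer a query by merging the precomputed and real-time views."""
    return batch_view[page] + realtime_view[page]


history = [(1, "a"), (2, "b"), (3, "a"), (5, "a"), (6, "b")]
batch_view = batch_layer(history, cutoff=4)
speed = SpeedLayer(cutoff=4)
for event in history:
    speed.on_event(event)

print(serving_layer(batch_view, speed.view, "a"))  # 3
```

When the next batch run moves the cutoff forward, the speed layer's counts for the covered period are discarded. Keeping the batch and speed code paths consistent is the coordination cost the summary refers to.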

Instrumenting your Instruments

By DataWorks Summit/Hadoop Summit. This document summarizes Premal Shah's presentation on how 6sense instruments its systems to analyze customer data. 6sense uses Hadoop and other tools to ingest customer data from various sources, run modeling and scoring, and provide actionable insights to customers. The presentation discusses the data pipeline, challenges of performance and scaling, and how the team uses metrics and tools like Sumo Logic and OpsClarity to optimize and monitor its systems.

Apache frameworks for Big and Fast Data

By Naveen Korakoppa. Apache frameworks provide solutions for processing big and fast data. Traditional APIs use a request/response model with pull-based interactions, while modern data streaming uses a publish/subscribe model. Key concepts for big data architectures include batch processing frameworks like Hadoop, stream processing tools like Storm, and hybrid options like Spark and Flink. Popular data ingestion tools include Kafka for messaging, Flume for log data, and Sqoop for structured data. The best solution depends on requirements like latency, data volume, and workload type.
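The contrast between pull-based request/response and publish/subscribe can be shown with a tiny in-memory broker. It is a hypothetical sketch, not Kafka's client API: producers push a message to a topic once and the broker fans it out to every subscriber, whereas in request/response each client would have to ask for each result.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class Broker:
    """Minimal publish/subscribe broker that delivers synchronously in memory."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        """Push `message` to every handler on `topic`; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


broker = Broker()
dashboard: List[str] = []
archiver: List[str] = []
broker.subscribe("clicks", dashboard.append)
broker.subscribe("clicks", archiver.append)

delivered = broker.publish("clicks", "user42 clicked buy")
print(delivered, dashboard)  # 2 ['user42 clicked buy']
```

A real broker such as Kafka also persists messages so that consumers can read at their own pace; this sketch delivers synchronously and keeps nothing.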

Oracle GoldenGate and Apache Kafka: A Deep Dive Into Real-Time Data Streaming

By Michael Rainey. We produce quite a lot of data! Much of the data are business transactions stored in a relational database. More frequently, the data are non-structured, high-volume and rapidly changing datasets known in the industry as Big Data. The challenge for data integration professionals is to combine and transform the data into useful information. Not just that, but it must also be done in near real time, using a target system such as Hadoop. The topic of this session, real-time data streaming, provides a great solution for this challenging task. By integrating GoldenGate, Oracle’s premier data replication technology, and Apache Kafka, the latest open-source streaming and messaging system, we can implement a fast, durable, and scalable solution.

Presented at Oracle OpenWorld 2016
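Change data capture pipelines of this kind ship an ordered stream of insert, update, and delete records that a downstream consumer replays. The sketch below models only that replay step; the `ChangeRecord` fields and the `commit_seq` ordering column are hypothetical and do not reflect GoldenGate's trail format or its Kafka handler.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeRecord:
    """One captured row change; all field names are hypothetical."""
    commit_seq: int             # source commit order
    op: str                     # "I" = insert, "U" = update, "D" = delete
    key: int                    # primary key of the changed row
    row: Optional[dict] = None  # full after-image for inserts and updates


def apply_changes(replica: Dict[int, dict], changes: Iterable[ChangeRecord]) -> Dict[int, dict]:
    """Replay changes in commit order so the replica converges on the source."""
    for change in sorted(changes, key=lambda c: c.commit_seq):
        if change.op in ("I", "U"):
            if change.row is None:
                raise ValueError(f"{change.op} at {change.commit_seq} has no row image")
            replica[change.key] = dict(change.row)
        elif change.op == "D":
            replica.pop(change.key, None)
        else:
            raise ValueError(f"unknown operation {change.op!r}")
    return replica


changes = [
    ChangeRecord(2, "U", 1, {"id": 1, "qty": 5}),
    ChangeRecord(1, "I", 1, {"id": 1, "qty": 3}),
    ChangeRecord(3, "I", 2, {"id": 2, "qty": 7}),
    ChangeRecord(4, "D", 2),
]
print(apply_changes({}, changes))  # {1: {'id': 1, 'qty': 5}}
```

Ordering matters: replaying the update before the insert would leave stale data. Kafka guarantees ordering only within a partition, which is why change records are commonly keyed by primary key so that all changes to one row land in the same partition.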

Stream Processing as Game Changer for Big Data and Internet of Things by Kai ...

By Big Data Spain. https://www.bigdataspain.org/2016/program/thu-big-data-analytics-machine-learning-to-real-time-processing.html

https://www.youtube.com/watch?v=QiQ6LKKvd9k&t=3s&index=19&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik

Bigger Faster Easier: LinkedIn Hadoop Summit 2015

By Shirshanka Das. We discuss LinkedIn's big data ecosystem and its evolution through the years. We introduce three open source projects: Gobblin for ingestion, Cubert for computation and Pinot for fast OLAP serving. We also showcase our in-house data discovery and lineage portal, WhereHows.

Accelerating Data Warehouse Modernization

By DataWorks Summit/Hadoop Summit. Data warehouses need to be modernized to handle big data, integrate multiple data silos, reduce costs, and reduce time to market. A modern data warehouse blueprint includes a data lake to land and ingest structured, unstructured, external, social, machine, and streaming data alongside a traditional data warehouse. Key challenges for modernization include making data discoverable and usable for business users, rethinking ETL to allow for data blending, and enabling self-service BI over Hadoop. Common tactics for modernization include using a data lake as a landing zone, offloading infrequently accessed data to Hadoop, and exploring data in Hadoop to discover new insights.
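The tactic of offloading infrequently accessed data can be sketched as an age-based split plus a query that reads both tiers, much as a `UNION ALL` view over a warehouse table and its offloaded copy would. This is a hypothetical illustration, not Gluent's or any vendor's mechanism; the `(sale_date, amount)` row layout and the 30-day hot window are assumptions.

```python
from datetime import date, timedelta
from typing import List, Tuple

Row = Tuple[date, float]  # (sale_date, amount); hypothetical schema


def split_by_age(rows: List[Row], today: date, hot_days: int) -> Tuple[List[Row], List[Row]]:
    """Keep recent rows in the warehouse (hot); mark older rows for offload (cold)."""
    boundary = today - timedelta(days=hot_days)
    hot = [r for r in rows if r[0] >= boundary]
    cold = [r for r in rows if r[0] < boundary]
    return hot, cold


def hybrid_total(hot: List[Row], cold: List[Row], start: date, end: date) -> float:
    """Sum amounts in [start, end] across both tiers, so callers need not know where rows live."""
    return sum(amount for day, amount in hot + cold if start <= day <= end)


rows = [
    (date(2023, 12, 15), 100.0),
    (date(2023, 12, 31), 50.0),
    (date(2024, 1, 1), 20.0),
    (date(2024, 1, 20), 5.0),
]
hot, cold = split_by_age(rows, today=date(2024, 1, 31), hot_days=30)
print(len(hot), len(cold))  # 2 2
print(hybrid_total(hot, cold, date(2023, 12, 20), date(2024, 1, 10)))  # 70.0
```

A production design would also prune: a query whose date range starts after the boundary never needs to touch the cold tier.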

Migration and Coexistence between Relational and NoSQL Databases by Manuel H...

By Big Data Spain. https://www.bigdataspain.org/2016/program/thu-migration-coexistence-between-relational-nosql-databases.html

https://www.youtube.com/watch?v=mM9pchslCn4&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik&index=56&t=18s

Innovation in the Enterprise Rent-A-Car Data Warehouse

By DataWorks Summit. Big Data adoption is a journey. Depending on the business, the process can take weeks, months, or even years. With any transformative technology, the challenges have less to do with the technology and more to do with how a company adapts itself to a new way of thinking about data. Building a Center of Excellence is one way for IT to help drive success.

This talk will explore Enterprise Holdings Inc. (which operates Enterprise Rent-A-Car, National Car Rental and Alamo Rent A Car) and its experience with Big Data. EHI’s journey started in 2013 with a Hadoop POC, and today the company is working to create the next-generation data warehouse in Microsoft’s Azure cloud using a lambda architecture.

We’ll discuss the Center of Excellence and the roles in the new world, share the things that worked well, and rant about those that didn’t.

No deep Hadoop knowledge is necessary; the talk is pitched at architect or executive level.

Netflix Data Engineering @ Uber Engineering Meetup

By Blake Irvine. People, Platform, Projects: these slides give an overview of how Netflix works with Big Data. I share how our teams are organized, the roles we typically have on the teams, an overview of our Big Data Platform, and two example projects.

Streaming Analytics

By Neera Agarwal. Streaming analytics provides real-time processing of continuous data streams. It contrasts with batch processing, which operates on bounded datasets. Streaming analytics is used for applications like clickstream analysis, fraud detection, and IoT. Key concepts include event time windows, exactly-once processing, and state management. LinkedIn's streaming platform standardizes profile data in real time using techniques like stream-table joins, broadcast joins, and reprocessing prior data. Popular open source streaming systems include Kafka Streams, Spark Streaming, Flink, and Storm.
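Event-time windowing can be illustrated with fixed (tumbling) windows. In this hypothetical sketch each event carries its own timestamp, so out-of-order arrival does not change which window it is counted in. Real engines such as Flink or Kafka Streams additionally use watermarks or grace periods to decide when a window is complete, which this batch-style sketch leaves out.

```python
from collections import Counter
from typing import Iterable, Tuple

Event = Tuple[int, str]  # (event_time_seconds, key); hypothetical schema


def tumbling_window_counts(events: Iterable[Event], size: int) -> Counter:
    """Count events per (window_start, key), assigning windows by event time."""
    counts: Counter = Counter()
    for event_time, key in events:
        window_start = event_time - (event_time % size)
        counts[(window_start, key)] += 1
    return counts


# Arrival order differs from event-time order.
arrivals = [(65, "click"), (3, "click"), (59, "view"), (61, "click")]
counts = tumbling_window_counts(arrivals, size=60)
print(counts[(0, "click")], counts[(60, "click")])  # 1 2
```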

Stream Analytics

By Franco Ucci. This document provides an overview of Oracle Stream Analytics capabilities for processing fast streaming data. It discusses deployment approaches on Oracle Cloud, hybrid cloud, and on-premises. It also covers event processing techniques like pattern detection, time windows, and continuous querying enabled by Oracle Stream Analytics. Specific use cases for retail and healthcare are also presented.
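Pattern detection over a time window, evaluated continuously as each event arrives, can be sketched as follows. This is plain Python rather than Oracle Stream Analytics, and the retail scenario (flagging a card after several declined payments within a minute), the field names, and the thresholds are hypothetical.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Iterable, List, Tuple

Event = Tuple[int, str, str]  # (timestamp_seconds, card_id, status); hypothetical


def detect_bursts(events: Iterable[Event], threshold: int, window: int) -> List[Tuple[str, int]]:
    """Flag a card when `threshold` DECLINED events fall within `window` seconds.

    Assumes events arrive in timestamp order; each deque keeps only the
    declines inside the interval (ts - window, ts].
    """
    recent: DefaultDict[str, Deque[int]] = defaultdict(deque)
    alerts: List[Tuple[str, int]] = []
    for ts, card, status in events:
        if status != "DECLINED":
            continue
        times = recent[card]
        times.append(ts)
        while times and times[0] <= ts - window:
            times.popleft()  # expire declines that slid out of the window
        if len(times) >= threshold:
            alerts.append((card, ts))
            times.clear()  # one alert per burst
    return alerts


stream = [
    (0, "c1", "DECLINED"), (10, "c1", "OK"), (20, "c1", "DECLINED"),
    (50, "c1", "DECLINED"), (100, "c2", "DECLINED"), (200, "c2", "DECLINED"),
    (230, "c2", "DECLINED"),
]
print(detect_bursts(stream, threshold=3, window=60))  # [('c1', 50)]
```

Card `c2` also has three declines, but they span 130 seconds, so the sliding window never holds all three at once.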

Digital Business Transformation in the Streaming Era

By Attunity. Enterprises are rapidly adopting stream computing backbones, in-memory data stores, change data capture, and other low-latency approaches for end-to-end applications. As businesses modernize their data architectures over the next several years, they will begin to evolve toward all-streaming architectures. In this webcast, Wikibon, Attunity, and MemSQL will discuss how enterprise data professionals should migrate their legacy architectures in this direction. They will provide guidance for migrating data lakes, data warehouses, data governance, and transactional databases to support all-streaming architectures for complex cloud and edge applications. They will discuss how this new architecture will drive enterprise strategies for operationalizing artificial intelligence, mobile computing, the Internet of Things, and cloud-native microservices.

Link to the Wikibon report - wikibon.com/wikibons-2018-big-data-analytics-trends-forecast

Link to Attunity Streaming CDC Book Download - http://www.bit.ly/cdcbook

Link to MemSQL's Free Data Pipeline Book - http://go.memsql.com/oreilly-data-pipelines

Spark and Hadoop at Production Scale-(Anil Gadre, MapR)

By Spark Summit. This document discusses how MapR helps companies take Spark to production scale. It provides examples of companies using Spark and MapR for real-time security analytics, genomics research, and customer analytics. MapR offers high performance, reliability, and support to ensure Spark runs successfully in production environments. The document also announces new Spark-based quick start solutions from MapR and promotes free MapR training.

Qlik and Confluent Success Stories with Kafka - How Generali and Skechers Kee...

By HostedbyConfluent. Converting production databases into live data streams for Apache Kafka can be labor intensive and costly. As Kafka architectures grow, complexity also rises as data teams configure clusters for redundancy, partitions for performance, and consumer groups for correlated analytics processing. In this breakout session, you’ll hear data streaming success stories from Generali and Skechers that leverage Qlik Data Integration and Confluent. You’ll discover how Qlik’s data integration platform lets organizations automatically produce real-time transaction streams into Kafka, Confluent Platform, or Confluent Cloud, deliver faster business insights from data, and enable both streaming analytics and streaming ingestion for modern analytics. Learn how these customers use Qlik and Confluent to:

- Turn databases into live data feeds
- Simplify and automate the real-time data streaming process
- Accelerate data delivery to enable real-time analytics

Learn how Skechers and Generali breathe new life into data in the cloud and stay ahead of changing demands while reducing over-reliance on resources, production time and costs.

Implementing the Lambda Architecture efficiently with Apache Spark

By DataWorks Summit. This document discusses implementing the Lambda Architecture efficiently using Apache Spark. It provides an overview of the Lambda Architecture concept, which aims to provide low latency querying while supporting batch updates. The Lambda Architecture separates processing into batch and speed layers, with a serving layer that merges the results. Apache Spark is presented as an efficient way to implement the Lambda Architecture due to its unified processing engine, support for streaming and batch data, and ability to easily scale out. The document recommends resources for learning more about Spark and the Lambda Architecture.

Time Series Analysis Using an Event Streaming Platform

By Dr. Mirko Kämpf.

Advanced time series analysis (TSA) requires specialized data preparation procedures to convert raw data into useful and compatible formats.

In this presentation you will see some typical processing patterns for time-series-based research, from simple statistics to the reconstruction of correlation networks.

The first case is relevant for anomaly detection and safety monitoring.

Reconstruction of graphs from time series data is a very useful technique for better understanding complex systems such as supply chains, material flows in factories, and information flows within organizations, and it is especially valuable in medical research.

With this motivation, we look at typical data aggregation patterns and investigate how to apply analysis algorithms in the cloud. Finally, we discuss a simple reference architecture for TSA on top of Confluent Platform or Confluent Cloud.
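Reconstructing a correlation network from time series can be sketched as: compute a pairwise correlation for every pair of series and keep an edge wherever the absolute correlation reaches a threshold. This is a minimal illustration under assumptions (equal-length, time-aligned series; Pearson correlation; hypothetical sensor names and a threshold of 0.8), not the method from the presentation or a Confluent API.

```python
import math
from itertools import combinations
from typing import Dict, List, Set, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation of two aligned, equal-length series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("series must be aligned and have at least two points")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a constant series has no defined correlation; treat as unlinked
    return cov / (sd_x * sd_y)


def correlation_network(series: Dict[str, List[float]], threshold: float) -> Set[Tuple[str, str]]:
    """Undirected edges between series whose |correlation| reaches `threshold`."""
    edges: Set[Tuple[str, str]] = set()
    for a, b in combinations(sorted(series), 2):
        if abs(pearson(series[a], series[b])) >= threshold:
            edges.add((a, b))
    return edges


readings = {
    "pump": [1, 2, 3, 4, 5],
    "valve": [2, 4, 6, 8, 10],
    "fan": [5, 4, 3, 2, 1],
    "noise": [1, 3, 1, 3, 1],
}
print(sorted(correlation_network(readings, threshold=0.8)))
# [('fan', 'pump'), ('fan', 'valve'), ('pump', 'valve')]
```

Plain correlation only captures simultaneous linear relationships; lagged or partial correlations are typically needed to separate direct links from indirect ones in systems such as supply chains.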

How Yellowbrick Data Integrates to Existing Environments Webcast

By Yellowbrick Data. This document discusses how Yellowbrick can integrate into existing data environments. It describes Yellowbrick's data warehouse capabilities and how it compares to other solutions. The document recommends upgrading from single server databases or traditional MPP systems to Yellowbrick when data outgrows a single server or there are too many disparate systems. It also recommends moving from pre-configured or cloud-only systems to Yellowbrick to significantly reduce costs while improving query performance. The document concludes with a security demonstration using a netflow dataset.

Engineering Machine Learning Data Pipelines Series: Streaming New Data as It ...

By Precisely. This document discusses engineering machine learning data pipelines and addresses five big challenges: 1) scattered and difficult-to-access data, 2) data cleansing at scale, 3) entity resolution, 4) tracking data lineage, and 5) ongoing real-time changed data capture and streaming. It presents DMX Change Data Capture as a solution to capture changes from various data sources and replicate them in real time to targets like Kafka, HDFS, databases and data lakes to feed machine learning models. Case studies demonstrate how DMX-h has helped customers like a global hotel chain and insurance and healthcare companies build scalable data pipelines.

Ad

More Related Content

What's hot (20)

Building a Graph Database in Neo4j with Spark & Spark SQL to gain new insight...

Building a Graph Database in Neo4j with Spark & Spark SQL to gain new insight...DataWorks Summit/Hadoop Summit This document discusses how TiVo used a graph database and Spark to gain insights from their log data. TiVo collects log data from their set top boxes but faced challenges analyzing it to optimize the user experience. Their solution was to build a graph of user sessions in Neo4j connected by edges of user actions. This allowed them to analyze paths users take and understand how users discover and access content. They demonstrated analyzing the most popular paths and apps. The graph provided advantages over SQL for understanding interconnected user behavior.

Shortening the Feedback Loop: How Spotify’s Big Data Ecosystem has evolved to...

Shortening the Feedback Loop: How Spotify’s Big Data Ecosystem has evolved to...Big Data Spain https://www.bigdataspain.org/2016/program/thu-shortening-feedback-loop-how-spotify-big-data-ecosystem-has-evolved-produce-real-time-insights.html

https://www.youtube.com/watch?v=qNuy3JZsFPM&index=18&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik&t=28s

Using Hadoop to build a Data Quality Service for both real-time and batch data

Using Hadoop to build a Data Quality Service for both real-time and batch dataDataWorks Summit/Hadoop Summit Griffin is a data quality platform built by eBay on Hadoop and Spark to provide a unified process for detecting data quality issues in both real-time and batch data across multiple systems. It defines common data quality dimensions and metrics and calculates measurement values and quality scores, storing results and generating trending reports. Griffin provides a centralized data quality service for eBay and has been deployed processing over 1.2PB of data and 800M daily records using 100+ metrics. It is open source and contributions are welcome.

Data Apps with the Lambda Architecture - with Real Work Examples on Merging B...

Data Apps with the Lambda Architecture - with Real Work Examples on Merging B...Altan Khendup The document discusses the Lambda architecture, which provides a common pattern for integrating real-time and batch processing systems. It describes the key components of Lambda - the batch layer, speed layer, and serving layer. The challenges of implementing Lambda are that it requires multiple systems and technologies to be coordinated. Real-world examples are needed to help practical application. The document also provides examples of medical and customer analytics use cases that could benefit from a Lambda approach.

Instrumenting your Instruments

Instrumenting your Instruments DataWorks Summit/Hadoop Summit This document summarizes Premal Shah's presentation on how 6sense instruments their systems to analyze customer data. 6sense uses Hadoop and other tools to ingest customer data from various sources, run modeling and scoring, and provide actionable insights to customers. They discuss the data pipeline, challenges of performance and scaling, and how they use metrics and tools like Sumo Logic and OpsClarity to optimize and monitor their systems.

Apache frameworks for Big and Fast Data

Apache frameworks for Big and Fast DataNaveen Korakoppa Apache frameworks provide solutions for processing big and fast data. Traditional APIs use a request/response model with pull-based interactions, while modern data streaming uses a publish/subscribe model. Key concepts for big data architectures include batch processing frameworks like Hadoop, stream processing tools like Storm, and hybrid options like Spark and Flink. Popular data ingestion tools include Kafka for messaging, Flume for log data, and Sqoop for structured data. The best solution depends on requirements like latency, data volume, and workload type.

Oracle GoldenGate and Apache Kafka: A Deep Dive Into Real-Time Data Streaming

Oracle GoldenGate and Apache Kafka: A Deep Dive Into Real-Time Data StreamingMichael Rainey We produce quite a lot of data! Much of the data are business transactions stored in a relational database. More frequently, the data are non-structured, high volume and rapidly changing datasets known in the industry as Big Data. The challenge for data integration professionals is to combine and transform the data into useful information. Not just that, but it must also be done in near real-time and using a target system such as Hadoop. The topic of this session, real-time data streaming, provides a great solution for this challenging task. By integrating GoldenGate, Oracle’s premier data replication technology, and Apache Kafka, the latest open-source streaming and messaging system, we can implement a fast, durable, and scalable solution.

Presented at Oracle OpenWorld 2016

Stream Processing as Game Changer for Big Data and Internet of Things by Kai ...

Stream Processing as Game Changer for Big Data and Internet of Things by Kai ...Big Data Spain https://www.bigdataspain.org/2016/program/thu-big-data-analytics-machine-learning-to-real-time-processing.html

https://www.youtube.com/watch?v=QiQ6LKKvd9k&t=3s&index=19&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik

Bigger Faster Easier: LinkedIn Hadoop Summit 2015

Bigger Faster Easier: LinkedIn Hadoop Summit 2015Shirshanka Das We discuss LinkedIn's big data ecosystem and its evolution through the years. We introduce three open source projects, Gobblin for ingestion, Cubert for computation and Pinot for fast OLAP serving. We also showcase our in-house data discovery and lineage portal WhereHows.

Accelerating Data Warehouse Modernization

Accelerating Data Warehouse ModernizationDataWorks Summit/Hadoop Summit Modern data warehouses need to be modernized to handle big data, integrate multiple data silos, reduce costs, and reduce time to market. A modern data warehouse blueprint includes a data lake to land and ingest structured, unstructured, external, social, machine, and streaming data alongside a traditional data warehouse. Key challenges for modernization include making data discoverable and usable for business users, rethinking ETL to allow for data blending, and enabling self-service BI over Hadoop. Common tactics for modernization include using a data lake as a landing zone, offloading infrequently accessed data to Hadoop, and exploring data in Hadoop to discover new insights.

Migration and Coexistence between Relational and NoSQL Databases by Manuel H...

Migration and Coexistence between Relational and NoSQL Databases by Manuel H...Big Data Spain https://www.bigdataspain.org/2016/program/thu-migration-coexistence-between-relational-nosql-databases.html

https://www.youtube.com/watch?v=mM9pchslCn4&list=PL6O3g23-p8Tr5eqnIIPdBD_8eE5JBDBik&index=56&t=18s

Innovation in the Enterprise Rent-A-Car Data Warehouse

Innovation in the Enterprise Rent-A-Car Data WarehouseDataWorks Summit Big Data adoption is a journey. Depending on the business the process can take weeks, months, or even years. With any transformative technology the challenges have less to do with the technology and more to do with how a company adapts itself to a new way of thinking about data. Building a Center of Excellence is one way for IT to help drive success.

This talk will explore Enterprise Holdings Inc. (which operates the Enterprise Rent-A-Car, National Car Rental and Alamo Rent A Car) and their experience with Big Data. EHI’s journey started in 2013 with Hadoop as a POC and today are working to create the next generation data warehouse in Microsoft’s Azure cloud utilizing a lambda architecture.

We’ll discuss the Center of Excellence, the roles in the new world, share the things which worked well, and rant about those which didn’t.

No deep Hadoop knowledge is necessary, architect or executive level.

Netflix Data Engineering @ Uber Engineering Meetup

Netflix Data Engineering @ Uber Engineering MeetupBlake Irvine People, Platform, Projects: these slides overview how Netflix works with Big Data. I share how our teams are organized, the roles we typically have on the teams, an overview of our Big Data Platform, and two example projects.

Streaming Analytics

Streaming AnalyticsNeera Agarwal Streaming analytics provides real-time processing of continuous data streams. It contrasts with batch processing which operates on bounded datasets. Streaming analytics is used for applications like clickstream analysis, fraud detection, and IoT. Key concepts include event time windows, exactly-once processing, and state management. LinkedIn's streaming platform standardizes profile data in real-time using techniques like stream-table joins, broadcast joins, and reprocessing prior data. Popular open source streaming systems include Kafka Streams, Spark Streaming, Flink, and Storm.

Stream Analytics

Stream Analytics Franco Ucci This document provides an overview of Oracle Stream Analytics capabilities for processing fast streaming data. It discusses deployment approaches on Oracle Cloud, hybrid cloud, and on-premises. It also covers event processing techniques like pattern detection, time windows, and continuous querying enabled by Oracle Stream Analytics. Specific use cases for retail and healthcare are also presented.

Digital Business Transformation in the Streaming Era

Digital Business Transformation in the Streaming EraAttunity Enterprises are rapidly adopting stream computing backbones, in-memory data stores, change data capture, and other low-latency approaches for end-to-end applications. As businesses modernize their data architectures over the next several years, they will begin to evolve toward all-streaming architectures. In this webcast, Wikibon, Attunity, and MemSQL will discuss how enterprise data professionals should migrate their legacy architectures in this direction. They will provide guidance for migrating data lakes, data warehouses, data governance, and transactional databases to support all-streaming architectures for complex cloud and edge applications. They will discuss how this new architecture will drive enterprise strategies for operationalizing artificial intelligence, mobile computing, the Internet of Things, and cloud-native microservices.

Link to the Wikibon report - wikibon.com/wikibons-2018-big-data-analytics-trends-forecast

Link to Attunity Streaming CDC Book Download - http://www.bit.ly/cdcbook

Link to MemSQL's Free Data Pipeline Book - http://go.memsql.com/oreilly-data-pipelines

Spark and Hadoop at Production Scale-(Anil Gadre, MapR)

By Spark Summit. This document discusses how MapR helps companies take Spark to production scale. It provides examples of companies using Spark and MapR for real-time security analytics, genomics research, and customer analytics. MapR offers high performance, reliability, and support to ensure Spark runs successfully in production environments. The document also announces new Spark-based quick start solutions from MapR and promotes free MapR training.

Qlik and Confluent Success Stories with Kafka - How Generali and Skechers Kee...

By HostedbyConfluent. Converting production databases into live data streams for Apache Kafka can be labor-intensive and costly. As Kafka architectures grow, complexity also rises as data teams begin to configure clusters for redundancy, partitions for performance, and consumer groups for correlated analytics processing. In this breakout session, you’ll hear data streaming success stories from Generali and Skechers that leverage Qlik Data Integration and Confluent. You’ll discover how Qlik’s data integration platform lets organizations automatically produce real-time transaction streams into Kafka, Confluent Platform, or Confluent Cloud, deliver faster business insights from data, and enable both streaming analytics and streaming ingestion for modern analytics. Learn how these customers use Qlik and Confluent to: turn databases into live data feeds; simplify and automate the real-time data streaming process; and accelerate data delivery to enable real-time analytics. Learn how Skechers and Generali breathe new life into data in the cloud and stay ahead of changing demands while reducing resource dependence, production time, and costs.

Implementing the Lambda Architecture efficiently with Apache Spark

By DataWorks Summit. This document discusses implementing the Lambda Architecture efficiently using Apache Spark. It provides an overview of the Lambda Architecture concept, which aims to provide low-latency querying while supporting batch updates. The Lambda Architecture separates processing into batch and speed layers, with a serving layer that merges the results. Apache Spark is presented as an efficient way to implement the Lambda Architecture due to its unified processing engine, support for both streaming and batch data, and ability to scale out easily. The document recommends resources for learning more about Spark and the Lambda Architecture.
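The batch/speed/serving split can be illustrated with a small plain-Python sketch that counts page views. It does not use Spark; the function names, the page keys, and the use of `collections.Counter` as the view store are assumptions made for illustration. The batch layer recomputes a complete view from the immutable master dataset, the speed layer incrementally counts only events that arrived since the last batch run, and the serving layer answers a query by adding the two.

```python
from collections import Counter


def batch_layer(master_dataset: list[str]) -> Counter:
    """Recompute the batch view from scratch over the full, immutable dataset."""
    return Counter(master_dataset)


def speed_layer(speed_view: Counter, new_events: list[str]) -> Counter:
    """Incrementally count only events that arrived since the last batch run."""
    speed_view.update(new_events)
    return speed_view


def serving_layer(key: str, batch_view: Counter, speed_view: Counter) -> int:
    """Merge the complete-but-stale batch view with the real-time delta.

    Counter returns 0 for missing keys, so unseen pages simply count as 0.
    """
    return batch_view[key] + speed_view[key]


batch_view = batch_layer(["page_a", "page_b", "page_a"])
speed_view = speed_layer(Counter(), ["page_a", "page_c"])
print(serving_layer("page_a", batch_view, speed_view))  # 3
```

In a real deployment the speed view would be discarded once the next batch run has absorbed those events; the appeal of Spark in this design is that one engine can implement both layers.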

Time Series Analysis Using an Event Streaming Platform

By Dr. Mirko Kämpf.

Advanced time series analysis (TSA) requires very special data preparation procedures to convert raw data into useful and compatible formats.

In this presentation you will see some typical processing patterns for time series based research, from simple statistics to reconstruction of correlation networks.

The first case is relevant to anomaly detection and safety protection.

Reconstruction of graphs from time series data is a very useful technique to better understand complex systems like supply chains, material flows in factories, information flows within organizations, and especially in medical research.

With this motivation we will look at typical data aggregation patterns. We investigate how to apply analysis algorithms in the cloud. Finally we discuss a simple reference architecture for TSA on top of the Confluent Platform or Confluent cloud.

Building a Graph Database in Neo4j with Spark & Spark SQL to gain new insight...

By DataWorks Summit/Hadoop Summit.

Using Hadoop to build a Data Quality Service for both real-time and batch data

By DataWorks Summit/Hadoop Summit.

Similar to Gluent Extending Enterprise Applications with Hadoop

How Yellowbrick Data Integrates to Existing Environments Webcast

By Yellowbrick Data. This document discusses how Yellowbrick can integrate into existing data environments. It describes Yellowbrick's data warehouse capabilities and how it compares to other solutions. The document recommends upgrading from single server databases or traditional MPP systems to Yellowbrick when data outgrows a single server or there are too many disparate systems. It also recommends moving from pre-configured or cloud-only systems to Yellowbrick to significantly reduce costs while improving query performance. The document concludes with a security demonstration using a netflow dataset.

Engineering Machine Learning Data Pipelines Series: Streaming New Data as It ...

By Precisely. This document discusses engineering machine learning data pipelines and addresses five big challenges: 1) scattered and difficult-to-access data, 2) data cleansing at scale, 3) entity resolution, 4) tracking data lineage, and 5) ongoing real-time changed data capture and streaming. It presents DMX Change Data Capture as a solution to capture changes from various data sources and replicate them in real time to targets like Kafka, HDFS, databases, and data lakes to feed machine learning models. Case studies demonstrate how DMX-h has helped customers like a global hotel chain and insurance and healthcare companies build scalable data pipelines.

20160331 sa introduction to big data pipelining berlin meetup 0.3

By Simon Ambridge. This document discusses building data pipelines with Apache Spark and DataStax Enterprise (DSE) for both static and real-time data. It describes how DSE provides a scalable, fault-tolerant platform for distributed data storage with Cassandra and real-time analytics with Spark. It also discusses using Kafka as a messaging queue for streaming data and processing it with Spark. The document provides examples of using notebooks, Parquet, and Akka for building pipelines to handle both large static datasets and fast, real-time streaming data sources.

HP Enterprises in Hana Pankaj Jain May 2016

By INDUSCommunity. HPE offers solutions for hybrid clouds and SAP HANA based on composable infrastructure. Composable infrastructure allows resources to be composed on demand in seconds and infrastructure to be programmed through a single line of code. This approach dramatically reduces overprovisioning and speeds application and service delivery. HPE's composable infrastructure solution is called Synergy, which provides fluid resource pools, software-defined intelligence, and a unified API. HPE also offers converged systems optimized for SAP HANA that are pre-configured to deliver maximum performance.

Transforming Data Architecture Complexity at Sears - StampedeCon 2013

By StampedeCon. At the StampedeCon 2013 Big Data conference in St. Louis, Justin Sheppard discussed Transforming Data Architecture Complexity at Sears. High ETL complexity and costs, data latency and redundancy, and batch window limits are just some of the IT challenges caused by traditional data warehouses. Gain an understanding of big data tools through the use cases and technology that enables Sears to solve the problems of the traditional enterprise data warehouse approach. Learn how Sears uses Hadoop as a data hub to minimize data architecture complexity – resulting in a reduction of time to insight by 30-70% – and discover “quick wins” such as mainframe MIPS reduction.

Deliver Best-in-Class HPC Cloud Solutions Without Losing Your Mind

By Avere Systems. While cloud computing offers virtually unlimited capacity, harnessing that capacity in an efficient, cost effective fashion can be cumbersome and difficult at the workload level. At the organizational level, it can quickly become chaos.

You must make choices around cloud deployment, and these choices could have a long-lasting impact on your organization. It is important to understand your options and avoid incomplete, complicated, locked-in scenarios. Data management and placement challenges make the ability to automate workflows and processes across multiple clouds a requirement.

In this webinar, you will:

• Learn how to leverage cloud services as part of an overall computation approach

• Understand data management in a cloud-based world

• Hear what options you have to orchestrate HPC in the cloud

• Learn how cloud orchestration works to automate and align computing with specific goals and objectives

• See an example of an orchestrated HPC workload using on-premises data

From computational research to financial back testing, and research simulations to IoT processing frameworks, decisions made now will not only impact future manageability, but also your sanity.

Justin Sheppard & Ankur Gupta from Sears Holdings Corporation - Single point ...

By Global Business Events. Justin Sheppard & Ankur Gupta from Sears Holdings Corporation spoke at the CIO North America Event in June 2013.

Enabling big data & AI workloads on the object store at DBS

By Alluxio, Inc. DBS Bank is headquartered in Singapore and has evolved its data platforms over three generations from proprietary systems to a hybrid cloud-native platform using open source technologies. It is using Alluxio to unify access to data stored in its on-premises object store and HDFS as well as enable analytics workloads to burst into AWS. Alluxio provides data caching to speed up analytics jobs, intelligent data tiering for efficiency, and policy-driven data migration to the cloud over time. DBS is exploring further using cloud services for speech processing and moving more workloads to the cloud while keeping data on-premises for compliance.

Making BD Work~TIAS_20150622

By Anthony Potappel. This document discusses the challenges of big data and potential solutions. It addresses the volume, variety, and velocity of big data. Hadoop is presented as a solution for distributed storage and processing. The document also discusses data storage options, flexible resources like cloud computing, and achieving scalability and multi-platform support. Real-world examples of big data applications are provided.

Accelerate Analytics and ML in the Hybrid Cloud Era

By Alluxio, Inc. Alluxio Webinar

April 6, 2021

For more Alluxio events: https://www.alluxio.io/events/

Speakers:

Alex Ma, Alluxio

Peter Behrakis, Alluxio

Many companies we talk to have on-premises data lakes and use the cloud(s) to burst compute. Many are now establishing new object data lakes as well. As a result, analytics workloads such as Hive, Spark, Presto, and machine learning suffer sluggish response times, with data and compute in multiple locations. We also know there is an immense and growing data management burden to support these workflows.

In this talk, we will walk through what Alluxio’s Data Orchestration for the hybrid cloud era is and how it solves the performance and data management challenges we see.

In this tech talk, we'll go over:

- What is Alluxio Data Orchestration?

- How does it work?

- Alluxio customer results

Agile Big Data Analytics Development: An Architecture-Centric Approach

By SoftServe. Presented at The Hawaii International Conference on System Sciences by Hong-Mei Chen and Rick Kazman (University of Hawaii), Serge Haziyev (SoftServe).

Big Data Open Source Tools and Trends: Enable Real-Time Business Intelligence...

By Perficient, Inc. This document discusses big data tools and trends that enable real-time business intelligence from machine logs. It provides an overview of Perficient, a leading IT consulting firm, and introduces the speakers Eric Roch and Ben Hahn. It then covers what constitutes big data, how machine data is a source of big data, and how open source tools like Hadoop, Storm, and Elasticsearch, along with functional programming approaches like MapReduce, can be used to extract insights from machine data in real time. It also demonstrates a sample data analytics workflow using these tools.

Meta scale kognitio hadoop webinar

By Michael Hiskey. This webinar discusses tools for making big data easy to work with. It covers MetaScale Expertise, which provides Hadoop expertise and case studies. Kognitio Analytics is discussed as a way to accelerate Hadoop for organizations. The webinar agenda includes an introduction, presentations on MetaScale and Kognitio, and a question and answer session. Rethinking data strategies with Hadoop and using in-memory analytics are presented as ways to gain insights from large, diverse datasets.

Simple, Modular and Extensible Big Data Platform Concept

By Satish Mohan. A few slides outlining a simple, modular and extensible big data platform concept, leveraging the growing ecosystem.

Hadoop and SQL: Delivery Analytics Across the Organization

By Seeling Cheung. This document summarizes a presentation given by Nicholas Berg of Seagate and Adriana Zubiri of IBM on delivering analytics across organizations using Hadoop and SQL. Some key points discussed include Seagate's plans to use Hadoop to enable deeper analysis of factory and field data, the evolving Hadoop landscape and rise of SQL, and a performance comparison showing IBM's Big SQL outperforming Spark SQL, especially at scale. The document provides an overview of Seagate and IBM's strategies and experiences with Hadoop.

How the Development Bank of Singapore solves on-prem compute capacity challen...

By Alluxio, Inc. The Development Bank of Singapore (DBS) has evolved its data platforms over three generations to address big data challenges and the explosion of data. It now uses a hybrid cloud model with Alluxio to provide a unified namespace across on-prem and cloud storage for analytics workloads. Alluxio enables "zero-copy" cloud bursting by caching hot data and orchestrating analytics jobs between on-prem and cloud resources like AWS EMR and Google Dataproc. This provides dynamic scaling of compute capacity while retaining data locality. Alluxio also offers intelligent data tiering and policy-driven data migration to cloud storage over time for cost efficiency and management.

Testing Big Data: Automated Testing of Hadoop with QuerySurge

By RTTS. Are You Ready? Stepping Up To The Big Data Challenge In 2016. Learn why testing is pivotal to the success of your Big Data strategy.

According to a new report by analyst firm IDG, 70% of enterprises have either deployed or are planning to deploy big data projects and programs this year due to the increase in the amount of data they need to manage.

The growing variety of new data sources is pushing organizations to look for streamlined ways to manage complexities and get the most out of their data-related investments. The companies that do this correctly are realizing the power of big data for business expansion and growth.

Learn why testing your enterprise's data is pivotal for success with big data and Hadoop. Learn how to increase your testing speed, boost your testing coverage (up to 100%), and improve the level of quality within your data - all with one data testing tool.

Webinar: SQL for Machine Data?

By Crate.io. By 2020, 50% of all new software will process machine-generated data of some sort (Gartner). Historically, machine data use cases have required non-SQL data stores like Splunk, Elasticsearch, or InfluxDB.

Today, new SQL DB architectures rival the non-SQL solutions in ease of use, scalability, cost, and performance. Please join this webinar for a detailed comparison of machine data management approaches.

Simplifying Real-Time Architectures for IoT with Apache Kudu

By Cloudera, Inc. 3 Things to Learn About:

* Building scalable real-time architectures for managing data from IoT

* Processing data in real time with components such as Kudu & Spark

* Customer case studies highlighting real-time IoT use cases

Accelerating Big Data Analytics

By Attunity. The document discusses using Attunity Replicate to accelerate loading and integrating big data into Microsoft's Analytics Platform System (APS). Attunity Replicate provides real-time change data capture and high-performance data loading from various sources into APS. It offers a simplified and automated process for getting data into APS to enable analytics and business intelligence. Case studies are presented showing how major companies have used APS and Attunity Replicate to improve analytics and gain business insights from their data.

Gluent Extending Enterprise Applications with Hadoop

- 1. we liberate enterprise data

- 2. Extending Enterprise Applications with the Full Power of Hadoop. Tanel Poder, gluent.com

- 3. Gluent: who we are. Speaker: Tanel Poder, a long-time computer performance geek and co-founder & CEO of Gluent. Tanel also co-authored the Expert Oracle Exadata book. Long-term Oracle Database & Data Warehousing guys, focused on performance & scale. Alumni 2009-2016

- 4. Why Hadoop? • Super-scalable • Processing pushed close to data • Software-defined (open source) • Commodity hardware • No SAN storage bottlenecks • Open data formats • One data, many engines. Scalable & affordable at scale: • Yahoo: multiple 4000+ node Hadoop clusters • Facebook: 30 PB Hadoop cluster (in 2011!). 2017: Enterprise-ready: • Hadoop is secure … • … has management tools … • … and evolving fast

- 5. One Data, Many Engines! • Decoupling storage from compute + open data formats = flexible, future-proof data platforms! Diagram: storage and formats (HDFS with Parquet, ORC, XML, and Avro; Amazon S3 with Parquet and WebLog; Kudu column-store) accessed by many engines (Impala SQL, Hive SQL, Solr / Search, Spark, MR, Kudu API, libparquet, Xyz…).

- 6. But: • No complex transactions • No transactional “PL/SQL” • No very complex queries

- 7. Is Hadoop only for “Big Data”?

- 8.

- 9. Hadoop for traditional enterprise apps? Diagram: new “Big Data” applications alongside traditional enterprise applications.

- 10. How to connect all this data with enterprise applications? Diagram: new data (SaaS, IoT, Big Data) on modern data platforms on one side, core enterprise apps running on relational DBs on the other, and question marks where the two should connect.

- 11. Hybrid World!

- 12. Diagram: Gluent as the Hadoop/RDBMS connectivity layer, linking Oracle, PostgreSQL, Teradata, and MSSQL databases and their applications (App X, App Y, App Z) with IoT & Big Data through open data formats.

- 13. Hybrid World-related Vendors & Tools: • Gluent Data Platform (of course :-): No-ETL Data Sync (Data Offload to Hadoop) and Smart Connector (Transparent Data Query from Hadoop) • ETL & replication products: Informatica, Talend, Pentaho, etc.; Oracle GoldenGate, Attunity, Dbvisit, etc. • RDBMS->Hadoop query products: Teradata QueryGrid, Microsoft SQL Server PolyBase, Oracle Big Data SQL, IBM Big SQL • Native RDBMS database links & linked servers over ODBC, etc.

- 14. Gluent Demo: • 2-minute demo! • More technical details at: https://vimeo.com/196497024

- 15. Hybrid World Case Studies

- 16. Case Study 1 – IoT data within an existing RDBMS app

- 17. Securus: Satellite Tracking of People (STOP) VeriTracks Application (http://www.stopllc.com/). Challenge: how to • Scale the business? • Offer additional services? • Add additional data sources? • Embed predictive & advanced analytics and machine learning? • Cut cost at the same time?! • 150 TB dataset • Geospatial data • Kept in Oracle DB • Growing fast • Google Maps API • Near-real-time reaction • Long-term analytics

- 18. Securus: Satellite Tracking of People (STOP) VeriTracks Application

- 19. Securus STOP: Summary. 1. New analytics in existing apps immediately possible 2. Reduced cost 3. Move fast with low risk: don’t rewrite entire apps (the customer didn’t change a single line of code!)

- 21. Typical Application Story: Monolithic Data Model • A complex business application running on an RDBMS • Years of application development & improvement • Upstream & downstream dependencies • Terabytes of historical data (usually years of history) • Big queries run for too long or never complete (or are never tried) • Does not scale with modern demand • Way too expensive • Application rewrite very costly & risky, or virtually impossible. Diagram: the SALES table alongside Customers, Products, Preferences, Promotions, and Prices, all on RDBMS + SAN.

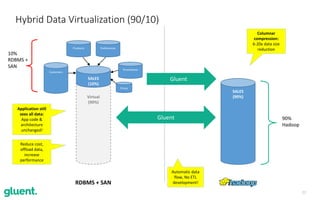

- 22. Hybrid Data Virtualization (90/10): Reduce cost, offload data, increase performance. Diagram: 10% of SALES stays on RDBMS + SAN (with Customers, Products, Preferences, Promotions, and Prices), 90% of SALES moves to Hadoop, and Gluent presents the offloaded 90% back to the database as virtual data. • Application still sees all data: app code & architecture unchanged! • Columnar compression: 6-20x data size reduction • Automatic data flow, no ETL development!

- 23. Hybrid Data Virtualization (100/10): Reduce cost and enable new analytics on Hadoop. Diagram: the database keeps 10% of SALES locally and sees the other 90% virtually through Gluent, while Hadoop holds 100% of SALES plus Customers, Products, Preferences, Promotions, and Prices, so new analytics & apps can run on Hadoop.

- 24. Hybrid Data Virtualization (Big Data/IoT): Data & compute virtualization: users query tables in databases, while the actual data & processing are in Hadoop. Diagram: WEB_VISITS exists only in Hadoop and appears in the database as a virtual table next to SALES, Customers, Products, Preferences, Promotions, and Prices.

- 25. Case Study 2 – Large Telecom: • Call Detail Records • Only 90 days of history • Offloaded 89 days

- 26. Case Study 2 – Large Telecom - Results

- 27. Case Study 2 – Large Telecom - Results: • Query elapsed times 36X faster on average in hybrid mode • Average Oracle CPU reduction of 87% in hybrid mode • Storage cost reduction ~100X: HDFS storage ~10x cheaper than SAN, and 11x compression due to columnar format (ORC) • 30 days < 2 minutes • 90 days ~3 minutes • Enabled completely new capabilities: the application owner wanted to query 1 year

- 28. Case Study 3 – Multi-Year Reports

- 29. Case Study 4 – Thousands of “Short” Queries: • Many different (generated) queries running for a few seconds each • We executed 5,500 APPX queries from AWR history using our tools • 50% reduction of CPU. Chart of total CPU seconds by schema: DATAMART (before) 7731, DATAMART_H (after, with hybrid query) 3846, a 50% CPU saving with hybrid query. Average CPU: 1.4 sec/exec before, 0.7 sec with hybrid query.

- 30. Hybrid Case Study 5 – EDW Offload: Shrink legacy cost footprint and increase performance without rewriting apps. Diagram: EDW apps on the EDW DB (Oracle), before and after; after, the EDW DB has transparent access to Hadoop with no-ETL data sync.

- 31. Hybrid Case Study 6 – Access IoT Data in Enterprise Apps: Hybrid queries over all enterprise data, with no need to rewrite existing apps. Diagram: EDW apps on the EDW DB (Oracle) with transparent access to smart meter data and call recordings in Hadoop.

- 32. Hybrid Case Study 7 – Data sharing platform (24 DBs). Diagram: App 1, App 2, … App 23, App 24, each on an Oracle DB (some holding CDR data), together with Hadoop.

- 33. Summary

- 34. Summary: • The Hybrid World is not “all-or-nothing” • Get the best of both worlds (RDBMS+Hadoop) • No data migration downtime & cutover needed • No need to rewrite your apps to take advantage of modern data platforms • No need to write ETL jobs to sync your data to Hadoop & Cloud. we liberate enterprise data

- 35. Gluent Advisor: Do you want to assess potential savings & opportunities with Gluent? https://gluent.com/products/gluent-advisor/

- 36. Thanks! + Q&A. http://gluent.com @gluent. we liberate enterprise data
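Slides 22 and 25 describe offloading older data while the application keeps issuing the same SQL. A minimal sketch of that idea, using only Python's built-in `sqlite3`, follows. It assumes a single SQLite database stands in for both the RDBMS and Hadoop, that the most recent day is the one kept locally (the slides say only that 89 of 90 days were offloaded), and that the original table name is re-exposed as a `UNION ALL` view. The table names `sales_recent` and `sales_offloaded` and the function `offload_and_virtualize` are hypothetical; this is not Gluent's implementation, which the slides do not describe at this level.

```python
import sqlite3
from datetime import date, timedelta


def offload_and_virtualize(conn: sqlite3.Connection, cutoff: str) -> None:
    """Move rows older than `cutoff` to an offload table and re-expose the
    original table name as a view over both parts (hypothetical names)."""
    cur = conn.cursor()
    # Keep the recent rows in the original table, under a new name.
    cur.execute("ALTER TABLE sales RENAME TO sales_recent")
    # Stand-in for the Hadoop side: an empty table with the same columns.
    cur.execute("CREATE TABLE sales_offloaded AS SELECT * FROM sales_recent WHERE 0")
    cur.execute("INSERT INTO sales_offloaded SELECT * FROM sales_recent WHERE sale_date < ?", (cutoff,))
    cur.execute("DELETE FROM sales_recent WHERE sale_date < ?", (cutoff,))
    # The name the application already queries now covers both parts.
    cur.execute(
        "CREATE VIEW sales AS "
        "SELECT * FROM sales_recent UNION ALL SELECT * FROM sales_offloaded"
    )
    conn.commit()


# 90 daily partitions of hypothetical data, one row per day.
latest = date(2017, 3, 31)
days = [latest - timedelta(days=i) for i in range(90)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [(d.isoformat(), 1) for d in days])

app_query = "SELECT COUNT(*), SUM(amount) FROM sales"  # unchanged application SQL
before = conn.execute(app_query).fetchone()
offload_and_virtualize(conn, cutoff=latest.isoformat())  # keep only the newest day local
after = conn.execute(app_query).fetchone()
offloaded_days = conn.execute("SELECT COUNT(DISTINCT sale_date) FROM sales_offloaded").fetchone()[0]
print(before, after, offloaded_days)  # (90, 90) (90, 90) 89
```

The application query returns the same count and total before and after the offload, while only one of the 90 daily partitions remains in the local table.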
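The headline figures in Case Studies 2 and 4 can be cross-checked with simple arithmetic. The sketch below assumes that the two storage factors multiply (cheaper storage per TB times fewer TBs after columnar compression) and that the CPU totals on slide 29 cover exactly one run of each of the 5,500 replayed queries; neither assumption is stated explicitly on the slides, and the function name is hypothetical.

```python
def combined_reduction(unit_cost_ratio: float, compression_ratio: float) -> float:
    """Storage cost reduction when each TB is cheaper AND the data needs fewer TBs."""
    return unit_cost_ratio * compression_ratio


# Case Study 2 (slide 27): HDFS ~10x cheaper than SAN, 11x ORC compression.
storage_reduction = combined_reduction(10, 11)

# Case Study 4 (slide 29): total CPU seconds for the replayed workload.
executions = 5_500
cpu_before, cpu_after = 7731, 3846  # DATAMART vs DATAMART_H (hybrid)
avg_before = cpu_before / executions
avg_after = cpu_after / executions
cpu_saving = 1 - cpu_after / cpu_before

print(storage_reduction)                                     # 110
print(f"{avg_before:.2f} {avg_after:.2f} {cpu_saving:.1%}")  # 1.41 0.70 50.3%
```

Under these assumptions, 10 × 11 = 110 is consistent with the rounded ~100X storage claim, and 7731 and 3846 CPU seconds over 5,500 executions reproduce the 1.4 and 0.7 sec/exec averages and the roughly 50% saving.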