Goldilocks and the Three MySQL Queries

0 likes512 views

Optimizing MySQL queries using explain or the optimizer tracer can greatly increase the speed of retrieving or storing data.

1 of 43

Downloaded 14 times

![EXPLAIN & EXPLAIN EXTENDED

EXPLAIN [EXTENDED | PARTITIONS]

{

SELECT statement

| DELETE statement

| INSERT statement

| REPLACE statement

| UPDATE statement

}

Or EXPLAIN tbl_name (same as DESCRIBE tbl_name)](https://image.slidesharecdn.com/goldilocks-120125170011-phpapp01/85/Goldilocks-and-the-Three-MySQL-Queries-13-320.jpg)

![Index Hints

Use only as a last resort –

index_hint:

shifts in data can make

USE {INDEX|KEY}

this the 'long way

[{FOR {JOIN|ORDER BY|

GROUP BY}] ([index_list]) around'.

| IGNORE {INDEX|KEY}

[{FOR {JOIN|ORDER BY|

GROUP BY}] (index_list)

| FORCE {INDEX|KEY}

[{FOR {JOIN|ORDER BY|

GROUP BY}] (index_list) http://dev.mysql.com/doc/refman/5.6/en/index-

hints.html](https://image.slidesharecdn.com/goldilocks-120125170011-phpapp01/85/Goldilocks-and-the-Three-MySQL-Queries-36-320.jpg)

Ad

Recommended

San diegophp

San diegophpDave Stokes Presentation given to San Diego PHP on August 2nd, 2012 on MySQL query tuning, programming best practices.

Explain that explain

Explain that explainFabrizio Parrella The document provides guidance on understanding and optimizing database performance. It emphasizes the importance of properly designing schemas, normalizing data, using appropriate data types, and creating useful indexes. Explain plans should be used to test queries and identify optimization opportunities like adding missing indexes. Overall, the document encourages developers to view the database as a collaborative "friend" rather than an enemy, by understanding its capabilities and limitations.

Optimizing MySQL Queries

Optimizing MySQL QueriesAchievers Tech This document provides a summary of MySQL indexes and how to use the EXPLAIN statement to analyze query performance. It defines what indexes are, the different types of indexes like B-tree, hash, and full-text indexes. It also explains concepts like compound indexes, covering indexes, and partial indexes. The document demonstrates how to use the EXPLAIN statement to view and understand a query execution plan, including analyzing the possible and actual indexes used, join types, number of rows examined, and index usage. It provides examples of interpreting EXPLAIN output and analyzing performance bottlenecks.

MySQL Query And Index Tuning

MySQL Query And Index TuningManikanda kumar This document discusses various strategies for optimizing MySQL queries and indexes, including:

- Using the slow query log and EXPLAIN statement to analyze slow queries.

- Avoiding correlated subqueries and issues in older MySQL versions.

- Choosing indexes based on selectivity and covering common queries.

- Identifying and addressing full table scans and duplicate indexes.

- Understanding the different join types and selecting optimal indexes.

Query Optimization with MySQL 5.6: Old and New Tricks - Percona Live London 2013

Query Optimization with MySQL 5.6: Old and New Tricks - Percona Live London 2013Jaime Crespo Tutorial delivered at Percona MySQL Conference Live London 2013.

It doesn't matter what new SSD technologies appear, or what are the latest breakthroughs in flushing algorithms: the number one cause for MySQL applications being slow is poor execution plan of SQL queries. While the latest GA version provided a huge amount of transparent optimizations -specially for JOINS and subqueries- it is still the developer's responsibility to take advantage of all new MySQL 5.6 features.

In this tutorial we will propose the attendants a sample PHP application with bad response time. Through practical examples, we will suggest step-by-step strategies to improve its performance, including:

* Checking MySQL & InnoDB configuration

* Internal (performance_schema) and external tools for profiling (pt-query-digest)

* New EXPLAIN tools

* Simple and multiple column indexing

* Covering index technique

* Index condition pushdown

* Batch key access

* Subquery optimization

Mysql Explain Explained

Mysql Explain ExplainedJeremy Coates Adrian Hardy's slides from PHPNW08

Once you have your query returning the correct results, speed becomes an important factor. Speed can either be an issue from the outset, or can creep in as your dataset grows. Understanding the EXPLAIN command is essential to helping you solve and even anticipate slow queries.

Associated video: http://blip.tv/file/1791781

How to analyze and tune sql queries for better performance percona15

How to analyze and tune sql queries for better performance percona15oysteing The document discusses how to analyze and tune MySQL queries for better performance. It covers several key topics:

1) The MySQL optimizer selects the most efficient access method (e.g. table scan, index scan) based on a cost model that estimates I/O and CPU costs.

2) The join optimizer searches for the lowest-cost join order by evaluating partial plans in a depth-first manner and pruning less promising plans.

3) Tools like the performance schema provide query history and statistics to analyze queries and monitor performance bottlenecks like disk I/O.

4) Indexes, rewriting queries, and query hints can influence the optimizer to select a better execution plan.

MySQL: Indexing for Better Performance

MySQL: Indexing for Better Performancejkeriaki This document discusses indexing in MySQL databases to improve query performance. It begins by defining an index as a data structure that speeds up data retrieval from databases. It then covers various types of indexes like primary keys, unique indexes, and different indexing algorithms like B-Tree, hash, and full text. The document discusses when to create indexes, such as on columns frequently used in queries like WHERE clauses. It also covers multi-column indexes, partial indexes, and indexes to support sorting, joining tables, and avoiding full table scans. The concepts of cardinality and selectivity are introduced. The document concludes with a discussion of index overhead and using EXPLAIN to view query execution plans and index usage.

Oracle SQL, PL/SQL best practices

Oracle SQL, PL/SQL best practicesSmitha Padmanabhan The document provides guidelines for optimizing SQL and PL/SQL code for performance. It discusses best practices for using indexes like creating them on frequently queried columns and avoiding functions on indexed columns. Other topics covered include using EXISTS instead of JOINs when possible, avoiding DISTINCT, and placing filters in the WHERE clause instead of HAVING. Modular code design, avoiding negatives and LIKE patterns, and letting the optimizer do its work are also recommended. The goal is to help programmers write efficient code by understanding query execution and tuning techniques.

How mysql choose the execution plan

How mysql choose the execution plan辛鹤 李 The document discusses how MySQL chooses query execution plans and the importance of indexing for performance. It covers the MySQL optimizer, tools for analyzing queries like EXPLAIN and TRACE, and techniques like index condition pushdown that push conditions to the storage engine. The document uses examples and a quiz to illustrate indexing concepts and how the optimizer works in MySQL.

Oracle views

Oracle viewsBalqees Al.Mubarak This document discusses database views in Oracle. It defines what views are and how they present data differently than the underlying tables. It describes the differences between simple views with one table and complex views with functions or multiple tables. It provides the syntax for creating views and examples of creating both simple and complex views. It also covers modifying views, using the WITH CHECK OPTION for data integrity, making views read-only to prevent DML operations, and dropping views.

Webinar 2013 advanced_query_tuning

Webinar 2013 advanced_query_tuning晓 周 Advanced MySQL Query Tuning from

Percona Database Performance for share.

https://www.youtube.com/watch?v=TPFibi2G_oo

Explaining the explain_plan

Explaining the explain_planarief12H This document discusses execution plans in Oracle databases. It begins by defining an execution plan as the detailed steps the optimizer uses to execute a SQL statement, expressed as database operators. It then covers how to generate plans using EXPLAIN PLAN or V$SQL_PLAN, what constitutes a good plan for the optimizer in terms of cost and performance, and key aspects of plans including cardinality, access paths, join types, and join order. Examples are provided to illustrate each concept.

MySQL index optimization techniques

MySQL index optimization techniqueskumar gaurav This document discusses various techniques for optimizing MySQL indexes, including:

- Ensuring indexes have good selectivity on fields and composite indexes are ordered optimally

- Using prefix indexes that take up less space and are faster than whole column indexes

- Explaining query execution plans using EXPLAIN to identify optimal indexes

- Using hints like USE INDEX, IGNORE INDEX, and STRAIGHT_JOIN to influence the optimizer

- Analyzing the slow query log and general query log to identify queries that need optimization

MySQL Indexing : Improving Query Performance Using Index (Covering Index)

MySQL Indexing : Improving Query Performance Using Index (Covering Index)Hemant Kumar Singh The document discusses improving query performance in databases using indexes. It explains what indexes are and the different types of indexes including column, composite, and covering indexes. It provides examples of how to create indexes on single and multiple columns and how the order of columns matters. The document also discusses factors that affect database performance and guidelines for index usage and size optimization.

01 oracle architecture

01 oracle architectureSmitha Padmanabhan Oracle Architecture in regards to writing better performing queries.

Understand Oracle Architecture

Database Buffer Cache

Shared Pool

Write better performing queries

Subqueries -Oracle DataBase

Subqueries -Oracle DataBaseSalman Memon After completing this lesson, you should be able to

do the following:

Describe the types of problem that subqueries can solve

Define subqueries

List the types of subqueries

Write single-row and multiple-row subqueries

http://phpexecutor.com

Sub query_SQL

Sub query_SQLCoT A subquery is a SELECT statement embedded within another SQL statement. It allows queries to retrieve data from multiple tables or queries. There are two types of subqueries: single-row and multiple-row. Single-row subqueries return only one row of data and use single-row comparison operators like =. Multiple-row subqueries return more than one row of data and use operators like IN, ANY, ALL that can handle multiple values. Subqueries are useful for solving problems that require performing multiple related queries by nesting one query within another.

How to Analyze and Tune MySQL Queries for Better Performance

How to Analyze and Tune MySQL Queries for Better Performanceoysteing The document discusses techniques for optimizing MySQL queries for better performance. It covers topics like cost-based query optimization in MySQL, selecting optimal data access methods like indexes, the join optimizer, subquery optimizations, and tools for monitoring and analyzing queries. The presentation agenda includes introductions to index selection, join optimization, subquery optimizations, ordering and aggregation, and influencing the optimizer. Examples are provided to illustrate index selection, ref access analysis, and the range optimizer.

Lab5 sub query

Lab5 sub queryBalqees Al.Mubarak A sub-query is a query embedded within another SQL query. The sub-query executes first and returns its results to the outer query. Sub-queries can be used to filter records in the outer query based on conditions determined by the sub-query results. Sub-queries can return single rows, multiple rows, or multiple columns and are used with operators like =, >, IN to relate the outer and inner queries.

Views, Triggers, Functions, Stored Procedures, Indexing and Joins

Views, Triggers, Functions, Stored Procedures, Indexing and Joinsbaabtra.com - No. 1 supplier of quality freshers The document provides information about various SQL concepts like views, triggers, functions, indexes, and joins. It defines views as virtual tables created by queries on other tables. Triggers are blocks of code that execute due to data modification language statements on tables. Functions allow reusable code and improve clarity. Indexes speed up searches by allowing fast data retrieval. Joins combine data from two or more tables based on relationships between columns. Stored procedures are SQL statements with an assigned name that are stored for shared use.

MySQL Index Cookbook

MySQL Index CookbookMYXPLAIN This document provides an overview of indexing in MySQL. It begins with definitions of terminology like B-Trees, keys, indexes, and clustering. It then covers topics like primary keys, compound indexes, and optimization techniques. Use cases are presented to demonstrate how to design indexes for different querying needs, such as normalization, geospatial queries, and pagination. The document aims to explain indexing concepts and help the reader design efficient indexes.

Oracle query optimizer

Oracle query optimizerSmitha Padmanabhan The document discusses the Oracle query optimizer. It describes the key components and steps of the optimizer including the query transformer, query estimator, and plan generator. The query transformer rewrites queries for better performance through techniques like view merging, predicate pushing, and subquery unnesting. The query estimator calculates selectivity, cardinality, and cost to determine the overall cost of execution plans. The plan generator explores access paths, join methods, and join orders to select the lowest cost plan.

Nested Queries Lecture

Nested Queries LectureFelipe Costa This document provides an overview of nested queries in SQL, including examples and explanations of:

- What nested queries are and how they are structured using subqueries

- How to write nested queries using operators like IN, EXISTS, and correlated subqueries

- Examples of nested queries for SELECT, UPDATE, DELETE, and the FROM clause using data on country fertility rates

- Advantages of nested queries like readability and ability to isolate parts of statements

plsql les01

plsql les01sasa_eldoby 1. The document describes how to create and use stored procedures in Oracle, including defining parameters, parameter passing modes, and developing procedures.

2. Key aspects of procedures are that they promote reusability and maintainability, are created using the CREATE PROCEDURE statement, and can accept parameters to communicate data between the calling environment and the procedure.

3. Parameters can be defined using modes like IN, OUT, and IN OUT to determine how data is passed into and out of a procedure.

SQL subquery

SQL subqueryVikas Gupta The document discusses subqueries in SQL. It defines a subquery as a SELECT statement embedded within another SELECT statement. Subqueries allow queries to be built from simpler statements by executing an inner query and using its results to inform the conditions of the outer query. The key aspects covered are: subqueries can be used in the WHERE, HAVING, FROM and other clauses; single-row subqueries use single-value operators while multiple-row subqueries use operators like ANY and ALL; and subqueries execute before the outer query to provide their results.

Myth busters - performance tuning 101 2007

Myth busters - performance tuning 101 2007paulguerin The document discusses various techniques for optimizing database performance in Oracle, including:

- Using the cost-based optimizer (CBO) to choose the most efficient execution plan based on statistics and hints.

- Creating appropriate indexes on columns used in predicates and queries to reduce I/O and sorting.

- Applying constraints and coding practices like limiting returned rows to improve query performance.

- Tuning SQL statements through techniques like predicate selectivity, removing unnecessary objects, and leveraging indexes.

MySQL Optimizer Cost Model

MySQL Optimizer Cost ModelOlav Sandstå The cost model is one of the core components of the MySQL optimizer. This presentation gives an overview over the MySQL Optimizer Cost Model, what is new in 5.7 and some ideas for further improvements.

Sql no sql

Sql no sqlDave Stokes The document discusses how SQL and NoSQL databases can work together for big data. It provides an overview of relational databases based on Codd's rules and how NoSQL databases are used for less structured data like documents and graphs. Examples of using MongoDB and Hadoop are provided. The document also discusses using MySQL with memcached to get the benefits of both SQL and NoSQL for accessing data.

My SQL 101

My SQL 101Dave Stokes MySQL is a widely used open-source relational database management system. The presentation covered how to install, configure, start, stop, and connect to MySQL. It also discussed how to load and view data, backup databases, set up user authentication, and where to go for additional training resources. Common MySQL commands and tools were demonstrated.

Ad

More Related Content

What's hot (20)

Oracle SQL, PL/SQL best practices

Oracle SQL, PL/SQL best practicesSmitha Padmanabhan The document provides guidelines for optimizing SQL and PL/SQL code for performance. It discusses best practices for using indexes like creating them on frequently queried columns and avoiding functions on indexed columns. Other topics covered include using EXISTS instead of JOINs when possible, avoiding DISTINCT, and placing filters in the WHERE clause instead of HAVING. Modular code design, avoiding negatives and LIKE patterns, and letting the optimizer do its work are also recommended. The goal is to help programmers write efficient code by understanding query execution and tuning techniques.

How mysql choose the execution plan

How mysql choose the execution plan辛鹤 李 The document discusses how MySQL chooses query execution plans and the importance of indexing for performance. It covers the MySQL optimizer, tools for analyzing queries like EXPLAIN and TRACE, and techniques like index condition pushdown that push conditions to the storage engine. The document uses examples and a quiz to illustrate indexing concepts and how the optimizer works in MySQL.

Oracle views

Oracle viewsBalqees Al.Mubarak This document discusses database views in Oracle. It defines what views are and how they present data differently than the underlying tables. It describes the differences between simple views with one table and complex views with functions or multiple tables. It provides the syntax for creating views and examples of creating both simple and complex views. It also covers modifying views, using the WITH CHECK OPTION for data integrity, making views read-only to prevent DML operations, and dropping views.

Webinar 2013 advanced_query_tuning

Webinar 2013 advanced_query_tuning晓 周 Advanced MySQL Query Tuning from

Percona Database Performance for share.

https://www.youtube.com/watch?v=TPFibi2G_oo

Explaining the explain_plan

Explaining the explain_planarief12H This document discusses execution plans in Oracle databases. It begins by defining an execution plan as the detailed steps the optimizer uses to execute a SQL statement, expressed as database operators. It then covers how to generate plans using EXPLAIN PLAN or V$SQL_PLAN, what constitutes a good plan for the optimizer in terms of cost and performance, and key aspects of plans including cardinality, access paths, join types, and join order. Examples are provided to illustrate each concept.

MySQL index optimization techniques

MySQL index optimization techniqueskumar gaurav This document discusses various techniques for optimizing MySQL indexes, including:

- Ensuring indexes have good selectivity on fields and composite indexes are ordered optimally

- Using prefix indexes that take up less space and are faster than whole column indexes

- Explaining query execution plans using EXPLAIN to identify optimal indexes

- Using hints like USE INDEX, IGNORE INDEX, and STRAIGHT_JOIN to influence the optimizer

- Analyzing the slow query log and general query log to identify queries that need optimization

MySQL Indexing : Improving Query Performance Using Index (Covering Index)

MySQL Indexing : Improving Query Performance Using Index (Covering Index)Hemant Kumar Singh The document discusses improving query performance in databases using indexes. It explains what indexes are and the different types of indexes including column, composite, and covering indexes. It provides examples of how to create indexes on single and multiple columns and how the order of columns matters. The document also discusses factors that affect database performance and guidelines for index usage and size optimization.

01 oracle architecture

01 oracle architectureSmitha Padmanabhan Oracle Architecture in regards to writing better performing queries.

Understand Oracle Architecture

Database Buffer Cache

Shared Pool

Write better performing queries

Subqueries -Oracle DataBase

Subqueries -Oracle DataBaseSalman Memon After completing this lesson, you should be able to

do the following:

Describe the types of problem that subqueries can solve

Define subqueries

List the types of subqueries

Write single-row and multiple-row subqueries

http://phpexecutor.com

Sub query_SQL

Sub query_SQLCoT A subquery is a SELECT statement embedded within another SQL statement. It allows queries to retrieve data from multiple tables or queries. There are two types of subqueries: single-row and multiple-row. Single-row subqueries return only one row of data and use single-row comparison operators like =. Multiple-row subqueries return more than one row of data and use operators like IN, ANY, ALL that can handle multiple values. Subqueries are useful for solving problems that require performing multiple related queries by nesting one query within another.

How to Analyze and Tune MySQL Queries for Better Performance

How to Analyze and Tune MySQL Queries for Better Performanceoysteing The document discusses techniques for optimizing MySQL queries for better performance. It covers topics like cost-based query optimization in MySQL, selecting optimal data access methods like indexes, the join optimizer, subquery optimizations, and tools for monitoring and analyzing queries. The presentation agenda includes introductions to index selection, join optimization, subquery optimizations, ordering and aggregation, and influencing the optimizer. Examples are provided to illustrate index selection, ref access analysis, and the range optimizer.

Lab5 sub query

Lab5 sub queryBalqees Al.Mubarak A sub-query is a query embedded within another SQL query. The sub-query executes first and returns its results to the outer query. Sub-queries can be used to filter records in the outer query based on conditions determined by the sub-query results. Sub-queries can return single rows, multiple rows, or multiple columns and are used with operators like =, >, IN to relate the outer and inner queries.

Views, Triggers, Functions, Stored Procedures, Indexing and Joins

Views, Triggers, Functions, Stored Procedures, Indexing and Joinsbaabtra.com - No. 1 supplier of quality freshers The document provides information about various SQL concepts like views, triggers, functions, indexes, and joins. It defines views as virtual tables created by queries on other tables. Triggers are blocks of code that execute due to data modification language statements on tables. Functions allow reusable code and improve clarity. Indexes speed up searches by allowing fast data retrieval. Joins combine data from two or more tables based on relationships between columns. Stored procedures are SQL statements with an assigned name that are stored for shared use.

MySQL Index Cookbook

MySQL Index CookbookMYXPLAIN This document provides an overview of indexing in MySQL. It begins with definitions of terminology like B-Trees, keys, indexes, and clustering. It then covers topics like primary keys, compound indexes, and optimization techniques. Use cases are presented to demonstrate how to design indexes for different querying needs, such as normalization, geospatial queries, and pagination. The document aims to explain indexing concepts and help the reader design efficient indexes.

Oracle query optimizer

Oracle query optimizerSmitha Padmanabhan The document discusses the Oracle query optimizer. It describes the key components and steps of the optimizer including the query transformer, query estimator, and plan generator. The query transformer rewrites queries for better performance through techniques like view merging, predicate pushing, and subquery unnesting. The query estimator calculates selectivity, cardinality, and cost to determine the overall cost of execution plans. The plan generator explores access paths, join methods, and join orders to select the lowest cost plan.

Nested Queries Lecture

Nested Queries LectureFelipe Costa This document provides an overview of nested queries in SQL, including examples and explanations of:

- What nested queries are and how they are structured using subqueries

- How to write nested queries using operators like IN, EXISTS, and correlated subqueries

- Examples of nested queries for SELECT, UPDATE, DELETE, and the FROM clause using data on country fertility rates

- Advantages of nested queries like readability and ability to isolate parts of statements

plsql les01

plsql les01sasa_eldoby 1. The document describes how to create and use stored procedures in Oracle, including defining parameters, parameter passing modes, and developing procedures.

2. Key aspects of procedures are that they promote reusability and maintainability, are created using the CREATE PROCEDURE statement, and can accept parameters to communicate data between the calling environment and the procedure.

3. Parameters can be defined using modes like IN, OUT, and IN OUT to determine how data is passed into and out of a procedure.

SQL subquery

SQL subqueryVikas Gupta The document discusses subqueries in SQL. It defines a subquery as a SELECT statement embedded within another SELECT statement. Subqueries allow queries to be built from simpler statements by executing an inner query and using its results to inform the conditions of the outer query. The key aspects covered are: subqueries can be used in the WHERE, HAVING, FROM and other clauses; single-row subqueries use single-value operators while multiple-row subqueries use operators like ANY and ALL; and subqueries execute before the outer query to provide their results.

Myth busters - performance tuning 101 2007

Myth busters - performance tuning 101 2007paulguerin The document discusses various techniques for optimizing database performance in Oracle, including:

- Using the cost-based optimizer (CBO) to choose the most efficient execution plan based on statistics and hints.

- Creating appropriate indexes on columns used in predicates and queries to reduce I/O and sorting.

- Applying constraints and coding practices like limiting returned rows to improve query performance.

- Tuning SQL statements through techniques like predicate selectivity, removing unnecessary objects, and leveraging indexes.

MySQL Optimizer Cost Model

MySQL Optimizer Cost ModelOlav Sandstå The cost model is one of the core components of the MySQL optimizer. This presentation gives an overview over the MySQL Optimizer Cost Model, what is new in 5.7 and some ideas for further improvements.

Views, Triggers, Functions, Stored Procedures, Indexing and Joins

Views, Triggers, Functions, Stored Procedures, Indexing and Joinsbaabtra.com - No. 1 supplier of quality freshers

Viewers also liked (8)

Sql no sql

Sql no sqlDave Stokes The document discusses how SQL and NoSQL databases can work together for big data. It provides an overview of relational databases based on Codd's rules and how NoSQL databases are used for less structured data like documents and graphs. Examples of using MongoDB and Hadoop are provided. The document also discusses using MySQL with memcached to get the benefits of both SQL and NoSQL for accessing data.

My SQL 101

My SQL 101Dave Stokes MySQL is a widely used open-source relational database management system. The presentation covered how to install, configure, start, stop, and connect to MySQL. It also discussed how to load and view data, backup databases, set up user authentication, and where to go for additional training resources. Common MySQL commands and tools were demonstrated.

MySQL 5.6 Updates

MySQL 5.6 UpdatesDave Stokes This document provides an overview and summary of updates and new features in MySQL 5.6:

- MySQL 5.6 improves performance, scalability, instrumentation, transactional throughput, availability, and flexibility compared to previous versions.

- Key areas of focus include improvements to InnoDB for transactional workloads, replication for high availability and data integrity, and the optimizer for better performance and diagnostics.

- New features in MySQL 5.6 include enhanced replication utilities for high availability, improved subquery and index optimizations in the query optimizer, and expanded performance schema instrumentation for database profiling.

Better sq lqueries

Better sq lqueriesDave Stokes This document contains slides from a presentation on writing better MySQL queries for beginners. The presentation covers SQL history and syntax, data storage and types, table design, indexes, query monitoring and optimization. It emphasizes selecting only necessary columns, using appropriate data types, normalizing tables, indexing columns used in WHERE clauses, and monitoring queries to optimize performance. Resources for learning more about MySQL are provided at the end.

Scaling MySQl 1 to N Servers -- Los Angelese MySQL User Group Feb 2014

Scaling MySQl 1 to N Servers -- Los Angelese MySQL User Group Feb 2014Dave Stokes The document discusses various options for scaling MySQL databases to handle increasing load. It begins with simple options like upgrading MySQL versions, adding caching layers, and read/write splitting. More complex and reliable options include using MySQL replication, cloud hosting, MySQL Cluster, and columnar storage engines. Scaling to very large "big data" workloads may involve using NoSQL technologies, Hadoop, and data partitioning/sharding. The key challenges discussed are defining business and technical requirements, planning for high availability, and managing increased complexity.

MySQL 5.7 New Features to Exploit -- PHPTek/Chicago MySQL User Group May 2014

MySQL 5.7 New Features to Exploit -- PHPTek/Chicago MySQL User Group May 2014Dave Stokes MySQL 5.7 is on the way and this presentation outlines the changes and how to best take advantage of them. Presentations May 2014 to PHPTek and Chicago MySQL User Group.

MySQL Query Tuning for the Squeemish -- Fossetcon Orlando Sep 2014

MySQL Query Tuning for the Squeemish -- Fossetcon Orlando Sep 2014Dave Stokes An introduction to MySQL query tuning for those who are new to the subject. This is not a dark art but a skill that can be developed.

MySql's NoSQL -- best of both worlds on the same disks

MySql's NoSQL -- best of both worlds on the same disksDave Stokes The document discusses MySQL's implementation of NoSQL capabilities within its traditional SQL database. MySQL 5.6 introduced a Memcached plugin that allows for fast, non-SQL key-value access to data stored in InnoDB tables. This provides the speed of NoSQL with the ACID compliance and crash recovery of SQL. The plugin can be installed and configured, then data accessed from either Memcached clients or SQL. This allows MySQL to serve as both a traditional SQL database and a NoSQL store.

Ad

Similar to Goldilocks and the Three MySQL Queries (20)

Brad McGehee Intepreting Execution Plans Mar09

Brad McGehee Intepreting Execution Plans Mar09guest9d79e073 Brad McGehee's presentation on "How to Interpret Query Execution Plans in SQL Server 2005/2008".

Presented to the San Francisco SQL Server User Group on March 11, 2009.

Brad McGehee Intepreting Execution Plans Mar09

Brad McGehee Intepreting Execution Plans Mar09Mark Ginnebaugh Brad McGehee on "How to Interpret Query Execution Plans in SQL Server 2005/2008".

Presented to the San Francisco SQL Server User Group in March 2009.

Myth busters - performance tuning 102 2008

Myth busters - performance tuning 102 2008paulguerin The document provides an overview of various techniques for optimizing database and application performance. It discusses fundamentals like minimizing logical I/O, balancing workload, and serial processing. It also covers the cost-based optimizer, column constraints and indexes, SQL tuning tips, subqueries vs joins, and non-SQL issues like undo storage and data migrations. Key recommendations include using column constraints, focusing on serial processing per table, and not over-relying on statistics to solve all performance problems.

Sql scripting sorcerypaper

Sql scripting sorcerypaperoracle documents oracle foreign key primary key constraints performance tuning MTS IOT 9i block size backup rman corrupted column drop rename recovery controlfile backup clone architecture database archives export dump dmp duplicate rows extents segments fragmentation hot cold blobs migration tablespace locally managed redo undo new features rollback ora-1555 shrink free space user password link TNS tnsnames.ora listener java shutdown sequence

15 Ways to Kill Your Mysql Application Performance

15 Ways to Kill Your Mysql Application Performanceguest9912e5 Jay is the North American Community Relations Manager at MySQL. Author of Pro MySQL, Jay has also written articles for Linux Magazine and regularly assists software developers in identifying how to make the most effective use of MySQL. He has given sessions on performance tuning at the MySQL Users Conference, RedHat Summit, NY PHP Conference, OSCON and Ohio LinuxFest, among others.In his abundant free time, when not being pestered by his two needy cats and two noisy dogs, he daydreams in PHP code and ponders the ramifications of __clone().

Introduction to Databases - query optimizations for MySQL

Introduction to Databases - query optimizations for MySQLMárton Kodok This document provides information about relational and non-relational databases. It discusses key aspects of relational databases including tables made of columns and rows with defined relationships and constraints. It also covers non-relational databases (NoSQL) including key-value stores, document databases, graph databases, and wide-column stores. The document compares SQL to NoSQL and discusses spreadsheet/frontend programs versus databases. It also covers database definition language, structured query language, indexing, and performance optimization techniques.

Advanced MySQL Query Optimizations

Advanced MySQL Query OptimizationsDave Stokes This presentation is an INTRODUCTION to intermediate MySQL query optimization for the Audience of PHP World 2017. It covers some of the more intricate features in a cursory overview.

Basics on SQL queries

Basics on SQL queriesKnoldus Inc. SQL(Structured Query Language) is a ANSI standard language for accessing and manipulating relational databases. It includes database creation, deletion, fetching rows, modifying rows, etc. All the Relational Database Management Systems (RDMS) like MySQL, MS Access, Oracle, Sybase, Informix, Postgres and SQL Server use SQL as their standard database language.

Mohan Testing

Mohan Testingsmittal81 Use EXPLAIN to profile query execution plans. Optimize queries by using proper indexes, limiting unnecessary DISTINCT and ORDER BY clauses, batching INSERTs, and avoiding correlated subqueries. Know your storage engines and choose the best one for your data needs. Monitor configuration variables, indexes, and queries to ensure optimal performance. Design schemas thoughtfully with normalization and denormalization in mind.

Presentation interpreting execution plans for sql statements

Presentation interpreting execution plans for sql statementsxKinAnx Download & Share Technology

Presentations http://goo.gl/k80oY0

Student Guide & Best http://goo.gl/6OkI77

MySQL Performance Optimization

MySQL Performance OptimizationMindfire Solutions This presentation is all about the factors that directly affects the query performance/scaling and how on would tackle them.

My SQL Skills Killed the Server

My SQL Skills Killed the ServerdevObjective This document discusses SQL skills and how queries can negatively impact server performance if not written efficiently. It covers topics like query plans, execution contexts, using parameters, indexing, handling large datasets, and external influences on SQL performance. Specific "bad" SQL examples are also provided and explained. The presenter's goal is to help developers optimize their SQL and prevent poorly written queries from bringing servers to their knees.

Sql killedserver

Sql killedserverColdFusionConference This document discusses SQL skills and how queries can negatively impact server performance if not written efficiently. It covers topics like query plans, execution contexts, using parameters, indexing, handling large datasets, and external influences on SQL performance. Specific "bad" SQL examples are also provided and analyzed. The presenter aims to help developers optimize their SQL and prevent poorly written queries from bringing servers to their knees.

Conquering "big data": An introduction to shard query

Conquering "big data": An introduction to shard queryJustin Swanhart Shard-Query is a middleware solution that enables massively parallel query execution for MySQL databases. It works by splitting single SQL queries into multiple smaller queries or tasks that can run concurrently on one or more database servers. This allows it to scale out query processing across more CPUs and servers for improved performance on large datasets and analytics workloads. It supports both partitioning tables for parallelism within a single server as well as sharding tables across multiple servers. The end result is that it can enable MySQL to perform like other parallel database solutions through distributed query processing.

Top 10 Oracle SQL tuning tips

Top 10 Oracle SQL tuning tipsNirav Shah Design and develop with performance in mind

Establish a tuning environment

Index wisely

Reduce parsing

Take advantage of Cost Based Optimizer

Avoid accidental table scans

Optimize necessary table scans

Optimize joins

Use array processing

Consider PL/SQL for “tricky” SQL

Tunning sql query

Tunning sql queryvuhaininh88 This document discusses various techniques for analyzing and tuning SQL queries to improve performance. It covers measurement methods like EXPLAIN and slow logs, database design optimizations like normalization and index usage, optimizing WHERE conditions to use indexes, choosing the best access methods, and join optimization techniques. Specific strategies mentioned include changing WHERE conditions to utilize indexes more efficiently, using STRAIGHT_JOIN to control join order, and optimizing queries that use filesort or joins vs subqueries.

Query parameterization

Query parameterizationRiteshkiit This document discusses various techniques for optimizing SQL queries in SQL Server, including:

1) Using parameterized queries instead of ad-hoc queries to avoid compilation overhead and improve plan caching.

2) Ensuring optimal ordering of predicates in the WHERE clause and creating appropriate indexes to enable index seeks.

3) Understanding how the query optimizer works by estimating cardinality based on statistics and choosing low-cost execution plans.

4) Avoiding parameter sniffing issues and non-deterministic expressions that prevent accurate cardinality estimation.

5) Using features like the Database Tuning Advisor and query profiling tools to identify optimization opportunities.

How to Fine-Tune Performance Using Amazon Redshift

How to Fine-Tune Performance Using Amazon RedshiftAWS Germany How to fine-tune the performance on Amazon Redshift to meet your requirements. Best practices and recommendations.

Cost Based Oracle

Cost Based OracleSantosh Kangane There are NO thumb Rules in Oracle. Different Versions of Oracle and data Patterns Drives the SQL performance !!!!

This presentation is just to introduce as to How CBO workouts the SQL plans That probably will help you to find what is suitable for given SQL.

How you write a SQL, it matters !!!!

MySQL Scaling Presentation

MySQL Scaling PresentationTommy Falgout This document provides strategies for optimizing MySQL performance as databases grow in complexity and size. It discusses ways to optimize queries, schemas, hardware, software configuration, caching, and monitoring. The key lessons are to optimize queries, choose appropriate data types and storage engines, configure MySQL and cache settings properly, benchmark and monitor performance, and scale reads and writes separately.

Ad

More from Dave Stokes (20)

Json within a relational database

Json within a relational databaseDave Stokes The document discusses using JSON documents within a relational database. It provides examples of storing JSON documents in MySQL collections and querying them using both document and SQL syntax. Key points include:

- JSON documents can be stored in MySQL collections, providing a flexible document data model while retaining MySQL's reliability and ACID transactions.

- Documents can be queried using either document queries or by converting the JSON to relational form using JSON_TABLE, allowing the use of SQL.

- Examples are shown in several languages for CRUD operations on collections as well as indexing, validation, and more advanced queries like aggregating data from arrays.

- Storing JSON documents natively in MySQL allows leveraging both document and rel

Database basics for new-ish developers -- All Things Open October 18th 2021

Database basics for new-ish developers -- All Things Open October 18th 2021Dave Stokes Do you wonder why it takes your database to find the top five of your fifty six million customers? Do you really have a good idea of what NULL is and how to use it? And why are some database queries so quick and others frustratingly slow? Relational databases have been around for over fifty years and frustrating developers for at least forty nine of those years. This session is an attempt to explain why sometimes the database seems very fast and other times not. You will learn how to set up data (normalization) to avoid redundancies into tables by their function, how to join two tables to combine data, and why Structured Query Language is so very different than most other languages. And you will see how thinking in sets over records can greatly improve your life with a database.

Php & my sql - how do pdo, mysq-li, and x devapi do what they do

Php & my sql - how do pdo, mysq-li, and x devapi do what they doDave Stokes PHP & MySQL -- How do PDO, MySQLi, and X DevAPI Do What They Do -- Longhorn PHP Conference October 15th, 2021

Longhorn PHP - MySQL Indexes, Histograms, Locking Options, and Other Ways to ...

Longhorn PHP - MySQL Indexes, Histograms, Locking Options, and Other Ways to ...Dave Stokes This document discusses various ways to speed up queries in MySQL, including the proper use of indexes, histograms, and locking options. It begins with an introduction to indexes, explaining that indexes are data structures that improve the speed of data retrieval by allowing for faster lookups and access to ordered records. The document then covers different types of indexes like clustered indexes, secondary indexes, functional indexes, and multi-value indexes. It emphasizes choosing indexes carefully based on the most common queries and selecting columns that are not often updated. Overall, the document provides an overview of optimization techniques in MySQL with a focus on index usage.

MySQL 8.0 New Features -- September 27th presentation for Open Source Summit

MySQL 8.0 New Features -- September 27th presentation for Open Source SummitDave Stokes MySQL 8.0 has many new features that you probably need to know about but don't. Like default security, window functions, CTEs, CATS (not what you think), JSON_TABLE(), and UTF8MB4 support.

JavaScript and Friends August 20th, 20201 -- MySQL Shell and JavaScript

JavaScript and Friends August 20th, 20201 -- MySQL Shell and JavaScriptDave Stokes The MySQL Shell has a JavaScript mode where you can use JS libraries to access you data and you can also write (and save) your own custom reports (or programs) for future use.

Open Source World June '21 -- JSON Within a Relational Database

Open Source World June '21 -- JSON Within a Relational DatabaseDave Stokes How does free form JSON work with a strictly typed relational database? This session will cover how MySQL and JSON give you the best of both worlds

Dutch PHP Conference 2021 - MySQL Indexes and Histograms

Dutch PHP Conference 2021 - MySQL Indexes and HistogramsDave Stokes This document discusses how to speed up queries in MySQL through the proper use of indexes, histograms, and other techniques. It begins by explaining that the MySQL optimizer tries to determine the most efficient way to execute queries by considering different query plans. The optimizer relies on statistics about column distributions to estimate query costs. The document then discusses using EXPLAIN to view and analyze query plans, and how indexes can improve query performance by allowing faster data retrieval through secondary indexes and other index types. Proper index selection and column data types are important to allow the optimizer to use indexes efficiently.

Validating JSON -- Percona Live 2021 presentation

Validating JSON -- Percona Live 2021 presentationDave Stokes JSON is a free form data exchange format which can cause problems when combined with a strictly typed relational database. Thanks to the folks at https://json-schema.org and the MySQL engineers at Oracle we can no specify required fields, type checks, and range checks.

Midwest PHP Presentation - New MSQL Features

Midwest PHP Presentation - New MSQL FeaturesDave Stokes 22 April 2021 presentation for the MidWest PHP conference on New Features in MySQL 8.0 with updates on the newly released 8.0.24

Data Love Conference - Window Functions for Database Analytics

Data Love Conference - Window Functions for Database AnalyticsDave Stokes 16 April 2021 presentation for the Data Love Conference on Window Functions for Data Base Analytics. Examples are on MySQL but will work for other RDMS's with window functions. Assumes no user background on window functions or analytics

Open Source 1010 and Quest InSync presentations March 30th, 2021 on MySQL Ind...

Open Source 1010 and Quest InSync presentations March 30th, 2021 on MySQL Ind...Dave Stokes Speeding up queries on a MySQL server with indexes and histograms is not a mysterious art but simple engineering. This presentation is an indepth introduction that was presented on March 30th to the Quest Insynch and Open Source 101 conferences

Confoo 2021 -- MySQL New Features

Confoo 2021 -- MySQL New FeaturesDave Stokes Confoo.ca conference talk February 24th 2021 on MySQL new features found in version 8.0 including server and supporting utility updates for those who may have missed some really neat new features

Confoo 2021 - MySQL Indexes & Histograms

Confoo 2021 - MySQL Indexes & HistogramsDave Stokes Confoo 2021 presentation on MySQL Indexes, Histograms, and other ways to speed up your queries. This slide deck has slides that may not have been included in the presentation that were omitted due to time constraints

Datacon LA - MySQL without the SQL - Oh my!

Datacon LA - MySQL without the SQL - Oh my! Dave Stokes My presentations from Datacon LA 202 on using MySQL as a NoSQL JSON document store as well as a traditional relation database

MySQL Replication Update - DEbconf 2020 presentation

MySQL Replication Update - DEbconf 2020 presentationDave Stokes MySQL InnoDB Cluster and ReplicaSet are big advancements in providing a safe way to keep you data happy & healthy!

MySQL 8.0 Operational Changes

MySQL 8.0 Operational ChangesDave Stokes MySQL 8.0 introduces new features like resource groups to dedicate server resources to different query classes. It has a faster backup process using MySQL Shell utilities and compression of replication logs. The presentation provides an overview of InnoDB Cluster which allows multi-primary replication topologies and automated failover using Group Replication. It demonstrates how to easily set up a basic 3 node InnoDB Cluster on the local machine for testing using the MySQL Shell. MySQL Router can then be used to route application connections to the cluster for load balancing and high availability without application changes.

cPanel now supports MySQL 8.0 - My Top Seven Features

cPanel now supports MySQL 8.0 - My Top Seven FeaturesDave Stokes This is a very brief overview for cPanel customers on MySQL 8.0 and these are my top 7 (seven) new features.

A Step by Step Introduction to the MySQL Document Store

A Step by Step Introduction to the MySQL Document StoreDave Stokes Looking for a fast, flexible NoSQL document store? And one that runs with the power and reliability of MySQL. This is an intro on how to use the MySQL Document Store

Discover The Power of NoSQL + MySQL with MySQL

Discover The Power of NoSQL + MySQL with MySQLDave Stokes The document discusses the MySQL Document Store, which provides both NoSQL and SQL capabilities on a single platform. It allows for schemaless document storage and querying using JSON documents, while also providing the reliability, security and transaction support of MySQL. Examples are given in several programming languages of basic CRUD operations on document collections using simple APIs that avoid the need for SQL. The document also shows how JSON documents can be queried using SQL/JSON functions, enabling more complex analysis that was previously only possible in a relational database. This provides the best aspects of both NoSQL and SQL on a proven database platform.

Recently uploaded (20)

Technology Trends in 2025: AI and Big Data Analytics

Technology Trends in 2025: AI and Big Data AnalyticsInData Labs At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

Semantic Cultivators : The Critical Future Role to Enable AI

Semantic Cultivators : The Critical Future Role to Enable AIartmondano By 2026, AI agents will consume 10x more enterprise data than humans, but with none of the contextual understanding that prevents catastrophic misinterpretations.

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value- driven proprietary service offering here.

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager API

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager APIUiPathCommunity Join this UiPath Community Berlin meetup to explore the Orchestrator API, Swagger interface, and the Test Manager API. Learn how to leverage these tools to streamline automation, enhance testing, and integrate more efficiently with UiPath. Perfect for developers, testers, and automation enthusiasts!

📕 Agenda

Welcome & Introductions

Orchestrator API Overview

Exploring the Swagger Interface

Test Manager API Highlights

Streamlining Automation & Testing with APIs (Demo)

Q&A and Open Discussion

Perfect for developers, testers, and automation enthusiasts!

👉 Join our UiPath Community Berlin chapter: https://community.uipath.com/berlin/

This session streamed live on April 29, 2025, 18:00 CET.

Check out all our upcoming UiPath Community sessions at https://community.uipath.com/events/.

TrsLabs - Fintech Product & Business Consulting

TrsLabs - Fintech Product & Business ConsultingTrs Labs Hybrid Growth Mandate Model with TrsLabs

Strategic Investments, Inorganic Growth, Business Model Pivoting are critical activities that business don't do/change everyday. In cases like this, it may benefit your business to choose a temporary external consultant.

An unbiased plan driven by clearcut deliverables, market dynamics and without the influence of your internal office equations empower business leaders to make right choices.

Getting things done within a budget within a timeframe is key to Growing Business - No matter whether you are a start-up or a big company

Talk to us & Unlock the competitive advantage

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptx

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptxJustin Reock Building 10x Organizations with Modern Productivity Metrics

10x developers may be a myth, but 10x organizations are very real, as proven by the influential study performed in the 1980s, ‘The Coding War Games.’

Right now, here in early 2025, we seem to be experiencing YAPP (Yet Another Productivity Philosophy), and that philosophy is converging on developer experience. It seems that with every new method we invent for the delivery of products, whether physical or virtual, we reinvent productivity philosophies to go alongside them.

But which of these approaches actually work? DORA? SPACE? DevEx? What should we invest in and create urgency behind today, so that we don’t find ourselves having the same discussion again in a decade?

Drupalcamp Finland – Measuring Front-end Energy Consumption

Drupalcamp Finland – Measuring Front-end Energy ConsumptionExove How to measure web front-end energy consumption using Firefox Profiler. Presented in DrupalCamp Finland on April 25th, 2025.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...TrustArc Most consumers believe they’re making informed decisions about their personal data—adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

Rusty Waters: Elevating Lakehouses Beyond Spark

Rusty Waters: Elevating Lakehouses Beyond Sparkcarlyakerly1 Spark is a powerhouse for large datasets, but when it comes to smaller data workloads, its overhead can sometimes slow things down. What if you could achieve high performance and efficiency without the need for Spark?

At S&P Global Commodity Insights, having a complete view of global energy and commodities markets enables customers to make data-driven decisions with confidence and create long-term, sustainable value. 🌍

Explore delta-rs + CDC and how these open-source innovations power lightweight, high-performance data applications beyond Spark! 🚀

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner 𝙄𝙨 𝘼𝙄 𝙟𝙪𝙨𝙩 𝙝𝙮𝙥𝙚? 𝙊𝙧 𝙞𝙨 𝙞𝙩 𝙩𝙝𝙚 𝙜𝙖𝙢𝙚 𝙘𝙝𝙖𝙣𝙜𝙚𝙧 𝙮𝙤𝙪𝙧 𝙗𝙪𝙨𝙞𝙣𝙚𝙨𝙨 𝙣𝙚𝙚𝙙𝙨?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know 𝗵𝗼𝘄.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

tecnologias de las primeras civilizaciones.pdf

tecnologias de las primeras civilizaciones.pdffjgm517 descaripcion detallada del avance de las tecnologias en mesopotamia, egipto, roma y grecia.

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep Dive

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep DiveScyllaDB Want to learn practical tips for designing systems that can scale efficiently without compromising speed?

Join us for a workshop where we’ll address these challenges head-on and explore how to architect low-latency systems using Rust. During this free interactive workshop oriented for developers, engineers, and architects, we’ll cover how Rust’s unique language features and the Tokio async runtime enable high-performance application development.

As you explore key principles of designing low-latency systems with Rust, you will learn how to:

- Create and compile a real-world app with Rust

- Connect the application to ScyllaDB (NoSQL data store)

- Negotiate tradeoffs related to data modeling and querying

- Manage and monitor the database for consistently low latencies

Into The Box Conference Keynote Day 1 (ITB2025)

Into The Box Conference Keynote Day 1 (ITB2025)Ortus Solutions, Corp This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In France

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In Francechb3 The latest updates on Manifest's pre-seed stage progress.

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...SOFTTECHHUB I started my online journey with several hosting services before stumbling upon Ai EngineHost. At first, the idea of paying one fee and getting lifetime access seemed too good to pass up. The platform is built on reliable US-based servers, ensuring your projects run at high speeds and remain safe. Let me take you step by step through its benefits and features as I explain why this hosting solution is a perfect fit for digital entrepreneurs.

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...BookNet Canada Book industry standards are evolving rapidly. In the first part of this session, we’ll share an overview of key developments from 2024 and the early months of 2025. Then, BookNet’s resident standards expert, Tom Richardson, and CEO, Lauren Stewart, have a forward-looking conversation about what’s next.

Link to recording, presentation slides, and accompanying resource: https://bnctechforum.ca/sessions/standardsgoals-for-2025-standards-certification-roundup/

Presented by BookNet Canada on May 6, 2025 with support from the Department of Canadian Heritage.

Massive Power Outage Hits Spain, Portugal, and France: Causes, Impact, and On...

Massive Power Outage Hits Spain, Portugal, and France: Causes, Impact, and On...Aqusag Technologies In late April 2025, a significant portion of Europe, particularly Spain, Portugal, and parts of southern France, experienced widespread, rolling power outages that continue to affect millions of residents, businesses, and infrastructure systems.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and educations, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

Generative Artificial Intelligence (GenAI) in Business

Generative Artificial Intelligence (GenAI) in BusinessDr. Tathagat Varma My talk for the Indian School of Business (ISB) Emerging Leaders Program Cohort 9. In this talk, I discussed key issues around adoption of GenAI in business - benefits, opportunities and limitations. I also discussed how my research on Theory of Cognitive Chasms helps address some of these issues

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdf

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdfAbi john Analyze the growth of meme coins from mere online jokes to potential assets in the digital economy. Explore the community, culture, and utility as they elevate themselves to a new era in cryptocurrency.

Goldilocks and the Three MySQL Queries

- 1. Goldilocks And The Three Queries – MySQL's EXPLAIN Explained Dave Stokes MySQL Community Manager, North America [email protected]

- 2. Please Read The following is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle.

- 3. Simple Introduction EXPLAIN & EXPLAIN EXTENDED are tools to help optimize queries. As tools there are only as good as the crafts persons using them. There is more to this subject than can be covered here in a single presentation. But hopefully this session will start you out on the right path for using EXPLAIN.

- 4. Why worry about the optimizer? Client sends statement to server Server checks the query cache to see if it has already run statement. If so, it retrieves stored result and sends it back to the Client. Statement is parsed, preprocessed and optimized to make a Query Execution Plan. The query execution engine sends the QEP to the storage engine API. Results sent to the Client.

- 5. Once upon a time ... There was a PHP Programmer named Goldilocks who wanted to get the phone number of her friend Little Red Riding Hood in Networking’s phone number. She found an old, dusty piece of code in the enchanted programmers library. Inside the code was a special chant to get all the names and phone numbers of the employees of Grimm-Fayre-Tails Corp. And so, Goldi tried that special chant! SELECT name, phone FROM employees;

- 6. Oh-No! But the special chant kept running, and running, and running. Eventually Goldi control-C-ed when she realized that Grimm hired many, many folks after hearing that the company had 10^10 employees in the database.

- 7. A second chant Goldi did some searching in the library and learned she could add to the chant to look only for her friend Red. SELECT name, phone FROM employees WHERE name LIKE 'Red%'; Goldi crossed her fingers, held her breath, and let 'er rip.

- 8. What she got Name, phone Redford 1234 Redmund 2323 Redlegs 1234 Red Sox 1914 Redding 9021 – But this was not what Goldilocks needed. So she asked a kindly old Java Owl for help

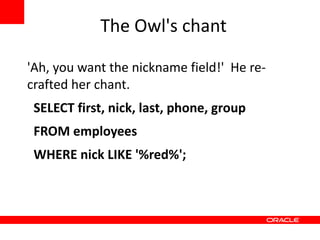

- 9. The Owl's chant 'Ah, you want the nickname field!' He re- crafted her chant. SELECT first, nick, last, phone, group FROM employees WHERE nick LIKE '%red%';

- 10. Still too much data … but better Betty, Big Red, Lopez, 4321, Accounting Ethel, Little Red, Riding-Hoode, 127.0.0.1, Networks Agatha, Red Herring, Christie, 007, Public Relations Johnny, Reds Catcher, Bench, 421, Gaming

- 11. 'We can tune the query better' Cried the Owl. SELECT first, nick, name, phone, group WHERE nick LIKE 'Red%' AND group = 'Networking'; But Goldi was too busy after she got the data she needed to listen.

- 12. The preceding were obviously flawed queries • But how do you check if queries are running efficiently? • What does the query the MySQL server runs really look like? (the dreaded Query Execution Plan). What is cost based optimization? • How can you make queries faster?

- 13. EXPLAIN & EXPLAIN EXTENDED EXPLAIN [EXTENDED | PARTITIONS] { SELECT statement | DELETE statement | INSERT statement | REPLACE statement | UPDATE statement } Or EXPLAIN tbl_name (same as DESCRIBE tbl_name)

- 14. What is being EXPLAINed Prepending EXPLAIN to a statement* asks the optimizer how it would plan to execute that statement (and sometimes it guesses wrong) at lowest cost (measures in disk page seeks*). What it can tell you: --Where to add INDEXes to speed row access --Check JOIN order And Optimizer Tracing (more later) has been recently introduced! * SELECT, DELETE, INSERT, REPLACE & UPDATE as of 5.6, only SELECT 5.5 & previous * Does not know if page is in memory, on disk (storage engine's problem, not optimizer), see MySQL Manual 7.8.3

- 15. The Columns id Which SELECT select_type The SELECT type table Output row table type JOIN type possible_keys Potential indexes key Actual index used key_ken Length of actual index ref Columns used against index rows Estimate of rows extra Additional Info

- 16. A first look at EXPLAIN ...using World database Will read all 4079 rows – all the rows in this table

- 17. EXPLAIN EXTENDED -> query plan Filtered: Estimated % of rows filtered By condition The query as seen by server (kind of, sort of, close)

- 18. Add in a WHERE clause

- 19. Time for a quick review of indexes Advantages Disadvantages – Go right to desired – Overhead* row(s) instead of • CRUD reading ALL – Not used on full table ROWS scans – Smaller than whole table (read from disk faster) * May need to run – Can 'carry' other data ANALYZE TABLE to update statistics such as with compound cardinality to help optimizer indexes make better choices

- 20. Quiz: Why read 4079 rows when only five are needed?

- 21. Information in the type Column ALL – full table scan (to be avoided when possible) CONST – WHERE ID=1 EQ_REF – WHERE a.ID = b.ID (uses indexes, 1 row returned) REF – WHERE state='CA' (multiple rows for key values) REF_OR_NULL – WHERE ID IS NULL (extra lookup needed for NULL) INDEX_MERGE – WHERE ID = 10 OR state = 'CA' RANGE – WHERE x IN (10,20,30) INDEX – (usually faster when index file < data file) UNIQUE_SUBQUERY – INDEX-SUBQUERY – SYSTEM – Table with 1 row or in-memory table

- 22. Full table scans VS Index So lets create a copy of the World.City table that has no indexes. The optimizer estimates that it would require 4,279 rows to be read to find the desired record – 5% more than actual rows. And the table has only 4,079 rows.

- 23. How does NULL change things? Taking NOT NULL away from the ID field (plus the previous index) increases the estimated rows read to 4296! Roughly 5.5% more rows than actual in file. Running ANALYZE TABLE reduces the count to 3816 – still > 1

- 24. Both of the following return 1 row

- 25. EXPLAIN PARTITIONS - Add 12 hash partitions to City

- 26. Some parts of your query may be hidden!!

- 27. Latin1 versus UTF8 Create a copy of the City table but with UTF8 character set replacing Latin1. The three character key_len grows to nine characters. That is more data to read and more to compare which is pronounced 'slower'.

- 28. INDEX Length If a new index on CountryCode with length of 2 bytes, does it work as well as the original 3 bytes?

- 29. Forcing use of new shorter index ... Still generates a guesstimate that 39 rows must be read. In some cases there is performance to be gained in using shorter indexes.

- 30. Subqueries Run as part of EXPLAIN execution and may cause significant overhead. So be careful when testing. Note here that #1 is not using an index. And that is why we recommend rewriting sub queries as joins.

- 31. EXAMPLE of covering Indexing In this case, adding an index reduces the reads from 239 to 42. Can we do better for this query?

- 32. Index on both Continent and Government Form With both Continent and GovernmentForm indexed together, we go from 42 rows read to 19. Using index means the data is retrieved from index not table (good) Using index condition means eval pushed down to storage engine. This can reduce storage engine read of table and server reads of storage engine (not bad)

- 33. Extra *** USING INDEX – Getting data from the index rather than the table USING FILESORT – Sorting was needed rather than using an index. Uses file system (slow) ORDER BY can use indexes USING TEMPORARY – A temp table was created – see tmp_table_size and max_heap_table_size USING WHERE – filter outside storage engine Using Join Buffer -- means no index used.

- 34. Things can get messy!

- 35. straight_join forces order of tables

- 36. Index Hints Use only as a last resort – index_hint: shifts in data can make USE {INDEX|KEY} this the 'long way [{FOR {JOIN|ORDER BY| GROUP BY}] ([index_list]) around'. | IGNORE {INDEX|KEY} [{FOR {JOIN|ORDER BY| GROUP BY}] (index_list) | FORCE {INDEX|KEY} [{FOR {JOIN|ORDER BY| GROUP BY}] (index_list) http://dev.mysql.com/doc/refman/5.6/en/index- hints.html

- 37. Controlling the Optimizer mysql> SELECT @@optimizer_switchG You can turn on or off *************************** 1. row *************************** certain optimizer @@optimizer_switch: index_merge=on,index_merge_union=on, settings for index_merge_sort_union=on, GLOBAL or index_merge_intersection=on, SESSION engine_condition_pushdown=on, index_condition_pushdown=on, mrr=on,mrr_cost_based=on, See MySQL Manual block_nested_loop=on,batched_key_access=off 7.8.4.2 and know your mileage may vary.

- 38. Things to watch mysqladmin -r -i 10 extended-status Slow_queries – number in last period Select_scan – full table scans Select_full_join full scans to complete Created_tmp_disk_tables – file sorts Key_read_requerts/Key_wrtie_requests – read/write weighting of application, may need to modify application

- 39. Optimizer Tracing (6.5.3 onward) SET optimizer_trace="enabled=on"; SELECT Name FROM City WHERE ID=999; SELECT trace into dumpfile '/tmp/foo' FROM INFORMATION_SCHEMA.OPTIMIZER_TRACE; Shows more logic than EXPLAIN The output shows much deeper detail on how the optimizer chooses to process a query. This level of detail is well past the level for this presentation.

- 40. Sample from the trace – but no clues on optimizing for Joe Average DBA

- 41. Final Thoughts 1. READ chapter 7 of the MySQL Manual 2. Run ANALYZE TABLE periodically 3. Minimize disk I/o

- 42. Q&A