Grails And The Semantic Web

In this presentation, we describe the underlying principles of the Semantic Web, its core concepts and technologies, and how they fit in with the Grails framework and existing tools, APIs, and implementations.
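
To make the core concepts concrete, here is a minimal sketch of the RDF data model in plain Java: statements are subject-predicate-object triples, a graph is a set of them, and a query matches triple patterns. It assumes no RDF library; `TinyGraph`, `Triple`, and the `ex:` terms are hypothetical, and a Grails application would more likely delegate this work to a JVM library such as Apache Jena or Eclipse RDF4J.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// One RDF statement; terms are plain strings such as "ex:grails" in this sketch.
record Triple(String s, String p, String o) {}

// Hypothetical in-memory graph, not an API from Grails, Jena, or RDF4J.
class TinyGraph {
    private final Set<Triple> triples = new LinkedHashSet<>();

    // RDF graphs are sets, so adding the same statement twice has no effect.
    void add(String s, String p, String o) {
        triples.add(new Triple(s, p, o));
    }

    int size() {
        return triples.size();
    }

    // Match one triple pattern; a null position plays the role of a SPARQL variable.
    List<Triple> match(String s, String p, String o) {
        return triples.stream()
                .filter(t -> s == null || t.s().equals(s))
                .filter(t -> p == null || t.p().equals(p))
                .filter(t -> o == null || t.o().equals(o))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        TinyGraph g = new TinyGraph();
        g.add("ex:grails", "rdf:type", "ex:WebFramework");
        g.add("ex:grails", "ex:writtenIn", "ex:Groovy");
        g.add("ex:grails", "rdf:type", "ex:WebFramework"); // duplicate, ignored by the set
        // Roughly the pattern of: SELECT ?p ?o WHERE { ex:grails ?p ?o }
        for (Triple t : g.match("ex:grails", null, null)) {
            System.out.println(t.p() + " " + t.o());
        }
    }
}
```

Using a set rather than a list mirrors the fact that an RDF graph cannot contain the same triple twice; a full SPARQL engine would also join several patterns and bind named variables.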

Recommended

Harnessing The Semantic Web

By william_greenly. This document discusses harnessing the Semantic Web. It begins by addressing common misconceptions about the Semantic Web, then outlines key use cases such as query federation and linking data. It explains core concepts such as HTTP, URIs, RDF, RDFS, OWL, and SPARQL, and provides examples of RDF triples and SPARQL queries. It also discusses embedding RDF in HTML using RDFa, reviews the current Semantic Web landscape, and presents a case study of a car options ontology at VW.co.uk.

Linked Data, Ontologies and Inference

By Barry Norton. The document discusses Linked Data, ontologies, and inference. It provides examples of using RDFS and OWL to infer new facts from schemas and ontologies. Key points include:

- Linked Data uses URIs and HTTP to identify things and provide useful information about them via standards like RDF and SPARQL.

- Projects like LOD aim to develop best practices for publishing interlinked open datasets. FactForge and LinkedLifeData are examples that contain billions of statements across life science and general knowledge datasets.

- RDFS and OWL allow defining schemas and ontologies that enable new facts to be inferred through reasoning. For example, the rdfs:domain and rdfs:range properties allow type information to be inferred.
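
The rdfs:domain and rdfs:range inference described in this summary can be sketched in plain Java. This is a minimal illustration of only two RDFS entailment rules, rdfs2 (domain) and rdfs3 (range), assuming triples are plain strings; the class and the `ex:` vocabulary are hypothetical, and a real project would use a reasoner from a library such as Apache Jena or Eclipse RDF4J.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// One RDF statement with string terms (literals and IRIs are not distinguished here).
record Stmt(String s, String p, String o) {}

class RdfsDomainRange {
    static final String TYPE = "rdf:type";
    static final String DOMAIN = "rdfs:domain";
    static final String RANGE = "rdfs:range";

    // Repeatedly applies rdfs2 (domain) and rdfs3 (range) until nothing new is derived.
    static Set<Stmt> infer(Set<Stmt> input) {
        Set<Stmt> closure = new LinkedHashSet<>(input);
        boolean changed = true;
        while (changed) {
            List<Stmt> derived = new ArrayList<>();
            for (Stmt schema : closure) {
                boolean isDomain = schema.p().equals(DOMAIN);
                boolean isRange = schema.p().equals(RANGE);
                if (!isDomain && !isRange) {
                    continue;
                }
                for (Stmt data : closure) {
                    if (data.p().equals(schema.s())) {
                        // rdfs2 types the subject; rdfs3 types the object.
                        String typed = isDomain ? data.s() : data.o();
                        derived.add(new Stmt(typed, TYPE, schema.o()));
                    }
                }
            }
            // addAll reports whether any derived triple was actually new.
            changed = closure.addAll(derived);
        }
        return closure;
    }

    public static void main(String[] args) {
        Set<Stmt> graph = new LinkedHashSet<>(List.of(
                new Stmt("ex:hasAuthor", DOMAIN, "ex:Book"),
                new Stmt("ex:hasAuthor", RANGE, "ex:Person"),
                new Stmt("ex:book1", "ex:hasAuthor", "ex:alice")));
        for (Stmt t : infer(graph)) {
            System.out.println(t);
        }
    }
}
```

From the single data triple, the sketch derives that `ex:book1` is an `ex:Book` and `ex:alice` is an `ex:Person`, even though neither type was stated explicitly.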

Semantic web for ontology chapter4 bynk

By Namgee Lee. An introduction to the Semantic Web based on the book "Semantic Web for the Working Ontologist" by Dean Allemang and Jim Hendler.

Publishing Linked Data 3/5 Semtech2011

By Juan Sequeda. This document summarizes techniques for publishing Linked Data on the web. It discusses publishing static RDF files, embedding RDF in HTML using RDFa, linking to other URIs, generating Linked Data from relational databases using RDB2RDF tools, publishing Linked Data from triplestores and APIs, hosting Linked Data in the cloud, and testing Linked Data quality.
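
Publishing a static RDF file can be as simple as writing statements in a line-based serialization. The following is a minimal sketch, assuming the N-Triples format (one `<subject> <predicate> <object> .` statement per line) and only IRIs and plain string literals; `NTriplesWriter` and the example.org IRIs are hypothetical, while the Dublin Core `title` and `subject` terms are real vocabulary. Blank nodes, language tags, datatypes, and IRI escaping are deliberately left out.

```java
import java.util.List;

// Hypothetical helper that writes a flat list of statements as N-Triples text.
class NTriplesWriter {
    // An object term is either an IRI or a plain string literal in this sketch.
    record Term(String value, boolean literal) {
        static Term iri(String v) { return new Term(v, false); }
        static Term lit(String v) { return new Term(v, true); }
    }

    // Subject and predicate are always IRIs here (no blank nodes).
    record Statement(String subject, String predicate, Term object) {}

    // Escape backslash first so the later escapes are not doubled.
    static String escape(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r");
    }

    static String format(Term t) {
        return t.literal() ? "\"" + escape(t.value()) + "\"" : "<" + t.value() + ">";
    }

    // One statement per line, each terminated by " ." as N-Triples requires.
    static String write(List<Statement> statements) {
        StringBuilder sb = new StringBuilder();
        for (Statement st : statements) {
            sb.append('<').append(st.subject()).append("> ")
              .append('<').append(st.predicate()).append("> ")
              .append(format(st.object()))
              .append(" .\n");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<Statement> data = List.of(
                new Statement("http://example.org/talks/grails-semweb",
                        "http://purl.org/dc/terms/title",
                        Term.lit("Grails And The Semantic Web")),
                new Statement("http://example.org/talks/grails-semweb",
                        "http://purl.org/dc/terms/subject",
                        Term.iri("http://example.org/topics/rdf")));
        System.out.print(write(data));
    }
}
```

Because N-Triples has no prefixes or nesting, a file like this can be generated with simple string handling and served as a static resource; richer formats such as Turtle or RDF/XML are easier to produce with a library.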

Eclipse RDF4J - Working with RDF in Java

By Jeen Broekstra. Jeen Broekstra is a principal engineer at metaphacts GmbH and the project lead for Eclipse RDF4J. RDF4J is a modular collection of Java libraries for working with RDF data, including reading, writing, storing, querying, and updating RDF databases or remote SPARQL endpoints. It provides a vendor-neutral API and tools such as RDF4J Server, Workbench, and Console. RDF4J aims to support modern Java versions while focusing on SHACL, SPARQL 1.2, and experimental RDF* and SPARQL* features.

Tutorial "An Introduction to SPARQL and Queries over Linked Data" Chapter 1 (...

By Olaf Hartig. These are the slides from my ICWE 2012 tutorial "An Introduction to SPARQL and Queries over Linked Data".

Tutorial "An Introduction to SPARQL and Queries over Linked Data" Chapter 3 (...

By Olaf Hartig. These are the slides from my ICWE 2012 tutorial "An Introduction to SPARQL and Queries over Linked Data".

SPARQL Query Forms

By Leigh Dodds. There are four SPARQL query forms: SELECT, ASK, CONSTRUCT, and DESCRIBE, each serving a different purpose. SELECT returns variable bindings and is analogous to an SQL SELECT query. ASK returns a boolean indicating whether a pattern matches. CONSTRUCT returns an RDF graph built from a template. DESCRIBE returns an RDF graph describing the resources found. Beyond these basic uses, the forms can be used for tasks such as indexing, transformation, validation, and prototyping user interfaces.
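
The difference in result shape between the query forms can be illustrated without a SPARQL engine. This is a hypothetical plain-Java sketch over a single triple pattern `?s <p> ?o`; `QueryForms` and its methods are invented for illustration. DESCRIBE is omitted because the SPARQL specification leaves the content of its result graph to the query service.

```java
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.stream.Collectors;

record Triple(String s, String p, String o) {}

// Hypothetical illustration of result shapes; not a SPARQL engine.
class QueryForms {
    // SELECT-like: for the pattern { ?s <p> ?o }, return one binding map per match.
    static List<Map<String, String>> select(Collection<Triple> g, String p) {
        return g.stream()
                .filter(t -> t.p().equals(p))
                .map(t -> Map.of("s", t.s(), "o", t.o()))
                .collect(Collectors.toList());
    }

    // ASK-like: only report whether the pattern matches at least once.
    static boolean ask(Collection<Triple> g, String p) {
        return g.stream().anyMatch(t -> t.p().equals(p));
    }

    // CONSTRUCT-like: instantiate the template { ?o <newP> ?s } once per solution, yielding a graph.
    static Set<Triple> construct(Collection<Triple> g, String p, String newP) {
        return select(g, p).stream()
                .map(b -> new Triple(b.get("o"), newP, b.get("s")))
                .collect(Collectors.toCollection(LinkedHashSet::new));
    }

    public static void main(String[] args) {
        List<Triple> g = List.of(
                new Triple("ex:alice", "foaf:knows", "ex:bob"),
                new Triple("ex:bob", "foaf:name", "Bob"));
        System.out.println(select(g, "foaf:knows"));
        System.out.println(ask(g, "foaf:mbox"));
        System.out.println(construct(g, "foaf:knows", "ex:knownBy"));
    }
}
```

The same match produces a table of bindings, a single boolean, or a new set of triples depending on the form, which is why CONSTRUCT is the natural choice for transformation tasks and ASK for quick validation checks.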

Normalizing Data for Migrations

By Kyle Banerjee. Simple techniques for preparing data for migration without programming skills and for fixing problems such as carriage returns in delimited data.

The Semantic Web You Create (あなたが創るセマンティックウェブ)

By Yasuhisa Hasegawa. I gave a talk promoting the Semantic Web from a somewhat unusual angle, using almost none of the specialist vocabulary such as RDF or ontology.

http://www.yasuhisa.com/could/diary/websig-semanticweb/

VALA Tech Camp 2017: Intro to Wikidata & SPARQL

By Jane Frazier. A hands-on introduction to querying Wikidata content using SPARQL, the query language for data represented in RDF, SKOS, OWL, and other Semantic Web standards.

Co-presented with Peter Neish, Research Data Specialist at the University of Melbourne.

Getting Started With The Talis Platform

By Leigh Dodds. A developer training session providing an overview of the core features and services of the Talis Platform, including a basic overview of REST and RDF.

Data Integration And Visualization

By Ivan Ermilov. The document discusses data discovery, conversion, integration, and visualization using RDF. It covers ontologies, vocabularies, and data catalogs, and the conversion of CSV, XML, and relational data to RDF. It also discusses federated SPARQL queries for integrating data from multiple sources, and techniques for visualizing Linked Data, including relationships, events, and multidimensional data.

LinkML presentation to Yosemite Group

By Chris Mungall. LinkML is a modeling language for building semantic models that can represent biomedical and other scientific knowledge. It can generate various schemas and representations, such as OWL, JSON Schema, and GraphQL, from a single model specification. Its key advantages include simplicity (models are written as YAML files), the ability to represent models in multiple forms such as JSON, RDF, and property graphs, and "stealth semantics", where semantic representations like RDF are generated behind the scenes.

Linked Data - Exposing what we have

By Richard Wallis. The document discusses exposing library holdings data on the web as Linked Data. It notes that OCLC has exposed over 300 million resources using Schema.org, RDFa, and links to controlled vocabularies, with the data available in formats such as RDF/XML, JSON-LD, and Turtle. BIBFRAME is presented as the new standard for bibliographic description that allows library data to be shared as part of the web. Libraries are encouraged to make their resources discoverable on the web of data by linking to other institutions and authorities.

Open Source: Liberating your systems

By Richard Wallis. Presentation at the Breaking the Barriers 2009 (Open Source in Libraries) conference.

London 18th May 2009

Yann Nicolas - Elag 2018 : From XML to MARC

By ABES. The document discusses the ABES agency's work in collecting, normalizing, enriching, and sharing bibliographic metadata from sources such as XML files, MARC records, and Linked Open Data using RDF. It focuses on four use cases: linking print and ebook metadata, linking documents to authority records, linking articles to controlled vocabularies, and linking book chapters to concepts. The work involves transforming different data formats into RDF and linking entities across multiple data graphs. Challenges discussed include balancing data model flexibility against technological choices and workflows, and ensuring two-way communication between RDF processing and cataloguing activities.

From XML to MARC. RDF behind the scenes.

By Y. Nicolas [ELAG Conference, 2018]

We collect heterogeneous metadata packages from various publishers. Although all of them are in XML, they vary greatly in vocabulary, structure, granularity, precision, and accuracy. It is quite a challenge to cope with this jungle and recycle it to meet the needs of the Sudoc, the French academic union cataloguing system.

How can we integrate and enrich this metadata? How can we integrate it so that it is processed in a regular way rather than through ad hoc processes? How can we link it with specific or generic controlled vocabularies? How can we enrich it with author identifiers, for instance?

RDF looks like the ideal solution for integration and enrichment. The metadata is stored in a Virtuoso RDF database and processed through a workflow steered by an Oracle database. We will illustrate this generic solution with Oxford UP metadata: ONIX records for printed books and KBART package descriptions for ebooks.

PLNOG 6: Piotr Modzelewski, Bartłomiej Rymarski - Product Catalogue - Case Study

By PROIDEA. This document appears to be a product catalogue containing case studies on various technologies. It discusses Thrift for generating code across multiple languages, Cassandra and Solr for scalable storage and search, and MogileFS for distributed file storage. For each technology, it provides an overview, usage examples, and implementation and performance considerations. It aims to show how to build scalable services quickly with these open-source tools.

Metadata - Linked Data

By Richard Wallis. Presentation to the NISO/BISG 7th Annual Forum.

At ALA - Chicago 28th June 2013

#ala2013 #nisobisg07

20 billion triples in production

By Ioan Toma. The document discusses the UniProt SPARQL endpoint, which provides access to 20 billion triples of biomedical data. It notes the challenges of hosting large life-science databases and of standards-based federated querying. The endpoint uses Apache load balancing across two nodes, each with 64 CPU cores and 256 GB of RAM, and achieves loading rates of 500,000 triples per second. Usage peaks at 35 million queries per month from an estimated 300 to 2,000 real users. Very large queries, such as counting all IRIs, can take hours. Template-based compilation and key-value approaches are proposed to optimize such challenging queries, and public monitoring is also discussed.

WorldCat, Works, and Schema.org

By Richard Wallis. Presentation to the LODLAM Training Day at the Semantic Tech and Business Conference in San Jose, California, August 2014.

Linked Data - Radical Change?

By Richard Wallis. The document discusses Linked Data and its potential impact on libraries. It describes Linked Data as connecting the world's libraries by publishing structured data about 290 million resources using common schemas, embedding RDFa, and linking to controlled vocabularies. While Linked Data presents challenges, such as describing different types of materials, it offers opportunities to describe resources as part of the web and to link catalogue data to related concepts through identifiers.

Drupal and the Semantic Web

By Kristof Van Tomme. Drupal 7 will use RDFa markup in core. In this session I will:

- explain the implications of this and why it matters

- give a short introduction to the Semantic Web, RDF, RDFa, and SPARQL in plain language

- give a short overview of the RDF modules available in contrib

- talk about some potential use cases for all these magical technologies

This is a talk from the Drupal track at FOSDEM 2010.

Hadoop World 2011: Radoop: a Graphical Analytics Tool for Big Data - Gabor Ma...

By Cloudera, Inc. Hadoop is an excellent environment for analyzing large data sets, but it lacks an easy-to-use graphical interface for building data pipelines and performing advanced analytics. RapidMiner is an excellent open-source tool for data analytics, but it is limited to running on a single machine. In this presentation, we will introduce Radoop, an extension to RapidMiner that lets users interact with a Hadoop cluster. Radoop combines the strengths of both projects and provides a user-friendly interface for editing and running ETL, analytics, and machine learning processes on Hadoop. We will also discuss lessons learned while integrating HDFS, Hive, and Mahout with RapidMiner.

Rapid Digitization of Latin American Ephemera with Hydra

By Jon Stroop. Princeton University Library began to collect and build an archive of Latin American ephemera and gray literature in the mid-1970s to document the activities of political and social organizations and movements, as well as the broader political, socioeconomic, and cultural developments of the region. Access to the material was provided by gradually accumulating and organizing thematic sub-collections, creating finding aids, and microfilming selected curated sub-collections. Reproductions of the microfilm were distributed commercially, and the resulting royalties funded new acquisitions. That model gradually became unsustainable during the past decade, and microfilming was halted in 2008.

Hydra breathes new life into this project by providing us with a framework for creating an end-to-end application that facilitates rapid digitization, cataloguing, and access to this important collection. Since the system went into production in April 2014, nearly 1,500 items have been catalogued, with throughput ultimately accelerating to over 300 items per month in August.

8th TUC Meeting - Zhe Wu (Oracle USA). Bridging RDF Graph and Property Graph...

By LDBC council. During the 8th TUC Meeting, held at Oracle's facilities in Redwood City, California, Zhe Wu, Software Architect at Oracle Spatial and Graph, explained how his team is trying to bridge the RDF graph and property graph data models.

Docker build, test and deploy SaaS applications

By william_greenly. Docker can be used to build, test, and deploy software applications. It creates software containers that package code and dependencies together. Docker is useful for testing applications in isolation, for continuous integration testing, and for distributing software. Key benefits include portability across stacks, reusable images, and treating infrastructure as code. Challenges include evolving standards and ensuring version compatibility across images and tools.

Semantic Web And Coldfusion

By william_greenly. The Semantic Web is the extension of the World Wide Web that enables people to share content beyond the boundaries of applications and websites.

It has been described in rather different ways: as a utopian vision, as a web of data, or merely as a natural paradigm shift in our daily use of the Web.

Most of all, the Semantic Web has inspired and engaged many people to create innovative and intelligent technologies and applications.

In this presentation, we describe the underlying principles and key features of the Semantic Web, and where and how they fit in with the server-side and client-side technologies supported by ColdFusion.

Web of things

By william_greenly. This document discusses the Web of Things, which aims to provide interoperability across IoT platforms through standards for describing things, discovery, and security and privacy. It outlines how the IoT builds on existing internet protocols and standards while introducing new protocols and data models for diverse connected devices. Key aspects of the Web of Things include bindings for application protocols, standardized thing descriptions represented using Semantic Web technologies such as JSON-LD, and efforts to develop common methods for service discovery across the many existing approaches.

More Related Content

Viewers also liked (6)

Data translation with SPARQL 1.1

By andreas_schultz. This document provides an outline for a WWW 2012 tutorial on schema mapping with SPARQL 1.1. The outline includes sections on why data integration is important, schema mapping, translating RDF data with SPARQL 1.1, and common mapping patterns. The mapping patterns discussed include simple renaming, structural patterns such as renaming based on property existence or value, value transformation using SPARQL functions, and aggregation. The tutorial aims to show how SPARQL 1.1 can express executable mappings between different data schemas and representations.
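
Two of the mapping patterns mentioned above, simple renaming and value transformation, can be sketched in plain Java to show what a CONSTRUCT-based mapping produces. The FOAF-to-schema.org property pairs and the `mailto:` stripping rule are assumptions chosen for illustration, not mappings taken from the tutorial, and `SchemaMapping` is a hypothetical class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

record Triple(String s, String p, String o) {}

// Hypothetical mapping; a SPARQL 1.1 CONSTRUCT query would express the same rules declaratively.
class SchemaMapping {
    // Simple renaming: source property -> target property.
    static final Map<String, String> RENAME = Map.of(
            "foaf:name", "schema:name",
            "foaf:homepage", "schema:url");

    static final String MAILTO = "mailto:";

    // Triples with unmapped properties are dropped, as they would be by a CONSTRUCT template.
    static List<Triple> translate(List<Triple> source) {
        List<Triple> out = new ArrayList<>();
        for (Triple t : source) {
            if (RENAME.containsKey(t.p())) {
                out.add(new Triple(t.s(), RENAME.get(t.p()), t.o()));
            } else if (t.p().equals("foaf:mbox")) {
                // Value transformation, loosely mirroring STRAFTER(STR(?mbox), "mailto:").
                String v = t.o().startsWith(MAILTO) ? t.o().substring(MAILTO.length()) : t.o();
                out.add(new Triple(t.s(), "schema:email", v));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Triple> src = List.of(
                new Triple("ex:p1", "foaf:name", "Ann"),
                new Triple("ex:p1", "foaf:mbox", "mailto:ann@example.org"));
        translate(src).forEach(System.out::println);
    }
}
```

Note that SPARQL's `STRAFTER` returns an empty string when the prefix is absent, whereas this sketch keeps the original value; which behavior is preferable depends on the target schema.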

Virtuoso RDF Triple Store Analysis Benchmark & mapping tools RDF / OO

By Paolo Cristofaro. This document discusses benchmarking Virtuoso, an open-source triplestore, using the Berlin SPARQL Benchmark (BSBM). It summarizes the results of loading and querying datasets of various sizes (10M, 100M, and 200M triples) on different systems; Virtuoso showed short loading times and high query throughput. The document also explains how to connect to and work with Virtuoso using RESTful services, the Jena API, and the Sesame framework.

Advanced Replication Internals

By Scott Hernandez. Internals of replication in MongoDB, covering replication selection, the replication process, elections (and their rules), and oplog transformation.

This presentation was given at the MongoDB San Francisco conference.

Similar to Grails And The Semantic Web (20)

RDF Graph Data Management in Oracle Database and NoSQL Platforms

By Graph-TA. This document discusses Oracle's support for graph data models across its database and NoSQL platforms. It provides an overview of Oracle's RDF graph and property graph support in Oracle Database 12c and Oracle NoSQL Database, and outlines Oracle's strategy to support graph data on all its enterprise platforms, including Oracle Database, Oracle NoSQL, Oracle Big Data, and Oracle Cloud.

ISWC GoodRelations Tutorial Part 2

By Martin Hepp. This is part 2 of the ISWC 2009 tutorial on the GoodRelations ontology and RDFa for e-commerce on the Web of Linked Data.

See also

http://www.ebusiness-unibw.org/wiki/Web_of_Data_for_E-Commerce_Tutorial_ISWC2009

GoodRelations Tutorial Part 2

By guestecacad2. This is part 2 of the ISWC 2009 tutorial on the GoodRelations ontology and RDFa for e-commerce on the Web of Linked Data.

See also

http://www.ebusiness-unibw.org/wiki/Web_of_Data_for_E-Commerce_Tutorial_ISWC2009

SPARQL 1.1 Update (2013-03-05)

By andyseaborne. SPARQL is a standard query language for RDF that has gone through two iterations (1.0 and 1.1) of the W3C process. SPARQL 1.1 adds update operations for RDF stores, subqueries, aggregation, property paths, negation, and remote (federated) querying. It is defined by separate specifications for query, update, protocol, the graph store protocol, and federated query. Apache Jena provides implementations of SPARQL 1.1 and tools such as Fuseki for deploying SPARQL servers.

8th TUC Meeting -

By LDBC council. Jerven Bolleman, Lead Software Developer at the Swiss-Prot Group, explained why they offer a free SPARQL and RDF endpoint for the world to use and why it is hard to optimize.

RDFa: introduction, comparison with microdata and microformats and how to use it

By Jose Luis Lopez Pino. Presentation for the course 'XML and Web Technologies' of the IT4BI Erasmus Mundus Master's Programme. Topics: introduction, motivation, target domain, schema, attributes, comparing RDFa with RDF, comparing RDFa with Microformats, comparing RDFa with Microdata, how to use RDFa to improve websites, how to extract metadata defined with RDFa, GRDDL, and a simple exercise.

Semantic Web use cases in outcomes research

Semantic Web use cases in outcomes researchChimezie Ogbuji Presentation given to University of Akron graduate CS class about work done with semantic web and outcomes research

Access Control for HTTP Operations on Linked Data

Access Control for HTTP Operations on Linked DataLuca Costabello Shi3ld is an access control module for enforcing authorization on triple stores. Shi3ld protects SPARQL queries and HTTP operations on Linked Data and relies on attribute-based access policies.

http://wimmics.inria.fr/projects/shi3ld-ldp/

Shi3ld comes in two flavours: Shi3ld-SPARQL, designed for SPARQL endpoints, and Shi3ld-HTTP, designed for HTTP operations on triples.

SHI3LD for HTTP offers authorization for read/write HTTP operations on Linked Data. It supports the SPARQL 1.1 Graph Store Protocol, and the Linked Data Platform specifications.

RDFauthor (EKAW)

RDFauthor (EKAW)Norman Heino My presentation on RDFauthor at EKAW2010, Lisbon. For more information on RDFauthor visit http://aksw.org/Projects/RDFauthor; for the code visit http://code.google.com/p/rdfauthor/.

HTAP Queries

HTAP QueriesAtif Shaikh The document discusses HTAP (Hybrid Transactional/Analytical Processing), data fabrics, and key PostgreSQL features that enable data fabrics. It describes HTAP as addressing resource contention by allowing mixed workloads on the same system and analytics on inflight transactional data. Data fabrics are defined as providing a logical unified data model, distributed cache, query federation, and semantic normalization across an enterprise data fabric cluster. Key PostgreSQL features that support data fabrics include its schema store, distributed cache, query federation, optimization, and normalization capabilities as well as foreign data wrappers.

Comparative Study That Aims Rdf Processing For The Java Platform

Comparative Study That Aims Rdf Processing For The Java PlatformComputer Science This document provides a comparative study of popular Java APIs for processing Resource Description Framework (RDF) data. It summarizes four main APIs: JRDF, Sesame, and Jena. For each API, it describes key features like storage methods, query support, documentation, and license. It finds that while each API has strengths, Sesame and Jena tend to have richer documentation and more developed feature sets than JRDF. The study aims to help Java developers choose the best RDF processing API for their needs.

Pig on Spark

Pig on Sparkmortardata Mayur Rustagi of Sigmoid Analytics describes recent progress from the Pig-on-Spark project at the NYC Pig User Group meetup.

Scaling Spark Workloads on YARN - Boulder/Denver July 2015

Scaling Spark Workloads on YARN - Boulder/Denver July 2015Mac Moore Hortonworks Presentation at The Boulder/Denver BigData Meetup on July 22nd, 2015. Topic: Scaling Spark Workloads on YARN. Spark as a workload in a multi-tenant Hadoop infrastructure, scaling, cloud deployment, tuning.

PHP, the GraphQL ecosystem and GraphQLite

PHP, the GraphQL ecosystem and GraphQLiteJEAN-GUILLAUME DUJARDIN Creating a GraphQL API is more and more common for PHP developers. The task can seem complex but there are a lot of tools to help.

In this talk given at AFUP Paris PHP Meetup, I'm presenting GraphQL and why it is important. Then, I'm having a look at the existing libraries in PHP. Finally, I'm diving in the details of GraphQLite; a library that creates a GraphQL schema by analyzing your PHP code.

Producing, publishing and consuming linked data - CSHALS 2013

Producing, publishing and consuming linked data - CSHALS 2013François Belleau This document discusses lessons learned from the Bio2RDF project for producing, publishing, and consuming linked data. It outlines three key lessons: 1) How to efficiently produce RDF using existing ETL tools like Talend to transform data formats into RDF triples; 2) How to publish linked data by designing URI patterns, offering SPARQL endpoints and associated tools, and registering data in public registries; 3) How to consume SPARQL endpoints by building semantic mashups using workflows to integrate data from multiple endpoints and then querying the mashup to answer questions.

Stardog 1.1: An Easier, Smarter, Faster RDF Database

Stardog 1.1: An Easier, Smarter, Faster RDF Databasekendallclark A talk from Semtech NYC 2012 about Stardog 1.1, the upcoming new release of Stardog, the RDF Database.

Stardog 1.1: Easier, Smarter, Faster RDF Database

Stardog 1.1: Easier, Smarter, Faster RDF DatabaseClark & Parsia LLC A talk from Semtech NYC 2012 about Stardog 1.1, the forthcoming release that adds SPARQL 1.1 and user-defined rules.

Rdf Processing Tools In Java

Rdf Processing Tools In JavaDicusarCorneliu This document compares several Java tools for processing Resource Description Framework (RDF) data: METAmorphoses, Jena, and JRDF. It evaluates each tool based on how it stores RDF triples, whether it supports the SPARQL query language, how well it is documented for developers, and its licensing. METAmorphoses transforms data between relational databases and RDF XML, Jena stores triples in memory and supports SPARQL with ARQ, and JRDF stores triples as Java objects and provides a GUI for SPARQL queries.

Not Your Father’s Data Warehouse: Breaking Tradition with Innovation

Not Your Father’s Data Warehouse: Breaking Tradition with InnovationInside Analysis The Briefing Room with Dr. Robin Bloor and Teradata

Live Webcast on May 20, 2014

Watch the archive: https://bloorgroup.webex.com/bloorgroup/lsr.php?RCID=f09e84f88e4ca6e0a9179c9a9e930b82

Traditional data warehouses have been the backbone of corporate decision making for over three decades. With the emergence of Big Data and popular technologies like open-source Apache™ Hadoop®, some analysts question the lifespan of the data warehouse and the future role it will play in enterprise information management. But it’s not practical to believe that emerging technologies provide a wholesale replacement of existing technologies and corporate investments in data management. Rather, a better approach is for new innovations and technologies to complement and build upon existing solutions.

Register for this episode of The Briefing Room to hear veteran Analyst Dr. Robin Bloor as he explains where tomorrow’s data warehouse fits in the information landscape. He’ll be briefed by Imad Birouty of Teradata, who will highlight the ways in which his company is evolving to meet the challenges presented by different types of data and applications. He will also tout Teradata’s recently-announced Teradata® Database 15 and Teradata® QueryGrid™, an analytics platform that enables data processing across the enterprise.

Visit InsideAnlaysis.com for more information.

Practical Cross-Dataset Queries with SPARQL (Introduction)

Practical Cross-Dataset Queries with SPARQL (Introduction)Richard Cyganiak This document provides an overview of using SPARQL as a query language for querying data across the web of data. It discusses how data from different sources like relational databases, Excel files, XML, JSON, microdata, etc. can be converted to RDF and queried using SPARQL. The tutorial will cover topics like federated querying across local and remote SPARQL endpoints, using SPARQL CONSTRUCT to map schemas, instance matching with Silk, and visualizing SPARQL results. Hands-on sessions will have participants install Jena tools and run queries on sample RDF data and endpoints.

RDFa: introduction, comparison with microdata and microformats and how to use it

RDFa: introduction, comparison with microdata and microformats and how to use itJose Luis Lopez Pino


Grails And The Semantic Web

- 1. Grails and the Semantic Web 19/09/2011

- 2. Common Misconceptions about the Semantic Web
  - It is bleeding edge and still experimental.
  - It has been around a while and isn’t working.
  - It has a high learning curve and adoption cost.
  - It is all about unstructured content, NLP and SEO.
  - Semantic Web data integration is all about query federation (Enterprise Information Integration, EII) and never about warehousing/ETL.

- 3. Use Cases
  - Query federation
  - Linking data
  - Inferring new data
  - Data management
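Linking data usually means asserting that identifiers from different datasets denote the same thing, typically with `owl:sameAs`. Because `owl:sameAs` is symmetric and transitive, the links partition URIs into identity sets. The sketch below is a minimal illustration in plain Java and does not come from the talk. It groups linked URIs with union-find so that each resource can be rewritten to one canonical URI. `SameAsIndex` and the example URIs are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: union-find over URIs, where each owl:sameAs link merges two identity sets.
final class SameAsIndex {
    private final Map<String, String> parent = new HashMap<>();

    private String find(String uri) {
        parent.putIfAbsent(uri, uri);
        String root = uri;
        while (!parent.get(root).equals(root)) root = parent.get(root);
        // Path compression: point every node on the path straight at the root.
        String cur = uri;
        while (!cur.equals(root)) {
            String next = parent.get(cur);
            parent.put(cur, root);
            cur = next;
        }
        return root;
    }

    // Records the statement "a owl:sameAs b".
    void sameAs(String a, String b) {
        String ra = find(a);
        String rb = find(b);
        if (ra.equals(rb)) return;
        // Keep the lexicographically smaller URI as the root so the canonical choice is deterministic.
        if (ra.compareTo(rb) < 0) parent.put(rb, ra);
        else parent.put(ra, rb);
    }

    // The canonical URI for the identity set that contains uri.
    String canonical(String uri) {
        return find(uri);
    }
}
```

Picking the smallest URI as the canonical one is an arbitrary assumption. A real pipeline might prefer the URI from its own dataset.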

- 4. Core Concepts
  - HTTP / URIs
  - RDF
  - RDFS, OWL, etc.
  - SPARQL
  - RDFa
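The basic Linked Data move behind "HTTP / URIs" is to dereference an HTTP URI and use content negotiation to ask for an RDF serialization. The sketch below uses the JDK's `java.net.http` API (Java 11+) and only builds the request. `LinkedDataRequests` is a hypothetical helper. The DBpedia URI is simply one of the resources used in the slide 5 example.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds (but does not send) a Linked Data GET request.
final class LinkedDataRequests {
    private LinkedDataRequests() {}

    // Ask for Turtle first and accept RDF/XML as a lower-preference fallback.
    static HttpRequest dereference(String resourceUri) {
        return HttpRequest.newBuilder(URI.create(resourceUri))
                .header("Accept", "text/turtle, application/rdf+xml;q=0.9")
                .GET()
                .build();
    }
}
```

To fetch the data, send the request with `HttpClient.newBuilder().followRedirects(HttpClient.Redirect.NORMAL).build().send(request, HttpResponse.BodyHandlers.ofString())`. Following redirects matters because many Linked Data servers answer a resource URI with a `303 See Other` that points at a separate data document.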

- 5. RDF
  - Triples or quads
  - Serializations: N3/Turtle, RDF/XML, RDFj (JSON)

```turtle
@prefix gr: <http://purl.org/goodrelations/v1#> .
@prefix foaf: <http://xmlns.com/foaf/0.1/> .
@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .
@prefix dc: <http://purl.org/dc/elements/1.1/> .
@prefix innovation: <http://purl.org/innovation/ns#> .
@prefix ex: <http://www.example.com/> .
@prefix dbpedia: <http://dbpedia.org/resource/> .
@prefix ncicb: <http://ncicb.nci.nih.gov/xml/owl/EVS/Thesaurus.owl#> .

ex:glucose-monitor dc:title "Non invasive testing of blood glucose levels" ;
    innovation:embodiedBy ex:lein-2000 ;
    innovation:hasImprovement <uuid:aaaaaaaa> .

ex:lein-2000 dc:title "The Lein 2000 blood glucose meter" ;
    rdf:type gr:ProductOrServiceModel ;
    rdf:type innovation:Embodiment ;
    rdf:type ncicb:Diagnostic_Therapeutic_and_Research_Equipment ;
    innovation:hasUsage <uuid:bbbbbbbb> .

<uuid:bbbbbbbb> innovation:usedBy dbpedia:Physician ;
    innovation:usedOn ex:Diabetics .

<uuid:aaaaaaaa> innovation:improvesEffectivenessOf dbpedia:Medical_Test .
```
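The sketch below makes the triple model concrete. It is a minimal in-memory example in plain Java (16+, for records) and uses neither Jena nor Sesame. It stores a few statements from the example above, with the prefixes expanded by hand, and answers a single triple pattern in which `null` acts as a wildcard. `TinyGraph` and `SlideExample` are hypothetical names. Every term is a plain string, so literals, datatypes and blank nodes are not modelled.

```java
import java.util.ArrayList;
import java.util.List;

// One RDF statement. Terms are plain strings (full IRIs or literal text) for brevity.
record Triple(String s, String p, String o) {}

// Hypothetical unindexed triple list; real stores index by subject, predicate and object.
final class TinyGraph {
    private final List<Triple> triples = new ArrayList<>();

    TinyGraph add(String s, String p, String o) {
        triples.add(new Triple(s, p, o));
        return this;
    }

    // Returns every triple matching the pattern; a null position is a wildcard.
    List<Triple> match(String s, String p, String o) {
        List<Triple> out = new ArrayList<>();
        for (Triple t : triples) {
            boolean hit = (s == null || s.equals(t.s()))
                    && (p == null || p.equals(t.p()))
                    && (o == null || o.equals(t.o()));
            if (hit) out.add(t);
        }
        return out;
    }
}

// A few statements from the Turtle example, with prefixes expanded by hand.
final class SlideExample {
    static final String EX = "http://www.example.com/";
    static final String RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type";
    static final String DC_TITLE = "http://purl.org/dc/elements/1.1/title";
    static final String INNOVATION = "http://purl.org/innovation/ns#";

    private SlideExample() {}

    static TinyGraph graph() {
        return new TinyGraph()
                .add(EX + "glucose-monitor", INNOVATION + "embodiedBy", EX + "lein-2000")
                .add(EX + "lein-2000", DC_TITLE, "The Lein 2000 blood glucose meter")
                .add(EX + "lein-2000", RDF_TYPE, "http://purl.org/goodrelations/v1#ProductOrServiceModel")
                .add(EX + "lein-2000", RDF_TYPE, INNOVATION + "Embodiment");
    }
}
```

Calling `SlideExample.graph().match(SlideExample.EX + "lein-2000", SlideExample.RDF_TYPE, null)` returns the two `rdf:type` statements. That is roughly what the SPARQL pattern `ex:lein-2000 rdf:type ?t` selects.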

- 6. SPARQL
  - SPARQL 1.1 Query
  - SPARQL 1.1 Update
  - SPARQL 1.1 Protocol for RDF
  - SPARQL 1.1 Graph Store HTTP Protocol
  - SPARQL 1.1 Entailment Regimes
  - SPARQL 1.1 Service Description
  - SPARQL 1.1 Federation Extensions
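The SPARQL 1.1 Protocol is ordinary HTTP. A query can be sent with GET and a URL-encoded `query` parameter. An update can be POSTed directly with the `application/sparql-update` media type. The sketch below uses the JDK HTTP client (Java 11+) to build both requests without sending them. `SparqlProtocol` is a hypothetical helper, and any endpoint URLs passed to it are placeholders.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: builds SPARQL 1.1 Protocol requests without sending them.
final class SparqlProtocol {
    private SparqlProtocol() {}

    // Query via GET: the query text goes in the URL-encoded "query" parameter.
    static HttpRequest query(String endpoint, String sparql) {
        // URLEncoder uses form encoding ('+' for space); '%20' is safe for any URI decoder.
        // A literal '+' in the query is already encoded as %2B, so this swap cannot clash.
        String encoded = URLEncoder.encode(sparql, StandardCharsets.UTF_8).replace("+", "%20");
        return HttpRequest.newBuilder(URI.create(endpoint + "?query=" + encoded))
                .header("Accept", "application/sparql-results+json")
                .GET()
                .build();
    }

    // Update via POST: the body is the raw update text.
    static HttpRequest update(String endpoint, String sparqlUpdate) {
        return HttpRequest.newBuilder(URI.create(endpoint))
                .header("Content-Type", "application/sparql-update")
                .POST(HttpRequest.BodyPublishers.ofString(sparqlUpdate, StandardCharsets.UTF_8))
                .build();
    }
}
```

A federated query that uses the `SERVICE` keyword is just another query string passed to `query(...)`. The receiving endpoint is the one that calls the remote services.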

- 7. Alignment with Grails
  - GORM: RDF, RDFS, SPARQL
  - GSPs: RDFa
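One reading of the GORM/RDF alignment is that a domain class becomes an RDFS class, an instance becomes a resource URI, and each persistent property becomes a predicate. The sketch below is a hypothetical plain-Java illustration that emits N-Triples for one object. The base URI, vocabulary namespace, URI pattern and the `Product` example are all assumptions. This is not how any particular plugin maps objects.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical mapping of a GORM-style domain object onto RDF, emitted as N-Triples lines.
final class DomainToRdf {
    private static final String RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type";

    private final String base;   // assumed base for instance URIs, e.g. "http://www.example.com/"
    private final String vocab;  // assumed namespace for classes and properties

    DomainToRdf(String base, String vocab) {
        this.base = base;
        this.vocab = vocab;
    }

    // className + id -> subject URI; each property -> one triple with a plain literal.
    List<String> toNTriples(String className, long id, Map<String, ?> props) {
        String subject = "<" + base + className.toLowerCase(Locale.ROOT) + "/" + id + ">";
        List<String> lines = new ArrayList<>();
        lines.add(subject + " <" + RDF_TYPE + "> <" + vocab + className + "> .");
        // Sort property names so the output order is deterministic.
        Map<String, Object> sorted = new TreeMap<>(props);
        for (Map.Entry<String, Object> e : sorted.entrySet()) {
            String literal = escape(String.valueOf(e.getValue()));
            lines.add(subject + " <" + vocab + e.getKey() + "> \"" + literal + "\" .");
        }
        return lines;
    }

    // N-Triples string escaping for characters that must not appear raw in a literal.
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"")
                .replace("\n", "\\n").replace("\r", "\\r");
    }
}
```

To keep the sketch short, it leaves out typed literals (for example `xsd:integer` for numeric properties). It also leaves out associations, which would become URI-valued objects pointing at other domain instances.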

- 8. Tools / APIs / Plugins
  - Jena/Sesame
  - Groovy SPARQL
  - RDFa Plugin
  - Triplestores

- 9. Jena / Sesame
  - RDF/RDFS/OWL libraries
  - Ontology + reasoning
  - SPARQL libraries
  - Development-level triplestores
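Both the reasoning support in Jena and Sesame and the "Inferring new data" use case rest on entailment rules. The sketch below illustrates what a reasoner does, but it is not how Jena or Sesame implement it. It is a naive forward-chaining loop (Java 16+) over two RDFS rules, applied until no new triple appears:

- rdfs11: `rdfs:subClassOf` is transitive.
- rdfs9: an instance of a subclass is an instance of its superclass.

Terms are prefixed names kept as plain strings, and the class hierarchy used to exercise it is hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

record Triple(String s, String p, String o) {}

// Naive forward chaining for two RDFS entailment rules, run to a fixpoint.
final class RdfsClosure {
    static final String TYPE = "rdf:type";
    static final String SUB_CLASS_OF = "rdfs:subClassOf";

    private RdfsClosure() {}

    static Set<Triple> infer(Set<Triple> input) {
        Set<Triple> all = new HashSet<>(input);
        boolean changed = true;
        while (changed) {
            Set<Triple> fresh = new HashSet<>();
            for (Triple a : all) {
                if (!a.p().equals(SUB_CLASS_OF)) continue;
                for (Triple b : all) {
                    // rdfs11: A subClassOf B, B subClassOf C => A subClassOf C
                    if (b.p().equals(SUB_CLASS_OF) && b.s().equals(a.o())) {
                        fresh.add(new Triple(a.s(), SUB_CLASS_OF, b.o()));
                    }
                    // rdfs9: x type A, A subClassOf B => x type B
                    if (b.p().equals(TYPE) && b.o().equals(a.s())) {
                        fresh.add(new Triple(b.s(), TYPE, a.o()));
                    }
                }
            }
            // addAll reports whether anything new was added; stop once nothing is.
            changed = all.addAll(fresh);
        }
        return all;
    }
}
```

Real reasoners cover the full RDFS rule set and OWL profiles, and they avoid this quadratic rescan of every triple on each pass.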

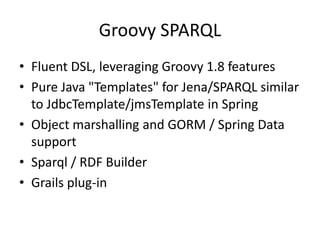

- 10. Groovy SPARQL
  - Fluent DSL, leveraging Groovy 1.8 features
  - Pure Java "templates" for Jena/SPARQL, similar to JdbcTemplate/JmsTemplate in Spring
  - Object marshalling and GORM / Spring Data support
  - SPARQL / RDF builder
  - Grails plugin
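The template idea borrowed from Spring's `JdbcTemplate` is that the template owns resource handling (open, execute, close) and the caller supplies only the query and a row mapper. The sketch below is a hypothetical Java illustration of that shape. `SparqlTemplate`, `QueryEngine` and `RowMapper` are made-up names and are not the Groovy SPARQL API. A result row is assumed to be a map from variable name to a string value.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical source of query sessions (would wrap a Jena model or a remote endpoint).
interface QueryEngine {
    Session open();

    interface Session extends AutoCloseable {
        // Each row maps a SPARQL variable name to its bound value.
        List<Map<String, String>> select(String sparql);

        @Override
        void close(); // narrowed: no checked exception
    }
}

@FunctionalInterface
interface RowMapper<T> {
    T map(Map<String, String> row);
}

// The template acquires and releases the session, so callers cannot leak it.
final class SparqlTemplate {
    private final QueryEngine engine;

    SparqlTemplate(QueryEngine engine) {
        this.engine = engine;
    }

    <T> List<T> query(String sparql, RowMapper<T> mapper) {
        try (QueryEngine.Session session = engine.open()) {
            List<T> out = new ArrayList<>();
            for (Map<String, String> row : session.select(sparql)) {
                out.add(mapper.map(row));
            }
            return out;
        }
    }
}
```

The point of this shape is that `SparqlTemplate.query` closes the session even when the mapper throws, because try-with-resources runs `close()` on every exit path.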

- 11. RDFa Plugin
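The slide does not show the RDFa plugin's tags, so instead of guessing at its API, the sketch below shows the kind of markup a GSP would need to emit. It is ordinary HTML carrying the RDFa 1.1 attributes `prefix`, `about`, `typeof` and `property`, with values HTML-escaped. `RdfaSnippet` is a hypothetical helper. `dc` is declared explicitly because the RDFa 1.1 initial context maps `dc` to DCMI Terms (`http://purl.org/dc/terms/`) rather than to the `elements/1.1/` namespace used on slide 5.

```java
// Hypothetical helper that renders one resource as an RDFa 1.1 snippet.
final class RdfaSnippet {
    private RdfaSnippet() {}

    static String render(String about, String type, String title) {
        return "<div prefix=\"dc: http://purl.org/dc/elements/1.1/ gr: http://purl.org/goodrelations/v1#\""
                + " about=\"" + esc(about) + "\" typeof=\"" + esc(type) + "\">"
                + "<span property=\"dc:title\">" + esc(title) + "</span>"
                + "</div>";
    }

    // Minimal HTML escaping for text content and double-quoted attribute values.
    private static String esc(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }
}
```

An RDFa parser reading this output should extract an `rdf:type gr:ProductOrServiceModel` triple and a `dc:title` triple for the `about` URI, with the escaped title text decoded back.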

- 12. Triple Stores
  - 4store
  - Virtuoso
    - Native JDBC driver
  - Stardog
    - DataSource and DataSourceFactoryBean for managing Stardog connections
    - SnarlTemplate for transaction-safe and connection-pool-safe Stardog programming
    - DataImporter for easy bootstrapping of input data into Stardog

- 13. Known Issues
  - Support for SPARQL 1.1
  - Reasoning
  - Compliance