Hadoop 2.4 installing on ubuntu 14.04


This document provides steps to install Hadoop 2.4 on Ubuntu 14.04. It covers installing Java, adding a dedicated Hadoop user, installing SSH, creating SSH certificates, installing Hadoop, configuring files, formatting HDFS, starting and stopping Hadoop, and using the Hadoop interfaces. The steps include modifying configuration files, creating directories for HDFS data, and running commands to format, start, and stop the single-node Hadoop cluster.
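The configuration step can be sketched as two small shell functions. This is a minimal sketch, not the deck's own commands: the function names, the storage directory, and the `hdfs://localhost:9000` address are assumptions chosen for illustration. The property names `fs.defaultFS`, `dfs.replication`, `dfs.namenode.name.dir`, and `dfs.datanode.data.dir` are standard Hadoop 2.x settings.

```shell
#!/usr/bin/env bash
# Minimal sketch: write core-site.xml and hdfs-site.xml for a single-node
# Hadoop 2.x cluster. Function names, paths, and the port are assumptions.

# write_core_site CONF_DIR: point the default filesystem at a local NameNode.
write_core_site() {
  local conf_dir="$1"
  mkdir -p "$conf_dir"
  # Port 9000 is an assumed value; use whatever your setup expects.
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
}

# write_hdfs_site CONF_DIR STORE_DIR: create the HDFS data directories and
# write a one-replica hdfs-site.xml that points at them.
write_hdfs_site() {
  local conf_dir="$1" store="$2"
  mkdir -p "$conf_dir" "$store/namenode" "$store/datanode"
  cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:$store/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:$store/datanode</value>
  </property>
</configuration>
EOF
}
```

For example, `write_core_site /usr/local/hadoop/etc/hadoop` followed by `write_hdfs_site /usr/local/hadoop/etc/hadoop /usr/local/hadoop_store/hdfs` (both paths assumed) overwrites the two files, after which `hdfs namenode -format` initializes HDFS once. Formatting erases any existing metadata in the NameNode directory, so it should not be repeated on a cluster that already holds data.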



STARTING HADOOP

➔ Now it's time to start the newly installed single-node cluster. We can use start-all.sh, or start-dfs.sh followed by start-yarn.sh.

hduser@k:/home/k$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

14/07/13 23:36:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [localhost]

…

localhost: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hduser-nodemanager-k.out

➔ Check that it's really up and running:

hduser@k:/home/k$ jps

➔ Another way to check is with netstat:

hduser@k:/home/k$ netstat -plten | grep java

![STARTING HADOOP](https://image.slidesharecdn.com/hadoop2-150329205723-conversion-gate01/85/Hadoop-2-4-installing-on-ubuntu-14-04-21-320.jpg)
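Because start-all.sh is deprecated in Hadoop 2.x, the replacement it names can be wrapped in two small functions. This is a minimal sketch, assuming Hadoop lives in /usr/local/hadoop (the install path in the log above) with its control scripts under sbin/; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: non-deprecated replacements for start-all.sh / stop-all.sh.
# HADOOP_HOME defaults to /usr/local/hadoop (the install path in the log);
# the function names are hypothetical.

# Start HDFS (NameNode, DataNode, SecondaryNameNode), then YARN
# (ResourceManager, NodeManager). YARN is not started if HDFS fails.
start_hadoop() {
  local home="${HADOOP_HOME:-/usr/local/hadoop}"
  "$home/sbin/start-dfs.sh" && "$home/sbin/start-yarn.sh"
}

# Stop YARN first, then HDFS. HDFS is stopped even if stopping YARN fails.
stop_hadoop() {
  local home="${HADOOP_HOME:-/usr/local/hadoop}"
  "$home/sbin/stop-yarn.sh"
  "$home/sbin/stop-dfs.sh"
}
```

Stopping YARN before HDFS is a choice made by this sketch (jobs stop before the filesystem they read from), not an ordering Hadoop itself requires.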

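The jps check can be made explicit by comparing its output with the daemons a single-node Hadoop 2.x cluster runs after start-dfs.sh and start-yarn.sh: NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. This is a minimal sketch; the function name is hypothetical, and it takes the jps output as an argument so it can be exercised without a running cluster.

```shell
#!/usr/bin/env bash
# check_daemons JPS_OUTPUT: print each expected daemon that is missing from
# the given `jps` output and return non-zero if any are missing.
check_daemons() {
  local jps_output="$1" daemon names missing=0
  # jps prints "<pid> <MainClassName>"; keep only the class names.
  names=$(awk '{print $2}' <<<"$jps_output")
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x matches whole lines only, so NameNode does not match SecondaryNameNode.
    if ! grep -qxF "$daemon" <<<"$names"; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

On the cluster host this would be called as `check_daemons "$(jps)" && echo "all daemons up"`.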
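The netstat check can likewise be narrowed to the TCP ports the Java daemons are listening on. This is a minimal sketch, assuming the usual `netstat -plten` column layout (local address in column 4, state in column 6, PID/program name last); the function name is hypothetical. In Hadoop 2.x the NameNode web UI listens on port 50070 and the ResourceManager web UI on 8088 by default, so both should appear once the cluster is up.

```shell
#!/usr/bin/env bash
# listening_java_ports: read `netstat -plten` output on stdin and print the
# sorted, de-duplicated TCP ports on which a java process is listening.
listening_java_ports() {
  # $4 = local address (e.g. 0.0.0.0:50070 or :::8088), $6 = state,
  # $NF = PID/program name (e.g. 4321/java). The port follows the last colon.
  awk '$6 == "LISTEN" && $NF ~ /\/java$/ { n = split($4, parts, ":"); print parts[n] }' |
    sort -n -u
}
```

Typical use is `netstat -plten 2>/dev/null | listening_java_ports`, run as hduser: without sudo, netstat fills in the PID/program column only for processes owned by the current user.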

Recommended

Hadoop installation

By habeebulla g. This document provides instructions for installing Hadoop on Ubuntu. It describes creating a separate user for Hadoop, setting up SSH keys for access, installing Java, downloading and extracting Hadoop, and configuring core Hadoop files such as core-site.xml and hdfs-site.xml, and it covers common errors that may occur during installation and configuration. Finally, it explains how to format the NameNode, start the Hadoop daemons, and check the Hadoop web interfaces.

Hadoop installation on windows

By habeebulla g. This document outlines the installation and configuration process for Hadoop on a Windows system using Ubuntu in a virtual machine. It provides detailed steps for creating a user, setting up SSH, installing Java, downloading Hadoop, configuring various XML files, and verifying the installation. It also describes how to access Hadoop services through specified ports in a web browser.

Hadoop single node setup

By Mohammad_Tariq. This document provides instructions for setting up Hadoop in single-node mode on Ubuntu. It describes adding a Hadoop user, installing Java and SSH, downloading and extracting Hadoop, configuring environment variables and Hadoop configuration files, and formatting the NameNode.

An example Hadoop Install

By Mike Frampton. This document provides a step-by-step guide for installing Apache Hadoop 1.2.0 on a three-machine cluster using Ubuntu 12.04 and Java 1.6. It includes instructions for setting up SSH, configuring Hadoop settings, formatting the Hadoop file system, and running a test MapReduce job to confirm that the cluster is operational. The document concludes by confirming a successful setup and offers consultancy services for IT projects.

Hadoop installation

By Ankit Desai. The document provides a comprehensive guide to installing single-node and multi-node Hadoop clusters on Ubuntu, covering every step from downloading Ubuntu and Java to configuring Hadoop settings. It includes commands for creating users, setting up SSH access, and starting the Hadoop services, along with troubleshooting for common issues. It also emphasizes configuring network settings and file permissions correctly.

Single node hadoop cluster installation

By Mahantesh Angadi. This document provides instructions for installing a single-node Hadoop cluster on Ubuntu. It covers downloading and configuring Java, installing Hadoop, configuring SSH access to localhost, editing Hadoop configuration files, and formatting the HDFS filesystem via the NameNode. Key steps include adding a dedicated Hadoop user, generating SSH keys, setting properties in core-site.xml, hdfs-site.xml and mapred-site.xml, and running 'hadoop namenode -format' to initialize the filesystem.

Hadoop 2.0 cluster setup on ubuntu 14.04 (64 bit)

By Nag Arvind Gudiseva. This document provides instructions for installing Hadoop on a single-node Ubuntu 14.04 system: setting up Java and SSH, creating Hadoop users and groups, downloading and configuring Hadoop, and formatting the HDFS filesystem. Key steps include installing Java and SSH, configuring SSH certificates for passwordless access, modifying configuration files such as core-site.xml and hdfs-site.xml to specify directories, and starting the Hadoop processes with start-all.sh.

Hadoop single cluster installation

By Minh Tran. The document details the steps for installing Hadoop on an Ubuntu server, including prerequisites such as installing the JDK and SSH, and installing Hadoop itself. It describes how to configure the environment, start a single-node cluster, run a MapReduce job on example eBooks, and check the status of the Hadoop processes. Configuration files and command examples are provided throughout the installation process.

Apache Hadoop & Hive installation with movie rating exercise

By Shiva Rama Krishna Dasharathi. This document provides instructions for installing Hadoop and Hive. It outlines prerequisites such as Java and the Hadoop and Hive tarballs. It describes setting environment variables, configuring Hadoop in pseudo-distributed mode, formatting HDFS, and starting the services. It then explains how to start the Hive metastore and use sample rating data in Hive queries to learn the basics.

Running hadoop on ubuntu linux

By TRCK. The document discusses Hadoop and HDFS. It gives an overview of the HDFS architecture and how it is designed to be highly fault-tolerant and to provide high-throughput access to large datasets. It also covers setting up single-node and multi-node Hadoop clusters on Ubuntu Linux, including configuring, formatting, starting, and stopping the clusters, and running MapReduce jobs.

Hadoop Installation

By mrinalsingh385. This document provides a comprehensive guide to installing Hadoop 2.2.0 on Ubuntu, covering Java installation, SSH configuration, user creation, Hadoop installation, and configuration of environment variables. It includes troubleshooting tips for common errors encountered during setup and concludes by verifying that the services started successfully. The guide is aimed at those setting up a Hadoop environment and points to related training resources for further learning.

Hadoop Installation and basic configuration

By Gerrit van Vuuren. This document provides an overview of the Hadoop HDFS/MapReduce architecture, hardware requirements, the installation and configuration process, monitoring options, and key components such as the NameNode. It discusses configuring the NameNode, JobTracker, DataNodes, and TaskTrackers, and specifies hardware requirements for the NameNode/JobTracker and DataNode/TaskTracker machines. Installation can be done from a downloaded tar file or a prebuilt RPM. Configuration involves editing XML configuration files and starting the required services. Monitoring can be done via web scraping, Ganglia, or Cacti. The NameNode writes edits to RAM and a write-ahead log, with checkpoints to the filesystem. High availability is experimental in YARN and Hadoop

Drupal from scratch

By Rovic Honrado. Drupal from Scratch is a comprehensive guide to installing Drupal on a Debian-based system from the command line. It outlines how to install Drupal Core, set up a MySQL database, configure a virtual host for local development, and complete the first Drupal site installation. Key steps include downloading and extracting Drupal Core, installing prerequisite software such as PHP and Apache, creating a database, enabling virtual hosts, and walking through the Drupal installation process.

Open Source Backup Conference 2014: Workshop bareos introduction, by Philipp ...

By NETWAYS. The document is a workshop guide for the Bareos backup software, covering installation, configuration, and usage. Topics include virtual machine setup, the communication architecture, and configuration file syntax, along with hands-on exercises for backup and restore operations. The guide includes examples of common tasks, commands, and configuration adjustments for optimizing backup processes.

HADOOP real-world configuration example, Multi-Node configuration

By Young Pyo. The document describes the steps to set up a Hadoop cluster with one master node and three slave nodes. It covers installing Java and Hadoop, configuring environment variables and Hadoop files, generating SSH keys, formatting the NameNode, starting services, and running a sample word count job. Additional sections cover adding and removing nodes and performing health checks on the cluster.

Set up Hadoop Cluster on Amazon EC2

By IMC Institute.

1. The document describes how to set up a Hadoop cluster on Amazon EC2, including creating a VPC, launching EC2 instances for a master node and slave nodes, and configuring the instances to install and run Hadoop services.

2. Key steps include creating a VPC, a security group, and EC2 instances for the master and slaves; installing Java and Hadoop on the master; cloning the master image for the slaves; and configuring files to define the master and slave nodes and start the Hadoop services.

3. The setup is tested by verifying that Hadoop processes are running on all nodes and by accessing the HDFS web UI.

Hadoop 20111215

By exsuns. The document describes various Hadoop components, including Hadoop Core, Pig, ZooKeeper, the JobTracker, TaskTracker, NameNode, and Secondary NameNode, as well as the design of HDFS. It also discusses how to deploy Hadoop in a distributed environment and configure core-site.xml, hdfs-site.xml, and mapred-site.xml.

Hadoop spark performance comparison

By arunkumar sadhasivam. This document explains how to run Spark programs and access HDFS from Spark using Java. It discusses running a word count example in local mode and in standalone Spark without Hadoop, and compares the performance of the same program in different environments: standalone Java, Hadoop, and Spark. It then shows how to access HDFS files from a Spark Java program using the Hadoop common jar.

Hadoop meet Rex(How to construct hadoop cluster with rex)

By Jun Hong Kim. This document discusses using Rex to install and configure a Hadoop cluster. It begins by introducing Rex and its capabilities. It then describes preparation steps such as installing Rex, generating SSH keys, and creating user accounts, and defines Rex tasks to install software such as Java, download the Hadoop source files, configure hosts files, and more. The goal is to automate the entire Hadoop setup process and eliminate manual configuration using Rex's simple yet powerful scripting.

Hadoop 20111117

By exsuns. Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. Its core consists of HDFS for distributed storage and MapReduce for distributed processing. Other Hadoop projects include Pig for data flows, ZooKeeper for coordination, and YARN for job scheduling. Key Hadoop daemons include the NameNode, Secondary NameNode, DataNodes, JobTracker, and TaskTrackers.

Out of the Box Replication in Postgres 9.4(pgconfsf)

By Denish Patel. Patel gave a presentation on PostgreSQL replication. He began by introducing himself and his background, then discussed PostgreSQL write-ahead logging (WAL), the history of replication, and how replication is currently set up. The presentation covered replication slots, a demo of replication without external tools using pg_basebackup, streaming replication with slots, and pg_receivexlog. Patel also discussed monitoring replication and answered questions from the audience.

Install hadoop in a cluster

By Xuhong Zhang. This document provides instructions for installing Hadoop on a cluster. It outlines prerequisites such as multiple Linux machines with Java installed and SSH configured. The steps include downloading and unpacking Hadoop, configuring environment variables and configuration files, formatting the NameNode, starting the HDFS and YARN processes, and running a sample MapReduce job to test the installation.

250hadoopinterviewquestions

By Ramana Swamy. This document provides 250 interview questions for experienced Hadoop developers, covering Hadoop cluster setup, HDFS, MapReduce, HBase, Hive, Pig, Sqoop, and more. It also links to additional interview questions on specific components such as HDFS and MapReduce. The questions range from basic to advanced.

Hadoop HDFS

By Madhur Nawandar. The document describes HDFS (Hadoop Distributed File System), including its design goal of storing large amounts of data reliably through horizontal scalability. It discusses the HDFS configuration files and the commands for interacting with HDFS through the hadoop fs command. It also summarizes the limitations of HDFS and gives examples of using HDFS programmatically in Java.

Packaging and backporting applications in Debian

By Andre Ferraz. This document discusses packaging and backporting applications in Debian. It covers the Debian package structure (the debian-binary, control.tar.gz, and data.tar.gz files) and mentions tools such as debootstrap and schroot that are used for building packages. Other topics include Perl, PHP, Ruby, and Python packaging. Backporting is covered as well, including checking for dependency and conflict issues when bringing packages from unstable to stable.

Hadoop installation and Running KMeans Clustering with MapReduce Program on H...

By Titus Damaiyanti.

1. The document discusses installing Hadoop in single-node cluster mode on Ubuntu, including installing Java, configuring SSH, and extracting and configuring the Hadoop files. Key configuration files such as core-site.xml and hdfs-site.xml are edited.

2. Formatting the HDFS NameNode clears all data. Hadoop is started with start-all.sh, and the jps command checks whether the daemons are running.

3. The document then moves on to running a KMeans clustering MapReduce program on the installed Hadoop framework.

Run wordcount job (hadoop)

By valeri kopaleishvili. The document provides step-by-step instructions for installing a single-node Hadoop cluster on Ubuntu Linux using VMware. It details downloading and configuring the required software, such as Java, SSH, and Hadoop. Configuration files are edited to set properties for core Hadoop functions and enable HDFS. Finally, sample data is copied to HDFS and a word count MapReduce job is run to test the installation.

Hadoop single node installation on ubuntu 14

By jijukjoseph. The document provides a comprehensive guide to installing Hadoop on a single node running Ubuntu 14.04, detailing prerequisites such as installing Java and SSH. It includes instructions for creating a Hadoop user, downloading and configuring Hadoop, setting environment variables, and formatting the NameNode. It also outlines how to start the Hadoop daemons and verify the installation with specific commands and web interfaces.

02 Hadoop deployment and configuration

By Subhas Kumar Ghosh. This document provides instructions for configuring a single-node Hadoop deployment on Ubuntu. It describes installing Java, adding a dedicated Hadoop user, configuring SSH for key-based authentication, disabling IPv6, installing Hadoop, updating environment variables, and editing Hadoop configuration files including core-site.xml, mapred-site.xml, and hdfs-site.xml. Key steps include setting JAVA_HOME, configuring HDFS directories and ports, and setting hadoop.tmp.dir to the local /app/hadoop/tmp directory.

R hive tutorial supplement 1 - Installing Hadoop

By Aiden Seonghak Hong. This document provides instructions for installing Hadoop on a small cluster of four virtual machines for testing purposes. It describes downloading and extracting Hadoop, configuring environment variables and SSH keys, editing configuration files, and checking the Hadoop status page to confirm that the installation succeeded.


Hadoop completereference

By arunkumar sadhasivam. This document provides instructions for configuring Java, Hadoop, and related components on a single Ubuntu system. It includes steps to install Java 7, add a dedicated Hadoop user, configure SSH access, disable IPv6, install Hadoop, and configure the core Hadoop files and directories. Prerequisites and the configuration of files such as yarn-site.xml, core-site.xml, mapred-site.xml, and hdfs-site.xml are described. The goal is to set up a single-node Hadoop cluster for testing and development.

Setting up a HADOOP 2.2 cluster on CentOS 6

By Manish Chopra. This document outlines the steps to set up a Hadoop 2.2 cluster on RHEL/CentOS 6 using VMware, with one master node and two slave nodes of specified configurations. It covers installing Java and Hadoop, configuring user authentication, setting up the necessary folders and the XML configuration for HDFS and YARN, and starting the services. Finally, it gives the URLs for accessing the NameNode and ResourceManager once the services are running.

Setup and run hadoop distribution file system example 2.2

By Mounir Benhalla. The document provides instructions for setting up Hadoop 2.2.0 on Ubuntu. It describes installing Java and OpenSSH, creating a Hadoop user and group, setting up SSH keys for passwordless login, configuring Hadoop environment variables, formatting the NameNode, starting the Hadoop services, and running a sample Pi estimation MapReduce job to test the installation.

Configure h base hadoop and hbase client

By Shashwat Shriparv. This document provides instructions for configuring Hadoop, HBase, and an HBase client on a single-node system. It covers installing Java, adding a dedicated Hadoop user, configuring SSH, disabling IPv6, installing and configuring Hadoop, formatting HDFS, starting the Hadoop processes, running example MapReduce jobs to test the installation, and configuring HBase.

Big data with hadoop Setup on Ubuntu 12.04

By Mandakini Kumari. This document provides an overview of Hadoop and how to set it up. It first defines big data and describes Hadoop's advantages over traditional systems, such as its ability to handle large datasets on commodity hardware. It then outlines Hadoop's components, such as HDFS and MapReduce, and concludes by detailing the installation steps, including setting up Linux prerequisites, configuring files, and starting the processes.

Exp-3.pptx

Exp-3.pptxPraveenKumar581409 1) The document describes the steps to install a single node Hadoop cluster on a laptop or desktop.

2) It involves downloading and extracting required software like Hadoop, JDK, and configuring environment variables.

3) Key configuration files like core-site.xml, hdfs-site.xml and mapred-site.xml are edited to configure the HDFS, namenode and jobtracker.

4) The namenode is formatted and Hadoop daemons like datanode, secondary namenode and jobtracker are started.

Configuring Your First Hadoop Cluster On EC2

Configuring Your First Hadoop Cluster On EC2benjaminwootton This document provides instructions for setting up a small 3 node Hadoop cluster on Amazon EC2. It covers configuring EC2 instances, installing Java and Hadoop, configuring the Hadoop nodes and services, and running a sample MapReduce job to validate the cluster. The goal is to provide a simple tutorial for getting started with Hadoop on EC2 for learning purposes.

July 2010 Triangle Hadoop Users Group - Chad Vawter Slides

July 2010 Triangle Hadoop Users Group - Chad Vawter Slidesryancox This document provides an overview of setting up a Hadoop cluster, including installing the Apache Hadoop distribution, configuring SSH keys for passwordless login between nodes, configuring environment variables and Hadoop configuration files, and starting and stopping the HDFS and MapReduce services. It also briefly discusses alternative Hadoop distributions from Cloudera and Yahoo, as well as using cloud platforms like Amazon EC2 for Hadoop clusters.

Deploy hadoop cluster

Deploy hadoop clusterChirag Ahuja This document provides instructions for setting up a 3-node Hadoop cluster with 1 master node and 2 slave nodes. It describes installing Java, configuring SSH access, downloading and installing Hadoop, editing configuration files, starting HDFS and MapReduce services on the master, and verifying that all Hadoop daemon processes are running as expected on each node.

Single node setup

Single node setupKBCHOW123 This document describes how to set up Hadoop in three modes - standalone, pseudo-distributed, and fully-distributed - on a single node. Standalone mode runs Hadoop as a single process, pseudo-distributed runs daemons as separate processes, and fully-distributed requires a multi-node cluster. It provides instructions on installing Java and SSH, downloading Hadoop, configuring files for the different modes, starting and stopping processes, and running example jobs.

Hadoop installation steps

Hadoop installation stepsMayank Sharma This document provides instructions to install Hadoop version 2.6.0 on an Ubuntu 12.04 LTS machine. It involves updating the system, installing Java, creating a Hadoop user, installing SSH, downloading and extracting Hadoop, configuring files and directories, and starting the Hadoop services. The installation is verified by checking running processes and accessing the NameNode and Secondary NameNode web interfaces. The document concludes by providing commands to stop the Hadoop cluster services.

Apache Hadoop Shell Rewrite

Apache Hadoop Shell RewriteAllen Wittenauer The document outlines the overview of a shell script rewrite for Hadoop, emphasizing goals like consistency and simplification. It details changes to the structure and functionality of various scripts, aiming for backward compatibility while introducing new features. The document also discusses logging, daemon management, and security enhancements for running Hadoop daemons securely.

Hadoop operations basic

Hadoop operations basicHafizur Rahman This document provides an overview of Hadoop, including its architecture, installation, configuration, and commands. It describes the challenges of large-scale data that Hadoop addresses through distributed processing and storage across clusters. The key components of Hadoop are HDFS for storage and MapReduce for distributed processing. HDFS stores data across clusters and provides fault tolerance through replication, while MapReduce allows parallel processing of large datasets through a map and reduce programming model. The document also outlines how to install and configure Hadoop in pseudo-distributed and fully distributed modes.

Hadoop installation with an example

Hadoop installation with an exampleNikita Kesharwani This document provides an overview of Apache Hadoop, an open-source framework for distributed storage and processing of large datasets across clusters of computers. It discusses what Hadoop is, why it is useful for big data problems, examples of companies using Hadoop, the core Hadoop components like HDFS and MapReduce, and how to install and run Hadoop in pseudo-distributed mode on a single node. It also includes an example of running a word count MapReduce job to count word frequencies in input files.

Configuring and manipulating HDFS files

Configuring and manipulating HDFS filesRupak Roy The document describes different installation types of Hadoop, including standalone mode, pseudo-distributed mode, and fully distributed mode, along with the installation requirements for Java and Hadoop. It outlines configuration steps for each mode, focusing on settings within various configuration files such as hadoop-env.sh, hdfs-site.xml, and mapred-site.xml. Additionally, it covers commands for managing files in HDFS, including loading, retrieving, and deleting files, plus enabling the trash feature for recoverable deletions.



CapCut Pro Crack For PC Latest Version {Fully Unlocked} 2025

CapCut Pro Crack For PC Latest Version {Fully Unlocked} 2025pcprocore 👉𝗡𝗼𝘁𝗲:𝗖𝗼𝗽𝘆 𝗹𝗶𝗻𝗸 & 𝗽𝗮𝘀𝘁𝗲 𝗶𝗻𝘁𝗼 𝗚𝗼𝗼𝗴𝗹𝗲 𝗻𝗲𝘄 𝘁𝗮𝗯> https://pcprocore.com/ 👈◀

CapCut Pro Crack is a powerful tool that has taken the digital world by storm, offering users a fully unlocked experience that unleashes their creativity. With its user-friendly interface and advanced features, it’s no wonder why aspiring videographers are turning to this software for their projects.

Connecting Data and Intelligence: The Role of FME in Machine Learning

Connecting Data and Intelligence: The Role of FME in Machine LearningSafe Software In this presentation, we want to explore powerful data integration and preparation for Machine Learning. FME is known for its ability to manipulate and transform geospatial data, connecting diverse data sources into efficient and automated workflows. By integrating FME with Machine Learning techniques, it is possible to transform raw data into valuable insights faster and more accurately, enabling intelligent analysis and data-driven decision making.

cnc-processing-centers-centateq-p-110-en.pdf

cnc-processing-centers-centateq-p-110-en.pdfAmirStern2 מרכז עיבודים תעשייתי בעל 3/4/5 צירים, עד 22 החלפות כלים עם כל אפשרויות העיבוד הדרושות. בעל שטח עבודה גדול ומחשב נוח וקל להפעלה בשפה העברית/רוסית/אנגלית/ספרדית/ערבית ועוד..

מסוגל לבצע פעולות עיבוד שונות המתאימות לענפים שונים: קידוח אנכי, אופקי, ניסור, וכרסום אנכי.

Quantum AI: Where Impossible Becomes Probable

Quantum AI: Where Impossible Becomes ProbableSaikat Basu Imagine combining the "brains" of Artificial Intelligence (AI) with the "super muscles" of Quantum Computing. That's Quantum AI!

It's a new field that uses the mind-bending rules of quantum physics to make AI even more powerful.

Hadoop 2.4 installing on ubuntu 14.04

- 2. HADOOP 2.4 INSTALLATION ON UBUNTU 14.04: facebook.com/akshath.kumar180, Twitter.com/akshath4u, in.linkedin.com/in/akshathkumar

- 3. HADOOP ON 64 BIT UBUNTU 14.04: Hadoop is a free, Java-based programming framework that supports the processing of large data sets in a distributed computing environment. It is part of the Apache project sponsored by the Apache Software Foundation. In this presentation, we'll install a single-node Hadoop cluster backed by the Hadoop Distributed File System (HDFS) on Ubuntu.

- 4. STEPS FOR INSTALLING HADOOP: ➢ INSTALLING JAVA ➢ ADDING A DEDICATED HADOOP USER ➢ INSTALLING SSH ➢ CREATE AND SETUP SSH CERTIFICATES ➢ INSTALL HADOOP ➢ SETUP CONFIGURATION FILES ➢ FORMAT THE NEW HADOOP FILESYSTEM ➢ STARTING HADOOP ➢ STOPPING HADOOP ➢ HADOOP WEB INTERFACES ➢ USING HADOOP

- 5. INSTALLING JAVA: ❖ The Hadoop framework is written in Java! ➢ Open a terminal in Ubuntu 14.04 and follow the steps.

- 6. ADDING A DEDICATED HADOOP USER

- 7. INSTALLING SSH: ➔ SSH has two main components: ◆ ssh: the command we use to connect to remote machines (the client). ◆ sshd: the daemon that runs on the server and allows clients to connect to it. ➔ The ssh client is usually pre-installed on Ubuntu, but to run the sshd daemon we need to install the ssh package first. Use this command to do that: `$ sudo apt-get install ssh`

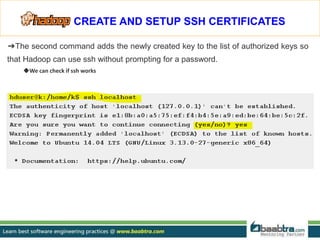

- 8. CREATE AND SETUP SSH CERTIFICATES: ➔ Hadoop requires SSH access to manage its nodes, so we need to configure SSH access to localhost. That means having SSH up and running on our machine and configuring it to allow SSH public key authentication.

- 9. CREATE AND SETUP SSH CERTIFICATES: ➔ The second command adds the newly created key to the list of authorized keys so that Hadoop can use ssh without prompting for a password. ◆ We can then check whether ssh works.
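The two commands these slides refer to are not listed. A common way to do this, and a sketch rather than the author's exact commands, is to generate an RSA key with an empty passphrase (first command) and append its public half to `authorized_keys` (second command). Run it as hduser, for example after `su hduser`; the guards only make it safe to run twice:

```shell
#!/usr/bin/env bash
# Sketch: passwordless SSH to localhost for the current user (run as hduser)
set -euo pipefail

mkdir -p "$HOME/.ssh"
chmod 700 "$HOME/.ssh"

# 1st command: create an RSA key pair with an empty passphrase (-N "")
[ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"

# 2nd command: authorize that key for logins to this same account (append once)
pubkey="$(cat "$HOME/.ssh/id_rsa.pub")"
grep -qxF "$pubkey" "$HOME/.ssh/authorized_keys" 2>/dev/null \
  || echo "$pubkey" >> "$HOME/.ssh/authorized_keys"
chmod 600 "$HOME/.ssh/authorized_keys"
```

Afterwards, `ssh localhost` should log in without asking for a password; the very first connection still asks you to confirm the host key.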

- 10. INSTALL HADOOP: ➔ Type the following commands in the Ubuntu terminal to download and unpack Hadoop 2.4.1, move the installation to the /usr/local/hadoop directory, and make hduser its owner: `$ wget http://mirrors.sonic.net/apache/hadoop/common/hadoop-2.4.1/hadoop-2.4.1.tar.gz` `$ tar xvzf hadoop-2.4.1.tar.gz` `$ sudo mv hadoop-2.4.1 /usr/local/hadoop` `$ sudo chown -R hduser:hadoop /usr/local/hadoop` `$ cd /usr/local/hadoop` `$ pwd` /usr/local/hadoop `$ ls` bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share ➔ If the mirror no longer carries 2.4.1, older releases remain available from the Apache archive at archive.apache.org/dist/hadoop/common/.

- 11. SETUP CONFIGURATION FILES: ➔ The following files have to be modified to complete the Hadoop setup: 1. ~/.bashrc 2. /usr/local/hadoop/etc/hadoop/hadoop-env.sh 3. /usr/local/hadoop/etc/hadoop/core-site.xml 4. /usr/local/hadoop/etc/hadoop/mapred-site.xml.template 5. /usr/local/hadoop/etc/hadoop/hdfs-site.xml ➔ First of all, we need to find the path where Java has been installed in order to set the JAVA_HOME environment variable: `$ update-alternatives --config java` There is only one alternative in link group java (providing /usr/bin/java): /usr/lib/jvm/java-7-openjdk-amd64/jre/bin/java Nothing to configure. ➔ JAVA_HOME is the part of that path before /jre/bin/java, i.e. /usr/lib/jvm/java-7-openjdk-amd64.

- 12. SETUP CONFIGURATION FILES: 1. ~/.bashrc ➔ We can append the following to the end of ~/.bashrc:
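The variable list is not shown here. A sketch, assuming the set commonly used for Hadoop 2.x single-node setups; `HADOOP_INSTALL` is only a helper name (an assumption), and what the later slides rely on is that Hadoop's bin and sbin folders are on the PATH, so that `hadoop namenode -format` and `start-all.sh` can be typed without a path. Run it as hduser:

```shell
#!/usr/bin/env bash
# Sketch: append Hadoop environment variables to ~/.bashrc (run as hduser)
set -euo pipefail

BASHRC="$HOME/.bashrc"

# Append the block only once; the marker comment makes re-runs a no-op
if ! grep -qF '# HADOOP VARIABLES START' "$BASHRC" 2>/dev/null; then
  # Quoted 'EOF': $PATH etc. are written literally and expanded when .bashrc loads
  cat >> "$BASHRC" <<'EOF'
# HADOOP VARIABLES START
export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64
export HADOOP_INSTALL=/usr/local/hadoop
export PATH=$PATH:$HADOOP_INSTALL/bin:$HADOOP_INSTALL/sbin
export HADOOP_MAPRED_HOME=$HADOOP_INSTALL
export HADOOP_COMMON_HOME=$HADOOP_INSTALL
export HADOOP_HDFS_HOME=$HADOOP_INSTALL
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native
# HADOOP VARIABLES END
EOF
fi
```

Then run `source ~/.bashrc` (or open a new terminal); `hadoop version` should now print the 2.4.1 version banner.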

- 13. SETUP CONFIGURATION FILES: 2. /usr/local/hadoop/etc/hadoop/hadoop-env.sh ★ We need to set JAVA_HOME by modifying the hadoop-env.sh file: `export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64` ★ Adding the above statement to hadoop-env.sh ensures that the value of the JAVA_HOME variable is available to Hadoop whenever it starts up.

- 14. SETUP CONFIGURATION FILES: 3. /usr/local/hadoop/etc/hadoop/core-site.xml ★ The /usr/local/hadoop/etc/hadoop/core-site.xml file contains configuration properties that Hadoop uses when starting up. This file can be used to override the default settings that Hadoop starts with. Before editing it, we create /app/hadoop/tmp and make hduser its owner: `$ sudo mkdir -p /app/hadoop/tmp` `$ sudo chown hduser:hadoop /app/hadoop/tmp`

- 15. SETUP CONFIGURATION FILES: Open the file and enter the following between the `<configuration>` and `</configuration>` tags:
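The property list is not shown here. A sketch, assuming two settings: `hadoop.tmp.dir` pointing at the /app/hadoop/tmp directory created on slide 14, and `fs.defaultFS` (the Hadoop 2.x name of the older `fs.default.name`) with `hdfs://localhost:9000` as an assumed NameNode address. It writes the whole file, which assumes the stock core-site.xml still holds only an empty `<configuration>` element:

```shell
#!/usr/bin/env bash
# Sketch: write core-site.xml (run as hduser, who owns /usr/local/hadoop)
set -euo pipefail

# HADOOP_CONF_DIR defaults to the configuration folder used throughout the deck
HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-/usr/local/hadoop/etc/hadoop}"
mkdir -p "$HADOOP_CONF_DIR"   # already exists on a real install; harmless

cat > "$HADOOP_CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <!-- assumed host and port for the NameNode -->
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
```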

- 16. SETUP CONFIGURATION FILES: 4. /usr/local/hadoop/etc/hadoop/mapred-site.xml ➔ By default, the /usr/local/hadoop/etc/hadoop/ folder contains a mapred-site.xml.template file, which has to be copied (or renamed) to mapred-site.xml: `$ cp /usr/local/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/etc/hadoop/mapred-site.xml`

- 17. SETUP CONFIGURATION FILES: ➔ The mapred-site.xml file is used to specify which framework is used for MapReduce. We need to enter the following content between the `<configuration>` and `</configuration>` tags:
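The XML for this slide is not shown here. In Hadoop 2.x the setting that selects the MapReduce framework is `mapreduce.framework.name` (values `local`, `classic` or `yarn`), so a sketch assuming YARN, to match the YARN daemons started on slide 21, could look like this. It overwrites the copy made from the template on slide 16:

```shell
#!/usr/bin/env bash
# Sketch: write mapred-site.xml so MapReduce jobs run on YARN (run as hduser)
set -euo pipefail

HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-/usr/local/hadoop/etc/hadoop}"
mkdir -p "$HADOOP_CONF_DIR"   # already exists on a real install; harmless

cat > "$HADOOP_CONF_DIR/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
```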

- 18. SETUP CONFIGURATION FILES: 5. /usr/local/hadoop/etc/hadoop/hdfs-site.xml ➔ Before editing this file, we need to create two directories that will hold the NameNode and DataNode data for this Hadoop installation. This can be done using the following commands:

- 19. SETUP CONFIGURATION FILES: ➔ Open the file and make its `<configuration>` element read as follows: `<configuration> <property> <name>dfs.replication</name> <value>1</value> <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time. </description> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/hadoop_store/hdfs/namenode</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/hadoop_store/hdfs/datanode</value> </property> </configuration>`

- 20. FORMAT THE NEW HADOOP FILESYSTEM: ➔ Now the Hadoop filesystem needs to be formatted before we can start using it. The format command must be run by a user with write permission on /usr/local/hadoop_store/hdfs/namenode, because it creates a `current` directory inside that folder. `hduser@k:~$ hadoop namenode -format` `DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it.` … `14/07/13 22:13:10 INFO namenode.NameNode: SHUTDOWN_MSG:` `/************************************************************ SHUTDOWN_MSG: Shutting down NameNode at k/127.0.1.1 ************************************************************/` ➔ The hadoop namenode -format command should be executed only once, before we start using Hadoop. If it is executed again after Hadoop has been used, it will destroy all the data on the Hadoop file system.

- 21. STARTING HADOOP: ➔ Now it's time to start the newly installed single-node cluster. We can use start-all.sh, or start-dfs.sh followed by start-yarn.sh: `hduser@k:/home/k$ start-all.sh` `This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh` `14/07/13 23:36:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable` `Starting namenodes on [localhost]` … `localhost: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hduser-nodemanager-k.out` ➔ Check that it is really up and running: `hduser@k:/home/k$ jps` ➔ Another way to check is with netstat: `hduser@k:/home/k$ netstat -plten | grep java`

- 22. STOPPING HADOOP: ➔ Type the following commands in the terminal to stop Hadoop. The stop scripts are in /usr/local/hadoop/sbin: `$ pwd` /usr/local/hadoop/sbin `$ ls` distribute-exclude.sh httpfs.sh start-all.sh … start-secure-dns.sh stop-balancer.sh stop-yarn.sh ➔ We run stop-all.sh, or stop-dfs.sh and stop-yarn.sh, to stop all the daemons running on our machine: `$ /usr/local/hadoop/sbin/stop-all.sh`

- 23. Thank You...
