Hadoop: A distributed framework for Big Data

Hadoop is a Java software framework that supports data-intensive distributed applications and is developed under an open-source license. It enables applications to work with thousands of nodes and petabytes of data.

Recommended

Hadoop

Bhushan Kulkarni: This document provides an overview of Hadoop, an open-source framework for distributed storage and processing of large datasets across clusters of computers. It discusses how Hadoop was developed based on Google's MapReduce algorithm and how it uses HDFS for scalable storage and MapReduce as an execution engine. Key components of Hadoop architecture include HDFS for fault-tolerant storage across data nodes and the MapReduce programming model for parallel processing of data blocks. The document also gives examples of how MapReduce works and industries that use Hadoop for big data applications.

Hadoop

Shamama Kamal: Hadoop is an open-source software framework that allows for the distributed processing of large data sets across clusters of computers. It was created in 2005 by Doug Cutting and Mike Cafarella and developed further at Yahoo!, with Cutting naming it after his son's toy elephant. Hadoop features include reliable data storage with the Hadoop Distributed File System (HDFS) and the MapReduce programming model for large-scale data processing using a distributed algorithm on a computing cluster.

Big data

Alisha Roy: This presentation helps students learn about big data, Hadoop, HDFS, MapReduce, architecture of HDFS,

HADOOP

Harinder Kaur: Hadoop is an open-source framework that allows for the distributed processing of large data sets across clusters of computers. It addresses problems like massive data storage needs and scalable processing of large datasets. Hadoop uses the Hadoop Distributed File System (HDFS) for storage and MapReduce as its processing engine. HDFS stores data reliably across commodity hardware and MapReduce provides a programming model for distributed computing of large datasets.

Anju

Anju Shekhawat: The document provides an overview of Apache Hadoop and how it addresses challenges related to big data. It discusses how Hadoop uses HDFS to distribute and store large datasets across clusters of commodity servers and uses MapReduce as a programming model to process and analyze the data in parallel. The core components of Hadoop, HDFS for storage and MapReduce for processing, allow it to efficiently handle large volumes and varieties of data across distributed systems in a fault-tolerant manner. Major companies have adopted Hadoop to derive insights from their big data.

Big Data and Hadoop - An Introduction

Nagarjuna Kanamarlapudi: This document provides an overview of Hadoop, a tool for processing large datasets across clusters of computers. It discusses why data volumes have grown so large, including exponential growth in data from the internet and machines. It describes how Hadoop uses HDFS for reliable storage across nodes and MapReduce for parallel processing. The document traces the history of Hadoop from its origins in Google's file system (GFS) and MapReduce framework. It provides brief explanations of how HDFS and MapReduce work at a high level.

Hadoop Architecture

Dr. C.V. Suresh Babu: This presentation discusses the following topics:

Introduction

Components of Hadoop

MapReduce

Map Task

Reduce Task

Anatomy of a Map Reduce

Apache hadoop technology : Beginners

Shweta Patnaik: This presentation is about Apache Hadoop technology. It may help beginners learn some Hadoop terminology.

Apache Hadoop

Ajit Koti: The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using a simple programming model. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly available service on top of a cluster of computers, each of which may be prone to failures.

Hadoop Technology

Ece Seçil AKBAŞ: This document provides an overview of Hadoop and big data concepts. It discusses key characteristics of big data including volume, velocity, variety, and value. It then describes the Hadoop Distributed File System (HDFS) and the MapReduce framework, the core components of Hadoop that allow distributed storage and processing of large datasets across clusters of commodity hardware. Finally, it outlines several common use cases where Hadoop can be applied, such as customer churn analysis, recommendation engines, and network data analysis.

Hadoop Technology

Atul Kushwaha: This document provides an overview of Big Data and Hadoop. It defines Big Data as volumes of structured, semi-structured, and unstructured data too large to process using traditional databases and software. It provides examples of the large amounts of data generated daily by organizations. Hadoop is presented as a framework for distributed storage and processing of large datasets across clusters of commodity hardware. Key components of Hadoop, including HDFS for distributed storage and fault tolerance and MapReduce for distributed processing, are described at a high level. Common use cases for Hadoop by large companies are also mentioned.

Hadoop

Mallikarjuna G D: Hadoop, overview, components, advantages and disadvantages, tools, architecture, jobs in Hadoop, MapReduce, installation, cluster setup, deployment models, functionalities of Hadoop systems, HDFS

Hadoop trainting-in-hyderabad@kelly technologies

Kelly Technologies: Hadoop institutes: Kelly Technologies describes itself as the best Hadoop training institute in Hyderabad, providing Hadoop training by real-time faculty.

http://www.kellytechno.com/Hyderabad/Course/Hadoop-Training

Analytics 3

Srikanth Ayithy: All about data and analytics: an introduction to the field of analytics. What is data analytics?

Map reduce and hadoop at mylife

responseteam: A brief talk introducing and explaining MapReduce and Hadoop, along with describing part of how we use Hadoop MapReduce at MyLife.com.

Hadoop And Their Ecosystem

sunera pathan: The document provides an overview of Hadoop and its ecosystem. It discusses the history and architecture of Hadoop, describing how it uses distributed storage and processing to handle large datasets across clusters of commodity hardware. The key components of Hadoop include HDFS for storage, MapReduce for processing, and additional tools like Hive, Pig, HBase, Zookeeper, Flume, Sqoop and Oozie that make up its ecosystem. Advantages are its ability to handle unlimited data storage and high speed processing, while disadvantages include lower speeds for small datasets and limitations on data storage size.

HADOOP TECHNOLOGY ppt

sravya raju: The best-known technology used for Big Data is Hadoop. It is a large-scale batch data processing system.

Hadoop data analysis

Vakul Vankadaru: Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of computers. It has two main components:

1) The Hadoop Distributed File System (HDFS), which stores data reliably across commodity hardware. It divides files into blocks and replicates them for fault tolerance.

2) MapReduce, which processes data in parallel. It handles scheduling, input partitioning, and failover. Users write mapping and reducing functions. Mappers process key-value pairs and output new pairs shuffled to reducers, which combine values for each key.
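The map, shuffle, and reduce steps described in this summary can be sketched in plain Python. This is a single-process illustration, not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and a real job would distribute these steps across cluster nodes.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group emitted values by key, as the framework would do."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: combine all values collected for one key."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data big clusters", "data nodes"]))
```

The user-written parts are only the mapper and the reducer; in this sketch the `shuffle` function stands in for the grouping that the framework performs between the two.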

Hadoop-Quick introduction

Sandeep Singh:

- Data is a precious resource that can last longer than the systems themselves (Tim Berners-Lee)

- Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It provides reliability, scalability and flexibility.

- Hadoop consists of HDFS for storage and MapReduce for processing. The main nodes include NameNode, DataNodes, JobTracker and TaskTrackers. Tools like Hive, Pig, HBase extend its capabilities for SQL-like queries, data flows and NoSQL access.

Hadoop

Oded Rotter: Hadoop is an open-source software framework that allows for the distributed processing of large data sets across clusters of computers. It reliably stores and processes large amounts of information across many commodity computers. Key components of Hadoop include the HDFS distributed file system for high-bandwidth storage, and MapReduce for parallel data processing. Hadoop can deliver data and run large-scale jobs reliably in spite of system changes or failures by detecting and compensating for hardware problems in the cluster.

Introduction to Hadoop and Hadoop component

rebeccatho: This document provides an introduction to Apache Hadoop, which is an open-source software framework for distributed storage and processing of large datasets. It discusses Hadoop's main components, MapReduce and HDFS. MapReduce is a programming model for processing large datasets in a distributed manner, while HDFS provides distributed, fault-tolerant storage. Hadoop runs on commodity computer clusters and can scale to thousands of nodes.

Introduction to Big Data & Hadoop Architecture - Module 1

Rohit Agrawal: Learning objectives: in this module, you will learn what Big Data is, the limitations of existing solutions to the Big Data problem, how Hadoop solves that problem, the common Hadoop ecosystem components, the Hadoop architecture, HDFS and the MapReduce framework, and the anatomy of a file write and read.

P.Maharajothi,II-M.sc(computer science),Bon secours college for women,thanjavur.

MaharajothiP: Hadoop is an open-source software framework that supports data-intensive distributed applications. It has a flexible architecture designed for reliable, scalable computing and storage of large datasets across commodity hardware. Hadoop uses a distributed file system and MapReduce programming model, with a master node tracking metadata and worker nodes storing data blocks and performing computation in parallel. It is widely used by large companies to analyze massive amounts of structured and unstructured data.

Hadoop technology

Sohini~~ Music: The document describes a multi-node Hadoop cluster used for processing large amounts of data. The cluster includes multiple nodes with RAM and HDD storage. It uses a primary and secondary name node to pull transaction logs, merge changes, and store data to HDD. Hadoop provides a cost-effective and reliable solution for processing large datasets across standard servers in a parallel and scalable way.

Getting started big data

Kibrom Gebrehiwot: Apache Hadoop is a popular open-source framework for storing and processing large datasets across clusters of computers. It includes Apache HDFS for distributed storage, YARN for job scheduling and resource management, and MapReduce for parallel processing. The Hortonworks Data Platform is an enterprise-grade distribution of Apache Hadoop that is fully open source.

Hadoop introduction

Chirag Ahuja: This document provides an introduction to Hadoop, including its ecosystem, architecture, key components like HDFS and MapReduce, characteristics, and popular flavors. Hadoop is an open source framework that efficiently processes large volumes of data across clusters of commodity hardware. It consists of HDFS for storage and MapReduce as a programming model for distributed processing. A Hadoop cluster typically has a single namenode and multiple datanodes. Many large companies use Hadoop to analyze massive datasets.

2. hadoop fundamentals

Lokesh Ramaswamy: Hadoop is an open source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It is modeled on Google's MapReduce programming model and the Google File System. The Hadoop architecture includes a distributed file system (HDFS) that stores data across clusters and a job scheduling and resource management framework (YARN) that allows distributed processing of large datasets in parallel. Key components include the NameNode, DataNodes, ResourceManager and NodeManagers. Hadoop provides reliability through replication of data blocks and automatic recovery from failures.

THE SOLUTION FOR BIG DATA

Tarak Tar: Discusses the major problems facing data storage and the technology that addresses them, along with its drawbacks and future goals.

Hadoop

Anil Reddy: Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of commodity hardware. It uses a simple programming model called MapReduce that automatically parallelizes and distributes work across nodes. Hadoop consists of the Hadoop Distributed File System (HDFS) for storage and the MapReduce execution engine for processing. HDFS stores data as blocks replicated across nodes for fault tolerance. MapReduce jobs are split into map and reduce tasks that process key-value pairs in parallel. Hadoop is well-suited for large-scale data analytics as it scales to petabytes of data and thousands of machines with commodity hardware.
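To make the idea of blocks replicated across nodes concrete, here is a minimal Python sketch under stated assumptions. The 128 MiB block size and replication factor of 3 are assumed values for illustration, the function names are hypothetical, and the round-robin placement is a deliberate simplification; it is not the placement policy HDFS actually uses.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes (128 MiB)
REPLICATION = 3                 # assumed number of copies per block


def place_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Split a file into fixed-size blocks and assign each block to
    `replication` distinct nodes using simple round-robin placement."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size / block_size)
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def surviving_blocks(placement, failed_node):
    """Return the blocks that still have a replica after one node fails."""
    return {
        block
        for block, replicas in placement.items()
        if any(node != failed_node for node in replicas)
    }


if __name__ == "__main__":
    layout = place_blocks(300 * 1024 * 1024, ["n1", "n2", "n3", "n4"])
    print(layout)
    print(surviving_blocks(layout, "n1"))
```

Because every block lives on several distinct nodes, losing any single node in this sketch leaves all blocks readable, which is the fault-tolerance property the summary refers to.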

Fundamental of Big Data with Hadoop and Hive

Sharjeel Imtiaz: This deck leans toward Hadoop/Hive installation experience and ecosystem concepts. Its content is derived from an under-published book, Fundamental of Big Data.

Similar to Hadoop: A distributed framework for Big Data (20)

Introduction to Hadoop

York University: Apache Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. The core of Hadoop consists of HDFS for storage and MapReduce for processing. Hadoop has been expanded with additional projects including YARN for job scheduling and resource management, Pig and Hive for SQL-like queries, HBase for column-oriented storage, Zookeeper for coordination, and Ambari for provisioning and managing Hadoop clusters. Hadoop provides scalable and cost-effective solutions for storing and analyzing massive amounts of data.

Big Data Technologies - Hadoop

Talentica Software: The document provides an overview of Hadoop including what it is, how it works, its architecture and components. Key points include:

- Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers using simple programming models.

- It consists of HDFS for storage and MapReduce for processing via parallel computation using a map and reduce technique.

- HDFS stores data reliably across commodity hardware and MapReduce processes large amounts of data in parallel across nodes in a cluster.

Lecture 2 part 1

Jazan University: The document discusses Hadoop, its components, and how they work together. It covers HDFS, which stores and manages large files across commodity servers; MapReduce, which processes large datasets in parallel; and other tools like Pig and Hive that provide interfaces for Hadoop. Key points are that Hadoop is designed for large datasets and hardware failures, HDFS replicates data for reliability, and MapReduce moves computation instead of data for efficiency.

02 Hadoop.pptx HADOOP VENNELA DONTHIREDDY

Venneladonthireddy1: Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of commodity hardware. It uses a master-slave architecture with the NameNode as master and DataNodes as slaves. The NameNode manages file system metadata and the DataNodes store data blocks. Hadoop also includes a MapReduce engine where the JobTracker splits jobs into tasks that are processed by TaskTrackers on each node. Hadoop saw early adoption from companies handling big data like Yahoo!, Facebook and Amazon and is now widely used for applications like advertisement targeting, search, and security analytics.

Hadoop bigdata overview

harithakannan: This document provides an overview of Hadoop, an open source framework for distributed storage and processing of large datasets across clusters of computers. It explains that Hadoop was created to address the challenges of "Big Data", characterized by high volume, variety and velocity of data. The key components of Hadoop are HDFS for storage and MapReduce as an execution engine for distributed computation. HDFS uses a master-slave architecture with a NameNode master and DataNode slaves, and provides fault tolerance through data replication. MapReduce allows processing of large datasets in parallel through mapping and reducing functions.

Hadoop trainting in hyderabad@kelly technologies

Kelly Technologies: Kelly Technologies describes itself as the best Hadoop training institute in Hyderabad, providing Hadoop training by real-time faculty in Hyderabad.

www.kellytechno.com

Big data applications

Juan Pablo Paz Grau, Ph.D., PMP: A summarized version of a presentation on Big Data architecture, covering topics from the Big Data concept to Hadoop and tools like Hive, Pig and Cassandra.

Big data and hadoop

Mohit Tare: Big data is generated from a variety of sources at a massive scale and high velocity. Hadoop is an open source framework that allows processing and analyzing large datasets across clusters of commodity hardware. It uses a distributed file system called HDFS that stores multiple replicas of data blocks across nodes for reliability. Hadoop also uses a MapReduce processing model where mappers process data in parallel across nodes before reducers consolidate the outputs into final results. An example demonstrates how Hadoop would count word frequencies in a large text file by mapping word counts across nodes before reducing the results.

hadoop

Deep Mehta: This deck explains what Hadoop is and how it overcomes the disadvantages of distributed systems, and includes an example MapReduce program.

Hadoop and Distributed Computing

Federico Cargnelutti: This document discusses distributed computing and Hadoop. It begins by explaining distributed computing and how it divides programs across several computers. It then introduces Hadoop, an open-source Java framework for distributed processing of large data sets across clusters of computers. Key aspects of Hadoop include its scalable distributed file system (HDFS), MapReduce programming model, and ability to reliably process petabytes of data on thousands of nodes. Common use cases and challenges of using Hadoop are also outlined.

002 Introduction to hadoop v3

Dendej Sawarnkatat: The document provides an introduction to Apache Hadoop, including:

1) It describes Hadoop's architecture which uses HDFS for distributed storage and MapReduce for distributed processing of large datasets across commodity clusters.

2) It explains that Hadoop solves issues of hardware failure and combining data through replication of data blocks and a simple MapReduce programming model.

3) It gives a brief history of Hadoop originating from Doug Cutting's Nutch project and the influence of Google's papers on distributed file systems and MapReduce.

Optimal Execution Of MapReduce Jobs In Cloud - Voices 2015

Deanna Kosaraju: Optimal Execution Of MapReduce Jobs In Cloud

Anshul Aggarwal, Software Engineer, Cisco Systems

Session Length: 1 Hour

Tue March 10 21:30 PST

Wed March 11 0:30 EST

Wed March 11 4:30:00 UTC

Wed March 11 10:00 IST

Wed March 11 15:30 Sydney

Voices 2015 www.globaltechwomen.com

We use the MapReduce programming paradigm because it lends itself well to most data-intensive analytics jobs run in the cloud these days, given its ability to scale out and use several machines to process data in parallel. Research has demonstrated that existing approaches to provisioning other applications in the cloud are not immediately relevant to MapReduce-based applications. Provisioning a MapReduce job entails requesting the optimum number of resource sets (RS) and configuring MapReduce parameters such that each resource set is maximally utilized.

Each application has a different bottleneck resource (CPU, disk, or network) and a different bottleneck resource utilization, and thus needs to pick a different combination of these parameters based on the job profile so that the bottleneck resource is maximally utilized.

The problem at hand is thus to define a resource provisioning framework for MapReduce jobs running in a cloud, keeping in mind performance goals such as optimal resource utilization at minimum cost, lower execution time, energy awareness, automatic handling of node failure, and high scalability.

THE SOLUTION FOR BIG DATA

THE SOLUTION FOR BIG DATATarak Tar Discussed the huge problems facing on Data storages and enclosed the Technology for it.Discussed drawbacks and future goals of it.

Hadoop Maharajathi,II-M.sc.,Computer Science,Bonsecours college for women

Hadoop Maharajathi,II-M.sc.,Computer Science,Bonsecours college for womenmaharajothip1 This document provides an overview of Hadoop, an open-source software framework for distributed storage and processing of large datasets across commodity hardware. It discusses Hadoop's history and goals, describes its core architectural components including HDFS, MapReduce and their roles, and gives examples of how Hadoop is used at large companies to handle big data.

Big data & hadoop

Big data & hadoopAbhi Goyan This document discusses big data and Apache Hadoop. It defines big data as large, diverse, complex data sets that are difficult to process using traditional data processing applications. It notes that big data comes from sources like sensor data, social media, and business transactions. Hadoop is presented as a tool for working with big data through its distributed file system HDFS and MapReduce programming model. MapReduce allows processing of large data sets across clusters of computers and can be used to solve problems like search, sorting, and analytics. HDFS provides scalable and reliable storage and access to data.

Unit IV.pdf

Unit IV.pdfKennyPratheepKumar This document provides information about Hadoop and its components. It discusses the history of Hadoop and how it has evolved over time. It describes key Hadoop components including HDFS, MapReduce, YARN, and HBase. HDFS is the distributed file system of Hadoop that stores and manages large datasets across clusters. MapReduce is a programming model used for processing large datasets in parallel. YARN is the cluster resource manager that allocates resources to applications. HBase is the Hadoop database that provides real-time random data access.

Big data and hadoop overvew

Big data and hadoop overvewKunal Khanna The document provides an overview of big data and Hadoop, discussing what big data is, current trends and challenges, approaches to solving big data problems including distributed computing, NoSQL, and Hadoop, and introduces HDFS and the MapReduce framework in Hadoop for distributed storage and processing of large datasets.

Hadoop: A distributed framework for Big Data

- 2. What is Big Data? · What is Hadoop? · Why a Distributed File System? · Hadoop Distributed File System (HDFS) · Replication & Rack Awareness

- 3. Major Problems in Distributed File Systems · Hadoop Computing Model (MapReduce) · Advantages of Hadoop · Disadvantages of Hadoop · Prominent Users · Tools

- 4. Big data refers to data volumes in the range of exabytes (10^18 bytes) and beyond, i.e. very large amounts of data. We define “Big Data” as the amount of data just beyond technology’s capability to store, manage, and process efficiently.

- 6. Doug Cutting. 2005: Doug Cutting and Michael J. Cafarella developed Hadoop to support distribution for the Nutch search engine project. The project was funded by Yahoo. 2006: Yahoo gave the project to the Apache Software Foundation.

- 7. • Hadoop was created by Doug Cutting and Mike Cafarella in 2005. Cutting worked at Yahoo! during Hadoop's early development. • Hadoop is a software framework for distributed processing of large datasets across large clusters of computers. • Hadoop is an open-source implementation of Google's MapReduce. • Hadoop is based on a simple programming model called MapReduce.

- 8. • Hadoop is based on a simple data model; any data will fit. • Apache Hadoop is an open-source software framework written in Java for distributed storage and processing. • The Hadoop framework consists of two main layers: • Distributed file system (HDFS) • Execution engine (MapReduce) • Hadoop follows a write-once, read-many access model.

- 9. Hadoop processes data in parallel, so large amounts of data take less time to process.

- 10. Datanodes can be organized into racks

- 11. Single name node and many data nodes. The name node maintains the file system metadata. Files are split into fixed-size blocks and stored on data nodes (default block size: 64 MB). Data blocks are replicated for fault tolerance and fast access (default replication factor: 3). Data nodes periodically send heartbeats to the name node. HDFS is a master-slave architecture. Master: name node. Slaves: data nodes (100s or 1000s of nodes).
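
The block and replication figures on this slide translate into simple arithmetic. The following is a minimal sketch, assuming the slide's defaults (64 MB blocks, replication factor 3); the function name `hdfs_footprint` is hypothetical and is not part of any Hadoop API.

```python
import math

BLOCK_SIZE_MB = 64       # default block size quoted on the slide
REPLICATION_FACTOR = 3   # default replication factor quoted on the slide


def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB,
                   replication=REPLICATION_FACTOR):
    """Return (number of blocks, total block replicas, raw storage in MB).

    Hypothetical helper for illustration only.
    """
    # A file is cut into fixed-size blocks; the last one may be partial.
    blocks = math.ceil(file_size_mb / block_size_mb)
    # Every block is stored `replication` times across the data nodes.
    replicas = blocks * replication
    # A partial last block only occupies the bytes it holds, so raw
    # storage is file size times replication, not blocks * block size.
    raw_storage_mb = file_size_mb * replication
    return blocks, replicas, raw_storage_mb


print(hdfs_footprint(1000))  # (16, 48, 3000)
```

Under these assumptions a 1000 MB file becomes 16 blocks, is stored as 48 block replicas, and consumes roughly 3000 MB of raw cluster capacity.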

- 13. Under-replication: total replicas < replication factor. Over-replication: total replicas > replication factor.

- 17. 1) Hardware Failure 2) Large Data Sets 3) Redundancy of Data
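
The two conditions on this slide can be written as a small check. This is an illustrative sketch only: the function `replication_state` and the sample block map are hypothetical, and a real name node tracks this state internally from data node reports.

```python
def replication_state(live_replicas, replication_factor=3):
    """Classify a block by comparing its replica count with the target."""
    if live_replicas < replication_factor:
        return "under-replicated"   # more copies need to be created
    if live_replicas > replication_factor:
        return "over-replicated"    # surplus copies can be deleted
    return "healthy"


# Hypothetical block map: block id -> data nodes currently holding a copy.
block_locations = {
    "blk_1": ["dn1", "dn2", "dn3"],
    "blk_2": ["dn1"],
    "blk_3": ["dn1", "dn2", "dn3", "dn4"],
}

for block_id, nodes in block_locations.items():
    print(block_id, replication_state(len(nodes)))
```

A block typically becomes under-replicated when a data node holding one of its copies fails, and over-replicated when a failed node rejoins after the missing copy has already been recreated.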

- 18. Two main phases: Map and Reduce. • Any job is converted into map and reduce tasks. • Developers need ONLY to implement the Map and Reduce classes. MapReduce is a master-slave architecture. • Master: JobTracker • Slaves: TaskTrackers (100s or 1000s of TaskTrackers) • Every data node runs a TaskTracker.

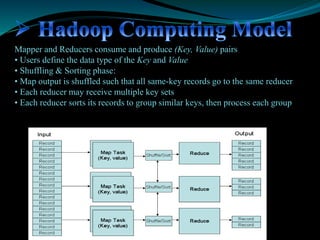

- 19. Mappers and reducers consume and produce (Key, Value) pairs. • Users define the data types of the Key and Value. • Shuffling & Sorting phase: • Map output is shuffled so that all records with the same key go to the same reducer. • Each reducer may receive multiple key sets. • Each reducer sorts its records to group identical keys, then processes each group.
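
The shuffle and sort step described on this slide can be sketched in a few lines. This is a single-process illustration, not Hadoop code: the names `partition` and `shuffle_and_sort` are hypothetical, and CRC32 is used here only as a stand-in for whatever deterministic hash a real partitioner applies to the key.

```python
import zlib
from collections import defaultdict


def partition(key, num_reducers):
    """Map a key to a reducer index; the same key always gets the same index."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle_and_sort(map_output, num_reducers):
    """Route (key, value) pairs to reducers, then sort and group by key."""
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in map_output:
        # Shuffle: all records with the same key land in the same bucket.
        buckets[partition(key, num_reducers)][key].append(value)
    # Sort: each reducer sees its keys in order, one group of values per key.
    return [sorted(bucket.items()) for bucket in buckets]


map_output = [("hadoop", 1), ("hdfs", 1), ("hadoop", 1), ("yarn", 1)]
for reducer_id, groups in enumerate(shuffle_and_sort(map_output, 2)):
    print(reducer_id, groups)
```

Each reducer receives a sorted list of `(key, [values])` groups, which is the input shape the reduce function works on.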

- 20. Job: Count the occurrences of each word in a data set. Map tasks feed reduce tasks. The reduce phase is optional: jobs can be map-only.
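
The word-count job on this slide can be imitated in plain Python to show what the map and reduce functions each contribute. This is a single-process sketch of the programming model, not code that runs on a Hadoop cluster; the function names are hypothetical.

```python
from collections import defaultdict


def map_words(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def reduce_counts(word, counts):
    """Reduce: sum all the counts emitted for one word."""
    return word, sum(counts)


def word_count(lines):
    """Run map, group values by key, then reduce each group."""
    grouped = defaultdict(list)
    for line in lines:
        for word, one in map_words(line):
            grouped[word].append(one)
    return dict(reduce_counts(w, c) for w, c in sorted(grouped.items()))


print(word_count(["big data big cluster", "data node"]))
# {'big': 2, 'cluster': 1, 'data': 2, 'node': 1}
```

A map-only job would stop after `map_words` and write the emitted pairs out directly, which is why the reduce phase is described as optional.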

- 22. 1) Security Concerns 2) Vulnerable by Nature 3) Not Fit for Small Data 4) Potential Stability Issues 5) General Limitations

- 23. 1) Yahoo! 2) Facebook 3) Hadoop hosting in the Cloud 4) Hadoop on Microsoft Azure 5) Hadoop on Amazon EC2/S3 services 6) Amazon Elastic MapReduce

- 24. NoSQL databases: MongoDB, CouchDB, Cassandra, Redis, BigTable, HBase, Hypertable, ZooKeeper. MapReduce: Hadoop, Hive, Pig, Cascading, Caffeine, S4, MapR, Flume, Kafka, Oozie, Greenplum. Storage: S3, Hadoop Distributed File System.

- 25. Servers: EC2, Google App Engine, Elastic Beanstalk. Processing: R, Yahoo! Pipes, Mechanical Turk, Elasticsearch, BigSheets, TinkerPop.