Hadoop & Hep


Simon Metson of Bristol University and CERN's CMS experiment discusses how to use Hadoop to process CERN event data and other data generated by the experiment.
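As a rough illustration of the MapReduce pattern applied to event data, here is a pure-Python sketch that histograms one per-event quantity. The record format (`run_id,energy_gev`), the bin width and every function name are hypothetical, and the map, shuffle and reduce phases are simulated in a single process; this is neither Hadoop's API nor the approach taken in the talk.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical record format: one event per line, "run_id,energy_gev".
BIN_WIDTH_GEV = 10.0


def mapper(line: str) -> Iterator[tuple[int, int]]:
    """Map phase: emit (energy_bin, 1) for each well-formed event record."""
    fields = line.strip().split(",")
    if len(fields) != 2:
        return  # skip malformed records instead of failing the whole task
    try:
        energy = float(fields[1])
    except ValueError:
        return
    yield int(energy // BIN_WIDTH_GEV), 1


def shuffle(pairs: Iterable[tuple[int, int]]) -> dict[int, list[int]]:
    """Shuffle phase: group mapper output by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reducer(key: int, values: list[int]) -> tuple[int, int]:
    """Reduce phase: total event count for one energy bin."""
    return key, sum(values)


def run_job(lines: Iterable[str]) -> dict[int, int]:
    """Run map, shuffle and reduce in sequence and return bin -> count."""
    mapped = (pair for line in lines for pair in mapper(line))
    grouped = shuffle(mapped)
    return dict(reducer(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    events = ["r1,12.5", "r1,17.0", "r2,31.2", "bad record", "r2,5.0"]
    print(run_job(events))  # {0: 1, 1: 2, 3: 1}
```

Because each mapper call only sees one record and each reducer only sees one key's values, both phases can be spread across many machines; that independence is what makes the pattern a fit for large volumes of independent collision events.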


Recommended

01 introduction to cloud computing technology

By Nan Sheng. The document outlines a syllabus for a 15-week course on cloud computing that will cover core cloud techniques including scalable networks, distributed file systems, databases, parallel computing and security. The course aims to help students understand cloud principles and learn how to use popular cloud technologies rather than re-implementing them. It will also include invited seminars from companies like Baidu, Taobao, EMC and Google.

Beihang University Cloud Computing Open Course 01: introduction to cloud computing technology

By yhz87. This document outlines a proposed course on cloud computing that would meet weekly over 16 sessions. It includes core topics like scalable network services, distributed file systems, databases, parallel computing and security. Students would learn principles and how to use tools like Hadoop, HDFS, HBase and Zookeeper rather than reimplementing techniques. The course would feature invited seminars from companies like Baidu, Taobao, EMC and Google. Assessment would be through homework, a midterm exam and a final exam, with homework accounting for 50% of the grade. The goal is for students to gain hands-on experience building a cloud.

Hofstra University - Overview of Big Data

By sarasioux. A talk to PhD candidates and faculty about emerging big data technologies in the media and advertising industries.

Chattanooga Hadoop Meetup - Hadoop 101 - November 2014

By Josh Patterson. Josh Patterson is a principal solution architect who has worked with Hadoop at Cloudera and the Tennessee Valley Authority. Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of commodity servers. It allows for consolidating mixed data types at low cost while keeping raw data always available. Hadoop uses commodity hardware and scales to petabytes without changes. Its distributed file system provides fault tolerance and replication, while its processing engine handles all data types and scales processing.

Big Data is not Rocket Science

By larsgeorge. These are my slides for the 5-minute overview talk I gave during a recent workshop at the European Commission in Brussels on the topic of "Big Data Skills in Europe".

Adapt and respond: keeping responsive into the future

By Chris Mills. Media queries blah blah blah. You've all heard that talk a hundred times, so I won't give it again. Instead, I'll go beyond the obvious, looking at what we can do today to adapt our front-ends to different browsing environments, from mobiles and other alternative devices to older browsers we may be called upon to support.

You'll learn advanced media query and viewport tricks, including a look at @viewport; insights into responsive images, their problems, and current solutions; providing usable alternatives to older browsers with Modernizr and YepNope; other CSS3 responsive goodness (multi-col, Flexbox, and more); and finally where RWD is going: matchMedia, CSS4 media queries, etc.

Help! My Hadoop doesn't work!

By Steve Loughran. This document provides guidance on reporting bugs in the Apache Hadoop project. It explains that the JIRA issue tracker is used to report bugs and feature requests, and outlines best practices for submitting high-quality issue reports. These include searching for existing issues and solutions first, providing detailed steps to replicate the problem, and attaching relevant logs and stack traces. The document discourages "help!" emails and stresses that the best way to get a bug fixed is often for the reporter to propose a patch with tests.

Beyond Unit Testing

By Steve Loughran. The document discusses various techniques for testing large, distributed systems beyond traditional unit testing. It recommends embracing virtualization to simulate production environments and deploying applications and tests across multiple virtual machines. Various tools are presented to help with distributed, automated testing, including Cactus for in-container testing, Selenium and jsUnit for browser testing, and SmartFrog as a framework for describing, deploying and managing distributed service components and tests. The document calls for a focus on system-level tests that simulate the full production environment and integrate testing across distributed systems.

When Web Services Go Bad

By Steve Loughran. The document discusses some of the challenges of developing and deploying web services at scale, including:

- Meeting service level agreements for high availability and performance.

- Choosing appropriate technologies and architectures that can scale to support large volumes of traffic and data.

- Ensuring services are robust, reliable and secure through practices like rigorous testing, monitoring, and automated deployment.

- Fostering collaboration between development and operations teams to address deployment issues as they arise.

Benchmarking

By Steve Loughran. The document discusses benchmarking Hadoop performance and several related challenges: estimating Hadoop performance on given hardware and clusters, designing Hadoop-ready servers and clusters, and optimizing networks for Hadoop. It also discusses a customer request to benchmark Hadoop Sort on a 100GB dataset and considers other benchmark tests such as PageRank, RAM- and CPU-intensive tests, and tests that perform seeks in files. Finally, it discusses measuring network traffic related to specific Hadoop jobs, using small test datasets to predict performance on large datasets, and recommendations for Hadoop-ready server, rack, and container hardware designs.
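One simple way to "use small test datasets to predict performance on large datasets" is to fit a line through runtimes measured at a few small input sizes and extrapolate. The sketch below does an ordinary least-squares fit in plain Python; the function names and the timings are hypothetical, not measurements from the slides. It assumes runtime grows roughly linearly with input size, which real jobs often violate once shuffle traffic, network links or disk contention become the bottleneck.

```python
from typing import Sequence


def fit_linear(sizes_gb: Sequence[float], runtimes_s: Sequence[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of runtime = slope * size + intercept."""
    n = len(sizes_gb)
    if n != len(runtimes_s) or n < 2:
        raise ValueError("need at least two paired measurements")
    mean_x = sum(sizes_gb) / n
    mean_y = sum(runtimes_s) / n
    sxx = sum((x - mean_x) ** 2 for x in sizes_gb)
    if sxx == 0:
        raise ValueError("input sizes must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes_gb, runtimes_s))
    slope = sxy / sxx  # seconds per extra GB
    intercept = mean_y - slope * mean_x  # fixed overhead, e.g. job startup
    return slope, intercept


def predict_runtime(size_gb: float, slope: float, intercept: float) -> float:
    """Extrapolate the fitted line to a (larger) input size."""
    return slope * size_gb + intercept


if __name__ == "__main__":
    # Hypothetical timings from small sort runs, not measured data.
    sizes = [1.0, 2.0, 4.0]
    runtimes = [30.0, 40.0, 60.0]
    slope, intercept = fit_linear(sizes, runtimes)
    print(round(predict_runtime(100.0, slope, intercept), 1))
```

Keeping the intercept separate from the slope makes the fixed per-job overhead visible, which is one reason small runs can look disproportionately slow.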

Deploying On EC2

By Steve Loughran. This document summarizes Steve Loughran's research into deploying applications across distributed cloud resources like Amazon EC2 and S3. It discusses moving from single-server installations to server farms and cloud computing. Key benefits include scaling easily without large capital costs, but challenges include lack of persistent storage, dynamic IP addresses, and single points of failure. The document provides examples of using EC2 and S3 programmatically through the SmartFrog framework.

HA Hadoop -ApacheCon talk

By Steve Loughran. Hadoop provides high availability through replication of data across multiple nodes. Replication handles data integrity through checksums and automatic re-replication of corrupt blocks. Rack failures are reduced by dual networking and more replication bandwidth. NameNode failures are rare but cause downtime, so Hadoop 1 adds cold failover of NameNodes using VMware HA or Red Hat HA. Hadoop 2 introduces live failover of NameNodes using a quorum journal manager to eliminate single points of failure. Full-stack high availability adds monitoring and restart of all services.
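The checksum-and-re-replicate idea can be sketched in a few lines. This is a toy model, not HDFS code: real HDFS checksums data in small chunks and the NameNode schedules re-replication, whereas here a single hypothetical `Cluster` class keeps one CRC32 per block replica, drops replicas whose checksum no longer matches, and copies a good replica to fresh nodes until the replication factor is restored.

```python
import zlib
from dataclasses import dataclass, field

REPLICATION_FACTOR = 3  # HDFS's default replication factor is also 3


@dataclass
class Replica:
    data: bytes
    checksum: int  # CRC32 recorded when the block was first written


@dataclass
class Cluster:
    # node name -> {block_id: Replica}
    nodes: dict[str, dict[str, Replica]] = field(default_factory=dict)

    def write(self, block_id: str, data: bytes, targets: list[str]) -> None:
        """Store one replica per target node, all carrying the same checksum."""
        checksum = zlib.crc32(data)
        for node in targets[:REPLICATION_FACTOR]:
            self.nodes.setdefault(node, {})[block_id] = Replica(data, checksum)

    @staticmethod
    def _is_intact(replica: Replica) -> bool:
        return zlib.crc32(replica.data) == replica.checksum

    def healthy_replicas(self, block_id: str) -> list[str]:
        """Nodes holding a replica whose data still matches its checksum."""
        return [
            node for node, blocks in self.nodes.items()
            if block_id in blocks and self._is_intact(blocks[block_id])
        ]

    def repair(self, block_id: str) -> list[str]:
        """Drop corrupt replicas, then copy a good one to fresh nodes."""
        good = self.healthy_replicas(block_id)
        if not good:
            raise RuntimeError(f"no intact replica of {block_id} survives")
        corrupt = [
            node for node, blocks in self.nodes.items()
            if block_id in blocks and node not in good
        ]
        for node in corrupt:
            del self.nodes[node][block_id]
        source = self.nodes[good[0]][block_id]
        # Prefer nodes that never held this block over the ones that corrupted it.
        candidates = [n for n in self.nodes if n not in good and n not in corrupt]
        needed = max(0, REPLICATION_FACTOR - len(good))
        for node in candidates[:needed]:
            self.nodes[node][block_id] = Replica(source.data, source.checksum)
        return self.healthy_replicas(block_id)


if __name__ == "__main__":
    cluster = Cluster(nodes={name: {} for name in ("dn1", "dn2", "dn3", "dn4")})
    cluster.write("blk_1", b"event payload", ["dn1", "dn2", "dn3"])
    cluster.nodes["dn2"]["blk_1"].data = b"bit rot"  # simulate silent corruption
    print(cluster.repair("blk_1"))  # ['dn1', 'dn3', 'dn4']
```

The checksum is what turns silent corruption into a detectable failure; replication is what makes that failure recoverable.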

Hadoop: today and tomorrow

By Steve Loughran. A presentation on where Hadoop is today, and where it is going, given at the London Hadoop Users Group in April 2012.

The Wondrous Curse of Interoperability

By Steve Loughran. Slides from 2003 on web services (WS) interoperability. These predate WS-*, incidentally; the SOAPBuilders group were the people working together.

Testing

By Steve Loughran. The document discusses various types of software testing, including unit testing, functional testing, system testing, performance testing, and acceptance testing. It provides examples of unit test frameworks for different programming languages. Test-driven development and continuous integration tools are advocated to improve testing practices and prevent code that does not work from being deployed. Challenges of testing, like the difficulty of testing distributed systems, are also outlined.

My other computer is a datacentre - 2012 edition

By Steve Loughran. An updated version of the "My other computer is a datacentre" talk, presented at a Bristol University HPC talk.

Because it is targeted at universities, it emphasises some of the interesting problems: the classic CS ones of scheduling, newer ones of availability and failure handling within what is now a single computer, and emergent problems of power and heterogeneity. It also includes references, all of which are worth reading; being mostly Google and Microsoft papers, they are free to download without needing ACM or IEEE library access.

Comments welcome.

Hadoop Futures

By Steve Loughran. Tom White presented on the future of Hadoop at a user group meeting. Key goals for Hadoop include modularity, support for multiple languages, and integration with other systems. The Hadoop project was split into core, HDFS, and MapReduce repositories. Upcoming releases include 0.20.1 and 0.21, with 1.0 to establish versioning rules. Interesting projects include using Avro for RPC, distributed configuration, and improving MapReduce performance.

New Roles In The Cloud

By Steve Loughran. A presentation on how developer roles change when they meet cloud infrastructure, and how a "role-driven", template-based VM deployment model helps this separation.

Farming hadoop in_the_cloud

By Steve Loughran. The document discusses running Hadoop clusters in the cloud and the challenges that presents. It introduces CloudFarmer, a tool that allows defining roles for VMs and dynamically allocating VMs to roles. This allows building agile Hadoop clusters in the cloud that can adapt as needs change, without static configurations. CloudFarmer provides a web UI to manage roles and hosts.

Hadoop and Kerberos: the Madness Beyond the Gate: January 2016 edition

By Steve Loughran. An update of the "Hadoop and Kerberos: the Madness Beyond the Gate" talk, covering recent work on the "Fix Kerberos" JIRA and its first deliverable, KDiag.

Application Architecture For The Cloud

By Steve Loughran. The document discusses considerations for building application architectures for cloud computing. It outlines some benefits and drawbacks of moving applications to the cloud. It also discusses components needed for cloud applications, such as web UIs, agile scaling, live upgrades, and using services like Amazon S3, SimpleDB, and EC2. It proposes an "Apache Cloud Computing Edition" with technologies like Hadoop and MapReduce and addresses needs like configuration, resource management, and testing in the cloud.

Apache Spark and Object Stores

By Steve Loughran. This document discusses using Apache Spark with object stores like Amazon S3 and Microsoft Azure Blob Storage. It covers challenges around classpath configuration, credentials, code examples, and performance commitments when using these storage systems. Key points include using Hadoop connectors like S3A and WASB, configuring credentials through properties or environment variables, and tuning Spark for object store performance and consistency.
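Configuring S3A credentials "through properties or environment variables" can be bridged with a small helper that reads the standard AWS environment variables and emits Spark settings. The helper name is hypothetical and this is not taken from the slides. It relies on Spark copying any `spark.hadoop.*` setting into the Hadoop configuration with the prefix stripped, so the values reach the S3A connector as `fs.s3a.access.key`, `fs.s3a.secret.key` and, for temporary credentials, `fs.s3a.session.token`.

```python
import os
from typing import Mapping, Optional


def s3a_spark_conf(env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Map AWS credentials from an environment mapping onto S3A settings.

    Keys use the "spark.hadoop." prefix so Spark forwards them to the
    Hadoop configuration that the S3A connector reads.
    """
    env = os.environ if env is None else env
    access_key = env.get("AWS_ACCESS_KEY_ID")
    secret_key = env.get("AWS_SECRET_ACCESS_KEY")
    if not access_key or not secret_key:
        raise KeyError("AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY must both be set")
    conf = {
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
    }
    # Temporary (STS) credentials also need a session token and a provider
    # that knows how to use it.
    session_token = env.get("AWS_SESSION_TOKEN")
    if session_token:
        conf["spark.hadoop.fs.s3a.session.token"] = session_token
        conf["spark.hadoop.fs.s3a.aws.credentials.provider"] = (
            "org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider"
        )
    return conf
```

In PySpark the result could be applied with `SparkConf().setAll(conf.items())`. Passing secrets this way, rather than as `--conf` arguments to `spark-submit`, keeps them out of process listings.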

Spark Summit East 2017: Apache spark and object stores

By Steve Loughran. Spark Summit East 2017 talk on Apache Spark and object store integration, specifically AWS S3 and Azure WASB.

Household INFOSEC in a Post-Sony Era

By Steve Loughran. This document discusses household information security risks in the post-Sony era. It identifies key risks like data integrity, privacy, and availability issues. It provides examples of vulnerabilities across different devices and platforms like LG TVs, iPads, iPhones, and PS4s. It also discusses vulnerabilities in software like Firefox, Chrome, Internet Explorer, Flash, and SparkContext. It recommends approaches to address these risks, like using containers for isolation, validating packages with PGP to ensure authentication, and enabling audit logs.

Hadoop gets Groovy

By Steve Loughran. The document discusses using Groovy to interact with Hadoop. Some key points:

- Groovy allows mappers and reducers to be written more concisely using closures and lists compared to Java.

- Groovy scripts can be run from Hadoop jobs by compiling scripts into classes at runtime.

- Performance of Groovy has improved with Java 7, but Pig should be used over Groovy if possible for production jobs.

- Groovy is best used for testing and extending Hadoop classes, not entire production pipelines.

Unexpected Challenges in Large Scale Machine Learning by Charles Parker

By BigMine. Talk by Charles Parker (BigML) at BigMine12 at KDD12.

In machine learning, scale adds complexity. The most obvious consequence of scale is that data takes longer to process. At certain points, however, scale makes trivial operations costly, forcing us to re-evaluate algorithms in light of the complexity of those operations. Here, we will discuss one important way a general large-scale machine learning setting may differ from the standard supervised classification setting, and show some of the results of preliminary experiments highlighting this difference. The results suggest that there is potential for significant improvement beyond obvious solutions.

Big Data & Hadoop Introduction

By Jayant Mukherjee. Disclaimer:

The images, company, product and service names that are used in this presentation, are for illustration purposes only. All trademarks and registered trademarks are the property of their respective owners.

Data and images were collected from various sources on the Internet.

The intention was to present the big picture of Big Data & Hadoop.

Silicon valley nosql meetup april 2012

By InfiniteGraph. Join Objectivity, Inc.’s VP of Product Management, Brian Clark, in a discussion of the latest trends in Big Data analytics: defining what Big Data is and understanding how to maximize your existing architectures by using NoSQL technologies to improve functionality and provide real-time results. There will be a focus on relationship analytics as well as an introduction to NoSQL data stores and object and graph databases, such as the architecture behind Objectivity/DB and InfiniteGraph.

To Cloud or Not To Cloud?

By Greg Lindahl. The document discusses the pros and cons of using public cloud computing services versus hosting infrastructure internally for a new startup. Some advantages mentioned include flexibility, avoiding large upfront capital expenditures, and the ability to scale resources up and down as needed. Disadvantages include public cloud services becoming more expensive than internal hosting at large scale, inefficient resource ratios for some workloads, and high costs for intensive disk and SSD usage. The document aims to provide considerations for a startup evaluating whether to use public cloud services.

Building A Scalable Open Source Storage Solution

By Phil Cryer. The Biodiversity Heritage Library (BHL), like many other projects within biodiversity informatics, maintains terabytes of data that must be safeguarded against loss. Further, a scalable and resilient infrastructure is required to enable continuous data interoperability, as BHL provides unique services to its community of users. This volume of data and the associated availability requirements present significant challenges to a distributed organization like BHL, not only in funding capital equipment purchases, but also in ongoing system administration and maintenance. A new standardized system is required to bring new opportunities to collaborate on distributed services and processing across what will be geographically dispersed nodes. Such services and processing include taxon name finding, indexes or GUID/LSID services, distributed text mining, names reconciliation and other computationally intensive tasks, or tasks with high availability requirements.


Similar to Hadoop & Hep


Apache hadoop by shah

By Shah Hussain. Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of commodity servers. It was designed to scale up from single servers to thousands of machines, with very high fault tolerance. The core of Hadoop includes the Hadoop Distributed File System (HDFS) for data storage and Hadoop MapReduce for distributed computing. It is written in Java and can run on Linux, Mac OS X, Windows and Solaris. Many large companies such as Amazon, Facebook, Yahoo and IBM use Hadoop to process petabytes of data.

Mapping Life Science Informatics to the Cloud

By Chris Dagdigian. This document discusses strategies for mapping informatics to the cloud. It provides 9 tips for doing so effectively. Tip 1 advises that high-performance computing and clouds require a new model where resources are dedicated to each application. Tip 2 recommends hybrid cloud approaches but cautions that they are less usable than claimed and only sometimes practical. The document emphasizes the need to handle legacy codes in addition to new "big data" approaches.

5 Things that Make Hadoop a Game Changer

By Caserta.

Webinar by Elliott Cordo, Caserta Concepts

There is much hype and mystery surrounding Hadoop's role in analytic architecture. In this webinar, Elliott presented in detail the services and concepts that make Hadoop a truly unique solution, a game changer for the enterprise. He talked about the real benefits of a distributed file system, the multi-workload processing capabilities enabled by YARN, and three other important things you need to know about Hadoop.

To access the recorded webinar, visit the event site: https://www.brighttalk.com/webcast/9061/131029

For more information on the services and solutions that Caserta Concepts offers, please visit http://casertaconcepts.com/

Big data and hadoop overvew

By Kunal Khanna. The document provides an overview of big data and Hadoop: what big data is, current trends and challenges, and approaches to solving big data problems, including distributed computing, NoSQL, and Hadoop. It also introduces HDFS and the MapReduce framework in Hadoop for distributed storage and processing of large datasets.

A Lightning Introduction To Clouds & HLT - Human Language Technology Conference

By Basis Technology. What’s all this cloud stuff, anyway? What kinds of problems do organizations set out to solve with ‘a cloud,’ or even ‘the cloud’? What are a few of the major government initiatives involving this technology? How does HLT in general, and Search in particular, fit?

This talk will take a tour of the technology behind clouds and the sometimes-foggy ambitions of the projects that use them, and look in particular detail at the challenges of applying cloud technologies to Text Analytics.

View more slides from the Human Language Technology Conference 2012 here: http://info.basistech.com/hlt-2012-slides

Cloud-Friendly Hadoop and Hive - StampedeCon 2013

By StampedeCon. At the StampedeCon 2013 Big Data conference in St. Louis, Shrikanth Shankar, Head of Engineering at Qubole, presented Cloud-Friendly Hadoop and Hive. The cloud lowers the barrier to entry into analytics for many small and medium-sized enterprises. Hadoop and related frameworks like Hive, Oozie, and Sqoop are becoming tools of choice for deriving insights from data. However, these frameworks were designed for in-house datacenters, which have different tradeoffs from a cloud environment, and making them run well in the cloud presents some challenges. In this talk, Shrikanth Shankar describes how these experiences taught us to extend Hadoop and Hive to exploit these new tradeoffs. Use cases will be presented that show how solutions to challenges at Facebook's scale are now making it extremely easy for much smaller end users to leverage these technologies in the cloud.

Big iron 2 (published)

Big iron 2 (published)Ben Stopford The document discusses the evolution of database technologies from relational databases to NoSQL databases. It argues that NoSQL databases better fit the needs of modern software development by supporting iterative development, fast feedback, and frequent releases. While early NoSQL technologies faced criticisms regarding lack of features like transactions and integrity checks, they proved useful for scaling applications to large data volumes. The document also advocates for an approach that balances flexibility with complexity by using schemaless stores at the front-end and more rigid structures at the back-end.

Large scale topic modeling

Large scale topic modelingSameer Wadkar The document discusses large scale topic modeling using Latent Dirichlet Allocation (LDA). It provides an overview of LDA, including what it can do, a quick example, and analysis results of applying LDA to Sarah Palin's emails which discovered multiple topics. The document also discusses types of analysis LDA can perform, toolkits for topic modeling, and describes Axiomine's solution for performing large scale LDA without Hadoop for improved performance.

Bw tech hadoop

Bw tech hadoopMindgrub Technologies Hadoop is an open source distributed processing platform for large data sets across clusters of commodity hardware. It allows for the distributed processing of large data sets across clusters of computers using simple programming models. Hadoop features include a distributed file system (HDFS), a MapReduce programming model for large scale data processing, and an ecosystem of projects including HBase, Pig, Hive, and ZooKeeper. Hadoop is well suited for batch processing large amounts of structured and unstructured data, providing scalability and fault tolerance. However, it is not as suitable for low latency queries or updating existing data.

BW Tech Meetup: Hadoop and The rise of Big Data

BW Tech Meetup: Hadoop and The rise of Big Data Mindgrub Technologies Hadoop is an open source, distributed computation platform, that is very important in the worlds of search, analytics, and big data. Donald Miner, a Solutions Architect at Greenplum, will give an hour presentation that will focus on ways to get started with Hadoop and provide advice on how successfully utilize the platform

Specific topics of discussion include how Hadoop works, what Hadoop should and should not be used for, MapReduce design patterns, and the upcoming synergy of SQL and NoSQL in Hadoop.

Pig and Python to Process Big Data

Pig and Python to Process Big DataShawn Hermans Shawn Hermans gave a presentation on using Pig and Python for big data analysis. He began with an introduction and background about himself. The presentation covered what big data is, challenges in working with large datasets, and how tools like MapReduce, Pig and Python can help address these challenges. As examples, he demonstrated using Pig to analyze US Census data and propagate satellite positions from Two Line Element sets. He also showed how to extend Pig with Python user defined functions.

Rhat OSS - Cloudera - Mike Olson - Hadoop Data Analytics In The Cloud

Rhat OSS - Cloudera - Mike Olson - Hadoop Data Analytics In The CloudCloudera, Inc. This document discusses the history and capabilities of Hadoop, an open-source software framework for distributed storage and processing of large datasets across clusters of commodity hardware. It describes how Hadoop was developed from Doug Cutting's work on Nutch in 2002 and its adoption by Yahoo! in 2006. It also provides an overview of Hadoop's key components: HDFS for distributed data storage, MapReduce for distributed computations, and its reliability features which handle faults through replication and rebalancing. Finally, it discusses options for deploying Hadoop in data centers or cloud services.

Houston Hadoop Meetup Presentation by Vikram Oberoi of Cloudera

Houston Hadoop Meetup Presentation by Vikram Oberoi of ClouderaMark Kerzner The document discusses Hadoop, an open-source software framework for distributed storage and processing of large datasets across clusters of commodity hardware. It describes Hadoop's core components - the Hadoop Distributed File System (HDFS) for scalable data storage, and MapReduce for distributed processing of large datasets in parallel. Typical problems suited for Hadoop involve complex data from multiple sources that need to be consolidated, stored inexpensively at scale, and processed in parallel across the cluster.

Petabyte scale on commodity infrastructure

Petabyte scale on commodity infrastructureelliando dias This document discusses Hadoop, an open-source software framework for distributed storage and processing of large datasets across clusters of commodity servers. It describes how Hadoop addresses the need to reliably process huge datasets using a distributed file system and MapReduce processing on commodity hardware. It also provides details on how Hadoop has been implemented and used at Yahoo to process petabytes of data and support thousands of jobs weekly on large clusters.

Dan node meetup_socket_talk

Dan node meetup_socket_talkIshi von Meier This document summarizes Dan Getelman's presentation on using Node.js and Socket.io for real-time functionality at Lore. It discusses Lore's architecture using a front-end framework, Node.js, Socket.io for real-time communications, and a Python API. It describes how they ended up with this architecture to build the best experience for teachers and students. It also outlines how their system works with Redis for messaging, caching, and publishing updates, and how the front-end receives and handles messages.

Hadoop & HEP

- 1. Hadoop and HEP (Simon; Wednesday, 12 August 2009)

- 2. About us
  - CMS will take 1-10 PB of data a year
  - We'll generate approximately the same amount again in simulation data
  - It could run for 20-30 years
  - We have ~80 large computing centres around the world (>0.5 PB and hundreds of job slots each)
  - ~3000 members of the collaboration
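The numbers on this slide compound quickly. A back-of-the-envelope sketch, using only the ranges quoted above and assuming (as a simplification) that simulated data is exactly as large as real data:

```python
# Back-of-the-envelope CMS data volumes, using the ranges quoted on the slide.
# Assumption: simulated data is exactly as large as real data ("approx. the same").

def lifetime_volume_pb(real_pb_per_year: float, years: int, sim_factor: float = 1.0) -> float:
    """Total petabytes of real plus simulated data over the experiment's lifetime."""
    return real_pb_per_year * (1.0 + sim_factor) * years


low = lifetime_volume_pb(1, 20)    # 1 PB/year of real data for 20 years
high = lifetime_volume_pb(10, 30)  # 10 PB/year of real data for 30 years

# ~80 centres with more than 0.5 PB each gives a lower bound on total site storage.
site_storage_floor_pb = 80 * 0.5

print(f"lifetime volume: {low:.0f}-{high:.0f} PB")
print(f"site storage floor: {site_storage_floor_pb:.0f} PB")
```

Even the low lifetime estimate already equals the storage floor, and that is before counting extra copies of the data kept at more than one site.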

- 3. Why so much data?
  - We have a very big digital camera
  - Each event is ~1 MB for normal running
    - The size increases for heavy-ion (HI) running and upgrade studies
  - We need many millions of events to get statistically significant results for rare processes
  - In my thesis I started with ~5M events to see an eventual "signal" of ~300
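At ~1 MB per event, a petabyte is roughly a billion events, and the thesis figures imply a selection that keeps about 6 in every 100,000 events. A small sketch of that arithmetic, assuming decimal units (1 PB = 10^15 bytes, 1 MB = 10^6 bytes):

```python
# Rough event arithmetic, assuming ~1 MB per event (normal running) and
# decimal units: 1 PB = 10**15 bytes, 1 MB = 10**6 bytes.
EVENT_SIZE_BYTES = 10**6
BYTES_PER_PB = 10**15


def events_in(petabytes: int) -> int:
    """Approximate number of ~1 MB events that fit in the given volume."""
    return petabytes * BYTES_PER_PB // EVENT_SIZE_BYTES


def selection_fraction(selected: int, started_with: int) -> float:
    """Fraction of events that survive an analysis selection."""
    return selected / started_with


print(events_in(1), events_in(10))         # one year at the low and high data rates
print(selection_fraction(300, 5_000_000))  # thesis: ~300 signal from ~5M events
```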

- 4. What's an event?
  - We collide protons, which contain quarks
  - Quarks interact to produce excited states of matter
  - These excited states decay, and we record the decay products
  - We then work back from the products to "see" the original event
  - Many events happen at once
  - Think of working out how a carburettor works by crashing 6 cars together on a motorway
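A standard way of "working back" from decay products is to add their measured four-momenta and compute the invariant mass of the combination, m² = (ΣE)² − |Σp|² in natural units (c = 1). The sketch below is a generic plain-Python illustration, not CMSSW code. The energies are made up, and the products are treated as massless.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FourVector:
    """Energy and momentum components in natural units (c = 1), e.g. GeV."""
    e: float
    px: float
    py: float
    pz: float

    def __add__(self, other: "FourVector") -> "FourVector":
        return FourVector(self.e + other.e, self.px + other.px,
                          self.py + other.py, self.pz + other.pz)

    def mass(self) -> float:
        # m^2 = E^2 - |p|^2; clamp tiny negative values caused by rounding.
        m2 = self.e**2 - (self.px**2 + self.py**2 + self.pz**2)
        return math.sqrt(max(m2, 0.0))


def invariant_mass(products: list[FourVector]) -> float:
    """Mass of the parent state reconstructed from its decay products."""
    total = products[0]
    for p in products[1:]:
        total = total + p
    return total.mass()


# Two back-to-back (approximately massless) products, each carrying 45.6 GeV.
muon_a = FourVector(45.6, 0.0, 0.0, 45.6)
muon_b = FourVector(45.6, 0.0, 0.0, -45.6)
print(f"{invariant_mass([muon_a, muon_b]):.1f} GeV")
```

Because the two products are back-to-back, their momenta cancel and all of the energy shows up as mass (91.2 GeV, roughly the Z boson mass). In a real event, the hard part is deciding which of the many overlapping products to combine, since many events happen at once.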

- 5. An event

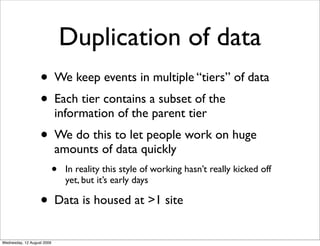

- 6. Duplication of data
  - We keep events in multiple "tiers" of data
  - Each tier contains a subset of the information in its parent tier
  - We do this to let people work on huge amounts of data quickly
  - In reality this style of working hasn't really taken off yet, but it's early days
  - Data is housed at more than one site
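The tier rule on this slide has two parts: each tier keeps a subset of its parent's information, and a slimmer tier is derived by dropping fields. A minimal sketch follows. The tier names and event fields are hypothetical and do not describe the real CMS event content.

```python
from typing import Any

Event = dict[str, Any]

# Hypothetical tiers, from most to least detailed. The tier and field names
# are illustrative only; each tier must keep a subset of its parent's fields.
TIERS: list[tuple[str, frozenset[str]]] = [
    ("full", frozenset({"run", "event", "detector_hits", "tracks", "jets", "muons"})),
    ("reconstructed", frozenset({"run", "event", "tracks", "jets", "muons"})),
    ("analysis", frozenset({"run", "event", "jets", "muons"})),
]


def check_tiers(tiers: list[tuple[str, frozenset[str]]]) -> None:
    """Raise if any tier keeps information that its parent tier lacks."""
    for (parent_name, parent), (child_name, child) in zip(tiers, tiers[1:]):
        if not child <= parent:
            raise ValueError(f"{child_name!r} is not a subset of {parent_name!r}")


def project(event: Event, fields: frozenset[str]) -> Event:
    """Derive a slimmer copy of an event by keeping only the listed fields."""
    return {key: value for key, value in event.items() if key in fields}


check_tiers(TIERS)
event: Event = {
    "run": 1, "event": 42,
    "detector_hits": [0.1, 0.7, 0.3],
    "tracks": [(1.2, 0.4)],
    "jets": [55.0],
    "muons": [45.6],
}
for name, fields in TIERS:
    print(name, sorted(project(event, fields)))
```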

- 7. Duplication of work
  - One person's signal is another's background
  - There is a common framework (CMSSW) for analysis, but very little ability to share large amounts of work
  - People coalesce into working groups, but these are generally small
  - While everyone is trying to do the same thing, they're all trying to do it in different ways
  - I suspect this is different from, say, Yahoo or last.fm

- 8. How we work
  - Large, ~dedicated compute farms
  - PBS/Torque/Maui/SGE batch systems accessed via a grid interface
  - ACLs to prevent misuse of resources
  - Not worried about people reading our data, but worried they might delete it accidentally
  - Prevent DDoS
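The slide's priorities (reads are harmless, accidental deletes are not) map onto an access policy that is open for reading and restrictive for destructive operations. Below is a minimal sketch. The role names and the policy table are hypothetical and are not taken from any real grid middleware.

```python
from enum import Enum, auto


class Op(Enum):
    READ = auto()
    WRITE = auto()
    DELETE = auto()


ANYONE = "*"

# Hypothetical policy matching the slide's priorities: reading is open,
# while writing and deleting are restricted to a (made-up) data-operations role.
POLICY: dict[Op, frozenset[str]] = {
    Op.READ: frozenset({ANYONE}),
    Op.WRITE: frozenset({"data_ops"}),
    Op.DELETE: frozenset({"data_ops"}),
}


def allowed(roles: set[str], op: Op) -> bool:
    """True if any of the caller's roles may perform the operation."""
    permitted = POLICY[op]
    return ANYONE in permitted or bool(roles & permitted)


print(allowed({"analyst"}, Op.READ))
print(allowed({"analyst"}, Op.DELETE))
print(allowed({"data_ops"}, Op.DELETE))
```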

- 9. Where we use Hadoop
  - We currently use Hadoop's HDFS at some of our Tier-2 (T2) sites, mainly in the US
  - This was led by Nebraska and has been very successful to date
  - I suspect more people will switch as centres expand
  - The administration tools, as well as the performance, are particularly appreciated
  - The alternatives are academic/research projects and tend to have a different focus (pub for details/rants)
  - Maintenance and stability of code is a big issue
  - Storage on worker nodes (WNs) is also interesting
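Putting HDFS on worker-node disks means paying for replication. HDFS stores each block several times (the default `dfs.replication` is 3), so usable space is roughly the raw space divided by the replication factor. A small sketch; the node count and disk sizes are invented for illustration.

```python
def usable_capacity_tb(nodes: int, disk_tb_per_node: float, replication: int = 3) -> float:
    """Usable HDFS capacity when every block is stored `replication` times.

    Ignores disk reserved for non-HDFS use, so treat the result as an upper bound.
    """
    if replication < 1:
        raise ValueError("replication must be at least 1")
    return nodes * disk_tb_per_node / replication


# Made-up example: 200 worker nodes, each contributing 2 TB of local disk.
print(usable_capacity_tb(200, 2.0))     # default replication of 3
print(usable_capacity_tb(200, 2.0, 2))  # trading some safety for space
```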

- 10. What would we have to do to run analysis with Hadoop?
  - Split events sensibly over the cluster
    - By event? By file? Don't care?
    - Data files are ~2 GB; we'd need to reliably reconstruct these files for export if we split them up
  - Have CMSSW run in Hadoop
    - Many, many pitfalls there; it may not even be possible...
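If files are split across the cluster, "reliably reconstruct these files for export" amounts to two steps. First, record a checksum for each block and for the whole file. Second, refuse to emit a file unless every check passes. A minimal plain-Python sketch follows. The block size and the choice of Adler-32 (via `zlib.adler32`) are assumptions for illustration, and this is not how HDFS itself stores or verifies blocks.

```python
import zlib
from typing import NamedTuple


class Block(NamedTuple):
    index: int
    payload: bytes
    checksum: int  # Adler-32 of the payload


def split_blocks(data: bytes, block_size: int) -> list[Block]:
    """Cut a file into fixed-size blocks, each carrying its own checksum."""
    blocks = []
    for index, offset in enumerate(range(0, len(data), block_size)):
        payload = data[offset:offset + block_size]
        blocks.append(Block(index, payload, zlib.adler32(payload)))
    return blocks


def reassemble(blocks: list[Block], file_checksum: int) -> bytes:
    """Rebuild the original file, refusing to export a damaged or incomplete copy."""
    ordered = sorted(blocks, key=lambda b: b.index)
    if [b.index for b in ordered] != list(range(len(ordered))):
        raise ValueError("missing or duplicated block")
    for block in ordered:
        if zlib.adler32(block.payload) != block.checksum:
            raise ValueError(f"block {block.index} is corrupt")
    data = b"".join(block.payload for block in ordered)
    # A missing final block leaves the indices contiguous, so the whole-file
    # checksum is the last line of defence.
    if zlib.adler32(data) != file_checksum:
        raise ValueError("whole-file checksum mismatch")
    return data


# Stand-in for a ~2 GB data file, split into small blocks for the demo.
original = bytes(range(256)) * 40
blocks = split_blocks(original, block_size=1000)
restored = reassemble(list(reversed(blocks)), zlib.adler32(original))
print(len(blocks), restored == original)
```

Cutting at fixed byte offsets, as here, ignores event boundaries. Splitting by event instead would need knowledge of the file format, which is why the "by event? by file?" question matters.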

- 11. Metadata
  - There is a lot of metadata associated with the data itself
  - Moving that to HBase or similar and mining it with Hadoop would be interesting
  - Currently this is stored in big Oracle databases
  - Also log mining, though it's probably harder to get people interested in this
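Log mining makes a good first MapReduce job because it splits cleanly into map, shuffle and reduce phases. The sketch below runs those phases in-process in plain Python to show the shape of the computation. The log format and site names are hypothetical. A real job would run the mapper and reducer under Hadoop (for example via Hadoop Streaming) rather than in a single process.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical log format: "<site> <status> <free-text message>".
LOG_LINES = [
    "site_a OK job 17 finished",
    "site_b ERROR stage-out failed",
    "site_a ERROR file open timeout",
    "site_a OK job 18 finished",
]

Key = tuple[str, str]


def mapper(line: str) -> Iterator[tuple[Key, int]]:
    """Emit ((site, status), 1) for each log line."""
    site, status, _message = line.split(maxsplit=2)
    yield (site, status), 1


def shuffle(pairs: Iterable[tuple[Key, int]]) -> dict[Key, list[int]]:
    """Group values by key, as the framework does between map and reduce."""
    groups: dict[Key, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(key: Key, values: list[int]) -> tuple[Key, int]:
    """Sum the counts for one (site, status) key."""
    return key, sum(values)


def run(lines: Iterable[str]) -> dict[Key, int]:
    pairs = (pair for line in lines for pair in mapper(line))
    return dict(reducer(key, values) for key, values in shuffle(pairs).items())


for (site, status), count in sorted(run(LOG_LINES).items()):
    print(site, status, count)
```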

- 12. Issues
  - Some analyses don't map onto MapReduce
  - Data is complex and in a weird file format
  - CMSSW has a large memory footprint
  - It's not efficient to run only a few events, as start-up/tear-down is expensive
  - Sociologically, it would be difficult to persuade people to move to MapReduce algorithms
    - At least until people see the benefits, and demonstrating those benefits is hard; physicists don't think in cost terms
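The start-up point is simple arithmetic. If a task pays fixed start-up and tear-down costs, its efficiency is n·t / (t_start + n·t + t_stop), which is poor for small n. This matters for MapReduce, because the input split handed to each task determines how many events it processes. The timings below are invented for illustration and are not measured CMSSW figures.

```python
def efficiency(n_events: int, startup_s: float, per_event_s: float,
               teardown_s: float = 0.0) -> float:
    """Fraction of a task's wall time spent processing events."""
    busy = n_events * per_event_s
    return busy / (startup_s + busy + teardown_s)


# Invented timings: 60 s to start the framework, 1 s per event, 10 s to tear down.
for n in (10, 100, 1_000, 10_000):
    print(f"{n:>6} events: {efficiency(n, 60.0, 1.0, 10.0):.1%} of wall time is useful")
```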