Hadoop Installation

- 1. A GUIDE TO HADOOP INSTALLATION BY WizIQ

- 2. Hadoop Installation • PuTTY connectivity • Java installation (openjdk-7-jdk) • Group/user creation & SSH certification • Hadoop install (Hadoop 2.2.0) • Hadoop configuration • Hadoop services

- 3. PuTTY Connectivity • User: ubuntu@<name> • Under SSH > Auth, browse to the location of the .ppk file

- 5. Java Installation
  - ubuntu@ip-10-45-133-21:~$ sudo apt-get install openjdk-7-jdk
  - Error: Err http://us-east-1.ec2.archive.ubuntu.com/ubuntu/ precise-updates/main liblvm2app2.2 amd64 2.02.66-4ubuntu7.1 403 Forbidden] ……
  - Solution:
    - ubuntu@ip-10-45-133-21:~$ sudo apt-get update
    - ubuntu@ip-10-45-133-21:~$ sudo apt-get install openjdk-7-jdk
    - ubuntu@ip-10-45-133-21:~$ cd /usr/lib/jvm
  - Error:
    - ubuntu@ip-10-45-133-21:/usr/lib/jvm$ ln -s java-7-openjdk-amd64 jdk
    - ln: failed to create symbolic link `jdk': Permission denied
  - Solution:
    - ubuntu@ip-10-45-133-21:/usr/lib/jvm$ sudo su
    - root@ip-10-45-133-21:/usr/lib/jvm# ln -s java-7-openjdk-amd64 jdk
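The symlink step above fails only because /usr/lib/jvm is owned by root. As a minimal sketch, the link creation can be wrapped in a small function that takes the JVM directory as a parameter, so it can be tried on a scratch directory first. The name `link_jdk` is a hypothetical helper, not part of any package.

```shell
# link_jdk: hypothetical helper that creates (or refreshes) a "jdk" symlink.
# $1: JVM directory (defaults to /usr/lib/jvm)
# $2: JDK directory name inside it (defaults to java-7-openjdk-amd64)
link_jdk() {
    jvm_dir="${1:-/usr/lib/jvm}"
    target="${2:-java-7-openjdk-amd64}"
    if [ ! -d "$jvm_dir/$target" ]; then
        echo "missing $jvm_dir/$target" >&2
        return 1
    fi
    # -f replaces an existing link; -n keeps ln from descending into it.
    ln -sfn "$target" "$jvm_dir/jdk"
}
```

For the real /usr/lib/jvm the command still needs root. Running `sudo ln -s java-7-openjdk-amd64 /usr/lib/jvm/jdk` as the ubuntu user should have the same effect as switching to root with `sudo su` first.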

- 6. root@ip-10-45-133-21:/usr/lib/jvm# sudo apt-get install openssh-server

- 7. Group/User Creation & SSH Certification
  - ubuntu@ip-10-45-133-21$ sudo addgroup hadoop
  - ubuntu@ip-10-45-133-21$ sudo adduser --ingroup hadoop hduser
  - ubuntu@ip-10-45-133-21$ sudo adduser hduser sudo
  - After the user is created, log in to Ubuntu again as hduser:
    - ubuntu@ip-10-45-133-21:~$ su -l hduser
    - Password:
  - Set up the SSH certificate:
    - hduser@ip-10-45-133-21$ ssh-keygen -t rsa -P ''
    - ...
    - Your identification has been saved in /home/hduser/.ssh/id_rsa.
    - Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.
    - ...
    - hduser@ip-10-45-133-21$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    - hduser@ip-10-45-133-21$ ssh localhost

- 8. Note: I skipped the passphrase for id_rsa here:

- 9. Hadoop Install (Hadoop 2.2.0)
  - hduser@ip-10-45-133-21:~# su -l hduser
  - hduser@ip-10-45-133-21$ cd ~
  - hduser@ip-10-45-133-21$ wget http://www.trieuvan.com/apache/hadoop/common/hadoop-2.2.0/hadoop-2.2.0.tar.gz
  - hduser@ip-10-45-133-21$ sudo tar vxzf hadoop-2.2.0.tar.gz -C /usr/local
  - hduser@ip-10-45-133-21$ cd /usr/local
  - hduser@ip-10-45-133-21$ sudo mv hadoop-2.2.0 hadoop
  - hduser@ip-10-45-133-21$ sudo chown -R hduser:hadoop hadoop

- 10. Hadoop Configuration
  - Set up the Hadoop environment variables:
    - hduser@ip-10-45-133-21$ cd ~
    - hduser@ip-10-45-133-21$ vi .bashrc
  - Copy and paste the following at the end of the file:
    - #Hadoop variables
    - export JAVA_HOME=/usr/lib/jvm/jdk/
    - export HADOOP_INSTALL=/usr/local/hadoop
    - export PATH=$PATH:$HADOOP_INSTALL/bin
    - export PATH=$PATH:$HADOOP_INSTALL/sbin
    - export HADOOP_MAPRED_HOME=$HADOOP_INSTALL
    - export HADOOP_COMMON_HOME=$HADOOP_INSTALL
    - export HADOOP_HDFS_HOME=$HADOOP_INSTALL
    - export YARN_HOME=$HADOOP_INSTALL
    - ###end of paste
  - Then set JAVA_HOME in hadoop-env.sh as well:
    - hduser@ip-10-45-133-21$ cd /usr/local/hadoop/etc/hadoop
    - hduser@ip-10-45-133-21$ vi hadoop-env.sh
    - #modify JAVA_HOME
    - export JAVA_HOME=/usr/lib/jvm/jdk/
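After logging in again, it can help to confirm that the variables from .bashrc were actually picked up before running any Hadoop command. The sketch below assumes only the two variables that point at directories, JAVA_HOME and HADOOP_INSTALL; `check_hadoop_env` is a hypothetical helper name.

```shell
# check_hadoop_env: hypothetical helper that reports unset or dangling
# variables. Prints one line per problem and returns non-zero if any exist.
check_hadoop_env() {
    status=0
    for var in JAVA_HOME HADOOP_INSTALL; do
        # Indirect lookup of the variable named in $var (empty if unset).
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "$var is not set"
            status=1
        elif [ ! -d "$value" ]; then
            echo "$var points to a missing directory: $value"
            status=1
        fi
    done
    return $status
}
```

Running `check_hadoop_env && hadoop version` in a fresh login shell gives a quick signal that both the variables and the PATH entries are in effect.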

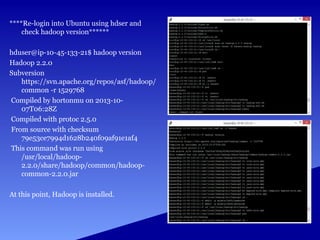

- 11. Log in to Ubuntu again as hduser and check the Hadoop version:
  - hduser@ip-10-45-133-21$ hadoop version
  - Hadoop 2.2.0
  - Subversion https://svn.apache.org/repos/asf/hadoop/common -r 1529768
  - Compiled by hortonmu on 2013-10-07T06:28Z
  - Compiled with protoc 2.5.0
  - From source with checksum 79e53ce7994d1628b240f09af91e1af4
  - This command was run using /usr/local/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar

  At this point, Hadoop is installed.

- 12. Configure Hadoop:
  - hduser@ip-10-45-133-21$ cd /usr/local/hadoop/etc/hadoop
  - hduser@ip-10-45-133-21$ vi core-site.xml

- 13. Paste the following between the <configuration> tags:
  - <property> <name>fs.default.name</name> <value>hdfs://localhost:9000</value> </property>
  - hduser@ip-10-45-133-21$ vi yarn-site.xml
  - Paste the following between the <configuration> tags:
    - <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property>
    - <property> <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name> <value>org.apache.hadoop.mapred.ShuffleHandler</value> </property>
  - hduser@ip-10-45-133-21$ mv mapred-site.xml.template mapred-site.xml
  - hduser@ip-10-45-133-21$ vi mapred-site.xml

- 14. Paste the following between the <configuration> tags:
  - <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property>
  - hduser@ip-10-45-133-21$ cd ~
  - hduser@ip-10-45-133-21$ mkdir -p mydata/hdfs/namenode
  - hduser@ip-10-45-133-21$ mkdir -p mydata/hdfs/datanode
  - hduser@ip-10-45-133-21$ cd /usr/local/hadoop/etc/hadoop
  - hduser@ip-10-45-133-21$ vi hdfs-site.xml
  - Paste the following between the <configuration> tags:
    - <property> <name>dfs.replication</name> <value>1</value> </property>
    - <property> <name>dfs.namenode.name.dir</name> <value>file:/home/hduser/mydata/hdfs/namenode</value> </property>
    - <property> <name>dfs.datanode.data.dir</name> <value>file:/home/hduser/mydata/hdfs/datanode</value> </property>
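Each "paste between the <configuration> tags" step above should leave a file with a single <configuration> element wrapping the properties. As a rough sketch of that final shape, the function below writes a complete hdfs-site.xml using the three properties from the slides and creates the two data directories. `write_hdfs_site` is a hypothetical helper, and it overwrites any existing hdfs-site.xml in the target directory.

```shell
# write_hdfs_site: hypothetical helper, not part of Hadoop.
# $1: Hadoop configuration directory (e.g. /usr/local/hadoop/etc/hadoop)
# $2: absolute base directory for HDFS data (e.g. /home/hduser/mydata/hdfs)
write_hdfs_site() {
    conf_dir="$1"
    data_root="$2"
    # Same directories the slides create with mkdir -p.
    mkdir -p "$data_root/namenode" "$data_root/datanode"
    # Unquoted EOF so that $data_root is expanded inside the file.
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:$data_root/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:$data_root/datanode</value>
  </property>
</configuration>
EOF
}
```

With the paths from the slides, the call would be `write_hdfs_site /usr/local/hadoop/etc/hadoop /home/hduser/mydata/hdfs`.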

- 15. Format Namenode : hduser@ip-10-45-133-21$ hdfs namenode -format

- 16. Hadoop Services
  - hduser@ip-10-45-133-21$ start-dfs.sh
  - ....
  - hduser@ip-10-45-133-21$ start-yarn.sh
  - ....
  - Error:

- 17. Tried checking again:
  1. SSH certificate creation
  2. ssh localhost
  3. vi hadoop-env.sh
  4. vi hdfs-site.xml
  5. Namenode and datanode directories
  6. Reformatted the namenode
  7. Started the services

  After that, everything started working, as the following screens show. I am not sure which of these steps fixed the errors below.
  - Error 1: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable ….
  - Error 2: Localhost: Permission Denied (Publickey)…
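The slides do not say which step resolved Error 2. One common cause of "Permission denied (publickey)" on a fresh setup is that sshd, with its default StrictModes setting, ignores keys when ~/.ssh or authorized_keys is writable by other users. The sketch below tightens those modes; whether that applied here is an assumption, and `fix_ssh_perms` is a hypothetical helper name.

```shell
# fix_ssh_perms: hypothetical helper that restricts SSH key files to the owner.
# $1: home directory to fix (defaults to the current user's $HOME)
fix_ssh_perms() {
    home_dir="${1:-$HOME}"
    # Only the owner may read, write, or enter ~/.ssh.
    chmod 700 "$home_dir/.ssh" || return 1
    # Only the owner may read or write the list of accepted public keys.
    chmod 600 "$home_dir/.ssh/authorized_keys" || return 1
}
```

Running `fix_ssh_perms` as hduser and then `ssh localhost` is a cheap way to rule this cause in or out before repeating the other steps.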

- 19. • hduser@ip-10-45-133-21:~$ jps • Everything started successfully, and the services below were running.
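The list of services was shown only as a screenshot. On a single-node Hadoop 2.x setup started with start-dfs.sh and start-yarn.sh, the daemons one would normally expect in the jps output are NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. That list is an assumption based on standard Hadoop 2.x behavior, not taken from the slides. A small sketch that reports any of them missing, with `missing_daemons` as a hypothetical helper name:

```shell
# missing_daemons: hypothetical helper that reads jps output on stdin and
# prints the name of every expected Hadoop daemon that is not listed.
missing_daemons() {
    jps_output=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # jps prints "<pid> <name>"; compare the second column exactly so
        # that "NameNode" does not match "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}
```

Typical use is `jps | missing_daemons`; empty output means all five daemons were found.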

- 20. • Reconnected and works fine

- 21. THANK YOU! Enroll now for Hadoop and Big Data Training @ WizIQ.com Visit: http://www.wiziq.com/course/21308-hadoop-big-data-training
