Hadoop Overview kdd2011


At KDD 2011, Vijay Narayanan (Yahoo!) and Milind Bhandarkar (Greenplum Labs, EMC) presented a tutorial on "Modeling with Hadoop". This is the first half of the tutorial.


Recommended

Big data architectures and the data lake

James Serra: The document provides an overview of big data architectures and the data lake concept. It discusses why organizations are adopting data lakes to handle increasing data volumes and varieties. The key aspects covered include:

- Defining top-down and bottom-up approaches to data management

- Explaining what a data lake is and how Hadoop can function as the data lake

- Describing how a modern data warehouse combines features of a traditional data warehouse and data lake

- Discussing how federated querying allows data to be accessed across multiple sources

- Highlighting benefits of implementing big data solutions in the cloud

- Comparing shared-nothing, massively parallel processing (MPP) architectures to symmetric multi-processing (

Data Lakehouse, Data Mesh, and Data Fabric (r1)

James Serra: So many buzzwords of late: Data Lakehouse, Data Mesh, and Data Fabric. What do all these terms mean and how do they compare to a data warehouse? In this session I’ll cover all of them in detail and compare the pros and cons of each. I’ll include use cases so you can see what approach will work best for your big data needs.

Apache Iceberg: An Architectural Look Under the Covers

ScyllaDB: Data lakes have been built with a desire to democratize data: to allow more and more people, tools, and applications to make use of data. A key capability needed to achieve this is hiding the complexity of underlying data structures and physical data storage from users. The de facto standard, the Hive table format, addresses some of these problems but falls short at data, user, and application scale. So what is the answer? Apache Iceberg.

The Apache Iceberg table format is now in use and contributed to by many leading tech companies like Netflix, Apple, Airbnb, LinkedIn, Dremio, Expedia, and AWS.

Watch Alex Merced, Developer Advocate at Dremio, as he describes the open architecture and performance-oriented capabilities of Apache Iceberg.

You will learn:

• The issues that arise when using the Hive table format at scale, and why we need a new table format

• How a straightforward, elegant change in table format structure has enormous positive effects

• The underlying architecture of an Apache Iceberg table, how a query against an Iceberg table works, and how the table’s underlying structure changes as CRUD operations are done on it

• The resulting benefits of this architectural design

Data Lake Overview

James Serra: The data lake has become extremely popular, but there is still confusion on how it should be used. In this presentation I will cover common big data architectures that use the data lake, the characteristics and benefits of a data lake, and how it works in conjunction with a relational data warehouse. Then I’ll go into details on using Azure Data Lake Store Gen2 as your data lake, and various typical use cases of the data lake. As a bonus I’ll talk about how to organize a data lake and discuss the various products that can be used in a modern data warehouse.

Data Lakehouse, Data Mesh, and Data Fabric (r2)

James Serra: So many buzzwords of late: Data Lakehouse, Data Mesh, and Data Fabric. What do all these terms mean and how do they compare to a modern data warehouse? In this session I’ll cover all of them in detail and compare the pros and cons of each. They all may sound great in theory, but I'll dig into the concerns you need to be aware of before taking the plunge. I’ll also include use cases so you can see what approach will work best for your big data needs. And I'll discuss Microsoft's version of the data mesh.

Delta lake and the delta architecture

Adam Doyle:

- Delta Lake is an open source project that provides ACID transactions, schema enforcement, and time travel capabilities to data stored in data lakes such as S3 and ADLS.

- It allows building a "Lakehouse" architecture where the same data can be used for both batch and streaming analytics.

- Key features include ACID transactions, scalable metadata handling, time travel to view past data states, schema enforcement, schema evolution, and change data capture for streaming inserts, updates and deletes.

Introduction to snowflake

Sunil Gurav: Snowflake is an analytic data warehouse provided as software-as-a-service (SaaS). It uses a unique architecture designed for the cloud, a hybrid of shared-disk and shared-nothing database architectures. Snowflake's architecture consists of three layers - the database layer, query processing layer, and cloud services layer - which are deployed and managed entirely on cloud platforms like AWS and Azure. Snowflake offers different editions like Standard, Premier, Enterprise, and Enterprise for Sensitive Data that provide additional features, support, and security capabilities.

Data warehouse presentaion

sridhark1981: The document discusses data warehouses and their characteristics. A data warehouse integrates data from multiple sources and transforms it into a multidimensional structure to support decision making. It has a complex architecture including source systems, a staging area, operational data stores, and the data warehouse. A data warehouse also has a complex lifecycle as business rules change and new data requirements emerge over time, requiring the architecture to evolve.

Data Warehousing Trends, Best Practices, and Future Outlook

James Serra: Over the last decade, the 3Vs of data - Volume, Velocity & Variety - have grown massively. The Big Data revolution has completely changed the way companies collect, analyze & store data. Advancements in cloud-based data warehousing technologies have empowered companies to fully leverage big data without heavy investments in either time or resources. But that doesn’t mean building and managing a cloud data warehouse comes without challenges. From deciding on a service provider to the design architecture, deploying a data warehouse tailored to your business needs is a strenuous undertaking. Looking to deploy a data warehouse to scale your company’s data infrastructure or still on the fence? In this presentation you will gain insights into the current Data Warehousing trends, best practices, and future outlook. Learn how to build your data warehouse with the help of real-life use cases and discussion of commonly faced challenges. In this session you will learn:

- Choosing the best solution - Data Lake vs. Data Warehouse vs. Data Mart

- Choosing the best Data Warehouse design methodologies: Data Vault vs. Kimball vs. Inmon

- Step by step approach to building an effective data warehouse architecture

- Common reasons for the failure of data warehouse implementations and how to avoid them

Apache Iceberg Presentation for the St. Louis Big Data IDEA

Adam Doyle: Presentation on Apache Iceberg for the February 2021 St. Louis Big Data IDEA. Apache Iceberg is an alternative database platform that works with Hive and Spark.

Introducing the Snowflake Computing Cloud Data Warehouse

Snowflake Computing: Introducing Snowflake, an elastic data warehouse delivered as a service in the cloud. It aims to simplify data warehousing by removing the need for customers to manage infrastructure, scaling, and tuning. Snowflake uses a multi-cluster architecture to provide elastic scaling of storage, compute, and concurrency. It can bring together structured and semi-structured data for analysis without requiring data transformation. Customers have seen significant improvements in performance, cost savings, and the ability to add new workloads compared to traditional on-premises data warehousing solutions.

Building the Data Lake with Azure Data Factory and Data Lake Analytics

Khalid Salama: In essence, a data lake is a commodity distributed file system that acts as a repository to hold raw data file extracts of all the enterprise source systems, so that it can serve the data management and analytics needs of the business. A data lake system provides means to ingest data, perform scalable big data processing, and serve information, in addition to managing, monitoring, and securing the environment. In these slides, we discuss building data lakes using Azure Data Factory and Data Lake Analytics. We delve into the architecture of the data lake and explore its various components. We also describe the various data ingestion scenarios and considerations. We introduce the Azure Data Lake Store, then we discuss how to build an Azure Data Factory pipeline to ingest data into the data lake. After that, we move into big data processing using Data Lake Analytics, and we delve into U-SQL.

DataOps - The Foundation for Your Agile Data Architecture

DATAVERSITY: Achieving agility in data and analytics is hard. It’s no secret that most data organizations struggle to deliver the on-demand data products that their business customers demand. Recently, there has been much hype around new design patterns that promise to deliver this much sought-after agility.

In this webinar, Chris Bergh, CEO and Head Chef of DataKitchen will cut through the noise and describe several elegant and effective data architecture design patterns that deliver low errors, rapid development, and high levels of collaboration. He’ll cover:

• DataOps, Data Mesh, Functional Design, and Hub & Spoke design patterns;

• Where Data Fabric fits into your architecture;

• How different patterns can work together to maximize agility; and

• How a DataOps platform serves as the foundational superstructure for your agile architecture.

Differentiate Big Data vs Data Warehouse use cases for a cloud solution

James Serra: It can be quite challenging keeping up with the frequent updates to the Microsoft products and understanding all their use cases and how all the products fit together. In this session we will differentiate the use cases for each of the Microsoft services, explaining and demonstrating what is good and what isn't, in order for you to position, design and deliver the proper adoption use cases for each with your customers. We will cover a wide range of products such as Databricks, SQL Data Warehouse, HDInsight, Azure Data Lake Analytics, Azure Data Lake Store, Blob storage, and AAS as well as high-level concepts such as when to use a data lake. We will also review the most common reference architectures (“patterns”) witnessed in customer adoption.

Building an Effective Data Warehouse Architecture

James Serra: Why use a data warehouse? What is the best methodology to use when creating a data warehouse? Should I use a normalized or dimensional approach? What is the difference between the Kimball and Inmon methodologies? Does the new Tabular model in SQL Server 2012 change things? What is the difference between a data warehouse and a data mart? Is there hardware that is optimized for a data warehouse? What if I have a ton of data? During this session James will help you to answer these questions.

Snowflake Datawarehouse Architecturing

Ishan Bhawantha Hewanayake: Introduction to the Snowflake data warehouse and its architecture for a big data company. Centralized data management. Snowpipe and the COPY INTO command for data loading. Stream loading and batch processing.

Data Mesh

Piethein Strengholt: Presentation on Data Mesh: a paradigm shift toward a new type of ecosystem architecture, a shift left to a modern distributed architecture that allows for domain-specific data and views “data-as-a-product,” enabling each domain to handle its own data pipelines.

Intro to Delta Lake

Databricks: Delta Lake brings reliability, performance, and security to data lakes. It provides ACID transactions, schema enforcement, and unified handling of batch and streaming data to make data lakes more reliable. Delta Lake also features lightning fast query performance through its optimized Delta Engine. It enables security and compliance at scale through access controls and versioning of data. Delta Lake further offers an open approach and avoids vendor lock-in by using open formats like Parquet that can integrate with various ecosystems.

Data Pipline Observability meetup

Omid Vahdaty: This document discusses the need for observability in data pipelines. It notes that real data pipelines often fail or take a long time to rerun without providing any insight into what went wrong. This is because of frequent code, data, dependency, and infrastructure changes. The document recommends taking a production engineering approach to observability using metrics, logging, and alerting tools. It also suggests experiment management and encapsulating reporting in notebooks. Most importantly, it stresses measuring everything through metrics at all stages of data ingestion and processing to better understand where issues occur.

Elastic Data Warehousing

Snowflake Computing: The document discusses elastic data warehousing using Snowflake's cloud-based data warehouse as a service. Traditional data warehousing and NoSQL solutions are costly and complex to manage. Snowflake provides a fully managed elastic cloud data warehouse that can scale instantly. It allows consolidating all data in one place and enables fast analytics on diverse data sources at massive scale, without the infrastructure complexity or management overhead of other solutions. Customers have realized significantly faster analytics, lower costs, and the ability to easily add new workloads compared to their previous data platforms.

Apache HBase™

Prashant Gupta: The document provides an introduction to NoSQL and HBase. It discusses what NoSQL is, the different types of NoSQL databases, and compares NoSQL to SQL databases. It then focuses on HBase, describing its architecture and components such as the HMaster, RegionServers, and ZooKeeper. It explains how HBase stores and retrieves data, and the write process involving memstores and compaction. It also covers HBase shell commands for creating, inserting, querying and deleting data.

Data Engineer's Lunch #83: Strategies for Migration to Apache Iceberg

Anant Corporation: In this talk, Dremio Developer Advocate Alex Merced discusses strategies for migrating your existing data over to Apache Iceberg. He'll go over the following:

How to Migrate Hive, Delta Lake, JSON, and CSV sources to Apache Iceberg

Pros and Cons of an In-place or Shadow Migration

Migrating between Apache Iceberg catalogs Hive/Glue -- Arctic/Nessie

Demystifying Data Warehousing as a Service - DFW

Kent Graziano: This document provides an overview and introduction to Snowflake's cloud data warehousing capabilities. It begins with the speaker's background and credentials. It then discusses common data challenges organizations face today around data silos, inflexibility, and complexity. The document defines what a cloud data warehouse as a service (DWaaS) is and explains how it can help address these challenges. It provides an agenda for the topics to be covered, including features of Snowflake's cloud DWaaS and how it enables use cases like data mart consolidation and integrated data analytics. The document highlights key aspects of Snowflake's architecture and technology.

Hadoop Tutorial For Beginners

Dataflair Web Services Pvt Ltd: The presentation covers the following topics: 1) Hadoop introduction 2) Hadoop nodes and daemons 3) Architecture 4) Hadoop's best features 5) Hadoop characteristics. For more on Hadoop, see: http://data-flair.training/blogs/hadoop-tutorial-for-beginners/

The Marriage of the Data Lake and the Data Warehouse and Why You Need Both

Adaryl "Bob" Wakefield, MBA: In the past few years, the term "data lake" has leaked into our lexicon. But what exactly IS a data lake? Some IT managers confuse data lakes with data warehouses. Some people think data lakes replace data warehouses. Both of these conclusions are false. There is room in your data architecture for both data lakes and data warehouses. They have different use cases, and those use cases can be complementary.

Todd Reichmuth, Solutions Engineer with Snowflake Computing, has spent the past 18 years in the world of Data Warehousing and Big Data. He spent that time at Netezza and then later at IBM Data. Earlier in 2018 he made the jump to the cloud at Snowflake Computing.

Mike Myer, Sales Director with Snowflake Computing, has spent the past 6 years in the world of security and is looking to drive awareness of the better Data Warehousing and Big Data solutions now available. He was previously at local tech companies FireMon and Lockpath and decided to join Snowflake because of its disruptive technology, which is helping people in the Big Data world on a day-to-day basis.

Cloud DW technology trends and considerations for enterprises to apply snowflake

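SANG WON PARK: A write-up of my talk on cloud DW and Snowflake, given at the "Korea Data Engineer Meetup," which was held offline for the first time this year. (2022.07)

[ Talk topic ]

Cloud DW technology trends and applying Snowflake

- The role of the cloud DW in the Modern Data Stack

- How does it differ from the existing data lake + DW?

- How should it be used from a data engineer's perspective? (Pros and cons in terms of features, performance, and cost)

[ Main content ]

- Many data engineers have recently been looking for ways to overcome the technical and operational limits of existing technology stacks (Hadoop, Spark, DW, etc.).

- In particular, they are considering cloud DWs (AWS Redshift, GCP BigQuery, Databricks, Snowflake) for the advantages of the cloud as well as for operations and performance.

- Among these, I share my experience of applying Snowflake to real projects, along with its technical characteristics, strengths, and weaknesses.

Since last year, changes in government data policy and the accelerating shift to cloud-based technology have brought many changes to corporate data environments, and companies are making various attempts to adapt.

At the center of this is the cloud DW (or lakehouse), and architectures are shifting toward an integrated data platform built on it. However, companies are still trying out a range of existing DW products and the offerings of the major CSPs (AWS, GCP, Azure), and are going through a great deal of trial and error because of lower-than-expected performance, high usage fees, or operational complexity.

In this situation, I was able to confirm that the various features of Snowflake, which I first evaluated last year, can solve a considerable part of companies' concerns and problems with ease, and as a result I carried out POCs and actual deployment projects for many companies.

Based on this experience, the talk explains, from the perspective of the company (and of the data engineers who will do the actual work), how Snowflake can solve these problems, focusing on the problems and solutions at each stage of adopting, using, and expanding a cloud DW.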

https://blog.naver.com/freepsw?Redirect=Update&logNo=222815591918

Introduction to Hadoop and Hadoop component

rebeccatho: This document provides an introduction to Apache Hadoop, which is an open-source software framework for distributed storage and processing of large datasets. It discusses Hadoop's main components of MapReduce and HDFS. MapReduce is a programming model for processing large datasets in a distributed manner, while HDFS provides distributed, fault-tolerant storage. Hadoop runs on commodity computer clusters and can scale to thousands of nodes.
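To make the MapReduce programming model mentioned in this abstract concrete, here is a minimal single-process sketch of a word count. It does not come from the deck and does not use Hadoop's API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. In a real cluster the framework would run the map and reduce calls on many nodes and perform the grouping step itself.

```python
from collections import defaultdict


def map_phase(line):
    """Map step: emit one (word, 1) pair per word in an input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, standing in for the framework's shuffle step."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce step: sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence on an in-memory input."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["big data", "Big clusters"]))
```

Because each map call sees only one line and each reduce call sees only one key, both steps can in principle be spread across machines, which is the property the abstract refers to.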

Scaling hadoopapplications

Milind Bhandarkar: The document discusses best practices for scaling Hadoop applications. It covers causes of sublinear scalability like sequential bottlenecks, load imbalance, over-partitioning, and synchronization issues. It also provides equations for analyzing scalability and discusses techniques like reducing algorithmic overheads, increasing task granularity, and using compression. The document recommends using higher-level languages, tuning configuration parameters, and minimizing remote procedure calls to improve scalability.
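The abstract does not say which scalability equations the deck uses. As one illustration of how a sequential bottleneck produces sublinear scaling, the sketch below applies Amdahl's law, which is an assumption here and not a claim about the deck's content. The function names `amdahl_speedup` and `efficiency` are hypothetical.

```python
def amdahl_speedup(serial_fraction, workers):
    """Speedup predicted by Amdahl's law.

    serial_fraction: share of the job that cannot be parallelized (0.0 to 1.0).
    workers: number of parallel workers (for example, task slots).
    """
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / workers)


def efficiency(serial_fraction, workers):
    """Speedup per worker; 1.0 would mean perfectly linear scaling."""
    return amdahl_speedup(serial_fraction, workers) / workers


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(n, amdahl_speedup(0.1, n), efficiency(0.1, n))
```

Under this model a job with a 10% sequential portion can never run more than 10 times faster, however many workers are added, which is why reducing sequential bottlenecks appears first in the list of causes.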

Hadoop: The Default Machine Learning Platform ?

Milind Bhandarkar: Apache Hadoop, since its humble beginning as an execution engine for web crawling and building search indexes, has matured into a general purpose distributed application platform and data store. Large Scale Machine Learning (LSML) techniques and algorithms proved to be quite tricky for Hadoop to handle, ever since we started offering Hadoop as a service at Yahoo in 2006. In this talk, I will discuss early experiments of implementing LSML algorithms on Hadoop at Yahoo. I will describe how it changed Hadoop, and led to generalization of the Hadoop platform to accommodate programming paradigms other than MapReduce. I will unveil some of our recent efforts to incorporate diverse LSML runtimes into Hadoop, evolving it to become *THE* LSML platform. I will also make a case for an industry-standard LSML benchmark, based on common deep analytics pipelines that utilize LSML workloads.

Ad

More Related Content

What's hot (20)

Data Warehousing Trends, Best Practices, and Future Outlook

Data Warehousing Trends, Best Practices, and Future OutlookJames Serra Over the last decade, the 3Vs of data - Volume, Velocity & Variety has grown massively. The Big Data revolution has completely changed the way companies collect, analyze & store data. Advancements in cloud-based data warehousing technologies have empowered companies to fully leverage big data without heavy investments both in terms of time and resources. But, that doesn’t mean building and managing a cloud data warehouse isn’t accompanied by any challenges. From deciding on a service provider to the design architecture, deploying a data warehouse tailored to your business needs is a strenuous undertaking. Looking to deploy a data warehouse to scale your company’s data infrastructure or still on the fence? In this presentation you will gain insights into the current Data Warehousing trends, best practices, and future outlook. Learn how to build your data warehouse with the help of real-life use-cases and discussion on commonly faced challenges. In this session you will learn:

- Choosing the best solution - Data Lake vs. Data Warehouse vs. Data Mart

- Choosing the best Data Warehouse design methodologies: Data Vault vs. Kimball vs. Inmon

- Step by step approach to building an effective data warehouse architecture

- Common reasons for the failure of data warehouse implementations and how to avoid them

Apache Iceberg Presentation for the St. Louis Big Data IDEA

Apache Iceberg Presentation for the St. Louis Big Data IDEAAdam Doyle Presentation on Apache Iceberg for the February 2021 St. Louis Big Data IDEA. Apache Iceberg is an alternative database platform that works with Hive and Spark.

Introducing the Snowflake Computing Cloud Data Warehouse

Introducing the Snowflake Computing Cloud Data WarehouseSnowflake Computing Introducing Snowflake, an elastic data warehouse delivered as a service in the cloud. It aims to simplify data warehousing by removing the need for customers to manage infrastructure, scaling, and tuning. Snowflake uses a multi-cluster architecture to provide elastic scaling of storage, compute, and concurrency. It can bring together structured and semi-structured data for analysis without requiring data transformation. Customers have seen significant improvements in performance, cost savings, and the ability to add new workloads compared to traditional on-premises data warehousing solutions.

Building the Data Lake with Azure Data Factory and Data Lake Analytics

Building the Data Lake with Azure Data Factory and Data Lake AnalyticsKhalid Salama In essence, a data lake is commodity distributed file system that acts as a repository to hold raw data file extracts of all the enterprise source systems, so that it can serve the data management and analytics needs of the business. A data lake system provides means to ingest data, perform scalable big data processing, and serve information, in addition to manage, monitor and secure the it environment. In these slide, we discuss building data lakes using Azure Data Factory and Data Lake Analytics. We delve into the architecture if the data lake and explore its various components. We also describe the various data ingestion scenarios and considerations. We introduce the Azure Data Lake Store, then we discuss how to build Azure Data Factory pipeline to ingest the data lake. After that, we move into big data processing using Data Lake Analytics, and we delve into U-SQL.

DataOps - The Foundation for Your Agile Data Architecture

DataOps - The Foundation for Your Agile Data ArchitectureDATAVERSITY Achieving agility in data and analytics is hard. It’s no secret that most data organizations struggle to deliver the on-demand data products that their business customers demand. Recently, there has been much hype around new design patterns that promise to deliver this much sought-after agility.

In this webinar, Chris Bergh, CEO and Head Chef of DataKitchen will cut through the noise and describe several elegant and effective data architecture design patterns that deliver low errors, rapid development, and high levels of collaboration. He’ll cover:

• DataOps, Data Mesh, Functional Design, and Hub & Spoke design patterns;

• Where Data Fabric fits into your architecture;

• How different patterns can work together to maximize agility; and

• How a DataOps platform serves as the foundational superstructure for your agile architecture.

Differentiate Big Data vs Data Warehouse use cases for a cloud solution

Differentiate Big Data vs Data Warehouse use cases for a cloud solutionJames Serra It can be quite challenging keeping up with the frequent updates to the Microsoft products and understanding all their use cases and how all the products fit together. In this session we will differentiate the use cases for each of the Microsoft services, explaining and demonstrating what is good and what isn't, in order for you to position, design and deliver the proper adoption use cases for each with your customers. We will cover a wide range of products such as Databricks, SQL Data Warehouse, HDInsight, Azure Data Lake Analytics, Azure Data Lake Store, Blob storage, and AAS as well as high-level concepts such as when to use a data lake. We will also review the most common reference architectures (“patterns”) witnessed in customer adoption.

Building an Effective Data Warehouse Architecture

Building an Effective Data Warehouse ArchitectureJames Serra Why use a data warehouse? What is the best methodology to use when creating a data warehouse? Should I use a normalized or dimensional approach? What is the difference between the Kimball and Inmon methodologies? Does the new Tabular model in SQL Server 2012 change things? What is the difference between a data warehouse and a data mart? Is there hardware that is optimized for a data warehouse? What if I have a ton of data? During this session James will help you to answer these questions.

Snowflake Datawarehouse Architecturing

Snowflake Datawarehouse ArchitecturingIshan Bhawantha Hewanayake Introduction to Snowflake Datawarehouse and Architecture for Big data company. Centralized data management. Snowpipe and Copy into a command for data loading. Stream loading and Batch Processing.

Data Mesh

Data MeshPiethein Strengholt Presentation on Data Mesh: The paradigm shift is a new type of eco-system architecture, which is a shift left towards a modern distributed architecture in which it allows domain-specific data and views “data-as-a-product,” enabling each domain to handle its own data pipelines.

Intro to Delta Lake

Intro to Delta LakeDatabricks Delta Lake brings reliability, performance, and security to data lakes. It provides ACID transactions, schema enforcement, and unified handling of batch and streaming data to make data lakes more reliable. Delta Lake also features lightning fast query performance through its optimized Delta Engine. It enables security and compliance at scale through access controls and versioning of data. Delta Lake further offers an open approach and avoids vendor lock-in by using open formats like Parquet that can integrate with various ecosystems.

Data Pipline Observability meetup

Data Pipline Observability meetup Omid Vahdaty This document discusses the need for observability in data pipelines. It notes that real data pipelines often fail or take a long time to rerun without providing any insight into what went wrong. This is because of frequent code, data, dependency, and infrastructure changes. The document recommends taking a production engineering approach to observability using metrics, logging, and alerting tools. It also suggests experiment management and encapsulating reporting in notebooks. Most importantly, it stresses measuring everything through metrics at all stages of data ingestion and processing to better understand where issues occur.

Elastic Data Warehousing

Elastic Data WarehousingSnowflake Computing The document discusses elastic data warehousing using Snowflake's cloud-based data warehouse as a service. Traditional data warehousing and NoSQL solutions are costly and complex to manage. Snowflake provides a fully managed elastic cloud data warehouse that can scale instantly. It allows consolidating all data in one place and enables fast analytics on diverse data sources at massive scale, without the infrastructure complexity or management overhead of other solutions. Customers have realized significantly faster analytics, lower costs, and the ability to easily add new workloads compared to their previous data platforms.

Apache HBase™

Apache HBase™Prashant Gupta The document provides an introduction to NoSQL and HBase. It discusses what NoSQL is, the different types of NoSQL databases, and compares NoSQL to SQL databases. It then focuses on HBase, describing its architecture and components like HMaster, regionservers, Zookeeper. It explains how HBase stores and retrieves data, the write process involving memstores and compaction. It also covers HBase shell commands for creating, inserting, querying and deleting data.

Data Engineer's Lunch #83: Strategies for Migration to Apache Iceberg

Data Engineer's Lunch #83: Strategies for Migration to Apache IcebergAnant Corporation In this talk, Dremio Developer Advocate, Alex Merced, discusses strategies for migrating your existing data over to Apache Iceberg. He'll go over the following:

How to Migrate Hive, Delta Lake, JSON, and CSV sources to Apache Iceberg

Pros and Cons of an In-place or Shadow Migration

Migrating between Apache Iceberg catalogs Hive/Glue -- Arctic/Nessie

Demystifying Data Warehousing as a Service - DFW

Demystifying Data Warehousing as a Service - DFWKent Graziano This document provides an overview and introduction to Snowflake's cloud data warehousing capabilities. It begins with the speaker's background and credentials. It then discusses common data challenges organizations face today around data silos, inflexibility, and complexity. The document defines what a cloud data warehouse as a service (DWaaS) is and explains how it can help address these challenges. It provides an agenda for the topics to be covered, including features of Snowflake's cloud DWaaS and how it enables use cases like data mart consolidation and integrated data analytics. The document highlights key aspects of Snowflake's architecture and technology.

Hadoop Tutorial For Beginners

Hadoop Tutorial For BeginnersDataflair Web Services Pvt Ltd The presentation covers the following topics: 1) Hadoop Introduction 2) Hadoop nodes and daemons 3) Architecture 4) Hadoop best features 5) Hadoop characteristics. For more on Hadoop, refer to the link: http://data-flair.training/blogs/hadoop-tutorial-for-beginners/

The Marriage of the Data Lake and the Data Warehouse and Why You Need Both

The Marriage of the Data Lake and the Data Warehouse and Why You Need BothAdaryl "Bob" Wakefield, MBA In the past few years, the term "data lake" has leaked into our lexicon. But what exactly IS a data lake? Some IT managers confuse data lakes with data warehouses. Some people think data lakes replace data warehouses. Both of these conclusions are false. There is room in your data architecture for both data lakes and data warehouses. They have different use cases, and those use cases can be complementary.

Todd Reichmuth, Solutions Engineer with Snowflake Computing, has spent the past 18 years in the world of Data Warehousing and Big Data. He spent that time at Netezza and then later at IBM Data. Earlier in 2018 he made the jump to the cloud at Snowflake Computing.

Mike Myer, Sales Director with Snowflake Computing, has spent the past 6 years in the world of Security and is looking to drive awareness of the better Data Warehousing and Big Data solutions available. He was previously at local tech companies FireMon and Lockpath and decided to join Snowflake because of its disruptive technology, which is helping people in the Big Data world on a day-to-day basis.

Cloud DW technology trends and considerations for enterprises to apply snowflake

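Cloud DW technology trends and considerations for enterprises to apply snowflakeSANG WON PARK A summary of my talk on cloud DW and Snowflake, given at the "Korea Data Engineer Meetup", which was held offline for the first time this year. (2022.07)

[ Talk topic ]

Cloud DW technology trends and applying Snowflake

- The role of the Cloud DW in the Modern Data Stack

- How does it differ from the existing Data Lake + DW?

- How should it be used from a Data Engineer's perspective? (advantages and disadvantages in terms of features, performance, and cost)

[ Key points ]

- Many Data Engineers have recently been looking for ways to overcome the technical and operational limits of the existing technology stack (Hadoop, Spark, DW, etc.).

- In particular, they are considering Cloud DWs (AWS Redshift, GCP BigQuery, DataBricks, Snowflake) for the advantages of the cloud as well as for operations and performance.

- Of these, I share my experience applying Snowflake in real projects, along with its technical characteristics, advantages, and disadvantages.

Since last year, changes in government data policy and the accelerating shift to cloud-based technology have brought many changes to corporate data environments, and companies are making various attempts to adapt.

At the center of this is the cloud DW (or Lake house), and architectures are shifting toward an integrated data platform built on it. However, although companies are trying a variety of existing DW products and offerings from the major CSPs (AWS, GCP, Azure), they are going through much trial and error because of lower-than-expected performance, high usage fees, or operational complexity.

In this situation, I found that the various features of Snowflake, which I first evaluated last year, can readily solve a considerable part of these companies' concerns and problems, and I went on to run POCs for applying it at many companies and to carry out projects that actually adopted it.

Based on this experience, the talk explains, from the perspective of the company (and of the Data Engineers who will do the actual work), how Snowflake can solve these problems, focusing on the problems and solutions at each stage of adopting, using, and expanding a cloud DW.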

https://blog.naver.com/freepsw?Redirect=Update&logNo=222815591918

Introduction to Hadoop and Hadoop component

Introduction to Hadoop and Hadoop component rebeccatho This document provides an introduction to Apache Hadoop, which is an open-source software framework for distributed storage and processing of large datasets. It discusses Hadoop's main components of MapReduce and HDFS. MapReduce is a programming model for processing large datasets in a distributed manner, while HDFS provides distributed, fault-tolerant storage. Hadoop runs on commodity computer clusters and can scale to thousands of nodes.

Viewers also liked (16)

Scaling hadoopapplications

Scaling hadoopapplicationsMilind Bhandarkar The document discusses best practices for scaling Hadoop applications. It covers causes of sublinear scalability like sequential bottlenecks, load imbalance, over-partitioning, and synchronization issues. It also provides equations for analyzing scalability and discusses techniques like reducing algorithmic overheads, increasing task granularity, and using compression. The document recommends using higher-level languages, tuning configuration parameters, and minimizing remote procedure calls to improve scalability.

Hadoop: The Default Machine Learning Platform ?

Hadoop: The Default Machine Learning Platform ?Milind Bhandarkar Apache Hadoop, since its humble beginning as an execution engine for web crawler and building search indexes, has matured into a general purpose distributed application platform and data store. Large Scale Machine Learning (LSML) techniques and algorithms proved to be quite tricky for Hadoop to handle, ever since we started offering Hadoop as a service at Yahoo in 2006. In this talk, I will discuss early experiments of implementing LSML algorithms on Hadoop at Yahoo. I will describe how it changed Hadoop, and led to generalization of the Hadoop platform to accommodate programming paradigms other than MapReduce. I will unveil some of our recent efforts to incorporate diverse LSML runtimes into Hadoop, evolving it to become *THE* LSML platform. I will also make a case for an industry-standard LSML benchmark, based on common deep analytics pipelines that utilize LSML workload.

Extending Hadoop for Fun & Profit

Extending Hadoop for Fun & ProfitMilind Bhandarkar The Apache Hadoop project and the Hadoop ecosystem have been designed to be extremely flexible and extensible. HDFS, YARN, and MapReduce combined have more than 1000 configuration parameters that allow users to tune the performance of Hadoop applications and, more importantly, to extend Hadoop with application-specific functionality without having to modify any of the core Hadoop code.

In this talk, I will start with simple extensions, such as writing a new InputFormat to efficiently process video files. I will present some extensions that boost application performance, such as optimized compression codecs and pluggable shuffle implementations. With the refactoring of the MapReduce framework and the emergence of YARN as a generic resource manager for Hadoop, one can extend Hadoop further by implementing new computation paradigms.

I will discuss one such computation framework, that allows Message Passing applications to run in the Hadoop cluster alongside MapReduce. I will conclude by outlining some of our ongoing work, that extends HDFS, by removing namespace limitations of the current Namenode implementation.

Future of Data Intensive Applicaitons

Future of Data Intensive ApplicaitonsMilind Bhandarkar "Big Data" is a much-hyped term nowadays in Business Computing. However, the core concept of collaborative environments conducting experiments over large shared data repositories has existed for decades. In this talk, I will outline how recent advances in Cloud Computing, Big Data processing frameworks, and agile application development platforms enable Data Intensive Cloud Applications. I will provide a brief history of efforts in building scalable & adaptive run-time environments, and the role these runtime systems will play in new Cloud Applications. I will present a vision for cloud platforms for science, where data-intensive frameworks such as Apache Hadoop will play a key role.

Hadoop summit 2010 frameworks panel elephant bird

Hadoop summit 2010 frameworks panel elephant birdKevin Weil Elephant Bird is a framework for working with structured data within Hadoop ecosystems. It allows users to specify a flexible, forward-backward compatible, self-documenting data schema and then generates code for input/output formats, Hadoop Writables, and Pig load/store functions. This reduces the amount of code needed and allows users to focus on their data. Elephant Bird underlies 20,000 Hadoop jobs per day at Twitter.

The Zoo Expands: Labrador *Loves* Elephant, Thanks to Hamster

The Zoo Expands: Labrador *Loves* Elephant, Thanks to HamsterMilind Bhandarkar The document summarizes Milind Bhandarkar's work developing Hamster, a system for running MPI applications on Hadoop YARN. Some key points:

- Hamster allows MPI applications to run alongside Hadoop dataflow jobs on the same cluster managed by YARN. It implements an MPI runtime on top of YARN.

- Hamster's design leverages OpenMPI's strengths while allowing it to integrate with YARN. It includes an application master, node service, and scheduler component.

- Performance tests show Hamster has low overhead and scales well for large MPI jobs. It introduces only a small performance penalty compared to running MPI natively with OpenMPI.

- Example results are shown

Hadoop at Twitter (Hadoop Summit 2010)

Hadoop at Twitter (Hadoop Summit 2010)Kevin Weil Kevin Weil presented on Hadoop at Twitter. He discussed Twitter's data lifecycle including data input via Scribe and Crane, storage in HDFS and HBase, analysis using Pig and Oink, and data products like Birdbrain. He described how tools like Scribe, Crane, Elephant Bird, Pig, and HBase were developed and used at Twitter to handle large volumes of log and tabular data at petabyte scale.

Analyzing Big Data at Twitter (Web 2.0 Expo NYC Sep 2010)

Analyzing Big Data at Twitter (Web 2.0 Expo NYC Sep 2010)Kevin Weil A look at Twitter's data lifecycle, some of the tools we use to handle big data, and some of the questions we answer from our data.

Big Data at Twitter, Chirp 2010

Big Data at Twitter, Chirp 2010Kevin Weil Slides from my talk on collecting, storing, and analyzing big data at Twitter for Chirp Hack Day at Twitter's Chirp conference.

Hadoop and pig at twitter (oscon 2010)

Hadoop and pig at twitter (oscon 2010)Kevin Weil This document summarizes Kevin Weil's presentation on Hadoop and Pig at Twitter. Weil discusses how Twitter uses Hadoop and Pig to analyze massive amounts of user data, including tweets. He explains how Pig allows for more concise and readable analytics jobs compared to raw MapReduce. Weil also provides examples of how Twitter builds data-driven products and services using these tools, such as their People Search feature.

Modeling with Hadoop kdd2011

Modeling with Hadoop kdd2011Milind Bhandarkar This document discusses modeling algorithms using the MapReduce framework. It outlines types of learning that can be done in MapReduce, including parallel training of models, ensemble methods, and distributed algorithms that fit the statistical query model (SQM). Specific algorithms that can be implemented in MapReduce are discussed, such as linear regression, naive Bayes, logistic regression, and decision trees. The document provides examples of how these algorithms can be formulated and computed in a MapReduce paradigm by distributing computations across mappers and reducers.
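As a rough illustration of the summation-form idea mentioned in the abstract above, the sketch below fits a one-variable linear regression by having each "mapper" compute partial sums over its shard and a "reducer" add them up. It is plain Python rather than Hadoop code, and the function names (`map_shard`, `combine`, `fit_line`) and the sample data are hypothetical, not taken from the tutorial.

```python
from functools import reduce

def map_shard(shard):
    """Mapper: partial sufficient statistics for one shard of (x, y) pairs."""
    n = len(shard)
    sx = sum(x for x, _ in shard)
    sy = sum(y for _, y in shard)
    sxx = sum(x * x for x, _ in shard)
    sxy = sum(x * y for x, y in shard)
    return (n, sx, sy, sxx, sxy)

def combine(a, b):
    """Reducer step: element-wise sum of two tuples of partial statistics."""
    return tuple(p + q for p, q in zip(a, b))

def fit_line(shards):
    """Least-squares slope and intercept from the globally summed statistics."""
    n, sx, sy, sxx, sxy = reduce(combine, map(map_shard, shards))
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    intercept = (sy - slope * sx) / n
    return slope, intercept

# Hypothetical data lying on y = 2x + 1, split across two shards.
shards = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0)]]
slope, intercept = fit_line(shards)
```

Because the model depends on the data only through sums, the shards can be processed independently and in any order, which is what makes this style of algorithm a natural fit for mappers and reducers.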

Introduction To Apache Pig at WHUG

Introduction To Apache Pig at WHUGAdam Kawa This document provides an introduction to Apache Pig including:

- What Pig is and how it offers a high-level language called PigLatin for analyzing large datasets.

- How PigLatin provides common data operations and types and is more natural for analysts than MapReduce.

- Examples of how WordCount looks in PigLatin versus Java MapReduce.

- How Pig works by parsing, optimizing, and executing PigLatin scripts as MapReduce jobs on Hadoop.

- Considerations for developing, running, and optimizing PigLatin scripts.

Rainbird: Realtime Analytics at Twitter (Strata 2011)

Rainbird: Realtime Analytics at Twitter (Strata 2011)Kevin Weil Introducing Rainbird, Twitter's high volume distributed counting service for realtime analytics, built on Cassandra. This presentation looks at the motivation, design, and uses of Rainbird across Twitter.

Practical Problem Solving with Apache Hadoop & Pig

Practical Problem Solving with Apache Hadoop & PigMilind Bhandarkar The document discusses a presentation about practical problem solving with Hadoop and Pig. It provides an agenda that covers introductions to Hadoop and Pig, including the Hadoop distributed file system, MapReduce, performance tuning, and examples. It discusses how Hadoop is used at Yahoo, including statistics on usage. It also provides examples of how Hadoop has been used for applications like log processing, search indexing, and machine learning.

Hadoop, Pig, and Twitter (NoSQL East 2009)

Hadoop, Pig, and Twitter (NoSQL East 2009)Kevin Weil A talk on the use of Hadoop and Pig inside Twitter, focusing on the flexibility and simplicity of Pig, and the benefits of that for solving real-world big data problems.

Hadoop MapReduce joins

Hadoop MapReduce joinsShalish VJ The document discusses different types of joins that can be performed in MapReduce including reduce-side joins, replicated joins, and composite joins. Reduce-side joins perform the join operation in the reducer by shuffling all the data to the reducers based on the join key. Replicated joins load smaller datasets into memory on the mapper to perform the join without a shuffle. Composite joins require preprocessed and sorted data to perform the join entirely on the mapper without a shuffle for inner and full outer joins.
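The difference between the first two join strategies in the abstract above can be sketched in a few lines of plain Python. This is an in-memory illustration under simplifying assumptions, not Hadoop code: the "shuffle" is modeled as a dictionary grouped by join key, and the function names and sample records are hypothetical. Composite joins are not covered.

```python
from collections import defaultdict

def reduce_side_join(left, right):
    """Group both inputs by join key (the 'shuffle'), then join per key."""
    shuffled = defaultdict(lambda: ([], []))
    for key, value in left:
        shuffled[key][0].append(value)   # records tagged as coming from left
    for key, value in right:
        shuffled[key][1].append(value)   # records tagged as coming from right
    # 'Reduce' phase: pair every left value with every right value per key.
    return sorted(
        (k, l, r) for k, (ls, rs) in shuffled.items() for l in ls for r in rs
    )

def replicated_join(big, small):
    """Load the small input into memory and join while scanning the big one."""
    lookup = defaultdict(list)
    for key, value in small:
        lookup[key].append(value)
    # 'Map' phase only: no grouping of the big input is needed.
    return sorted((k, v, s) for k, v in big for s in lookup.get(k, []))

# Hypothetical inputs: (user_id, item) orders and (user_id, name) users.
orders = [(1, "book"), (1, "pen"), (3, "cup")]
users = [(1, "ann"), (2, "bob")]
joined_reduce = reduce_side_join(orders, users)
joined_replicated = replicated_join(orders, users)
```

Both functions produce the same inner join here; the replicated version avoids regrouping the large input, at the cost of requiring the small input to fit in memory.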

Similar to Hadoop Overview kdd2011 (20)

Hadoop Overview & Architecture

Hadoop Overview & Architecture EMC This document provides an overview of Hadoop architecture. It discusses how Hadoop uses MapReduce and HDFS to process and store large datasets reliably across commodity hardware. MapReduce allows distributed processing of data through mapping and reducing functions. HDFS provides a distributed file system that stores data reliably in blocks across nodes. The document outlines components like the NameNode, DataNodes and how Hadoop handles failures transparently at scale.

Hadoop london

Hadoop londonYahoo Developer Network Apache Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. It provides reliable storage through its distributed file system and scalable processing through its MapReduce programming model. Yahoo! uses Hadoop extensively for applications like log analysis, content optimization, and computational advertising, processing over 6 petabytes of data across 40,000 machines daily.

Hadoop Tutorial with @techmilind

Hadoop Tutorial with @techmilindEMC Apache Hadoop has emerged as the storage and processing platform of choice for Big Data. In this tutorial, I will give an overview of Apache Hadoop and its ecosystem, with specific use cases. I will explain the MapReduce programming framework in detail, and outline how it interacts with Hadoop Distributed File System (HDFS). While Hadoop is written in Java, MapReduce applications can be written using a variety of languages using a framework called Hadoop Streaming. I will give several examples of MapReduce applications using Hadoop Streaming.

Hadoop: A distributed framework for Big Data

Hadoop: A distributed framework for Big DataDhanashri Yadav Hadoop is a Java software framework that supports data-intensive distributed applications and is developed under open source license. It enables applications to work with thousands of nodes and petabytes of data.

Introduction to Hadoop

Introduction to HadoopYork University Apache Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. The core of Hadoop consists of HDFS for storage and MapReduce for processing. Hadoop has been expanded with additional projects including YARN for job scheduling and resource management, Pig and Hive for SQL-like queries, HBase for column-oriented storage, Zookeeper for coordination, and Ambari for provisioning and managing Hadoop clusters. Hadoop provides scalable and cost-effective solutions for storing and analyzing massive amounts of data.

Large scale computing with mapreduce

Large scale computing with mapreducehansen3032 This document discusses large scale computing with MapReduce. It provides background on the growth of digital data, noting that by 2020 there will be over 5,200 GB of data for every person on Earth. It introduces MapReduce as a programming model for processing large datasets in a distributed manner, describing the key aspects of Map and Reduce functions. Examples of MapReduce jobs are also provided, such as counting URL access frequencies and generating a reverse web link graph.

Osd ctw spark

Osd ctw sparkWisely chen Spark is a fast and general engine for large-scale data processing. It runs on Hadoop clusters through YARN and Mesos, and can also run standalone. Spark is up to 100x faster than Hadoop for certain applications because it keeps data in memory rather than disk, and it supports iterative algorithms through its Resilient Distributed Dataset (RDD) abstraction. The presenter provides a demo of Spark's word count algorithm in Scala, Java, and Python to illustrate how easy it is to use Spark across languages.

What's new in hadoop 3.0

What's new in hadoop 3.0Heiko Loewe My compilation of the changes and differences in the upcoming 3.0 version of Hadoop. Presented at the Meetup of the group https://www.meetup.com/Big-Data-Hadoop-Spark-NRW/

Hadoop with Python

Hadoop with PythonDonald Miner The document discusses using Python with Hadoop frameworks. It introduces Hadoop Distributed File System (HDFS) and MapReduce, and how to use the mrjob library to write MapReduce jobs in Python. It also covers using Python with higher-level Hadoop frameworks like Pig, accessing HDFS with snakebite, and using Python clients for HBase and the PySpark API for the Spark framework. Key advantages discussed are Python's rich ecosystem and ability to access Hadoop frameworks.

Hadoop

HadoopAnil Reddy Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of commodity hardware. It uses a simple programming model called MapReduce that automatically parallelizes and distributes work across nodes. Hadoop consists of Hadoop Distributed File System (HDFS) for storage and MapReduce execution engine for processing. HDFS stores data as blocks replicated across nodes for fault tolerance. MapReduce jobs are split into map and reduce tasks that process key-value pairs in parallel. Hadoop is well-suited for large-scale data analytics as it scales to petabytes of data and thousands of machines with commodity hardware.

HadoopThe Hadoop Java Software Framework

HadoopThe Hadoop Java Software FrameworkThoughtWorks Storage and computation are getting cheaper AND easily accessible on demand in the cloud. We now collect and store some really large data sets, e.g. user activity logs, genome sequencing, sensory data, etc. Hadoop and the ecosystem of projects built around it present simple and easy-to-use tools for storing and analyzing such large data collections on commodity hardware.

Topics Covered

* The Hadoop architecture.

* Thinking in MapReduce.

* Run some sample MapReduce Jobs (using Hadoop Streaming).

* Introduce PigLatin, an easy-to-use data processing language.

Speaker Profile: Mahesh Reddy is an Entrepreneur, chasing dreams. Works on large scale crawl and extraction of structured data from the web. He is a graduate from IIT Kanpur (2000-05) and previously worked at Yahoo! Labs as Research Engineer/Tech Lead on Search and Advertising products.

Apache Spark - Santa Barbara Scala Meetup Dec 18th 2014

Apache Spark - Santa Barbara Scala Meetup Dec 18th 2014cdmaxime This document provides an introduction to Apache Spark, a general purpose cluster computing framework. It discusses how Spark improves upon MapReduce by offering better performance, support for iterative algorithms, and an easier developer experience. Spark retains MapReduce's advantages like scalability, fault tolerance, and data locality, but offers more by leveraging distributed memory and supporting directed acyclic graphs of tasks. Examples demonstrate how Spark can run programs up to 100x faster than Hadoop MapReduce and how it supports machine learning algorithms and streaming data analysis.

Etu L2 Training - Hadoop 企業應用實作

Etu L2 Training - Hadoop 企業應用實作James Chen This document provides an overview of an advanced Big Data hands-on course covering Hadoop, Sqoop, Pig, Hive and enterprise applications. It introduces key concepts like Hadoop and large data processing, demonstrates tools like Sqoop, Pig and Hive for data integration, querying and analysis on Hadoop. It also discusses challenges for enterprises adopting Hadoop technologies and bridging the skills gap.

L19CloudMapReduce introduction for cloud computing .ppt

L19CloudMapReduce introduction for cloud computing .pptMaruthiPrasad96 This document provides an overview of cloud computing with MapReduce and Hadoop. It discusses what cloud computing and MapReduce are, how they work, and examples of applications that use MapReduce. Specifically, MapReduce is introduced as a programming model for large-scale data processing across thousands of machines in a fault-tolerant way. Example applications like search, sorting, inverted indexing, finding popular words, and numerical integration are described. The document also outlines how to get started with Hadoop and write MapReduce jobs in Java.

Fundamental of Big Data with Hadoop and Hive

Fundamental of Big Data with Hadoop and HiveSharjeel Imtiaz This deck leans toward the Hadoop/Hive installation experience and ecosystem concepts. Its content is derived from the not-yet-published book Fundamental of Big Data.

Apache Spark - San Diego Big Data Meetup Jan 14th 2015

Apache Spark - San Diego Big Data Meetup Jan 14th 2015cdmaxime This document provides an introduction to Apache Spark presented by Maxime Dumas of Cloudera. It discusses:

1. What Cloudera does including distributing Hadoop components with enterprise tooling and support.

2. An overview of the Apache Hadoop ecosystem including why Hadoop is used for scalability, efficiency, and flexibility with large amounts of data.

3. An introduction to Apache Spark which improves on MapReduce by being faster, easier to use, and supporting more types of applications such as machine learning and graph processing. Spark can be 100x faster than MapReduce for certain applications.

Lecture 2 part 3

Lecture 2 part 3Jazan University The document discusses key concepts related to Hadoop including its components like HDFS, MapReduce, Pig, Hive, and HBase. It provides explanations of HDFS architecture and functions, how MapReduce works through map and reduce phases, and how higher-level tools like Pig and Hive allow for more simplified programming compared to raw MapReduce. The summary also mentions that HBase is a NoSQL database that provides fast random access to large datasets on Hadoop, while HCatalog provides a relational abstraction layer for HDFS data.

Scala and spark

Scala and sparkFabio Fumarola This document provides an introduction to Apache Spark, including its architecture and programming model. Spark is a cluster computing framework that provides fast, in-memory processing of large datasets across multiple cores and nodes. It improves upon Hadoop MapReduce by allowing iterative algorithms and interactive querying of datasets through its use of resilient distributed datasets (RDDs) that can be cached in memory. RDDs act as immutable distributed collections that can be manipulated using transformations and actions to implement parallel operations.

Introduction to Spark - Phoenix Meetup 08-19-2014

Introduction to Spark - Phoenix Meetup 08-19-2014cdmaxime This document provides an introduction to Apache Spark presented by Maxime Dumas. It discusses how Spark improves on MapReduce by offering better performance through leveraging distributed memory and supporting iterative algorithms. Spark retains MapReduce's advantages of scalability, fault-tolerance, and data locality while offering a more powerful and easier to use programming model. Examples demonstrate how tasks like word counting, logistic regression, and streaming data processing can be implemented on Spark. The document concludes by discussing Spark's integration with other Hadoop components and inviting attendees to try Spark.

Recently uploaded (20)

Linux Professional Institute LPIC-1 Exam.pdf

Linux Professional Institute LPIC-1 Exam.pdfRHCSA Guru Introduction to LPIC-1 Exam - overview, exam details, price and job opportunities

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptx

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptxJustin Reock Building 10x Organizations with Modern Productivity Metrics

10x developers may be a myth, but 10x organizations are very real, as proven by the influential study performed in the 1980s, ‘The Coding War Games.’

Right now, here in early 2025, we seem to be experiencing YAPP (Yet Another Productivity Philosophy), and that philosophy is converging on developer experience. It seems that with every new method we invent for the delivery of products, whether physical or virtual, we reinvent productivity philosophies to go alongside them.

But which of these approaches actually work? DORA? SPACE? DevEx? What should we invest in and create urgency behind today, so that we don’t find ourselves having the same discussion again in a decade?

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner Is AI just hype? Or is it the game changer your business needs?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know how.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdf

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdfAbi john Analyze the growth of meme coins from mere online jokes to potential assets in the digital economy. Explore the community, culture, and utility as they elevate themselves to a new era in cryptocurrency.

TrsLabs - Fintech Product & Business Consulting

TrsLabs - Fintech Product & Business ConsultingTrs Labs Hybrid Growth Mandate Model with TrsLabs

Strategic investments, inorganic growth, and business model pivots are critical activities that businesses don't undertake every day. In cases like these, it may benefit your business to choose a temporary external consultant.

An unbiased plan driven by clear-cut deliverables and market dynamics, free of the influence of your internal office equations, empowers business leaders to make the right choices.

Getting things done within a budget and a timeframe is key to growing a business, whether you are a start-up or a big company.

Talk to us & Unlock the competitive advantage

#StandardsGoals for 2025: Standards & certification roundup - Tech Forum 2025

#StandardsGoals for 2025: Standards & certification roundup - Tech Forum 2025BookNet Canada Book industry standards are evolving rapidly. In the first part of this session, we’ll share an overview of key developments from 2024 and the early months of 2025. Then, BookNet’s resident standards expert, Tom Richardson, and CEO, Lauren Stewart, have a forward-looking conversation about what’s next.

Link to recording, transcript, and accompanying resource: https://bnctechforum.ca/sessions/standardsgoals-for-2025-standards-certification-roundup/

Presented by BookNet Canada on May 6, 2025 with support from the Department of Canadian Heritage.

Quantum Computing Quick Research Guide by Arthur Morgan

Quantum Computing Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

Special Meetup Edition - TDX Bengaluru Meetup #52.pptxshyamraj55 We’re bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value-driven proprietary service offering here.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and education, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

Cybersecurity Identity and Access Solutions using Azure AD

Cybersecurity Identity and Access Solutions using Azure ADVICTOR MAESTRE RAMIREZ Cybersecurity Identity and Access Solutions using Azure AD

Heap, Types of Heap, Insertion and Deletion

Heap, Types of Heap, Insertion and DeletionJaydeep Kale This pdf will explain what is heap, its type, insertion and deletion in heap and Heap sort

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

2025-05-Q4-2024-Investor-Presentation.pptx

2025-05-Q4-2024-Investor-Presentation.pptxSamuele Fogagnolo Cloudflare Q4 Financial Results Presentation

AI and Data Privacy in 2025: Global Trends

AI and Data Privacy in 2025: Global TrendsInData Labs In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in the today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Generative Artificial Intelligence (GenAI) in Business

Generative Artificial Intelligence (GenAI) in BusinessDr. Tathagat Varma My talk for the Indian School of Business (ISB) Emerging Leaders Program Cohort 9. In this talk, I discussed key issues around adoption of GenAI in business - benefits, opportunities and limitations. I also discussed how my research on Theory of Cognitive Chasms helps address some of these issues

Role of Data Annotation Services in AI-Powered Manufacturing

Role of Data Annotation Services in AI-Powered ManufacturingAndrew Leo From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

Into The Box Conference Keynote Day 1 (ITB2025)

Into The Box Conference Keynote Day 1 (ITB2025)Ortus Solutions, Corp This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

Linux Support for SMARC: How Toradex Empowers Embedded Developers

Linux Support for SMARC: How Toradex Empowers Embedded DevelopersToradex Toradex brings robust Linux support to SMARC (Smart Mobility Architecture), ensuring high performance and long-term reliability for embedded applications. Here’s how:

• Optimized Torizon OS & Yocto Support – Toradex provides Torizon OS, a Debian-based easy-to-use platform, and Yocto BSPs for customized Linux images on SMARC modules.

• Seamless Integration with i.MX 8M Plus and i.MX 95 – Toradex SMARC solutions leverage NXP’s i.MX 8 M Plus and i.MX 95 SoCs, delivering power efficiency and AI-ready performance.

• Secure and Reliable – With Secure Boot, over-the-air (OTA) updates, and LTS kernel support, Toradex ensures industrial-grade security and longevity.

• Containerized Workflows for AI & IoT – Support for Docker, ROS, and real-time Linux enables scalable AI, ML, and IoT applications.

• Strong Ecosystem & Developer Support – Toradex offers comprehensive documentation, developer tools, and dedicated support, accelerating time-to-market.

With Toradex’s Linux support for SMARC, developers get a scalable, secure, and high-performance solution for industrial, medical, and AI-driven applications.

Do you have a specific project or application in mind where you're considering SMARC? We can help with Free Compatibility Check and help you with quick time-to-market

For more information: https://www.toradex.com/computer-on-modules/smarc-arm-family

Hadoop Overview kdd2011

- 1. Modeling with Hadoop
  - Vijay K. Narayanan, Principal Scientist, Yahoo! Labs, Yahoo!
  - Milind Bhandarkar, Chief Architect, Greenplum Labs, EMC²

- 2. Session 1: Overview of Hadoop
  - Motivation
  - Hadoop
  - Map-Reduce
  - Distributed File System
  - Next Generation MapReduce
  - Q & A

- 3. Session 2: Modeling with Hadoop
  - Types of learning in MapReduce
  - Algorithms in MapReduce framework
  - Data parallel algorithms
  - Sequential algorithms
  - Challenges and Enhancements

- 4. Session 3: Hands On Exercise
  - Spin-up Single Node Hadoop cluster in a Virtual Machine
  - Write a regression trainer
  - Train model on a dataset

- 5. Overview of Apache Hadoop

- 6. Hadoop At Yahoo! (Some Statistics)
  - 40,000+ machines in 20+ clusters
  - Largest cluster is 4,000 machines
  - 170 Petabytes of storage
  - 1000+ users
  - 1,000,000+ jobs/month

- 9. Who Uses Hadoop?

- 10. Why Hadoop?

- 11. Big Datasets (Data-Rich Computing theme proposal. J. Campbell, et al, 2007)

- 12. Cost Per Gigabyte (http://www.mkomo.com/cost-per-gigabyte)

- 13. Storage Trends (Graph by Adam Leventhal, ACM Queue, Dec 2009)

- 14. Motivating Examples

- 16. Search Assist
  - Insight: Related concepts appear close together in text corpus
  - Input: Web pages
    - 1 Billion Pages, 10K bytes each
    - 10 TB of input data
  - Output: List(word, List(related words))

- 17. Search Assist

      // Input: List(URL, Text)
      foreach URL in Input :
          Tokens = Tokenize(Text(URL));
          foreach word in Tokens :
              Insert (word, Next(word, Tokens)) in Pairs;
              Insert (word, Previous(word, Tokens)) in Pairs;
      // Result: Pairs = List (word, RelatedWord)
      Group Pairs by word;
      // Result: List (word, List(RelatedWords))
      foreach word in Pairs :
          Count RelatedWords in GroupedPairs;
      // Result: List (word, List(RelatedWords, count))
      foreach word in CountedPairs :
          Sort Pairs(word, *) descending by count;
          choose Top 5 Pairs;
      // Result: List (word, Top5(RelatedWords))

- 18. People You May Know

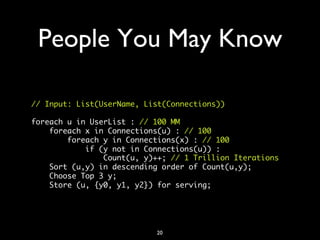

- 19. People You May Know
  - Insight: You might also know Joe Smith if a lot of folks you know, know Joe Smith
    - if you don't know Joe Smith already
  - Numbers:
    - 100 MM users
    - Average connections per user is 100

- 20. People You May Know

      // Input: List(UserName, List(Connections))
      foreach u in UserList :                  // 100 MM
          foreach x in Connections(u) :        // 100
              foreach y in Connections(x) :    // 100
                  if (y not in Connections(u)) :
                      Count(u, y)++;           // 1 Trillion Iterations
          Sort (u,y) in descending order of Count(u,y);
          Choose Top 3 y;
          Store (u, {y0, y1, y2}) for serving;
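The triple loop on slide 20 can be sketched in plain Python for a small in-memory graph. This is an illustration only: the function name `people_you_may_know`, the dict-of-sets input, and the extra check that skips the user themself are assumptions, not part of the slides.

```python
from collections import Counter

def people_you_may_know(connections, top_n=3):
    """For each user, rank friends-of-friends by number of mutual connections.

    `connections` maps a user name to the set of that user's connections
    (an assumed in-memory stand-in for the slide's input list).
    """
    suggestions = {}
    for user, friends in connections.items():
        counts = Counter()
        for friend in friends:
            for candidate in connections.get(friend, ()):
                # Skip people the user already knows; also skip the user
                # (the second check is an addition not shown on the slide).
                if candidate != user and candidate not in friends:
                    counts[candidate] += 1
        suggestions[user] = [name for name, _ in counts.most_common(top_n)]
    return suggestions

graph = {
    "ann": {"bob", "cat"},
    "bob": {"ann", "joe"},
    "cat": {"ann", "joe"},
    "joe": {"bob", "cat"},
}
suggested = people_you_may_know(graph)
```

On this toy graph, `ann` is offered `joe` because both of her connections know him. At the scale on the slide the same loops run about a trillion times, which motivates the next slide.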

- 21. Performance
  - 101 Random accesses for each user
  - Assume 1 ms per random access
  - 100 ms per user
  - 100 MM users
  - 100 days on a single machine
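The estimate on slide 21 can be reproduced with a few lines of arithmetic. The sketch below uses the slide's figures without rounding; reading the 101 accesses as one lookup for the user plus one per connection is an assumption.

```python
ACCESSES_PER_USER = 101      # presumably 1 lookup for the user + 100 for the connections
SECONDS_PER_ACCESS = 0.001   # 1 ms per random access, as assumed on the slide
USERS = 100_000_000          # 100 MM users

seconds_per_user = ACCESSES_PER_USER * SECONDS_PER_ACCESS
total_seconds = seconds_per_user * USERS
total_days = total_seconds / 86_400   # seconds in a day

print(f"{seconds_per_user * 1000:.0f} ms per user, about {total_days:.0f} days in total")
```

Without rounding the result is a little under 120 days, which agrees with the slide's order-of-magnitude figure of 100 days on a single machine.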

- 22. MapReduce Paradigm

- 23. Map Reduce
  - Primitives in Lisp (and other functional languages), 1970s
  - Google Paper 2004
  - http://labs.google.com/papers/mapreduce.html

- 24. Map
  - Output_List = Map (Input_List)
  - Square (1, 2, 3, 4, 5, 6, 7, 8, 9, 10) = (1, 4, 9, 16, 25, 36, 49, 64, 81, 100)

- 25. Reduce
  - Output_Element = Reduce (Input_List)
  - Sum (1, 4, 9, 16, 25, 36, 49, 64, 81, 100) = 385
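The two primitives on slides 24 and 25 map directly onto Python's built-in `map` and `functools.reduce`. This is a minimal sketch using the slides' own numbers.

```python
from functools import reduce
from operator import add

numbers = range(1, 11)

# Map: apply Square to every element independently.
squares = list(map(lambda x: x * x, numbers))

# Reduce: fold the whole list into a single element with Sum.
total = reduce(add, squares)

print(squares)  # [1, 4, 9, 16, 25, 36, 49, 64, 81, 100]
print(total)    # 385
```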

- 26. Parallelism
  - Map is inherently parallel
    - Each list element processed independently
  - Reduce is inherently sequential
    - Unless processing multiple lists
  - Grouping to produce multiple lists

- 27. Search Assist Map

      // Input: http://hadoop.apache.org
      Pairs = Tokenize_And_Pair ( Text ( Input ) )
      Output = {
        (apache, hadoop) (hadoop, mapreduce) (hadoop, streaming)
        (hadoop, pig) (apache, pig) (hadoop, DFS)
        (streaming, commandline) (hadoop, java) (DFS, namenode)
        (datanode, block) (replication, default)...
      }

- 28. Search Assist Reduce

      // Input: GroupedList (word, GroupedList(words))
      CountedPairs = CountOccurrences (word, RelatedWords)
      Output = {
        (hadoop, apache, 7) (hadoop, DFS, 3) (hadoop, streaming, 4)
        (hadoop, mapreduce, 9) ...
      }
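The Search Assist map and reduce steps can be sketched as a single-process Python program. The function names, whitespace tokenization, and the dict-of-pages input are assumptions made for illustration. A real job would run the map and reduce functions on many machines, with Hadoop doing the grouping in between.

```python
from collections import Counter, defaultdict

def map_page(text):
    """Map: emit (word, neighbour) for each word's next and previous token."""
    tokens = text.lower().split()   # naive tokenizer, assumed for this sketch
    for left, right in zip(tokens, tokens[1:]):
        yield left, right   # (word, next word)
        yield right, left   # (word, previous word)

def group_by_word(pairs):
    """Grouping step: collect all related words emitted for each word."""
    grouped = defaultdict(list)
    for word, related in pairs:
        grouped[word].append(related)
    return grouped

def reduce_word(related_words, top_n=5):
    """Reduce: count related words and keep the most frequent ones."""
    return Counter(related_words).most_common(top_n)

def search_assist(pages, top_n=5):
    pairs = (pair for text in pages.values() for pair in map_page(text))
    grouped = group_by_word(pairs)
    return {word: reduce_word(related, top_n) for word, related in grouped.items()}

pages = {
    "url-1": "apache hadoop mapreduce",
    "url-2": "apache hadoop pig",
}
related = search_assist(pages)
```

Here `related["hadoop"]` starts with `("apache", 2)`, since "apache" is adjacent to "hadoop" on both toy pages.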

- 29. Issues with Large Data
  - Map Parallelism: Chunking input data
  - Reduce Parallelism: Grouping related data
  - Dealing with failures and load imbalance

- 31. Apache Hadoop
  - January 2006: Subproject of Lucene
  - January 2008: Top-level Apache project
  - Stable Version: 0.20.203
  - Latest Version: 0.22 (Coming soon)

- 32. Apache Hadoop
  - Reliable, Performant Distributed file system
  - MapReduce Programming framework
  - Ecosystem: HBase, Hive, Pig, Howl, Oozie, Zookeeper, Chukwa, Mahout, Cascading, Scribe, Cassandra, Hypertable, Voldemort, Azkaban, Sqoop, Flume, Avro ...

- 33. Problem: Bandwidth to Data
  - Scan 100TB Datasets on 1000 node cluster
  - Remote storage @ 10MB/s = 165 mins
  - Local storage @ 50-200MB/s = 33-8 mins
  - Moving computation is more efficient than moving data
  - Need visibility into data placement
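The scan times on slide 33 follow from dividing the dataset evenly across the nodes. The sketch below assumes decimal units (1 TB = 1,000,000 MB) and a perfectly even split, neither of which the slide states. The helper name `scan_minutes` is hypothetical.

```python
def scan_minutes(dataset_tb, nodes, mb_per_second):
    """Minutes for every node to scan its equal share of the dataset in parallel."""
    per_node_mb = dataset_tb * 1_000_000 / nodes   # assumes 1 TB = 10^6 MB
    return per_node_mb / mb_per_second / 60

remote = scan_minutes(100, 1000, 10)        # remote storage at 10 MB/s
local_slow = scan_minutes(100, 1000, 50)    # local storage at 50 MB/s
local_fast = scan_minutes(100, 1000, 200)   # local storage at 200 MB/s

print(f"remote: {remote:.0f} min, local: {local_slow:.0f} to {local_fast:.0f} min")
```

Each node scans 100 GB. That takes roughly 167 minutes at 10 MB/s, which the slide rounds to 165, and between about 33 and 8 minutes at local-disk speeds.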

- 34. Problem: Scaling Reliably
  - Failure is not an option, it's a rule!
    - 1000 nodes, MTBF < 1 day
    - 4000 disks, 8000 cores, 25 switches, 1000 NICs, 2000 DIMMS (16TB RAM)
  - Need fault-tolerant store with reasonable availability guarantees
    - Handle hardware faults transparently

- 35. Hadoop Goals
  - Scalable: Petabytes (10^15 Bytes) of data on thousands of nodes
  - Economical: Commodity components only
  - Reliable
    - Engineering reliability into every application is expensive

- 36. Hadoop MapReduce

- 37. Think MapReduce
  - Record = (Key, Value)
  - Key: Comparable, Serializable
  - Value: Serializable
  - Input, Map, Shuffle, Reduce, Output

- 38. Seems Familiar?

      cat /var/log/auth.log* | grep "session opened" | cut -d' ' -f10 | sort | uniq -c > ~/userlist

- 39. Map
  - Input: (Key1, Value1)
  - Output: List(Key2, Value2)
  - Projections, Filtering, Transformation

- 40. Shuffle
  - Input: List(Key2, Value2)
  - Output: Sort(Partition(List(Key2, List(Value2))))
  - Provided by Hadoop

- 41. Reduce
  - Input: List(Key2, List(Value2))
  - Output: List(Key3, Value3)
  - Aggregation
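The Map, Shuffle, and Reduce signatures above can be tied together in a toy, single-process driver. Nothing here is Hadoop's API: `run_mapreduce`, the hash partitioner, and the sample log format are all assumptions, chosen to mirror the `grep | cut | sort | uniq -c` pipeline from slide 38.

```python
from collections import defaultdict

def run_mapreduce(records, mapper, reducer, num_partitions=2):
    """Toy single-process Input -> Map -> Shuffle -> Reduce -> Output pipeline."""
    # Map: each (key1, value1) record yields zero or more (key2, value2) pairs.
    mapped = [pair for key1, value1 in records for pair in mapper(key1, value1)]

    # Shuffle: partition by key, then group values per key within each partition.
    partitions = [defaultdict(list) for _ in range(num_partitions)]
    for key2, value2 in mapped:
        partitions[hash(key2) % num_partitions][key2].append(value2)

    # Reduce: keys are visited in sorted order within each partition.
    output = []
    for partition in partitions:
        for key2 in sorted(partition):
            output.extend(reducer(key2, partition[key2]))
    return output

def session_mapper(line_number, line):
    # Filtering plus projection, like `grep "session opened" | cut ...`.
    if "session opened" in line:
        yield line.split()[-1], 1   # hypothetical format: user name is the last field

def count_reducer(user, ones):
    # Aggregation, like `uniq -c`.
    yield user, sum(ones)

log = [
    "session opened for user alice",
    "session closed for user alice",
    "session opened for user bob",
    "session opened for user alice",
]
counts = dict(run_mapreduce(enumerate(log), session_mapper, count_reducer))
```

The user supplies only the mapper and the reducer. Partitioning, grouping, and sorting sit between them, which is the part the slides describe as provided by Hadoop.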

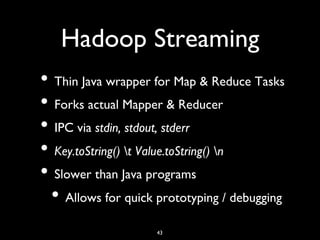

- 42. Hadoop Streaming
  - Hadoop is written in Java
    - Java MapReduce code is native
  - What about Non-Java Programmers?
    - Perl, Python, Shell, R
    - grep, sed, awk, uniq as Mappers/Reducers
  - Text Input and Output

- 43. Hadoop Streaming
  - Thin Java wrapper for Map and Reduce Tasks
  - Forks actual Mapper and Reducer
  - IPC via stdin, stdout, stderr
  - Key.toString() \t Value.toString() \n
  - Slower than Java programs
    - Allows for quick prototyping / debugging

- 44. Hadoop Streaming

      $ bin/hadoop jar hadoop-streaming.jar \
          -input in-files -output out-dir \
          -mapper mapper.sh -reducer reducer.sh

      # mapper.sh
      sed -e 's/ /\n/g' | grep .

      # reducer.sh
      uniq -c | awk '{print $2 "\t" $1}'
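Since Streaming only needs programs that read stdin and write tab-separated lines to stdout, the same word count can be sketched in Python. The script layout, its `map`/`reduce` command-line argument, and the explicit count of 1 per word are assumptions for illustration. The slide's shell version emits bare words instead.

```python
import sys
from itertools import groupby

def mapper(lines):
    """Emit one 'word<TAB>1' line per word, like the sed/grep mapper."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"

def reducer(lines):
    """Sum the counts per word; relies on the input being sorted by key."""
    parsed = (line.rstrip("\n").partition("\t") for line in lines)
    for word, group in groupby(parsed, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, _, count in group)}"

# Run as `script.py map` or `script.py reduce` (hypothetical file name),
# reading stdin and writing stdout as Streaming tasks do.
if __name__ == "__main__" and len(sys.argv) == 2 and sys.argv[1] in ("map", "reduce"):
    step = mapper if sys.argv[1] == "map" else reducer
    for output_line in step(sys.stdin):
        print(output_line)
```

Locally the pair can be tried without a cluster by piping the mapper's output through `sort` into the reducer, which stands in for the shuffle.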

- 45. Hadoop Distributed File System (HDFS)

- 46. HDFS
  - Data is organized into files and directories
  - Files are divided into uniform sized blocks (default 128MB) and distributed across cluster nodes
  - HDFS exposes block placement so that computation can be migrated to data

- 47. HDFS
  - Blocks are replicated (default 3) to handle hardware failure
  - Replication for performance and fault tolerance (Rack-Aware placement)
  - HDFS keeps checksums of data for corruption detection and recovery
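A quick way to see what the block size and replication defaults on slides 46 and 47 mean for one file is to count blocks and replicas. The helper name `hdfs_footprint` is hypothetical. The sketch assumes the last block holds only the remaining bytes, so raw storage is simply file size times replication.

```python
import math

BLOCK_SIZE_MB = 128   # default block size quoted on the slide
REPLICATION = 3       # default replication factor quoted on the slide

def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return block count, replica count, and raw storage for one file."""
    blocks = math.ceil(file_size_mb / block_size_mb)
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        "raw_storage_mb": file_size_mb * replication,
    }

footprint = hdfs_footprint(1000)   # a 1000 MB file
```

A 1000 MB file is split into 8 blocks, stored as 24 block replicas, and occupies about 3000 MB of raw disk across the cluster.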

- 48. HDFS
  - Master-Worker Architecture
  - Single NameNode
  - Many (Thousands) DataNodes

- 49. HDFS Master (NameNode)
  - Manages filesystem namespace
  - File metadata (i.e. "inode")
  - Mapping inode to list of blocks + locations
  - Authorization and Authentication
  - Checkpoint and journal namespace changes

- 50. Namenode
  - Mapping of datanode to list of blocks
  - Monitor datanode health
  - Replicate missing blocks
  - Keeps ALL namespace in memory
  - 60M objects (File/Block) in 16GB

- 51. Datanodes
  - Handle block storage on multiple volumes and block integrity
  - Clients access the blocks directly from data nodes
  - Periodically send heartbeats and block reports to Namenode
  - Blocks are stored as underlying OS's files

- 53. Next Generation MapReduce

- 54. MapReduce Today (Courtesy: Arun Murthy, Hortonworks)

- 55. Why?
  - Scalability Limitations today
    - Maximum cluster size: 4000 nodes
    - Maximum Concurrent tasks: 40,000
  - Job Tracker SPOF
  - Fixed map and reduce containers (slots)
    - Punishes pleasantly parallel apps

- 56. Why? (contd)
  - MapReduce is not suitable for every application
  - Fine-Grained Iterative applications
    - HaLoop: Hadoop in a Loop
  - Message passing applications
    - Graph Processing

- 57. Requirements
  - Need scalable cluster resources manager
  - Separate scheduling from resource management
  - Multi-Lingual Communication Protocols

- 58. Bottom Line
  - @techmilind #mrng (MapReduce, Next Gen) is in reality, #rmng (Resource Manager, Next Gen)
  - Expect different programming paradigms to be implemented
    - Including MPI (soon)

- 59. Architecture (Courtesy: Arun Murthy, Hortonworks)

- 60. The New World
  - Resource Manager
    - Allocates resources (containers) to applications
  - Node Manager
    - Manages containers on nodes
  - Application Master
    - Specific to paradigm e.g. MapReduce application master, MPI application master etc

- 61. Container
  - In current terminology: A Task Slot
  - Slice of the node's hardware resources
  - # of cores, virtual memory, disk size, disk and network bandwidth etc
    - Currently, only memory usage is sliced

- 62. Questions?
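The division of labour on slides 60 and 61 can be pictured with a toy model in which a resource manager hands out memory-only containers on nodes. This is not the next-generation MapReduce API: the class names, the first-fit policy, and the sizes are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """A node whose only sliced resource is memory, as on slide 61."""
    name: str
    free_mb: int
    containers: list = field(default_factory=list)

@dataclass
class ResourceManager:
    nodes: list

    def allocate(self, application, memory_mb):
        """Grant a container on the first node with enough free memory, else None."""
        for node in self.nodes:
            if node.free_mb >= memory_mb:
                node.free_mb -= memory_mb
                node.containers.append((application, memory_mb))
                return node.name
        return None

manager = ResourceManager([Node("node-1", 2048), Node("node-2", 4096)])
first = manager.allocate("mapreduce-app-master", 1536)   # fits on node-1
second = manager.allocate("mpi-app-master", 1024)        # node-1 has only 512 MB left
third = manager.allocate("oversized-app", 8192)          # no node can host this
```

Because containers are sized per request instead of being fixed map or reduce slots, the same pool can serve a MapReduce application master and an MPI one side by side.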