Hadoop Tiering with Dell EMC Isilon - 2018

- 1. 1 of 35 Optimizing Your Hadoop Infrastructure with Hortonworks and Dell EMC: Deep Dive into HDFS Tiering Your Data Across DAS and Isilon. Boni Bruno, CISSP, CISM, Chief Solutions Architect

- 2. Growing Hadoop Data Volumes: Not all data has the same characteristics
      - Cold data (goal: low price/TB)
        - Data in long-term retention
        - Accessed/analyzed occasionally
        - Ex: long-term medical records, bank records, research data, phone logs, etc.
      - Hot data (goal: high throughput/TB)
        - Recently generated data
        - Accessed/analyzed frequently
        - Ex: new medical records, bank records, research data, phone logs, etc.
      - A significant portion of data growth in the majority of enterprises comes from "cold data".
      - [Chart: TB over time, with curves for hot data, hot + cold data, and IT budget; labels: gap, 75TB inflection point]
      - Data growth leads to larger Hadoop clusters:
        - More cost: servers; maintenance; floor space and power; OS and Hadoop software
        - More risk: hardware failure; security; compliance

- 3. Expanding Hadoop from 100TB to 1PB Usable Capacity

      | Hadoop DAS cluster | 100TB usable capacity | 1PB usable capacity |
      | --- | --- | --- |
      | R730XD servers | 12 | 82 |
      | RHEL subscriptions | 15 | 85 |
      | Racks | 2 | 7 |
      | HDP Ent+ subscriptions | 4 | 22 |
      | Server failure probability | 1.49% | 8.15% |

      - Cold data = low CPU utilization
      - Large Hadoop clusters = up to 80% cold data

- 4. Hortonworks Hadoop Tiered Storage with Isilon
      - Shared storage overlay for "cold data"
      - Two Hadoop namespaces: DAS and shared storage
      - All data (DAS + shared) analyzed by Hadoop apps
      - Physical config:
        - DAS cluster: namespace hdfs://DAS, hot data (< 75TB)
        - Shared storage cluster: namespace hdfs://Isilon, cold data (> 75TB)
      - Logical config: Hadoop YARN-based apps (Hive, Spark and MapReduce) over hot data in the DAS cluster (hdfs://DAS) and cold data in the Isilon shared storage cluster (hdfs://Isilon)
      - Management: Ambari, Ranger, Knox, Atlas; native Isilon data management tools
      - More data, deeper analytics, improved accuracy
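The two-namespace layout can be sketched as a small shell helper that turns a tier name into a fully qualified HDFS URI. This is only an illustration: the NameNode endpoints are placeholders in the style of the later slides, and `tier_uri` is a hypothetical name, not part of Hadoop or OneFS.

```shell
# Placeholder NameNode endpoints (assumptions); override them for a real deployment.
DAS_NN="${DAS_NN:-hdfs://das.yourdomain.com:8020}"
ISI_NN="${ISI_NN:-hdfs://isi.yourdomain.com:8020}"

# tier_uri TIER PATH: print the URI of PATH in the namespace that holds TIER.
# Hot data lives on the DAS namespace, cold data on the Isilon namespace.
tier_uri() {
  case "$1" in
    hot)  printf '%s%s\n' "$DAS_NN" "$2" ;;
    cold) printf '%s%s\n' "$ISI_NN" "$2" ;;
    *)    echo "unknown tier: $1" >&2; return 1 ;;
  esac
}

tier_uri hot  /data/logs/2018
tier_uri cold /data/logs/2012
```

Any Hadoop client command that accepts a path can then take the printed URI, so applications address either tier without further configuration.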

- 5. Hortonworks Hadoop Tiered Storage Benefits
      - No disruption to existing Hadoop DAS cluster
      - No migration of data out of existing Isilon
      - Analyze long-term data with compliance, protection, security
      - HA for long-term data and Hadoop NameNode
      - DAS cluster: DAS NameNode/DataNodes; add compute for shared storage
      - Shared storage cluster: Isilon HA NameNode/DataNodes; carve out Hadoop access zone
      - Grow compute slower than data. Save on: hardware, maintenance, floor space, OS software, Hadoop software
      - Minimize business risk and reduce TCO in managing explosive growth of cold data in Hadoop

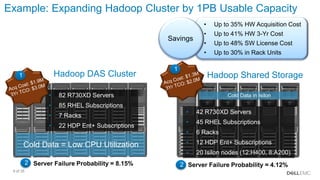

- 6. Example: Expanding Hadoop Cluster by 1PB Usable Capacity

      | | Hadoop DAS cluster | Hadoop shared storage (cold data in Isilon) |
      | --- | --- | --- |
      | R730XD servers | 82 | 42 |
      | RHEL subscriptions | 85 | 45 |
      | Racks | 7 | 6 |
      | HDP Ent+ subscriptions | 22 | 12 |
      | Isilon nodes | | 20 (12 H400, 8 A200) |
      | Server failure probability | 8.15% | 4.12% |

      Cold data = low CPU utilization.

      Savings:
      - Up to 35% HW acquisition cost
      - Up to 41% HW 3-yr cost
      - Up to 48% SW license cost
      - Up to 30% in rack units
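As a rough cross-check of the counts on this slide, the reduction in servers and subscriptions can be worked out directly. This is a minimal sketch; `pct_reduction` is a hypothetical helper. It compares unit counts only, so it does not reproduce the slide's cost savings, which depend on pricing the deck does not give.

```shell
# pct_reduction BEFORE AFTER: print the reduction from BEFORE to AFTER
# as a percentage with one decimal place.
pct_reduction() {
  awk -v b="$1" -v a="$2" 'BEGIN { printf "%.1f\n", (b - a) * 100 / b }'
}

pct_reduction 82 42   # R730XD servers: DAS-only vs. tiered
pct_reduction 85 45   # RHEL subscriptions
pct_reduction 22 12   # HDP Ent+ subscriptions
```

The 20 Isilon nodes of the tiered configuration are not counted here, which is one reason the slide's hardware savings figures are lower than the raw server reduction.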

- 7. Hadoop Tiered Storage Implementation Services
      - Goal: assist customers in expanding from a Hadoop on DAS solution to the Tiered Storage Solution
      - Transition: single namespace → dual namespace
      - Services:
        1. Technology Advisory Service
        2. Data Migration Service
      - Connect solution to business metrics and TCO
      - Define and implement reusable best practices
      - Best practices:
        - Guidelines for cold vs. hot data
        - Security in dual namespace environment
        - Hadoop app config for dual namespace

- 8. Implementation Services: Sample Success Stories
      - Supply chain optimization: global IT provider
      - Reducing fraud and waste: global pharmaceutical provider
      - Cell tower analysis: mobile carrier
      - Predicting and avoiding ATM thefts: large South American bank
      - Enhancing workflow and resource management: major US city sanitation department
      - Enabling value-added customer services: global engineering firm

      Financial Services | Aviation | Healthcare | Education | Telecom | Retail | Utilities | Federal | Entertainment

- 9. Basic Reference Architecture

- 10. Key Solution Attributes
      - Supports Spark, Hive and MapReduce across Isilon and DAS namespaces
      - No need to configure Ambari agent on Isilon
      - Works in both Kerberized and non-Kerberized environments
      - HDFS Tiering also works with Ranger
      - Common Hive metastore across Isilon and DAS namespaces
      - High-speed HBase and Kudu are run on the DAS cluster
      - Allows for easy separation of HDFS storage without adding compute
      - Provides better TCO for active archiving

- 11. HDFS Tiering with Isilon also works with Ranger!

      HDFS Tiering has been validated with HDFS, Hive, and YARN policies enabled on the DAS cluster.

- 12. Enable Ranger Plugin on Isilon

      After creating your Ranger policies on DAS, simply enable the Ranger plugin on Isilon.

      Note: Requires OneFS 8.0.1.x or 8.1.0.x

- 13. Example Ranger HDFS Access Policy for DAS & Isilon

      Creating and assigning new access policies:

      1. Create sample directories such as GRANT_ACCESS and RESTRICT_ACCESS on the Isilon HDFS cluster.
      2. Create hdp-user1 on all the nodes of the HDP cluster and Isilon cluster.
      3. In the Ranger UI under HDP3_hadoop Service Manager, assign Read/Write/Execute (RWX) access for hdp-user1 on GRANT_ACCESS.

- 14. Testing Ranger HDFS Access Policy with Remote Isilon File System

      1. Assign RWX access to hdp-user1 in the GRANT_ACCESS directory and deny RWX access in the RESTRICT_ACCESS directory (shown in previous slides).
      2. Access the GRANT_ACCESS directory with different hdfs commands and verify there are no permission issues:

             hadoop fs -ls hdfs://isi.yourdomain.com:8020/GRANT_ACCESS
             hadoop fs -put /etc/redhat-release hdfs://isi.yourdomain.com:8020/GRANT_ACCESS/
             hadoop fs -ls hdfs://isi.yourdomain.com:8020/GRANT_ACCESS
             Found 1 items
             -rw-r--r-- 3 hdp-user1 hadoop 52 2017-08-24 12:12 hdfs://isi.yourdomain.com:8020/GRANT_ACCESS/redhat-release

      SUCCESS!

- 15. Example Ranger HDFS Deny Policy for DAS & Isilon

      Creating and assigning new access policies:

      1. Create sample directories such as GRANT_ACCESS and RESTRICT_ACCESS on the Isilon HDFS cluster.
      2. Create hdp-user1 on all the nodes of the HDP cluster and Isilon cluster.
      3. In the Ranger UI under HDP3_hadoop Service Manager, deny Read/Write/Execute (RWX) access for hdp-user1 on RESTRICT_ACCESS.

- 16. Testing Ranger HDFS Deny Policy with Remote Isilon File System

      1. Add the user hdp-user1 to the Ranger RESTRICT_ACCESS directory policy on the remote Isilon HDFS.
      2. Test that access is restricted:

             hadoop fs -put /etc/redhat-release hdfs://isi.yourdomain.com:8020/RESTRICT_ACCESS/
             17/08/24 12:18:34 WARN retry.RetryInvocationHandler: Exception while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over null. Not retrying because try once and fail.
             org.apache.hadoop.ipc.RemoteException(org.apache.ranger.authorization.hadoop.exceptions.RangerAccessControlException): Permission denied: [email protected], access=EXECUTE, path="/RESTRICT_ACCESS"
                 at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1552)
                 at org.apache.hadoop.ipc.Client.call(Client.java:1496)
                 at org.apache.hadoop.ipc.Client.call(Client.java:1396)
                 . .
                 at org.apache.hadoop.fs.FsShell.main(FsShell.java:350)
             put: Permission denied: [email protected], access=EXECUTE, path="/RESTRICT_ACCESS"

      SUCCESS!
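The grant and deny checks from the last few slides can be wrapped in two small functions so they are repeatable after every policy change. This is a sketch under assumptions: `expect_allowed` and `expect_denied` are hypothetical names, the endpoint is the placeholder used on the slides, and `/etc/redhat-release` only exists on RHEL-family clients.

```shell
# Placeholder endpoint and client binary (assumptions); override as needed.
ISI_NN="${ISI_NN:-hdfs://isi.yourdomain.com:8020}"
HADOOP="${HADOOP:-hadoop}"

# expect_allowed DIR: succeeds if the current user can list DIR on Isilon.
expect_allowed() {
  "$HADOOP" fs -ls "$ISI_NN/$1" >/dev/null 2>&1
}

# expect_denied DIR: succeeds only if a put into DIR fails
# and the client output reports "Permission denied".
expect_denied() {
  out=$("$HADOOP" fs -put /etc/redhat-release "$ISI_NN/$1/" 2>&1) && return 1
  case "$out" in
    *"Permission denied"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example (run as hdp-user1 on a cluster node):
#   expect_allowed GRANT_ACCESS && expect_denied RESTRICT_ACCESS && echo "policies OK"
```

Matching on the message text as well as the exit status keeps an unrelated failure, such as an unreachable NameNode, from being mistaken for a working deny policy.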

- 17. Extensive Testing of Ranger Hive Policies with Isilon

      Test case: Hive data warehouse Ranger policy setup

      1. Assign RWX on /user/hive directory for hdp-user1 on HDP (local DAS HDFS) and Isilon cluster using Ranger UI

      Test case: DDL operations (LOAD DATA LOCAL inpath, INSERT into table, INSERT OVERWRITE TABLE)

      1. Drop remote database if EXISTS cascade
      2. Create remote_db, hive warehouse resides on remote Isilon HDFS
      3. Create internal nonpartitioned table on remote_db
      4. LOAD data local inpath into table created in preceding step
      5. Create internal nonpartitioned table data on remote Isilon HDFS
      6. LOAD data local inpath into table created in preceding step
      7. Create internal transactional table on remote_db
      8. INSERT into table from internal nonpartitioned table
      9. Create internal partitioned table on remote_db
      10. INSERT OVERWRITE TABLE from internal nonpartitioned table
      11. Create external nonpartitioned table on remote_db
      12. Drop local database if EXISTS cascade
      13. Create local_db, hive warehouse resides on local DAS Hadoop cluster
      14. Create internal nonpartitioned table on local_db
      15. LOAD data local inpath into table created in preceding step
      16. Create internal nonpartitioned table data on local DAS Hadoop cluster
      17. LOAD data local inpath into table created in preceding step
      18. Create internal transactional table on local_db
      19. INSERT into table from internal nonpartitioned table
      20. Create internal partitioned table on local_db
      21. INSERT OVERWRITE TABLE from internal nonpartitioned table
      22. Create external nonpartitioned table on local_db

      Test case: DML operations (query local database tables, query remote database tables)

      1. Query data from local external nonpartitioned table
      2. Query data from local internal nonpartitioned table
      3. Query data from local nonpartitioned remote data table
      4. Query data from local internal partitioned table
      5. Query data from local internal transactional table
      6. Query data from remote external nonpartitioned table
      7. Query data from remote internal nonpartitioned table
      8. Query data from remote nonpartitioned remote data table

      All of these test cases passed with HDFS tiering to Isilon, using a single metastore on the DAS cluster with Hive Ranger policies enabled.

- 18. Using Hive with Hadoop Tiered Storage

      The Dell EMC® Isilon® HDFS tiering solution allows for a common Hive Metastore across DAS and Isilon clusters. There is no need to maintain separate Metastores with HDFS tiering. By simply creating external databases, tables, or partitions that specify Isilon as the remote filesystem location in Hive, users can transparently access remote data on Isilon. This is a powerful use case!

      Note: You still need to maintain backups of your Hive DB as a best practice!

- 19. Creating a remote Hive database on Isilon

      Note: The Hive CLI is the legacy client. Hortonworks is now focusing on HiveServer2, so start using Beeline instead of the Hive CLI (both still work). Keep in mind that there is only one metastore and it is on the DAS cluster. All the Hive work is done on DAS.

      Run the Hive CLI or Beeline client to create a remote database location on Isilon:

          > CREATE DATABASE remote_DB COMMENT 'Remote Database' LOCATION 'hdfs://isi.yourdomain.com:8020/user/hive/remote_DB';
          OK
          Time taken: 0.045 seconds

- 20. Creating a remote Hive table on Isilon and loading data

      Create an internal nonpartitioned table and load data using local inpath:

          USE remote_DB;
          OK
          Time taken: 0.036 seconds

          CREATE TABLE passwd_int_nonpart (user_name STRING, password STRING, user_id STRING,
            group_id STRING, user_id_info STRING, home_dir STRING, shell STRING)
            ROW FORMAT DELIMITED FIELDS TERMINATED BY ':';
          OK
          Time taken: 0.211 seconds

          LOAD DATA LOCAL INPATH '/etc/passwd' INTO TABLE passwd_int_nonpart;
          Loading data to table remote_db.passwd_int_nonpart
          Table remote_db.passwd_int_nonpart stats: [numFiles=1, numRows=0, totalSize=1808, rawDataSize=0]
          OK
          Time taken: 0.261 seconds
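The deck also mentions external tables that point at Isilon. A minimal sketch of generating such a statement from the shell follows; `external_table_ddl`, the table name, and the directory are hypothetical, and the column list simply reuses the `/etc/passwd` layout from the slide above.

```shell
# Placeholder Isilon NameNode endpoint (assumption); override as needed.
ISI_NN="${ISI_NN:-hdfs://isi.yourdomain.com:8020}"

# external_table_ddl TABLE DIR: print HiveQL for an external table whose
# data directory DIR lives on the Isilon namespace.
external_table_ddl() {
  cat <<EOF
CREATE EXTERNAL TABLE $1 (user_name STRING, password STRING, user_id STRING,
  group_id STRING, user_id_info STRING, home_dir STRING, shell STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ':'
LOCATION '$ISI_NN$2';
EOF
}

external_table_ddl passwd_ext_nonpart /user/hive/passwd_ext
```

The printed statement can be saved to a file and run with `beeline -f`. Because the table is external, dropping it in Hive removes only the metastore entry and leaves the files on Isilon in place.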

- 21. Hive Tiering Performance with Isilon Gen5 & Gen6

      In addition to the functional testing of Hive in an HDFS Tiering setup, the performance of both X410 (Gen 5) and H600 (Gen 6) was tested using TPCDS (3TB dataset).

      The 3TB data set was first generated on the DAS cluster (6 nodes: 1 master and 5 workers, each with 40 cores, 256G RAM, and 12 x 1TB drives excluding OS drives).

      The 3TB TPCDS set was generated again on two remote Isilon clusters:
      - 7 x X410 nodes (Isilon X410-4U-Dual-256GB-2x1GE-2x10GE SFP+-96TB-3277GB SSD) running OneFS 8.0.1.1
      - 1 H600 chassis (4 nodes of Isilon H600-4U-Single-256GB-1x1GE-2x40GE SFP+-36TB-6554GB SSD) running OneFS 8.1.0.0

      [Results of performance test on next slide]

- 22. HDFS Tiered Storage Performance: DAS vs Gen5 vs Gen6

      HDP 2.5.3.99, HiveServer2 (no LLAP) benchmarks. TPCDS (3TB) HDFS Tiered Storage results, query time in seconds (series listed in legend order):

      | Query | das (5) | x410 (7) | h600 (4) |
      | --- | --- | --- | --- |
      | Q3 | 31 | 29 | 30 |
      | Q12 | 12 | 14 | 13 |
      | Q20 | 15 | 13 | 12 |
      | Q42 | 21 | 22 | 19 |
      | Q52 | 19 | 22 | 15 |
      | Q55 | 20 | 21 | 15 |
      | Q73 | 23 | 30 | 18 |
      | Q89 | 42 | 59 | 37 |
      | Q91 | 62 | 64 | 59 |

      All queries ran from the same DAS compute nodes! The difference is where the data resides (local disks or on Isilon). Here you see H600 (with fewer nodes) outperforming DAS!
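To compare the three platforms at a glance, the per-query times can be summed. This sketch assumes the 27 values on the slide are three series of nine queries in legend order (das, x410, h600); `tpcds_totals` is a hypothetical helper name.

```shell
# tpcds_totals: sum the per-query times (seconds) for each platform.
# Columns: query, das, x410, h600 (series order is an assumption, see above).
tpcds_totals() {
  awk '{ das += $2; x410 += $3; h600 += $4 }
       END { printf "das=%d x410=%d h600=%d\n", das, x410, h600 }' <<'EOF'
Q3  31 29 30
Q12 12 14 13
Q20 15 13 12
Q42 21 22 19
Q52 19 22 15
Q55 20 21 15
Q73 23 30 18
Q89 42 59 37
Q91 62 64 59
EOF
}

tpcds_totals   # prints: das=245 x410=274 h600=218
```

Under that assumption the H600 total is about 11% lower than DAS across these nine queries, while the X410 total is about 12% higher.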

- 23. Moving Data with DistCp (use -skipcrccheck with Isilon)

      Moving data between DAS and Isilon with DistCp works fine (both non-Kerberos and Kerberos tested).

      Note: As with any Hadoop cluster, data must not be active on the source when using DistCp to move data.

      The example below uses DistCp to copy a file from the local DAS cluster to the remote Isilon cluster:

          $ hadoop distcp -skipcrccheck -update /tmp/redhat-release hdfs://isi.yourdomain.com/tmp/
          $ hdfs dfs -ls -R hdfs://isi.yourdomain.com:8020/tmp/redhat-release
          -rw-r--r-- 3 root hdfs 27 2017-09-07 13:26 hdfs://isilon.solarch.lab.emc.com:8020/tmp/redhat-release

      Now from the remote Isilon cluster to the local DAS cluster:

          $ hadoop distcp -pc hdfs://isi.yourdomain.com:8020/tmp/redhat-release hdfs://das.yourdomain.com:8020/tmp/
          $ hdfs dfs -ls -R hdfs://das.yourdomain.com:8020/tmp/redhat-release
          -rw-r--r-- 3 hdfs hdfs 27 2017-09-07 13:26 hdfs://hdp-master.bigdata.emc.local:8020/tmp/redhat-release
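A thin wrapper can keep the DistCp flags consistent whenever one side of the copy is Isilon. This is a minimal sketch: `tier_copy` and the `DRY_RUN` switch are hypothetical, and the flag pair is the one from the first command on this slide.

```shell
# Client binary and dry-run switch (assumptions); DRY_RUN=1 only prints the command.
HADOOP="${HADOOP:-hadoop}"
DRY_RUN="${DRY_RUN:-1}"

# tier_copy SRC DST: copy SRC to DST with DistCp, skipping the CRC comparison.
# -skipcrccheck is paired with -update, as in the slide's example.
tier_copy() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$HADOOP distcp -skipcrccheck -update $1 $2"
  else
    "$HADOOP" distcp -skipcrccheck -update "$1" "$2"
  fi
}

tier_copy /tmp/redhat-release hdfs://isi.yourdomain.com/tmp/
```

Printing the command by default makes it easy to review the source and destination namespaces before any data moves; setting `DRY_RUN=0` runs the copy.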

- 24. Using MapReduce with HDFS Tiering

      You can run MapReduce jobs using Isilon as a source or destination; just specify the HDFS path in the MR job.

      As an example, let's run a MapReduce WordCount job using the local DAS cluster as the source input and the remote Isilon cluster as the destination output:

          $ yarn jar /usr/hdp/current/hadoop-mapreduce-client/hadoop-mapreduce-examples.jar wordcount hdfs://das.yourdomain.com/tmp/mr/redhat-release hdfs://isi.yourdomain.com/tmp/mr/redhat-release-das
          $ hdfs dfs -ls -R hdfs://isi.yourdomain.com/tmp/mr/redhat-release-das
          -rw-r--r-- 3 ambary-qa hdfs 0 2017-08-04 01:49 hdfs://isi.yourdomain.com/tmp/mr/redhat-release-das/_SUCCESS
          -rw-r--r-- 3 ambary-qa hdfs 68 2017-08-04 01:49 hdfs://isi-cluster-hdfs2.bigdata.emc.local:8020/tmp/mr/redhat-release-das-hdfs2/part-r-00000

      Now the reverse, a WordCount job on input from the remote Isilon cluster with output going to the local DAS cluster:

          $ yarn jar /usr/hdp/current/hadoop-mapreduce-client/hadoop-mapreduce-examples.jar wordcount hdfs://isi.yourdomain.com/tmp/mr/redhat-release hdfs://das.yourdomain.com/tmp/mr/redhat-release-isi
          $ hdfs dfs -ls -R hdfs://das.yourdomain.com/tmp/mr/redhat-release-isi
          -rw-r--r-- 3 ambary-qa hdfs 0 2017-08-04 09:50 hdfs://hdp-master03.bigdata.emc.local:8020/tmp/mr/redhat-release-isi/_SUCCESS
          -rw-r--r-- 3 ambary-qa hdfs 68 2017-08-04 09:50 hdfs://hdp-master03.bigdata.emc.local:8020/tmp/mr/redhat-release-isi/part-r-00000
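Instead of reading the listing by eye, a script can test for the `_SUCCESS` marker that appears in both output directories above. A minimal sketch, assuming the `hdfs` client is on the PATH; `job_succeeded` is a hypothetical name.

```shell
# Client binary (assumption); override for testing or non-standard installs.
HDFS="${HDFS:-hdfs}"

# job_succeeded OUTPUT_DIR: succeeds if OUTPUT_DIR contains a _SUCCESS marker.
# Works for either namespace because the URI carries the NameNode address.
job_succeeded() {
  "$HDFS" dfs -test -e "$1/_SUCCESS"
}

# Example:
#   job_succeeded hdfs://isi.yourdomain.com/tmp/mr/redhat-release-das && echo "job OK"
```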

- 25. Using Spark with HDFS Tiering

      Let's create a word count and line count Scala file for Spark testing:

          cat >/tmp/spark_line_word_count.scala <<'EOF'
          // Input and output paths arrive as one whitespace-separated string.
          val args = sc.getConf.get("spark.driver.args").split("\\s+")
          var input = args(0)
          // Word count, written to <output>-wc
          var output1 = args(1) + "-wc"
          var text_file = sc.textFile(input)
          val word_count = text_file.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _)
          word_count.saveAsTextFile(output1)
          // Line count, written to <output>-lc
          var output2 = args(1) + "-lc"
          var line_count = sc.parallelize(Seq(text_file.count()))
          line_count.saveAsTextFile(output2)
          System.exit(0)
          EOF

- 26. Using Spark with HDFS Tiering (continued)

      Using the file we just created, we can run a Spark shell to do a word count and line count (as an example) on input from the local DAS cluster with output going to the remote Isilon cluster, and vice versa. The commands are shown below:

          $ spark-shell -i /tmp/spark_line_word_count.scala --conf 'spark.driver.args=hdfs://das.yourdomain.com/tmp/spark/redhat-release hdfs://isi.yourdomain.com/tmp/spark/redhat-release-das'
          $ spark-shell -i /tmp/spark_line_word_count.scala --conf 'spark.driver.args=hdfs://isi.yourdomain.com/tmp/spark/redhat-release-das hdfs://das.yourdomain.com/tmp/spark/redhat-release-isilon'

- 27. Next Steps

      1. Assess your data and workloads
      2. Solution white-boarding and proof-point discussion with subject matter expert
      3. Discuss cost and TCO analysis
      4. Discuss implementation services

      Improve outcomes, control costs, minimize risk
