
Hands-on Guide to Apache Spark 3: Build Scalable Computing Engines for Batch and Stream Data Processing 1st Edition Alfonso Antolínez García



Alfonso Antolínez García, Madrid, Spain

ISBN 978-1-4842-9379-9, e-ISBN 978-1-4842-9380-5, https://doi.org/10.1007/978-1-4842-9380-5

© Alfonso Antolínez García 2023

Apress Standard

The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use.

The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, expressed or implied, with respect to the material contained herein or for any errors or omissions that may have been made. The publisher remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This Apress imprint is published by the registered company APress Media, LLC, part of Springer Nature. The registered company address is: 1 New York Plaza, New York, NY 10004, U.S.A.

To my beloved family

Any source code or other supplementary material referenced by the author in this book is available to readers on GitHub (https://github.com/Apress). For more detailed information, please visit http://www.apress.com/source-code.

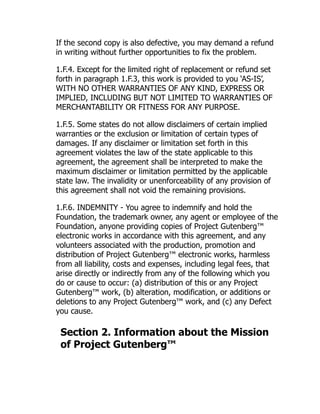

Table of Contents

Part I: Apache Spark Batch Data Processing

- Chapter 1: Introduction to Apache Spark for Large-Scale Data Analytics
  - 1.1 What Is Apache Spark?
    - Simpler to Use and Operate
    - Fast
    - Scalable
    - Ease of Use
    - Fault Tolerance at Scale
  - 1.2 Spark Unified Analytics Engine
  - 1.3 How Apache Spark Works
    - Spark Application Model
    - Spark Execution Model
    - Spark Cluster Model
  - 1.4 Apache Spark Ecosystem
    - Spark Core
    - Spark APIs
    - Spark SQL and DataFrames and Datasets
    - Spark Streaming
    - Spark GraphX
  - 1.5 Batch vs. Streaming Data
    - What Is Batch Data Processing?
    - What Is Stream Data Processing?
    - Difference Between Stream Processing and Batch Processing
  - 1.6 Summary
- Chapter 2: Getting Started with Apache Spark
  - 2.1 Downloading and Installing Apache Spark
    - Installation of Apache Spark on Linux
    - Installation of Apache Spark on Windows
  - 2.2 Hands-On Spark Shell
    - Using the Spark Shell Command
    - Running Self-Contained Applications with the spark-submit Command
  - 2.3 Spark Application Concepts
    - Spark Application and SparkSession
    - Access the Existing SparkSession
  - 2.4 Transformations, Actions, Immutability, and Lazy Evaluation
    - Transformations
    - Narrow Transformations
    - Wide Transformations
    - Actions
  - 2.5 Summary
- Chapter 3: Spark Low-Level API
  - 3.1 Resilient Distributed Datasets (RDDs)
    - Creating RDDs from Parallelized Collections
    - Creating RDDs from External Datasets
    - Creating RDDs from Existing RDDs
  - 3.2 Working with Key-Value Pairs
    - Creating Pair RDDs
    - Showing the Distinct Keys of a Pair RDD
    - Transformations on Pair RDDs
    - Actions on Pair RDDs
  - 3.3 Spark Shared Variables: Broadcasts and Accumulators
    - Broadcast Variables
    - Accumulators
  - 3.4 When to Use RDDs
  - 3.5 Summary
- Chapter 4: The Spark High-Level APIs
  - 4.1 Spark Dataframes
    - Attributes of Spark DataFrames
    - Methods for Creating Spark DataFrames
  - 4.2 Use of Spark DataFrames
    - Select DataFrame Columns
    - Select Columns Based on Name Patterns
    - Filtering Results of a Query Based on One or Multiple Conditions
    - Using Different Column Name Notations
    - Using Logical Operators for Multi-condition Filtering
    - Manipulating Spark DataFrame Columns
    - Renaming DataFrame Columns
    - Dropping DataFrame Columns
    - Creating a New Dataframe Column Dependent on Another Column
    - User-Defined Functions (UDFs)
    - Merging DataFrames with Union and UnionByName
    - Joining DataFrames with Join
  - 4.3 Spark Cache and Persist of Data
    - Unpersisting Cached Data
  - 4.4 Summary
- Chapter 5: Spark Dataset API and Adaptive Query Execution
  - 5.1 What Are Spark Datasets?
  - 5.2 Methods for Creating Spark Datasets
  - 5.3 Adaptive Query Execution
  - 5.4 Data-Dependent Adaptive Determination of the Shuffle Partition Number
  - 5.5 Runtime Replanning of Join Strategies
  - 5.6 Optimization of Unevenly Distributed Data Joins
  - 5.7 Enabling the Adaptive Query Execution (AQE)
  - 5.8 Summary
- Chapter 6: Introduction to Apache Spark Streaming
  - 6.1 Real-Time Analytics of Bound and Unbound Data
  - 6.2 Challenges of Stream Processing
  - 6.3 The Uncertainty Component of Data Streams
  - 6.4 Apache Spark Streaming’s Execution Model
  - 6.5 Stream Processing Architectures
    - The Lambda Architecture
    - The Kappa Architecture
  - 6.6 Spark Streaming Architecture: Discretized Streams
  - 6.7 Spark Streaming Sources and Receivers
    - Basic Input Sources
    - Advanced Input Sources
  - 6.8 Spark Streaming Graceful Shutdown
  - 6.9 Transformations on DStreams
  - 6.10 Summary

Part II: Apache Spark Streaming

- Chapter 7: Spark Structured Streaming
  - 7.1 General Rules for Message Delivery Reliability
  - 7.2 Structured Streaming vs. Spark Streaming
  - 7.3 What Is Apache Spark Structured Streaming?
    - Spark Structured Streaming Input Table
    - Spark Structured Streaming Result Table
    - Spark Structured Streaming Output Modes
  - 7.4 Datasets and DataFrames Streaming API
    - Socket Structured Streaming Sources
    - Running Socket Structured Streaming Applications Locally
    - File System Structured Streaming Sources
    - Running File System Streaming Applications Locally
  - 7.5 Spark Structured Streaming Transformations
    - Streaming State in Spark Structured Streaming
    - Spark Stateless Streaming
    - Spark Stateful Streaming
    - Stateful Streaming Aggregations
  - 7.6 Spark Checkpointing Streaming
    - Recovering from Failures with Checkpointing
  - 7.7 Summary
- Chapter 8: Streaming Sources and Sinks
  - 8.1 Spark Streaming Data Sources
    - Reading Streaming Data from File Data Sources
    - Reading Streaming Data from Kafka
    - Reading Streaming Data from MongoDB
  - 8.2 Spark Streaming Data Sinks
    - Writing Streaming Data to the Console Sink
    - Writing Streaming Data to the File Sink
    - Writing Streaming Data to the Kafka Sink
    - Writing Streaming Data to the ForeachBatch Sink
    - Writing Streaming Data to the Foreach Sink
    - Writing Streaming Data to Other Data Sinks
  - 8.3 Summary
- Chapter 9: Event-Time Window Operations and Watermarking
  - 9.1 Event-Time Processing
  - 9.2 Stream Temporal Windows in Apache Spark
    - What Are Temporal Windows and Why Are They Important in Streaming
  - 9.3 Tumbling Windows
  - 9.4 Sliding Windows
  - 9.5 Session Windows
    - Session Window with Dynamic Gap
  - 9.6 Watermarking in Spark Structured Streaming
    - What Is a Watermark?
  - 9.7 Summary
- Chapter 10: Future Directions for Spark Streaming
  - 10.1 Streaming Machine Learning with Spark
    - What Is Logistic Regression?
    - Types of Logistic Regression
    - Use Cases of Logistic Regression
    - Assessing the Sensitivity and Specificity of Our Streaming ML Model
  - 10.2 Spark 3.3.x
    - Spark RocksDB State Store Database
  - 10.3 The Project Lightspeed
    - Predictable Low Latency
    - Enhanced Functionality for Processing Data/Events
    - New Ecosystem of Connectors
    - Improve Operations and Troubleshooting
  - 10.4 Summary

Bibliography

Index

About the Author

Alfonso Antolínez García is a senior IT manager with a long professional career serving in several multinational companies such as Bertelsmann SE, Lafarge, and TUI AG. He has worked in the media industry, the building materials industry, and the leisure industry. Alfonso also works as a university professor, teaching artificial intelligence, machine learning, and data science. In his spare time, he writes research papers on artificial intelligence, mathematics, physics, and the applications of information theory to other sciences.

About the Technical Reviewer

Akshay R. Kulkarni is an AI and machine learning evangelist and a thought leader. He has consulted for several Fortune 500 and global enterprises to drive AI- and data science–led strategic transformations. He is a Google Developer Expert, author, and regular speaker at major AI and data science conferences (including Strata, O’Reilly AI Conf, and GIDS). He is a visiting faculty member for some of the top graduate institutes in India. In 2019, he was also featured as one of the top 40 under-40 data scientists in India. In his spare time, he enjoys reading, writing, coding, and building next-gen AI products.

Part I: Apache Spark Batch Data Processing

© The Author(s), under exclusive license to APress Media, LLC, part of Springer Nature 2023. A. Antolínez García, Hands-on Guide to Apache Spark 3, https://doi.org/10.1007/978-1-4842-9380-5_1

Chapter 1: Introduction to Apache Spark for Large-Scale Data Analytics

Alfonso Antolínez García, Madrid, Spain

Apache Spark started as a research project at the UC Berkeley AMPLab in 2009. It became open source in 2010, was transferred to the Apache Software Foundation in 2013, and today boasts the largest open source big data community. From its genesis, Spark was designed around a significant change: storing intermediate computations in Random Access Memory (RAM), taking advantage of the falling RAM prices of the 2010s, in contrast with Hadoop MapReduce, which keeps intermediate information on slower disks.

In this chapter, I will provide an introduction to Spark, explaining how it works, the Spark Unified Analytics Engine, and the Apache Spark ecosystem. Lastly, I will describe the differences between batch and streaming data.

1.1 What Is Apache Spark?

Apache Spark is a unified engine for large-scale data analytics. It provides high-level application programming interfaces (APIs) for the Java, Scala, Python, and R programming languages and supports SQL, streaming data, machine learning (ML), and graph processing. Spark is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters of computers, either on-premises or in the cloud.

Spark provides in-memory computing for intermediate computations, meaning data is kept in memory instead of being written to slow disks, which makes it faster than, for example, Hadoop MapReduce. It includes a set of high-level tools and modules such as the following:

- Spark SQL, for structured data processing and access to external data sources like Hive
- MLlib, the library for machine learning
- GraphX, the Spark component for graphs and graph-parallel computation
- Structured Streaming, the Spark SQL stream processing engine
- Pandas API on Spark, which enables pandas users to work with large datasets by leveraging Spark
- SparkR, which provides a lightweight interface to use Apache Spark from the R language
- PySpark, which provides a similar front end to run Python programs over Spark

There are five key benefits that make Apache Spark unique and bring it to the spotlight:

- Simpler to use and operate
- Fast
- Scalable
- Ease of use
- Fault tolerance at scale

Let’s have a look at each of them.

Simpler to Use and Operate

Spark’s capabilities are accessed via a common and rich API, which makes it possible to interact with a unified, general-purpose distributed data processing engine from different programming languages and to cope with data at scale. Additionally, the broad documentation available makes the development of Spark applications straightforward.

The Hadoop MapReduce processing technique and distributed computing model inspired the creation of Apache Spark. This model is conceptually simple: divide a huge problem into smaller subproblems, distribute each piece of the problem among as many individual solvers as possible, collect the individual solutions to the partial problems, and assemble them into a final result.
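To make the divide, distribute, collect, and assemble steps concrete, here is a minimal pure-Python sketch of a word count organized the MapReduce way. It is not Spark or Hadoop code: the helper names (`split_into_chunks`, `count_words`, `word_count`) are hypothetical, and a thread pool stands in for the individual solvers, so the sketch illustrates the structure of the model rather than its performance.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import List


def split_into_chunks(lines: List[str], num_chunks: int) -> List[List[str]]:
    """Divide: split the input into `num_chunks` smaller subproblems."""
    return [lines[i::num_chunks] for i in range(num_chunks)]


def count_words(chunk: List[str]) -> Counter:
    """Solve one subproblem: count the words in a chunk of lines."""
    return Counter(word for line in chunk for word in line.split())


def word_count(lines: List[str], num_workers: int = 4) -> Counter:
    chunks = split_into_chunks(lines, num_workers)
    # Distribute: each chunk goes to a worker; collect: gather partial counts.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_counts = list(pool.map(count_words, chunks))
    # Assemble: merge the partial solutions into the final result.
    return reduce(lambda total, part: total + part, partial_counts, Counter())


if __name__ == "__main__":
    text = ["spark is fast", "spark is scalable", "hadoop inspired spark"]
    print(word_count(text, num_workers=2).most_common(2))  # [('spark', 3), ('is', 2)]
```

The merge step only works because partial counts can be combined in any order; that property is what lets the solvers run independently.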

Fast

On November 5, 2014, Databricks officially announced that they had won the Daytona GraySort contest.¹ In this competition, the Databricks team used a Spark cluster of 206 EC2 nodes to sort 100 TB of data (1 trillion records) in 23 minutes. The previous world record, 72 minutes using a Hadoop MapReduce cluster of 2100 nodes, was set by Yahoo. In summary, Spark sorted the same data three times faster with ten times fewer machines.

Impressive, right? But wait a bit. The same post also says, “All the sorting took place on disk (HDFS), without using Spark’s in-memory cache.” So was it not all about Spark’s in-memory capabilities? Apache Spark is recognized for its in-memory performance. However, assuming Spark’s outstanding results are due only to this feature is one of the most common misconceptions about Spark’s design. From its genesis, Spark was conceived to achieve superior performance both in memory and on disk. Therefore, Spark operators spill data to disk and keep working when it does not fit in memory.

Scalable

Apache Spark is an open source framework intended to provide parallelized data processing at scale. At the same time, Spark high-level functions can be used to carry out different data processing tasks on datasets of diverse sizes and schemas. This is accomplished by distributing workloads across anything from a few servers to thousands of machines, running on a cluster of computers orchestrated by a cluster manager like Mesos or Hadoop YARN. Therefore, hardware resources can increase linearly with every new computer added.

It is worth clarifying that adding hardware to the cluster does not necessarily produce a linear increase in computing performance, and hence a linear reduction in processing time, because internal cluster management, data transfer, network traffic, and so on also consume resources, subtracting them from Spark’s effective computing capabilities.

Although running in cluster mode leverages Spark’s full distributed capacity, Spark can also run on a single computer, in what is called local mode.
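The sublinear scaling described above can be quantified with Amdahl’s law, a standard model that is not taken from this book: if only a fraction p of a job can be parallelized, n nodes give a speedup of at most 1 / ((1 - p) + p / n). The sketch below assumes a hypothetical workload that is 95% parallelizable and ignores the cluster management and network overhead mentioned above, which would lower real speedups further.

```python
def amdahl_speedup(parallel_fraction: float, nodes: int) -> float:
    """Upper bound on speedup with `nodes` workers when only
    `parallel_fraction` of the work can be parallelized (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / nodes)


if __name__ == "__main__":
    # Hypothetical workload: 95% of the job parallelizes perfectly.
    for n in (1, 10, 100, 1000):
        print(f"{n:>5} nodes -> speedup {amdahl_speedup(0.95, n):6.2f}x")
```

Because the serial 5% never shrinks, the speedup of this hypothetical workload can never exceed 1 / 0.05 = 20x, however many nodes are added.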

If you have searched for information about Spark before, you have probably read something like “Spark runs on commodity hardware.” It is important to understand this term. In the context of big data, commodity hardware does not denote low quality, but rather equipment based on market standards, which is general-purpose, widely available, and hence affordable, as opposed to purpose-built computers.

Ease of Use

Spark makes the life of data engineers and data scientists operating on large datasets easier. Spark provides a single unified engine and API for diverse use cases such as streaming, batch, or interactive data processing. These tools allow it to easily cope with diverse scenarios like ETL processes, machine learning, or graphs and graph-parallel computation. Spark also provides about a hundred operators for data transformation and the notion of DataFrames for manipulating semi-structured data.

Fault Tolerance at Scale

At scale many things can go wrong. In the big data context, fault refers to failure; that is to say, Apache Spark’s fault tolerance represents its capacity to keep operating and to recover after a failure occurs. In large-scale clustered environments, some kind of failure is bound to occur at some point; thus, Spark is designed assuming malfunctions are going to appear sooner or later.

Spark is a distributed computing framework with built-in fault tolerance that takes advantage of a simple data abstraction named an RDD (Resilient Distributed Dataset), which conceals data partitioning and distributed computation from the user. RDDs are immutable collections of objects and are the fundamental building block of Spark’s data structures. They are logically divided into partitions, so they can be processed in parallel across multiple nodes of the cluster.
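To illustrate the two properties just described, immutability and partitioning, the following toy Python model stores data as a tuple of partitions and returns a new object from every transformation. It is not Spark’s API; `ToyRDD` and its methods are hypothetical. Each derived object also remembers its parent and the function that produced it, which mirrors the lineage idea Spark relies on to rebuild a lost partition by recomputation rather than by keeping replicas.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass(frozen=True)
class ToyRDD:
    """Toy model of an RDD: an immutable, partitioned collection that also
    records its lineage (parent + function). Hypothetical, not Spark's API."""

    partitions: Tuple[tuple, ...]
    parent: Optional["ToyRDD"] = None
    func: Optional[Callable] = None  # element-wise function applied to parent

    @staticmethod
    def parallelize(data: list, num_partitions: int) -> "ToyRDD":
        if num_partitions < 1:
            raise ValueError("num_partitions must be at least 1")
        size = -(-len(data) // num_partitions)  # ceiling division
        return ToyRDD(tuple(tuple(data[i * size:(i + 1) * size])
                            for i in range(num_partitions)))

    def map(self, f: Callable) -> "ToyRDD":
        # A transformation never modifies `self`; it builds a new ToyRDD,
        # partition by partition, and remembers how it was derived.
        new_parts = tuple(tuple(f(x) for x in part) for part in self.partitions)
        return ToyRDD(new_parts, parent=self, func=f)

    def recompute_partition(self, index: int) -> tuple:
        # Rebuild one partition by replaying the lineage from the source.
        if self.parent is None:
            return self.partitions[index]  # stands in for re-reading the input
        return tuple(self.func(x) for x in self.parent.recompute_partition(index))

    def collect(self) -> list:
        return [x for part in self.partitions for x in part]


if __name__ == "__main__":
    base = ToyRDD.parallelize([1, 2, 3, 4, 5, 6], num_partitions=3)
    squares = base.map(lambda x: x * x)
    print(squares.collect())               # [1, 4, 9, 16, 25, 36]
    # Pretend partition 1 was lost with its node: rebuild it from lineage.
    print(squares.recompute_partition(1))  # (9, 16)
```

In Spark, recomputing a base partition would mean re-reading the original input; the toy model simply returns the stored source partition.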
The acronym RDD denotes the essence of these objects:

- Resilient (fault-tolerant): The RDD lineage, or Directed Acyclic Graph (DAG), allows partitions lost to node failures to be recomputed, so RDDs can recover from those failures automatically.
- Distributed: RDDs are processed on several nodes in parallel.
- Dataset: The set of data to be processed. Datasets can be the result of parallelizing an existing collection of data, of loading data from an external source such as a database, Hive tables, or CSV, text, or JSON files, or of creating an RDD from another RDD.

Using this simple concept, Spark is able to handle a wide range of data processing workloads that previously needed independent tools.

Spark provides two types of fault tolerance: RDD fault tolerance and streaming write-ahead logs. Spark uses its RDD abstraction to handle failures of worker nodes in the cluster; however, to control failures in the driver process, Spark 1.2 introduced write-ahead logs, which save received data to fault-tolerant storage such as HDFS, S3, or a similar safeguarding tool.

Fault tolerance is also achieved thanks to the so-called DAG, or Directed Acyclic Graph, concept. Formally, a DAG is a set of vertices connected by directed edges that form no cycles. In Spark, a DAG represents RDDs and the operations performed on them: the RDDs are the vertices, while the operations are the edges. Every edge is directed from an earlier state to a later state. This tracking of operations is what makes fault tolerance possible, and it is also used to schedule tasks and to coordinate the cluster worker nodes.

1.2 Spark Unified Analytics Engine

The idea of platform integration is not new in the world of software. Consider, for example, the notion of Customer Relationship Management (CRM) or Enterprise Resource Planning (ERP). The idea of unification is rooted in Spark’s design from inception.
On October 28, 2016, the Association for Computing Machinery (ACM) published the article titled “Apache Spark: a unified engine for big data processing.” In this article, the authors assert that, due to the nature of big data datasets, a standard pipeline must combine MapReduce, SQL-like queries, and iterative machine learning capabilities. The same article states that Apache Spark combines batch processing, graph analysis, and data streaming, integrating into a single engine capabilities formerly split up across different specialized systems such as Apache Impala, Drill, Storm, Dremel, Giraph, and others.

Spark’s simplicity resides in its unified API, which makes the development of applications easier. In contrast to previous systems that required saving intermediate data to permanent storage to transfer it later on to other engines, Spark incorporates many functionalities in the same engine and can apply different modules to the same data, very often in memory. Finally, Spark has facilitated the development of new applications, such as scaling iterative algorithms and integrating graph querying and algorithms in its graph component, GraphX.

The value added by the integration of several functionalities into a single system can be seen, for instance, in modern smartphones. Nowadays, taxi drivers have replaced several devices (GPS navigator, radio, music cassettes, etc.) with a single smartphone. In unifying the functions of these devices, smartphones have eventually enabled new functionalities and service modalities that would not have been possible with any of the devices operating independently.

1.3 How Apache Spark Works

We have already mentioned that Spark scales by distributing computing workload across a large cluster of computers, incorporating fault tolerance and parallel computing. We have also pointed out that it uses a unified engine and API to manage workloads and to interact with applications written in different programming languages. In this section we are going to explain the basic principles Apache Spark uses to perform big data analysis under the hood. We are going to walk you through the Spark Application Model, Spark Execution Model, and Spark Cluster Model.

Spark Application Model

In MapReduce, the highest-level unit of computation is the job; in Spark, the highest-level unit of computation is the application.
In a job we can load data, apply a map function to it, shuffle it, apply a reduce function to it, and finally save the information to a fault-tolerant storage device. In Spark, applications are self-contained entities that execute the user’s code and return the results of the computation. As mentioned before, Spark can run applications using coordinated resources of multiple computers. Spark applications can carry out a single batch job, execute an iterative session composed of several jobs, or act as a long-lived streaming server processing unbounded streams of data. In Spark, a job is launched every time an application invokes an action.

Unlike other technologies like MapReduce, which starts a new process for each task, Spark applications are executed as independent sets of processes under the coordination of the SparkSession object running in the driver program. Spark applications using iterative algorithms benefit from dataset caching, among other capabilities, because those algorithms repeatedly operate on the same data. Finally, Spark applications keep processes running on their behalf on the cluster nodes even when no job is being executed, and multiple tasks can run concurrently inside the same executor. Combined, these two characteristics enable Spark’s rapid task startup and in-memory computing.

Spark Execution Model

The Spark Execution Model contains vital concepts such as the driver program, executors, jobs, tasks, and stages. Understanding these concepts is of paramount importance for fast and efficient Spark application development. Inside Spark, tasks are the smallest execution unit and are executed inside an executor. A task executes a limited number of instructions. For example, loading a file, filtering, or applying a map() function to the data could be considered a task. Stages are collections of tasks running the same code, each of them on a different chunk of a dataset. Reading a dataset, or calling functions such as reduceByKey() or join() that require a shuffle, triggers the creation of a stage. Jobs, on the other hand, comprise several stages.
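The following sketch shows how a linear chain of operations could be cut into stages at shuffle boundaries. It is a simplified model under stated assumptions, not Spark’s DAGScheduler: the operation names are plain strings, `WIDE_OPS` is a hand-picked set of shuffle-inducing operations, and real jobs also contain branches and joins between several parents.

```python
from typing import List

# Assumption for this sketch: a hand-picked set of operations that need a
# shuffle (wide); everything else is treated as narrow and pipelined.
WIDE_OPS = {"reduceByKey", "groupByKey", "join", "distinct", "repartition"}


def split_into_stages(operations: List[str]) -> List[List[str]]:
    """Cut a linear chain of operations into stages, starting a new stage
    at every operation that requires a shuffle."""
    stages: List[List[str]] = []
    current: List[str] = []
    for op in operations:
        if op in WIDE_OPS and current:
            # Shuffle boundary: close the running stage of pipelined ops.
            stages.append(current)
            current = []
        current.append(op)
    if current:
        stages.append(current)
    return stages


if __name__ == "__main__":
    word_count = ["textFile", "flatMap", "map", "reduceByKey", "filter"]
    for number, stage in enumerate(split_into_stages(word_count)):
        print(f"stage {number}: {' -> '.join(stage)}")
```

A word count such as textFile, flatMap, map, reduceByKey, filter therefore yields two stages, and invoking an action such as collect() on its result would launch one job containing both.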
Next, due to their relevance, we are going to study the concepts of the driver program and executors together with the Spark Cluster Model.

Spark Cluster Model

Apache Spark running in cluster mode has a master/worker hierarchical architecture, depicted in Figure 1-1, where the driver program plays the role of master node. The Spark Driver is the central coordinator of the worker nodes (slave nodes), and it is responsible for delivering the results back to the client. Worker nodes are the machines that run executors. A machine can host one or multiple workers, each worker runs in its own JVM (Java Virtual Machine), and each worker can launch one or more executors, as shown in Figure 1-2.

The Spark Driver creates the SparkContext and establishes communication with the Spark execution environment and with the cluster manager, which provides resources for the applications. Spark can work with several cluster managers: Spark’s Standalone Cluster Manager, Apache Mesos, Hadoop YARN, or Kubernetes. Through the cluster manager, the driver acquires processes called executors on the different nodes of the cluster, which provide computing resources and in-memory storage for RDDs. Once resources are available, it sends the application’s code (JAR or Python files) to the acquired executors. Finally, the SparkContext sends tasks to the executors to run the code already placed on the workers; these tasks are launched in separate threads, typically one per executor core. The SparkContext is also used to create RDDs.

Figure 1-1 Apache Spark cluster mode overview

To isolate applications from each other on both sides of the cluster, each driver schedules its own tasks, and tasks from different applications run in different JVM (Java Virtual Machine) processes, called executor processes. By default, executors run under static allocation, meaning they keep running for the entire lifetime of a Spark application, unless dynamic allocation is enabled. To keep track of the executors’ health and status, the driver receives regular heartbeats and partial execution metrics for the ongoing tasks (Figure 1-3). Heartbeats are periodic messages (every 10 s by default) from the executors to the driver.

Figure 1-2 Spark communication architecture with worker nodes and executors

This Execution Model also has some downsides. Data cannot be exchanged between Spark applications (instances of the SparkContext) via the in-memory computation model without first saving the data to an external storage device.

As mentioned before, Spark can be run with a wide variety of cluster managers. That is possible because Spark is a cluster-agnostic platform: as long as a cluster manager is able to obtain executor processes and to provide communication among the architectural components, it is suitable for executing Spark. Because the driver program must listen for and accept incoming connections from its executors for as long as applications are executing on them, the driver must be reachable from the worker nodes at all times.
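Heartbeat-based health tracking can be modeled with a simulated clock, as in the toy class below. The class and executor names are hypothetical, and both intervals are parameters of the sketch. For reference, Spark exposes the corresponding settings as `spark.executor.heartbeatInterval` (10 s by default) and `spark.network.timeout` (120 s by default), and by default the driver treats an executor that stays silent beyond that timeout as lost.

```python
from typing import Dict, List


class HeartbeatMonitor:
    """Toy driver-side tracker: executors report heartbeats, and any executor
    silent for longer than `timeout_s` is considered lost."""

    def __init__(self, timeout_s: float = 120.0):
        self.timeout_s = timeout_s
        self.last_seen: Dict[str, float] = {}

    def heartbeat(self, executor_id: str, now: float) -> None:
        # Record the (simulated) time of the latest heartbeat.
        self.last_seen[executor_id] = now

    def lost_executors(self, now: float) -> List[str]:
        # Executors whose last heartbeat is older than the timeout.
        return sorted(
            eid for eid, seen in self.last_seen.items()
            if now - seen > self.timeout_s
        )


if __name__ == "__main__":
    HEARTBEAT_INTERVAL_S = 10.0
    monitor = HeartbeatMonitor(timeout_s=120.0)
    # Simulated clock: exec-1 keeps reporting, exec-2 stops after t = 30 s.
    for t in range(0, 200, int(HEARTBEAT_INTERVAL_S)):
        monitor.heartbeat("exec-1", t)
        if t <= 30:
            monitor.heartbeat("exec-2", t)
    print(monitor.lost_executors(now=190.0))  # ['exec-2']
```

Passing the current time explicitly, instead of reading a wall clock, keeps the sketch deterministic and easy to test.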

Figure 1-3 Spark’s heartbeat communication between executors and the driver

1.4 Apache Spark Ecosystem

The Apache Spark ecosystem is composed of a unified and fault-tolerant core engine, on top of which are four higher-level libraries that include support for SQL queries, data streaming, machine learning, and graph processing. Those individual libraries can be assembled into sophisticated workflows, making application development easier and improving productivity.

Spark Core

Spark Core is the bedrock on top of which in-memory computing, fault tolerance, and parallel computing are developed. The Core also provides data abstraction via RDDs and, together with the cluster manager, the arrangement of data across the different nodes of the cluster. The high-level libraries (Spark SQL, Streaming, MLlib for machine learning, and GraphX for graph data processing) also run on top of the Core.

Spark APIs

Spark incorporates a series of application programming interfaces (APIs) for different programming languages (SQL, Scala, Java, Python, and R), paving the way for the adoption of Spark by a great variety of professionals with different development, data science, and data engineering backgrounds. For example, Spark SQL permits interaction with RDDs as if we were submitting SQL queries to a traditional relational database. This feature has made it easier for many transactional database administrators and developers to embrace Apache Spark.

Let’s now review each of the four libraries in detail.

Spark SQL and DataFrames and Datasets

Apache Spark provides a data programming abstraction called DataFrames, integrated into the Spark SQL module. If you have experience working with Python and/or R dataframes, Spark DataFrames could look familiar to you; however, Spark DataFrames can be distributed across multiple cluster workers and hence are not constrained to the capacity of a single computer. Spark was designed to tackle very large datasets in the most efficient way.

A DataFrame looks like a relational database table or Excel spreadsheet, with columns of different data types, headers containing the names of the columns, and data stored as rows, as shown in Table 1-1.

Table 1-1 Representation of a DataFrame as a Relational Table or Excel Spreadsheet

| firstName | lastName | profession | birthPlace |
|---|---|---|---|
| Antonio | Dominguez Bandera | Actor | Málaga |
| Rafael | Nadal Parera | Tennis Player | Mallorca |
| Amancio | Ortega Gaona | Businessman | Busdongo de Arbas |
| Pablo | Ruiz Picasso | Painter | Málaga |
| Blas | de Lezo | Admiral | Pasajes |
| Miguel | Serveto y Conesa | Scientist/Theologist | Villanueva de Sigena |

On the other hand, Figure 1-4 depicts an example of a DataFrame.

Figure 1-4 Example of a DataFrame

A Spark DataFrame can also be defined as an integrated data structure optimized for distributed big data processing. A Spark DataFrame is also an extension of the RDD with an easy-to-use API that simplifies writing code.

For the purposes of distributed data processing, the information inside a Spark DataFrame is structured around schemas. A Spark schema contains the name of each column, its data type, and its nullable property. When the nullable property is set to true, that column accepts null values.

SQL has traditionally been the language of choice for many business analysts, data scientists, and advanced users to leverage data. Spark SQL allows these users to query structured datasets as they would have done in front of their traditional data source, hence facilitating adoption.

In Spark, on the other hand, a Dataset is an immutable, strongly typed data structure. Like DataFrames, Datasets are mapped to a data schema, and they add type safety and an object-oriented interface. The Dataset API converts between JVM objects and the tabular data representation by means of encoders. This tabular representation is stored internally in Spark’s Tungsten binary format, which speeds up operations on serialized data and improves in-memory performance. Datasets also provide compile-time type safety, so errors in user-developed code can be caught before the application is executed.

There are several differences between Datasets and DataFrames. The most important one is probably that Datasets are only available in the Java and Scala APIs; Python and R applications cannot use Datasets.

Spark Streaming

Spark Structured Streaming is a high-level library on top of the core Spark SQL engine. Structured Streaming enables Spark’s fault-tolerant, real-time processing of unbounded data streams without users having to think about how the streaming takes place. Spark Structured Streaming provides fault-tolerant, fast, end-to-end, exactly-once, at-scale stream processing. It lets you express a streaming computation in the same fashion as a batch computation on static data. This is achieved by executing the streaming process incrementally and continuously and updating the outputs as the incoming data is ingested.

With Spark 2.3, a new low-latency processing mode called continuous processing was introduced, achieving end-to-end latencies as low as 1 ms with at-least-once² message delivery guarantees. The at-least-once concept is depicted in Figure 1-5. By default, Structured Streaming internally processes the information as micro-batches, meaning data is processed as a series of tiny batch jobs.

Figure 1-5 Depiction of the at-least-once message delivery semantic

Spark Structured Streaming also uses the same concepts of Datasets and DataFrames to represent streaming aggregations, event-time windows, stream-to-batch joins, etc., using different programming language APIs (Scala, Java, Python, and R). This means the same queries can be used without changing the Dataset/DataFrame operations, so we can choose the operational mode that best fits our application requirements without modifying the code.

Spark’s machine learning (ML) library is MLlib; the name “Spark ML,” sometimes used for its DataFrame-based API, is not an official name. MLlib’s goal is to provide out-of-the-box, easy-to-use machine learning capabilities for big data. At a high level, it provides capabilities such as the following:

- Machine learning algorithms like classification, clustering, regression, collaborative filtering, decision trees, random forests, and gradient-boosted trees, among others

- Featurization:
  - Term Frequency-Inverse Document Frequency (TF-IDF): a statistical feature vectorization method used in natural language processing and information retrieval
  - Word2vec: takes a text corpus as input and produces word vectors as output
  - StandardScaler: a very common preprocessing tool that standardizes features by scaling them to unit variance and/or removing the mean
  - Principal component analysis (PCA): an orthogonal transformation that converts possibly correlated variables into a set of linearly uncorrelated variables called principal components
  - And others
- ML Pipelines, to create and tune machine learning pipelines
- Predictive Model Markup Language (PMML), to export models to PMML
- Basic statistics, including summary statistics, correlation between series, stratified sampling, etc.

As of Spark 2.0, the primary Spark machine learning API is the DataFrame-based API in the spark.ml package, superseding the traditional RDD-based API in the spark.mllib package, which is now in maintenance mode.

Spark GraphX

GraphX is a high-level Spark library for graphs and graph-parallel computation, designed to solve graph problems. GraphX extends the Spark RDD with a graph abstraction (a directed multigraph with properties attached to each vertex and edge) to support graph computation, and it includes a collection of graph algorithms and builders that simplify graph analytics.

The Apache Spark ecosystem described in this section is portrayed in Figure 1-6.
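The TF-IDF featurization listed among the MLlib capabilities can be illustrated without Spark. The following minimal plain-Scala sketch computes TF-IDF weights for a toy corpus. It assumes the smoothed IDF formula described in the Spark MLlib feature-extraction guide, idf(t) = log((m + 1) / (df(t) + 1)), where m is the number of documents and df(t) is the number of documents containing term t, multiplied by the raw term count. It is not Spark’s HashingTF/IDF implementation; the object name TfIdfSketch and the corpus are hypothetical.

```scala
// Plain-Scala TF-IDF sketch (no Spark dependency). Assumed formulas:
//   idf(t)       = log((m + 1) / (df(t) + 1))
//   tfidf(t, d)  = count of t in d * idf(t)
// TfIdfSketch, Doc, and the toy corpus are hypothetical names for illustration.
object TfIdfSketch {
  // A document is a sequence of already-tokenized, lowercase terms.
  type Doc = Seq[String]

  // Inverse document frequency of every term in the corpus.
  def idf(corpus: Seq[Doc]): Map[String, Double] = {
    val m = corpus.size
    // df(t): number of documents that contain term t at least once.
    val df: Map[String, Int] =
      corpus.flatMap(_.distinct).groupBy(identity).map { case (t, occ) => t -> occ.size }
    df.map { case (t, n) => t -> math.log((m + 1.0) / (n + 1.0)) }
  }

  // TF-IDF weights per document: raw term count multiplied by the term's IDF.
  def tfIdf(corpus: Seq[Doc]): Seq[Map[String, Double]] = {
    val weights = idf(corpus)
    corpus.map { doc =>
      doc.groupBy(identity).map { case (t, occ) => t -> occ.size * weights(t) }
    }
  }
}

// Toy corpus: each sentence is split into tokens on spaces.
val corpus: Seq[Seq[String]] = Seq(
  "spark processes big data",
  "spark streams data",
  "graphs in spark"
).map(_.split(" ").toSeq)

val weights = TfIdfSketch.tfIdf(corpus)
weights.zipWithIndex.foreach { case (w, i) => println(s"doc $i: $w") }
```

A term that appears in every document, such as spark here, gets an IDF of log(1) = 0 and therefore carries no weight, while rarer terms such as big score higher. In MLlib itself, the same idea is applied to a DataFrame column of tokens with transformers and estimators such as HashingTF or CountVectorizer followed by IDF.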

Figure 1-6 The Apache Spark ecosystem

In Figure 1-7 we can see the Apache Spark ecosystem of connectors.

Figure 1-7 Apache Spark ecosystem of connectors

1.5 Batch vs. Streaming Data

Nowadays, the world generates boundless amounts of data, and the volume continues to grow at an astonishing rate. The volume of data created, captured, copied, and consumed worldwide is expected to exceed 180 ZB in 2025.[3] If this figure does not say much to you, imagine your personal computer or laptop has a 1 TB hard disk (which could be considered standard nowadays). You would need 163,709,046,319.13 such disks (about 164 billion, taking 1 TB as 2^40 bytes) to store that amount of data.[4]

Presently, data is rarely static. Remember the famous three Vs of big data:

- Volume: The unprecedented explosion of data production means that storage is no longer the real challenge; the challenge is generating actionable insights from gigantic datasets.
- Velocity: Data is generated at an ever-accelerating pace, challenging data scientists to find techniques to collect, process, and make use of information as it comes in.
- Variety: Big data is messy, sources of information are heterogeneous, and data formats are diverse. While structured data is neatly arranged in tables, unstructured data comes in a wide variety of forms that do not follow predefined data models, which makes it difficult to store in conventional databases. The vast majority of new data generated today is unstructured, and it can be human-generated or machine-generated. Unstructured data is more difficult to handle and to extract value from. Examples of unstructured data include medical images, video, audio files, sensor data, social media posts, and more.

For businesses, data processing is critical to accelerate insights: analyzing information provides a deep understanding of particular issues. This understanding helps organizations develop business acumen and turn information into actionable insights that can trigger actions to improve operational efficiency, gain competitive advantage, raise revenue, and increase profits. Consequently, in the face of today’s and tomorrow’s business challenges, analyzing data is crucial to discovering actionable insights and staying afloat and profitable.

It is worth mentioning that there are significant differences between data insights, data analytics, and plain data, though the terms are often used interchangeably. Data can be defined as a collection of facts, while data analytics is about arranging and scrutinizing the data. Data insights are about discovering patterns in data. There is also a hierarchical relationship between these three concepts: first, information must be collected and organized; only then can it be analyzed; and finally, data insights can be extracted. This hierarchy is shown graphically in Figure 1-8.

Figure 1-8 Hierarchical relationship between data, data analytics, and data insight extraction

When it comes to data processing, there are many different methodologies, though stream and batch processing are the two most common ones. In this section, we will explain the differences between these two data processing techniques, so let’s define batch and stream processing before diving into the details.

What Is Batch Data Processing?

Batch data processing can be defined as a computing method that executes high-volume data transactions in repetitive batches, in which data collection is separate from processing. In general, batch jobs do not require user interaction once the process is initiated. Batch processing is particularly suitable for end-of-cycle treatment of information, such as end-of-day warehouse stock updates, bank reconciliation, or monthly payroll calculation.

Batch processing has become a common part of corporate back-office processes because it provides significant advantages, such as efficiency and data quality, thanks to the lack of human intervention. On the other hand, batch jobs have some drawbacks. The most obvious is probably that they are complex and critical, because they are part of an organization’s backbone. As a result, developing sophisticated batch jobs can be expensive up front in terms of time and resources, although the investment pays off in the long run. Another disadvantage of batch data processing is that, due to its large scale and criticality, a malfunction is likely to cause significant production shutdowns. Batch processes are also monolithic in nature; thus, they cannot easily adapt to rising data volumes or peaks of demand.

What Is Stream Data Processing?

Stream data processing could be characterized as the process of collecting, manipulating, analyzing, and delivering up-to-date information and keeping the state of data updated while it is still in motion. It could also be defined as a low-latency way of collecting and processing information while it is still in transit. With stream processing, data is processed in real time; thus, there is virtually no delay between data collection and processing, which makes an instant response possible. Stream processing is particularly suitable when data arrives as a continuous, high-velocity flow that changes over time, and when real-time analytics or a response to an event as soon as it occurs is mandatory. Stream data processing enables active intelligence: in-the-moment awareness of important business events and the ability to trigger instantaneous actions.

Analytics is performed on the data instantly or within a fraction of a second, so the user perceives it as a real-time update. Some examples where stream data processing is the best option are credit card fraud detection, real-time system security monitoring, and the use of Internet-of-Things (IoT) sensors. IoT devices make it possible to monitor machinery for anomalies and give operators a heads-up as soon as anomalies or outliers[5] are detected. Social media and customer sentiment analysis are other popular fields of application for stream data processing.

One of the main disadvantages of stream data processing is implementing it at scale. In real life, data streaming is far from perfect, and data often does not flow regularly or smoothly. Imagine a situation in which the data flow is disrupted and some data is missing or corrupted. Then, after normal service is restored, the missing information suddenly arrives at the platform all at once, flooding the processing system. To cope with situations like this, streaming architectures require spare computing, communication, and storage capacity.

Difference Between Stream Processing and Batch Processing

In summary, stream processing involves the treatment and analysis of data in motion in real or near-real time, while batch processing entails handling and analyzing static information at time intervals. In batch jobs, you manipulate information produced in the past and consolidated in permanent storage, commonly known as data at rest. In contrast, stream processing is a low-latency solution that analyzes streams of information while they are still in motion. Incoming data must be processed in flight, in real or near-real time, rather than first saved to permanent storage. Because data is consumed as it is generated, stream processing provides an up-to-the-minute snapshot of the information, enabling a proactive response to events.

Another difference between batch and stream processing is that, in stream processing, only the information considered relevant to the process being analyzed is stored from the very beginning, while data of no immediate interest can be stored on low-cost devices for later analysis with data mining algorithms, machine learning models, etc. A graphical representation of batch vs. stream data processing is shown in Figure 1-9.
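To make the contrast concrete, here is a minimal plain-Scala sketch (it does not use Spark) that applies the same outlier rule, a value outside defined thresholds as in footnote 5, in both styles. The batch version waits until all readings are stored and then analyzes them at rest; the streaming version updates its state as each reading arrives and raises an alert immediately. The Reading type, the 10.0 to 90.0 threshold range, and the names ThresholdRule, batchOutliers, and StreamingDetector are hypothetical.

```scala
// Hypothetical sensor reading: a timestamp (seconds) and a measured value.
final case class Reading(ts: Long, value: Double)

object ThresholdRule {
  // Hypothetical "defined thresholds": values outside [low, high] are outliers.
  val low = 10.0
  val high = 90.0
  def isOutlier(r: Reading): Boolean = r.value < low || r.value > high
}

// Batch: the whole dataset is already at rest and is analyzed in one pass.
def batchOutliers(stored: Seq[Reading]): Seq[Reading] =
  stored.filter(ThresholdRule.isOutlier)

// Stream: readings are handled one at a time while in flight; the running
// state is updated and an alert fires without waiting for the rest of the data.
final class StreamingDetector(alert: Reading => Unit) {
  private var seen = 0L
  private var outliers = 0L

  def onReading(r: Reading): Unit = {
    seen += 1
    if (ThresholdRule.isOutlier(r)) {
      outliers += 1
      alert(r) // immediate reaction, e.g. notify an operator
    }
  }

  // Up-to-the-moment snapshot of the running state: (readings seen, outliers).
  def snapshot: (Long, Long) = (seen, outliers)
}

val readings = Seq(Reading(1, 42.0), Reading(2, 95.5), Reading(3, 50.0), Reading(4, 3.2))

// Batch result is only available after all readings have been collected.
val fromBatch = batchOutliers(readings)
println(s"Batch outliers: $fromBatch")

// Streaming: simulate readings arriving one by one.
val alerts = scala.collection.mutable.ArrayBuffer.empty[Reading]
val detector = new StreamingDetector(r => {
  alerts += r
  println(s"ALERT at t=${r.ts}: value ${r.value} out of range")
})
readings.iterator.foreach(detector.onReading)
println(s"Streaming snapshot (seen, outliers): ${detector.snapshot}")
```

Both approaches find the same outliers; the difference is when the answer becomes available. In Spark, the filter itself could be written once as a DataFrame operation and run either as a batch job or as a Structured Streaming query.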

Figure 1-9 Batch vs. streaming processing representation

1.6 Summary

In this chapter we briefly looked at the Apache Spark architecture, implementation, and ecosystem of applications. We also covered the two types of data processing Spark can handle, batch and streaming, and the main differences between them. In the next chapter, we are going to go through the Spark setup process, the Spark application concept, and the two types of Apache Spark RDD operations: transformations and actions.

Footnotes

[1] www.databricks.com/blog/2014/11/05/spark-officially-sets-a-new-record-in-large-scale-sorting.html

[2] With the at-least-once message delivery semantic, a message can be delivered more than once; however, no message can be lost.

[3] www.statista.com/statistics/871513/worldwide-data-created/

[4] 1 zettabyte = 10^21 bytes.

[5] Parameters out of defined thresholds.

© The Author(s), under exclusive license to APress Media, LLC, part of Springer Nature 2023

A. Antolínez García, Hands-on Guide to Apache Spark 3

https://doi.org/10.1007/978-1-4842-9380-5_2

2. Getting Started with Apache Spark

Alfonso Antolínez García

Madrid, Spain

Now that you have an understanding of what Spark is and how it works, we can get you set up to start using it. In this chapter, I’ll provide download and installation instructions and cover the Spark command-line utilities in detail. I’ll also review Spark application concepts, as well as transformations, actions, immutability, and lazy evaluation.

2.1 Downloading and Installing Apache Spark

The first step to get your Spark installation up and running is to go to the Spark download page and choose Spark release 3.3.0. Then, select the package type “Pre-built for Apache Hadoop 3.3 and later” from the drop-down menu in step 2, and click the “Download Spark” link in step 3 (Figure 2-1).

Figure 2-1 The Apache Spark download page

This downloads the file spark-3.3.0-bin-hadoop3.tgz (the name may differ slightly in your case), a compressed file containing all the binaries you need to run Spark in local mode on your computer or laptop. The great thing about setting up Apache Spark in local mode is that it takes little work: we basically need to install Java and set some environment variables. Let’s see how to do it in several environments.

Installation of Apache Spark on Linux

The following steps install Apache Spark on a Linux system, whether Fedora, Ubuntu, or another distribution.

Step 1: Verifying the Java Installation

Spark runs on the Java Virtual Machine, so a Java installation is mandatory. Type the following command in a terminal window to verify that Java is available and check its version:

$ java -version

If Java is already installed on your system, you will see a message similar to the following:

$ java -version

java version "18.0.2" 2022-07-19

Java(TM) SE Runtime Environment (build 18.0.2+9-61)

Java HotSpot(TM) 64-Bit Server VM (build 18.0.2+9-61, mixed mode, sharing)

Your Java version may be different; in this case, it is Java 18.

If you don’t have Java installed:

1. Open a browser window, and navigate to the Java download page as seen in Figure 2-2.

2. Click the Java file of your choice and save the file to a location (e.g., /home/<user>/Downloads).

Figure 2-2 Java download page

Step 2: Installing Spark

Extract the Spark .tgz file downloaded before. To unpack the spark-3.3.0-bin-hadoop3.tgz file in Linux, open a terminal window, move to the location where the file was downloaded

$ cd PATH/TO/spark-3.3.0-bin-hadoop3.tgz_location

and execute

$ tar -xzvf ./spark-3.3.0-bin-hadoop3.tgz

Step 3: Moving Spark Software Files

You can move the Spark files to an installation directory such as /usr/local/spark:

$ su -

Password:

$ cd /home/<user>/Downloads/

$ mv spark-3.3.0-bin-hadoop3 /usr/local/spark

$ exit

Step 4: Setting Up the Environment for Spark

Add the following lines to the ~/.bashrc file. They set SPARK_HOME to the location of the Spark software files and add the location of the Spark binaries to the PATH variable:

export SPARK_HOME=/usr/local/spark

export PATH=$PATH:$SPARK_HOME/bin

Use the following command to source the ~/.bashrc file and update the environment variables:

$ source ~/.bashrc

Step 5: Verifying the Spark Installation

Run the following command to open the Spark shell:

$ $SPARK_HOME/bin/spark-shell

If Spark is installed successfully, you will see output similar to the following:

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

22/08/29 22:16:45 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

22/08/29 22:16:46 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

Spark context Web UI available at http://192.168.0.16:4041

Spark context available as 'sc' (master = local[*], app id = local-1661804206245).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.3.0
      /_/

Using Scala version 2.12.15 (Java HotSpot(TM) 64-Bit Server VM, Java 18.0.2)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

You can test the installation a bit further using the README.md file in the $SPARK_HOME directory:

scala> val readme_file = sc.textFile("/usr/local/spark/README.md")

readme_file: org.apache.spark.rdd.RDD[String] = /usr/local/spark/README.md MapPartitionsRDD[1] at textFile at <console>:23

The Spark context Web UI is available by typing the following URL in your browser (if port 4040 is already in use, Spark tries the next port and reports the one it bound at startup, as with port 4041 in the output above):

http://localhost:4040

There, you can see the jobs, stages, storage space, and executors used by your small application. Keep in mind that textFile is lazily evaluated: no job runs, and none appears in the Web UI, until you call an action on the RDD, for example readme_file.count(). The result can be seen in Figure 2-3.

Figure 2-3 Apache Spark Web UI showing jobs, stages, storage, environment, and executors used for the application running on the Spark shell

Installation of Apache Spark on Windows

In this section I will show you how to install Apache Spark on Windows 10 and test the installation. Note that you must have a user account with administrator privileges to perform this installation; they are required to install the software and modify the system PATH.

Step 1: Java Installation

As we did in the previous section, the first step is to make sure Java is installed and accessible to Apache Spark. You can verify that Java is installed from the command line: click Start, type cmd, and click Command Prompt. Then, type the following command:

java -version

If Java is installed, you will see output similar to this:

openjdk version "18.0.2.1" 2022-08-18

OpenJDK Runtime Environment (build 18.0.2.1+1-1)

OpenJDK 64-Bit Server VM (build 18.0.2.1+1-1, mixed mode, sharing)
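The java -version check can also be performed from inside a JVM program, for example in the Spark shell, by reading the java.version system property. The following sketch uses a hypothetical helper, javaMajorVersion (not part of Spark or the JDK), to extract the major version from both the legacy 1.x format and the newer format shown in the outputs above, and compares it with the Java versions listed as supported in the Spark 3.3 documentation (8, 11, and 17). A newer JDK such as the Java 18 used in this chapter may still run Spark, as the outputs above show, but it is outside that list.

```scala
// Hypothetical helper: extract the major Java version from a version string.
// Legacy releases report e.g. "1.8.0_341" (major 8); Java 9+ report e.g.
// "18.0.2", "18.0.2.1", or early-access builds such as "19-ea".
def javaMajorVersion(version: String): Int = {
  val parts = version.split("[._\\-+]")
  if (parts(0) == "1" && parts.length > 1) parts(1).toInt else parts(0).toInt
}

// Java versions listed as supported in the Spark 3.3 documentation (assumption
// based on that documentation; check the docs for the release you install).
val sparkSupportedJava = Set(8, 11, 17)

// Inspect the JVM this code runs on (inside spark-shell, the JVM running Spark).
val running = System.getProperty("java.version")
val major = javaMajorVersion(running)
println(s"Running on Java $running (major version $major); " +
  s"listed as supported by Spark 3.3: ${sparkSupportedJava.contains(major)}")
```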
