HBase: Introduction to column-oriented databases


Big Data is getting more attention each day, and new storage paradigms have followed. This presentation gives a quick introduction to HBase, a column-oriented database used by Facebook and other large companies to store high volumes of data and extract knowledge from them.





- A real-world case study demonstrating these principles in action.

- Practical next steps to begin your AI product leadership journey.

This presentation is essential for Product Managers, aspiring PMs, product leaders, innovators, and anyone interested in understanding how to successfully build and manage AI-powered products from idea to impact. The key takeaway is that leading AI products is about creating capabilities (intelligence) that continuously improve and deliver increasing value over time.

Technology Trends in 2025: AI and Big Data Analytics

Technology Trends in 2025: AI and Big Data AnalyticsInData Labs At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

Special Meetup Edition - TDX Bengaluru Meetup #52.pptxshyamraj55 We’re bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and educations, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

"Rebranding for Growth", Anna Velykoivanenko

"Rebranding for Growth", Anna VelykoivanenkoFwdays Since there is no single formula for rebranding, this presentation will explore best practices for aligning business strategy and communication to achieve business goals.

#AdminHour presents: Hour of Code2018 slide deck from 12/6/2018

#AdminHour presents: Hour of Code2018 slide deck from 12/6/2018Lynda Kane Slide Deck from the #AdminHour's Hour of Code session on 12/6/2018 that features learning to code using the Python Turtle library to create snowflakes

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager API

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager APIUiPathCommunity Join this UiPath Community Berlin meetup to explore the Orchestrator API, Swagger interface, and the Test Manager API. Learn how to leverage these tools to streamline automation, enhance testing, and integrate more efficiently with UiPath. Perfect for developers, testers, and automation enthusiasts!

📕 Agenda

Welcome & Introductions

Orchestrator API Overview

Exploring the Swagger Interface

Test Manager API Highlights

Streamlining Automation & Testing with APIs (Demo)

Q&A and Open Discussion

Perfect for developers, testers, and automation enthusiasts!

👉 Join our UiPath Community Berlin chapter: https://community.uipath.com/berlin/

This session streamed live on April 29, 2025, 18:00 CET.

Check out all our upcoming UiPath Community sessions at https://community.uipath.com/events/.

2025-05-Q4-2024-Investor-Presentation.pptx

2025-05-Q4-2024-Investor-Presentation.pptxSamuele Fogagnolo Cloudflare Q4 Financial Results Presentation

Cyber Awareness overview for 2025 month of security

Cyber Awareness overview for 2025 month of securityriccardosl1 Cyber awareness training educates employees on risk associated with internet and malicious emails

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat

Ad

HBase: Introduction to column-oriented databases

- 1. HBase: Introduction to column-oriented databases. Luís Cipriani, @lfcipriani (Twitter, LinkedIn, GitHub, ...). 22nd GURU (2012-02-25), Sao Paulo/Brazil. Friday, 24 February 2012

- 2. ME

- 3. intro: “A Bigtable is a sparse, distributed, persistent multidimensional sorted map”, with "HBase" substituted for "Bigtable" on the slide. Source: http://research.google.com/archive/bigtable.html

- 4. intro > data model

      {                        <-- table
        // ...
        "aaaaa" : {            <-- row
          "A" : {              <-- column family
            "foo" : {          <-- column (qualifier)
              15: "y",         <-- timestamp, value
              4: "m"
            }
            "bar" : {...}
          },
          "B" : {
            "" : {...}
          }
        },
        "aaaab" : {
          "A" : {
            "foo" : {...},
            "bar" : {...},
            "joe" : {...}
          },
          "B" : {
            "" : {...}
          }
        },
        // ...
      }

- 5. intro > data model: (Table, RowKey, Family, Column, Timestamp) → Value
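The coordinate-to-value mapping on the data model slides can be illustrated with plain nested dictionaries. This is only a minimal sketch: `table` and `get_value` are hypothetical names, not part of any HBase API, and the default of returning the newest version when no timestamp is given is an assumption chosen to mirror how versioned cells are usually read.

```python
from typing import Dict, Optional

# Hypothetical in-memory stand-in for one table:
# row key -> column family -> qualifier -> timestamp -> value
Table = Dict[str, Dict[str, Dict[str, Dict[int, str]]]]

# Only the cell spelled out on the slide is reproduced; the columns the
# slide elides with {...} are left out rather than invented.
table: Table = {
    "aaaaa": {
        "A": {
            "foo": {15: "y", 4: "m"},
        },
    },
}


def get_value(table: Table, row: str, family: str, qualifier: str,
              timestamp: Optional[int] = None) -> Optional[str]:
    """Look up (row, family, qualifier, timestamp) -> value.

    Returns None if any coordinate is missing. With no timestamp,
    returns the version with the largest timestamp (an assumption).
    """
    versions = table.get(row, {}).get(family, {}).get(qualifier, {})
    if not versions:
        return None
    if timestamp is None:
        timestamp = max(versions)
    return versions.get(timestamp)


print(get_value(table, "aaaaa", "A", "foo"))     # newest version
print(get_value(table, "aaaaa", "A", "foo", 4))  # explicit older version
```

Because every level is keyed, a missing column simply has no entry, which is what "sparse" means in the definition quoted on slide 3.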

- 6. intro > Hadoop stack
  - Hadoop HDFS (or not)
  - Hadoop MapReduce
  - Hadoop ZooKeeper
  - Hadoop HBase
  - Hadoop Hue, Whirr, etc.

- 7. architecture

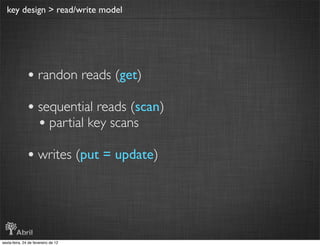

- 8. key design > read/write model
  - random reads (get)
  - sequential reads (scan)
  - partial key scans
  - writes (put = update)
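The four operations on the read/write model slide all follow from keeping rows sorted by row key. The sketch below is a toy, single-process illustration of that idea, not HBase client code: `SortedTable` and its methods are hypothetical names, and the exclusive stop key in `scan` is an assumption made to keep range boundaries unambiguous.

```python
import bisect


class SortedTable:
    """Toy stand-in for a table whose rows are kept sorted by row key."""

    def __init__(self):
        self._keys = []  # row keys, always in sorted order
        self._rows = {}  # row key -> {column: value}

    def put(self, row_key, columns):
        """Write: the same call inserts a new row or updates an existing one."""
        if row_key not in self._rows:
            bisect.insort(self._keys, row_key)
            self._rows[row_key] = {}
        self._rows[row_key].update(columns)

    def get(self, row_key):
        """Random read of one row by its exact key (None if absent)."""
        return self._rows.get(row_key)

    def scan(self, start_key="", stop_key=None):
        """Sequential read of rows with start_key <= key < stop_key."""
        i = bisect.bisect_left(self._keys, start_key)
        while i < len(self._keys):
            key = self._keys[i]
            if stop_key is not None and key >= stop_key:
                break
            yield key, self._rows[key]
            i += 1

    def prefix_scan(self, prefix):
        """Partial key scan: all rows whose key starts with the prefix."""
        i = bisect.bisect_left(self._keys, prefix)
        while i < len(self._keys) and self._keys[i].startswith(prefix):
            yield self._keys[i], self._rows[self._keys[i]]
            i += 1


t = SortedTable()
t.put("user1-msg2", {"body": "b"})
t.put("user1-msg1", {"body": "a"})
t.put("user2-msg1", {"body": "c"})
t.put("user1-msg1", {"read": "yes"})  # put on an existing key acts as an update

print(t.get("user1-msg1"))
print([key for key, _ in t.prefix_scan("user1-")])
```

The partial key scan works only because keys sharing a prefix are adjacent in sorted order, which is why the composition of the row key matters so much in the slides that follow.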

- 9. key design > storage model: http://ofps.oreilly.com/titles/9781449396107/advanced.html

- 10. key design > strategies
  - tall-narrow vs flat-wide
  - partial key scans
  - pagination
  - time series
  - salting
  - field swap
  - randomization
  - secondary indexes
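Three of the strategies listed above (salting, field swap, randomization) are ways of rewriting a row key so that monotonically increasing keys, such as timestamps, do not all land in the same key range. The functions below are a rough sketch under assumptions: the function names, the `-` separator, the bucket count, and the choice of MD5 are all illustrative and not prescribed by the slides.

```python
import hashlib

NUM_BUCKETS = 4  # hypothetical number of salt buckets


def salted_key(key: str, buckets: int = NUM_BUCKETS) -> str:
    """Salting: prefix the key with a bucket number derived from its hash.

    Sequential keys are spread over `buckets` key ranges, and a reader
    can still recompute the prefix from the original key.
    """
    first_byte = hashlib.md5(key.encode("utf-8")).digest()[0]
    return f"{first_byte % buckets}-{key}"


def swapped_key(user_id: str, timestamp_ms: int) -> str:
    """Field swap: lead with a non-sequential field, timestamp second.

    The timestamp is zero-padded so string order matches numeric order.
    """
    return f"{user_id}-{timestamp_ms:013d}"


def randomized_key(key: str) -> str:
    """Randomization: replace the key with its hash.

    Writes are spread evenly, but range scans over the original
    key order are no longer possible.
    """
    return hashlib.md5(key.encode("utf-8")).hexdigest()


print(salted_key("abc"))
print(swapped_key("u42", 1330041600000))
print(randomized_key("abc"))
```

The trade-off runs in one direction: the more the key is scrambled, the better writes are distributed and the less useful sequential and partial key scans become, so the choice depends on which access pattern from the read/write model dominates.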

- 11. key design > example

- 12. development
  - installation modes
    - standalone, pseudo-distributed, distributed
  - JRuby console
  - access
    - Java/JRuby API (more features)
    - entry points: REST, Thrift, Avro, Protocol Buffers
    - there are several other libraries

- 13. cons
  - complex configuration and maintenance
  - hot regions
  - no built-in secondary indexes
  - no built-in transactions
  - complex schema design

- 14. pros
  - distributed
  - scalable (auto-sharding)
  - built on the Hadoop stack
  - handles Big Data
  - high performance for reads and writes
  - no SPOF
  - fault tolerant, no data loss
  - active community

- 15. Abril ID login box redesign. http://engineering.abril.com.br/ http://abr.io/hbase-intro https://pinboard.in/u:lfcipriani/t:hbase/ http://hbase.apache.org/ ? http://shop.oreilly.com/product/0636920014348.do