HBase Lightning Talk

Slides for a lightning talk on HBase that I gave at the Near Infinity (www.nearinfinity.com) spring 2012 conference. The associated sample code is on GitHub at https://github.com/sleberknight/basic-hbase-examples.

![Got byte[]?](https://image.slidesharecdn.com/hbase-lightningtalk-120427164435-phpapp01/85/HBase-Lightning-Talk-15-320.jpg)
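The slide title presumably alludes to the fact that HBase stores row keys, column qualifiers, and cell values as uninterpreted byte arrays and keeps rows sorted by comparing row-key bytes lexicographically. The Python sketch below is not from the talk or its sample code; it assumes an 8-byte big-endian two's-complement encoding (the layout HBase's `Bytes.toBytes(long)` produces) and shows why such keys sort numerically for non-negative values but put negative values last. The helper names are hypothetical.

```python
import struct


def long_to_bytes(value: int) -> bytes:
    """Hypothetical helper: encode a signed 64-bit int as 8 big-endian bytes."""
    return struct.pack(">q", value)


def sort_like_row_keys(keys):
    """Sort byte strings byte by byte as unsigned values.

    Python's bytes comparison is unsigned and lexicographic, which is the
    ordering assumed here for HBase row keys.
    """
    return sorted(keys)


values = [300, 2, 10, 1]
ordered = [struct.unpack(">q", k)[0] for k in sort_like_row_keys(map(long_to_bytes, values))]
print(ordered)  # [1, 2, 10, 300]

# Negative numbers have the sign bit set (high byte 0xFF), so as unsigned
# bytes they sort after every non-negative key.
mixed = [-1, 5, 0]
ordered_mixed = [struct.unpack(">q", k)[0] for k in sort_like_row_keys(map(long_to_bytes, mixed))]
print(ordered_mixed)  # [0, 5, -1]

# Decimal strings used as keys sort lexicographically, not numerically.
print(sorted(str(v).encode() for v in values))  # [b'1', b'10', b'2', b'300']
```

If signed keys must sort numerically, one common workaround is to flip the sign bit before encoding; that is an application-level choice in this sketch, not something assumed to happen automatically.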

Recommended

CouchDB-Lucene

Martin Rehfeld: This document discusses integrating the Apache Lucene full-text search engine with CouchDB. It begins by explaining that while CouchDB supports basic search through MapReduce indexes, implementing a full search engine would require recreating existing work. Lucene is introduced as a high-performance search library that can be used with CouchDB through the couchdb-lucene integration. The document provides examples of Lucene index design documents, querying the index, and integrating search into a Ruby on Rails application with pagination.

Webinar: Strongly Typed Languages and Flexible Schemas

MongoDB: This document discusses strategies for managing flexible schemas in strongly typed languages and databases, including decoupled architectures, object-document mappers (ODMs), versioning, and data migrations. It describes how decoupled architectures allow business logic and data storage to evolve independently. ODMs like Spring Data and Morphia reduce impedance mismatch and handle mapping between objects and database documents. Versioning strategies include incrementing fields, storing full documents, or maintaining separate collections for current and past versions. Migrations involve adding/removing fields, changing names/data types, or extracting embedded documents. The document outlines tradeoffs between these approaches.

Strongly Typed Languages and Flexible Schemas

Norberto Leite: We like to use strongly typed languages and to use them alongside flexible-schema databases. What challenges do we face, and what strategies do we have, for dealing with data coherence and format validation using tools and techniques such as ODMs, versioning, and migrations? We also review the tradeoffs of these strategies.

MongoDB Europe 2016 - Advanced MongoDB Aggregation Pipelines

MongoDB: We will do a deep dive into the powerful query capabilities of MongoDB's Aggregation Framework, and show you how you can use MongoDB's built-in features to inspect the execution and tune the performance of your queries. And, last but not least, we will also give you a brief outlook into MongoDB 3.4's awesome new Aggregation Framework additions.

Entity Relationships in a Document Database at CouchConf Boston

Bradley Holt: Unlike relational databases, document databases like CouchDB and Couchbase do not directly support entity relationships. This talk will explore patterns of modeling one-to-many and many-to-many entity relationships in a document database. These patterns include using an embedded JSON array, relating documents using identifiers, using a list of keys, and using relationship documents.

CouchDB at New York PHP

Bradley Holt: This is a presentation on CouchDB that I gave at the New York PHP User Group. I talked about the basics of CouchDB, its JSON documents, its RESTful API, writing and querying MapReduce views, using CouchDB from within PHP, and scaling.

2011 Mongo FR - MongoDB introduction

antoinegirbal:

- MongoDB is a non-relational, document-oriented database that scales horizontally and uses JSON-like documents with dynamic schemas.

- It supports complex queries, embedded documents and arrays, and aggregation and MapReduce for querying and transforming data.

- MongoDB is used by many large companies for operational databases and analytics due to its scalability, flexibility, and performance.

Webinar: Exploring the Aggregation Framework

MongoDB: Developers love MongoDB because its flexible document model enhances their productivity. But did you know that MongoDB supports rich queries and lets you accomplish some of the same things you currently do with SQL statements? And that MongoDB's powerful aggregation framework makes it possible to perform real-time analytics for dashboards and reports?

Watch this webinar for an introduction to the MongoDB aggregation framework and a walkthrough of what you can do with it. We'll also demo an analysis of U.S. census data.

Chen li asterix db: an open-source platform for big data processing

jins0618: AsterixDB is an open source "Big Data Management System" (BDMS) that provides flexible data modeling, efficient query processing, and scalable analytics on large datasets. It uses a native storage layer built on LSM trees with indexing and transaction support. The Asterix Data Model (ADM) supports semistructured data types like records, lists, and bags. Queries are written in the Asterix Query Language (AQL) which supports features like spatial and temporal predicates. AsterixDB is being used for applications like social network analysis, education analytics, and more.

MongoDB Europe 2016 - ETL for Pros – Getting Data Into MongoDB The Right Way

MongoDB: The document discusses best practices for extracting, transforming, and loading (ETL) large amounts of data into MongoDB. It describes common mistakes made in ETL processes, such as performing nested queries to retrieve and assemble documents, and building documents within the database itself using update operations. The presentation provides a case study comparing these inefficient approaches to loading order, item, and tracking data from relational tables into MongoDB documents.

MongoDB Aggregation Framework

Caserta: These are slides from our Big Data Warehouse Meetup in April. We talked about NoSQL databases: what they are, how they’re used, and where they fit in existing enterprise data ecosystems.

Mike O’Brian from 10gen introduced the syntax and usage patterns for a new aggregation system in MongoDB and gave some demonstrations of aggregation using the new system. The new MongoDB aggregation framework makes it simple to do tasks such as counting, averaging, and finding minima or maxima while grouping by keys in a collection, complementing MongoDB’s built-in map/reduce capabilities.

For more information, visit our website at http://casertaconcepts.com/.

Aligning Web Services with the Semantic Web to Create a Global Read-Write Gra...

Markus Lanthaler: Presentation of the paper "Aligning Web Services with the Semantic Web to Create a Global Read-Write Graph of Data", given at the 9th IEEE European Conference on Web Services (ECOWS 2011) in Lugano, Switzerland.

Despite significant research and development efforts, the vision of the Semantic Web yielding a Web of Data has not yet become reality. Even though initiatives such as Linking Open Data gained traction recently, the Web of Data is still clearly outpaced by the growth of the traditional, document-based Web. Instead of releasing data in the form of RDF, many publishers choose to publish their data in the form of Web services. The reasons for this are manifold. Given that RESTful Web services closely resemble the document-based Web, they are not only perceived as less complex and disruptive, but also provide read-write interfaces to the underlying data. In contrast, the current Semantic Web is essentially read-only, which clearly inhibits network effects and engagement of the crowd. On the other hand, the prevalent use of proprietary schemas to represent the data published by Web services prevents generic browsers or crawlers from accessing and understanding this data; the consequence is islands of data instead of a global graph of data forming the envisioned Semantic Web. We thus propose a novel approach to integrate Web services into the Web of Data by introducing an algorithm to translate SPARQL queries to HTTP requests. The aim is to create a global read-write graph of data and to standardize the mashup development process. We try to keep the approach as familiar and simple as possible to lower the entry barrier and foster the adoption of our approach. Thus, we based our proposal on SEREDASj, a semantic description language for RESTful data services, for making proprietary JSON service schemas accessible.

Webinar: General Technical Overview of MongoDB for Dev Teams

MongoDB: In this talk we will focus on several of the reasons why developers have come to love the richness, flexibility, and ease of use that MongoDB provides. First we will give a brief introduction to MongoDB, comparing and contrasting it to the traditional relational database. Next, we’ll give an overview of the APIs and tools that are part of the MongoDB ecosystem. Then we’ll look at how MongoDB CRUD (Create, Read, Update, Delete) operations work, and also explore query, update, and projection operators. Finally, we will discuss MongoDB indexes and look at some examples of how indexes are used.

A Semantic Description Language for RESTful Data Services to Combat Semaphobia

Markus Lanthaler: The document proposes a semantic description language (SEREDASj) to provide machine-readable descriptions of RESTful web services. It aims to address the lack of standards for describing REST APIs and help combat "semaphobia", the fear of semantics. The language builds on previous work but is tailored specifically for REST by focusing on simplicity and supporting many use cases including discovery and composition of RESTful services.

Aggregation in MongoDB

Kishor Parkhe: This document discusses various MongoDB aggregation operations including count, distinct, match, limit, sort, project, group, and map reduce. It provides examples of how to use each operation in an aggregation pipeline to count, filter, sort, select fields, compute new fields, group documents, and perform more complex aggregations.

NOSQL: the renaissance of databases?

Paolo Bernardi: After years of undisputed dominance by relational databases, we are witnessing an incredible proliferation of alternative solutions.

This presentation will provide a map for navigating the vast sea of non-relational databases without drifting off course.

Data Processing and Aggregation with MongoDB

MongoDB: The document discusses data processing and aggregation using MongoDB. It provides an example of using MongoDB's map-reduce functionality to count the most popular pub names in a dataset of UK pub locations and attributes. It shows the map and reduce functions used to tally the name occurrences and outputs the top 10 results. It then demonstrates performing a similar analysis on just the pubs located in central London using MongoDB's aggregation framework pipeline to match, group and sort the results.
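The map-reduce step described above follows the usual pattern: map each document to (key, value) pairs, group the values by key, and reduce each group to one result. The sketch below emulates that flow in plain Python rather than with MongoDB's JavaScript map and reduce functions; the `map_reduce` helper and the sample pub documents are made up for illustration and are not the talk's dataset.

```python
from collections import defaultdict


def map_reduce(docs, map_fn, reduce_fn):
    """Hypothetical in-memory map-reduce: map, group by key, then reduce."""
    groups = defaultdict(list)
    for doc in docs:
        for key, value in map_fn(doc):
            groups[key].append(value)
    return {key: reduce_fn(key, vals) for key, vals in groups.items()}


# Made-up sample documents standing in for the pub dataset.
pubs = [
    {"name": "The Red Lion", "city": "London"},
    {"name": "The Crown", "city": "Leeds"},
    {"name": "The Red Lion", "city": "York"},
    {"name": "The Royal Oak", "city": "London"},
    {"name": "The Crown", "city": "London"},
    {"name": "The Red Lion", "city": "Bath"},
]

counts = map_reduce(
    pubs,
    map_fn=lambda doc: [(doc["name"], 1)],      # emit (name, 1) per pub
    reduce_fn=lambda key, vals: sum(vals),      # add up the 1s for each name
)

# Top 10 names by count, ties broken alphabetically.
top = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:10]
print(top)  # [('The Red Lion', 3), ('The Crown', 2), ('The Royal Oak', 1)]
```

MongoDB may call reduce more than once for the same key on partial results, so a reduce function there needs to give the same answer whether it receives raw values or previously reduced ones; summing counts has that property.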

MongoDB Aggregation Framework

Tyler Brock: The new MongoDB aggregation framework provides a more powerful and performant way to perform data aggregation compared to the existing MapReduce functionality. The aggregation framework uses a pipeline of aggregation operations like $match, $project, $group and $unwind. It allows expressing data aggregation logic through a declarative pipeline in a more intuitive way without needing to write JavaScript code. This provides better performance than MapReduce as it is implemented in C++ rather than JavaScript.
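A pipeline like the one described above passes documents through stages in order, with each stage consuming the previous stage's output. The Python sketch below imitates `$match`, `$unwind`, and a counting `$group` with generator functions; it is an illustrative model of the pipeline idea, not MongoDB's implementation or driver API, and the stage helpers and `articles` documents are hypothetical.

```python
from collections import defaultdict
from functools import reduce


def match(pred):
    """Like a $match stage: keep documents that satisfy a predicate."""
    return lambda docs: (d for d in docs if pred(d))


def unwind(field):
    """Like $unwind: emit one copy of the document per array element.

    Documents with a missing or empty array produce no output.
    """
    def stage(docs):
        for d in docs:
            for item in d.get(field, []):
                yield {**d, field: item}
    return stage


def group_count(field):
    """Like $group with {_id: "$<field>", count: {$sum: 1}}."""
    def stage(docs):
        counts = defaultdict(int)
        for d in docs:
            counts[d[field]] += 1
        return ({"_id": k, "count": n} for k, n in counts.items())
    return stage


def run_pipeline(docs, stages):
    """Feed each stage's output into the next stage, in order."""
    return list(reduce(lambda acc, stage: stage(acc), stages, docs))


articles = [
    {"title": "a", "published": True, "tags": ["hbase", "nosql"]},
    {"title": "b", "published": False, "tags": ["mongodb"]},
    {"title": "c", "published": True, "tags": ["nosql", "mongodb"]},
]

result = run_pipeline(articles, [
    match(lambda d: d["published"]),
    unwind("tags"),
    group_count("tags"),
])
print(sorted(result, key=lambda r: r["_id"]))
# [{'_id': 'hbase', 'count': 1}, {'_id': 'mongodb', 'count': 1}, {'_id': 'nosql', 'count': 2}]
```

In MongoDB itself the same logic would be written declaratively as a list of stage documents passed to `aggregate`, which is what lets the server execute it natively.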

Agg framework selectgroup feb2015 v2

MongoDB: Developers love MongoDB because its flexible document model enhances their productivity. But did you know that MongoDB supports rich queries and lets you accomplish some of the same things you currently do with SQL statements? And that MongoDB's powerful aggregation framework makes it possible to perform real-time analytics for dashboards and reports?

Attend this webinar for an introduction to the MongoDB aggregation framework and a walkthrough of what you can do with it. We'll also demo using it to analyze U.S. census data.

The Aggregation Framework

MongoDB: MongoDB offers two native data processing tools: MapReduce and the Aggregation Framework. MongoDB’s built-in aggregation framework is a powerful tool for performing analytics and statistical analysis in real-time and generating pre-aggregated reports for dashboarding. In this session, we will demonstrate how to use the aggregation framework for different types of data processing including ad-hoc queries, pre-aggregated reports, and more. At the end of this talk, you should walk away with a greater understanding of the built-in data processing options in MongoDB and how to use the aggregation framework in your next project.

Java/Scala Lab: Борис Трофимов (Boris Trofimov) - Scorching Big Data.

GeeksLab Odessa: The story of how the Scalding framework helps process a very large number of advertising campaigns at one very large American company.

Inside MongoDB: the Internals of an Open-Source Database

Mike Dirolf: The document discusses MongoDB, including how it stores and indexes data, handles queries and replication, and supports sharding and geospatial indexing. Key points covered include how MongoDB stores data in BSON format across data files that grow in size, uses memory-mapped files for data access, supports indexing with B-trees, and replicates operations through an oplog.

Introduction to MongoDB and Hadoop

Steven Francia: An introduction to MongoDB, followed by an introduction to using MongoDB with Hadoop.

This presentation was given at the CT Java Users Group in March 2013.

MongoDB World 2016 : Advanced Aggregation

Joe Drumgoole: This document discusses MongoDB's aggregation framework and provides an example of creating a summary of test results from a public MOT (Ministry of Transport) dataset containing over 25 million records. It shows how to use aggregation pipeline stages like $match, $project, $group to filter the data to only cars from 2013, calculate the age of each car, and then group the results to output statistics on counts, average mileages, and number of passes for each make and age combination. The aggregation framework allows processing large collections in parallel and creating new data from existing data.

Aggregation Framework

MongoDB: This document discusses using MapReduce, the Aggregation Framework, and the Hadoop Connector to perform data analysis and reporting on data stored in MongoDB. It provides examples of using various aggregation pipeline stages like $match, $project, $group to filter, reshape, and group documents. It also covers limitations of the aggregation framework and how the Hadoop Connector can help integrate MongoDB with Hadoop for distributed processing of large datasets across multiple nodes.

Embedding a language into string interpolator

Michael Limansky: My presentation for Scala Days Amsterdam.

How do you build a compile-time string interpolator for a language you have? A use case and step-by-step code examples.

Introduction to MongoDB

Nosh Petigara: Introduction to MongoDB. Uses the example of a simple location-based application to introduce schema design, queries, updates, map-reduce, and deployment.

MongoDB at GUL

Israel Gutiérrez: MongoDB is an open source NoSQL database that uses JSON-like documents with dynamic schemas (BSON format) instead of using tables as in SQL. It allows for embedding related data and flexible querying of this embedded data. Some key features include using JavaScript-style documents, scaling horizontally on commodity hardware, and supporting various languages through its driver interface.

Hbase an introduction

Fabio Fumarola: This document provides an introduction to HBase, including:

- An overview of BigTable, which HBase is modeled after

- Descriptions of the key features of HBase like being distributed, column-oriented, and versioned

- Examples of using the HBase shell to create tables, insert and retrieve data

- An explanation of the Java APIs for administering HBase, inserting/updating/retrieving data using Puts, Gets, and Scans

- Suggestions for setting up HBase with Docker for coding examples
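The data model summarized above (distributed, column-oriented, versioned) can be pictured as a sorted map: row key -> column -> timestamp -> value. The Python sketch below is a hypothetical in-memory toy, not the HBase Java API; `ToyTable`, its methods, and the logical clock are illustrative assumptions. It mimics three behaviors: a put adds a new timestamped version, a get returns the newest versions first, and a scan walks rows in key order from an inclusive start row to an exclusive stop row.

```python
import bisect
from collections import defaultdict
from typing import Optional


class ToyTable:
    """Hypothetical in-memory model of an HBase-style table (not the HBase API).

    Layout: row key (bytes) -> column ("family:qualifier") -> {timestamp: value}.
    Row keys are kept in sorted order.
    """

    def __init__(self, max_versions: int = 3):
        self.max_versions = max_versions
        self._row_keys = []  # sorted list of row keys
        self._rows = {}      # row key -> column -> {timestamp: value}
        self._clock = 0      # logical clock standing in for server timestamps

    def put(self, row: bytes, column: str, value: bytes, ts: Optional[int] = None) -> None:
        if row not in self._rows:
            bisect.insort(self._row_keys, row)
            self._rows[row] = defaultdict(dict)
        if ts is None:
            self._clock += 1
            ts = self._clock
        versions = self._rows[row][column]
        versions[ts] = value
        # Keep only the newest max_versions cells, roughly like a family's VERSIONS setting.
        for old in sorted(versions)[:-self.max_versions]:
            del versions[old]

    def get(self, row: bytes, column: str, versions: int = 1):
        """Return up to `versions` values for one cell, newest first."""
        cells = self._rows.get(row, {}).get(column, {})
        return [cells[t] for t in sorted(cells, reverse=True)[:versions]]

    def scan(self, start: bytes, stop: bytes):
        """Yield (row, {column: latest value}) for start <= row < stop, in key order."""
        lo = bisect.bisect_left(self._row_keys, start)
        hi = bisect.bisect_left(self._row_keys, stop)
        for row in self._row_keys[lo:hi]:
            yield row, {col: self.get(row, col)[0] for col in self._rows[row]}


t = ToyTable(max_versions=2)
t.put(b"row1", "info:name", b"alice")
t.put(b"row1", "info:name", b"alicia")
t.put(b"row1", "info:name", b"ali")  # the oldest version is dropped
t.put(b"row2", "info:name", b"bob")
t.put(b"row3", "info:name", b"carol")
print(t.get(b"row1", "info:name", versions=5))      # [b'ali', b'alicia']
print([row for row, _ in t.scan(b"row1", b"row3")])  # [b'row1', b'row2']
```

Keeping row keys sorted is what makes a range scan a contiguous walk rather than a full-table filter, which is why row-key design matters so much in HBase.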

Managing Social Content with MongoDB

MongoDB: Media owners are turning to MongoDB to drive social interaction with their published content. The way customers consume information has changed and passive communication is no longer enough. They want to comment, share and engage with publishers and their community through a range of media types and via multiple channels whenever and wherever they are. There are serious challenges with taking this semi-structured and unstructured data and making it work in a traditional relational database. This webinar looks at how MongoDB’s schemaless design and document orientation give organisations like the Guardian the flexibility to aggregate social content and scale out.

Similar to HBase Lightning Talk

Cassandra 3.0 - JSON at scale - StampedeCon 2015

StampedeCon: This session will explore the new features in Cassandra 3.0, starting with JSON support. Cassandra now allows JSON to be written directly into Cassandra rows, and rows to be read back as JSON, making it trivial to deploy Cassandra as a component in modern service-oriented architectures.

Cassandra 3.0 also delivers other enhancements to developer productivity: user-defined functions let developers deploy custom application logic server-side in any language conforming to the Java scripting API, including JavaScript. Global indexes allow scaling indexed queries linearly with the size of the cluster, a first for open-source NoSQL databases.

Finally, we will cover the performance improvements in Cassandra 3.0 as well.

Starting with MongoDB

DoThinger: This document provides an introduction to MongoDB, including:

1) MongoDB is a schemaless database that supports features like replication, sharding, indexing, file storage, and aggregation.

2) The main concepts include databases containing collections of documents like tables containing rows in SQL databases, but documents can have different structures.

3) Examples demonstrate inserting, querying, updating, and embedding documents in MongoDB collections.

Building Apps with MongoDB

Building Apps with MongoDBNate Abele Relational databases are central to web applications, but they have also been the primary source of pain when it comes to scale and performance. Recently, non-relational databases (also referred to as NoSQL) have arrived on the scene. This session explains not only what MongoDB is and how it works, but when and how to gain the most benefit.

Forbes MongoNYC 2011

Forbes MongoNYC 2011djdunlop Forbes uses MongoDB to support its distributed global workforce of contributors. It structures content, authors, comments, and promoted content in MongoDB collections. Key data includes articles, blogs, authors, and user comments. MongoDB allows flexible schemas and supports Forbes' needs for a distributed workforce to collaboratively create and manage content.

Modeling JSON data for NoSQL document databases

Modeling JSON data for NoSQL document databasesRyan CrawCour Modeling data in a relational database is easy, we all know how to do it because that's what we've always been taught; But what about NoSQL Document Databases?

Document databases take (much) of what you know and flip it upside down. This talk covers some common patterns for modeling data and how to approach things when working with document stores such as Azure DocumentDB

OSCON 2011 CouchApps

OSCON 2011 CouchAppsBradley Holt CouchApps are web applications built using CouchDB, JavaScript, and HTML5. CouchDB is a document-oriented database that stores JSON documents, has a RESTful HTTP API, and is queried using map/reduce views. This talk will answer your basic questions about CouchDB, but will focus on building CouchApps and related tools.

Why NoSQL Makes Sense

Why NoSQL Makes SenseMongoDB The document discusses NoSQL databases and when they may be preferable to traditional SQL databases. It notes that NoSQL databases are non-relational, support horizontal scaling, and allow for new data models that can improve application development flexibility. Examples showing data structures and queries in MongoDB are provided. The document also summarizes pros and cons of different database types from 2000-2010.

Why NoSQL Makes Sense

Why NoSQL Makes SenseMongoDB The document discusses NoSQL databases and when they may be preferable to traditional SQL databases. It notes that NoSQL databases are non-relational, support horizontal scaling, and allow for new data models that can improve application development flexibility. Examples showing data structures and queries in MongoDB are provided. Reasons for different database preferences depending on needs like transactions, data structure, performance, and agility are also summarized.

ETL for Pros: Getting Data Into MongoDB

ETL for Pros: Getting Data Into MongoDBMongoDB The document discusses different approaches for efficiently migrating large amounts of data into MongoDB. It begins by covering MongoDB's document model and some schema design principles. It then outlines three common approaches to migrating data: 1) nested queries, 2) building documents in the database, and 3) loading all data into memory first before insertion. The document provides examples and compares the performance of each approach.

Introduction to solr

Introduction to solrSematext Group, Inc. Radu Gheorghe gives an introduction to Solr, an open source search engine based on Apache Lucene. He discusses when Solr would be used, such as for product search, as well as when it may not be suitable, such as for sparse data. The presentation covers how Solr works with inverted indexes and scoring documents, as well as features like facets, streaming aggregations, master-slave and SolrCloud architectures. A demo is offered to illustrate Solr functionality.

Big Data: Guidelines and Examples for the Enterprise Decision Maker

Big Data: Guidelines and Examples for the Enterprise Decision MakerMongoDB This document provides an overview of a real-time directed content system that uses MongoDB, Hadoop, and MapReduce. It describes:

- The key participants in the system and their roles in generating, analyzing, and operating on data

- An architecture that uses MongoDB for real-time user profiling and content recommendations, Hadoop for periodic analytics on user profiles and content tags, and MapReduce jobs to update the profiles

- How the system works over time to continuously update user profiles based on their interactions with content, rerun analytics daily to update tags and baselines, and make recommendations based on the updated profiles

- How the system supports both real-time and long-term analytics needs through this integrated approach.

Event stream processing using Kafka streams

Event stream processing using Kafka streamsFredrik Vraalsen This document summarizes a presentation about event stream processing using Kafka Streams. The presentation covers an introduction to Kafka and Kafka Streams, the anatomy of a Kafka Streams application, basic operations like filtering and transforming streams, and more advanced topics like aggregations, joins, windowing, querying state stores, and getting data into and out of Kafka.

Couchbase Tutorial: Big data Open Source Systems: VLDB2018

Couchbase Tutorial: Big data Open Source Systems: VLDB2018Keshav Murthy The document provides an agenda and introduction to Couchbase and N1QL. It discusses Couchbase architecture, data types, data manipulation statements, query operators like JOIN and UNNEST, indexing, and query execution flow in Couchbase. It compares SQL and N1QL, highlighting how N1QL extends SQL to query JSON data.

Webinar: Data Processing and Aggregation Options

Webinar: Data Processing and Aggregation OptionsMongoDB MongoDB scales easily to store mass volumes of data. However, when it comes to making sense of it all what options do you have? In this talk, we'll take a look at 3 different ways of aggregating your data with MongoDB, and determine the reasons why you might choose one way over another. No matter what your big data needs are, you will find out how MongoDB the big data store is evolving to help make sense of your data.

Mongo db presentation

Mongo db presentationJulie Sommerville The document discusses MongoDB database architecture and examples of data structures. It provides examples of adding fields to objects and storing comment replies. It also summarizes MongoDB monitoring tools and concepts like sharding.

Introduction to Apache Tajo: Data Warehouse for Big Data

Introduction to Apache Tajo: Data Warehouse for Big DataGruter Introduction to Apache Tajo: Data Warehouse for Big Data

- by Jihoon Son presented at ApacheCon Big Data Europe 2015

Valtech - Big Data & NoSQL : au-delà du nouveau buzz

Valtech - Big Data & NoSQL : au-delà du nouveau buzzValtech Frederic Sauzet, Consultant, Valtech

[email protected]

Herve Desaunois, Responsable Technique

[email protected]

Ces nouvelles bases de données spécialisées comme Hbase et Neo4j sont de très bonnes solutions pour répondre à de nouvelles problématiques : comme un nombre grandissant de données multi-canales à stocker et à exploiter ou à l’exploitation des graphes sociaux du Web 2.0. Les leaders du Web comme Facebook, Twitter, Google, Adobe, Viadeo se sont emparés de ces solutions très performantes de types NoSQL pour bâtir leur empire de données.

2017 - TYPO3 CertiFUNcation: Mathias Schreiber - TYPO3 CMS 8 What's new

2017 - TYPO3 CertiFUNcation: Mathias Schreiber - TYPO3 CMS 8 What's new TYPO3 CertiFUNcation The document summarizes updates and new features coming to TYPO3. It discusses replacing the existing database abstraction layer (EXT:dbal) with Doctrine DBAL, changes to content restriction containers and usage, new capabilities for configuring the rich text editor via YAML presets, and improvements to the Fluid Styled Content and CSS Styled Content template engines. It also covers changes to the language handling system and new features for integrators working with templates and menus.

Ad

HBase Lightning Talk

- 1. Apache HBase, Scott Leberknight

- 2. BACKGROUND

- 4. "Bigtable is a distributed storage system for managing structured data that is designed to scale to a very large size: petabytes of data across thousands of commodity servers. Many projects at Google store data in Bigtable including web indexing, Google Earth, and Google Finance." - Bigtable: A Distributed Storage System for Structured Data http://labs.google.com/papers/bigtable.html

- 5. "A Bigtable is a sparse, distributed, persistent multidimensional sorted map" - Bigtable: A Distributed Storage System for Structured Data http://labs.google.com/papers/bigtable.html

- 6. wtf?

- 7. distributed, sparse, column-oriented, versioned

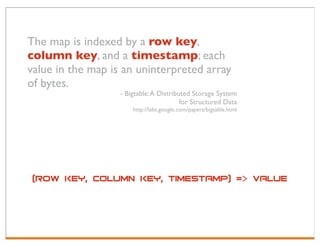

- 8. "The map is indexed by a row key, column key, and a timestamp; each value in the map is an uninterpreted array of bytes." - Bigtable: A Distributed Storage System for Structured Data http://labs.google.com/papers/bigtable.html

(row key, column key, timestamp) => value
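The following toy in-memory sketch makes the "sorted map" description concrete. It models the logical data model as nested sorted maps: row key, then column key, then timestamp, then bytes. It is an illustration only and does not show how HBase stores data on disk. The class name `SortedMapModel` is hypothetical, and keys are `String`s rather than `byte[]` for readability. Versions are kept newest-first, following the Bigtable paper's note that cell versions are stored in decreasing timestamp order.

```java
import java.nio.charset.StandardCharsets;
import java.util.Comparator;
import java.util.NavigableMap;
import java.util.TreeMap;

/**
 * Toy model of the Bigtable/HBase logical data model:
 * (row key, column key, timestamp) => uninterpreted byte[] value.
 * Hypothetical illustration; keys are Strings only for readability.
 */
public class SortedMapModel {

    // row key -> column key -> timestamp (newest first) -> value
    private final NavigableMap<String, NavigableMap<String, NavigableMap<Long, byte[]>>> rows =
            new TreeMap<>();

    /** Stores a value at exactly (row, column, timestamp). */
    public void put(String row, String column, long timestamp, byte[] value) {
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(column, c -> new TreeMap<Long, byte[]>(Comparator.reverseOrder()))
            .put(timestamp, value);
    }

    /** Returns the value at exactly (row, column, timestamp), or null if absent. */
    public byte[] get(String row, String column, long timestamp) {
        NavigableMap<String, NavigableMap<Long, byte[]>> columns = rows.get(row);
        if (columns == null) {
            return null;
        }
        NavigableMap<Long, byte[]> versions = columns.get(column);
        return versions == null ? null : versions.get(timestamp);
    }

    public static void main(String[] args) {
        SortedMapModel table = new SortedMapModel();
        table.put("20120407145045", "info:author", 2L, "John".getBytes(StandardCharsets.UTF_8));
        table.put("20120407145045", "info:author", 6L, "John Doe".getBytes(StandardCharsets.UTF_8));
        byte[] v = table.get("20120407145045", "info:author", 2L);
        System.out.println(new String(v, StandardCharsets.UTF_8)); // John
    }
}
```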

- 9. Key Concepts:
  - row key => 20120407152657
  - column family => "personal:"
  - column key => "personal:givenName", "personal:surname"
  - timestamp => 1239124584398
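A column key consists of the column family name, a colon, and a qualifier. The hypothetical `ColumnKey.parse` helper below is a sketch and is not part of the HBase API. It splits a key on the first colon. An empty qualifier is allowed, as in the `content:` column used in the shell examples.

```java
/**
 * Hypothetical helper: splits a column key "family:qualifier" into its parts.
 * Splits on the first colon only; the qualifier may be empty ("content:").
 */
public final class ColumnKey {
    public final String family;
    public final String qualifier;

    private ColumnKey(String family, String qualifier) {
        this.family = family;
        this.qualifier = qualifier;
    }

    public static ColumnKey parse(String columnKey) {
        int colon = columnKey.indexOf(':');
        if (colon < 0) {
            throw new IllegalArgumentException("expected family:qualifier but got " + columnKey);
        }
        // Everything after the first colon belongs to the qualifier.
        return new ColumnKey(columnKey.substring(0, colon), columnKey.substring(colon + 1));
    }

    public static void main(String[] args) {
        ColumnKey k = ColumnKey.parse("personal:givenName");
        System.out.println(k.family + " / " + k.qualifier); // personal / givenName
    }
}
```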

- 10.

| Row Key | Timestamp | Column Family "info:" | Column Family "content:" |
|---|---|---|---|
| 20120407145045 | t7 | "info:summary" = "An intro to..." | |
| | t6 | "info:author" = "John Doe" | |
| | t5 | | "Google's Bigtable is..." |
| | t4 | | "Google Bigtable is..." |
| | t3 | "info:category" = "Persistence" | |
| | t2 | "info:author" = "John" | |
| | t1 | "info:title" = "Intro to Bigtable" | |
| 20120320162535 | t4 | "info:category" = "Persistence" | |
| | t3 | | "CouchDB is..." |
| | t2 | "info:author" = "Bob Smith" | |
| | t1 | "info:title" = "Doc-oriented..." | |

- 11. Get row 20120407145045... (the slide repeats the table from slide 10)
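A row read that does not request extra versions returns only the newest cell in each column. Below is a minimal sketch of that selection. It assumes the t1..t7 timestamps from slide 10 can be modeled as the numbers 1..7, and it uses the hypothetical class `LatestVersionGet`, which is not HBase code. For row 20120407145045, the sketch yields "John Doe" for `info:author` (t6) rather than the older "John" (t2).

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LatestVersionGet {

    public static final class Cell {
        final String column;
        final long timestamp;
        final String value;

        public Cell(String column, long timestamp, String value) {
            this.column = column;
            this.timestamp = timestamp;
            this.value = value;
        }
    }

    /** For every column, keeps only the value of the cell with the largest timestamp. */
    public static Map<String, String> latest(List<Cell> cells) {
        Map<String, Cell> newest = new TreeMap<>();
        for (Cell c : cells) {
            Cell current = newest.get(c.column);
            if (current == null || c.timestamp > current.timestamp) {
                newest.put(c.column, c);
            }
        }
        Map<String, String> result = new TreeMap<>();
        newest.forEach((column, cell) -> result.put(column, cell.value));
        return result;
    }

    /** The cells of row 20120407145045 from slide 10 (t1..t7 modeled as 1..7). */
    public static List<Cell> sampleRow() {
        return Arrays.asList(
            new Cell("info:summary", 7, "An intro to..."),
            new Cell("info:author", 6, "John Doe"),
            new Cell("content:", 5, "Google's Bigtable is..."),
            new Cell("content:", 4, "Google Bigtable is..."),
            new Cell("info:category", 3, "Persistence"),
            new Cell("info:author", 2, "John"),
            new Cell("info:title", 1, "Intro to Bigtable"));
    }

    public static void main(String[] args) {
        latest(sampleRow()).forEach((col, val) -> System.out.println(col + " = " + val));
    }
}
```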

- 12. "Use HBase when you need random, realtime read/write access to your Big Data. This project's goal is the hosting of very large tables -- billions of rows X millions of columns -- atop clusters of commodity hardware. HBase is an open-source, distributed, versioned, column-oriented store modeled after Google's Bigtable." - http://hbase.apache.org/

- 13. HBase Shell

```
hbase(main):001:0> create 'blog', 'info', 'content'
0 row(s) in 4.3640 seconds

hbase(main):002:0> put 'blog', '20120320162535', 'info:title', 'Document-oriented storage using CouchDB'
0 row(s) in 0.0330 seconds

hbase(main):003:0> put 'blog', '20120320162535', 'info:author', 'Bob Smith'
0 row(s) in 0.0030 seconds

hbase(main):004:0> put 'blog', '20120320162535', 'content:', 'CouchDB is a document-oriented...'
0 row(s) in 0.0030 seconds

hbase(main):005:0> put 'blog', '20120320162535', 'info:category', 'Persistence'
0 row(s) in 0.0030 seconds

hbase(main):006:0> get 'blog', '20120320162535'
COLUMN          CELL
 content:       timestamp=1239135042862, value=CouchDB is a doc...
 info:author    timestamp=1239135042755, value=Bob Smith
 info:category  timestamp=1239135042982, value=Persistence
 info:title     timestamp=1239135042623, value=Document-oriented...
4 row(s) in 0.0140 seconds
```

- 14. HBase Shell

```
hbase(main):015:0> get 'blog', '20120407145045', {COLUMN=>'info:author', VERSIONS=>3 }
 timestamp=1239135325074, value=John Doe
 timestamp=1239135324741, value=John
2 row(s) in 0.0060 seconds

hbase(main):016:0> scan 'blog', { STARTROW => '20120300', STOPROW => '20120400' }
ROW              COLUMN+CELL
 20120320162535  column=content:, timestamp=1239135042862, value=CouchDB is...
 20120320162535  column=info:author, timestamp=1239135042755, value=Bob Smith
 20120320162535  column=info:category, timestamp=1239135042982, value=Persistence
 20120320162535  column=info:title, timestamp=1239135042623, value=Document...
4 row(s) in 0.0230 seconds
```
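The scan above depends on row keys being kept in sorted order, with `STARTROW` inclusive and `STOPROW` exclusive. Here is a sketch of the same range selection over a `TreeMap`. It assumes Java `String` comparison can stand in for HBase's byte-wise ordering, which holds here because the keys are ASCII digits. `RowRangeScan` is a hypothetical name.

```java
import java.util.NavigableMap;
import java.util.SortedMap;
import java.util.TreeMap;

public class RowRangeScan {

    /** Returns the rows whose keys fall in [startRow, stopRow), in sorted order. */
    public static SortedMap<String, String> scan(NavigableMap<String, String> rows,
                                                 String startRow, String stopRow) {
        return rows.subMap(startRow, true, stopRow, false);
    }

    public static void main(String[] args) {
        NavigableMap<String, String> blog = new TreeMap<>();
        blog.put("20120320162535", "Doc-oriented...");
        blog.put("20120407145045", "Intro to Bigtable");
        // Only the March 2012 row falls inside ['20120300', '20120400')
        System.out.println(scan(blog, "20120300", "20120400").keySet()); // [20120320162535]
    }
}
```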

- 15. Got byte[]?
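Every row key, qualifier, and value is just a `byte[]`, so ordering is byte-wise: row keys are compared lexicographically, with bytes treated as unsigned. The sketch below uses a hand-written comparator (`RowKeyOrder` is a hypothetical name, not HBase's `Bytes` class). It shows why the key "10" sorts before "9", and why fixed-width, zero-padded numeric keys are a common fix.

```java
import java.nio.charset.StandardCharsets;

public class RowKeyOrder {

    /** Lexicographic comparison treating each byte as unsigned (0..255). */
    public static int compareUnsigned(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        // A proper prefix sorts before the longer array.
        return a.length - b.length;
    }

    public static byte[] utf8(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(compareUnsigned(utf8("10"), utf8("9")) < 0);  // true: "10" sorts first
        System.out.println(compareUnsigned(utf8("09"), utf8("10")) < 0); // true: zero-padding restores numeric order
    }
}
```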

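- 16.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.client.HBaseAdmin;

// Create a new table
Configuration conf = HBaseConfiguration.create();
HBaseAdmin admin = new HBaseAdmin(conf);
String tableName = "people";
HTableDescriptor desc = new HTableDescriptor(tableName);
desc.addFamily(new HColumnDescriptor("personal"));
desc.addFamily(new HColumnDescriptor("contactinfo"));
desc.addFamily(new HColumnDescriptor("creditcard"));
admin.createTable(desc);
System.out.printf("%s is available? %b%n", tableName, admin.isTableAvailable(tableName));
```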

- 17.

```java
import static org.apache.hadoop.hbase.util.Bytes.toBytes;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;

// Add some data into 'people' table
Configuration conf = HBaseConfiguration.create();
HTable table = new HTable(conf, "people");
Put put = new Put(toBytes("connor-john-m-43299"));
put.add(toBytes("personal"), toBytes("givenName"), toBytes("John"));
put.add(toBytes("personal"), toBytes("mi"), toBytes("M"));
put.add(toBytes("personal"), toBytes("surname"), toBytes("Connor"));
put.add(toBytes("contactinfo"), toBytes("email"), toBytes("[email protected]"));
table.put(put);
table.flushCommits();
table.close();
```

- 18. Finding data:
  - get (by row key)
  - scan (by row key ranges, filtering)

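- 19.

```java
import static org.apache.hadoop.hbase.util.Bytes.toBytes;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Result;

// Get a row. Ask for only the data you need.
Configuration conf = HBaseConfiguration.create();
HTable table = new HTable(conf, "people");
Get get = new Get(toBytes("connor-john-m-43299"));
get.setMaxVersions(2);  // up to two versions of each requested column
get.addFamily(toBytes("personal"));
get.addColumn(toBytes("contactinfo"), toBytes("email"));
Result result = table.get(get);
table.close();
```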
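`setMaxVersions(2)` requests up to two versions per requested column, newest first. The toy function below illustrates that selection. It assumes a column's versions are held in a `TreeMap` keyed by timestamp, and it uses the two `info:author` versions from the shell example on slide 14. `MaxVersions` is a hypothetical name. The number of versions HBase actually retains is also limited by the column family's configured maximum.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

public class MaxVersions {

    /** Returns up to maxVersions values for one column, newest timestamp first. */
    public static List<String> newest(NavigableMap<Long, String> versions, int maxVersions) {
        List<String> out = new ArrayList<>();
        for (String value : versions.descendingMap().values()) {
            if (out.size() >= maxVersions) {
                break;
            }
            out.add(value);
        }
        return out;
    }

    public static void main(String[] args) {
        // The two versions of info:author shown on slide 14
        NavigableMap<Long, String> author = new TreeMap<>();
        author.put(1239135324741L, "John");
        author.put(1239135325074L, "John Doe");
        System.out.println(newest(author, 2)); // [John Doe, John]
        System.out.println(newest(author, 1)); // [John Doe]
    }
}
```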

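- 20.

```java
import static org.apache.hadoop.hbase.util.Bytes.toBytes;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;

// Update existing values, and add a new one.
// An "update" writes new timestamped cells; older versions stay readable
// up to the column family's maximum number of versions.
Configuration conf = HBaseConfiguration.create();
HTable table = new HTable(conf, "people");
Put put = new Put(toBytes("connor-john-m-43299"));
put.add(toBytes("personal"), toBytes("surname"), toBytes("Smith"));
put.add(toBytes("contactinfo"), toBytes("email"), toBytes("[email protected]"));
put.add(toBytes("contactinfo"), toBytes("address"), toBytes("San Diego, CA"));
table.put(put);
table.flushCommits();
table.close();
```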

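- 21.

```java
import static org.apache.hadoop.hbase.util.Bytes.toBytes;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.filter.PageFilter;

// Scan rows...
Configuration conf = HBaseConfiguration.create();
HTable table = new HTable(conf, "people");
long numRowsPerPage = 10;  // illustrative page size
Scan scan = new Scan(toBytes("smith-"));  // start row (inclusive); no stop row is set
scan.addColumn(toBytes("personal"), toBytes("givenName"));
scan.addColumn(toBytes("contactinfo"), toBytes("email"));
scan.addColumn(toBytes("contactinfo"), toBytes("address"));
scan.setFilter(new PageFilter(numRowsPerPage));
ResultScanner scanner = table.getScanner(scan);
for (Result result : scanner) {
    // process result...
}
scanner.close();
table.close();
```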
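One caveat on `PageFilter`: its Javadoc notes that the filter is applied separately on each region server, so a client can receive more rows than the page size. A common pattern is to also count rows on the client. The next page then starts just after the last row key seen, which you get by appending a zero byte to that key. The sketch below models this loop over a `TreeMap`. `String` keys (with `(char) 0` appended) stand in for `byte[]` row keys. `PagedScan` and the sample `smith-...` keys are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

public class PagedScan {

    /** Returns at most pageSize row keys, starting at startRow (inclusive). */
    public static List<String> page(NavigableMap<String, String> rows, String startRow, int pageSize) {
        List<String> keys = new ArrayList<>();
        for (String key : rows.tailMap(startRow, true).keySet()) {
            if (keys.size() >= pageSize) {
                break; // enforce the page size on the client side as well
            }
            keys.add(key);
        }
        return keys;
    }

    /** Smallest String strictly greater than lastRow: lastRow followed by a zero char. */
    public static String nextStartRow(String lastRow) {
        return lastRow + (char) 0;
    }

    public static void main(String[] args) {
        NavigableMap<String, String> people = new TreeMap<>();
        for (String key : new String[] {"smith-ann-1", "smith-bob-2", "smith-cal-3", "smith-dee-4", "smith-eve-5"}) {
            people.put(key, "...");
        }
        String start = "smith-";
        int pageSize = 2;
        while (true) {
            List<String> keys = page(people, start, pageSize);
            System.out.println(keys);
            if (keys.size() < pageSize) {
                break; // a short page means there is nothing left
            }
            start = nextStartRow(keys.get(keys.size() - 1));
        }
    }
}
```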

- 22. Data Modeling
  - Row key design
  - Match to data access patterns
  - Wide vs. narrow rows
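Row key design usually means encoding the main access path into the key itself. The hypothetical helpers below are illustrative only and do not come from the talk's sample code. The first builds a composite key in the `surname-givenName-mi-id` shape of the slide 17 row key, so a scan starting at "smith-" finds all Smiths. The second builds a zero-padded reversed timestamp, a widely used trick for making the newest rows sort first.

```java
import java.util.Locale;

public class RowKeys {

    /** Hypothetical composite key: surname-givenName-middleInitial-id, lower-cased. */
    public static String personKey(String surname, String givenName, String middleInitial, long id) {
        return String.join("-",
                surname.toLowerCase(Locale.ROOT),
                givenName.toLowerCase(Locale.ROOT),
                middleInitial.toLowerCase(Locale.ROOT),
                Long.toString(id));
    }

    /** Fixed-width (19-digit) reversed timestamp: newer times sort lexicographically first. */
    public static String reverseTimestampKey(long epochMillis) {
        return String.format(Locale.ROOT, "%019d", Long.MAX_VALUE - epochMillis);
    }

    public static void main(String[] args) {
        System.out.println(personKey("Connor", "John", "M", 43299L)); // connor-john-m-43299
        String older = reverseTimestampKey(1239135324741L);
        String newer = reverseTimestampKey(1239135325074L);
        System.out.println(newer.compareTo(older) < 0); // true
    }
}
```

One trade-off: a surname that itself contains "-" makes the prefix ambiguous. Real designs therefore often use a delimiter that cannot appear in the data, or fixed-width fields.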

- 23. References
  - shop.oreilly.com/product/0636920014348.do
  - http://shop.oreilly.com/product/0636920021773.do (3rd edition publication date is May 29, 2012)
  - hbase.apache.org

- 24. (my info)
  - scott.leberknight at nearinfinity.com
  - www.nearinfinity.com/blogs/
  - twitter: sleberknight