How to build 1000 microservices with Kafka and thrive

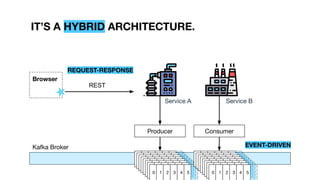

This talk is about the Wix ecosystem for event-driven architecture on top of Kafka. I share the best practices, SDKs, and tools we have created so that we could scale our distributed system to more than 1000 microservices.

Recommended

SFBigAnalytics_20190724: Monitor kafka like a Pro

Uploaded by Chester Chen. Kafka operators need to provide guarantees to the business that Kafka is working properly and delivering data in real time, and they need to identify and triage problems so they can solve them before end users notice them. This elevates Kafka monitoring from a nice-to-have to an operational necessity. In this talk, Kafka operations experts Xavier Léauté and Gwen Shapira share their best practices for monitoring Kafka and the streams of events flowing through it: how to detect duplicates, catch buggy clients, and triage performance issues. In short, how to keep the business’s central nervous system healthy and humming along, like a Kafka pro.

Speakers: Gwen Shapira, Xavier Léauté (Confluent)

Gwen is a software engineer at Confluent working on core Apache Kafka. She has 15 years of experience working with code and customers to build scalable data architectures. She currently specializes in building real-time, reliable data processing pipelines using Apache Kafka. Gwen is an author of “Kafka: The Definitive Guide” and “Hadoop Application Architectures”, and a frequent presenter at industry conferences. Gwen is also a committer on the Apache Kafka and Apache Sqoop projects.

Xavier Léauté, one of the first engineers on the Confluent team, is responsible for analytics infrastructure, including real-time analytics in Kafka Streams. He was previously a quantitative researcher at BlackRock. Prior to that, he held various research and analytics roles at Barclays Global Investors and MSCI.
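As a rough illustration of one check mentioned above, detecting duplicates, the sketch below counts how often each event ID appears in a batch of consumed records. It assumes every event carries a producer-assigned unique ID (the `event_id` field and the sample records are hypothetical; Kafka does not add such an ID for you), and it is not the speakers' method.

```python
from collections import Counter
from typing import Iterable, Mapping


def find_duplicates(events: Iterable[Mapping[str, str]]) -> dict[str, int]:
    """Return {event_id: count} for every ID seen more than once.

    Assumes each event carries a hypothetical producer-assigned 'event_id';
    Kafka itself does not add such an ID to records.
    """
    counts = Counter(e["event_id"] for e in events)
    return {eid: n for eid, n in counts.items() if n > 1}


consumed = [
    {"event_id": "a1", "payload": "order-created"},
    {"event_id": "b2", "payload": "order-paid"},
    {"event_id": "a1", "payload": "order-created"},  # e.g. re-sent after a producer retry
]
print(find_duplicates(consumed))  # {'a1': 2}
```

A real monitor would bound the set of IDs it remembers, for example to a time window, rather than keeping every ID forever.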

Running Kafka On Kubernetes With Strimzi For Real-Time Streaming Applications

Uploaded by Lightbend. In this talk by Sean Glover, Principal Engineer at Lightbend, we will review how the Strimzi Kafka Operator, a supported technology in Lightbend Platform, makes many operational tasks in Kafka easy, such as the initial deployment and updates of a Kafka and ZooKeeper cluster.

See the blog post containing the YouTube video here: https://www.lightbend.com/blog/running-kafka-on-kubernetes-with-strimzi-for-real-time-streaming-applications

8 Lessons Learned from Using Kafka in 1000 Scala microservices - Scale by the...

Uploaded by Natan Silnitsky. Kafka is the bedrock of Wix's distributed microservices system. For the last 5 years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1500 microservices.

We’ve managed to achieve greater decoupling and independence for our various services and dev teams, which have very different use cases, while keeping a single, uniform infrastructure in place.

In these slides you will learn about 8 key decisions and steps you can take in order to safely scale up your Kafka-based system. These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting.

* How to support a growing amount of traffic and developers.

Follow the (Kafka) Streams

Uploaded by confluent. Speaker: Mario Molina, Software Engineer, Datio

Kafka Streams is an open source JVM library for building event streaming applications on top of Apache Kafka. Its goal is to allow programmers to create efficient, real-time, streaming applications and perform analysis and operations on the incoming data.

In this presentation we’ll cover the main features of Kafka Streams and do a live demo!

This demo will be partially on Confluent Cloud. If you haven’t already signed up, you can try Confluent Cloud for free: you get $200 of usage every month for your first three months ($600 in total). Get more information and claim it here: https://cnfl.io/cloud-meetup-free

https://www.meetup.com/Mexico-Kafka/events/271972045/

Strimzi - Where Apache Kafka meets OpenShift - OpenShift Spain MeetUp

Uploaded by José Román Martín Gil. Apache Kafka is the data streaming broker most used by companies. It can easily manage millions of messages, and it is the base of many architectures built on events, microservices, orchestration, and now cloud environments. OpenShift is the most widely adopted Platform as a Service (PaaS). It is based on Kubernetes, and it helps companies easily deploy any kind of workload in a cloud environment. Thanks to many of its features, it is the base of many architectures built on stateless applications for creating new cloud-native applications. Strimzi is an open source community that implements a set of Kubernetes Operators to help you manage and deploy Apache Kafka brokers in OpenShift environments.

These slides introduce Strimzi as a new component on OpenShift for managing your Apache Kafka clusters.

Slides used at OpenShift Meetup Spain:

- https://www.meetup.com/es-ES/openshift_spain/events/261284764/

Making Kafka Cloud Native | Jay Kreps, Co-Founder & CEO, Confluent

Uploaded by HostedbyConfluent. A talk discussing the rise of Apache Kafka and data in motion, plus the impact of cloud-native data systems. This talk will cover how Kafka needs to evolve to keep up with the future of the cloud, what this means for distributed systems engineers, and what work is being done to truly make Kafka cloud native.

Battle-tested event-driven patterns for your microservices architecture - Sca...

Uploaded by Natan Silnitsky. During the past couple of years I’ve implemented, or witnessed implementations of, several key event-driven messaging patterns on top of Kafka that have helped create a robust distributed microservices system at Wix, one that can easily handle increasing traffic and storage needs across many different use cases.

In this talk I will share these patterns with you, including:

* Consume and Project (data decoupling)

* End-to-end Events (Kafka+websockets)

* In memory KV stores (consume and query with 0-latency)

* Events transactions (Exactly Once Delivery)
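To make the consume-and-project and in-memory KV store patterns listed above concrete, here is a minimal sketch, assuming a keyed stream of string values: the consumer applies each event to a local dictionary, keeping only the latest value per key, so reads are served from memory. The `InMemoryKVStore` class and the sample events are hypothetical and no Kafka client is involved; in practice `apply` would be called for every polled record.

```python
from typing import Optional


class InMemoryKVStore:
    """Projection of a keyed event stream into a local dict (a sketch)."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def apply(self, key: str, value: Optional[str]) -> None:
        # Last write wins per key; a None value acts as a tombstone,
        # mirroring how log-compacted Kafka topics mark deletions.
        if value is None:
            self._data.pop(key, None)
        else:
            self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        # Served from local memory: no network round trip at query time.
        return self._data.get(key)


store = InMemoryKVStore()
for key, value in [("site-1", "draft"), ("site-2", "draft"),
                   ("site-1", "published"), ("site-2", None)]:
    store.apply(key, value)

print(store.get("site-1"))  # published
print(store.get("site-2"))  # None
```

Because the projection is rebuilt from the topic, a restarted service can replay the log to recover its state instead of querying the owning service.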

Flexible Authentication Strategies with SASL/OAUTHBEARER (Michael Kaminski, T...

Uploaded by confluent. In order to maximize Kafka accessibility within an organization, Kafka operators must choose an authentication option that balances security with ease of use. Kafka has historically been limited to a small number of authentication options that are difficult to integrate with a Single Sign-On (SSO) strategy, such as mutual TLS, basic auth, and Kerberos. The arrival of SASL/OAUTHBEARER in Kafka 2.0.0 gives system operators a flexible framework for integrating Kafka with their existing authentication infrastructure. Ron Dagostino (State Street Corporation) and Mike Kaminski (The New York Times) team up to discuss SASL/OAUTHBEARER and its real-world applications. Ron, who contributed the feature to core Kafka, explains the origins and intricacies of its development along with related security changes, including client re-authentication (merged and scheduled for release in v2.2.0) and the plans for supporting SASL/OAUTHBEARER in librdkafka-based clients. Mike Kaminski, a developer on The Publishing Pipeline team at The New York Times, talks about how his team leverages SASL/OAUTHBEARER to break down silos between teams by making it easy for product owners to connect to the Publishing Pipeline’s Kafka cluster.

Polyglot, fault-tolerant event-driven programming with kafka, kubernetes and ...

Uploaded by Natan Silnitsky. At Wix, we have created a universal event-driven programming infrastructure on top of the Kafka message broker.

This infrastructure ensures that messages are eventually consumed and produced successfully, no matter what failures it encounters.

In this talk, you will learn about the features we introduced to make sure our distributed system can safely handle ever-growing message throughput in a fault-tolerant manner.

You will be introduced to techniques such as retry topics, local persistent queues, and cooperative fibers that help make your flows more resilient and performant.

You will also learn how to make this infrastructure work for every programming-language tech stack, with optimal resource management, using the power of Kubernetes and gRPC.

Finally, you will see when to use a client library, and when to deploy an external pod (DaemonSet) or even a sidecar.
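A minimal sketch of the retry-topic idea, assuming a fixed chain of retry topics with increasing delays followed by a dead-letter topic. The `-retry-N` / `-dlq` naming and the backoff values are hypothetical, not Wix's actual conventions. Forwarding a failed record to a separate topic lets the original partition keep flowing instead of blocking on one bad message.

```python
from dataclasses import dataclass, field


@dataclass
class RetryPolicy:
    # Hypothetical delay (seconds) before each retry topic's consumer re-runs the handler.
    backoffs: list[int] = field(default_factory=lambda: [1, 10, 60])


def next_destination(topic: str, attempt: int, policy: RetryPolicy) -> tuple[str, int]:
    """Where to forward a record whose handler failed on `attempt` (0 = original topic).

    Returns (destination_topic, delay_seconds). The topic naming is an assumption.
    """
    if attempt < len(policy.backoffs):
        return f"{topic}-retry-{attempt}", policy.backoffs[attempt]
    # Out of retries: park the record in a dead-letter topic for inspection.
    return f"{topic}-dlq", 0


policy = RetryPolicy()
for attempt in range(4):
    print(attempt, next_destination("orders", attempt, policy))
# 0 ('orders-retry-0', 1)
# 1 ('orders-retry-1', 10)
# 2 ('orders-retry-2', 60)
# 3 ('orders-dlq', 0)
```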

Tips & Tricks for Apache Kafka®

Uploaded by confluent. Speakers: Kat Grigg, Senior Customer Success Architect, Confluent, and Jen Snipes, Senior Customer Success Architect, Confluent

This presentation will cover tips and best practices for Apache Kafka. In this talk, we will cover the basic internals of Kafka and how these components fit together, including brokers, topics, partitions, consumers and producers, replication, and ZooKeeper. We will talk about the major categories of operations you need to set up and monitor, including configuration, deployment, maintenance, monitoring, and debugging.

https://www.meetup.com/KafkaBayArea/events/270915296/
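Partitions are the unit of ordering in Kafka: records with the same key go to the same partition, so they are consumed in order. The sketch below shows that mapping under one loud assumption: Kafka's default partitioner hashes keys with murmur2, while this example uses CRC32 from Python's standard library, so the partition numbers it prints will not match a real cluster.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index in [0, num_partitions).

    Illustration only: Kafka's default partitioner uses murmur2, not CRC32,
    so these indices will not match what a real producer chooses.
    """
    return zlib.crc32(key) % num_partitions


for key in (b"user-42", b"user-7", b"user-42"):
    # The same key always yields the same partition, preserving per-key order.
    print(key, partition_for(key, 6))
```

Because the mapping depends on the partition count, adding partitions to a topic moves some keys to different partitions, which is why partition counts are usually chosen up front.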

New Features in Confluent Platform 6.0 / Apache Kafka 2.6

Uploaded by Kai Wähner. New features in Confluent Platform 6.0 / Apache Kafka 2.6, including REST Proxy and API, Tiered Storage for AWS S3 and GCP GCS, Cluster Linking (on-premises, edge, hybrid, multi-cloud), Self-Balancing Clusters, and ksqlDB.

Using Location Data to Showcase Keys, Windows, and Joins in Kafka Streams DSL...

Uploaded by confluent. This document provides an overview of using location data to showcase keys, windows, and joins in Kafka Streams DSL and KSQL. It describes several algorithms for finding the nearest airport or aircraft to a given location within a 5-minute window using Kafka Streams. The algorithms involve bucketing location data, calculating distances, aggregating counts, and suppressing updates to optimize processing. The document cautions that windows are not magic and getting the window logic wrong can lead to incorrect results.
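The bucketing-and-window idea summarized above can be sketched as a grouping key made of a coarse spatial cell and a 5-minute tumbling window, followed by a plain distance calculation. This is only an illustration: the 1-degree grid, the haversine distance, and the airport codes and coordinates are assumptions, not the talk's algorithm.

```python
import math

WINDOW_MS = 5 * 60 * 1000  # 5-minute tumbling window
CELL_DEG = 1.0             # hypothetical grid cell size, in degrees


def window_start(ts_ms: int) -> int:
    # Tumbling windows are aligned to the epoch: round down to the window size.
    return ts_ms - ts_ms % WINDOW_MS


def bucket(lat: float, lon: float) -> tuple[int, int]:
    # Coarse spatial cell; records in the same cell share a grouping key.
    return math.floor(lat / CELL_DEG), math.floor(lon / CELL_DEG)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    # Great-circle distance on a sphere with the Earth's mean radius.
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def nearest(lat: float, lon: float, candidates: dict[str, tuple[float, float]]) -> str:
    return min(candidates, key=lambda name: haversine_km(lat, lon, *candidates[name]))


airports = {"AAA": (51.47, -0.45), "BBB": (51.15, -0.19)}  # hypothetical coordinates
key = (bucket(51.45, -0.40), window_start(1_600_000_123_456))
print(key, nearest(51.45, -0.40, airports))
```

A point near a cell edge can have its true nearest neighbour in an adjacent cell, which is one concrete way bucketing or window logic can produce wrong results if it is not designed carefully.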

Event Driven Architectures with Apache Kafka on Heroku

Uploaded by Heroku. Apache Kafka is the backbone for building architectures that deal with billions of events a day. Chris Castle, Developer Advocate, will show you where it might fit in your roadmap.

- What Apache Kafka is and how to use it on Heroku

- How Kafka enables you to model your data as immutable streams of events, introducing greater parallelism into your applications

- How you can use it to solve scale problems across your stack such as managing high throughput inbound events and building data pipelines

Learn more at https://www.heroku.com/kafka

Reveal.js version of slides: http://slides.com/christophercastle/deck#/

ksqlDB: A Stream-Relational Database System

Uploaded by confluent. Speaker: Matthias J. Sax, Software Engineer, Confluent

ksqlDB is a distributed event streaming database system that allows users to express SQL queries over relational tables and event streams. The project was released by Confluent in 2017 and is hosted on GitHub and developed with an open-source spirit. ksqlDB is built on top of Apache Kafka®, a distributed event streaming platform. In this talk, we discuss ksqlDB’s architecture, which is influenced by Apache Kafka and its stream processing library, Kafka Streams. We explain how ksqlDB executes continuous queries while achieving fault tolerance and high availability. Furthermore, we explore ksqlDB’s streaming SQL dialect and the different types of supported queries.

Matthias J. Sax is a software engineer at Confluent working on ksqlDB. He mainly contributes to Kafka Streams, Apache Kafka's stream processing library, which serves as ksqlDB's execution engine. Furthermore, he helps evolve ksqlDB's "streaming SQL" language. In the past, Matthias also contributed to Apache Flink and Apache Storm and he is an Apache committer and PMC member. Matthias holds a Ph.D. from Humboldt University of Berlin, where he studied distributed data stream processing systems.

https://db.cs.cmu.edu/events/quarantine-db-talk-2020-confluent-ksqldb-a-stream-relational-database-system/
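Stream-table duality, which underlies ksqlDB's tables, can be sketched in a few lines: folding a stream into a per-key aggregate yields a table, and the sequence of updates the fold emits is that table's changelog. This is an analogy in plain Python with hypothetical page-view records; it does not use ksqlDB, which would express the same aggregation in SQL (for example, a `COUNT(*)` with `GROUP BY` over a stream).

```python
from collections import defaultdict
from typing import Iterable, Iterator


def count_by_key(stream: Iterable[tuple[str, str]]) -> Iterator[tuple[str, int]]:
    """Fold a stream into a table of counts per key, emitting each update.

    The emitted (key, count) pairs are the table's changelog; keeping only the
    last pair per key recovers the table itself.
    """
    table: defaultdict[str, int] = defaultdict(int)
    for key, _value in stream:
        table[key] += 1
        yield key, table[key]


pageviews = [("home", "u1"), ("about", "u2"), ("home", "u3")]  # (page, user)
changelog = list(count_by_key(pageviews))
print(changelog)        # [('home', 1), ('about', 1), ('home', 2)]
print(dict(changelog))  # {'home': 2, 'about': 1}
```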

Overcoming the Perils of Kafka Secret Sprawl (Tejal Adsul, Confluent) Kafka S...

Uploaded by confluent. The document discusses the perils of secret sprawl and clear text secrets in Apache Kafka configurations. It proposes using a key management system (KMS) to securely store secrets and introducing a configuration provider that allows replacing secrets in configuration files with references to the KMS. The Kafka Improvement Proposals (KIPs) 297 and 421 aim to address this by externalizing secrets to providers and automatically resolving secrets during configuration parsing. Key recommendations include selecting a KMS, moving secrets to it, adding the configuration provider, and replacing secrets with indirection tuples pointing to the KMS.
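The indirection described above can be sketched as a substitution pass over configuration values. In Kafka itself this is done by `ConfigProvider` implementations registered through the `config.providers` setting; the sketch below only mimics the `${provider:path:key}` substitution step, and the `kms` provider, its path, and the secret are all hypothetical.

```python
import re
from typing import Callable

# Matches ${provider:path:key}; paths that themselves contain ':' are out of scope here.
PLACEHOLDER = re.compile(r"\$\{([^:}]+):([^:}]+):([^}]+)\}")

Provider = Callable[[str, str], str]  # (path, key) -> secret value


def resolve(config: dict[str, str], providers: dict[str, Provider]) -> dict[str, str]:
    """Return a copy of `config` with every ${provider:path:key} reference resolved."""
    def lookup(match: re.Match) -> str:
        provider, path, key = match.groups()
        return providers[provider](path, key)

    return {name: PLACEHOLDER.sub(lookup, value) for name, value in config.items()}


# Hypothetical stand-in for a KMS: {path: {key: secret}}.
fake_kms = {"kafka/prod": {"truststore_password": "s3cr3t"}}
providers = {"kms": lambda path, key: fake_kms[path][key]}

raw = {
    "ssl.truststore.password": "${kms:kafka/prod:truststore_password}",
    "bootstrap.servers": "broker1:9092",
}
print(resolve(raw, providers))
# {'ssl.truststore.password': 's3cr3t', 'bootstrap.servers': 'broker1:9092'}
```

Only the reference is stored in the configuration file; the clear-text secret exists in memory after parsing, which is the point of the indirection.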

KSQL - Stream Processing simplified!

Uploaded by Guido Schmutz. KSQL is a stream processing SQL engine that enables stream processing on top of Apache Kafka. KSQL is based on Kafka Streams and provides capabilities for consuming messages from Kafka, analysing these messages in near real time with a SQL-like language, and producing results back to a Kafka topic. As a result, not a single line of Java code has to be written, and you can reuse your SQL know-how. This significantly lowers the bar for starting with stream processing.

KSQL offers powerful stream processing capabilities, such as joins, aggregations, time windows, and support for event time. In this talk I will present how KSQL integrates with the Kafka ecosystem and demonstrate how easy it is to implement a solution using KSQL for the most part. This will be done in a live demo on a fictitious IoT sample.

From Zero to Hero with Kafka Connect

Uploaded by confluent. Watch this talk here: https://www.confluent.io/online-talks/from-zero-to-hero-with-kafka-connect-on-demand

Integrating Apache Kafka® with other systems in a reliable and scalable way is often a key part of a streaming platform. Fortunately, Apache Kafka includes the Connect API that enables streaming integration both in and out of Kafka. Like any technology, understanding its architecture and deployment patterns is key to successful use, as is knowing where to go looking when things aren't working.

This talk will discuss the key design concepts within Apache Kafka Connect and the pros and cons of standalone vs distributed deployment modes. We'll do a live demo of building pipelines with Apache Kafka Connect for streaming data in from databases, and out to targets including Elasticsearch. With some gremlins along the way, we'll go hands-on in methodically diagnosing and resolving common issues encountered with Apache Kafka Connect. The talk will finish off by discussing more advanced topics including Single Message Transforms, and deployment of Apache Kafka Connect in containers.
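Single Message Transforms modify each record as it passes through a connector, one record at a time. The plain-Python sketch below chains three such steps (drop, mask, insert) to illustrate the idea. The function names are hypothetical and only loosely echo Kafka Connect's built-in transforms; the behavior is simplified, and none of this is the Connect API, which is Java.

```python
from typing import Callable, Optional

Record = dict[str, object]
Transform = Callable[[Record], Optional[Record]]  # returning None drops the record


def drop_if(predicate: Callable[[Record], bool]) -> Transform:
    def apply(rec: Record) -> Optional[Record]:
        return None if predicate(rec) else rec
    return apply


def mask_field(name: str, placeholder: str = "****") -> Transform:
    def apply(rec: Record) -> Optional[Record]:
        return {**rec, name: placeholder} if name in rec else rec
    return apply


def insert_field(name: str, value: object) -> Transform:
    def apply(rec: Record) -> Optional[Record]:
        return {**rec, name: value}
    return apply


def run_chain(rec: Record, chain: list[Transform]) -> Optional[Record]:
    # Transforms run in order; a dropped record skips the rest of the chain.
    for transform in chain:
        result = transform(rec)
        if result is None:
            return None
        rec = result
    return rec


chain = [
    drop_if(lambda r: r.get("status") == "test"),
    mask_field("email"),
    insert_field("source", "orders-db"),
]
print(run_chain({"id": 1, "email": "a@example.com", "status": "live"}, chain))
# {'id': 1, 'email': '****', 'status': 'live', 'source': 'orders-db'}
print(run_chain({"id": 2, "status": "test"}, chain))  # None
```

Keeping each step small and stateless is what makes transforms like these easy to compose; anything that needs joins or aggregation belongs in a stream processor instead.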

From Zero to Hero with Kafka Connect

Uploaded by confluent. Webcast available here: https://videos.confluent.io/watch/ptpxz9ks5cratppU9d4bUD?

Speaker: Robin Moffatt

Securing Kafka At Zendesk (Joy Nag, Zendesk) Kafka Summit 2020

Uploaded by confluent. Kafka is one of the most important foundation services at Zendesk. It became even more crucial with the introduction of Global Event Bus which my team built to propagate events between Kafka clusters hosted at different parts of the world and between different products. As part of its rollout, we had to add mTLS support in all of our Kafka Clusters (we have quite a few of them), this was to make propagation of events between clusters hosted at different parts of the world secure. It was quite a journey, but we eventually built a solution that is working well for us.

Things I will be sharing as part of the talk:

1. Establishing the use case/problem we were trying to solve (why we needed mTLS)

2. Building a Certificate Authority with open source tools (with self-signed Root CA)

3. Building helper components that generate certificates automatically and regenerate them before they expire, for both Kafka clients and brokers (this makes a shorter TTL (time to live) practical, which is good security practice)

4. Hot reloading regenerated certificates on Kafka brokers without downtime

5. What we built to rotate the self-signed root CA across the board, also without downtime

6. Monitoring and alerts on TTL of certificates

7. Performance impact of using TLS (along with why TLS affects Kafka’s performance)

8. What we are doing to drive adoption of mTLS among existing Kafka clients that use the PLAINTEXT protocol by making onboarding easier

9. How this will become the base for other features we want, e.g. ACLs and rate limiting (by using the principal from the TLS certificate as the identity of clients)
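Items 3 and 6 above both come down to comparing a certificate's remaining lifetime against a threshold. A minimal sketch, assuming renewal once less than a third of the lifetime remains; that threshold and the 30-day TTL are hypothetical, not Zendesk's policy.

```python
from datetime import datetime, timedelta, timezone


def needs_renewal(not_before: datetime, not_after: datetime, now: datetime,
                  renew_fraction: float = 1 / 3) -> bool:
    """True once less than `renew_fraction` of the certificate's lifetime remains.

    The one-third threshold is a hypothetical default, not a value from the talk.
    """
    lifetime = not_after - not_before
    remaining = not_after - now
    return remaining < lifetime * renew_fraction


issued = datetime(2020, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(days=30)  # a short TTL
print(needs_renewal(issued, expires, issued + timedelta(days=10)))  # False
print(needs_renewal(issued, expires, issued + timedelta(days=25)))  # True
```

The same remaining-lifetime value can be exported as a metric and alerted on, which is what makes short TTLs safe to operate.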

How did we move the mountain? - Migrating 1 trillion+ messages per day across...

Uploaded by HostedbyConfluent. Have you ever migrated Kafka clusters from one data center to another while remaining completely transparent to client applications?

At PayPal, as part of a massive data center migration initiative, the Kafka team successfully moved all PayPal Kafka traffic across data centers. This initiative involved migrating 20+ Kafka clusters (1000+ broker and ZooKeeper nodes), as well as 60+ MirrorMaker groups, which seamlessly handle Kafka traffic volumes as high as 1 trillion messages per day. Throughout the migration, applications required no modification and experienced no service outage, no message loss, and no duplicated messages. The whole migration process was fully transparent to Kafka applications.

In this session, you will learn the strategies, techniques and tools the PayPal Kafka team has utilized for managing the migration process. You will also learn the lessons and pitfalls they experienced during this exercise, as well as the secret sauce of making the migration successful.

Stream Processing Live Traffic Data with Kafka Streams

Uploaded by Tom Van den Bulck. In this workshop we will set up a streaming framework that processes real-time data from traffic sensors installed within the Belgian road system.

Starting with the intake of the data, you will learn best practices and the recommended approach to splitting the information into events in a way that won't come back to haunt you.

With some basic stream operations (count, filter, ...) you will get to know the data and experience how easy it is to get things done with Spring Boot & Spring Cloud Stream.

But since simple data processing is not enough to fulfill all your streaming needs, we will also let you experience the power of windows.

After this workshop, tumbling, sliding and session windows hold no more mysteries and you will be a true streaming wizard.
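Tumbling windows are fixed and non-overlapping, while session windows grow with activity and close after a period of inactivity. A sketch of the session case, assuming integer timestamps and a hypothetical inactivity gap; for simplicity it sorts the events first, whereas a real stream processor has to merge sessions as out-of-order events arrive.

```python
def session_windows(timestamps: list[int], gap: int) -> list[tuple[int, int]]:
    """Group event times into sessions separated by more than `gap` of inactivity.

    Returns (first_event, last_event) pairs. Sorting first is a simplification;
    a stream processor instead merges sessions as late events arrive.
    """
    sessions: list[tuple[int, int]] = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][1] <= gap:
            sessions[-1] = (sessions[-1][0], ts)  # extend the open session
        else:
            sessions.append((ts, ts))  # gap exceeded: start a new session
    return sessions


print(session_windows([10, 1, 2, 11, 30], gap=5))  # [(1, 2), (10, 11), (30, 30)]
```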

Building Stream Processing Applications with Apache Kafka Using KSQL (Robin M...

Uploaded by confluent. Robin is a Developer Advocate at Confluent, the company founded by the creators of Apache Kafka, as well as an Oracle Groundbreaker Ambassador. His career has always involved data, from the old worlds of COBOL and DB2, through the worlds of Oracle and Hadoop, and into the current world with Kafka. His particular interests are analytics, systems architecture, performance testing and optimization. He blogs at http://cnfl.io/rmoff and http://rmoff.net/ and can be found tweeting grumpy geek thoughts as @rmoff. Outside of work he enjoys drinking good beer and eating fried breakfasts, although generally not at the same time.

So You’ve Inherited Kafka? Now What? (Alon Gavra, AppsFlyer) Kafka Summit Lon...

Uploaded by confluent. This document summarizes a presentation about managing Kafka clusters at scale. It discusses how AppsFlyer migrated from a monolithic Kafka deployment to multiple clusters for different teams. It then outlines the challenges faced, such as traffic surges and mixed Kafka protocol versions. Solutions discussed include improving infrastructure, adding visibility tools, creating automation and APIs for management, and implementing sleep-driven design principles to reduce developer fatigue. The presentation concludes by discussing future goals such as auto-scaling clusters.

Writing Blazing Fast, and Production-Ready Kafka Streams apps in less than 30...

Uploaded by HostedbyConfluent. If you have already worked on various Kafka Streams applications, then you have probably found yourself rewriting the same piece of code again and again.

Whether it's to manage processing failures or bad records, use interactive queries, organize your code, or deploy or monitor your Kafka Streams app, building in-house libraries to standardize common patterns across your projects seems unavoidable.

And if you're new to Kafka Streams, you might be interested in learning which patterns to use for your next streaming project.

In this talk, I will introduce you to Azkarra, an open-source, lightweight Java framework designed to provide most of this off the shelf by leveraging best-of-breed ideas and proven practices from the Apache Kafka community.

Kafka Needs No Keeper

Uploaded by C4Media. Kafka is evolving to remove its dependency on ZooKeeper. Kafka Improvement Proposal 500 (KIP-500) aims to manage Kafka's metadata log with a self-managed Raft consensus algorithm and controller quorum rather than relying on ZooKeeper. This will improve scalability and robustness and make deployment easier. Fully implementing KIP-500 will take multiple releases, beginning with removing ZooKeeper from clients and ending with a release where ZooKeeper is no longer required.
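The controller quorum mentioned above relies on Raft's majority rule: an entry counts as committed once a majority of voters have appended it, so a quorum of n voters keeps working with up to floor((n - 1) / 2) failed members. The arithmetic, as a small sketch (the function names are illustrative, not Kafka APIs):

```python
def majority(voters: int) -> int:
    # Smallest number of voters that forms a majority.
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    # A majority must survive, so at most (n - 1) // 2 voters may fail.
    return (voters - 1) // 2


def is_committed(acks: int, voters: int) -> bool:
    # An entry counts as committed once a majority of voters have appended it.
    return acks >= majority(voters)


for n in (1, 3, 5):
    print(n, majority(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 5 3 2
```

This is why controller quorums are usually sized at an odd number: going from three to four voters raises the majority without tolerating any extra failure.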

10 Lessons Learned from using Kafka with 1000 microservices - java global summit

Uploaded by Natan Silnitsky. Kafka is the bedrock of Wix's distributed microservices system. For the last 5 years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1400 microservices.

We’ve managed to achieve greater decoupling and independence for our various services and dev teams, which have very different use cases, while keeping a single, uniform infrastructure in place.

In these slides you will learn about 10 key decisions and steps you can take in order to safely scale up your Kafka-based system. These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting.

* How to support a growing amount of traffic and developers.

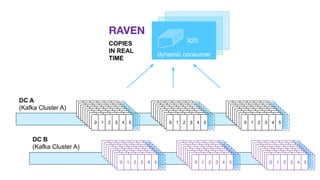

* How to tackle multiple DCs environment.

Kafka Pluggable Authorization for Enterprise Security (Anna Kepler, Viasat) K...

Uploaded by confluent. At Viasat, Kafka is the backbone of a multi-tenant streaming platform that transports data for 1000 streams and is used by more than 60 teams in a production environment. Role-based access control to sensitive data is an essential requirement for our customers, who must comply with a variety of regulations including GDPR. Kafka ships with a pluggable Authorizer that can control access to resources such as the cluster, topics, or consumer groups. However, maintaining ACLs in a large multi-tenant deployment can be support-intensive. At Viasat, we developed a custom Kafka Authorizer and a Role Manager application that integrate our Kafka cluster with Viasat’s internal LDAP services. The presentation will cover how we designed and built the Kafka LDAP Authorizer, which allows us to control resources within the cluster as well as services built around Kafka. We apply our permissions model to our data forwarders, ETL jobs, and stream processing. We will also share how we achieved a stress-free migration to a secure infrastructure without interrupting the production data flow. Our secure deployment model accomplishes multiple goals:

* Integration into an LDAP central authentication system.

* Use of the same authorization service to control permissions to data in Kafka as well as services built around Kafka.

* Delegation of permissions control to the security officers on the teams using the service.

* Detailed audit and breach notifications based on the metrics produced by the custom authorizer.

We plan to open source our custom Kafka Authorizer.
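A role-based check like the one described can be sketched as a two-step lookup: LDAP groups map to roles, and roles map to permissions on topic prefixes, with everything else denied. The groups, roles, and topic names are hypothetical, and this is not Viasat's authorizer; in Kafka the real extension point is a JVM `Authorizer` implementation configured with `authorizer.class.name`, not Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Permission:
    operation: str     # e.g. "READ" or "WRITE"
    topic_prefix: str  # permission applies to topics starting with this prefix


# Hypothetical mappings; in the talk, groups come from LDAP and roles from a Role Manager app.
GROUP_ROLES: dict[str, set[str]] = {
    "cn=billing-devs": {"billing-reader"},
    "cn=billing-svc": {"billing-writer"},
}
ROLE_PERMISSIONS: dict[str, set[Permission]] = {
    "billing-reader": {Permission("READ", "billing.")},
    "billing-writer": {Permission("READ", "billing."), Permission("WRITE", "billing.")},
}


def authorize(user_groups: set[str], operation: str, topic: str) -> bool:
    """Allow if any role reachable from the user's groups grants `operation` on `topic`."""
    for group in user_groups:
        for role in GROUP_ROLES.get(group, set()):
            for perm in ROLE_PERMISSIONS.get(role, set()):
                if perm.operation == operation and topic.startswith(perm.topic_prefix):
                    return True
    return False  # deny by default


print(authorize({"cn=billing-devs"}, "READ", "billing.invoices"))   # True
print(authorize({"cn=billing-devs"}, "WRITE", "billing.invoices"))  # False
```

Granting permissions to roles rather than to individual principals is what keeps the mapping manageable as teams and topics grow.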

10 Lessons Learned from using Kafka in 1000 microservices - ScalaUA

Uploaded by Natan Silnitsky. Kafka is the bedrock of Wix’s distributed Mega Microservices system.

Over the years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1400 mostly Scala microservices.

In this talk, you will learn about 10 key decisions and steps you can take in order to safely scale up your Kafka-based system.

These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting

* How to migrate from request-reply to event-driven

* How to tackle multiple DCs environment.

8 Lessons Learned from Using Kafka in 1500 microservices - confluent streamin...

Uploaded by Natan Silnitsky. Kafka is the bedrock of Wix's distributed microservices system. For the last 5 years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1500 microservices.

We’ve managed to achieve greater decoupling and independence for our various services and dev teams, which have very different use cases, while keeping a single, uniform infrastructure in place.

In these slides you will learn about 8 key decisions and steps you can take in order to safely scale up your Kafka-based system. These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting.

* How to support a growing amount of traffic and developers.

Ad

More Related Content

What's hot (20)

Flexible Authentication Strategies with SASL/OAUTHBEARER (Michael Kaminski, T...

Flexible Authentication Strategies with SASL/OAUTHBEARER (Michael Kaminski, T...confluent In order to maximize Kafka accessibility within an organization, Kafka operators must choose an authentication option that balances security with ease of use. Kafka has been historically limited to a small number of authentication options that are difficult to integrate with a Single Signon (SSO) strategy, such as mutual TLS, basic auth, and Kerberos. The arrival of SASL/OAUTHBEARER in Kafka 2.0.0 affords system operators a flexible framework for integrating Kafka with their existing authentication infrastructure. Ron Dagostino (State Street Corporation) and Mike Kaminski (The New York Times) team up to discuss SASL/OAUTHBEARER and it’s real-world applications. Ron, who contributed the feature to core Kafka, explains the origins and intricacies of its development along with additional, related security changes, including client re-authentication (merged and scheduled for release in v2.2.0) and the plans for support of SASL/OAUTHBEARER in librdkafka-based clients. Mike Kaminski, a developer on The Publishing Pipeline team at The New York Times, talks about how his team leverages SASL/OAUTHBEARER to break down silos between teams by making it easy for product owners to get connected to the Publishing Pipeline’s Kafka cluster.

Polyglot, fault-tolerant event-driven programming with kafka, kubernetes and ...

Polyglot, fault-tolerant event-driven programming with kafka, kubernetes and ...Natan Silnitsky At Wix, we have created a universal event-driven programming infrastructure on top of the Kafka message broker.

This infra makes sure messages are eventually successfully consumed and produced no matter what failure it encounters.

In this talk, you will learn about the features we introduced in order to make sure our distributed system can safely handle an ever growing message throughput in a fault tolerant manner.

You will be introduced to such techniques as retry topics, local persistent queues, and cooperative fibers that help make your flows more resilient and performant.

You will also learn how to make this infra work for all programming languages tech stacks with optimal resource manage using the power of Kubernetes and gRPC.

When to use a client library, and when to deploy an external pod (DaemonSet) or even deploy a sidecar.

Tips & Tricks for Apache Kafka®

Tips & Tricks for Apache Kafka®confluent Kat Grigg, Confluent, Senior Customer Success Architect + Jen Snipes, Confluent, Senior Customer Success Architect

This presentation will cover tips and best practices for Apache Kafka. In this talk, we will be covering the basic internals of Kafka and how these components integrate together including brokers, topics, partitions, consumers and producers, replication, and Zookeeper. We will be talking about the major categories of operations you need to be setting up and monitoring including configuration, deployment, maintenance, monitoring and then debugging.

https://www.meetup.com/KafkaBayArea/events/270915296/

New Features in Confluent Platform 6.0 / Apache Kafka 2.6

New Features in Confluent Platform 6.0 / Apache Kafka 2.6Kai Wähner New Features in Confluent Platform 6.0 / Apache Kafka 2.6, including REST Proxy and API, Tiered Storage for AWS S3 and GCP GCS, Cluster Linking (On-Premise, Edge, Hybrid, Multi-Cloud), Self-Balancing Clusters), ksqlDB.

Using Location Data to Showcase Keys, Windows, and Joins in Kafka Streams DSL...

Using Location Data to Showcase Keys, Windows, and Joins in Kafka Streams DSL...confluent This document provides an overview of using location data to showcase keys, windows, and joins in Kafka Streams DSL and KSQL. It describes several algorithms for finding the nearest airport or aircraft to a given location within a 5-minute window using Kafka Streams. The algorithms involve bucketing location data, calculating distances, aggregating counts, and suppressing updates to optimize processing. The document cautions that windows are not magic and getting the window logic wrong can lead to incorrect results.

Event Driven Architectures with Apache Kafka on Heroku

Event Driven Architectures with Apache Kafka on HerokuHeroku Apache Kafka is the backbone for building architectures that deal with billions of events a day. Chris Castle, Developer Advocate, will show you where it might fit in your roadmap.

- What Apache Kafka is and how to use it on Heroku

- How Kafka enables you to model your data as immutable streams of events, introducing greater parallelism into your applications

- How you can use it to solve scale problems across your stack such as managing high throughput inbound events and building data pipelines

Learn more at https://www.heroku.com/kafka

Reveal.js version of slides: http://slides.com/christophercastle/deck#/

ksqlDB: A Stream-Relational Database System

By confluent. Speaker: Matthias J. Sax, Software Engineer, Confluent

ksqlDB is a distributed event streaming database system that allows users to express SQL queries over relational tables and event streams. The project was released by Confluent in 2017 and is hosted on GitHub and developed with an open-source spirit. ksqlDB is built on top of Apache Kafka®, a distributed event streaming platform. In this talk, we discuss ksqlDB’s architecture, which is influenced by Apache Kafka and its stream processing library, Kafka Streams. We explain how ksqlDB executes continuous queries while achieving fault tolerance and high availability. Furthermore, we explore ksqlDB’s streaming SQL dialect and the different types of supported queries.

Matthias J. Sax is a software engineer at Confluent working on ksqlDB. He mainly contributes to Kafka Streams, Apache Kafka's stream processing library, which serves as ksqlDB's execution engine. Furthermore, he helps evolve ksqlDB's "streaming SQL" language. In the past, Matthias also contributed to Apache Flink and Apache Storm and he is an Apache committer and PMC member. Matthias holds a Ph.D. from Humboldt University of Berlin, where he studied distributed data stream processing systems.

https://db.cs.cmu.edu/events/quarantine-db-talk-2020-confluent-ksqldb-a-stream-relational-database-system/

Overcoming the Perils of Kafka Secret Sprawl (Tejal Adsul, Confluent) Kafka S...

By confluent. The document discusses the perils of secret sprawl and clear-text secrets in Apache Kafka configurations. It proposes using a key management system (KMS) to store secrets securely and introducing a configuration provider that allows secrets in configuration files to be replaced with references to the KMS. Kafka Improvement Proposals (KIPs) 297 and 421 aim to address this by externalizing secrets to providers and automatically resolving them during configuration parsing. Key recommendations include selecting a KMS, moving secrets into it, adding the configuration provider, and replacing secrets with indirection tuples that point to the KMS.
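
The indirection idea can be illustrated with a small Python sketch that substitutes `${provider:path:key}` (or `${provider:key}`) placeholders from an in-memory dictionary standing in for a KMS. This models only the substitution step under simplifying assumptions; it is not Kafka's `ConfigProvider` implementation, and the `kms` provider name, paths, and secret values are hypothetical.

```python
import re
from typing import Dict, Mapping

# Simplified form of the ${provider:path:key} / ${provider:key} indirection
# syntax; real Kafka resolves these through ConfigProvider plugins.
PLACEHOLDER = re.compile(r"\$\{([^:}]+):(?:([^:}]+):)?([^:}]+)\}")

# provider name -> path -> key -> secret value (a hypothetical stand-in for a KMS)
SecretStore = Mapping[str, Mapping[str, Mapping[str, str]]]


def resolve(config: Mapping[str, str], store: SecretStore) -> Dict[str, str]:
    """Return a copy of `config` with every placeholder replaced by its secret."""

    def lookup(match) -> str:
        provider, path, key = match.group(1), match.group(2) or "", match.group(3)
        return store[provider][path][key]  # raises KeyError if the secret is missing

    return {name: PLACEHOLDER.sub(lookup, value) for name, value in config.items()}
```

Values without a placeholder (such as `bootstrap.servers`) pass through unchanged, so only the secret-bearing entries in the file need to change.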

KSQL - Stream Processing simplified!

By Guido Schmutz. KSQL is a stream processing SQL engine that allows stream processing on top of Apache Kafka. KSQL is based on Kafka Streams and provides capabilities for consuming messages from Kafka, analysing these messages in near-real time with a SQL-like language, and producing results back to a Kafka topic. As a result, not a single line of Java code has to be written, and you can reuse your SQL know-how. This significantly lowers the bar for getting started with stream processing.

KSQL offers powerful stream processing capabilities, such as joins, aggregations, time windows, and support for event time. In this talk I will present how KSQL integrates with the Kafka ecosystem and demonstrate how easy it is to implement a solution using KSQL for the most part. This will be done in a live demo on a fictitious IoT sample.

From Zero to Hero with Kafka Connect

By confluent. Watch this talk here: https://www.confluent.io/online-talks/from-zero-to-hero-with-kafka-connect-on-demand

Integrating Apache Kafka® with other systems in a reliable and scalable way is often a key part of a streaming platform. Fortunately, Apache Kafka includes the Connect API that enables streaming integration both in and out of Kafka. Like any technology, understanding its architecture and deployment patterns is key to successful use, as is knowing where to go looking when things aren't working.

This talk will discuss the key design concepts within Apache Kafka Connect and the pros and cons of standalone vs distributed deployment modes. We'll do a live demo of building pipelines with Apache Kafka Connect for streaming data in from databases, and out to targets including Elasticsearch. With some gremlins along the way, we'll go hands-on in methodically diagnosing and resolving common issues encountered with Apache Kafka Connect. The talk will finish off by discussing more advanced topics including Single Message Transforms, and deployment of Apache Kafka Connect in containers.

From Zero to Hero with Kafka Connect

By confluent. Webcast available here: https://videos.confluent.io/watch/ptpxz9ks5cratppU9d4bUD?

Speaker: Robin Moffatt

Securing Kafka At Zendesk (Joy Nag, Zendesk) Kafka Summit 2020

By confluent. Kafka is one of the most important foundation services at Zendesk. It became even more crucial with the introduction of the Global Event Bus, which my team built to propagate events between Kafka clusters hosted in different parts of the world and between different products. As part of its rollout, we had to add mTLS support to all of our Kafka clusters (we have quite a few of them) to secure the propagation of events between clusters hosted in different parts of the world. It was quite a journey, but we eventually built a solution that is working well for us.

Things I will be sharing as part of the talk:

1. Establishing the use case/problem we were trying to solve (why we needed mTLS)

2. Building a Certificate Authority with open source tools (with a self-signed root CA)

3. Building helper components that generate certificates automatically and regenerate them before they expire, for both Kafka clients and brokers (this makes it practical to use a shorter TTL (Time To Live), which is good security practice)

4. Hot reloading regenerated certificates on Kafka brokers without downtime

5. What we built to rotate the self-signed root CA across the board, also without downtime

6. Monitoring and alerts on TTL of certificates

7. Performance impact of using TLS (along with why TLS affects Kafka’s performance)

8. What we are doing to drive adoption of mTLS among existing Kafka clients that use the PLAINTEXT protocol by making onboarding easier

9. How this will become the basis for other features we want, e.g., ACLs and rate limiting (by using the principal from the TLS certificate as the identity of clients)
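
The renewal and alerting logic in items 3 and 6 boils down to comparing each certificate's expiry time with a threshold. A minimal Python sketch, assuming the expiry (`notAfter`) timestamps have already been read from the certificates; the function name, certificate names, and the 7-day threshold are hypothetical and are not Zendesk's values.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def expiring_soon(not_after: Dict[str, datetime], now: datetime,
                  threshold: timedelta) -> List[str]:
    """Names of certificates whose remaining lifetime is below `threshold`,
    most urgent (including already-expired ones) first."""
    remaining = {name: expiry - now for name, expiry in not_after.items()}
    due = [name for name, left in remaining.items() if left < threshold]
    return sorted(due, key=lambda name: remaining[name])


if __name__ == "__main__":
    now = datetime(2020, 8, 1, tzinfo=timezone.utc)
    certs = {
        "broker-1": now + timedelta(days=2),
        "client-a": now + timedelta(days=20),
        "broker-2": now - timedelta(hours=1),  # already expired
    }
    # Candidates for regeneration (item 3) or an alert (item 6).
    print(expiring_soon(certs, now, timedelta(days=7)))
```

Sorting by remaining lifetime puts expired certificates first, which is usually what an on-call alert should surface.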

How did we move the mountain? - Migrating 1 trillion+ messages per day across...

By HostedbyConfluent. Have you ever migrated Kafka clusters from one data center to another in a way that was completely transparent to client applications?

At PayPal, as part of a massive data center migration initiative, the Kafka team successfully moved all PayPal Kafka traffic across data centers. This initiative involved migrating 20+ Kafka clusters (1000+ broker and ZooKeeper nodes), as well as 60+ MirrorMaker groups, which seamlessly handle Kafka traffic volumes as high as 1 trillion messages per day. Throughout the course of this migration, applications required no modification and encountered 0% service outage, 0% message loss, and 0% duplicated messages. The whole migration process was fully transparent to Kafka applications.

In this session, you will learn the strategies, techniques and tools the PayPal Kafka team has utilized for managing the migration process. You will also learn the lessons and pitfalls they experienced during this exercise, as well as the secret sauce of making the migration successful.

Stream Processing Live Traffic Data with Kafka Streams

By Tom Van den Bulck. In this workshop we will set up a streaming framework that processes real-time data from traffic sensors installed in the Belgian road system.

Starting with the intake of the data, you will learn best practices and the recommended approach to split the information into events in a way that won't come back to haunt you.

With some basic stream operations (count, filter, ... ) you will get to know the data and experience how easy it is to get things done with Spring Boot & Spring Cloud Stream.

But since simple data processing is not enough to fulfill all your streaming needs, we will also let you experience the power of windows.

After this workshop, tumbling, sliding and session windows hold no more mysteries and you will be a true streaming wizard.

Building Stream Processing Applications with Apache Kafka Using KSQL (Robin M...

By confluent. Robin is a Developer Advocate at Confluent, the company founded by the creators of Apache Kafka, as well as an Oracle Groundbreaker Ambassador. His career has always involved data, from the old worlds of COBOL and DB2, through the worlds of Oracle and Hadoop, and into the current world with Kafka. His particular interests are analytics, systems architecture, performance testing and optimization. He blogs at http://cnfl.io/rmoff and http://rmoff.net/ and can be found tweeting grumpy geek thoughts as @rmoff. Outside of work he enjoys drinking good beer and eating fried breakfasts, although generally not at the same time.

So You’ve Inherited Kafka? Now What? (Alon Gavra, AppsFlyer) Kafka Summit Lon...

By confluent. This document summarizes a presentation about managing Kafka clusters at scale. It discusses how AppsFlyer migrated from a monolithic Kafka deployment to multiple clusters for different teams. It then outlines the challenges faced, such as traffic surges and mixed Kafka protocol versions. Solutions discussed include improving infrastructure, adding visibility tools, creating automation and APIs for management, and implementing sleep-driven design principles to reduce developer fatigue. The presentation concludes by discussing future goals such as auto-scaling clusters.

Writing Blazing Fast, and Production-Ready Kafka Streams apps in less than 30...

By HostedbyConfluent. If you have already worked on various Kafka Streams applications, then you have probably found yourself rewriting the same piece of code again and again.

Whether it's to manage processing failures or bad records, to use interactive queries, to organize your code, or to deploy or monitor your Kafka Streams app, building some in-house libraries to standardize common patterns across your projects seems unavoidable.

And if you're new to Kafka Streams, you might be interested in learning which patterns to use for your next streaming project.

In this talk, I propose to introduce you to Azkarra, an open-source, lightweight Java framework designed to provide most of that off the shelf by leveraging best-of-breed ideas and proven practices from the Apache Kafka community.

Kafka Needs No Keeper

By C4Media. Kafka is evolving to remove its dependency on Zookeeper. Kafka Improvement Proposal 500 (KIP-500) aims to manage Kafka's metadata log with a self-managed Raft consensus algorithm and controller quorum rather than relying on Zookeeper. This will improve scalability and robustness and make deployment easier. Fully implementing KIP-500 will take multiple releases, beginning with removing Zookeeper from clients and ending with a release in which Zookeeper is no longer required.
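
A majority-based quorum such as the Raft controller quorum described in KIP-500 keeps making progress as long as a majority of its voters is reachable. The arithmetic behind sizing such a quorum can be sketched in Python; the function names are illustrative and not part of any Kafka API.

```python
def majority(voters: int) -> int:
    """Smallest number of voters that forms a majority (a quorum)."""
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can fail while a majority can still be formed."""
    return voters - majority(voters)
```

Three voters tolerate one failure and five tolerate two, while four voters still tolerate only one; an even-sized quorum adds no fault tolerance over the next smaller odd size, which is why odd sizes are the usual choice.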

10 Lessons Learned from using Kafka with 1000 microservices - java global summit

By Natan Silnitsky. Kafka is the bedrock of Wix's distributed microservices system. Over the last 5 years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1400 microservices.

We’ve managed to achieve greater decoupling and independence for our various services and dev teams, which have very different use cases, while keeping a single uniform infrastructure in place.

In these slides you will learn about 10 key decisions and steps you can take in order to safely scale up your Kafka-based system. These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting.

* How to support a growing amount of traffic and developers.

* How to tackle a multi-DC environment.

Kafka Pluggable Authorization for Enterprise Security (Anna Kepler, Viasat) K...

By confluent. At Viasat, Kafka is the backbone of a multi-tenant streaming platform that transports data for 1000 streams and is used by more than 60 teams in a production environment. Role-based access control to sensitive data is an essential requirement for our customers, who must comply with a variety of regulations, including GDPR. Kafka ships with a pluggable Authorizer that can control access to resources such as clusters, topics, or consumer groups. However, maintaining ACLs in a large multi-tenant deployment can be support-intensive. At Viasat, we developed a custom Kafka Authorizer and Role Manager application that integrates our Kafka cluster with Viasat’s internal LDAP services. The presentation will cover how we designed and built the Kafka LDAP Authorizer, which allows us to control resources within the cluster as well as services built around Kafka. We apply our permissions model to our data forwarders, ETL jobs, and stream processing. We will also share how we achieved a stress-free migration to secure infrastructure without interrupting the production data flow. Our secure deployment model accomplishes multiple goals: (1) integration into an LDAP central authentication system; (2) use of the same authorization service to control permissions to data in Kafka as well as services built around Kafka; (3) delegation of permissions control to the security officers on the teams using the service; and (4) detailed audit and breach notifications based on the metrics produced by the custom authorizer. We plan to open source our custom Kafka Authorizer.

Similar to How to build 1000 microservices with Kafka and thrive (20)

10 Lessons Learned from using Kafka in 1000 microservices - ScalaUA

By Natan Silnitsky. Kafka is the bedrock of Wix’s distributed Mega Microservices system.

Over the years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1400 microservices, mostly written in Scala.

In this talk, you will learn about 10 key decisions and steps you can take in order to safely scale up your Kafka-based system.

These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting.

* How to migrate from request-reply to event-driven.

* How to tackle a multi-DC environment.

8 Lessons Learned from Using Kafka in 1500 microservices - confluent streamin...

By Natan Silnitsky. Kafka is the bedrock of Wix's distributed microservices system. Over the last 5 years we have learned a lot about how to successfully scale our event-driven architecture to roughly 1500 microservices.

We’ve managed to achieve greater decoupling and independence for our various services and dev teams, which have very different use cases, while keeping a single uniform infrastructure in place.

In these slides you will learn about 8 key decisions and steps you can take in order to safely scale up your Kafka-based system. These include:

* How to increase dev velocity of event-driven style code.

* How to optimize working with Kafka in a polyglot setting.

* How to support a growing amount of traffic and developers.

Polyglot, Fault Tolerant Event-Driven Programming with Kafka, Kubernetes and ...

By Natan Silnitsky. At Wix, we have created a universal event-driven programming infrastructure on top of the Kafka message broker.

This infra makes sure messages are eventually consumed and produced successfully, no matter what failures it encounters.

In this talk, you will learn about the features we introduced to make sure our distributed system can safely handle an ever-growing message throughput in a fault-tolerant manner.

You will be introduced to techniques such as retry topics, local persistent queues, and cooperative fibers that help make your flows more resilient and performant.

You will also learn how to make this infra work for all programming-language tech stacks with optimal resource management, using the power of Kubernetes and gRPC.

Finally, you will learn when to use a client library, and when to deploy an external pod (DaemonSet, StatefulSet) or even a sidecar.
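
The retry-topic technique can be sketched as a routing decision: instead of blocking the original partition on a failing record, the consumer re-publishes it to a dedicated retry topic that is consumed after a delay, and gives up after a fixed number of attempts. The Python class below is a hypothetical sketch; the `-retry-N` and `-dlq` topic names, the backoff values, and the dead-letter step are assumptions, not Greyhound's actual API.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RetryPolicy:
    """Hypothetical non-blocking retry policy based on retry topics."""

    base_topic: str
    backoffs_s: Tuple[int, ...]  # delay (seconds) before retry attempt 1, 2, ...

    def next_destination(self, failed_attempt: int) -> Tuple[str, int]:
        """Return (topic, delay_seconds) for a record that failed on
        `failed_attempt` (0 = the original topic). Once retries are
        exhausted, the record goes to a dead-letter topic."""
        if failed_attempt < len(self.backoffs_s):
            return f"{self.base_topic}-retry-{failed_attempt}", self.backoffs_s[failed_attempt]
        return f"{self.base_topic}-dlq", 0
```

With `RetryPolicy("orders", (1, 10, 60))`, a record that fails on the main topic goes to `orders-retry-0` after 1 second, and one that also fails on `orders-retry-2` ends up in `orders-dlq`.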

Polyglot, fault-tolerant event-driven programming with kafka, kubernetes and ...

By Natan Silnitsky. At Wix, we have created a universal event-driven programming infrastructure on top of the Kafka message broker.

This infra makes sure messages are eventually consumed and produced successfully, no matter what failures it encounters.

In this talk, you will learn about the features we introduced to make sure our distributed system can safely handle an ever-growing message throughput in a fault-tolerant manner.

You will be introduced to techniques such as retry topics, local persistent queues, and cooperative fibers that help make your flows more resilient and performant.

You will also learn how to make this infra work for all programming-language tech stacks with optimal resource management, using the power of Kubernetes and gRPC.

Finally, you will learn when to use a client library, and when to deploy an external pod (DaemonSet) or even a sidecar.

Training

By HemantDunga1. This document provides information about key concepts in Apache Kafka, including producers, consumers, brokers, topics, partitions, replication, and Zookeeper. It also describes how to start the Kafka services, create and list topics, produce and consume messages, and configure producers and consumers. Finally, it briefly discusses Kafka Streams, Schema Registry, Kafka Connect, and KSQL.

Greyhound - Powerful Functional Kafka Library - Devtalks reimagined

By Natan Silnitsky. This document provides an overview of Greyhound, a high-level Scala/Java SDK for Apache Kafka. It discusses key Kafka concepts such as producers, brokers, topics, and partitions. It then summarizes key Greyhound features such as simple consumer APIs, composable record handlers, parallel and batch consumption, and retries. Diagrams illustrate how Greyhound wraps the Kafka consumer and handles retries by publishing to retry topics. The presenter aims to abstract Kafka away for developers and simplify APIs while adding useful features.

Spark streaming + kafka 0.10

By Joan Viladrosa Riera. Spark Streaming has supported Kafka since its inception, but a lot has changed since then, on both the Spark and Kafka sides, to make this integration more fault-tolerant and reliable. Apache Kafka 0.10 (actually since 0.9) introduced the new Consumer API, built on top of a new group coordination protocol provided by Kafka itself.

So a new Spark Streaming integration comes to the playground, with a design similar to the 0.8 Direct DStream approach. However, there are notable differences in usage, and many exciting new features. In this talk, we will cover the main differences between this new integration and the previous one (for Kafka 0.8), and why Direct DStreams have replaced Receivers for good. We will also see how to achieve different semantics (at-least-once, at-most-once, exactly-once) with code examples.

Finally, we will briefly introduce the usage of this integration in Billy Mobile to ingest and process the continuous stream of events from our AdNetwork.
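
The difference between at-most-once and at-least-once comes down to whether the consumer commits its offset before or after processing a record. The following pure-Python simulation (no Spark or Kafka APIs; function names are hypothetical) crashes a consumer once and restarts it from the last committed offset. Exactly-once additionally requires storing results and offsets atomically, which this sketch does not model.

```python
from typing import List, Optional, Tuple


def run(records: List[str], committed: int, commit_before_processing: bool,
        crash_at: Optional[int] = None) -> Tuple[List[str], int]:
    """Consume `records` from offset `committed`, optionally crashing at `crash_at`.

    Returns the records processed in this run and the committed offset
    that the next run will resume from.
    """
    processed: List[str] = []
    for offset in range(committed, len(records)):
        if commit_before_processing:
            committed = offset + 1             # commit first (at-most-once) ...
            if offset == crash_at:
                return processed, committed    # ... crash: this record is never processed
            processed.append(records[offset])
        else:
            processed.append(records[offset])  # process first (at-least-once) ...
            if offset == crash_at:
                return processed, committed    # ... crash: this record is replayed on restart
            committed = offset + 1
    return processed, committed


def with_restart(records: List[str], commit_before_processing: bool) -> List[str]:
    """Crash once at offset 1, restart from the committed offset, return all output."""
    first, resume_at = run(records, 0, commit_before_processing, crash_at=1)
    second, _ = run(records, resume_at, commit_before_processing)
    return first + second
```

With `commit_before_processing=True`, `with_restart(["a", "b", "c"], True)` yields `["a", "c"]` (record `b` is lost); with `False` it yields `["a", "b", "b", "c"]` (record `b` is processed twice).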

Kafka Connect & Kafka Streams/KSQL - the ecosystem around Kafka

By Guido Schmutz. After a quick overview and introduction of Apache Kafka, this session covers two components that extend the core of Apache Kafka: Kafka Connect and Kafka Streams/KSQL.

Kafka Connect's role is to access data from the outside world and make it available inside Kafka by publishing it into a Kafka topic. Kafka Connect is also responsible for transporting information from inside Kafka to the outside world, which could be a database or a file system. Many connectors for different source and target systems are available out of the box, provided by the community, by Confluent, or by other vendors. You simply configure these connectors and off you go.

Kafka Streams is a lightweight component that extends Kafka with stream processing functionality. With it, Kafka can not only transport events and messages reliably and scalably through the Kafka broker but also analyse and process these events in real time. Interestingly, Kafka Streams does not provide its own cluster infrastructure, and it is also not meant to run on a Kafka cluster. The idea is to run Kafka Streams where it makes sense, which can be inside a “normal” Java application, inside a Web container, or on a more modern containerized (cloud) infrastructure, such as Mesos, Kubernetes or Docker. Kafka Streams has a lot of interesting features, such as reliable state handling, queryable state and much more. KSQL is a streaming engine for Apache Kafka, providing a simple and completely interactive SQL interface for processing data in Kafka.

[Big Data Spain] Apache Spark Streaming + Kafka 0.10: an Integration Story

By Joan Viladrosa Riera. This document provides an overview of Apache Kafka and Apache Spark Streaming and their integration. It discusses what Kafka and Spark Streaming are, how they work, their benefits, and their semantics when used together. It also provides code examples for using the new Kafka integration in Spark 2.0+, including getting metadata, storing offsets in Kafka, and achieving at-most-once, at-least-once, and exactly-once processing semantics. Finally, it shares some insights into how Billy Mobile uses Spark Streaming with Kafka to process large volumes of data.

Kubernetes Networking

By CJ Cullen. The document discusses Kubernetes networking. It describes how Kubernetes networking gives pods routable IPs and lets them communicate without NAT, unlike Docker networking, which uses NAT. It covers how services provide stable virtual IPs to access pods, and how kube-proxy implements services by configuring iptables on nodes. It also discusses DNS integration using SkyDNS and Ingress for layer 7 routing of HTTP traffic. Finally, it briefly mentions network plugins and how Kubernetes is designed to be open and customizable.

Networking in Kubernetes

By Minhan Xia. Overview of the networking requirements of a Kubernetes cluster, service discovery using kubeDNS, load balancing, network plugins, and more extensions.

Apache Kafka - Scalable Message-Processing and more !

By Guido Schmutz. Presentation @ Oracle Code Berlin.

Independent of the source of data, integrating event streams into an Enterprise Architecture is becoming increasingly important in the world of sensors, social media streams, and the Internet of Things. Events have to be accepted quickly and reliably, and they have to be distributed and analysed, often with many consumers or systems interested in all or part of the events. How can we make sure that all these events are accepted and forwarded in an efficient and reliable way? This is where Apache Kafka comes into play: a distributed, highly scalable messaging broker built for exchanging huge amounts of messages between a source and a target. This session will start with an introduction to Apache Kafka and present the role of Apache Kafka in a modern data/information architecture and the advantages it brings to the table.

Apache Kafka - Event Sourcing, Monitoring, Librdkafka, Scaling & Partitioning

By Guido Schmutz. Apache Kafka is a distributed streaming platform. It provides a high-throughput distributed messaging system with publish-subscribe capabilities. The document discusses Kafka producers and consumers, Kafka clients in different programming languages, and important configuration settings for Kafka brokers and topics. It also demonstrates sending messages to Kafka topics from a Java producer and consuming them with the console consumer.

Can Kafka Handle a Lyft Ride? (Andrey Falko & Can Cecen, Lyft) Kafka Summit 2020

By HostedbyConfluent. What does a Kafka administrator need to do if they have a user who demands that message delivery be guaranteed, fast, and low cost? In this talk we walk through the architecture we created to deliver for such users. Learn about the alternatives we considered and the pros and cons of what we came up with.

In this talk, we’ll be forced to dive into broker restart and failure scenarios and the things we need to do to prevent leader elections from slowing down incoming requests. We’ll need to take care of the consumers as well, to ensure that they don’t process the same request twice. We also plan to describe our architecture by showing a demo of simulated requests being produced into Kafka clusters and consumers processing them while we aggressively cause failures on the Kafka clusters.

We hope the audience walks away with a deeper understanding of what it takes to build robust Kafka clients and how to tune them to accomplish stringent delivery guarantees.

Apache Kafka - Scalable Message Processing and more!

By Guido Schmutz. Apache Kafka is a distributed streaming platform for handling real-time data feeds and deriving value from them. It provides a unified, scalable infrastructure for ingesting, processing, and delivering real-time data feeds. Kafka supports high throughput, fault tolerance, and exactly-once delivery semantics.

Scaling big with Apache Kafka

By Nikolay Stoitsev. Apache Kafka sits at the core of modern, scalable event-driven architecture. It’s no longer used only as logging infrastructure, but as a core component in thousands of companies around the world. It has the unique capability to provide a low-latency, fault-tolerant pipeline at scale, which is very important in today’s world of big data. During this talk we’ll see what makes Apache Kafka perfect for the job. We’ll explore how to optimize it for throughput or for durability, and we’ll go over the messaging semantics it provides. Last but not least, we’ll see how Apache Kafka can help us elegantly solve some everyday problems we face when building large-scale systems.

Not Your Mother's Kafka - Deep Dive into Confluent Cloud Infrastructure | Gwe...

By HostedbyConfluent. Confluent Cloud runs a modified version of Apache Kafka, redesigned to be cloud-native and deliver a serverless user experience. In this talk, we will discuss key improvements we've made to Kafka and how they contribute to Confluent Cloud availability, elasticity, and multi-tenancy. You'll learn about innovations that you can use on-prem, and everything you need to make the most of Confluent Cloud.

Building Stream Processing Applications with Apache Kafka's Exactly-Once Proc...

By Matthias J. Sax. This talk was given at the "Big Data Applications" Meetup group (https://www.meetup.com/BigDataApps/).

Abstract:

Kafka 0.11 added a new feature called "exactly-once guarantees". In this talk, we will explain what "exactly-once" means in the context of Kafka and data stream processing, and how it affects application development. The talk will go into some details about exactly-once, namely the new idempotent producer and transactions, and how both can be exploited to simplify application code: for example, you don't need complex deduplication code in your input path, because you can rely on Kafka to deduplicate messages when data is produced by an upstream application. Transactions can be used to write multiple messages into different topics and/or partitions and commit all writes atomically (or abort all writes so that none will be read by a downstream consumer in read-committed mode). Thus, transactions allow for applications with strong consistency guarantees, such as in the financial sector (e.g., either send both a withdrawal and a deposit message to transfer money, or neither). Finally, we talk about Kafka's Streams API, which makes exactly-once stream processing as simple as it can get.
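
A toy model of the idempotent-producer idea: the broker remembers the last sequence number it accepted from each producer and silently drops a retried write it has already appended, so a network retry does not create a duplicate. Real Kafka's bookkeeping is more involved (per-partition state, producer epochs, several in-flight batches); this single-partition Python sketch and its class name are simplifications for illustration only.

```python
from typing import Dict, List


class PartitionLog:
    """Toy single-partition log that deduplicates retried writes."""

    def __init__(self) -> None:
        self.records: List[str] = []
        self.last_seq: Dict[int, int] = {}  # producer id -> last appended sequence

    def append(self, producer_id: int, seq: int, value: str) -> bool:
        """Append `value` unless it is a duplicate; return True if it was written."""
        last = self.last_seq.get(producer_id, -1)
        if seq <= last:
            return False  # retry of a write that already succeeded: drop it
        if seq != last + 1:
            raise ValueError(f"sequence gap: expected {last + 1}, got {seq}")
        self.records.append(value)
        self.last_seq[producer_id] = seq
        return True
```

If the acknowledgement for `("withdraw", seq=0)` is lost and the producer resends it, the second `append` returns `False` and the log still holds the message only once.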

Greyhound - Powerful Pure Functional Kafka Library

Natan Silnitsky

Wix has finally released its Kafka client SDK wrapper, Greyhound, as open source.

It was completely rewritten using the Scala functional library ZIO.

Greyhound harnesses ZIO's sophisticated async and concurrency features together with its easy composability to provide a superior experience to Kafka's own client SDKs.

It offers rich functionality including:

- Trivial setup of message processing parallelisation,

- Various fault tolerant retry policies (for consumers AND producers),

- Easy pluggability of metrics publishing and context propagation, and much more.

This talk will also show how Greyhound is used by Wix developers in more than 1000 event-driven microservices.
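To make the idea of a consumer retry policy concrete, here is a minimal sketch of a blocking retry loop with configurable backoffs and a dead-letter fallback. It is not Greyhound's API (Greyhound is a Scala/ZIO library); `process_with_retries`, the backoff values, and the list standing in for a dead-letter topic are all hypothetical.

```python
import time
from typing import Callable, List, Sequence


def process_with_retries(
    handler: Callable[[str], None],
    message: str,
    backoffs_ms: Sequence[int],
    dead_letters: List[str],
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Run handler, retrying once after each delay in backoffs_ms.

    Returns True on success. If every attempt fails, the message is appended
    to dead_letters (a hypothetical stand-in for a dead-letter topic) and
    False is returned. `sleep` is injectable so tests need not actually wait.
    """
    attempts = len(backoffs_ms) + 1  # first try plus one retry per backoff
    for attempt in range(attempts):
        try:
            handler(message)
            return True
        except Exception:
            if attempt < len(backoffs_ms):
                sleep(backoffs_ms[attempt] / 1000.0)
    dead_letters.append(message)
    return False


# A handler that fails twice with a transient error, then succeeds.
calls: List[str] = []


def flaky(msg: str) -> None:
    calls.append(msg)
    if len(calls) < 3:
        raise RuntimeError("transient failure")


waits: List[float] = []
dlq: List[str] = []
ok = process_with_retries(flaky, "order-1", [100, 500, 2000], dlq, sleep=waits.append)

# A handler that always fails ends up in the dead-letter list.
failed_dlq: List[str] = []
always_ok = process_with_retries(lambda m: 1 / 0, "order-2", [10], failed_dlq, sleep=lambda s: None)
```

A blocking loop like this holds up the partition while it waits; that trade-off is one reason libraries in this space also offer retry strategies that move failed messages aside instead of pausing consumption.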


More from Natan Silnitsky (20)

Async Excellence Unlocking Scalability with Kafka - Devoxx Greece

Natan Silnitsky

How do you scale 4,000 microservices while tackling latency, bottlenecks, and fault tolerance? At Wix, Kafka powers our event-driven architecture with practical patterns that enhance scalability and developer velocity.

This talk explores four key patterns for asynchronous programming:

1. Integration Events: Reduce latency by pre-fetching instead of synchronous calls.

2. Task Queue: Streamline workflows by offloading non-critical tasks.

3. Task Scheduler: Enable precise, scalable scheduling for delayed or recurring tasks.

4. Iterator: Handle long-running jobs in chunks for resilience and scalability.

Learn how to balance benefits and trade-offs, with actionable insights to optimize your own microservices architecture using these proven patterns.
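The Iterator pattern (pattern 4 above) can be sketched as a job that processes one chunk per message and then publishes a continuation message carrying the next cursor. This is an assumption-laden illustration, not Wix's code: the deque stands in for a Kafka topic, and `run_iterator_job` and its parameters are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence


def run_iterator_job(
    items: Sequence[int],
    chunk_size: int,
    process: Callable[[int], None],
) -> int:
    """Process items chunk by chunk; each chunk is triggered by a cursor message.

    The deque is a hypothetical stand-in for a Kafka topic. Handling one
    message processes a single chunk and then "publishes" a message with the
    next cursor. Assumption: in a real deployment the cursor message would be
    committed only after its chunk finishes, so a restart redoes at most one
    chunk instead of the whole job. Returns the number of chunks processed.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    topic: Deque[int] = deque([0])  # initial message: start at cursor 0
    chunks = 0
    while topic:
        cursor = topic.popleft()
        chunk = items[cursor:cursor + chunk_size]
        for item in chunk:
            process(item)
        chunks += 1
        next_cursor = cursor + len(chunk)
        if next_cursor < len(items):
            topic.append(next_cursor)  # continuation message for the next chunk
    return chunks


seen: List[int] = []
n_chunks = run_iterator_job(list(range(10)), 3, seen.append)
```

Because each message does a bounded amount of work, no single handler invocation runs long enough to trip consumer timeouts, and progress survives restarts through the last published cursor.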

Wix Single-Runtime - Conquering the multi-service challenge

Natan Silnitsky

In pursuit of a seamless development experience, Wix has harnessed Nile, a powerful suite of tools that accelerates the creation of platformized microservices. While Nile is highly effective, the growth to thousands of microservices has introduced a few challenges: increasing infrastructure expenses, more complex upgrades, and more inter-service communication.

Enter Wix Single-Runtime, a Server Guild Infra initiative designed to combine the Nile experience with the agility of serverless architectures and the cost-effectiveness of monolithic deployments.

Our solution leverages Kubernetes DaemonSets for highly efficient host deployment, working in harmony with numerous lightweight guest services. We will explore the transformation of our current infrastructure to support multi-tenancy, with a keen focus on SDL (Nile's data layer) and its substantial impact on resource usage.

Attendees will walk away with actionable insights on reducing production costs, simplifying infrastructure management, and fostering innovations in a polyglot environment. Join us as we navigate the triumphs and trials of building a robust, cost-effective development ecosystem.

WeAreDevs - Supercharge Your Developer Journey with Tiny Atomic Habits

Natan Silnitsky

Discover the transformative power of atomic habits in your journey as a developer. Join us in this captivating talk to unlock the secrets of becoming a remarkable developer through small, achievable changes.

In this session, we will delve into the Four Laws of Behavior Change, empowering you to adopt new habits that will propel your coding skills to new heights. Learn effective strategies to enter the coding flow state while minimizing distractions, and master the art of acquiring new tech skills with ease.

Don't miss this opportunity to gain practical insights and actionable takeaways that will revolutionize your development process. Embrace the power of tiny atomic habits and unlock your true potential as a great developer.

Beyond Event Sourcing - Embracing CRUD for Wix Platform - Java.IL

Natan Silnitsky

In software engineering, the right architecture is essential for robust, scalable platforms. Wix has undergone a pivotal shift from event sourcing to a CRUD-based model for its microservices. This talk will chart the course of this journey.

Event sourcing, which records state changes as immutable events, provided robust auditing and "time travel" debugging for Wix Stores' microservices. Despite its benefits, the complexity it introduced in state management slowed development. Wix responded by adopting a simpler, unified CRUD model. This talk will explore the challenges of event sourcing and the advantages of Wix's new "CRUD on steroids" approach, which streamlines API integration and domain event management while preserving data integrity and system resilience.

Participants will gain valuable insights into Wix's strategies for ensuring atomicity in database updates and event production, as well as caching, materialization, and performance optimization techniques within a distributed system.

Join us to discover how Wix has mastered the art of balancing simplicity and extensibility, and learn how the re-adoption of the modest CRUD has turbocharged its development velocity, resilience, and scalability in a high-growth environment.

Effective Strategies for Wix's Scaling challenges - GeeCon

Natan Silnitsky

This session unveils the multifaceted horizontal scaling strategies that power Wix's robust infrastructure. From Kafka consumer scaling and dynamic traffic routing for site segments to DynamoDB sharding and custom routing for MySQL clusters with ProxySQL, we dissect the mechanisms that ensure scalability and performance at Wix.

Attendees will learn about the art of sharding and routing key selection across different systems, and how to apply these strategies to their own infrastructure. We'll share insights into choosing the right scaling strategy for various scenarios, balancing between managed services and custom solutions.

Key Takeaways:

- Grasp various sharding techniques and routing strategies used at Wix.

- Understand key considerations for sharding key and routing rule selection.

- Learn when and why to choose specific horizontal scaling strategies.

- Gain practical knowledge for applying these strategies to achieve scalability and high availability.

Join us to gain a blueprint for scaling your systems horizontally, drawing from Wix's proven practices.
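As a small illustration of sharding-key routing, the sketch below maps a key to a shard with a stable hash, with an optional explicit override table for keys that need special placement. The function name, the override mechanism, and the key format are hypothetical; `zlib.crc32` is used only because it is in Python's standard library (Kafka's Java client, for comparison, uses murmur2 for keyed records).

```python
import zlib
from typing import Dict, Optional


def route(key: str, num_shards: int, overrides: Optional[Dict[str, int]] = None) -> int:
    """Map a sharding key to a shard index (hypothetical helper).

    Explicit overrides win (e.g. pinning a very large tenant to a dedicated
    shard); otherwise a stable hash spreads keys across shards and always
    sends the same key to the same shard, keeping per-key data and ordering
    together.
    """
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    if overrides and key in overrides:
        return overrides[key]
    # crc32 is stable across processes, unlike Python's salted built-in hash().
    return zlib.crc32(key.encode("utf-8")) % num_shards


shard = route("site-123", 8)
pinned = route("big-tenant", 8, overrides={"big-tenant": 7})
```

Plain modulo hashing remaps most keys when `num_shards` changes, so the choice of sharding key and resharding strategy matters as much as the hash itself.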

Taming Distributed Systems: Key Insights from Wix's Large-Scale Experience - ...

Natan Silnitsky

Discover how Wix transitioned from complex event sourcing and CQRS to streamlined CRUD services, optimizing its vast platform for better scalability, performance, and resiliency.

Wix's platform, designed to accommodate diverse business needs, boasts:

* 3.5 billion daily HTTP transactions

* 70 billion Kafka messages per day

* Roughly 4000 microservices in production

This session will highlight the simplification of Wix's architecture through domain events, resilient Kafka messaging, and advanced techniques like materialization and caching. By standardizing APIs and employing tools like protobuf and gRPC, Wix has enhanced the developer experience, both internally and externally, and fostered an open, integrative platform.

Attendees will gain insights into Wix's strategies for microservice coordination, ensuring system resilience and data consistency, as well as query performance optimization through innovative 2-level caching solutions.
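The abstract does not describe how the 2-level caching works, so the following is only a generic sketch of the idea under stated assumptions: a per-instance in-memory cache (L1) with a TTL in front of a shared cache (L2), with the source of truth behind both. `TwoLevelCache`, the TTL value, and the dict standing in for a shared cache are hypothetical; cache invalidation is deliberately left out.

```python
import time
from typing import Callable, Dict, Generic, List, MutableMapping, Tuple, TypeVar

V = TypeVar("V")


class TwoLevelCache(Generic[V]):
    """L1: per-instance dict with a TTL. L2: a shared store (any mutable
    mapping here, standing in for a distributed cache). Misses in both fall
    through to the loader, whose result populates L2 and then L1."""

    def __init__(self, loader: Callable[[str], V], l2: MutableMapping[str, V],
                 ttl_s: float = 60.0, clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader
        self._l2 = l2
        self._ttl_s = ttl_s
        self._clock = clock
        self._l1: Dict[str, Tuple[V, float]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> V:
        now = self._clock()
        hit = self._l1.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]  # fresh L1 hit: no network round-trip
        if key in self._l2:
            value = self._l2[key]  # L2 hit
        else:
            value = self._loader(key)  # miss: query the source of truth
            self._l2[key] = value
        self._l1[key] = (value, now + self._ttl_s)
        return value


loads: List[str] = []


def load_from_db(key: str) -> str:
    loads.append(key)
    return key.upper()


shared: Dict[str, str] = {}
fake_time = [0.0]
cache_a = TwoLevelCache(load_from_db, shared, ttl_s=30.0, clock=lambda: fake_time[0])
cache_b = TwoLevelCache(load_from_db, shared, ttl_s=30.0, clock=lambda: fake_time[0])
first = cache_a.get("site-1")   # miss in L1 and L2: loader runs
second = cache_a.get("site-1")  # L1 hit
third = cache_b.get("site-1")   # another instance: L2 hit, no extra load
fake_time[0] = 31.0
fourth = cache_a.get("site-1")  # L1 entry expired: refreshed from L2
```

The TTL bounds how stale an L1 entry can get; a real system would also need an invalidation path for writes.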

Workflow Engines & Event Streaming Brokers - Can they work together? [Current...

Natan Silnitsky

The document discusses how workflow engines and event streaming brokers can work together. It describes some gaps in using only event streaming at scale for complex business flows and long-running tasks. It then provides an overview of how a workflow engine like Temporal could be integrated to address these gaps, including examples of implementing workflows and activities. Specific opportunities discussed for using Temporal at Wix include long-running processes, cron jobs, and internal microservice tasks.

DevSum - Lessons Learned from 2000 microservices

Natan Silnitsky

Wix has a huge scale of event-driven traffic: more than 70 billion Kafka business events per day.

Over the past few years Wix has made a gradual transition to an event-driven architecture for its 2000 microservices.

We have made mistakes along the way but have improved and learned a lot about how to make sure our production is still maintainable, performant and resilient.

In this talk you will hear about the lessons we learned including:

1. The importance of atomic operations for databases and events

2. Avoiding data consistency issues caused by out-of-order and duplicate processing

3. Having essential event-debugging and quick-fix tools in production

and a few more
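One common way to guard against the duplicate and out-of-order processing mentioned in item 2 is a per-entity version check on the consumer side. This is a sketch of that general technique, not Wix's code; it assumes each event carries a monotonically increasing per-entity `version` and a full state snapshot (not a delta), and `EntityUpdated` and `VersionedProjection` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class EntityUpdated:
    entity_id: str
    version: int            # assumed monotonically increasing per entity
    state: Dict[str, str]   # assumed full snapshot, so older events can be dropped


class VersionedProjection:
    """Keeps the latest state per entity, ignoring duplicates and stale events."""

    def __init__(self) -> None:
        self.state: Dict[str, Dict[str, str]] = {}
        self.versions: Dict[str, int] = {}

    def apply(self, event: EntityUpdated) -> bool:
        """Apply the event unless it is a duplicate or older than the stored version."""
        last = self.versions.get(event.entity_id, 0)
        if event.version <= last:
            return False  # redelivered duplicate or late, out-of-order event
        self.state[event.entity_id] = event.state
        self.versions[event.entity_id] = event.version
        return True


proj = VersionedProjection()
events = [
    EntityUpdated("order-1", 1, {"status": "CREATED"}),
    EntityUpdated("order-1", 3, {"status": "SHIPPED"}),
    EntityUpdated("order-1", 2, {"status": "PAID"}),     # arrives late
    EntityUpdated("order-1", 3, {"status": "SHIPPED"}),  # redelivered
]
applied: List[bool] = [proj.apply(e) for e in events]
```

Dropping the late version-2 event is only safe because every event carries the full state; with delta events, the consumer would instead need to buffer or reject gaps.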

GeeCon - Lessons Learned from 2000 microservices

Natan Silnitsky

Wix has a huge scale of event-driven traffic: more than 70 billion Kafka business events per day.

Over the past few years Wix has made a gradual transition to an event-driven architecture for its 2000 microservices.

We have made mistakes along the way but have improved and learned a lot about how to make sure our production is still maintainable, performant and resilient.

In this talk you will hear about the lessons we learned including:

1. The importance of atomic operations for databases and events

2. Avoiding data consistency issues caused by out-of-order and duplicate processing

3. Having essential event-debugging and quick-fix tools in production

and a few more

Migrating to Multi Cluster Managed Kafka - ApacheKafkaIL

Natan Silnitsky

As Wix's Kafka usage grew to 2.5B messages per day, more than 20K topics, and more than 100K leader partitions serving 2000 microservices, we decided to migrate from a self-operated single cluster per data center to a managed cloud service (like Amazon MSK or Confluent Cloud) with a multi-cluster setup.

The classic approach would be to perform this transition when all incoming traffic is removed from the data center.

But draining an entire data center for an undetermined period of time, until all 2000 services completed the switch, was too risky for us.

This talk is about how we gradually migrated all of our Kafka consumers and producers with zero downtime while they continued to handle regular traffic. You will learn practical steps you can take to greatly reduce the risks and speed up the migration timeline.

Wix+Confluent Meetup - Lessons Learned from 2000 Event Driven Microservices

Natan Silnitsky

Wix has a huge scale of event-driven traffic: more than 70 billion Kafka business events per day.

Over the past few years Wix has made a gradual transition to an event-driven architecture for its 2000 microservices.

We have made mistakes along the way but have improved and learned a lot about how to make sure our production is still maintainable, performant and resilient.

In this talk you will hear about the lessons we learned including:

1. The importance of atomic operations for databases and events

2. Avoiding data consistency issues caused by out-of-order and duplicate processing

3. Having essential event-debugging and quick-fix tools in production

and a few more

BuildStuff - Lessons Learned from 2000 Event Driven Microservices

Natan Silnitsky

Wix has a huge scale of event-driven traffic: more than 70 billion Kafka business events per day.

Over the past few years Wix has made a gradual transition to an event-driven architecture for its 2000 microservices.

We have made mistakes along the way but have improved and learned a lot about how to make sure our production is still maintainable, performant and resilient.

In this talk you will hear about the lessons we learned including:

1. The importance of atomic operations for databases and events

2. Avoiding data consistency issues caused by out-of-order and duplicate processing

3. Having essential event-debugging and quick-fix tools in production

and a few more

Lessons Learned from 2000 Event Driven Microservices - Reversim

Natan Silnitsky

Wix has a huge scale of event-driven traffic: more than 70 billion Kafka business events per day.

Over the past few years Wix has made a gradual transition to an event-driven architecture for its 2000 microservices.

We have made mistakes along the way but have improved and learned a lot about how to make sure our production is still maintainable, performant and resilient.

In this talk you will hear about the lessons we learned including:

1. The importance of atomic operations for databases and events

2. Avoiding data consistency issues caused by out-of-order and duplicate processing

3. Having essential event-debugging and quick-fix tools in production

and a few more

Devoxx Ukraine - Kafka based Global Data Mesh