How to Build your First Neural Network


This document provides an overview of how to build a basic neural network using Keras and TensorFlow. It discusses perceptrons and their limitations, the multilayer perceptron architecture, popular activation functions, and hyperparameters for regression and classification problems. It also covers saving and loading models, data augmentation techniques, and strategies for training deep neural networks.


![Hichem Felouat - hichemfel@gmail.com (slide 16). Classification MLPs. Multiclass classification: we need one output neuron per class, and we should use the softmax activation function for the whole output layer. For example: classes 0 through 9 for digit image classification [28, 28].](https://image.slidesharecdn.com/nn-200927154204/85/How-to-Build-your-First-Neural-Network-16-320.jpg)
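To make the softmax output layer on this slide concrete, here is a minimal plain-Python sketch of what the activation computes for ten output neurons, one per digit class. It is not Keras code, and the `logits` values are made up for illustration; the function name `softmax` and the variable names are assumptions, not taken from the slides.

```python
import math


def softmax(scores):
    """Turn raw output-layer scores (logits) into probabilities that sum to 1."""
    top = max(scores)
    # Subtracting the largest score does not change the result but avoids overflow.
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores from ten output neurons, one per digit class 0-9.
logits = [0.1, 0.3, 2.5, 0.0, -1.2, 0.7, 0.2, -0.5, 1.1, 0.4]
probs = softmax(logits)

# The predicted class is the neuron with the highest probability.
predicted_class = probs.index(max(probs))
```

Because softmax normalizes across the whole layer, the ten outputs can be read as a probability distribution over the classes, which is why it is applied to the output layer as a whole rather than to each neuron separately.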

![Hichem Felouat - hichemfel@gmail.com (slide 21). How to increase your small image dataset: trainAug = ImageDataGenerator(rotation_range=40, width_shift_range=0.2, height_shift_range=0.2, shear_range=0.2, zoom_range=0.2, horizontal_flip=True, fill_mode='nearest'); model.compile(loss="binary_crossentropy", optimizer=opt, metrics=["accuracy"]); H = model.fit_generator(trainAug.flow(trainX, trainY, batch_size=BS), steps_per_epoch=len(trainX) // BS, validation_data=(testX, testY), validation_steps=len(testX) // BS, epochs=EPOCHS)](https://image.slidesharecdn.com/nn-200927154204/85/How-to-Build-your-First-Neural-Network-21-320.jpg)
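The slide's pattern is an endless generator of randomly transformed batches, with `steps_per_epoch` telling training how many batches make one pass over the data. A rough plain-Python sketch of that pattern follows. It is a hypothetical stand-in, not the Keras `ImageDataGenerator` implementation: it applies only a random horizontal flip, and `horizontal_flip`, `augmenting_batches`, and the toy data are invented for illustration.

```python
import random


def horizontal_flip(image):
    """Mirror a 2D image (a list of rows) left to right."""
    return [row[::-1] for row in image]


def augmenting_batches(images, labels, batch_size, seed=0):
    """Yield (batch_images, batch_labels) forever, flipping each image with probability 0.5.

    Hypothetical stand-in for an augmenting data generator; it drops the
    final partial batch so every epoch has len(images) // batch_size steps.
    """
    rng = random.Random(seed)
    indices = list(range(len(images)))
    while True:
        rng.shuffle(indices)
        for start in range(0, len(indices) - batch_size + 1, batch_size):
            batch = indices[start:start + batch_size]
            xs = [horizontal_flip(images[i]) if rng.random() < 0.5 else images[i]
                  for i in batch]
            ys = [labels[i] for i in batch]
            yield xs, ys


# Toy dataset: five 2x2 "images" with binary labels.
train_x = [[[i, i + 1], [i + 2, i + 3]] for i in range(5)]
train_y = [0, 1, 0, 1, 0]
BS = 2

# Same integer division as on the slide: number of full batches per epoch.
steps_per_epoch = len(train_x) // BS

gen = augmenting_batches(train_x, train_y, BS)
first_x, first_y = next(gen)
```

Since the generator never ends on its own, the step count is what marks the end of an epoch. Each epoch sees a differently transformed version of the same small dataset.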



How to Build your First Neural Network

- 1. How to Build your First Neural Network: Keras & TensorFlow. Hichem Felouat, hichemfel@gmail.com

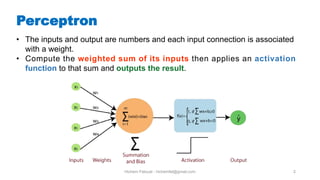

- 2. Perceptron
  - The inputs and the output are numbers, and each input connection is associated with a weight.
  - The perceptron computes the weighted sum of its inputs, applies an activation function to that sum, and outputs the result.

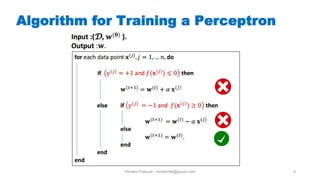

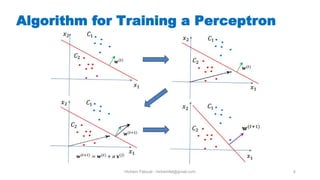
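The perceptron computation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the slide's own code: the step activation, the bias term, and the AND-like weights are assumptions chosen for the example.

```python
def step(z):
    """Heaviside step activation: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def perceptron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical weights that make the unit behave like a logical AND.
and_weights, and_bias = [1.0, 1.0], -1.5
outputs = [perceptron_output([a, b], and_weights, and_bias)
           for a in (0, 1) for b in (0, 1)]
print(outputs)
```

The four outputs correspond to the inputs (0,0), (0,1), (1,0), (1,1) in that order.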

- 3. Algorithm for Training a Perceptron

- 4. Algorithm for Training a Perceptron (continued)
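The algorithm on these two slides is shown as a figure and is not reproduced in the text. As a stand-in, here is a minimal sketch of the classic perceptron learning rule (add `learning_rate * error * input` to each weight after every sample). It may differ in detail from the slide's version; the function names, the OR dataset, and the hyperparameter values are assumptions made for illustration.

```python
def predict(x, w, b):
    """Step activation applied to the weighted sum plus bias."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else 0


def train_perceptron(samples, labels, lr=0.1, epochs=20):
    """Perceptron learning rule: nudge weights by lr * error * input."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(x, w, b)  # -1, 0, or +1
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b


# Logical OR is linearly separable, so the rule converges on it.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y_or = [0, 1, 1, 1]
w, b = train_perceptron(X, y_or)
preds = [predict(x, w, b) for x in X]
print(preds)
```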

- 5. Limitations of the Perceptron: a perceptron can only separate linearly separable classes; it cannot learn a non-linear class boundary. Example: take the XOR problem as a binary classification task. No single line can separate the two classes. Solution:
  - Use two lines instead of one.
  - Use an intermediate layer of neurons in the network.
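To make the "two lines" idea concrete, the sketch below wires up a tiny network by hand: each hidden unit implements one line, and the output unit fires only for points between them. The weights are hand-picked assumptions for illustration, not learned values and not taken from the slides.

```python
def step(z):
    """Heaviside step activation."""
    return 1 if z >= 0 else 0


def xor_mlp(x1, x2):
    # Hidden unit 1 draws the line x1 + x2 = 0.5 (fires for OR).
    h1 = step(x1 + x2 - 0.5)
    # Hidden unit 2 draws the line x1 + x2 = 1.5 (fires for AND).
    h2 = step(x1 + x2 - 1.5)
    # The output fires when the point lies between the two lines.
    return step(h1 - h2 - 0.5)


xor_outputs = [xor_mlp(a, b) for a in (0, 1) for b in (0, 1)]
print(xor_outputs)
```

A single perceptron cannot produce this output pattern, because it only has one line to work with.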

- 6. The Multilayer Perceptron (MLP)
  - The signal flows in one direction only (from the inputs to the outputs), so this architecture is an example of a feedforward neural network (FNN).
  - When an ANN contains a deep stack of hidden layers, it is called a deep neural network (DNN).

- 7. The Multilayer Perceptron (MLP)

- 8. The Multilayer Perceptron (MLP)
  - In 1986, the backpropagation training algorithm was introduced; it is still used today.
  - In just two passes through the network (one forward, one backward), backpropagation computes the gradient of the network's error with regard to every single model parameter. In other words, it finds out how each connection weight and each bias term should be tweaked in order to reduce the error.

  Reference: David Rumelhart et al., "Learning Internal Representations by Error Propagation" (Defense Technical Information Center technical report, September 1985).
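The forward and backward passes can be sketched for a very small network. The example below assumes a 2-input, 2-hidden-unit (sigmoid), 1-output (linear) network with a squared-error loss; the architecture, parameter values, and function names are all assumptions for illustration. It then compares one backpropagated gradient against a finite-difference estimate as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: hidden activations and the linear output."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y_hat = sum(w * hi for w, hi in zip(W2, h)) + b2
    return h, y_hat


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backward(params, x, y):
    """One forward pass, then one backward pass applying the chain rule."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(params, x)
    d_out = y_hat - y                                  # dL/dy_hat
    grad_W2 = [d_out * hi for hi in h]
    grad_b2 = d_out
    # Sigmoid derivative is h * (1 - h).
    d_hidden = [d_out * w * hi * (1 - hi) for w, hi in zip(W2, h)]
    grad_W1 = [[d * xi for xi in x] for d in d_hidden]
    grad_b1 = d_hidden
    return grad_W1, grad_b1, grad_W2, grad_b2


# Hypothetical parameters and a single training example.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.2)
x, y = [1.0, 2.0], 1.0
grad_W1, grad_b1, grad_W2, grad_b2 = backward(params, x, y)

# Finite-difference check on the weight W1[0][1].
eps = 1e-6
W1_plus = [[0.1, -0.2 + eps], [0.4, 0.3]]
W1_minus = [[0.1, -0.2 - eps], [0.4, 0.3]]
numeric = (loss((W1_plus,) + params[1:], x, y)
           - loss((W1_minus,) + params[1:], x, y)) / (2 * eps)
print(grad_W1[0][1], numeric)
```

The two printed numbers should agree closely, which is the usual way to catch mistakes in a hand-written backward pass.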

- 9. Popular Activation Functions for MLPs
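The slide presents the activation functions as a figure only. As an assumption about which ones it covers, here are plain-Python definitions of three that the later slides refer to by name (logistic/sigmoid, tanh, and ReLU).

```python
import math


def sigmoid(z):
    """Logistic function: squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Hyperbolic tangent: squashes z into (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```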

- 10. Neural Network Vocabulary: 1) Cost function 2) Gradient descent 3) Learning rate 4) Backpropagation 5) Batches 6) Epochs

- 11. Regression MLPs
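Most of these terms show up together in a single training loop. The sketch below fits a line to noise-free data with mini-batch gradient descent; the dataset, the mean-squared-error cost, and the hyperparameter values are assumptions chosen so the example stays small. The gradients are written out by hand here, which is the job backpropagation does in a multilayer network.

```python
import random

random.seed(0)

# Toy dataset generated from y = 2x + 1.
xs = [i / 10 for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]
data = list(zip(xs, ys))


def cost(w, b, batch):
    """Cost function: mean squared error over a batch."""
    return sum((w * x + b - y) ** 2 for x, y in batch) / len(batch)


w, b = 0.0, 0.0
learning_rate = 0.1   # size of each gradient descent step
batch_size = 5        # samples used per parameter update
epochs = 200          # full passes over the training data

initial_cost = cost(w, b, data)
for epoch in range(epochs):
    random.shuffle(data)
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        # Gradients of the batch cost with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
        grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
        # Gradient descent update.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
final_cost = cost(w, b, data)
print(w, b, final_cost)
```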

- 12. Regression MLPs
  1) If you want to predict a single value (e.g., the price of a house, given many of its features), you need just a single output neuron: its output is the predicted value.
  2) For multivariate regression (i.e., to predict multiple values at once), you need one output neuron per output dimension. For example, to locate the center of an object in an image, you need to predict 2D coordinates, so you need two output neurons.

- 13. Regression MLPs: typical regression MLP architecture

  | Hyperparameter | Typical value |
  | --- | --- |
  | Input neurons | One per input feature |
  | Hidden layers | Depends on the problem, but typically 1 to 5 |
  | Neurons per hidden layer | Depends on the problem, but typically 10 to 100 |
  | Output neurons | 1 per prediction dimension |
  | Hidden activation | ReLU (`relu`) |
  | Output activation | None, or ReLU/softplus (if positive outputs) or logistic/tanh (if bounded outputs) |
  | Loss function | MSE (`mean_squared_error`) or MAE/Huber (if outliers) |
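The loss-function row is easier to judge with numbers. The sketch below computes MSE, MAE, and the Huber loss on a small batch with one outlier; the data, the `delta=1.0` threshold, and the function names are assumptions for illustration (Keras provides its own implementations).

```python
def mse(y_true, y_pred):
    """Mean squared error: penalizes large errors quadratically."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: penalizes all errors linearly."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def huber(y_true, y_pred, delta=1.0):
    """Huber loss: quadratic for small errors, linear beyond delta."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        e = abs(p - t)
        total += 0.5 * e ** 2 if e <= delta else delta * (e - 0.5 * delta)
    return total / len(y_true)


# The last prediction is an outlier with an error of 10.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 14.0]
print(mse(y_true, y_pred), mae(y_true, y_pred), huber(y_true, y_pred))
```

The single outlier dominates the MSE far more than it does the MAE or Huber loss, which is why the table suggests the latter two when the data contains outliers.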

- 14. Classification MLPs

- 15. Classification MLPs
  - Binary classification: we need just a single output neuron using the logistic activation function. Its output is a number between 0 and 1, which can be read as the estimated probability of the positive class.
  - Multilabel binary classification: we need one output neuron for each positive class. For example, an email classification system could predict whether each incoming email is ham or spam and simultaneously predict whether it is urgent or non-urgent. In this case, you would need two output neurons, both using the logistic activation function.
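A short sketch of the multilabel case: each output neuron gets its own logistic activation and its own decision, so the probabilities need not add up to 1. The raw output values and the 0.5 threshold are assumptions for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


label_names = ["spam", "urgent"]
logits = [2.0, -1.0]  # hypothetical raw outputs of the two output neurons

# Each label is scored independently of the other.
probs = [sigmoid(z) for z in logits]
predicted = {name: int(p >= 0.5) for name, p in zip(label_names, probs)}
print(probs, predicted)
```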

- 16. Classification MLPs, multiclass classification: we need one output neuron per class, and we should use the softmax activation function for the whole output layer. For example: classes 0 through 9 for digit image classification, with input images of shape [28, 28].
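Softmax turns the ten raw outputs into a probability distribution over the classes. Below is a minimal, numerically stable sketch; the logit values are made up for illustration.

```python
import math


def softmax(logits):
    """Exponentiate and normalize; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs of the 10 output neurons (digits 0 through 9).
logits = [0.1, 0.3, 2.5, 0.0, -1.0, 0.2, 0.4, 0.0, 1.1, -0.5]
probs = softmax(logits)
predicted_digit = max(range(len(probs)), key=probs.__getitem__)
print(predicted_digit, round(sum(probs), 6))
```

Unlike the independent sigmoids of the multilabel case, the softmax outputs sum to 1, so exactly one class is favored.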

- 17. Classification MLPs: typical classification MLP architecture

  | Hyperparameter | Binary classification | Multilabel binary classification | Multiclass classification |
  | --- | --- | --- | --- |
  | Input neurons | One per input feature | One per input feature | One per input feature |
  | Hidden layers, neurons per hidden layer | Depends on the problem | Depends on the problem | Depends on the problem |
  | Output neurons | 1 | 1 per label | 1 per class |
  | Hidden activation | ReLU (`relu`) | ReLU (`relu`) | ReLU (`relu`) |
  | Output layer activation | Logistic (`sigmoid`) | Logistic (`sigmoid`) | Softmax (`softmax`) |
  | Loss function | Cross entropy | Cross entropy | Cross entropy |

  In Keras, use `binary_crossentropy` for binary (and multilabel binary) classification. For multiclass classification, use `sparse_categorical_crossentropy` when the labels are integer class indices, or `categorical_crossentropy` when they are one-hot encoded.
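The two cross-entropy variants can be written out for a single sample. This is a sketch of the underlying formulas only, with made-up probabilities; the Keras losses of the same name also average over the batch and clip probabilities for numerical safety.

```python
import math


def binary_crossentropy(y_true, p):
    """Cross entropy for one sigmoid output; y_true is 0 or 1."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


def sparse_categorical_crossentropy(label, probs):
    """Cross entropy for softmax outputs; label is an integer class index."""
    return -math.log(probs[label])


bce = binary_crossentropy(1, 0.5)
scce = sparse_categorical_crossentropy(1, [0.1, 0.7, 0.2])
print(bce, scce)
```

Both losses shrink toward zero as the probability assigned to the correct class approaches 1.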

- 18. Classification MLPs
  - `class_weight`: if the training set is very skewed, with some classes overrepresented and others underrepresented, it is useful to set the `class_weight` argument when calling `fit()`.
  - `sample_weight`: per-instance weights are useful if some instances were labeled by experts while others were labeled using a crowdsourcing platform; you might want to give more weight to the former.
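One common way to choose class weights is the "balanced" heuristic, `n_samples / (n_classes * class_count)`. The slide does not prescribe a formula, so treat this as one reasonable assumption; the helper name and the 90/10 label split are hypothetical. The resulting dictionary has the shape that `fit()` expects for `class_weight` (class index mapped to weight).

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency in the training labels."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count)
            for cls, count in counts.items()}


# Hypothetical skewed training labels: 90 negatives, 10 positives.
labels = [0] * 90 + [1] * 10
class_weight = balanced_class_weights(labels)
print(class_weight)
```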

- 19. Classification MLPs: if you are not satisfied with the performance of your model, go back and tune the hyperparameters.
  - Try another optimizer: Gradient Descent, Momentum Optimization, Nesterov Accelerated Gradient, AdaGrad, RMSProp, Adam, Nadam.
  - Try tuning model hyperparameters such as the number of layers, the number of neurons per layer, and the type of activation function used in each hidden layer.
  - Try tuning other hyperparameters, such as the number of epochs and the batch size.

  Once you are satisfied with your model's validation accuracy, evaluate it on the test set to estimate the generalization error before you deploy the model to production.

- 20. How to Save and Load Your Model: Keras uses the HDF5 format to save both the model's architecture (including every layer's hyperparameters) and the values of all the model parameters for every layer (e.g., connection weights and biases). It also saves the optimizer (including its hyperparameters and any state it may have).
  - Saving the model: `model.save("my_keras_model.h5")`
  - Loading the model: `model = keras.models.load_model("my_keras_model.h5")`

- 21. How to increase your small image dataset: generate randomly transformed copies of the training images with `ImageDataGenerator`, then train on the augmented stream. In TensorFlow 2.x, `model.fit` accepts the generator directly (`fit_generator` is deprecated).
  - `from tensorflow.keras.preprocessing.image import ImageDataGenerator`
  - `trainAug = ImageDataGenerator(rotation_range=40, width_shift_range=0.2, height_shift_range=0.2, shear_range=0.2, zoom_range=0.2, horizontal_flip=True, fill_mode='nearest')`
  - `model.compile(loss="binary_crossentropy", optimizer=opt, metrics=["accuracy"])`
  - `H = model.fit(trainAug.flow(trainX, trainY, batch_size=BS), steps_per_epoch=len(trainX) // BS, validation_data=(testX, testY), epochs=EPOCHS)`
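To show what two of these transformations do to the pixels, here is a plain-Python sketch of a horizontal flip and a width shift with nearest-pixel filling on a tiny grayscale image stored as nested lists. It is an illustration of the idea only, not how `ImageDataGenerator` is implemented; the helper names and the 2x3 image are assumptions.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def shift_right(image, k):
    """Shift columns right by k pixels; vacated pixels copy the nearest edge."""
    width = len(image[0])
    return [[row[min(max(c - k, 0), width - 1)] for c in range(width)]
            for row in image]


image = [[1, 2, 3],
         [4, 5, 6]]
flipped = horizontal_flip(image)
shifted = shift_right(image, 1)
print(flipped, shifted)
```

Each transformed copy keeps the original label, which is how augmentation enlarges a small dataset without new annotation work.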

- 22. Training a Deep Neural Network: training a very large deep neural network can be painfully slow. Here are some ways to speed up training (and reach a better solution):
  - Apply a good initialization strategy for the connection weights.
  - Use a good activation function.
  - Use Batch Normalization.
  - Reuse parts of a pretrained network (possibly built on an auxiliary task or using unsupervised learning).
  - Use a faster optimizer.
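The slide does not name a specific initialization strategy. Two widely used ones are He and Glorot initialization, sketched below in plain Python as an assumption about what "good" might mean here. The He version uses a plain Gaussian for simplicity, and the layer sizes and function names are hypothetical; Keras ships its own initializers.

```python
import math
import random


def he_normal(fan_in, fan_out, rng):
    """He initialization: Gaussian with std sqrt(2 / fan_in), often paired with ReLU."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)]
            for _ in range(fan_in)]


def glorot_uniform(fan_in, fan_out, rng):
    """Glorot initialization: uniform in [-limit, limit], limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]


rng = random.Random(42)
W_he = he_normal(100, 50, rng)          # weights for a 100 -> 50 layer
W_glorot = glorot_uniform(100, 50, rng)
glorot_limit = math.sqrt(6.0 / 150)
print(len(W_he), len(W_he[0]), glorot_limit)
```

Both schemes scale the initial weights to the layer size so that signals neither vanish nor blow up as they pass through many layers.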