High Performance Computing using MPI

- 1. High Performance Computing. Ankit Mahato, fb.com/ankitmahato, IIT Kanpur. Note: These are not the actual lecture slides, but slides you may find useful for IHPC, Techkriti’13.

- 2. Lots at stake !!

- 3. What is HPC? It is the art of developing supercomputers and software to run on supercomputers. A main area of this discipline is developing parallel processing algorithms and software: programs that can be divided into little pieces so that each piece can be executed simultaneously by separate processors.

- 4. Why HPC? To simulate a bio-molecule of 10,000 atoms: the non-bonded energy term needs ~10^8 operations per time step, and 1 microsecond of simulation needs ~10^9 steps, i.e. ~10^17 operations. On a 1 GFLOPS machine (10^9 operations per second) this takes 10^8 s (about 3 years 2 months), and we need to run a large number of simulations for even larger molecules. PARAM Yuva (5 × 10^14 FLOPS): 3 min 20 s. Titan: 5.7 s.
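The arithmetic on this slide can be checked with a few lines of Python. This is a sketch: the operation counts and machine rates are the slide's figures, and the 365.25-day year used for the conversion to years is an assumption.

```python
# Back-of-the-envelope runtime for the bio-molecule simulation on this slide.
OPS_PER_STEP = 10**8                   # non-bonded energy term, per time step
STEPS = 10**9                          # ~1 microsecond of simulated time
TOTAL_OPS = OPS_PER_STEP * STEPS       # ~10^17 operations in total
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: 365.25-day year


def runtime_seconds(flops: float) -> float:
    """Wall-clock time at a sustained rate of `flops` operations per second."""
    return TOTAL_OPS / flops


if __name__ == "__main__":
    t = runtime_seconds(1e9)
    print(f"1 GFLOPS: {t:.0e} s, about {t / SECONDS_PER_YEAR:.2f} years")
    print(f"PARAM Yuva (5e14 FLOPS): {runtime_seconds(5e14):.0f} s")
    # Sustained rate implied by the 5.7 s quoted for Titan
    print(f"Titan: {TOTAL_OPS / 5.7:.2e} FLOPS implied")
```

200 s is the 3 min 20 s quoted for PARAM Yuva, and Titan's 5.7 s corresponds to a sustained rate of roughly 1.75 × 10^16 operations per second.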

- 5. Amdahl's Law. Code = serial part + part that can be parallelized. The potential program speedup is limited by the fraction of the code that can be parallelized, because the serial part takes the same time no matter how many processors are used.
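Amdahl's law is commonly written as below, where P is the fraction of the run time that can be parallelized and N is the number of processors (both symbols are introduced here for illustration). The serial fraction 1 - P caps the speedup no matter how large N gets.

```latex
S(N) = \frac{1}{(1 - P) + \frac{P}{N}}, \qquad
\lim_{N \to \infty} S(N) = \frac{1}{1 - P}

% Example: P = 0.9, N = 4
S(4) = \frac{1}{0.1 + 0.225} \approx 3.08, \qquad
\lim_{N \to \infty} S(N) = \frac{1}{0.1} = 10
```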

- 6. Amdahl's Law. Can you get a speedup of 5 times using a quad-core processor?
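One way to answer the question is to evaluate Amdahl's law, speedup = 1 / ((1 - P) + P / N), for N = 4. The sketch below (amdahl_speedup is a hypothetical helper name) shows that even a fully parallel program (P = 1) reaches at most 4x on four cores, so 5x is out of reach in this model. The model ignores effects such as the larger combined cache of several cores, which can occasionally produce superlinear speedup in practice.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's law: speedup for parallel fraction p on n processors."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / ((1.0 - p) + p / n)


if __name__ == "__main__":
    for p in (0.5, 0.75, 0.95, 1.0):
        print(f"P = {p:.2f}: speedup on 4 cores = {amdahl_speedup(p, 4):.2f}")
```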

- 7. HPC Architecture. An HPC system typically consists of a massive number of computing nodes (typically thousands), highly interconnected by a specialized low-latency network fabric, with MPI used for data exchange. Node = cores + memory. The computation is divided into tasks, the tasks are distributed across the nodes, and the nodes need to synchronize and exchange information several times a second.

- 8. Communication Overheads. Latency: the startup time for each message transaction, about 1 μs. Bandwidth: the rate at which messages are transmitted across the nodes/processors, about 10 Gbit/s. You can’t go faster than these limits.
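A common first-order estimate combines the two limits: time = latency + message size / bandwidth (often called the latency-bandwidth or alpha-beta model; using it here is an assumption, since real networks add contention and protocol overheads). With the slide's figures of 1 μs and 10 Gbit/s, small messages are dominated by latency, which is why sending a few large messages usually beats sending many small ones.

```python
LATENCY_S = 1e-6       # startup time per message: 1 microsecond
BANDWIDTH_BPS = 10e9   # 10 Gbit/s expressed in bits per second


def transfer_time(n_bytes: int, latency: float = LATENCY_S,
                  bandwidth: float = BANDWIDTH_BPS) -> float:
    """Estimated seconds to deliver one message of n_bytes."""
    return latency + (8 * n_bytes) / bandwidth


if __name__ == "__main__":
    print(f"8 B message : {transfer_time(8) * 1e6:.4f} us")
    print(f"1 MB message: {transfer_time(1_000_000) * 1e6:.1f} us")
    # Same 1 MB of data, split into 1000 messages of 1000 bytes each
    print(f"1000 x 1 kB : {1000 * transfer_time(1000) * 1e6:.1f} us")
```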

- 9. MPI. MPI = Message Passing Interface. It is an industry-wide standard for passing messages between parallel processes. MPI is a specification for the developers and users of message-passing libraries: by itself it is NOT a library, but rather the specification of what such a library should be. Small: only six library functions are needed to write a basic parallel program. Large: MPI-2 has more than 200 functions.

- 10. MPI Programming Model. Originally, MPI was designed for distributed-memory architectures, which were becoming increasingly popular at that time. As architecture trends changed, shared-memory SMPs were combined over networks, creating hybrid distributed-memory/shared-memory systems. MPI implementors adapted their libraries to handle both types of underlying memory architecture seamlessly. This means you can use MPI even on your laptop.

- 11. Why MPI? Today, MPI runs on virtually any hardware platform: distributed memory, shared memory, and hybrid. An MPI program is basically a C, Fortran, or Python program that uses the MPI library, SO DON’T BE SCARED.

- 12. MPI

- 13. MPI Communicator: a group of processes that can communicate with one another; the ranks given in the source and destination fields of a message refer to processes within a communicator. The predefined communicator MPI_COMM_WORLD is the default communicator consisting of all processes, and we will be using it all the time. A programmer can also define a new communicator that contains fewer processes than MPI_COMM_WORLD.

- 14. MPI Processes: For this module, we just need to know that processes belong to MPI_COMM_WORLD. If there are p processes, each process is identified by its rank, which runs from 0 to p - 1. The master has rank 0. For example, with 10 processes the ranks are 0 to 9.

- 15. MPI. Use an SSH client (PuTTY) to log in to any of these multi-processor compute servers, which have between 4 and 15 processors: akash1.cc.iitk.ac.in, akash2.cc.iitk.ac.in, aatish.cc.iitk.ac.in, falaq.cc.iitk.ac.in. On your own PC you can install MPICH2 or Open MPI.

- 16. MPI Include Header File. C: #include "mpi.h". Fortran: include 'mpif.h'. Python: from mpi4py import MPI (not available on the CC servers, but you can set it up on your lab workstation).

- 17. MPI. The smallest MPI library should provide these 6 functions: MPI_Init: initializes the MPI execution environment; MPI_Comm_size: determines the size of the group associated with a communicator; MPI_Comm_rank: determines the rank of the calling process in the communicator; MPI_Send: performs a basic send; MPI_Recv: performs a basic receive; MPI_Finalize: terminates the MPI execution environment.

- 18. MPI Format of MPI Calls: C and Python names are case sensitive; Fortran names are not. Example: CALL MPI_XXXXX(parameter,..., ierr) is equivalent to call mpi_xxxxx(parameter,..., ierr). In C and Fortran, programs must not declare variables or functions with names beginning with the prefix MPI_ or PMPI_. In Python, ‘from mpi4py import MPI’ keeps all MPI names inside the MPI namespace, so this mistake cannot happen.

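- 19. MPI Return codes. C: rc = MPI_Xxxxx(parameter, ...); the error code is returned as "rc", MPI_SUCCESS if successful. Fortran: CALL MPI_XXXXX(parameter,..., ierr); the error code is returned in the "ierr" parameter, MPI_SUCCESS if successful. Python: calls are methods, e.g. rc = MPI.COMM_WORLD.xxxx(parameter,…), where rc is the method's result rather than an error code; mpi4py reports errors by raising an MPI.Exception.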

- 20. MPI MPI_Init: Initializes the MPI execution environment. This function must be called in every MPI program, before any other MPI function, and only once. For C programs, MPI_Init may be used to pass the command-line arguments to all processes, although this is not required by the standard and is implementation dependent. C: MPI_Init(&argc, &argv). Fortran: MPI_INIT(ierr). In Python no explicit initialization is required.

- 21. MPI MPI_Comm_size: Returns the total number of MPI processes in the specified communicator, such as MPI_COMM_WORLD. If the communicator is MPI_COMM_WORLD, this is the number of MPI tasks available to your application. C: MPI_Comm_size(comm, &size). Fortran: MPI_COMM_SIZE(comm, size, ierr), where comm is MPI_COMM_WORLD. In Python, MPI.COMM_WORLD.size is the total number of MPI processes; MPI.COMM_WORLD.Get_size() returns the same value.

- 22. MPI MPI_Comm_rank: Returns the rank of the calling MPI process within the specified communicator. Initially, each process is assigned a unique integer rank between 0 and (number of tasks - 1) within the communicator MPI_COMM_WORLD. This rank is often referred to as a task ID. If a process becomes associated with other communicators, it has a unique rank within each of them as well. C: MPI_Comm_rank(comm, &rank). Fortran: MPI_COMM_RANK(comm, rank, ierr), where comm is MPI_COMM_WORLD. In Python, MPI.COMM_WORLD.rank is the rank of the calling process; MPI.COMM_WORLD.Get_rank() returns the same value.

- 24. MPI MPI Hello World Program: https://gist.github.com/4459911

- 25. MPI In MPI, blocking message-passing routines are the most commonly used. A blocking MPI call suspends program execution until the message buffer is safe to use. The MPI standard specifies that a blocking SEND or RECV does not return until the send buffer is safe to reuse (for MPI_SEND) or the receive buffer is ready to use (for MPI_RECV). The statement after MPI_SEND can safely modify the memory location of the array a, because the return from MPI_SEND indicates either that the send has completed successfully or that the buffer containing a has been copied to a safe place. In either case, a's buffer can be safely reused. Likewise, the return of MPI_RECV indicates that the buffer containing the array b is full and ready to use, so the code after MPI_RECV can safely use b.

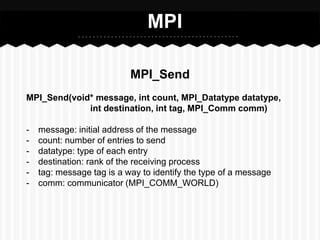

- 26. MPI MPI_Send: Basic blocking send operation. The routine returns only after the application buffer in the sending task is free for reuse. Note that this routine may be implemented differently on different systems: the MPI standard permits the use of a system buffer but does not require it. C: MPI_Send(&buf, count, datatype, dest, tag, comm). Fortran: MPI_SEND(buf, count, datatype, dest, tag, comm, ierr). Python: comm.send(buf, dest, tag).

- 27. MPI MPI_Send: MPI_Send(void* message, int count, MPI_Datatype datatype, int destination, int tag, MPI_Comm comm)
      - message: initial address of the message
      - count: number of entries to send
      - datatype: type of each entry
      - destination: rank of the receiving process
      - tag: message tag, a way to identify the type of a message
      - comm: communicator (MPI_COMM_WORLD)

- 29. MPI MPI_Recv: Receive a message and block until the requested data is available in the application buffer of the receiving task. C: MPI_Recv(&buf, count, datatype, source, tag, comm, &status). Fortran: MPI_RECV(buf, count, datatype, source, tag, comm, status, ierr). Full C prototype: MPI_Recv(void* message, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status), where the other parameters are as for MPI_Send and:
      - source: rank of the sending process
      - status: return status (for example, the actual source and tag of the received message)

- 30. MPI MPI_Finalize: Terminates the MPI execution environment. Note: all processes must call this routine before exiting. The number of processes running after this routine is called is undefined; it is best not to do anything more than return after calling MPI_Finalize.

- 31. MPI MPI Hello World 2: This MPI program illustrates the use of the MPI_Send and MPI_Recv functions. The master (rank 0) sends the message “Hello, world” to the process with rank 1, which, after receiving it, prints the message along with its rank. https://gist.github.com/4459944

- 32. MPI Collective communication: Collective communication is communication that must involve all processes in the scope of a communicator. We will be using MPI_COMM_WORLD as our communicator, so collective communication will include all processes.

- 33. MPI MPI_Barrier(comm): This function creates a barrier synchronization in a communicator (MPI_COMM_WORLD). Each task waits at the MPI_Barrier call until all other tasks in the communicator reach the same MPI_Barrier call.

- 34. MPI MPI_Bcast(&message, int count, MPI_Datatype datatype, int root, comm): This function broadcasts (copies) the message from the process whose rank is root to all other processes in MPI_COMM_WORLD.

- 35. MPI MPI_Reduce(&message, &receivemessage, int count, MPI_Datatype datatype, MPI_Op op, int root, comm): This function applies a reduction operation across all tasks in MPI_COMM_WORLD, combining the values from each process into a single result on the root process. Examples of MPI_Op are MPI_MAX, MPI_MIN, MPI_PROD, and MPI_SUM.

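- 36. MPI MPI_Scatter(&message, int count, MPI_Datatype, &receivemessage, int count, MPI_Datatype, int root, comm) distributes consecutive chunks of the root's message buffer to all processes, one chunk per process. MPI_Gather(&message, int count, MPI_Datatype, &receivemessage, int count, MPI_Datatype, int root, comm) does the reverse: it collects a chunk from every process into the root's receive buffer, ordered by rank.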

- 37. MPI Pi Code: https://gist.github.com/4460013

- 38. Thank You. Ankit Mahato, fb.com/ankitmahato, IIT Kanpur

- 39. Check out our G+ Page: https://plus.google.com/105183351397774464774/posts

- 40. Join our community: share your feelings after you run your first MPI code, and have discussions. https://plus.google.com/communities/105106273529839635622