Implement Runtime Environments for HSA using LLVM


* Transition to heterogeneous - mobile phone to data centre
* LLVM and HSA
* HSA driven computing environment


![OpenCL Framework overview

Host Program

int main(int argc, char **argv)

{

...

clBuildProgram(program, ...);

clCreateKernel(program, "dot", ...);

...

}

OpenCL Kernels

__kernel void dot(__global const float4 *a,

__global const float4 *b,

__global float4 *c)

{

int tid = get_global_id(0);

c[tid] = a[tid] * b[tid];

}

Application

Separate programs into host-side and kernel-side code fragments

OpenCL Framework

OpenCL Runtime

Runtime APIs

Platform APIs

OpenCL Compiler

Compiler

• Compiles OpenCL C language just-in-time

Runtime

• Allows the host program to manipulate context

Front-end

Front-end

Back-end

Back-end

Platform

MPU

GPU

MPU : host, kernel program

GPU : kernel program

Source: OpenCL_for_Halifux.pdf, OpenCL overview, Intel Visual Adrenaline](https://image.slidesharecdn.com/hsa-llvm-140123185623-phpapp02/85/Implement-Runtime-Environments-for-HSA-using-LLVM-13-320.jpg)

![Supporting OpenCL

• Syntax parsing by compiler

– qualifier

– vector

– built-in function

– Optimizations on single core

__kernel void add( __global float4 *a,

__global float4 *b,

__global float4 *c)

{

int gid = get_global_id(0);

float4 data = (float4) (1.0, 2.0, 3.0, 4.0);

c[gid] = a[gid] + b[gid] + data;

}

• Runtime implementation

– Handle multi-core issues

– Cooperate with the device vendor

Platform APIs

MPU

Runtime APIs

GPU](https://image.slidesharecdn.com/hsa-llvm-140123185623-phpapp02/85/Implement-Runtime-Environments-for-HSA-using-LLVM-15-320.jpg)
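The three language features the slide lists for the compiler (address-space qualifiers, vector types, built-in functions) can be made concrete by emulating the `add` kernel on the host. The following is only a sketch in plain C++, not an OpenCL implementation: the `float4` struct, the `operator+` overload, and the `add_work_item` and `run_add_kernel` helpers are hypothetical stand-ins introduced for illustration, and the sequential loop ignores the multi-core scheduling that a real runtime would handle.

```cpp
#include <cstddef>

// Hypothetical host-side stand-in for OpenCL C's built-in float4 vector type.
struct float4 {
    float x, y, z, w;
};

// OpenCL C applies `+` to vectors component-wise; this overload mimics that.
inline float4 operator+(const float4 &p, const float4 &q)
{
    return float4{p.x + q.x, p.y + q.y, p.z + q.z, p.w + q.w};
}

// Body of the `add` kernel for a single work-item.
// `gid` plays the role of get_global_id(0); the __global qualifiers
// become ordinary pointers because the host has one address space.
inline void add_work_item(std::size_t gid, const float4 *a, const float4 *b, float4 *c)
{
    const float4 data{1.0f, 2.0f, 3.0f, 4.0f};
    c[gid] = a[gid] + b[gid] + data;
}

// Hypothetical sequential "runtime": visits every work-item of a 1-D range in order.
inline void run_add_kernel(std::size_t global_size, const float4 *a, const float4 *b, float4 *c)
{
    for (std::size_t gid = 0; gid < global_size; ++gid)
        add_work_item(gid, a, b, c);
}
```

Calling `run_add_kernel(n, a, b, c)` on three arrays of `n` elements fills `c` the way an `n`-item one-dimensional launch of the kernel would. Because each work-item writes only its own `c[gid]`, the iterations are independent, which is what lets a runtime spread them across cores.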

![HSAIL example

ld_kernarg_u64 $d0, [%_out];

ld_kernarg_u64 $d1, [%_in];

sqrt((float (i*i + (i+1)*(i+1) + (i+2)*(i+2)))

@block0:

workitemabsid_u32 $s2, 0;

cvt_s64_s32 $d2, $s2;

mad_u64 $d3, $d2, 8, $d1;

ld_global_u64 $d3, [$d3]; // pointer

ld_global_f32 $s0, [$d3+0]; // x

ld_global_f32 $s1, [$d3+4]; // y

ld_global_f32 $s2, [$d3+8]; // z

mul_f32 $s0, $s0, $s0; // x*x

mul_f32 $s1, $s1, $s1; // y*y

add_f32 $s0, $s0, $s1; // x*x + y*y

mul_f32 $s2, $s2, $s2; // z*z

add_f32 $s0, $s0, $s2; // x*x + y*y + z*z

sqrt_f32 $s0, $s0;

mad_u64 $d4, $d2, 4, $d0;

st_global_f32 $s0, [$d4];

ret;](https://image.slidesharecdn.com/hsa-llvm-140123185623-phpapp02/85/Implement-Runtime-Environments-for-HSA-using-LLVM-36-320.jpg)
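Read instruction by instruction, the HSAIL listing loads a pointer from `in` at an 8-byte stride, reads three floats at byte offsets 0, 4 and 8, and stores the square root of the sum of their squares to `out` at a 4-byte stride. A host-side C++ reference for that behaviour might look like the sketch below. The `Vec3` struct and the function names are assumptions made for illustration; the slide does not show the source that produced the listing, and the 8-byte stride corresponds to 64-bit pointers.

```cpp
#include <cmath>
#include <cstddef>

// Hypothetical record layout implied by the loads at byte offsets 0, 4 and 8.
struct Vec3 {
    float x, y, z;
};

// One work-item of the listing; `id` stands for the value of workitemabsid.
inline void length_work_item(std::size_t id, float *out, const Vec3 *const *in)
{
    const Vec3 *p = in[id];      // mad_u64 (stride 8) + ld_global_u64: fetch the pointer
    float sum = p->x * p->x;     // mul_f32 $s0: x*x
    sum += p->y * p->y;          // mul_f32 $s1, add_f32: x*x + y*y
    sum += p->z * p->z;          // mul_f32 $s2, add_f32: x*x + y*y + z*z
    out[id] = std::sqrt(sum);    // sqrt_f32, mad_u64 (stride 4), st_global_f32
}

// Hypothetical sequential driver: runs the work-items of a 1-D grid one after another.
inline void run_length_kernel(std::size_t n, float *out, const Vec3 *const *in)
{
    for (std::size_t id = 0; id < n; ++id)
        length_work_item(id, out, in);
}
```

The order of the multiplications and additions follows the listing, so the floating-point rounding should match a device that executes the same instruction sequence without fusing operations.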

Ad

Recommended

Embedded Virtualization applied in Mobile Devices

Embedded Virtualization applied in Mobile DevicesNational Cheng Kung University The key problematic instructions for virtualization on ARM are those that change processor state or mode, access privileged resources, or cause unpredictable behavior when executed in user mode. These must be trapped and emulated by the virtual machine monitor.

L4 Microkernel :: Design Overview

L4 Microkernel :: Design OverviewNational Cheng Kung University (1) Myths of Microkernel

(2) Characteristics of 2nd generation microkernel

(3) Toward 3rd generation microkernel

Embedded Hypervisor for ARM

Embedded Hypervisor for ARMNational Cheng Kung University Agenda:

(1) Virtualization from The Past

(2) Hypervisor Design

(3) Embedded Hypervisors for ARM

(4) Toward ARM Cortex-A15

Construct an Efficient and Secure Microkernel for IoT

Construct an Efficient and Secure Microkernel for IoTNational Cheng Kung University The promise of the IoT won’t be fulfilled until integrated

software platforms are available that allow software

developers to develop these devices efficiently and in

the most cost-effective manner possible.

This presentation introduces F9 microkernel, new open source

implementation built from scratch, which deploys

modern kernel techniques dedicated to deeply

embedded devices.

F9: A Secure and Efficient Microkernel Built for Deeply Embedded Systems

F9: A Secure and Efficient Microkernel Built for Deeply Embedded SystemsNational Cheng Kung University F9 is a new open source microkernel designed for deeply embedded systems like IoT devices. It aims to provide efficiency, security, and a flexible development environment. F9 follows microkernel principles with minimal kernel functionality and isolates components as user-level processes. It uses capabilities for access control and focuses on performance through techniques like tickless scheduling and adaptive power management.

Unix v6 Internals

Unix v6 InternalsNational Cheng Kung University UNIX v6 Internals: Background knowledge, C programming language, system call, interrupt/trap, PDP-11, scheduler / timer, etc.

From L3 to seL4: What have we learnt in 20 years of L4 microkernels

From L3 to seL4: What have we learnt in 20 years of L4 microkernelsmicrokerneldude The document summarizes learnings from 20 years of L4 microkernel development. It discusses how early L4 designs focused on performance through techniques like lazy scheduling and direct process switching, but modern kernels emphasize principles like minimality and formal verification. Minimality has driven designs to become simpler over time, with policies separated from mechanisms and drivers moved to user-space. While IPC speed remains important, verification shows it need not compromise performance. Capabilities have also proven effective for access control compared to early process hierarchies. Overall, microkernels remain valuable if guided by core principles demonstrated over L4's evolution.

Microkernel Evolution

Microkernel EvolutionNational Cheng Kung University This presentation covers 3 Generations of Microkernels: Mach, L4, seL4/Fiasco.OC/NOVA.

In addition, the real use cases are included.

Shorten Device Boot Time for Automotive IVI and Navigation Systems

Shorten Device Boot Time for Automotive IVI and Navigation SystemsNational Cheng Kung University Propose a practical approach of the mixture of

ARM hibernation (suspend to disk) and Linux

user-space checkpointing

– to shorten device boot time

Faults inside System Software

Faults inside System SoftwareNational Cheng Kung University Faults inside system software were analyzed, with a focus on diagnosing faults in device drivers. Approaches to deal with faulty drivers included runtime isolation and static analysis. Runtime isolation involves running each driver in a separate process or virtual machine to isolate failures. Static analysis techniques inspect source code for issues like concurrency errors, protocol violations, and invalid register values without needing to execute the code. The talk provided statistics on driver faults, discussed the Linux driver model and common bug causes, and outlined techniques like instrumentation and specification-based development to improve driver correctness and security.

Android Virtualization: Opportunity and Organization

Android Virtualization: Opportunity and OrganizationNational Cheng Kung University Give you an overview about

– device virtualization on ARM

– Benefit and real products

– Android specific virtualization consideration

– doing virtualization in several approaches

Hints for L4 Microkernel

Hints for L4 MicrokernelNational Cheng Kung University Introduce L4 microkernel principles and concepts and Understand the real world usage for microkernels

A tour of F9 microkernel and BitSec hypervisor

A tour of F9 microkernel and BitSec hypervisorLouie Lu A brief tour about F9 microkernel and BitSec hypervisor

This slide won't covering all aspect about them, but to focus on some point in these two kernel.

F9 microkernel repo: https://github.com/f9micro/f9-kernel

Impress template from: http://technology.chtsai.org/impress/

olibc: Another C Library optimized for Embedded Linux

olibc: Another C Library optimized for Embedded LinuxNational Cheng Kung University http://olibc.so/

– Review C library characteristics

– Toolchain optimizations

– Build configurable runtime

– Performance evaluation

Embedded Virtualization for Mobile Devices

Embedded Virtualization for Mobile DevicesNational Cheng Kung University Goals of This Presentation: an overview about

- virtualization in general

- device virtualization on ARM

- doing virtualization in several approaches

Develop Your Own Operating Systems using Cheap ARM Boards

Develop Your Own Operating Systems using Cheap ARM BoardsNational Cheng Kung University * Know the reasons why various operating systems exist and how they are functioned for dedicated purposes

* Understand the basic concepts while building system software from scratch

• How can we benefit from cheap ARM boards and the related open source tools?

- Raspberry Pi & STM32F4-Discovery

Explore Android Internals

Explore Android InternalsNational Cheng Kung University (0) Concepts

(1) Binder: heart of Android

(2) Binder Internals

(3) System Services

(4) Frameworks

Making Linux do Hard Real-time

Making Linux do Hard Real-timeNational Cheng Kung University This document discusses making Linux capable of hard real-time performance. It begins by defining hard and soft real-time systems and explaining that real-time does not necessarily mean fast but rather determinism. It then covers general concepts around real-time performance in Linux like preemption, interrupts, context switching, and scheduling. Specific features in Linux like RT-Preempt, priority inheritance, and threaded interrupts that improve real-time capabilities are also summarized.

Xvisor: embedded and lightweight hypervisor

Xvisor: embedded and lightweight hypervisorNational Cheng Kung University Xvisor is an open source lightweight hypervisor for ARM architectures. It uses a technique called cpatch to modify guest operating system binaries, replacing privileged instructions with hypercalls. This allows the guest OS to run without privileges in user mode under the hypervisor. Xvisor also implements virtual CPU and memory management to isolate guest instances and virtualize physical resources for multiple operating systems.

Plan 9: Not (Only) A Better UNIX

Plan 9: Not (Only) A Better UNIXNational Cheng Kung University Plan 9 was an operating system designed in the 1980s by Bell Labs as a distributed successor to Unix. It treated all system resources, including files, devices, processes and network connections, as files that could be accessed through a single universal file system interface. Plan 9 assumed a network of reliable file servers and CPU servers with personal workstations accessing aggregated remote resources through a high-speed network. It aimed to "build a UNIX out of little systems" rather than integrating separate systems.

Microkernel-based operating system development

Microkernel-based operating system developmentSenko Rašić The document discusses microkernel-based operating system development. It describes how a microkernel has minimal functionality and moves drivers and services to user-level processes that communicate through inter-process communication calls. This can impact performance. Mainstream systems now take a hybrid approach. The document then describes the L4 microkernel and its implementation, Hasenpfeffer, which maximizes reuse of open source components. It lists components and features of the Hasenpfeffer system, including programming languages, drivers, and tools for development.

Priority Inversion on Mars

Priority Inversion on MarsNational Cheng Kung University The Mars Pathfinder mission successfully demonstrated new landing techniques and returned valuable data from the Martian surface. However, it experienced issues with priority inversion in its VxWorks real-time operating system. The lower priority weather data collection task would occasionally prevent the higher priority communication task from completing before the next cycle began, resetting the system. Engineers traced the problem to the use of VxWorks' select() call to wait for I/O from multiple devices, allowing long-running lower priority tasks to block critical higher priority tasks.

Introduction to Microkernels

Introduction to MicrokernelsVasily Sartakov This document provides an introduction to microkernel-based operating systems using the Fiasco.OC microkernel as an example. It outlines the key concepts of microkernels, including using a minimal kernel to provide mechanisms like threads and address spaces while implementing operating system services like filesystems and networking in user-level servers. It describes the objects and capabilities model of the Fiasco.OC microkernel and how it implements threads and inter-process communication. It also discusses how the L4 runtime environment builds further services on top of the microkernel to provide a full operating system environment.

seL4 intro

seL4 intromicrokerneldude Introduction to principles, concepts and mechanisms of the seL4 microkernel. Overview of the kernel API and programming examples

Android Optimization: Myth and Reality

Android Optimization: Myth and RealityNational Cheng Kung University This presentation emphasizes on the myths beyond Android system optimizations and the fact about Android evolution.

Gnu linux for safety related systems

Gnu linux for safety related systemsDTQ4 This document discusses using GNU/Linux for safety-related systems. It introduces GNU/Linux and its development process using tools like git. It also discusses kernel development tools like git, cscope, sparse and tools for testing like gcov and gprof. Finally, it discusses safety standards like IEC 61508 and requirements for the highest safety integrity level like those in EN 50128, including requirements for modular design, coding standards, testing and configuration management. The goal is to determine if GNU/Linux is suitable for safety-critical applications.

Understanding the Dalvik Virtual Machine

Understanding the Dalvik Virtual MachineNational Cheng Kung University (1) Understand how a virtual machine works

(2) Analyze the Dalvik VM using existing tools

(3) VM hacking is really interesting!

Learn C Programming Language by Using GDB

Learn C Programming Language by Using GDBNational Cheng Kung University This document discusses using GDB to relearn C programming. It provides background on using GDB to debug a simple embedded Ajax system called eServ. Key steps outlined include downloading and compiling eServ, using basic GDB commands like run, break, list, and next to observe the program's execution and set breakpoints. The goal is to analyze the system and gain skills in UNIX system programming development.

Lecture notice about Embedded Operating System Design and Implementation

Lecture notice about Embedded Operating System Design and ImplementationNational Cheng Kung University Spring 2014, National Cheng Kung University, Taiwan

Making Linux do Hard Real-time

Making Linux do Hard Real-timeNational Cheng Kung University This presentation covers the general concepts about real-time systems, how Linux kernel works for preemption, the latency in Linux, rt-preempt, and Xenomai, the real-time extension as the dual kernel approach.

Ad

More Related Content

What's hot (20)

Shorten Device Boot Time for Automotive IVI and Navigation Systems

Shorten Device Boot Time for Automotive IVI and Navigation SystemsNational Cheng Kung University Propose a practical approach of the mixture of

ARM hibernation (suspend to disk) and Linux

user-space checkpointing

– to shorten device boot time

Faults inside System Software

Faults inside System SoftwareNational Cheng Kung University Faults inside system software were analyzed, with a focus on diagnosing faults in device drivers. Approaches to deal with faulty drivers included runtime isolation and static analysis. Runtime isolation involves running each driver in a separate process or virtual machine to isolate failures. Static analysis techniques inspect source code for issues like concurrency errors, protocol violations, and invalid register values without needing to execute the code. The talk provided statistics on driver faults, discussed the Linux driver model and common bug causes, and outlined techniques like instrumentation and specification-based development to improve driver correctness and security.

Android Virtualization: Opportunity and Organization

Android Virtualization: Opportunity and OrganizationNational Cheng Kung University Give you an overview about

– device virtualization on ARM

– Benefit and real products

– Android specific virtualization consideration

– doing virtualization in several approaches

Hints for L4 Microkernel

Hints for L4 MicrokernelNational Cheng Kung University Introduce L4 microkernel principles and concepts and Understand the real world usage for microkernels

A tour of F9 microkernel and BitSec hypervisor

A tour of F9 microkernel and BitSec hypervisorLouie Lu A brief tour about F9 microkernel and BitSec hypervisor

This slide won't covering all aspect about them, but to focus on some point in these two kernel.

F9 microkernel repo: https://github.com/f9micro/f9-kernel

Impress template from: http://technology.chtsai.org/impress/

olibc: Another C Library optimized for Embedded Linux

olibc: Another C Library optimized for Embedded LinuxNational Cheng Kung University http://olibc.so/

– Review C library characteristics

– Toolchain optimizations

– Build configurable runtime

– Performance evaluation

Embedded Virtualization for Mobile Devices

Embedded Virtualization for Mobile DevicesNational Cheng Kung University Goals of This Presentation: an overview about

- virtualization in general

- device virtualization on ARM

- doing virtualization in several approaches

Develop Your Own Operating Systems using Cheap ARM Boards

Develop Your Own Operating Systems using Cheap ARM BoardsNational Cheng Kung University * Know the reasons why various operating systems exist and how they are functioned for dedicated purposes

* Understand the basic concepts while building system software from scratch

• How can we benefit from cheap ARM boards and the related open source tools?

- Raspberry Pi & STM32F4-Discovery

Explore Android Internals

Explore Android InternalsNational Cheng Kung University (0) Concepts

(1) Binder: heart of Android

(2) Binder Internals

(3) System Services

(4) Frameworks

Making Linux do Hard Real-time

Making Linux do Hard Real-timeNational Cheng Kung University This document discusses making Linux capable of hard real-time performance. It begins by defining hard and soft real-time systems and explaining that real-time does not necessarily mean fast but rather determinism. It then covers general concepts around real-time performance in Linux like preemption, interrupts, context switching, and scheduling. Specific features in Linux like RT-Preempt, priority inheritance, and threaded interrupts that improve real-time capabilities are also summarized.

Xvisor: embedded and lightweight hypervisor

Xvisor: embedded and lightweight hypervisorNational Cheng Kung University Xvisor is an open source lightweight hypervisor for ARM architectures. It uses a technique called cpatch to modify guest operating system binaries, replacing privileged instructions with hypercalls. This allows the guest OS to run without privileges in user mode under the hypervisor. Xvisor also implements virtual CPU and memory management to isolate guest instances and virtualize physical resources for multiple operating systems.

Plan 9: Not (Only) A Better UNIX

Plan 9: Not (Only) A Better UNIXNational Cheng Kung University Plan 9 was an operating system designed in the 1980s by Bell Labs as a distributed successor to Unix. It treated all system resources, including files, devices, processes and network connections, as files that could be accessed through a single universal file system interface. Plan 9 assumed a network of reliable file servers and CPU servers with personal workstations accessing aggregated remote resources through a high-speed network. It aimed to "build a UNIX out of little systems" rather than integrating separate systems.

Microkernel-based operating system development

Microkernel-based operating system developmentSenko Rašić The document discusses microkernel-based operating system development. It describes how a microkernel has minimal functionality and moves drivers and services to user-level processes that communicate through inter-process communication calls. This can impact performance. Mainstream systems now take a hybrid approach. The document then describes the L4 microkernel and its implementation, Hasenpfeffer, which maximizes reuse of open source components. It lists components and features of the Hasenpfeffer system, including programming languages, drivers, and tools for development.

Priority Inversion on Mars

Priority Inversion on MarsNational Cheng Kung University The Mars Pathfinder mission successfully demonstrated new landing techniques and returned valuable data from the Martian surface. However, it experienced issues with priority inversion in its VxWorks real-time operating system. The lower priority weather data collection task would occasionally prevent the higher priority communication task from completing before the next cycle began, resetting the system. Engineers traced the problem to the use of VxWorks' select() call to wait for I/O from multiple devices, allowing long-running lower priority tasks to block critical higher priority tasks.

Introduction to Microkernels

Introduction to MicrokernelsVasily Sartakov This document provides an introduction to microkernel-based operating systems using the Fiasco.OC microkernel as an example. It outlines the key concepts of microkernels, including using a minimal kernel to provide mechanisms like threads and address spaces while implementing operating system services like filesystems and networking in user-level servers. It describes the objects and capabilities model of the Fiasco.OC microkernel and how it implements threads and inter-process communication. It also discusses how the L4 runtime environment builds further services on top of the microkernel to provide a full operating system environment.

seL4 intro

seL4 intromicrokerneldude Introduction to principles, concepts and mechanisms of the seL4 microkernel. Overview of the kernel API and programming examples

Android Optimization: Myth and Reality

Android Optimization: Myth and RealityNational Cheng Kung University This presentation emphasizes on the myths beyond Android system optimizations and the fact about Android evolution.

Gnu linux for safety related systems

Gnu linux for safety related systemsDTQ4 This document discusses using GNU/Linux for safety-related systems. It introduces GNU/Linux and its development process using tools like git. It also discusses kernel development tools like git, cscope, sparse and tools for testing like gcov and gprof. Finally, it discusses safety standards like IEC 61508 and requirements for the highest safety integrity level like those in EN 50128, including requirements for modular design, coding standards, testing and configuration management. The goal is to determine if GNU/Linux is suitable for safety-critical applications.

Understanding the Dalvik Virtual Machine

Understanding the Dalvik Virtual MachineNational Cheng Kung University (1) Understand how a virtual machine works

(2) Analyze the Dalvik VM using existing tools

(3) VM hacking is really interesting!

Learn C Programming Language by Using GDB

Learn C Programming Language by Using GDBNational Cheng Kung University This document discusses using GDB to relearn C programming. It provides background on using GDB to debug a simple embedded Ajax system called eServ. Key steps outlined include downloading and compiling eServ, using basic GDB commands like run, break, list, and next to observe the program's execution and set breakpoints. The goal is to analyze the system and gain skills in UNIX system programming development.

Viewers also liked (9)

Lecture notice about Embedded Operating System Design and Implementation

Lecture notice about Embedded Operating System Design and ImplementationNational Cheng Kung University Spring 2014, National Cheng Kung University, Taiwan

Making Linux do Hard Real-time

Making Linux do Hard Real-timeNational Cheng Kung University This presentation covers the general concepts about real-time systems, how Linux kernel works for preemption, the latency in Linux, rt-preempt, and Xenomai, the real-time extension as the dual kernel approach.

中輟生談教育: 完全用開放原始碼軟體進行 嵌入式系統教學

中輟生談教育: 完全用開放原始碼軟體進行 嵌入式系統教學National Cheng Kung University 我曾經是個對高等教育徹底失望的人, 連大學都沒唸完,但工作十年後 , 重返學校教書、再學習:

* 想在台灣南部建立新的事業

* 讓工程師能夠兼顧生活與工作的品質

→ 從基礎的底子開始打起

→ 直接在學校培養日後的工程人員

→ 著墨於「基礎建設」 , 將資訊技術作多方應用

How A Compiler Works: GNU Toolchain

How A Compiler Works: GNU ToolchainNational Cheng Kung University * 理解從 C 程式原始碼到二進制的過程,從而成為

電腦的主人

* 觀察 GNU Toolchain 的運作並探討其原理

* 引導不具備編譯器背景知識的聽眾,得以對編譯

器最佳化技術有概念

* 選定一小部份 ARM 和 MIPS 組合語言作為示範

給自己更好未來的 3 個練習:嵌入式作業系統設計、實做,與移植 (2015 年春季 ) 課程說明

給自己更好未來的 3 個練習:嵌入式作業系統設計、實做,與移植 (2015 年春季 ) 課程說明National Cheng Kung University 「哥教的不是知識,是 guts !」

GUTS: 與其死板傳授片面的知識,還不如讓學生有

能力、有勇氣面對資訊科技產業的種種挑戰

進階嵌入式系統開發與實做 (2014 年秋季 ) 課程說明

進階嵌入式系統開發與實做 (2014 年秋季 ) 課程說明National Cheng Kung University 三大主軸

– ARM 組織和結構

– 作業系統界面和設計

– 系統效能、可靠度和安全議題

從線上售票看作業系統設計議題

從線上售票看作業系統設計議題National Cheng Kung University 本簡報大量引用網路討論,試著從阿妹、江蕙演唱會前,大量網友無法透過線上購票系統順利購票的狀況,以系統設計的觀點,去分析這些線上購票系統出了哪些問題

Virtual Machine Constructions for Dummies

Virtual Machine Constructions for DummiesNational Cheng Kung University Build a full-functioned virtual machine from scratch, when Brainfuck is used. Basic concepts about interpreter, optimizations techniques, language specialization, and platform specific tweaks.

PyPy's approach to construct domain-specific language runtime

PyPy's approach to construct domain-specific language runtimeNational Cheng Kung University PyPy takes a tracing just-in-time (JIT) compilation approach to optimize Python programs. It works by first interpreting the program, then tracing hot loops and optimizing their performance by compiling them to machine code. This JIT compilation generates and runs optimized trace trees representing the control flow and operations within loops. If guards placed in the compiled code fail, indicating the optimization may no longer apply, execution falls back to the interpreter or recompiles the trace with additional information. PyPy's approach aims to optimize the most common execution paths of Python programs for high performance while still supporting Python's dynamic nature.

Lecture notice about Embedded Operating System Design and Implementation

Lecture notice about Embedded Operating System Design and ImplementationNational Cheng Kung University

Ad

Similar to Implement Runtime Environments for HSA using LLVM (20)

Petapath HP Cast 12 - Programming for High Performance Accelerated Systems

Petapath HP Cast 12 - Programming for High Performance Accelerated Systemsdairsie Presentation given at HP-CAST 12, Tutorial Session in Madrid, May 2009 on Software Environments for Accelerators.

Large Scale Computing Infrastructure - Nautilus

Large Scale Computing Infrastructure - NautilusGabriele Di Bernardo This document proposes transforming parallel runtimes into operating system kernels to improve performance and scalability. It discusses how modern runtimes run on top of general purpose OSes and are limited by the kernel abstraction. A hybrid runtime integrated with a small prototype kernel called Nautilus could run entirely in kernel mode without syscall overhead and access advanced hardware features. It describes porting the Legion runtime to Nautilus and shows improved performance from reduced interrupts in initial evaluations. The conclusion is that parallel runtimes can be transformed into kernels to easily leverage kernel resources while sometimes a full kernel is not needed.

LCU13: GPGPU on ARM Experience Report

LCU13: GPGPU on ARM Experience ReportLinaro Resource: LCU13

Name: GPGPU on ARM Experience Report

Date: 30-10-2013

Speaker: Tom Gall

Video: http://www.youtube.com/watch?v=57PrMlF17gQ

Neptune @ SoCal

Neptune @ SoCalChris Bunch These slides are from my talk about the Neptune domain specific language, given at SoCal Spring 2011 at Harvey Mudd College.

OS for AI: Elastic Microservices & the Next Gen of ML

OS for AI: Elastic Microservices & the Next Gen of MLNordic APIs AI has been a hot topic lately, with advances being made constantly in what is possible, there has not been as much discussion of the infrastructure and scaling challenges that come with it. How do you support dozens of different languages and frameworks, and make them interoperate invisibly? How do you scale to run abstract code from thousands of different developers, simultaneously and elastically, while maintaining less than 15ms of overhead?

At Algorithmia, we’ve built, deployed, and scaled thousands of algorithms and machine learning models, using every kind of framework (from scikit-learn to tensorflow). We’ve seen many of the challenges faced in this area, and in this talk I’ll share some insights into the problems you’re likely to face, and how to approach solving them.

In brief, we’ll examine the need for, and implementations of, a complete “Operating System for AI” – a common interface for different algorithms to be used and combined, and a general architecture for serverless machine learning which is discoverable, versioned, scalable and sharable.

OpenPOWER Acceleration of HPCC Systems

OpenPOWER Acceleration of HPCC SystemsHPCC Systems JT Kellington, IBM and Allan Cantle, Nallatech present at the 2015 HPCC Systems Engineering Summit Community Day about porting HPCC Systems to the POWER8-based ppc64el architecture.

HSA Features

HSA FeaturesHen-Jung Wu HSA is a new heterogeneous programming model, created for lowering the learning curve of heterogeneous. This slide shares you the advanced features and HSA.

Disclaimer: Unless otherwise noted, the content of this course material is licensed under a Creative Commons Attribution 3.0 License.

You assume all responsibility for use and potential liability associated with any use of the material.

Implement Runtime Environments for HSA using LLVM

- 1. Implement Runtime Environments for HSA using LLVM. Jim Huang (黃敬群), Oct 17, 2013 / ITRI, Taiwan

- 2. About this presentation
  - Transition to heterogeneous: mobile phone to data centre
  - LLVM and HSA
  - HSA driven computing environment

- 5. Herb Sutter’s new outlook (http://herbsutter.com/welcome-to-the-jungle/)

  “In the twilight of Moore’s Law, the transitions to multicore processors, GPU computing, and HaaS cloud computing are not separate trends, but aspects of a single trend – mainstream computers from desktops to ‘smartphones’ are being permanently transformed into heterogeneous supercomputer clusters. Henceforth, a single compute-intensive application will need to harness different kinds of cores, in immense numbers, to get its job done.”

  “The free lunch is over. Now welcome to the hardware jungle.”

- 6. Four causes of heterogeneity
  - Multiple types of programmable core
    - CPU (lightweight, heavyweight)
    - GPU
    - Accelerators and application-specific cores
  - Interconnect asymmetry
  - Memory hierarchies
  - Demands of service-oriented software

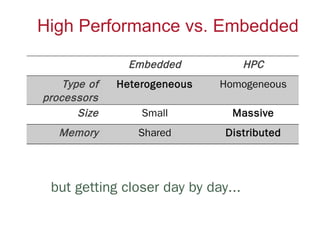

- 7. High Performance vs. Embedded

  | | Embedded | HPC |
  |---|---|---|
  | Type of processors | Heterogeneous | Homogeneous |
  | Size | Small | Massive |
  | Memory | Shared | Distributed |

  ...but getting closer day by day.

- 8. Heterogeneity is mainstream
  - Dual-core ARM 1.4GHz, ARMv7s CPU
  - Quad-core ARM Cortex A9 CPU
  - Quad-core SGX543MP4+ Imagination GPU
  - Triple-core SGX554MP4 Imagination GPU

  Most tablets and smartphones are already powered by heterogeneous processors.

- 9. Source: Heterogeneous Computing in ARM

- 11. Current limitations
  - Disjoint view of memory spaces between CPUs and GPUs
  - Hard partition between “host” and “devices” in programming models
  - Dynamically varying nested parallelism almost impossible to support
  - Large overheads in scheduling heterogeneous, parallel tasks

- 13. OpenCL Framework overview
  - Application: programs are separated into host-side and kernel-side code fragments.

    Host program:

        int main(int argc, char **argv)
        {
            ...
            clBuildProgram(program, ...);
            clCreateKernel(program, "dot"...);
            ...
        }

    OpenCL kernels:

        __kernel void dot(__global const float4 *a,
                          __global const float4 *b,
                          __global float4 *c)
        {
            int tid = get_global_id(0);
            c[tid] = a[tid] * b[tid];
        }

  - OpenCL Framework
    - OpenCL Runtime (Runtime APIs, Platform APIs): allows the host program to manipulate the context
    - OpenCL Compiler (front-ends, back-ends): compiles the OpenCL C language just-in-time
  - Platform
    - MPU: host, kernel program
    - GPU: kernel program

  Source: OpenCL_for_Halifux.pdf, OpenCL overview, Intel Visual Adrenaline

- 14. OpenCL Execution Scenario (diagram)
  - Data-level parallelism: kernel A { code 1, code 2, code 3 } executes as A:1,2,3 on each of data(0) to data(5).
  - Task-level parallelism: the code fragments are placed in separate kernels, kernel A { code 1 }, kernel B { code 2 } and kernel C { code 3 }, and the OpenCL runtime dispatches A:1, B:2, C:3.

- 15. Supporting OpenCL
  - Syntax parsing by compiler
    - qualifier
    - vector
    - built-in function
    - Optimizations on single core
  - Runtime implementation
    - handle multi-core issues
    - Co-work with device vendor

  Example kernel:

      __kernel void add(__global float4 *a,
                        __global float4 *b,
                        __global float4 *c)
      {
          int gid = get_global_id(0);
          float4 data = (float4) (1.0, 2.0, 3.0, 4.0);
          c[gid] = a[gid] + b[gid] + data;
      }

  Diagram labels: Platform APIs, Runtime APIs, MPU, GPU
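To make the split between compiler and runtime concrete, the `add` kernel from slide 15 can be emulated in plain C. This is only a sketch under stated assumptions: `float4_t`, `float4_add`, `add_work_item` and `run_add_kernel` are hypothetical names that are not part of OpenCL, and the loop runs work-items one after another, whereas a real runtime is free to run them in parallel across cores.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in for OpenCL's float4 vector type (hypothetical helper type). */
typedef struct { float x, y, z, w; } float4_t;

/* Component-wise addition, which OpenCL C provides as the built-in `+` on float4. */
static float4_t float4_add(float4_t p, float4_t q)
{
    float4_t r = { p.x + q.x, p.y + q.y, p.z + q.z, p.w + q.w };
    return r;
}

/* Body of the `add` kernel for one work-item; gid plays the role of get_global_id(0). */
static void add_work_item(size_t gid, const float4_t *a, const float4_t *b, float4_t *c)
{
    const float4_t data = { 1.0f, 2.0f, 3.0f, 4.0f };
    c[gid] = float4_add(float4_add(a[gid], b[gid]), data);
}

/* Sequential emulation of a 1-D index space; a real runtime may run these in parallel. */
static void run_add_kernel(size_t global_size, const float4_t *a, const float4_t *b, float4_t *c)
{
    assert(a != NULL && b != NULL && c != NULL);
    for (size_t gid = 0; gid < global_size; ++gid)
        add_work_item(gid, a, b, c);
}
```

The vector type and the built-in arithmetic are what the compiler has to understand, while the outer loop over `gid` is the part that the runtime distributes over the available cores.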

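- 16. LLVM-based compilation toolchain (diagram)
  - Front ends produce Module 1 … Module N, which opt & link combine into a linked module.
  - If profiling is requested, lli runs the linked module on the input data and produces profile info for estimation.
  - Partitioning & mapping splits the result into Module 1 … Module M, and Back end 1 … Back end M generate Exe 1 … Exe M.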

- 18. HSA overview
  - Open architecture specification
  - HSAIL virtual (parallel) instruction set
  - HSA memory model
  - HSA dispatcher and run-time
  - Provides an optimized platform architecture for heterogeneous programming models such as OpenCL, C++AMP, et al

- 20. Heterogeneous Programming
  - Unified virtual address space for all cores
    - CPU and GPU
    - Distributed arrays
  - Hardware queues per core with lightweight user mode task dispatch
    - Enables GPU context switching, preemption, efficient heterogeneous scheduling
  - First class barrier objects
    - Aids parallel program composability

- 21. HSA Intermediate Language (HSAIL)
  - Virtual ISA for parallel programs
  - Similar to LLVM IR and OpenCL SPIR
  - Finalised to a specific ISA by a JIT compiler
  - Makes late decisions on which core should run a task
  - Features:
    - Explicitly parallel
    - Support for exceptions, virtual functions and other high-level features
    - syscall methods (I/O, printf etc.)
    - Debugging support

- 22. HSA stack for Android (conceptual)

- 23. HSA memory model
  - Compatible with C++11, OpenCL, Java and .NET memory models
  - Relaxed consistency
  - Designed to support both managed languages (such as Java) and unmanaged languages (such as C)
  - Will make it much easier to develop 3rd party compilers for a wide range of heterogeneous products
    - E.g. Fortran, C++, C++AMP, Java et al

- 24. HSA dispatch
  - HSA designed to enable heterogeneous task queuing
    - A work queue per core (CPU, GPU, …)
    - Distribution of work into queues
    - Load balancing by work stealing
  - Any core can schedule work for any other, including itself
  - Significant reduction in overhead of scheduling work for a core
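The queue-per-core scheme with work stealing from slide 24 can be sketched as follows. This is a single-threaded illustration under stated assumptions, not HSA runtime code: `work_queue`, `queue_push`, `queue_pop_newest`, `queue_steal_oldest` and `next_task` are hypothetical names, tasks are plain integers, and a real implementation would need atomic operations or locks because owners and thieves run concurrently.

```c
#include <assert.h>
#include <stddef.h>

#define QUEUE_CAPACITY 16

/* One work queue per core; tasks are plain integer ids in this sketch. */
typedef struct {
    int tasks[QUEUE_CAPACITY];
    size_t head;   /* index of the oldest task (thieves steal here) */
    size_t count;  /* number of queued tasks */
} work_queue;

/* Any core may enqueue work on any queue, including its own. Returns 0 on success. */
static int queue_push(work_queue *q, int task)
{
    assert(q != NULL);
    if (q->count == QUEUE_CAPACITY)
        return -1;
    q->tasks[(q->head + q->count) % QUEUE_CAPACITY] = task;
    q->count++;
    return 0;
}

/* The owner takes the newest task from the tail of its own queue. */
static int queue_pop_newest(work_queue *q, int *task)
{
    if (q->count == 0)
        return -1;
    q->count--;
    *task = q->tasks[(q->head + q->count) % QUEUE_CAPACITY];
    return 0;
}

/* A thief takes the oldest task from the head of a victim's queue. */
static int queue_steal_oldest(work_queue *q, int *task)
{
    if (q->count == 0)
        return -1;
    *task = q->tasks[q->head];
    q->head = (q->head + 1) % QUEUE_CAPACITY;
    q->count--;
    return 0;
}

/* Fetch work for core `self`: own queue first, then steal from the other cores. */
static int next_task(work_queue *queues, size_t ncores, size_t self, int *task)
{
    assert(queues != NULL && task != NULL && self < ncores);
    if (queue_pop_newest(&queues[self], task) == 0)
        return 0;
    for (size_t i = 1; i < ncores; ++i) {
        size_t victim = (self + i) % ncores;
        if (queue_steal_oldest(&queues[victim], task) == 0)
            return 0;
    }
    return -1;  /* no work anywhere */
}
```

Taking from opposite ends is a common design choice: the owner keeps working on its most recent tasks, while an idle core takes the oldest pending one, so load balances without a central scheduler.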

- 25. Today’s Command and Dispatch Flow (diagram)
  - Command flow: Application A → Direct3D → User Mode Driver → Soft Queue → Kernel Mode Driver → Hardware Queue → GPU hardware
  - Data flow: Command Buffer → DMA Buffer

- 26. Today’s Command and Dispatch Flow (diagram, three applications)
  - Applications A, B and C each have their own Direct3D, User Mode Driver, Soft Queue, Command Buffer, Kernel Mode Driver and DMA Buffer path.
  - Work from A, B and C is interleaved in the single hardware queue that feeds the GPU hardware.

- 27. HSA Dispatch Flow
  - Software view
    - User-mode dispatch to hardware
    - No kernel mode driver overhead
    - Low dispatch latency
  - Hardware view
    - HW / microcode controlled
    - HW scheduling
    - HW-managed protection
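User-mode dispatch, as listed on slide 27, amounts to the application writing a packet into queue memory that the hardware reads directly, with no kernel-mode driver call on the submission path. The sketch below models that idea with a ring buffer. It is an assumption-laden illustration rather than the HSA runtime API: `dispatch_packet`, `user_queue`, `user_queue_dispatch` and `device_fetch` are hypothetical names, the packet layout is invented, and the device side is emulated by an ordinary function.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RING_SIZE 8u  /* power of two so indices wrap with a mask */

/* Hypothetical dispatch packet: which kernel to run and over how many work-items. */
typedef struct {
    uint32_t kernel_id;
    uint32_t grid_size;
} dispatch_packet;

/* Queue memory shared between the application and the (emulated) device. */
typedef struct {
    dispatch_packet ring[RING_SIZE];
    uint64_t write_index;  /* advanced by the application */
    uint64_t read_index;   /* advanced by the device */
} user_queue;

/* Application side: enqueue with plain memory writes, no driver call. */
static int user_queue_dispatch(user_queue *q, dispatch_packet pkt)
{
    assert(q != NULL);
    if (q->write_index - q->read_index == RING_SIZE)
        return -1;  /* queue full */
    q->ring[q->write_index & (RING_SIZE - 1)] = pkt;
    q->write_index++;  /* stands in for notifying the hardware */
    return 0;
}

/* Device side: consume the next packet if one is pending. */
static int device_fetch(user_queue *q, dispatch_packet *out)
{
    assert(q != NULL && out != NULL);
    if (q->read_index == q->write_index)
        return -1;  /* queue empty */
    *out = q->ring[q->read_index & (RING_SIZE - 1)];
    q->read_index++;
    return 0;
}
```

Because submission is a handful of stores into memory the application already owns, dispatch latency no longer includes a transition into the kernel, which is the contrast with the driver-mediated flow on slides 25 and 26.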

- 28. HSA enabled dispatch Application / Runtime CPU1 CPU2 GPU

- 29. HSA Memory Model
  - Compatible with C++11/Java/.NET memory models
  - Relaxed consistency memory model
  - Loads and stores can be re-ordered by the finalizer
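Under a relaxed consistency model, ordinary loads and stores may be reordered, so code that hands data from one agent to another has to mark its synchronization points explicitly. The sketch below uses C11 atomics, whose memory model matches the C++11 one that slide 29 names, to show the usual release/acquire pairing. The `mailbox` type and its functions are hypothetical names chosen for illustration, and the example says nothing about how an HSA finalizer implements these orderings.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* Shared between a producer and a consumer, e.g. two threads or agents. */
typedef struct {
    int payload;        /* ordinary, non-atomic data */
    atomic_bool ready;  /* synchronization flag */
} mailbox;

/* Producer: the release store keeps the payload write from moving after the flag. */
static void mailbox_publish(mailbox *m, int value)
{
    assert(m != NULL);
    m->payload = value;
    atomic_store_explicit(&m->ready, true, memory_order_release);
}

/* Consumer: the acquire load keeps the payload read from moving before the flag. */
static bool mailbox_try_read(mailbox *m, int *out)
{
    assert(m != NULL && out != NULL);
    if (!atomic_load_explicit(&m->ready, memory_order_acquire))
        return false;
    *out = m->payload;
    return true;
}
```

Only the flag carries ordering constraints; every other access stays free to be reordered, which is what leaves a compiler or finalizer room to optimize.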

- 30. Data Flow of HSA

- 31. HSAIL
  - Intermediate language for parallel compute in HSA
    - Generated by a high level compiler (LLVM, gcc, Java VM, etc)
    - Compiled down to GPU ISA or parallel ISA by the finalizer
    - Finalizer may execute at run time, install time or build time
  - Low level instruction set designed for parallel compute in a shared virtual memory environment
  - Designed for fast compile time, moving most optimizations to the high-level compiler
    - Limited register set avoids full register allocation in finalizer

- 32. GPU based Languages
  - Dynamic Language for exploration of heterogeneous parallel runtimes
  - LLVM-based compilation
    - Java, Scala, JavaScript, OpenMP
  - Project Sumatra: GPU Acceleration for Java in OpenJDK
  - ThorScript

- 33. Accelerating Java Source: HSA, Phil Rogers

- 34. Open Source software stack for HSA

  A Linux execution and compilation stack is open-sourced by AMD:
  - Jump start the ecosystem
  - Allow a single shared implementation where appropriate
  - Enable university research in all areas

  | Component Name | Purpose |
  |---|---|
  | HSA Bolt Library | Enable understanding and debug |
  | OpenCL HSAIL Code Generator | Enable research |
  | LLVM Contributions | Industry and academic collaboration |
  | HSA Assembler | Enable understanding and debug |
  | HSA Runtime | Standardize on a single runtime |
  | HSA Finalizer | Enable research and debug |
  | HSA Kernel Driver | For inclusion in Linux distros |

- 35. Open Source Tools
  - Hosted at GitHub: https://github.com/HSAFoundation
  - HSAIL-Tools: Assembler/Disassembler
  - Instruction Set Simulator
  - HSA ISS Loader Library for Java and C++ for creation and dispatch of HSAIL kernels

- 36. HSAIL example

  Source expression annotated on the slide: sqrt((float (i*i + (i+1)*(i+1) + (i+2)*(i+2)))

      ld_kernarg_u64 $d0, [%_out];
      ld_kernarg_u64 $d1, [%_in];
      @block0:
      workitemabsid_u32 $s2, 0;
      cvt_s64_s32 $d2, $s2;
      mad_u64 $d3, $d2, 8, $d1;
      ld_global_u64 $d3, [$d3];      // pointer
      ld_global_f32 $s0, [$d3+0];    // x
      ld_global_f32 $s1, [$d3+4];    // y
      ld_global_f32 $s2, [$d3+8];    // z
      mul_f32 $s0, $s0, $s0;         // x*x
      mul_f32 $s1, $s1, $s1;         // y*y
      add_f32 $s0, $s0, $s1;         // x*x + y*y
      mul_f32 $s2, $s2, $s2;         // z*z
      add_f32 $s0, $s0, $s2;         // x*x + y*y + z*z
      sqrt_f32 $s0, $s0;
      mad_u64 $d4, $d2, 4, $d0;
      st_global_f32 $s0, [$d4];
      ret;

- 37. Conclusions
  - Heterogeneity is an increasingly important trend
  - The market is finally starting to create and adopt the necessary open standards
  - HSA should enable much more dynamically heterogeneous nested parallel programs and programming models

- 38. Reference
  - Heterogeneous Computing in ARM (2013)
  - Project Sumatra: GPU Acceleration for Java in OpenJDK, http://openjdk.java.net/projects/sumatra/
  - Heterogeneous System Architecture, Phil Rogers