HTML5 game dev with three.js - HexGL


These are the slides from my talk on HexGL at the Adobe User Group meetup in the Netherlands. More info: http://bkcore.com/blog/general/adobe-user-group-nl-talk-video-hexgl.html


    // Allocate all particles once, up front, so nothing is created per frame.
    var pool = [];
    var geometry = new THREE.Geometry();
    geometry.dynamic = true; // vertices and colors change every frame

    for (var i = 0; i < 1000; ++i) {
        var p = new bkcore.Particle();
        pool.push(p);
        // The geometry holds references to each particle's position and color.
        geometry.vertices.push(p.position);
        geometry.colors.push(p.color);
    }

    // Particle physics
    var p = pool[i];
    p.position.addSelf(p.velocity);
    //…
    // Flag the geometry so the changed vertices and colors are re-uploaded.
    geometry.verticesNeedUpdate = true;
    geometry.colorsNeedUpdate = true;
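The pooling idea behind these two slides can be sketched without three.js. The following is a minimal illustration, not HexGL's actual code: `Particle` here is a hypothetical stand-in for `bkcore.Particle` (whose source is not shown in the slides), and the `life` counter, `emit` and `update` are assumptions added to show how slots might be recycled.

```javascript
// Hypothetical stand-in for bkcore.Particle (its real fields are not shown in the slides).
function Particle() {
  this.position = { x: 0, y: 0, z: 0 };
  this.velocity = { x: 0, y: 0, z: 0 };
  this.life = 0; // remaining frames; 0 means the slot is free (assumption)
}

// Allocate every particle once, up front.
function createPool(size) {
  var pool = [];
  for (var i = 0; i < size; ++i) pool.push(new Particle());
  return pool;
}

// Reuse the first free particle instead of allocating a new one.
// Returns null when every slot is in use.
function emit(pool, x, y, z, vx, vy, vz, life) {
  for (var i = 0; i < pool.length; ++i) {
    var p = pool[i];
    if (p.life <= 0) {
      p.position.x = x; p.position.y = y; p.position.z = z;
      p.velocity.x = vx; p.velocity.y = vy; p.velocity.z = vz;
      p.life = life;
      return p;
    }
  }
  return null;
}

// Advance live particles by one frame; returns how many are still alive.
function update(pool) {
  var alive = 0;
  for (var i = 0; i < pool.length; ++i) {
    var p = pool[i];
    if (p.life <= 0) continue; // free slot, nothing to simulate
    p.position.x += p.velocity.x;
    p.position.y += p.velocity.y;
    p.position.z += p.velocity.z;
    p.life -= 1;
    if (p.life > 0) ++alive;
  }
  return alive;
}
```

Because the position objects are mutated in place rather than replaced, a geometry that stored references to them at start-up would keep pointing at the right data; only the "needs update" flags have to be set each frame.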

    var hexvignette = {
        uniforms: {
            tDiffuse: { type: "t", value: 0, texture: null },
            tHex: { type: "t", value: 1, texture: null },
            size: { type: "f", value: 512.0 },
            color: { type: "c", value: new THREE.Color(0x458ab1) }
        },
        // One GLSL statement per array entry, joined with newlines.
        fragmentShader: [
            "uniform float size;",
            "uniform vec3 color;",
            "uniform sampler2D tDiffuse;",
            "uniform sampler2D tHex;",
            "varying vec2 vUv;",
            "void main() { ... }"
        ].join("\n")
    };
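A shader definition like this keeps two lists in sync by hand: the `uniform` declarations in the GLSL source and the keys of the JavaScript `uniforms` object. As a rough sketch, a small check along the following lines could catch a mismatch before the shader is compiled. The helper names (`buildShaderSource`, `declaredUniforms`, `missingUniforms`) are hypothetical and not part of three.js, and the regular expression only handles simple one-line declarations.

```javascript
// Join one-statement-per-line GLSL into a single source string.
function buildShaderSource(lines) {
  return lines.join("\n");
}

// Collect names declared as `uniform <type> <name>;` at the start of a line.
function declaredUniforms(source) {
  var names = [];
  var re = /^\s*uniform\s+\w+\s+(\w+)\s*;/gm;
  var match;
  while ((match = re.exec(source)) !== null) names.push(match[1]);
  return names;
}

// Names declared in GLSL that have no entry in the JavaScript uniforms object.
function missingUniforms(source, uniforms) {
  return declaredUniforms(source).filter(function (name) {
    return !Object.prototype.hasOwnProperty.call(uniforms, name);
  });
}

// The declarations from the slide; the body of main() is elided there and stays elided here.
var source = buildShaderSource([
  "uniform float size;",
  "uniform vec3 color;",
  "uniform sampler2D tDiffuse;",
  "uniform sampler2D tHex;",
  "varying vec2 vUv;",
  "void main() { ... }"
]);

// A uniforms object that forgot `color` (placeholder values, for illustration only).
var incomplete = { tDiffuse: {}, tHex: {}, size: {} };
var missing = missingUniforms(source, incomplete); // ["color"]
```

Joining with a real newline also keeps each declaration on its own line, so line numbers in any shader compile error map back to array entries.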

    var effectHex = new THREE.ShaderPass(hexvignette);
    effectHex.uniforms['size'].value = 512.0;
    effectHex.uniforms['tHex'].texture = hexTexture;
    composer.addPass(effectHex);
    //…
    // Only the last pass in the chain should draw to the screen.
    effectHex.renderToScreen = true;
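To make the role of `renderToScreen` concrete, here is a toy model of a pass chain in plain JavaScript. It is a deliberately simplified sketch, not the three.js `EffectComposer` implementation: `ToyComposer` and `makePass` are hypothetical names, and an "image" is just an array of numbers.

```javascript
// Toy stand-in for a post-processing composer (not the three.js implementation).
function ToyComposer() {
  this.passes = [];
}

ToyComposer.prototype.addPass = function (pass) {
  this.passes.push(pass);
};

// Run every pass in order; each pass reads the previous pass's output.
// Only a pass flagged renderToScreen delivers its result to the "screen".
ToyComposer.prototype.render = function (input) {
  var buffer = input;
  var screen = null;
  for (var i = 0; i < this.passes.length; ++i) {
    var pass = this.passes[i];
    buffer = pass.apply(buffer);
    if (pass.renderToScreen) screen = buffer;
  }
  return screen;
};

// Wrap a per-image function as a pass; passes are off-screen by default.
function makePass(fn) {
  return { renderToScreen: false, apply: fn };
}

var composer = new ToyComposer();
var darken = makePass(function (px) {
  return px.map(function (v) { return v * 0.5; });
});
var tint = makePass(function (px) {
  return px.map(function (v) { return v + 1; });
});
composer.addPass(darken);
composer.addPass(tint);
tint.renderToScreen = true; // flag the final pass, as on the slide

var out = composer.render([2, 4]); // [2, 3]
```

In this model, forgetting the flag means `render` returns `null`: every pass still runs, but nothing is presented.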


Ad

More Related Content

What's hot (20)

Introduction to three.js & Leap Motion

Introduction to three.js & Leap MotionLee Trout This document provides an overview of three.js and the Leap Motion controller for creating 3D graphics and interactions in a web browser. It explains some basic 3D graphics concepts like scenes, objects, materials and lighting used in three.js. It then demonstrates how to load three.js, add basic 3D shapes, import 3D models, and add lighting and shadows. It also introduces the Leap Motion controller for hand tracking input and shows an example of using it with three.js. Finally, it discusses a project using these tools to create an interactive 3D experience of the US Capitol dome.

ENEI16 - WebGL with Three.js

ENEI16 - WebGL with Three.jsJosé Ferrão This document summarizes a presentation on using the three.js library to create 3D graphics in web browsers using WebGL. It introduces key three.js concepts like scenes, objects, transformations, lights, cameras, materials, textures, and rendering. It also covers 3D topics like shaders, normals mapping, reflections and VR using WebVR. The presentation aims to introduce basic 3D graphics programming concepts and explore what is possible with three.js for 3D on the web.

Three.js basics

Three.js basicsVasilika Klimova This document provides an overview of the Three.js library for creating 3D graphics in web browsers using WebGL. It discusses key Three.js concepts like scenes, cameras, lights, materials, and textures. It also provides examples of how to load 3D models and textures, set up animations, and add interactivity using controls. Useful links are included for learning more about Three.js and WebGL fundamentals.

Портируем существующее Web-приложение в виртуальную реальность / Денис Радин ...

Портируем существующее Web-приложение в виртуальную реальность / Денис Радин ...Ontico РИТ++ 2017, Frontend Сonf

Зал Мумбаи, 5 июня, 17:00

Тезисы:

http://frontendconf.ru/2017/abstracts/2478.html

Виртуальная реальность - мощный тренд, который до текущего момента обходил стороной веб-разработчиков. Данный доклад о том, как интегрировать существующие Web-приложения в миры виртуальной реальности, предоставляя вашим пользователям новые возможности и UX, а себе дозу фана.

Должны ли мы использовать CSS или WebGL для проброса приложения в VR?

Какие решения доступны на текущий момент, и каких ошибок стоит остерегаться?

Почему HTML так же хорош для разработки VR-интерфейсов, как и для обычного, плоского Web?

Как веб-разработчик может быть частью VR-революции?

3D Web Programming [Thanh Loc Vo , CTO Epsilon Mobile ]![3D Web Programming [Thanh Loc Vo , CTO Epsilon Mobile ]](https://cdn.slidesharecdn.com/ss_thumbnails/thanhlocvo-3dprogramming-131125060122-phpapp02-thumbnail.jpg?width=560&fit=bounds)

![3D Web Programming [Thanh Loc Vo , CTO Epsilon Mobile ]](https://cdn.slidesharecdn.com/ss_thumbnails/thanhlocvo-3dprogramming-131125060122-phpapp02-thumbnail.jpg?width=560&fit=bounds)

![3D Web Programming [Thanh Loc Vo , CTO Epsilon Mobile ]](https://cdn.slidesharecdn.com/ss_thumbnails/thanhlocvo-3dprogramming-131125060122-phpapp02-thumbnail.jpg?width=560&fit=bounds)

![3D Web Programming [Thanh Loc Vo , CTO Epsilon Mobile ]](https://cdn.slidesharecdn.com/ss_thumbnails/thanhlocvo-3dprogramming-131125060122-phpapp02-thumbnail.jpg?width=560&fit=bounds)

3D Web Programming [Thanh Loc Vo , CTO Epsilon Mobile ]JavaScript Meetup HCMC The document discusses 3D web programming using WebGL and Three.js. It provides an overview of WebGL and how to set it up, then introduces Three.js as a library that wraps raw WebGL code to simplify 3D graphics creation. Examples are given for basic Three.js scene setup and adding objects like cubes and lights. The document concludes with suggestions for interactive workshops using these techniques.

Bs webgl소모임004

Bs webgl소모임004Seonki Paik The document discusses implementing a basic 2D game engine in WebGL. It outlines the steps needed to create the engine including initializing WebGL, parsing shaders, generating buffers, creating mesh and material structures, and rendering. Code snippets show implementations for functions like init(), makeBuffer(), shaderParser(), Material(), Mesh(), and a basic render() function that draws object hierarchies with one draw call per object. The overall goal is to build out the core components and architecture to enable building 2D games and experiences in WebGL.

CUDA Raytracing을 이용한 Voxel오브젝트 가시성 테스트

CUDA Raytracing을 이용한 Voxel오브젝트 가시성 테스트YEONG-CHEON YOU This document discusses using ray tracing to perform visibility testing of voxel objects. It describes how ray tracing can be used to efficiently determine which voxel objects are visible without needing to render everything like with traditional occlusion culling. The key steps are:

1. Create a ray tracing buffer in GPU memory

2. Trace rays from pixels into a KD-tree of voxel objects to find the closest visible object

3. Record the object IDs in the buffer

This approach is shown to perform faster than CPU occlusion culling by implementing it using CUDA on the GPU. Testing finds it can render frames at over 60 FPS even with large voxel worlds containing 50,000+ objects.

HTML5 Canvas - Let's Draw!

HTML5 Canvas - Let's Draw!Phil Reither Let's take a look at the HTML5 element canvas. See how you can draw shapes and images, manipulate single pixels and even animate it. Given as a lecture in the fh ooe in Hagenberg, Austria in December 2011.

HTML5 Canvas

HTML5 CanvasRobyn Overstreet - HTML5 Canvas allows for dynamic drawing and animating directly in HTML using JavaScript scripting. It can be used to draw shapes, images, text and respond to user input like mouse clicks.

- The canvas element creates a grid that allows positioning images and objects by x and y coordinates. Basic drawing functions include lines, rectangles, curves and filling areas with colors.

- Transformations like translation and rotation can change the orientation of drawings on the canvas. The drawing state can be saved and restored to return to previous settings.

- Images can be drawn and manipulated at the pixel level by accessing image data. Animation is achieved by redrawing the canvas repeatedly with small changes.

- Data from sources like JSON can be

Cocos2dを使ったゲーム作成の事例

Cocos2dを使ったゲーム作成の事例Yuichi Higuchi This document discusses various topics related to the cocos2d game engine and game development. It mentions cocos2d features like CCMoveBy and CCMoveTo actions. It provides code samples for loading animation frames from a texture atlas and running animations using CCRepeatForever. It also discusses using SimpleAudioEngine for sound, and transforming sprites by modifying the CCLayer or CCSprite transform properties.

3D everywhere

3D everywhereVasilika Klimova Современный мир – это мир конкуренции. И любое преимущество перед другими может сыграть большую роль в бизнесе. Разработчиков в области 3D становится всё больше. Креативные дизайнеры изобретают всё более улётные проекты, и чтобы оставаться в тренде, нужно идти в ногу со временем. Я познакомлю вас с крутыми проектами и научу, как просто сделать 3D на сайте. Может и у вас появятся собственные идеи, как применить новейшие технологии уже сейчас!

How to Hack a Road Trip with a Webcam, a GSP and Some Fun with Node

How to Hack a Road Trip with a Webcam, a GSP and Some Fun with Nodepdeschen Part of a presentation @ nodemtl meetup. Presenting Kerouac, a real-time webapp featuring a remote GPS tracking device, a webcam and a whole lot of Node.js magic covering some basics of Node.js such as: event emitters and process spawning.

Html5 canvas

Html5 canvasGary Yeh The document provides an overview of HTML5 Canvas:

- Canvas is a 2D drawing platform that uses JavaScript and HTML without plugins, originally created by Apple and now developed as a W3C specification.

- Unlike SVG which uses separate DOM objects, Canvas is bitmap-based where everything is drawn as a single flat picture.

- The document outlines how to get started with Canvas including setting dimensions, accessing the 2D rendering context, and using methods to draw basic and complex shapes with paths, text, and images.

- It discusses using Canvas for animation, interactions, and pixel manipulation, and its potential to replace Flash in the future.

The State of JavaScript

The State of JavaScriptDomenic Denicola Our favorite language is now powering everything from event-driven servers to robots to Git clients to 3D games. The JavaScript package ecosystem has quickly outpaced past that of most other languages, allowing our vibrant community to showcase their talent. The front-end framework war has been taken to the next level, with heavy-hitters like Ember and Angular ushering in the new generation of long-lived, component-based web apps. The extensible web movement, spearheaded by the newly-reformed W3C Technical Architecture Group, has promised to place JavaScript squarely at the foundation of the web platform. Now, the language improvements of ES6 are slowly but surely making their way into the mainstream— witness the recent interest in using generators for async programming. And all the while, whispers of ES7 features are starting to circulate…

JavaScript has grown up. Now it's time to see how far it can go.

Having fun with graphs, a short introduction to D3.js

HTML5 game dev with three.js - HexGL

- 1. The making of HexGL

- 2. • Thibaut Despoulain (@bkcore – bkcore.com) • 22-year-old student in Computer Engineering • University of Technology of Belfort-Montbéliard (France) • Web dev and 3D enthusiast • The guy behind the HexGL project

- 4. • Fast-paced, futuristic racing game • Inspired by the F-Zero and Wipeout series • HTML5, JavaScript, WebGL (via Three.js) • Less than 2 months • Just me.

- 9. • JavaScript API • OpenGL ES 2.0 • Chrome, Firefox, (Opera, Safari) • <canvas> (HTML5)

- 11. • Rendering engine • Maintained by Ricardo Cabello (MrDoob) and Altered Qualia • R50/stable • + : Active community, stable, updated frequently • - : Documentation

- 15. • First "real" game • 2 months to learn and code • Little to no modeling and texturing skills • Physics? Controls? Gameplay?

- 16. • Last time I could have 2 months free • Visibility to get an internship • Huge learning opportunity • Explore Three.js for good

- 18. • Third-party physics engine (rejected) – Slow learning curve – Not really meant for racing games

- 19. • Ray casting (rejected) – Heavy performance-wise – Needs octree-like structure – > Too much time to learn and implement

- 20. • Home-made 2D approximation – Little to no learning curve – Easy to implement with 2D maps – Pretty fast – > But with some limitations

- 23. • Home-made 2D approximation – No track overlap – Limited track twist and gradient – Accuracy depends on map resolution – > Enough for what I had in mind

- 25. • No pixel getter on JS Image object/tag • Canvas2D to the rescue

- 26. Loading: load the data texture with a JS Image object → Drawing: draw it on a canvas using the 2D context → Getting: get the canvas pixels using getImageData()

- 27. • ImageData (BKcore package) – Github.com/Bkcore/bkcore-js var a = new bkcore.ImageData(path, callback); //… a.getPixel(x, y); a.getPixelBilinear(xf, yf); // -> {r, g, b, a};
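
The pixel getters on slide 27 can be illustrated without the BKcore package. The following is a minimal sketch, assuming an ImageData-like object of the shape `{width, height, data}` with a flat RGBA array, which is what a 2D canvas context's `getImageData()` returns. The function names mirror the slide, but the bodies (including the edge clamping) are assumptions, not the actual bkcore.ImageData implementation.

```javascript
// Read one pixel from a flat RGBA buffer (4 bytes per pixel, row-major).
// x and y are expected to be integers.
function getPixel(img, x, y) {
  // Assumption: out-of-range coordinates are clamped to the nearest edge pixel.
  var cx = Math.min(Math.max(x, 0), img.width - 1);
  var cy = Math.min(Math.max(y, 0), img.height - 1);
  var i = (cy * img.width + cx) * 4;
  return { r: img.data[i], g: img.data[i + 1], b: img.data[i + 2], a: img.data[i + 3] };
}

// Bilinear lookup: blend the four pixels surrounding a fractional position.
function getPixelBilinear(img, xf, yf) {
  var x0 = Math.floor(xf), y0 = Math.floor(yf);
  var tx = xf - x0, ty = yf - y0;
  var c00 = getPixel(img, x0, y0),     c10 = getPixel(img, x0 + 1, y0);
  var c01 = getPixel(img, x0, y0 + 1), c11 = getPixel(img, x0 + 1, y0 + 1);
  var out = {};
  ['r', 'g', 'b', 'a'].forEach(function (k) {
    var top = c00[k] * (1 - tx) + c10[k] * tx;
    var bottom = c01[k] * (1 - tx) + c11[k] * tx;
    out[k] = top * (1 - ty) + bottom * ty;
  });
  return out;
}

// Hypothetical 2x1 test image: one opaque black pixel, one opaque white pixel.
var sample = {
  width: 2,
  height: 1,
  data: new Uint8ClampedArray([0, 0, 0, 255, 255, 255, 255, 255])
};
```

Sampling halfway between the two pixels, `getPixelBilinear(sample, 0.5, 0)`, blends black and white equally, which is the kind of smoothing that makes a low-resolution map usable at fractional positions.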

- 28. Game loop: Convert world position to pixel indexes Get current pixel intensity (red) If pixel is not white: Collision Test pixels relatively (front, left, right) :end
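
The game loop on slide 28 could look roughly like the sketch below. It is an illustration under stated assumptions, not the HexGL source: the collision map is a hypothetical 4x4 grid of red-channel values, the map is assumed to cover a square of `worldSize` units centred on the origin, and `worldToPixel`, `isOnTrack` and `probe` are made-up helper names.

```javascript
// Hypothetical collision map: red-channel intensities, 255 = track (white), 0 = void.
var map = {
  width: 4,
  height: 4,
  red: [
    0,   0,   0, 0,
    0, 255, 255, 0,
    0, 255, 255, 0,
    0,   0,   0, 0
  ]
};

// Assumed mapping: world x/z in [-worldSize/2, worldSize/2) map to pixel column/row.
function worldToPixel(map, worldSize, x, z) {
  return {
    px: Math.floor((x / worldSize + 0.5) * map.width),
    py: Math.floor((z / worldSize + 0.5) * map.height)
  };
}

// A position is on the track when its pixel is white; anything off the map is void.
function isOnTrack(map, worldSize, x, z) {
  var p = worldToPixel(map, worldSize, x, z);
  if (p.px < 0 || p.py < 0 || p.px >= map.width || p.py >= map.height) return false;
  return map.red[p.py * map.width + p.px] === 255;
}

// Test the current pixel, then pixels relative to the ship (front, left, right).
function probe(map, worldSize, x, z, heading, reach) {
  var fx = Math.sin(heading), fz = Math.cos(heading); // forward direction
  var rx = fz, rz = -fx;                              // right = forward rotated by 90 degrees
  return {
    collision: !isOnTrack(map, worldSize, x, z),
    front: isOnTrack(map, worldSize, x + fx * reach, z + fz * reach),
    left: isOnTrack(map, worldSize, x - rx * reach, z - rz * reach),
    right: isOnTrack(map, worldSize, x + rx * reach, z + rz * reach)
  };
}

// Ship at the origin of a 4-unit world, heading along +z, probing 1 unit away.
var result = probe(map, 4, 0, 0, 0, 1);
```

With this map, only the left probe lands on a white pixel, so a game could use the result to decide which way to push the ship back onto the track.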

- 29. [Figure: collision map showing the front, left and right test pixels, with track and void areas]

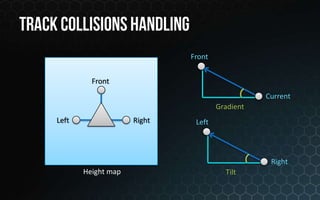

- 30. [Figure: height map. Front and current samples give the gradient; left and right samples give the tilt]
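
One possible reading of slide 30 is that the ship's pitch and roll come from height differences between sample points on the height map. The sketch below assumes that reading. The `gradientAndTilt` name, the sample layout and the use of `Math.atan2` are assumptions for illustration, not the method confirmed by the slides.

```javascript
// heights: hypothetical height-map samples (in world units) at the ship's position
// and at points `reach` units in front, to the left and to the right of it.
function gradientAndTilt(heights, reach) {
  return {
    // Pitch angle: rise between the current and front samples over the distance `reach`.
    gradient: Math.atan2(heights.front - heights.current, reach),
    // Roll angle: height difference between the left and right samples, 2 * reach apart.
    tilt: Math.atan2(heights.left - heights.right, 2 * reach)
  };
}

// A track climbing 1 unit per unit travelled, with no banking.
var angles = gradientAndTilt({ current: 0, front: 1, left: 0.5, right: 0.5 }, 1);
```

Both angles are in radians and could be applied to the ship's rotation each frame. How accurate they are depends on the map resolution, as slide 23 notes.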

- 34. Model (.OBJ) + Materials (.MTL) → Python converter → Three.js JSON model. $ python convert_obj_three.py -i mesh.obj -o mesh.js

- 35. var scene = new THREE.Scene(); var loader = new THREE.JSONLoader(); var createMesh = function(geometry) { var mesh = new THREE.Mesh(geometry, new THREE.MeshFaceMaterial()); mesh.position.set(0, 0, 0); mesh.scale.set(3, 3, 3); scene.add(mesh); }; loader.load("mesh.js", createMesh);

- 37. var renderer = new THREE.WebGLRenderer({ antialias: false, clearColor: 0x000000 }); renderer.autoClear = false; renderer.sortObjects = false; renderer.setSize(width, height); document.body.appendChild(renderer.domElement);

- 38. renderer.gammaInput = true; renderer.gammaOutput = true; renderer.shadowMapEnabled = true; renderer.shadowMapSoft = true;

- 40. • Blinn-phong – Diffuse + Specular + Normal + Environment maps • THREE.ShaderLib.normal – > Per vertex point lights • Custom shader with per-pixel point lights for the road – > Booster light

- 42. var boosterSprite = new THREE.Sprite( { map: spriteTexture, blending: THREE.AdditiveBlending, useScreenCoordinates: false, color: 0xffffff }); boosterSprite.mergeWith3D = false; boosterMesh.add(boosterSprite);

- 44. var material = new THREE.ParticleBasicMaterial({ color: 0xffffff, map: THREE.ImageUtils.loadTexture("tex.png"), size: 4, blending: THREE.AdditiveBlending, depthTest: false, transparent: true, vertexColors: true, sizeAttenuation: true });

- 45. var pool = []; var geometry = new THREE.Geometry(); geometry.dynamic = true; for(var i = 0; i < 1000; ++i) { var p = new bkcore.Particle(); pool.push(p); geometry.vertices.push(p.position); geometry.colors.push(p.color); }

- 46. bkcore.Particle = function() { this.position = new THREE.Vector3(); this.velocity = new THREE.Vector3(); this.force = new THREE.Vector3(); this.color = new THREE.Color(0x000000); this.basecolor = new THREE.Color(0x000000); this.life = 0.0; this.available = true; }

- 47. var system = new THREE.ParticleSystem( geometry, material ); system.sort = false; system.position.set(x, y, z); system.rotation.set(a, b, c); scene.add(system);

- 48. // Particle physics var p = pool[i]; p.position.addSelf(p.velocity); //… geometry.verticesNeedUpdate = true; geometry.colorsNeedUpdate = true;
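
Slides 45 to 48 describe a fixed pool of particles that is reused rather than reallocated. The sketch below shows that pooling idea on its own, using plain objects instead of THREE.Vector3 so it runs without three.js. The `emit` and `update` functions and the one-unit-per-step life countdown are assumptions. Only the `position`, `velocity`, `life` and `available` fields come from the slides.

```javascript
// Plain-object stand-in for THREE.Vector3.
function vec(x, y, z) { return { x: x, y: y, z: z }; }

function Particle() {
  this.position = vec(0, 0, 0);
  this.velocity = vec(0, 0, 0);
  this.life = 0.0;
  this.available = true; // free slot, ready to be reused
}

// Allocate every particle once, up front.
var pool = [];
for (var n = 0; n < 4; ++n) pool.push(new Particle());

// Reuse the first free particle. Vectors are mutated in place so that any
// geometry holding references to them stays valid.
function emit(position, velocity, life) {
  for (var i = 0; i < pool.length; ++i) {
    var p = pool[i];
    if (!p.available) continue;
    p.position.x = position.x; p.position.y = position.y; p.position.z = position.z;
    p.velocity.x = velocity.x; p.velocity.y = velocity.y; p.velocity.z = velocity.z;
    p.life = life;
    p.available = false;
    return p;
  }
  return null; // pool exhausted: skip this emission
}

// One physics step: move live particles and release the ones whose life ran out.
function update() {
  var alive = 0;
  for (var i = 0; i < pool.length; ++i) {
    var p = pool[i];
    if (p.available) continue;
    p.position.x += p.velocity.x;
    p.position.y += p.velocity.y;
    p.position.z += p.velocity.z;
    p.life -= 1;
    if (p.life <= 0) p.available = true; else alive++;
  }
  return alive;
}
```

In the three.js version on the slides, each step would also need `geometry.verticesNeedUpdate = true` so the renderer re-uploads the moved vertices.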

- 49. • Particles (BKcore package) – Github.com/BKcore/Three-extensions

- 50. var clouds = new bkcore.Particles({ opacity: 0.8, tint: 0xffffff, color: 0x666666, color2: 0xa4f1ff, texture: THREE.ImageUtils.loadTexture("cloud.png"), blending: THREE.NormalBlending, size: 6, life: 60, max: 500, spawn: new THREE.Vector3(3, 3, 0), spawnRadius: new THREE.Vector3(1, 1, 2), velocity: new THREE.Vector3(0, 0, 4), randomness: new THREE.Vector3(5, 5, 1) });

- 53. • Built-in support for off-screen passes • Already has some pre-made post effects – Bloom – FXAA • Easy to use and Extend • Custom shaders

- 54. var renderTargetParameters = { minFilter: THREE.LinearFilter, magFilter: THREE.LinearFilter, format: THREE.RGBFormat, stencilBuffer: false }; var renderTarget = new THREE.WebGLRenderTarget( width, height, renderTargetParameters );

- 55. var composer = new THREE.EffectComposer( renderer, renderTarget ); composer.addPass( … ); composer.render();

- 56. • Generic passes – RenderPass – ShaderPass – SavePass – MaskPass • Pre-made passes – BloomPass – FilmPass – Etc.

- 57. var renderModel = new THREE.RenderPass( scene, camera ); renderModel.clear = false; composer.addPass(renderModel);

- 58. var effectBloom = new THREE.BloomPass( 0.8, // Strength 25, // Kernel size 4, // Sigma 256 // Resolution ); composer.addPass(effectBloom);
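
The kernel size and sigma arguments on slide 58 point to a Gaussian blur behind the bloom effect. As a rough illustration of what those two numbers control, here is a sketch of a normalized 1D Gaussian kernel. `gaussianKernel` is a hypothetical helper, and this is not necessarily how THREE.BloomPass builds its kernel internally.

```javascript
// Build `size` weights sampled from a Gaussian of standard deviation `sigma`,
// centred on the middle tap and normalized so the weights sum to 1.
function gaussianKernel(size, sigma) {
  var half = (size - 1) / 2;
  var weights = [];
  var sum = 0;
  for (var i = 0; i < size; ++i) {
    var x = i - half; // signed distance from the centre tap
    var w = Math.exp(-(x * x) / (2 * sigma * sigma));
    weights.push(w);
    sum += w;
  }
  return weights.map(function (w) { return w / sum; });
}

// Same numbers as the slide: 25 taps, sigma of 4.
var kernel = gaussianKernel(25, 4);
```

A larger sigma spreads the weight across more taps and gives a softer, wider glow. A larger kernel size lets the blur reach further, at the cost of more texture reads per pixel.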

- 60. var hexvignette = { uniforms: { tDiffuse: { type: "t", value: 0, texture: null }, tHex: { type: "t", value: 1, texture: null}, size: { type: "f", value: 512.0}, color: { type: "c", value: new THREE.Color(0x458ab1) } }, fragmentShader: [ "uniform float size;", "uniform vec3 color;", "uniform sampler2D tDiffuse;", "uniform sampler2D tHex;", "varying vec2 vUv;", "void main() { ... }" ].join("\n") };

- 61. var effectHex = new THREE.ShaderPass(hexvignette); effectHex.uniforms['size'].value = 512.0; effectHex.uniforms['tHex'].texture = hexTexture; composer.addPass(effectHex); //… effectHex.renderToScreen = true;