Hypergraph for consensus optimization

Describes an efficient way to implement graph-parallel computing by partitioning graph edges among computing nodes. From IEEE BigData 2013.
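As a rough illustration of what partitioning graph edges among computing nodes means, here is a minimal sketch. The description gives no algorithmic detail, so everything in it is an assumption: the round-robin assignment, the names `partition_edges` and `replication_factor`, and the choice of replication factor as a quality measure are illustrative and are not the method from the slides.

```python
from collections import defaultdict

# Hypothetical helper: assign each edge to a computing node in round-robin order.
# This is a naive stand-in, not the partitioning scheme presented in the deck.
def partition_edges(edges, num_nodes):
    parts = [[] for _ in range(num_nodes)]
    for i, edge in enumerate(edges):
        parts[i % num_nodes].append(edge)
    return parts

# Average number of computing nodes on which each vertex appears.
# A vertex is replicated wherever one of its edges lands, so a lower
# value suggests less synchronization between nodes.
def replication_factor(parts):
    copies = defaultdict(int)
    for part in parts:
        vertices = {v for edge in part for v in edge}
        for v in vertices:
            copies[v] += 1
    return sum(copies.values()) / len(copies)

if __name__ == "__main__":
    ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
    parts = partition_edges(ring, 2)
    print(parts, replication_factor(parts))
```

With edge partitioning every edge lives on exactly one node, while vertices may be copied to several nodes. Any scheme that lowers the replication factor is likely to reduce the communication needed to keep those copies consistent.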

Recommended

Poster2013

xinhuima: Dr. Xinhui Ma's research focuses on local refinement of T-splines, NURBS compatible subdivision surfaces, and adaptive finite element methods. He aims to bring together the flexibility of subdivision surfaces and the accuracy of NURBS for engineering and entertainment applications. His work also involves developing efficient local refinement approaches for T-splines to improve design and simulation speed.

Parallel Computing 2007: Bring your own parallel application

Geoffrey Fox: This document discusses parallelizing several algorithms and applications including k-means clustering, frequent itemset mining, integer programming, computer chess, and support vector machines (SVM). For k-means and frequent itemset mining, the algorithms can be parallelized by partitioning the data across processors and performing partial computations locally before combining results with an allreduce operation. Computer chess can be parallelized by exploring different game tree branches simultaneously on different processors. SVM problems involve large dense matrices that are difficult to solve in parallel directly because their size exceeds memory; alternative approaches include solving smaller subproblems independently.

Analysis of Impact of Graph Theory in Computer Application

IRJET Journal: This document discusses several applications of graph theory in computer science. It summarizes how graph theory is used in map coloring, mobile phone networks, computer network security, modeling ad-hoc networks, fault tolerant computing systems, and clustering web documents. Graph theory provides structural models that can represent problems in these domains and enable new algorithms and solutions. Key applications mentioned include using graph coloring for frequency assignment in mobile networks, modeling network topology for worm propagation analysis, and representing documents and their relationships as graphs for clustering. Overall, the document outlines how graph theoretical concepts and methodologies are widely used to solve problems in computer science research areas.

3D Graph Drawings: Good Viewing for Occluded Vertices

IJERA Editor: This document presents a method for drawing 3D graphs using a force-directed algorithm to minimize vertex-vertex occlusions. The Fruchterman and Reingold algorithm is used as a framework, representing vertices as electrically charged particles and edges as springs. Repulsive forces push vertices apart while attractive forces pull connected vertices together. The algorithm iteratively computes vertex displacements until equilibrium is reached. Experiments in Gephi software show the algorithm effectively separates vertices to produce good 3D graph views without occlusions, even for large graphs with hundreds of vertices.

Graph chi

Jay Rathod: GraphChi: Large-Scale Graph Computation on Just a PC, by Aapo Kyrola, Guy Blelloch and Carlos Guestrin [OSDI 2012].

Handling a large graph containing millions of vertices and billions of edges normally requires a distributed computing cluster, because the amount of data in the graph is also large. Cloud services make it easy to operate on the graph in a distributed environment, but distributed systems bring difficulties of their own: concurrency, security, scalability and failure handling. The reason large graphs are so hard from a system perspective therefore lies in the computation. A somewhat surprising motivation comes from thinking about scalability at large scale. From the programmer's perspective, writing, debugging and optimizing distributed algorithms is hard.

If such big problems can be run on a single machine, with your IDE and its debugger, productivity and efficiency improve. GraphChi is a disk-based system that computes efficiently on large-scale graphs. It relies on a novel "parallel sliding windows" method, which lets GraphChi execute several advanced data mining computations on very large graphs using just a single consumer-level computer.

Clusters are complex and expensive to scale, while in this new model scaling is very simple: we can double the throughput by doubling the machines. The industry wants to compute many tasks on the same graph. With a cluster, the whole cluster computes one single task, and to compute tasks faster you grow the cluster. This work allows a different way: since one machine can handle one big task, you can dedicate one machine to each task.

Paper introduction to Combinatorial Optimization on Graphs of Bounded Treewidth

Yu Liu: These slides introduce the paper: H. L. Bodlaender and A. M. C. A. Koster, “Combinatorial Optimization on Graphs of Bounded Treewidth,” Comput. J., vol. 51, no. 3, pp. 255–269, Nov. 2007.

3D transformation computer graphic

rishi ram khanal: This document discusses 3D transformations in computer graphics. It defines transformations as changing something via rules, and notes they are important for moving objects on screen and specifying camera views of 3D scenes. It specifically discusses translation as moving an object by applying offsets to its x, y, and z coordinates. Rotation is defined as transforming an object around an axis, which can be achieved through sequential x, y, and z axis rotations. References for further information on the topic are also provided.

post119s1-file3

Venkata Suhas Maringanti: This document presents a GPU-accelerated algorithm for solving the Group Steiner Problem (GSP), which arises in the routing phases of VLSI circuit design. The algorithm uses a depth-bounded heuristic to construct approximate minimum-cost Steiner trees in parallel using CUDA-aware MPI. Evaluation on a supercomputer shows the parallel implementation achieves up to 302x speedup over serial algorithms and scales well with problem size and processor count.

Implementation and Performance Analysis of a Vedic Multiplier Using Tanner ED...

ijsrd.com: High-density VLSI chips have led to rapid and innovative development in low-power design in recent years. The need for low-power design is becoming a major issue in high-performance digital systems such as microprocessors, digital signal processors and other applications. For these applications, the multiplier is the major core block, and an efficient processor is designed around the multiplier design. A power- and area-efficient multiplier using CMOS logic circuits is designed for applications in various digital signal processors. This multiplier is implemented using Vedic multiplication algorithms, mainly the "UrdhvaTriyakBhyam" sutra, which is the most generalized Vedic multiplication algorithm [1]. A multiplier is a very important element in almost all processors and contributes substantially to the total power consumption of the system. The novel point is the efficient use of Vedic algorithms (sutras), which reduce the number of computational steps considerably compared with any conventional method. The schematic for this multiplier is designed using TANNER TOOL. The paper presents a systematic design methodology for this improved-performance digital multiplier based on Vedic mathematics.

A Novel Algebraic Variety based Model for High Quality Free-viewpoint View Sy...

Mansi Sharma: This paper presents a new depth-image-based rendering algorithm for free-viewpoint 3DTV applications. The cracks, holes and ghost contours caused by the visibility, disocclusion and resampling problems associated with 3D warping lead to serious rendering artifacts in synthesized virtual views. This challenging problem of hole filling is formulated as an algebraic matrix completion problem on a higher dimensional space of monomial features described by a novel variety model. The high-level idea of this work is to exploit the linear or nonlinear structures of the data and interpolate missing values by solving algebraic varieties associated with Hankel matrices as a member of Krylov subspace. The proposed model effectively handles artifacts that appear in wide-baseline spatial view interpolation and arbitrary camera movements. The model has a low runtime, and its results surpass state-of-the-art methods in quantitative and qualitative evaluation.

HPC with Clouds and Cloud Technologies

Inderjeet Singh: This document discusses using cloud computing technologies for data analysis applications. It presents different cloud runtimes like Hadoop, DryadLINQ, and CGL-MapReduce and compares their features to MPI. Applications like Cap3 and HEP are well-suited for cloud runtimes while iterative applications show higher overhead. Results show that as the number of VMs per node increases, MPI performance decreases by up to 50% compared to bare metal nodes. Integration of MapReduce and MPI could help improve performance of some applications on clouds.

Bivariatealgebraic integerencoded arai algorithm for

eSAT Publishing House: IJRET (International Journal of Research in Engineering and Technology) is an international peer-reviewed online journal published by eSAT Publishing House for the enhancement of research in various disciplines of Engineering and Technology. The aim and scope of the journal is to provide an academic medium and an important reference for the advancement and dissemination of research results that support high-level learning, teaching and research in the fields of Engineering and Technology. We bring together Scientists, Academicians, Field Engineers, Scholars and Students of related fields of Engineering and Technology.

IRJET- Approximate Multiplier and 8 Bit Dadda Multiplier Implemented through ...

IRJET Journal: This document discusses the design and implementation of approximate multipliers for image processing applications. It proposes two new 4x4 approximate adders to reduce hardware complexity in a Dadda multiplier architecture. An 8x8 unsigned Dadda tree multiplier is modeled to evaluate the impact of using the proposed adders, which reduce the partial products into fewer columns while maintaining accuracy. Experimental results show the proposed multipliers achieve lower power-delay product compared to other approximate designs for similar precision in applications like JPEG image compression.

EfficientNet

Changjin Lee: The document proposes a compound scaling method to scale neural networks efficiently along their depth, width, and resolution dimensions simultaneously. It introduces EfficientNets, a new family of models created by applying this compound scaling technique to a baseline architecture found using neural architecture search. Evaluation shows that EfficientNets outperform previous state-of-the-art convolutional neural networks like ResNet and MobileNet in terms of accuracy and efficiency.

Practical implementation of pca on satellite images

Bhanu Pratap: This document discusses applying principal component analysis (PCA) to satellite images to reduce dimensionality and increase classification accuracy. It finds that the R and G bands after PCA do not carry significant information, while the B and I bands do and can be used for dimensionality reduction with less computational complexity. The steps are to compute the covariance matrix and eigenvectors/values of the 4-band images, apply a linear transformation to get the principal components, and display the first few principal component images.

Exascale Computing for Autonomous Driving

Levent Gürel: Autonomous driving is one of the most computationally demanding technologies of modern times. Reported here is a high-level roadmap towards satisfying the ever-increasing computational needs and requirements of autonomous mobility, which are easily at exascale level. We demonstrate that hardware solutions alone will not be sufficient, and exascale computing demands should be met with a combination of hardware and software. In that context, algorithms with reduced computational complexities will be crucial to provide software solutions that will be integrated with available hardware, which is also limited by various mobility restrictions, such as power, space, and weight.

Probabilistic Graph Layout for Uncertain Network Visualization

Subhashis Hazarika: This document summarizes a technique for visualizing probabilistic graphs called probabilistic graph layout. It models probabilistic graphs as graphs where edge weights are probability distributions. It samples weighted graphs from the probabilistic graph and computes force-directed layouts for each sample. It then splats the node positions across samples to approximate their distributions. It also bundles edges hierarchically and colors nodes distinctly while avoiding overlap to show connections and clusters amid the uncertainty. The method was demonstrated on synthetic and protein interaction data and its limitations in stability, ambiguity and scalability were discussed.

Distributed graph summarization

aftab alam: The document describes research on distributed graph summarization algorithms. It introduces three distributed graph summarization algorithms (DistGreedy, DistRandom, DistLSH) that can scale to large graphs by distributing computation across machines. The algorithms share a common framework of iteratively merging super-nodes representing aggregated subsets of nodes, but differ in how they select candidate pairs of super-nodes to merge. Experimental evaluation on real-world graphs demonstrates the ability of the proposed distributed algorithms to summarize large graphs in a parallelized manner.

I017425763

IOSR Journals: This document discusses parallelizing graph algorithms on GPUs for optimization. It summarizes previous work on parallel Breadth-First Search (BFS), All Pair Shortest Path (APSP), and Traveling Salesman Problem (TSP) algorithms. It then proposes implementing BFS, APSP, and TSP on GPUs using optimization techniques like reducing data transfers between CPU and GPU and modifying the algorithms to maximize GPU computing power and memory usage. The paper claims this will improve performance and speedup over CPU implementations. It focuses on optimizing graph algorithms for parallel GPU processing to accelerate applications involving large graph analysis and optimization problems.

Pregel - Paper Review

Maria Stylianou: The document summarizes the Pregel system, which was designed for large-scale graph processing. Pregel addresses the inefficiency of MapReduce for graph problems by allowing direct message passing between vertices during synchronized iterations. It provides fault tolerance through checkpointing and a master-worker architecture. Key contributions of Pregel include its distributed programming model and APIs for message passing, combining messages to reduce overhead, global communication through aggregators, and mutating graph topology. The paper notes strengths like fault tolerance but also weaknesses such as putting responsibility on the user and lack of master failure detection.

DDGK: Learning Graph Representations for Deep Divergence Graph Kernels

ivaderivader: This document summarizes a research paper on learning graph representations for deep divergence graph kernels (DDGK). DDGK learns graph representations without supervision or domain knowledge by using a node-to-edges encoder and isomorphism attention. The isomorphism attention provides a bidirectional mapping between nodes in two graphs. DDGK then calculates a divergence score between the source and target graphs as a measure of their (dis)similarity. Experimental results showed DDGK produces representations competitive with other graph kernel baselines. The paper proposes several extensions, including different graph encoders and attention mechanisms, as well as improved regularization and scalability.

Ling liu part 01:big graph processing

jins0618: This document discusses challenges in processing large graphs and introduces an approach called GraphLego. It describes how GraphLego models large graphs as 3D cubes partitioned into slices, strips and dices to balance parallel computation. GraphLego optimizes access locality by minimizing disk access and compressing partitions. It also uses regression-based learning to optimize partitioning parameters and runtime. The document evaluates GraphLego on real-world graphs, finding it outperforms existing single-machine graph processing systems in execution efficiency and partitioning decisions.

Parallel Batch-Dynamic Graphs: Algorithms and Lower Bounds

Subhajit Sahu: Highlighted notes on Parallel Batch-Dynamic Graphs: Algorithms and Lower Bounds, made while doing research work under Prof. Kishore Kothapalli.

Laxman Dhulipala, David Durfee, Janardhan Kulkarni, Richard Peng, Saurabh Sawlani, Xiaorui Sun:

Parallel Batch-Dynamic Graphs: Algorithms and Lower Bounds. SODA 2020: 1300-1319

In this paper we study the problem of dynamically maintaining graph properties under batches of edge insertions and deletions in the massively parallel model of computation. In this setting, the graph is stored on a number of machines, each having space strongly sublinear with respect to the number of vertices, that is, $n^{\epsilon}$ for some constant $0 < \epsilon < 1$. Our goal is to handle batches of updates and queries where the data for each batch fits onto one machine in constant rounds of parallel computation, as well as to reduce the total communication between the machines. This objective corresponds to the gradual buildup of databases over time, while the goal of obtaining constant rounds of communication for problems in the static setting has been elusive for problems as simple as undirected graph connectivity. We give an algorithm for dynamic graph connectivity in this setting with constant communication rounds and communication cost almost linear in terms of the batch size. Our techniques combine a new graph contraction technique, an independent random sample extractor from correlated samples, as well as distributed data structures supporting parallel updates and queries in batches. We also illustrate the power of dynamic algorithms in the MPC model by showing that the batched version of the adaptive connectivity problem is P-complete in the centralized setting, but sub-linear sized batches can be handled in a constant number of rounds. Due to the wide applicability of our approaches, we believe it represents a practically-motivated workaround to the current difficulties in designing more efficient massively parallel static graph algorithms.

NGBT_poster_v0.4

Vineetha Vishnu: This document proposes improving the Needleman-Wunsch algorithm for aligning next generation sequencing (NGS) data using Hadoop clusters. It discusses how the algorithm works and the challenges of multiple sequence alignment on large NGS datasets. The solution presented is to implement a parallelized version of Needleman-Wunsch using Hadoop MapReduce to allow pairwise sequence alignment across many nodes, reducing processing time significantly for large inputs. An implementation on a 3-node cluster showed reduced alignment times as input size increased, demonstrating the ability to efficiently handle massive NGS data volumes. Future work could focus on approximation algorithms or further parallelization to improve computational space requirements.

Embarrassingly/Delightfully Parallel Problems

Dilum Bandara: This document discusses embarrassingly parallel problems and the MapReduce programming model. It provides examples of MapReduce functions and how they work. Key points include:

- Embarrassingly parallel problems can be easily split into independent parts that can be solved simultaneously without much communication. MapReduce is well-suited for these types of problems.

- MapReduce involves two functions - map and reduce. Map processes a key-value pair to generate intermediate key-value pairs, while reduce merges all intermediate values associated with the same intermediate key.

- Implementations like Hadoop handle distributed execution, parallelization, data partitioning, and fault tolerance. Users just provide map and reduce functions.
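The map and reduce roles described in the points above can be sketched in a few lines of single-process Python. This is only an illustration of the programming model under stated assumptions: `map_words`, `reduce_counts` and `run_mapreduce` are hypothetical names, and the in-memory grouping step stands in for the distributed shuffle that a system such as Hadoop would perform.

```python
from collections import defaultdict

# map: one input (key, value) pair -> intermediate (key, value) pairs
def map_words(_doc_id, text):
    for word in text.lower().split():
        yield word, 1

# reduce: merge all intermediate values that share the same key
def reduce_counts(word, counts):
    return word, sum(counts)

# Hypothetical single-process driver. A real framework would run the map
# calls in parallel, partition the data, and recover from failures.
def run_mapreduce(records, map_fn, reduce_fn):
    groups = defaultdict(list)
    for key, value in records:
        for ikey, ivalue in map_fn(key, value):
            groups[ikey].append(ivalue)
    return dict(reduce_fn(k, vs) for k, vs in sorted(groups.items()))

if __name__ == "__main__":
    docs = [("d1", "graph edge graph"), ("d2", "edge node")]
    print(run_mapreduce(docs, map_words, reduce_counts))
```

Because each `map_words` call depends only on its own record, the map phase is embarrassingly parallel. Only the grouping by intermediate key requires data to move between workers.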

GRAPH MATCHING ALGORITHM FOR TASK ASSIGNMENT PROBLEM

IJCSEA Journal: Task assignment is one of the most challenging problems in a distributed computing environment. An optimal task assignment guarantees minimum turnaround time for a given architecture. Several approaches to optimal task assignment have been proposed by various researchers, ranging from graph-partitioning-based tools to heuristic graph matching. Using heuristic graph matching, it is often impossible to get an optimal task assignment for practical test cases within an acceptable time limit. In this paper, we have parallelized the basic heuristic graph-matching algorithm of task assignment, which is suitable only for cases where processors and inter-processor links are homogeneous. This proposal is a derivative of the basic task assignment methodology using heuristic graph matching. The results show that near-optimal assignments are obtained much faster than with the sequential program in all cases, with reasonable speed-up.

Satellite image contrast enhancement using discrete wavelet transform

Harishwar Reddy: This document discusses contrast enhancement of satellite images using discrete wavelet transform and singular value decomposition. It provides background on contrast and techniques like histogram equalization. It then describes discrete wavelet transform and singular value decomposition, their applications, advantages, and uses. The document concludes that a new technique was proposed combining DWT and SVD for image equalization, which showed better results than conventional techniques in experiments.

20090720 smith

Michael Karpov: The document discusses parallel and high performance computing. It begins with definitions of key terms like parallel computing, high performance computing, asymptotic notation, speedup, work and time optimality, latency, bandwidth and concurrency. It then covers parallel architecture and programming models including SIMD, MIMD, shared and distributed memory, data and task parallelism, and synchronization methods. Examples of parallel sorting and prefix sums are provided. Programming models like OpenMP, PPL and work stealing are also summarized.

Recognition as Graph Matching

Vishakha Agarwal: Covers digital image processing: the statistical and structural approaches and the graph-based approach to image recognition, with algorithms, examples, and applications where graph matching is used in pattern recognition.

More Related Content

What's hot (9)

Implementation and Performance Analysis of a Vedic Multiplier Using Tanner ED...

Implementation and Performance Analysis of a Vedic Multiplier Using Tanner ED...ijsrd.com high density, VLSI chips have led to rapid and innovative development in low power design during the recent years .The need for low power design is becoming a major issue in high performance digital systems such as microprocessor, digital signal processor and other applications. For these applications, Multiplier is the major core block. Based on the Multiplier design, an efficient processor is designed. Power and area efficient multiplier using CMOS logic circuits for applications in various digital signal processors is designed. This multiplier is implemented using Vedic multiplication algorithms mainly the "UrdhvaTriyakBhyam sutra., which is the most generalized one Vedic multiplication algorithm [1] . A multiplier is a very important element in almost all the processors and contributes substantially to the total power consumption of the system. The novel point is the efficient use of Vedic algorithm (sutras) that reduces the number of computational steps considerably compared with any conventional method . The schematic for this multiplier is designed using TANNER TOOL. Paper presents a systematic design methodology for this improved performance digital multiplier based on Vedic mathematics.

A Novel Algebraic Variety based Model for High Quality Free-viewpoint View Sy...

A Novel Algebraic Variety based Model for High Quality Free-viewpoint View Sy...Mansi Sharma This paper presents a new depth-image-based rendering algorithm for free-viewpoint 3DTV applications. The cracks, holes, ghost countors caused by visibility, disocclusion, resampling problems associated with 3D warping lead to serious rendering artifacts in synthesized virtual views. This challenging problem of hole filling is formulated as an algebraic matrix completion problem on a higher dimensional space of monomial features described by a novel variety model. The high level idea of this work is to exploit the linear or nonlinear structures of the data and interpolate missing values by solving algebraic varieties associated with Hankel matrices as a member of Krylov subspace. The proposed model effectively handles artifacts appear in wide-baseline spatial view interpolation and arbitrary camera movements. Our model has a low runtime and results excel with state-of-the-art methods in quantitative and qualitative evaluation.

HPC with Clouds and Cloud Technologies

HPC with Clouds and Cloud TechnologiesInderjeet Singh This document discusses using cloud computing technologies for data analysis applications. It presents different cloud runtimes like Hadoop, DryadLINQ, and CGL-MapReduce and compares their features to MPI. Applications like Cap3 and HEP are well-suited for cloud runtimes while iterative applications show higher overhead. Results show that as the number of VMs per node increases, MPI performance decreases by up to 50% compared to bare metal nodes. Integration of MapReduce and MPI could help improve performance of some applications on clouds.

Bivariatealgebraic integerencoded arai algorithm for

Bivariatealgebraic integerencoded arai algorithm foreSAT Publishing House IJRET : International Journal of Research in Engineering and Technology is an international peer reviewed, online journal published by eSAT Publishing House for the enhancement of research in various disciplines of Engineering and Technology. The aim and scope of the journal is to provide an academic medium and an important reference for the advancement and dissemination of research results that support high-level learning, teaching and research in the fields of Engineering and Technology. We bring together Scientists, Academician, Field Engineers, Scholars and Students of related fields of Engineering and Technology

IRJET- Approximate Multiplier and 8 Bit Dadda Multiplier Implemented through ...

Hypergraph for consensus optimization

- 1. Vertex programming for bipartite graphs. H Miao, X Liu, B Huang, and L Getoor (2013), “A hypergraph-partitioned vertex programming approach for large-scale consensus optimization.” IEEE BigData 2013. Presented by Hershel Safer in Machine Learning :: Reading Group Meetup on 13/11/13. Vertex programming for bipartite graphs – Hershel Safer, Page 1, 13 November 2013

- 2. Outline: The setting. The problem. A solution. An application and improvement.

- 3. The MapReduce model for processing Big Data. The data-parallel paradigm: • Map: Many computers process subproblems in parallel. • Reduce: A master computer combines the subproblem solutions into an overall solution. Appropriate for embarrassingly parallel problems with independent subproblems. Does not support computational dependencies between subproblems. Does not naturally support iteration.

- 4. Graph-parallel computation for machine learning (ML). Many ML algorithms are iterative and have dependencies. Many ML algorithms are expressed naturally on graphs: • Vertices represent subproblems. • Edges represent computational dependencies. GraphLab and Pregel express iterative algorithms with sparse dependencies for implementation on multiple computers: • The algorithm designer defines the computation at each vertex and the information exchange on each edge, for each iteration. • The package handles the mapping of vertices to computers, the details of inter-computer communication, and scheduling. • The package guarantees data consistency (various models possible) and correctness as compared to serial execution.

- 5. Outline: The setting. The problem. A solution. An application and improvement.

- 6. The graph-parallel computing model. Sparse graph G = (V, E). Vertex program Q(v), executed in parallel on each vertex v. Q(v) can interact with Q(u) for each neighboring vertex u (i.e., (u,v) is an edge of E). Interaction (communication) is via messages or shared state: • Vertex information is available to all neighbors. • Edge information is available to the two adjacent vertices.

- 7. Phases of a vertex program Q: GAS model. For each vertex v, iterate these steps (in parallel for all vertices): • Gather: Collect information from neighboring vertices and edges. • Apply: Do the local computation and update the local data. • Scatter: Update the data on the adjacent edges.
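The three GAS phases above can be sketched as a small synchronous loop. This is an illustrative toy, not GraphLab's or Pregel's API: the function name `run_gas`, the dictionary-based graph, and the choice of minimum-label propagation (which labels connected components) as the vertex program are all assumptions made for the example.

```python
from typing import Dict, List, Tuple


def run_gas(adjacency: Dict[int, List[int]], max_iters: int = 100) -> Dict[int, int]:
    """Toy synchronous gather-apply-scatter loop (hypothetical helper).

    The vertex program is minimum-label propagation on an undirected graph:
    every vertex ends up holding the smallest vertex id in its component.
    """
    # Vertex data: each vertex starts with its own id as its label.
    values: Dict[int, int] = {v: v for v in adjacency}
    # Edge data: edge_data[(u, v)] is the label that u last published towards v.
    edge_data: Dict[Tuple[int, int], int] = {
        (u, v): values[u] for u in adjacency for v in adjacency[u]
    }

    for _ in range(max_iters):
        new_values: Dict[int, int] = {}
        for v in adjacency:
            # Gather: read the labels neighbours placed on the incoming edges.
            gathered = [edge_data[(u, v)] for u in adjacency[v]]
            # Apply: local computation, keep the smallest label seen so far.
            new_values[v] = min([values[v]] + gathered)
        if new_values == values:
            break  # no vertex changed, so the computation has converged
        values = new_values
        # Scatter: write the updated labels onto the outgoing edges.
        for u in adjacency:
            for v in adjacency[u]:
                edge_data[(u, v)] = values[u]
    return values


# Two components: {0, 1, 2} and {3, 4}.
example_graph = {0: [1], 1: [0, 2], 2: [1], 3: [4], 4: [3]}
labels = run_gas(example_graph)
```

In a real graph-parallel package the loop over vertices runs in parallel and across machines; the sequential loop here only shows the order of the three phases.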

- 8. Implementing efficient graph-parallel computing. Use a balanced p-way edge cut to partition vertices more-or-less evenly among the computers to take advantage of parallelism. Communication between vertices u and v is cheap if they are on the same computer, expensive otherwise. If edge (u,v) spans computers, a ghost copy of u is kept on v’s computer and vice versa. Changes to u and v are synchronized across the computer network to all ghost copies. So: Partition vertices so that most neighbors are on the same computer. This is easiest if most vertices have small degree (neighborhood). (Figure: Gonzalez 2012)

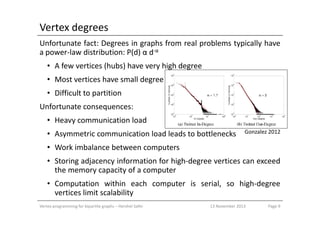

- 9. Vertex degrees. Unfortunate fact: Degrees in graphs from real problems typically have a power-law distribution, $P(d) \propto d^{-\alpha}$: • A few vertices (hubs) have very high degree. • Most vertices have small degree. • Difficult to partition. Unfortunate consequences: • Heavy communication load. • Asymmetric communication load leads to bottlenecks. • Work imbalance between computers. • Storing adjacency information for high-degree vertices can exceed the memory capacity of a computer. • Computation within each computer is serial, so high-degree vertices limit scalability. (Source: Gonzalez 2012)

- 10. Outline: The setting. The problem. A solution. An application and improvement.

- 11. Breaking the bottleneck: Edge partitioning via vertex cut. Instead of partitioning the vertices, use a balanced p-way vertex cut to partition the edges more-or-less evenly among the computers. Vertices, rather than edges, span computers. A vertex with many edges may be replicated on multiple computers. One copy is the master; the rest are mirrors, containing read-only copies of vertex data. Changes to vertices are synchronized across the network. PowerGraph vertex cut formalism: [equation not captured in this transcript]. (Figure and formalism: Gonzalez 2012)
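The formula on this slide was not captured in the transcript. For orientation, the balanced p-way vertex-cut objective is usually stated along the following lines in the PowerGraph paper (Gonzalez 2012). Treat this as a reconstruction from that paper, not as the slide's exact notation. Here $A(e) \in \{1, \dots, p\}$ is the computer assigned to edge $e$, $A(v) \subseteq \{1, \dots, p\}$ is the set of computers holding a copy of vertex $v$, and $\lambda \ge 1$ is a small imbalance factor.

```latex
\min_{A} \; \frac{1}{|V|} \sum_{v \in V} |A(v)|
\qquad \text{subject to} \qquad
\max_{m} \, \bigl|\{\, e \in E : A(e) = m \,\}\bigr| \;<\; \lambda \, \frac{|E|}{p}
```

The objective is the average number of replicas per vertex, and the constraint keeps the number of edges on any one computer close to the even share $|E|/p$.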

- 12. Properties of edge partitioning via vertex cut. The objective minimizes the average number of replicas, and hence the total storage and network-communication cost. The vertex cut addresses the major issues of the edge cut: work balance, network communication, and communication bottlenecks are improved even in the presence of high-degree vertices. The PowerGraph paper describes several ways to implement the partitioning, including random and greedy assignments.
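To make "average number of replicas" concrete, the sketch below measures the replication factor and the heaviest edge load for a given assignment of edges to computers, and shows a seeded random placement. The function names and the dictionary representation are assumptions made for illustration; this is not PowerGraph code, and the greedy strategy from the paper is not reproduced here.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def replication_factor(edge_to_machine: Dict[Edge, int]) -> float:
    """Average number of machines that hold a copy of each vertex."""
    machines_of = defaultdict(set)
    for (u, v), machine in edge_to_machine.items():
        machines_of[u].add(machine)
        machines_of[v].add(machine)
    return sum(len(ms) for ms in machines_of.values()) / len(machines_of)


def max_edge_load(edge_to_machine: Dict[Edge, int]) -> int:
    """Number of edges on the most heavily loaded machine."""
    loads = defaultdict(int)
    for machine in edge_to_machine.values():
        loads[machine] += 1
    return max(loads.values())


def random_placement(edges: List[Edge], p: int, seed: int = 0) -> Dict[Edge, int]:
    """Assign every edge to one of p machines uniformly at random."""
    rng = random.Random(seed)
    return {edge: rng.randrange(p) for edge in edges}


# A star graph: hub 0 with four leaves, split over p = 2 machines.
star_edges = [(0, 1), (0, 2), (0, 3), (0, 4)]
round_robin = {edge: i % 2 for i, edge in enumerate(star_edges)}
star_rf = replication_factor(round_robin)   # hub on 2 machines, each leaf on 1
star_load = max_edge_load(round_robin)
```

In the star example only the hub is replicated, which is the behaviour the vertex cut aims for: the high-degree vertex is split while the edge load stays balanced.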

- 13. Outline: The setting. The problem. A solution. An application and improvement.

- 14. Consensus optimization and ADMM. Consensus optimization decomposes a complex problem into a collection of simpler problems using local variables, and constraining local copies of the variables to be equal to a global consensus variable. Alternating Direction Method of Multipliers (ADMM) is an optimization method that iterates two phases. Although not new, it has gained recent popularity because it can be used for distributed solution of large-scale optimization problems. (Figure: Miao 2013)

- 15. Consensus optimization using ADMM. ADMM is useful for consensus optimization when the dual problem can be decomposed into simple, independent subproblems. Solve them in parallel in one phase, combine subproblem solutions in the second phase, and iterate. The graph for solving consensus optimization problems has two kinds of vertices and a bipartite structure: • Subproblem: Calculating x and λ. • Consensus: Calculating consensus variables X. After a subproblem vertex finishes computing, it transmits results to the relevant consensus vertices, and vice versa. This leads to emergent two-phase behavior. (Figures: Miao 2013)
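The two-phase iteration can be illustrated on a deliberately tiny problem. The sketch below assumes each subproblem is the scalar quadratic f_i(x) = ½(x − a_i)², so the x-update has a closed form and the consensus value should approach the mean of the a_i. The function name, the penalty parameter `rho`, and the problem itself are assumptions for illustration; the update rules follow the standard consensus form of ADMM (as in Boyd 2010), not code from Miao 2013.

```python
from typing import List, Tuple


def consensus_admm(targets: List[float], rho: float = 1.0,
                   iters: int = 200) -> Tuple[float, List[float]]:
    """Consensus ADMM for minimising sum_i 0.5 * (x - a_i)^2 (toy example).

    Each "subproblem vertex" i owns a local copy x_i and a multiplier lam_i;
    the single "consensus vertex" owns z.
    """
    n = len(targets)
    x = [0.0] * n
    lam = [0.0] * n
    z = 0.0
    for _ in range(iters):
        # Subproblem phase (independent, could run in parallel):
        # x_i = argmin 0.5*(x - a_i)^2 + lam_i*(x - z) + (rho/2)*(x - z)^2
        x = [(a - l + rho * z) / (1.0 + rho) for a, l in zip(targets, lam)]
        # Consensus phase: combine the local results into the shared variable.
        z = sum(xi + li / rho for xi, li in zip(x, lam)) / n
        # Multiplier update, done at the subproblem vertices with the new z.
        lam = [l + rho * (xi - z) for l, xi in zip(lam, x)]
    return z, x


z_star, x_star = consensus_admm([1.0, 2.0, 6.0])
```

For this problem the local copies and the consensus variable should all settle at the mean of the targets, which makes it easy to check that both phases are wired correctly before trying harder subproblems.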

- 16. PowerGraph edge partitioning is not good for ADMM. Greedy edge partitioning does not work well for bipartite graphs, such as those used for consensus optimization with ADMM: • Consensus vertices tend to have much higher degree than subproblem vertices. As a result, subproblem vertices tend to get replicated by the PowerGraph vertex-cut methods. • Subproblem vertices perform much heavier computations than do consensus vertices, so replicating the former is not desirable. Solution: Split only consensus vertices, not subproblem vertices.

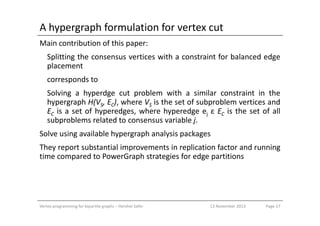

- 17. A hypergraph formulation for vertex cut. Main contribution of this paper: Splitting the consensus vertices with a constraint for balanced edge placement corresponds to solving a hyperedge cut problem with a similar constraint in the hypergraph $H(V_S, E_C)$, where $V_S$ is the set of subproblem vertices and $E_C$ is a set of hyperedges, where hyperedge $e_j \in E_C$ is the set of all subproblems related to consensus variable j. Solve using available hypergraph analysis packages. They report substantial improvements in replication factor and running time compared to PowerGraph strategies for edge partitions.
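The correspondence between the bipartite graph and the hypergraph can be sketched directly. The code below builds one hyperedge per consensus variable and then, for a given assignment of subproblem vertices to computers, counts how many copies each consensus vertex would need. All names are hypothetical, and the partition is supplied by hand: in practice it would come from a hypergraph partitioning package, whose interface is not shown or assumed here.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

BipartiteEdge = Tuple[str, str]  # (subproblem vertex, consensus vertex)


def build_hyperedges(edges: List[BipartiteEdge]) -> Dict[str, Set[str]]:
    """Hyperedge e_j = set of subproblems that touch consensus variable j."""
    hyperedges = defaultdict(set)
    for subproblem, consensus in edges:
        hyperedges[consensus].add(subproblem)
    return dict(hyperedges)


def consensus_replicas(hyperedges: Dict[str, Set[str]],
                       part_of: Dict[str, int]) -> Dict[str, int]:
    """Copies needed per consensus vertex = number of parts its hyperedge spans."""
    return {j: len({part_of[s] for s in subs}) for j, subs in hyperedges.items()}


def edge_loads(edges: List[BipartiteEdge], part_of: Dict[str, int]) -> Dict[int, int]:
    """Each bipartite edge lives on the computer of its subproblem vertex."""
    loads = defaultdict(int)
    for subproblem, _ in edges:
        loads[part_of[subproblem]] += 1
    return dict(loads)


bipartite = [("s1", "c1"), ("s2", "c1"), ("s2", "c2"), ("s3", "c2"), ("s4", "c2")]
partition = {"s1": 0, "s2": 0, "s3": 1, "s4": 1}  # hand-picked for illustration

hyper = build_hyperedges(bipartite)
replicas = consensus_replicas(hyper, partition)
loads = edge_loads(bipartite, partition)
```

Because subproblem vertices are never split, only consensus vertices whose hyperedge is cut by the partition are replicated, and the per-computer edge counts give the balance constraint.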

- 18. References. Hypergraph-partitioned vertex programming: • H. Miao et al., IEEE BigData 2013 • H. Miao et al., Univ. Maryland tech report, 2013. ADMM: • J. Eckstein, RUTCOR research report, 2012 • S. Boyd, Foundations & Trends in Machine Learning, 3 (2010), p. 1. GraphLab: • Y. Low, Uncertainty in Artificial Intelligence 2010 • Y. Low, VLDB 5:8 (2012) • J. Gonzalez, USENIX OSDI 2012