ICCT2017: A user mode implementation of filtering rule management plane using key-value


2017 17th IEEE International Conference on Communication Technology | Chengdu, China | Oct 27-30, 2017


![Abstract: Towards alternative access control model

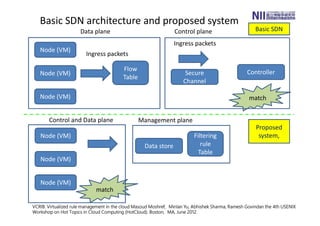

[A] The emergence of network virtualization and related technologies such as

SDN and Cloud computing make us face the new challenge of new alternative

access control model.

[B] Particularly, besides flexibility, fine-grained traffic engineering functionality

for coping with scalability and diversified networks is required for the

deployments of SDN and Cloud Computing.

[C] Our architecture leverages NoSQL data store for handling a large scale of

filtering rules. By adopting NoSQL, we can achieve scalability, availability and

tolerance to network partition. Besides, separating management plane and

control plane, we can achieve responsiveness and strong consistency at the

same time.

[D] In experiment, we have prototyped a lightweight management plane for IP

filtering. Access filtering rules including target IP address, prefix and gateway is

represented as radix tree. It is shown that proposed method can achieve

reasonable utilization in filtering IP packets](https://image.slidesharecdn.com/icct2017-ruoando-171230150245/85/ICCT2017-A-user-mode-implementation-of-filtering-rule-management-plane-using-key-value-2-320.jpg)
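Point [C] separates a management plane, backed by a key-value store, from a control plane. The C++ sketch below is one hedged reading of that split, not the authors' implementation. The hypothetical `ManagementPlane` keeps rules as key-value pairs (key `dest/mask`, value gateway, mirroring the rule fields used later in the deck), with an in-memory `std::map` standing in for the NoSQL store; the hypothetical `ControlPlane` only ever swaps in a complete, versioned snapshot, so it never acts on half of an update.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical management plane: authoritative rule set as key-value pairs.
// A std::map stands in for the NoSQL store (assumption, not the paper's code).
struct ManagementPlane {
    std::map<std::string, std::string> rules;  // "10.0.0.0/8" -> "192.168.0.2"
    std::uint64_t version = 0;                 // bumped on every change

    void put(const std::string& dest, int mask, const std::string& gateway) {
        rules[dest + "/" + std::to_string(mask)] = gateway;
        ++version;
    }

    void erase(const std::string& dest, int mask) {
        if (rules.erase(dest + "/" + std::to_string(mask)) > 0) ++version;
    }
};

// Hypothetical control plane: holds the rules currently in force.
struct ControlPlane {
    std::map<std::string, std::string> table;
    std::uint64_t applied_version = 0;

    // Replace the whole table with the management plane's snapshot, so the
    // control plane never works from a partially applied update.
    // Returns false when there is nothing new to apply.
    bool sync(const ManagementPlane& mp) {
        if (mp.version == applied_version) return false;
        table = mp.rules;
        applied_version = mp.version;
        return true;
    }
};
```

For example, after `put("10.0.0.0", 8, "192.168.0.2")`, a call to `sync()` makes the rule visible in `table`, and a second `sync()` with no intervening change returns `false`.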

![Design requirement: fine-grained traffic functioning for scalability, diversity and flexibility](https://image.slidesharecdn.com/icct2017-ruoando-171230150245/85/ICCT2017-A-user-mode-implementation-of-filtering-rule-management-plane-using-key-value-6-320.jpg)

[1] Scalability and diversity: Garfinkel pointed out that creating a new virtual instance is far easier than creating a physical one. This rapid and unpredictable growth can exacerbate management tasks and, in the worst case, multiply the impact of catastrophic events when every instance must be patched. Enforcing homogeneity is difficult when users can easily create their own special-purpose VMs at little cost, for example by copying files.

[2] Flexibility: In SDN, networks are diversified, programmable and elastic. For a long time, from active networks to advanced network technologies such as cloud and SDN, one of the general goals of networking research has been to arrive at a flexible network.

[3] Fine-grained traffic functioning: Commercial corporations, private enterprises and universities employ datacenters to run a variety of applications and cloud-based services. The MicroTE study reveals that existing traffic engineering performs 15% to 20% worse than the optimal solution.

"MicroTE: fine grained traffic engineering for data centers", CoNEXT '11: Proceedings of the Seventh COnference on emerging Networking EXperiments and Technologies.

Lucian Popa, Ion Stoica and Sylvia Ratnasamy, "Rule-based Forwarding (RBF): Improving the Internet's flexibility and security", HotNets 2009.

![Tradeoffs between manageability and performance](https://image.slidesharecdn.com/icct2017-ruoando-171230150245/85/ICCT2017-A-user-mode-implementation-of-filtering-rule-management-plane-using-key-value-7-320.jpg)

Dan Levin, Andreas Wundsam, Brandon Heller, Nikhil Handigol and Anja Feldmann, "Logically centralized?: state distribution trade-offs in software defined networks", HotSDN '12: Proceedings of the First Workshop on Hot Topics in Software Defined Networks.

Controller component choices:

[1] Strongly consistent: controller components always operate on the same world view. This imposes delay and overhead.

[2] Eventually consistent: controller components incorporate information as it becomes available, but may make decisions based on different world views.

http://www.richardclegg.org/node/21

[Figure: the CAP theorem (Eric Brewer, 2000) as a triangle of Consistency (C), Availability (A) and Tolerance to network partition (P), with RDBMS and NoSQL placed on it, contrasting enforced consistency with eventual consistency.]

Strong consistency is preferred. With NoSQL key-value stores, A (availability), P (tolerance to network partition) and S (scalability) can be achieved.
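The two controller choices above can be contrasted in a small model. The sketch below assumes a hypothetical `ReplicatedView` class holding one rule view per controller replica. In strong mode a write is copied to every replica before `write()` returns (the delay and overhead the slide mentions); in eventual mode the other replicas receive it only when `propagate()` runs, so they may read a stale view in the meantime. It illustrates the trade-off only and does not model failures, partitions or conflict resolution.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <map>
#include <string>
#include <utility>
#include <vector>

// One controller replica's world view: rule key -> action (hypothetical model).
using View = std::map<std::string, std::string>;

class ReplicatedView {
public:
    ReplicatedView(std::size_t replicas, bool strong)
        : views_(replicas), strong_(strong) {}

    // Apply a write at replica `origin` and distribute it to the others.
    void write(std::size_t origin, const std::string& key, const std::string& value) {
        views_[origin][key] = value;
        for (std::size_t i = 0; i < views_.size(); ++i) {
            if (i == origin) continue;
            if (strong_)
                views_[i][key] = value;                // synchronous: all replicas updated now
            else
                pending_.push_back({i, {key, value}}); // asynchronous: delivered later
        }
    }

    // Deliver queued updates; only eventual mode ever queues anything.
    void propagate() {
        for (const auto& u : pending_) views_[u.first][u.second.first] = u.second.second;
        pending_.clear();
    }

    // Read from one replica; returns "" if that replica does not know the key.
    std::string read(std::size_t replica, const std::string& key) const {
        auto it = views_[replica].find(key);
        return it == views_[replica].end() ? "" : it->second;
    }

private:
    std::vector<View> views_;
    bool strong_;
    std::deque<std::pair<std::size_t, std::pair<std::string, std::string>>> pending_;
};
```

After writing `10.0.0.0/8 -> drop` through replica 0, the strongly consistent model answers `drop` at replica 1 immediately, while the eventually consistent one returns an empty result there until `propagate()` is called.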

![Adopting basic datastore on management plane](https://image.slidesharecdn.com/icct2017-ruoando-171230150245/85/ICCT2017-A-user-mode-implementation-of-filtering-rule-management-plane-using-key-value-10-320.jpg)

```cpp
// Read every filtering rule from the MongoDB collection `ns` (legacy C++ driver).
// client, ns, dstAddress, add_rtentry, find_route and rm_rtentry are
// declared elsewhere in the original program.
std::auto_ptr<mongo::DBClientCursor> cursor =
    client.query(ns, mongo::BSONObj());

while (cursor->more()) {
    mongo::BSONObj p = cursor->next();

    // Extract the rule fields stored in the document
    mongo::OID oid = p["_id"].OID();
    std::string dest = p["dest"].str();
    int mask = p["mask"].numberInt();
    std::string gateway = p["gateway"].str();

    const char *p0 = dest.c_str();
    const char *p1 = gateway.c_str();

    // Install the rule in the radix-tree table, then test a lookup
    add_rtentry(p0, mask, p1);

    int res;
    res = find_route(dstAddress);
    if (res == 0)
        printf("route found\n");

    /* flush entry */
    rm_rtentry(p0, mask);
}
```

Filtering rule in BSON (shown as JSON):

```json
{"_id": {"$oid": "53370eaeb1f58908a9837910"},
 "dest": "10.0.0.0", "mask": 8, "gateway": "192.168.0.2"}
```

A radix tree (also patricia trie, radix trie or compact prefix tree) is a space-optimized trie data structure in which each node that is the only child is merged with its parent.

```cpp
// Excerpt of the route lookup (longest-prefix match) in the radix tree;
// entry, addr_dst, dst and rttable are declared earlier in the original listing.
entry.addr = ntohl(addr_dst.s_addr);   // destination address in host byte order
entry.prefix_len = 32;                  // look up the full /32 host address

radix_tree<rtentry, in_addr>::iterator it;

it = rttable.longest_match(entry);
if (it == rttable.end()) {
    std::cout << "no route to " << dst << std::endl;
    return 1;
}
```
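The lookup above relies on a radix tree library. To show what longest-prefix matching over rules such as `{"dest": "10.0.0.0", "mask": 8, "gateway": "192.168.0.2"}` does, here is a self-contained C++17 sketch. It swaps in a plain, uncompressed binary trie (one node per address bit) instead of the path-compressed radix tree described on the slide. The class `PrefixTable`, its `add`/`remove`/`lookup` methods and `parse_ipv4` are hypothetical stand-ins for `add_rtentry`, `rm_rtentry` and `find_route`, and the use of `inet_pton`/`ntohl` assumes a POSIX system.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <cstdint>
#include <memory>
#include <optional>
#include <string>

// Parse a dotted-quad IPv4 address into host byte order; nullopt if invalid.
std::optional<std::uint32_t> parse_ipv4(const std::string& s) {
    in_addr a{};
    if (inet_pton(AF_INET, s.c_str(), &a) != 1) return std::nullopt;
    return ntohl(a.s_addr);
}

// Hypothetical rule table: uncompressed binary trie keyed on address bits.
class PrefixTable {
    struct Node {
        std::unique_ptr<Node> child[2];
        std::optional<std::string> gateway;  // set if a rule ends at this node
    };
    Node root_;

    static int bit_at(std::uint32_t addr, int i) { return (addr >> (31 - i)) & 1; }

public:
    // Install rule dest/mask -> gateway (mask in 0..32).
    void add(std::uint32_t dest, int mask, const std::string& gateway) {
        Node* n = &root_;
        for (int i = 0; i < mask; ++i) {
            int b = bit_at(dest, i);
            if (!n->child[b]) n->child[b] = std::make_unique<Node>();
            n = n->child[b].get();
        }
        n->gateway = gateway;
    }

    // Remove rule dest/mask; empty nodes are kept for simplicity.
    void remove(std::uint32_t dest, int mask) {
        Node* n = &root_;
        for (int i = 0; i < mask && n; ++i) n = n->child[bit_at(dest, i)].get();
        if (n) n->gateway.reset();
    }

    // Longest-prefix match: remember the deepest rule seen along the path.
    std::optional<std::string> lookup(std::uint32_t addr) const {
        const Node* n = &root_;
        std::optional<std::string> best = n->gateway;
        for (int i = 0; i < 32 && n; ++i) {
            n = n->child[bit_at(addr, i)].get();
            if (n && n->gateway) best = n->gateway;
        }
        return best;
    }
};
```

With rules `10.0.0.0/8` and `10.1.0.0/16` installed, `10.1.2.3` resolves to the /16 gateway and `10.2.0.1` falls back to the /8 gateway. A radix tree would collapse chains of single-child nodes to save memory, but the matching logic is the same.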

![Conclusions: Towards alternative access control model](https://image.slidesharecdn.com/icct2017-ruoando-171230150245/85/ICCT2017-A-user-mode-implementation-of-filtering-rule-management-plane-using-key-value-14-320.jpg)

[A] The emergence of network virtualization and related technologies such as SDN and cloud computing confronts us with a new challenge: the need for an alternative access control model.

[B] In particular, deployments of SDN and cloud computing require not only flexibility but also fine-grained traffic engineering functionality to cope with scalability and network diversity.

[C] Our architecture leverages a NoSQL data store to handle a large number of filtering rules. By adopting NoSQL, we can achieve scalability, availability and tolerance to network partitions. In addition, by separating the management plane from the control plane, we can achieve responsiveness and strong consistency at the same time.

[D] In our experiment, we prototyped a lightweight management plane for IP filtering. Access filtering rules, consisting of a target IP address, a prefix and a gateway, are represented as a radix tree. The results show that the proposed method can achieve reasonable utilization when filtering IP packets.

Recommended

A hybrid cloud approach for secure authorized

A hybrid cloud approach for secure authorizedNinad Samel This document summarizes a research paper that proposes a hybrid cloud approach for secure authorized data deduplication. The paper presents a scheme that uses convergent encryption to encrypt files before uploading them to cloud storage. It also considers the differential privileges of users when performing duplicate checks, in addition to file content. A prototype is implemented to test the proposed authorized duplicate check scheme. Experimental results show the scheme incurs minimal overhead compared to normal cloud storage operations. The goal is to better protect data security while supporting deduplication in a hybrid cloud architecture.

Conference Paper: Multistage OCDO: Scalable Security Provisioning Optimizatio...

Conference Paper: Multistage OCDO: Scalable Security Provisioning Optimizatio...Ericsson The document proposes a multistage optimization framework called OCDO that provides scalable security provisioning in SDN-based cloud environments. OCDO uses a decomposition technique that leverages network topology characteristics to allow concurrent optimization. It also uses a segmentation technique to distribute security function availability among network nodes based on semantics. The framework aims to optimize both computing and networking resources simultaneously while ensuring security function precedence requirements and proper handling of stateful functions. Evaluation results show OCDO achieves higher efficiency and can scale to large data center topologies.

Multi- Level Data Security Model for Big Data on Public Cloud: A New Model

Multi- Level Data Security Model for Big Data on Public Cloud: A New ModelEswar Publications With the advent of cloud computing the big data has emerged as a very crucial technology. The certain type of cloud provides the consumers with the free services like storage, computational power etc. This paper is intended to make use of infrastructure as a service where the storage service from the public cloud providers is going to leveraged by an individual or organization. The paper will emphasize the model which can be used by anyone without any cost. They can store the confidential data without any type of security issue, as the data will be altered

in such a way that it cannot be understood by the intruder if any. Not only that but the user can retrieve back the original data within no time. The proposed security model is going to effectively and efficiently provide a robust security while data is on cloud infrastructure as well as when data is getting migrated towards cloud infrastructure or vice versa.

Data Decentralisation: Efficiency, Privacy and Fair Monetisation

Data Decentralisation: Efficiency, Privacy and Fair MonetisationAngelo Corsaro A presentation give at the European H-Cloud Conference to motivate decentralisation as a mean to improve energy efficiency, privacy, and opportunity for monetisation for your digital footprint.

iaetsd Controlling data deuplication in cloud storage

iaetsd Controlling data deuplication in cloud storageIaetsd Iaetsd This document discusses controlling data deduplication in cloud storage. It proposes an architecture that provides duplicate check procedures with minimal overhead compared to normal cloud storage operations. The key aspects of the proposed system are:

1) It uses convergent encryption to encrypt data for privacy while still allowing for deduplication of duplicate files.

2) It introduces a private cloud that manages user privileges and generates tokens for authorized duplicate checking in a hybrid cloud architecture.

3) It evaluates the overhead of the proposed authorized duplicate checking scheme and finds it incurs negligible overhead compared to normal cloud storage operations.

Diploma Paper Contribution

Diploma Paper ContributionMehdi Touati This document proposes and analyzes protocols for building secure routing in mobile ad hoc networks with an incomplete set of security associations between nodes. It first shows that existing secure routing protocols like Ariadne can still function even when security associations are not fully established between all node pairs. It then proposes a new protocol called BISS (Building Secure Routing out of an Incomplete Set of Security Associations) specifically designed for this scenario, and analyzes its security and performance based on simulations. The key idea is that routing can still be secured as long as a fraction of security associations exist between nodes.

A Back Propagation Neural Network Intrusion Detection System Based on KVM

A Back Propagation Neural Network Intrusion Detection System Based on KVMInternational Journal of Innovation Engineering and Science Research A Network Intrusion Detection System (NIDS) monitors a network for malicious activities or policy violations [1]. The Kernel-based Virtual Machine (KVM) is a full virtualization solution for Linux on x86 hardware virtualization extensions [2]. We design and implement a back-propagation network intrusion detection system in KVM. Compared to traditional Back Propagation (BP) NIDS, the Particle Swarm Optimization (PSO) algorithm is applied to improve efficiency. The results show an improved system in terms of recall and precision along with missing detection rates.

Cloud computing and security issues in the

Cloud computing and security issues in theIJNSA Journal Cloud computing has formed the conceptual and infrastructural basis for tomorrow’s computing. The

global computing infrastructure is rapidly moving towards cloud based architecture. While it is important

to take advantages of could based computing by means of deploying it in diversified sectors, the security

aspects in a cloud based computing environment remains at the core of interest. Cloud based services and

service providers are being evolved which has resulted in a new business trend based on cloud technology.

With the introduction of numerous cloud based services and geographically dispersed cloud service

providers, sensitive information of different entities are normally stored in remote servers and locations

with the possibilities of being exposed to unwanted parties in situations where the cloud servers storing

those information are compromised. If security is not robust and consistent, the flexibility and advantages

that cloud computing has to offer will have little credibility. This paper presents a review on the cloud

computing concepts as well as security issues inherent within the context of cloud computing and cloud

infrastructure.

SECURE THIRD PARTY AUDITOR (TPA) FOR ENSURING DATA INTEGRITY IN FOG COMPUTING

SECURE THIRD PARTY AUDITOR (TPA) FOR ENSURING DATA INTEGRITY IN FOG COMPUTINGIJNSA Journal Fog computing is an extended version of Cloud computing. It minimizes the latency by incorporating Fog servers as intermediates between Cloud Server and users. It also provides services similar to Cloud like Storage, Computation and resources utilization and security.Fog systems are capable of processing large amounts of data locally, operate on-premise, are fully portable, and can be installed on the heterogeneous hardware. These features make the Fog platform highly suitable for time and location-sensitive applications. For example, the Internet of Things (IoT) devices isrequired to quickly process a large amount of data. The Significance of enterprise data and increased access rates from low-resource terminal devices demands for reliable and low- cost authentication protocols. Lots of researchers have proposed authentication protocols with varied efficiencies.As a part of our contribution, we propose a protocol to ensure data integrity which is best suited for fog computing environment.

Vortex II -- The Industrial IoT Connectivity Standard

Vortex II -- The Industrial IoT Connectivity StandardAngelo Corsaro The large majority of commercial IoT platforms target consumer applications and fall short in addressing the requirements characteristic of Industrial IoT. Vortex has always focused on addressing the challenges characteristic of Industrial IoT systems and with 2.4 release sets a the a new standard!

This presentation will (1) introduce the new features introduced in with Vortex 2.4, (2) explain how Vortex 2.4 addresses the requirements of Industrial Internet of Things application better than any other existing platform, and (3)showcase how innovative companies are using Vortex for building leading edge Industrial Internet of Things applications.

A Hybrid Cloud Approach for Secure Authorized Deduplication

A Hybrid Cloud Approach for Secure Authorized Deduplication1crore projects - The document proposes a new deduplication system that supports differential or authorized duplicate checking in a hybrid cloud architecture consisting of a public and private cloud. This allows users to securely check for duplicates of files based on their privileges.

- Convergent encryption is used to encrypt files for deduplication while maintaining confidentiality. A new construction is presented that additionally encrypts files with keys derived from user privileges to enforce access control during duplicate checking.

- The system aims to efficiently solve the problem of deduplication with access control in cloud computing. It allows duplicate checking of files marked with a user's corresponding privileges to realize access control while preserving benefits of deduplication.

Privacy-Preserving Public Auditing for Regenerating-Code-Based Cloud Storage

Privacy-Preserving Public Auditing for Regenerating-Code-Based Cloud Storage1crore projects IEEE PROJECTS 2015

1 crore projects is a leading Guide for ieee Projects and real time projects Works Provider.

It has been provided Lot of Guidance for Thousands of Students & made them more beneficial in all Technology Training.

Dot Net

DOTNET Project Domain list 2015

1. IEEE based on datamining and knowledge engineering

2. IEEE based on mobile computing

3. IEEE based on networking

4. IEEE based on Image processing

5. IEEE based on Multimedia

6. IEEE based on Network security

7. IEEE based on parallel and distributed systems

Java Project Domain list 2015

1. IEEE based on datamining and knowledge engineering

2. IEEE based on mobile computing

3. IEEE based on networking

4. IEEE based on Image processing

5. IEEE based on Multimedia

6. IEEE based on Network security

7. IEEE based on parallel and distributed systems

ECE IEEE Projects 2015

1. Matlab project

2. Ns2 project

3. Embedded project

4. Robotics project

Eligibility

Final Year students of

1. BSc (C.S)

2. BCA/B.E(C.S)

3. B.Tech IT

4. BE (C.S)

5. MSc (C.S)

6. MSc (IT)

7. MCA

8. MS (IT)

9. ME(ALL)

10. BE(ECE)(EEE)(E&I)

TECHNOLOGY USED AND FOR TRAINING IN

1. DOT NET

2. C sharp

3. ASP

4. VB

5. SQL SERVER

6. JAVA

7. J2EE

8. STRINGS

9. ORACLE

10. VB dotNET

11. EMBEDDED

12. MAT LAB

13. LAB VIEW

14. Multi Sim

CONTACT US

1 CRORE PROJECTS

Door No: 214/215,2nd Floor,

No. 172, Raahat Plaza, (Shopping Mall) ,Arcot Road, Vadapalani, Chennai,

Tamin Nadu, INDIA - 600 026

Email id: [email protected]

website:1croreprojects.com

Phone : +91 97518 00789 / +91 72999 51536

140320702029 maurya ppt

140320702029 maurya pptMaurya Shah The document discusses using network coding with multi-generation mixing to improve data recovery in cloud storage systems. It provides a literature review of several papers that use techniques like Maximum Distance Separation codes, random linear network coding, and instantly decodable network coding. The proposed work develops an architecture that uses multi-generation mixing and the DODEX+ encoding scheme to encode and retrieve data across multiple mobile clients and cloud storage. This aims to provide more efficient and reliable data delivery over wireless mesh networks. Tools like Amazon S3 and the NS2 network simulator are used to implement and test the proposed system.

zenoh: The Edge Data Fabric

zenoh: The Edge Data FabricAngelo Corsaro This document provides an introduction to Eclipse Zenoh, an open source project that unifies data in motion, data at rest, and computations in a distributed system. Zenoh elegantly blends traditional publish-subscribe with geo-distributed storage, queries, and computations. The presentation will demonstrate Zenoh's advantages for enabling typical edge computing scenarios and simplifying large-scale distributed applications through real-world use cases. It will also provide an overview of Zenoh's architecture, performance, and APIs.

Security and privacy issues of fog

Security and privacy issues of fogRezgar Mohammad This document discusses security and privacy issues of fog computing based on a survey of existing work. It begins with an overview of fog computing, defining it as an extension of cloud computing to the edge of networks. It then identifies several key security and privacy challenges of fog computing, including issues of trust and authentication, network security, secure data storage, and secure and private data computation. Several potential solutions are also briefly discussed, such as reputation-based trust models, biometric authentication, software-defined networking for security, and techniques like homomorphic encryption to enable verifiable and private computation on outsourced data.

zenoh -- the ZEro Network OverHead protocol

zenoh -- the ZEro Network OverHead protocolAngelo Corsaro This presentation introduces the key ideas behind zenoh -- an Internet scale data-centric protocol that unifies data-sharing between any kind of device including those constrained with respect to the node resources, such as computational resources and power, as well as the network.

The Death Of Computer Forensics: Digital Forensics After the Singularity

The Death Of Computer Forensics: Digital Forensics After the SingularityTech and Law Center The document summarizes a workshop discussion on the challenges of digital forensics in the cloud computing era. Participants including lawyers, computer scientists, and law enforcement discussed both technical and legal issues.

Key technical challenges discussed were that cloud computing data is distributed across networks in various deployment models, requiring different forensic techniques. Additionally, in cloud systems data may not remain in one location as in traditional forensics, and encryption of data in transit and execution makes analysis more difficult.

Legal issues around jurisdiction and access to cloud data across international borders were also raised. The group aims to draft a paper further exploring these challenges to digital forensics in light of increased cloud computing.

Building IoT Applications with Vortex and the Intel Edison Starter Kit

Building IoT Applications with Vortex and the Intel Edison Starter KitAngelo Corsaro Whilst there isn’t a universal agreement on what exactly is IoT, nor on the line that separates Consumer and Industrial IoT, everyone unanimously agrees that unconstrained access to data is the game changing dimension of IoT.

Vortex positions as the best data sharing platform for IoT enabling data to flow unconstrained across devices and at any scale.

This presentation, will demonstrate how quickly and effectively you can build real-world IoT applications that scale using Vortex and the Intel Edison Starter Kit. Specifically, you will learn how to leverage vortex to virtualise devices, integrate different protocols, flexibly execute analytics where it makes the most sense and leverage Cloud as well as Fog computing architectures.

Throughout the webcast we will leverage Intel’s Edison starter kit, available at https://software.intel.com/en-us/iot/hardware/edison, you will be able to download our code examples before the webcast to particulate to the live demo!

The Data Distribution Service

The Data Distribution ServiceAngelo Corsaro DDS (Data Distribution Service) is a standard for real-time data sharing across networked devices. It provides a global data space abstraction that allows applications to asynchronously publish and subscribe to data topics. DDS supports features like dynamic discovery, decentralized implementation, and adaptive connectivity to enable interoperable and efficient data distribution.

Turn InSecure And High Speed Intra-Cloud and Inter-Cloud Communication

Turn InSecure And High Speed Intra-Cloud and Inter-Cloud CommunicationRichard Jung The document discusses secure and high-speed communication within and between cloud infrastructures. It aims to analyze different data migration techniques for optimizing security and performance of intra-cloud and inter-cloud communication. A private cloud network was created using OpenStack's Nova Architecture to communicate with Amazon's Elastic Cloud public cloud platform. Results show that the Virtual Private Networking technique OpenVPN provides strong security while HTTPS and Secure Copy provide minimal security without sacrificing performance for data migration within and between clouds.

Lessons Learned from Porting HelenOS to RISC-V

Lessons Learned from Porting HelenOS to RISC-VMartin Děcký HelenOS is an open source operating system based on the microkernel multiserver design principles. One of its goals is to provide excellent target platform portability. From the time of its inception, HelenOS already supported 4 different hardware platforms and currently it supports platforms as diverse as x86, SPARCv9 and ARM. This talk presents practical experiences and lessons learned from porting HelenOS to RISC-V.

While the unprivileged (user space) instruction set architecture of RISC-V has been declared stable in 2014, the privileged instruction set architecture is technically still allowed to change in the future. Likewise, many major design features and building blocks of HelenOS are already in place, but no official commitment to ABI or API stability has been made yet. This gives an interesting perspective on the pros and cons of both HelenOS and RISC-V. The talk also points to some possible research directions with respect to hardware/software co-design.

Fluid IoT Architectures

Fluid IoT ArchitecturesAngelo Corsaro The document discusses the evolution of IoT architectures from cloud-centric to more distributed models like fog and mist computing. It argues that a unifying "Fluid IoT Architecture" is needed to eliminate technological segregation across cloud, fog, and mist layers. This proposed Fluid IoT Architecture would abstract computing, storage, and networking resources from end to end.

Covert Flow Confinement For Vm Coalition

Covert Flow Confinement For Vm CoalitionLogic Solutions, Inc. The document proposes a Covert Flows Confinement mechanism (CFCC) for virtual machine (VM) coalitions in cloud computing environments. CFCC uses a prioritized Chinese Wall model to control covert information flows between VMs based on assigned labels, allowing flows between similarly-labeled VMs but disallowing flows between VMs from different conflict of interest sets. The architecture features distributed mandatory access control for all VMs and centralized information exchange. Experiments show the performance overhead of CFCC is acceptable. Future work will add application-level flow control for VM coalitions.

A NEW FRAMEWORK FOR SECURING PERSONAL DATA USING THE MULTI-CLOUD

A NEW FRAMEWORK FOR SECURING PERSONAL DATA USING THE MULTI-CLOUDijsptm Relaying On A Single Cloud As A Storage Service Is Not A Proper Solution For A Number Of Reasons; For Instance, The Data Could Be Captured While Uploaded To The Cloud, And The Data Could Be Stolen From The Cloud Using A Stolen Id. In This Paper, We Propose A Solution That Aims At Offering A Secure Data Storage For Mobile Cloud Computing Based On The Multi-Clouds Scheme. The Proposed Solution

Will Take The Advantages Of Multi-Clouds, Data Cryptography, And Data Compression To Secure The

Distributed Data; By Splitting The Data Into Segments, Encrypting The Segments, Compressing The

Segments, Distributing The Segments Via Multi-Clouds While Keeping One Segment On The Mobile Device

Memory; Which Will Prevent Extracting The Data If The Distributed Segments Have Been Intercepted

Data Sharing in Extremely Resource Constrained Envionrments

Data Sharing in Extremely Resource Constrained EnvionrmentsAngelo Corsaro This presentation introduces XRCE a new protocol for very efficiently distributing data in resource constrained (power, network, computation, and storage) environments. XRCE greatly improves the wire efficiency of existing protocol and in many cases provides higher level abstractions.

An Efficient PDP Scheme for Distributed Cloud Storage

An Efficient PDP Scheme for Distributed Cloud StorageIJMER International Journal of Modern Engineering Research (IJMER) is Peer reviewed, online Journal. It serves as an international archival forum of scholarly research related to engineering and science education.

International Journal of Modern Engineering Research (IJMER) covers all the fields of engineering and science: Electrical Engineering, Mechanical Engineering, Civil Engineering, Chemical Engineering, Computer Engineering, Agricultural Engineering, Aerospace Engineering, Thermodynamics, Structural Engineering, Control Engineering, Robotics, Mechatronics, Fluid Mechanics, Nanotechnology, Simulators, Web-based Learning, Remote Laboratories, Engineering Design Methods, Education Research, Students' Satisfaction and Motivation, Global Projects, and Assessment…. And many more.

Microkernels in the Era of Data-Centric Computing

Microkernels in the Era of Data-Centric ComputingMartin Děcký Martin Děcký presented on microkernels in the era of data-centric computing. He discussed how emerging memory technologies and near-data processing can break away from the von Neumann architecture. Near-data processing provides benefits like reduced latency, increased throughput, and lower energy consumption. This leads to more distributed and heterogeneous systems that are well-matched to multi-microkernel architectures, with microkernels providing isolation and message passing between cores. The talk outlined an incremental approach from initial workload offloading to developing a full multi-microkernel system.

Cloud Camp Milan 2K9 Telecom Italia: Where P2P?

Cloud Camp Milan 2K9 Telecom Italia: Where P2P?Gabriele Bozzi 1. The document discusses the potential for peer-to-peer (P2P) computing as an alternative or complement to the traditional client-server model, especially in the context of cloud computing.

2. It notes challenges with P2P such as lack of centralized control and potential for freeloading, but also advantages like harnessing unused resources.

3. Emerging technologies like autonomic and cognitive networking aim to address P2P challenges by enabling self-configuration and optimization of distributed resources.

csec66 a user mode implementation of filtering rule management plane on virtu...

csec66 a user mode implementation of filtering rule management plane on virtu...Ruo Ando The document proposes a user mode implementation of a management plane for scalable and manageable filtering rule management in a virtualized networking environment. It leverages a NoSQL data store to handle large numbers of filtering rules, separating the management plane from the control plane. This allows responsiveness and strong consistency while achieving scalability, availability and tolerance to network partitions. An experiment prototyping lightweight IP filtering showed the proposed method can achieve reasonable CPU utilization of under 3% for rulesets of 10-100 rules. Further optimization is needed to handle larger rulesets of 1000-10000 rules.

ZCloud Consensus on Hardware for Distributed Systems

ZCloud Consensus on Hardware for Distributed SystemsGokhan Boranalp 3rd Workshop on Dependability,

May 8, Monday 2017, İYTE,

https://goo.gl/fSVnZy

http://dcs.iyte.edu.tr/ws/ppt/10/presentation.pdf

In distributed applications where the number of members in the cluster increases, the

separation of the consensus related operations at the hardware level is essential for the

following reasons:

1. At the operating system level, messages broadcast on the protocol stack cause latency.

2. It is necessary to increase the number of completed transactions in the communication of

distributed system components and on the network unit (throughput).

3. For devices with limited storage and CPU computing facilities that use embedded operating

systems such as IOT devices, it is also necessary to reduce the processing burden due to

"consensus" operations.

4. A common consensus communication model is needed for different applications that need

to work together in (BFT) distributed systems.





Virtualization in Distributed System: A Brief Overview

Virtualization in Distributed System: A Brief OverviewBOHR International Journal of Intelligent Instrumentation and Computing

Drops division and replication of data in cloud for optimal performance and s...

Drops division and replication of data in cloud for optimal performance and s...Pvrtechnologies Nellore
