Introduction to Deep Learning

- 1. Deep Learning • In the name of God • Mehrnaz Faraz, Faculty of Electrical Engineering, K. N. Toosi University of Technology • Milad Abbasi, Faculty of Electrical Engineering, Sharif University of Technology

- 2. AI, ML and DL

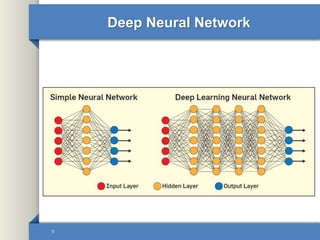

- 3. Why Deep Learning? • Deep learning performs better at classification than traditional machine learning methods, because: – Deep learning methods process data through multiple layers, taking less time and achieving better accuracy.

- 4. Deep Learning Applications • Image recognition • Speech recognition • Robots and self-driving cars • Healthcare • Portfolio management • Weather forecasting

- 5. Strengths and Challenges • Strengths: – No need for feature engineering – Best results with unstructured data – No need for labeling of data – Efficient at delivering high-quality results – Hardware and software support • Challenges: – The need for lots of data – Neural networks at the core of deep learning are black boxes – Overfitting the model – Lack of flexibility

- 6. Deep Learning Algorithms • Unsupervised Learning – Autoencoders (AE) – Generative Adversarial Networks (GAN) • Supervised Learning – Recurrent Neural Networks (RNN) – Convolutional Neural Networks (CNN) • Semi-Supervised Learning • Reinforcement Learning

- 7. Unsupervised Learning • Find structure or patterns in the unlabeled data – Clustering – Compression – Feature & Representation learning – Dimensionality reduction – Generative models

- 8. Supervised Learning • Data: (x, y) • Learn a mapping function f where y = f(x) – Classification – Regression

- 10. Overfitting • The model fits the training set too closely and does not perform as well on the test set • Occurs in complex networks trained on a small data set

- 11. Overfitting • Steps for reducing overfitting: – Add more data – Use data augmentation – Use architectures that generalize well – Add regularization (mostly dropout; L1/L2 regularization is also possible) – Reduce architecture complexity

- 12. Training Neural Network • Data preparation • Choosing a network architecture • Training algorithm and optimization • Improving training algorithm – Improve convergence rate

- 13. Data preparation • Data need to be made suitable for a given method • Data in the real world is dirty – Incomplete: lacking attribute values or lacking certain attributes of interest – Noisy: containing errors or outliers • More data = better training • Remove incomplete and corrupted data

- 14. Data preparation • Data pre-processing: – Normalization: helps prevent attributes with large ranges from outweighing attributes with small ranges • Min-max normalization: $x_{new} = \frac{x_{old} - min_{old}}{max_{old} - min_{old}} (max_{new} - min_{new}) + min_{new}$ • Z-score normalization: $x_{new} = \frac{x_{old} - \bar{x}_{old}}{std(x_{old})}$ – Doesn’t eliminate outliers – Mean: 0, Std: 1
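
The two normalization formulas on this slide can be sketched in plain Python. This is a minimal illustration, not the authors' code: the function names `min_max_normalize` and `z_score_normalize` are hypothetical, and the population standard deviation is assumed for the z-score.

```python
from statistics import mean, pstdev


def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Rescale values linearly from [min, max] of the data to [new_min, new_max]."""
    old_min, old_max = min(values), max(values)
    if old_max == old_min:
        raise ValueError("min-max normalization is undefined for constant data")
    scale = (new_max - new_min) / (old_max - old_min)
    return [(v - old_min) * scale + new_min for v in values]


def z_score_normalize(values):
    """Shift and scale values so that they have mean 0 and standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        raise ValueError("z-score normalization is undefined for constant data")
    return [(v - mu) / sigma for v in values]
```

For example, `min_max_normalize([2, 4, 6])` maps the smallest value to 0 and the largest to 1, while `z_score_normalize` keeps outliers in the data but expresses them in units of standard deviation.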

- 15. Data preparation • Histogram equalization: – Is a technique for adjusting image intensities to enhance contrast (Figure: an image before and after histogram equalization)
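
As a rough sketch of histogram equalization, the function below remaps integer intensities through the cumulative histogram, which is the usual textbook formulation. The name `equalize_histogram` and the flat list of 8-bit pixel values are assumptions made for illustration.

```python
def equalize_histogram(pixels, levels=256):
    """Spread integer intensities in range(levels) across the full range using the CDF."""
    if not pixels:
        return []
    # Count how often each intensity occurs.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution: number of pixels with intensity <= v.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        return list(pixels)  # only one intensity present, nothing to stretch
    return [
        round((cdf[p] - cdf_min) / (total - cdf_min) * (levels - 1))
        for p in pixels
    ]
```

A low-contrast input such as `[100, 100, 101, 102]` is stretched so that its darkest value becomes 0 and its brightest becomes 255.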

- 16. Data preparation • Data augmentation – Means increasing the number of data points; for images, this means increasing the number of images in the dataset. – Popular augmentation techniques (for images): • Flip • Rotation • Scale • Crop • Translation • Gaussian noise • Conditional GANs (Figure: flip example)
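
Two of the listed augmentations, flip and translation, can be illustrated on an image stored as a list of pixel rows. The helper names and the zero fill value below are hypothetical choices for this sketch.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in image]


def flip_vertical(image):
    """Reverse the order of the rows (top to bottom)."""
    return [list(row) for row in reversed(image)]


def translate_right(image, shift, fill=0):
    """Shift every row right by `shift` pixels, padding the left edge with `fill`."""
    width = len(image[0])
    if not 0 <= shift <= width:
        raise ValueError("shift must be between 0 and the image width")
    return [[fill] * shift + list(row[: width - shift]) for row in image]
```

Each call returns a new image, so one original can yield several additional training examples.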

- 17. Choosing a network architecture • Layer type – 1-dimensional data (signal, vector): FC (fully connected), AE – N-dimensional data (image, tensor): CNN – Time-series data (speech, video, text): RNN • Number of parameters – Number of layers – Number of neurons • Start with a minimal number of hidden layers and nodes, then increase them until performance is good (trial and error).

- 18. Training algorithm and optimization • Training: Input → Feed forward → Error calculation → Back propagation → Updating parameters • Error calculation: – Cost/loss function: • Mean squared error: $L(y, \hat{y}) = \frac{1}{N} \sum_{i} (y_i - \hat{y}_i)^2$ • Cross-entropy • Softmax
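
The loss functions named on this slide can be sketched as follows. The function names are illustrative, and softmax is treated here as the step that turns raw scores into probabilities before cross-entropy is applied, which is an assumption about what the slide intends.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    n = len(y_true)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs, eps=1e-12):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(
        t * math.log(max(p, eps)) for t, p in zip(target_probs, predicted_probs)
    )
```

A typical classification loss would then be `cross_entropy(one_hot_target, softmax(scores))`.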

- 19. Training algorithm and optimization • Optimization: – Reduce the error step by step by modifying and updating the weights – Difference value for the cost function – Goal: reach the global minimum (Figure: loss surface)

- 20. Training algorithm and optimization – Gradient descent variants: • Stochastic gradient descent • Batch gradient descent • Mini-batch gradient descent – Gradient descent optimization algorithms: • Momentum • Adagrad • Adadelta • Adam • RMSprop • …

- 21. Stochastic Gradient Descent • Performs a parameter update for each training example $x^{(i)}$ and label $y^{(i)}$ • Avoids the redundant gradient computations that batch gradient descent performs on large datasets • Performs frequent updates with a high variance (fluctuation)

- 22. Batch Gradient Descent • Calculates the gradients for the whole dataset to perform just one update: $\theta_{new} = \theta_{old} - \eta \nabla_\theta J(\theta)$ • Can be very slow • The learning rate (η) determines how big an update we perform

- 23. Mini-batch Gradient Descent • Performs an update for every mini-batch of n training examples: $\theta_{new} = \theta_{old} - \eta \nabla_\theta J(\theta; x^{(i:i+n)}, y^{(i:i+n)})$ – Reduces the variance of the parameter updates – Can make use of highly optimized matrix operations – Common mini-batch sizes range between 50 and 256
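
The three gradient descent variants differ only in how many examples feed each update. The sketch below fits a line y ≈ w·x + b with a mean squared error loss; the function names, the toy model, and the default learning rate and epoch count are assumptions chosen for small examples, not values from the slides.

```python
import random


def gradient(theta, batch):
    """Gradient of the mean squared error of y = w*x + b over one batch."""
    w, b = theta
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def minibatch_gradient_descent(data, batch_size, learning_rate=0.5,
                               epochs=1000, seed=0):
    """Update (w, b) once per mini-batch; reshuffle the data every epoch."""
    rng = random.Random(seed)
    data = list(data)
    theta = (0.0, 0.0)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w, grad_b = gradient(theta, batch)
            # theta_new = theta_old - eta * gradient
            theta = (theta[0] - learning_rate * grad_w,
                     theta[1] - learning_rate * grad_b)
    return theta
```

Setting `batch_size=1` gives stochastic gradient descent, and `batch_size=len(data)` gives batch gradient descent with one update per epoch.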

- 24. Improving Training Algorithms • Batch normalization • Regularization • Dropout • Transfer learning

- 25. Batch Normalization • Is a normalization method/layer for neural networks • Helps prevent overfitting • Reduces the network's dependence on weight initialization • Improves the gradient flow through the network • Improves the speed (η), performance, and stability of neural networks • Allows higher learning rates

- 26. Batch Normalization • How does batch normalization help prevent overfitting? – It reduces overfitting through a slight regularization effect – Similar to dropout, it adds some noise to each hidden layer’s activations.
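
The slides do not spell out the computation, so the following is a sketch of the standard training-time batch normalization step for a single feature: normalize with the batch mean and variance, then apply a learnable scale and shift. The names `gamma`, `beta`, and `eps` follow common convention and are assumptions here.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift it."""
    n = len(batch)
    mu = sum(batch) / n
    var = sum((x - mu) ** 2 for x in batch) / n
    # eps keeps the division stable when the batch variance is close to zero.
    return [gamma * (x - mu) / math.sqrt(var + eps) + beta for x in batch]
```

Because the mean and variance come from the current mini-batch, each example's output depends slightly on which other examples it was batched with, which is the source of the noise mentioned above.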

- 27. Regularization • The process of introducing additional information to the loss function, in the form of a regularization term: $\min_{w} \sum_{i=1}^{n} V(h(x_i), y_i) + \lambda R(w)$ • Adds a penalty for exploring certain regions of the function space • V is the loss function • R(w) is the regularization term • λ is the importance factor of the regularization

- 28. Regularization • How does regularization help prevent overfitting? • L1 & L2 regularization: – L1: Lasso regression adds the “absolute value of magnitude” of each coefficient as a penalty term to the loss function. – L2: Ridge regression adds the “squared magnitude” of each coefficient as a penalty term to the loss function.
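
The L1 and L2 penalty terms can be written in a few lines. The helper names below are hypothetical, and `data_loss` stands for whatever unregularized loss value the model already produced.

```python
def l1_penalty(weights):
    """Lasso penalty: sum of absolute values of the weights."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Ridge penalty: sum of squared weights."""
    return sum(w * w for w in weights)


def regularized_loss(data_loss, weights, lam, penalty):
    """Total loss = data loss + lambda * R(w)."""
    return data_loss + lam * penalty(weights)
```

For instance, `regularized_loss(1.0, [1, -2, 3], 0.5, l2_penalty)` adds half of the squared weight magnitude to the data loss.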

- 29. Regularization • Objective: $\min_{w} \sum_{i=1}^{n} V(h(x_i), y_i) + \lambda R(w)$ • Model: $f = w^T x + b$ • Increasing λ ⇒ decreasing R(w) ⇒ decreasing w (Figure: under-fitting, appropriate fitting, and over-fitting)
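
The effect of λ on the weights can be seen in the simplest possible case. Assuming a one-weight model y ≈ w·x with squared-error loss and an L2 penalty, minimizing Σ(w·xᵢ − yᵢ)² + λw² has the closed-form solution w = Σxᵢyᵢ / (Σxᵢ² + λ). The function name below is hypothetical.

```python
def ridge_weight_1d(xs, ys, lam):
    """Closed-form minimizer of sum((w*x - y)^2) + lam * w^2 for a single weight."""
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs) + lam
    return numerator / denominator
```

With data generated by y = 2x, λ = 0 recovers w = 2, and larger values of λ pull w toward zero, which is the under-fitting end of the trade-off.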

- 30. Dropout • Randomly chosen neurons are ignored during training. • These neurons are not considered during a particular forward or backward pass. • Dropout probability = 0.5 usually works well • Not used on the output layer (Figure: a standard neural network and the same network after applying dropout)
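
A minimal sketch of dropout applied to one layer's activations is shown below. It uses the common "inverted dropout" convention of rescaling the surviving activations by 1 / (1 − p), which the slides do not mention and is an assumption here; the function name and the `rng` parameter are also illustrative.

```python
import random


def dropout(activations, drop_prob=0.5, training=True, rng=None):
    """Zero each activation with probability drop_prob during training."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)  # dropout is disabled at inference time
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob
    # Rescale kept activations so the expected sum stays the same.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]
```

A different random subset of neurons is dropped on every call, so no single forward or backward pass sees the full network.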

- 31. Dropout • How does dropout help prevent overfitting? – Dropout is a fast regularization method – It reduces a layer's "over-reliance" on a few of its inputs – The network becomes less sensitive to the specific weights of neurons – Better generalization

- 32. Transfer Learning • A model trained on one task is repurposed for a second, related task. • We first train a base network on a base (big) dataset and task • We then repurpose the learned features, or transfer them, to a second target network to be trained on a target dataset and task

- 33. Transfer Learning (Figure: a network of Conv, Pool, FC, and Softmax layers is trained on big data (ImageNet); its weights are transferred to an identical network for our (low) data, where some layers are frozen and the remaining layers are trained)

- 34. Hyper Parameter Optimization • Hyper parameter: – A parameter whose value is set before the learning process begins – Initial weights – Number of layers – Number of neurons – Learning rate – Convolution kernel width, …

- 35. Hyper Parameter Optimization • Manual tuning – Monitor and visualize the loss curve/accuracy • Automatic optimization – Random search – Grid search – Bayesian hyper parameter optimization
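
Grid search and random search can both be sketched as loops over candidate settings that keep the best score. Everything named below is hypothetical: `toy_validation_loss` stands in for training a model and measuring its validation loss, and the grid and samplers are arbitrary examples.

```python
import itertools
import random


def grid_search(objective, grid):
    """Evaluate every combination in the grid; return (best_score, best_params)."""
    names = list(grid)
    best_score, best_params = float("inf"), None
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = objective(**params)
        if score < best_score:
            best_score, best_params = score, params
    return best_score, best_params


def random_search(objective, samplers, n_trials, seed=0):
    """Evaluate n_trials randomly sampled settings; return (best_score, best_params)."""
    rng = random.Random(seed)
    best_score, best_params = float("inf"), None
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in samplers.items()}
        score = objective(**params)
        if score < best_score:
            best_score, best_params = score, params
    return best_score, best_params


def toy_validation_loss(learning_rate, num_layers):
    """Stand-in objective with its minimum at learning_rate=0.1, num_layers=3."""
    return (learning_rate - 0.1) ** 2 + (num_layers - 3) ** 2


example_grid = {"learning_rate": [0.01, 0.1, 1.0], "num_layers": [1, 2, 3, 4]}
example_samplers = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
    "num_layers": lambda rng: rng.randint(1, 5),
}
```

Grid search cost grows with the product of the list lengths, whereas random search uses a fixed budget of `n_trials` evaluations regardless of how many hyper parameters there are.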

- 36. Hyper Parameter Optimization • Bayesian hyper parameter optimization: – Build a probability model of the objective function and use it to select the most promising hyper parameters to evaluate in the true objective function

- 37. Cross Validation • K-fold cross validation: – The data is split into k folds – A model is trained using (k-1) of the folds as training data – The resulting model is validated on the remaining fold
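
The splitting step of k-fold cross validation can be sketched as a generator of index lists. The function name is hypothetical, and the sketch assumes the samples are already shuffled, since it assigns consecutive indices to each fold.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    indices = list(range(n_samples))
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size
```

Each sample lands in the validation set exactly once, so averaging the k validation scores uses all of the data for evaluation.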

- 38. Weight initialization • Zero initialization: – Fully connected, no asymmetry – In a layer, every neuron has the same output – In a layer, all the weights update in the same way (Figure: a network between the input signal and output signal with all weights set to 0)

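- 39. Weight initialization • Small random numbers: – Symmetry breaks – Causes a “vanishing gradient” flowing backward through the network • A concern for deep networks • Chain rule for a first-layer weight: $\frac{\partial E}{\partial w^{(1)}} = \frac{\partial E}{\partial e} \frac{\partial e}{\partial o^{(2)}} \frac{\partial o^{(2)}}{\partial net^{(2)}} \frac{\partial net^{(2)}}{\partial o^{(1)}} \frac{\partial o^{(1)}}{\partial net^{(1)}} \frac{\partial net^{(1)}}{\partial w^{(1)}}$, which is proportional to $e \, f'^{(2)} w^{(2)} f'^{(1)} x$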

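- 40. Weight initialization • Large random numbers: – Symmetry breaks – Causes saturation – Causes an “exploding gradient” flowing backward through the network • Model: $f = w^T x + b$ • Chain rule for a first-layer weight: $\frac{\partial E}{\partial w^{(1)}} = \frac{\partial E}{\partial e} \frac{\partial e}{\partial o^{(2)}} \frac{\partial o^{(2)}}{\partial net^{(2)}} \frac{\partial net^{(2)}}{\partial o^{(1)}} \frac{\partial o^{(1)}}{\partial net^{(1)}} \frac{\partial net^{(1)}}{\partial w^{(1)}}$, which is proportional to $e \, f'^{(2)} w^{(2)} f'^{(1)} x$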

- 41. Weight initialization • Train with multiple sets of small random initial weights • Measure the errors • Select the initial weights that produce the smallest error (Figure: candidate weight sets $w_1, w_2, w_3$, …, $w'_1, w'_2, w'_3$ and their resulting errors $E_1$, …, $E_n$)

- 42. Feature Selection • Automatic • Manual – Forward selection – Backward elimination

- 43. Forward Selection • Begins with an empty model and adds in variables one by one • At each step, adds the one variable that gives the single best improvement to the model • Example: features $x_1$, $x_2$, $x_3$ each give an error $e_1$, $e_2$, $e_3$; the feature with the minimum error, $\min(e_1, e_2, e_3)$, is selected

- 44. Forward Selection • Suppose that $x_1$ is selected • $x_1$ and $x_2$ give error $e_{12}$ • $x_1$ and $x_3$ give error $e_{13}$ • The features with the minimum error, $\min(e_{12}, e_{13})$, are selected
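
The forward selection steps on the last two slides can be sketched as a greedy loop. The function names are hypothetical, `error_fn` is assumed to train and evaluate a model on a given feature subset, and stopping when no candidate lowers the error is an assumed stopping rule that the slides do not state. `toy_error` is a made-up error table used only to make the example runnable.

```python
def forward_selection(features, error_fn):
    """Greedily add the feature that lowers the error most; stop when none helps."""
    selected = []
    remaining = list(features)
    best_error = float("inf")
    while remaining:
        # Error of the current selection extended by each remaining feature.
        candidate_errors = {f: error_fn(selected + [f]) for f in remaining}
        best_feature = min(candidate_errors, key=candidate_errors.get)
        if candidate_errors[best_feature] >= best_error:
            break  # assumed stopping rule: no candidate improves the model
        best_error = candidate_errors[best_feature]
        selected.append(best_feature)
        remaining.remove(best_feature)
    return selected, best_error


def toy_error(subset):
    """Made-up error: x1 and x2 are useful, x3 is not; each feature has a small cost."""
    usefulness = {"x1": 0.5, "x2": 0.3}
    return 1.0 - sum(usefulness.get(f, 0.0) for f in subset) + 0.01 * len(subset)
```

With this toy error, `forward_selection(["x1", "x2", "x3"], toy_error)` first picks `x1`, then `x2`, and stops before adding `x3`.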

- 45. Backward Elimination • Removes the least significant feature at each iteration • Steps: – Train the model with all the independent variables – Eliminate independent variables that do not improve performance – Repeat training until removing features no longer yields an improvement
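
Backward elimination can be sketched in the same greedy style. As before, the names are hypothetical and `error_fn` is assumed to train and evaluate a model on a feature subset; a feature is treated as "least significant" when removing it gives the lowest error, and the loop stops once every removal makes the error worse.

```python
def backward_elimination(features, error_fn):
    """Start from all features and drop the least significant one at a time."""
    selected = list(features)
    best_error = error_fn(selected)
    while len(selected) > 1:
        # Error of the model retrained without each feature in turn.
        candidate_errors = {
            f: error_fn([g for g in selected if g != f]) for f in selected
        }
        feature_to_drop = min(candidate_errors, key=candidate_errors.get)
        if candidate_errors[feature_to_drop] > best_error:
            break  # every removal hurts, so keep the current set
        best_error = candidate_errors[feature_to_drop]
        selected.remove(feature_to_drop)
    return selected, best_error


def toy_error(subset):
    """Made-up error: x1 and x2 are useful, x3 is not; each feature has a small cost."""
    usefulness = {"x1": 0.5, "x2": 0.3}
    return 1.0 - sum(usefulness.get(f, 0.0) for f in subset) + 0.01 * len(subset)
```

On the toy error, starting from `["x1", "x2", "x3"]`, only `x3` is removed, because dropping either of the other two features increases the error.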