Introduction to deep learning using Python

A brief introduction to machine learning and a short example of how to use Python and TensorFlow to build a neural network.

![MNIST training data

>>> import matplotlib.pyplot as plt

>>> plt.imshow(train_images[0])

>>> plt.gray()

>>> plt.show()

>>> train_labels

array([5, 0, 4, ..., 5, 6, 8], dtype=uint8)](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-19-320.jpg)

![MNIST testing data

>>> test_images.shape

(10000, 28, 28)

>>> test_labels

array([7, 2, 1, ..., 4, 5, 6], dtype=uint8)

Testing input: 10,000 images (28 pixels * 28 pixels)

Testing labels: 10,000

Training: 60,000 images, 60,000 labels

Testing: 10,000 images, 10,000 labels](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-20-320.jpg)

![Data Reshaping (Input X)

# Train images

>>> train_images = train_images.reshape((60000, 28 * 28))

>>> train_images = train_images.astype('float32') / 255

# Test images

>>> test_images = test_images.reshape((10000, 28 * 28))

>>> test_images = test_images.astype('float32') / 255

1. 28 * 28 image (integer values 0 to 255)

2. 28 * 28 = 784-element vector: [row 0, row 1, …, row 27]

3. 784-element vectors with float values in the range 0 to 1](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-21-320.jpg)
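The reshaping step can be checked on a small synthetic batch. This is a minimal NumPy sketch, assuming a `uint8` array shaped like MNIST's `(n, 28, 28)`; the helper name `flatten_and_scale` is hypothetical and not part of Keras. It shows that `reshape` on a row-major array lays the rows out one after another, which is what `[row 0, row 1, …, row 27]` describes, and that dividing by 255 maps pixels into the range 0 to 1.

```python
import numpy as np


def flatten_and_scale(images: np.ndarray) -> np.ndarray:
    """Flatten (n, 28, 28) uint8 images to (n, 784) float32 values in [0, 1].

    Hypothetical helper mirroring the slide's two steps:
    reshape((n, 28 * 28)) followed by astype('float32') / 255.
    """
    n, height, width = images.shape
    flat = images.reshape((n, height * width))  # row-major: row 0, row 1, ..., row 27
    return flat.astype("float32") / 255


# Synthetic stand-in for MNIST: 3 images of 28x28 pixels with values 0..255.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

flat = flatten_and_scale(fake_images)
print(flat.shape, flat.dtype)  # (3, 784) float32
# Entries 28..55 of the first vector are row 1 of the first image, scaled by 1/255.
print(np.allclose(flat[0, 28:56], fake_images[0, 1] / 255))  # True
```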

![Data Reshaping (Labels Y)

>>> from keras.utils import to_categorical

# Train labels

>>> train_labels = to_categorical(train_labels)

# Test labels

>>> test_labels = to_categorical(test_labels)

1. train_labels = [5 0 4 ... 5 6 8]

2. train_labels =

[[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

…

[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]]](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-22-320.jpg)
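`to_categorical` turns each integer label into a row with a single 1 at the label's index. As a rough NumPy equivalent (an illustration, not Keras's actual implementation), indexing the rows of an identity matrix reproduces the matrix above; `num_classes=10` is assumed because MNIST has ten digit classes, and the helper name `one_hot` is hypothetical.

```python
import numpy as np


def one_hot(labels, num_classes: int = 10) -> np.ndarray:
    """Return a (len(labels), num_classes) float32 matrix with one 1 per row.

    Row i of the identity matrix is the one-hot vector for class i, so
    indexing the identity with the label array picks one row per label.
    """
    labels = np.asarray(labels, dtype=np.int64)
    return np.eye(num_classes, dtype="float32")[labels]


# First and last three training labels from the slide: 5 0 4 ... 5 6 8
encoded = one_hot([5, 0, 4, 5, 6, 8])
print(encoded)
# Going back: the argmax of each row recovers the original digit.
print(encoded.argmax(axis=1))  # [5 0 4 5 6 8]
```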

![Network compilation

>>> network.compile(optimizer='rmsprop',

... loss='categorical_crossentropy',

... metrics=['accuracy'])](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-26-320.jpg)
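With `loss='categorical_crossentropy'`, each sample's loss is the negative log of the probability the network assigns to the true class, averaged over the batch; with one-hot labels, `metrics=['accuracy']` amounts, as we understand it, to comparing the argmax of prediction and label. The NumPy sketch below computes both, assuming `y_pred` already holds per-class probabilities such as a softmax output layer would produce (the network definition is not among these slides). The clipping constant `eps` is an assumption to avoid `log(0)`; Keras clips predictions in a similar way, but its exact constant may differ.

```python
import numpy as np


def categorical_crossentropy(y_true: np.ndarray, y_pred: np.ndarray, eps: float = 1e-7) -> float:
    """Mean over samples of -sum_k y_true[k] * log(y_pred[k])."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


def accuracy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Fraction of samples whose highest-probability class matches the label."""
    return float(np.mean(y_true.argmax(axis=1) == y_pred.argmax(axis=1)))


# Two samples, three classes for brevity (MNIST would use ten).
y_true = np.array([[0.0, 1.0, 0.0],
                   [1.0, 0.0, 0.0]])
y_pred = np.array([[0.1, 0.8, 0.1],    # confident and correct
                   [0.3, 0.6, 0.1]])   # wrong: predicts class 1
print(categorical_crossentropy(y_true, y_pred))  # (-log 0.8 - log 0.3) / 2, about 0.714
print(accuracy(y_true, y_pred))                  # 0.5
```

Only the probability assigned to the true class enters the loss, so the wrong second sample (0.3 on class 0) contributes far more than the correct first one.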

![Let’s train!

>>> network.fit(train_images, train_labels, epochs=5, batch_size=128)

Epoch 1/5

60000/60000 [==============================] - 2s 33us/step - loss: 0.2179 - acc: 0.9329

Epoch 2/5

60000/60000 [==============================] - 2s 31us/step - loss: 0.0805 - acc: 0.9750

Epoch 3/5

60000/60000 [==============================] - 2s 32us/step - loss: 0.0531 - acc: 0.9841

Epoch 4/5

60000/60000 [==============================] - 2s 31us/step - loss: 0.0385 - acc: 0.9883

Epoch 5/5

60000/60000 [==============================] - 2s 31us/step - loss: 0.0290 - acc: 0.9908

Train Accuracy: 99.08%](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-28-320.jpg)
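With `batch_size=128`, each epoch splits the 60,000 training images into ceil(60000 / 128) = 469 mini-batches (468 full batches of 128 plus a final batch of 96), so five epochs perform 2,345 weight updates. The sketch below reproduces that arithmetic and a shuffled mini-batch iterator; `iterate_minibatches` is a hypothetical helper, not a Keras function, and it assumes a fresh random order every epoch, matching `fit`'s default `shuffle=True`.

```python
import math

import numpy as np


def iterate_minibatches(num_samples: int, batch_size: int, rng: np.random.Generator):
    """Yield index arrays covering every sample once, in a freshly shuffled order.

    The last batch is smaller when num_samples is not a multiple of batch_size.
    """
    order = rng.permutation(num_samples)
    for start in range(0, num_samples, batch_size):
        yield order[start:start + batch_size]


num_samples, batch_size, epochs = 60000, 128, 5
steps_per_epoch = math.ceil(num_samples / batch_size)
print(steps_per_epoch, steps_per_epoch * epochs)  # 469 2345

rng = np.random.default_rng(42)
sizes = [len(batch) for batch in iterate_minibatches(num_samples, batch_size, rng)]
print(len(sizes), sizes[0], sizes[-1])  # 469 128 96
```

In a hand-written training loop, each yielded index array would select `train_images[batch]` and `train_labels[batch]` for one forward pass, one backward pass, and one RMSprop update; Keras performs comparable batching internally when `fit` is called.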

![Test Accuracy

>>> test_loss, test_acc = network.evaluate(test_images, test_labels)

10000/10000 [==============================] - 0s 47us/step

>>> print('Test accuracy: %s' % test_acc)

Test accuracy: 0.9799

Test Accuracy: 97.99%](https://image.slidesharecdn.com/introductiontodeeplearningusingpython-180516210151/85/Introduction-to-deep-learning-using-python-29-320.jpg)
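A test accuracy of 97.99% on 10,000 images means roughly 10000 * (1 - 0.9799) = 201 test images are misclassified. To find which ones, one could compare the argmax of predicted probabilities (for example from `network.predict(test_images)`, assuming the network outputs one probability per class) with the argmax of the one-hot labels. The helper `misclassified` below is hypothetical and is exercised on toy data rather than real MNIST predictions.

```python
import numpy as np


def misclassified(probabilities: np.ndarray, one_hot_labels: np.ndarray) -> np.ndarray:
    """Indices of samples whose most probable class differs from the true class."""
    predicted = probabilities.argmax(axis=1)
    actual = one_hot_labels.argmax(axis=1)
    return np.flatnonzero(predicted != actual)


# At 97.99% accuracy on 10,000 test images, about 201 predictions are wrong.
print(round(10000 * (1 - 0.9799)))  # 201

# Toy check with 4 samples and 3 classes: samples 1 and 3 are wrong.
labels = np.eye(3)[[0, 1, 2, 0]]
probs = np.array([[0.7, 0.2, 0.1],
                  [0.6, 0.3, 0.1],
                  [0.1, 0.1, 0.8],
                  [0.2, 0.5, 0.3]])
wrong = misclassified(probs, labels)
print(wrong, 1 - len(wrong) / len(labels))  # [1 3] 0.5
```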


More Related Content

What's hot (13)

Object classification using deep neural network

Object classification using deep neural networknishakushwah4 In this presentation, we have included some points to understand how the computer understands image and classify the object present in the image.

HDDM: Hierarchical Bayesian estimation of the Drift Diffusion Model

HDDM: Hierarchical Bayesian estimation of the Drift Diffusion Modeltwiecki HDDM is a hierarchical Bayesian model that fits drift-diffusion models to behavioral data from multiple subjects. It estimates both group-level and subject-specific parameters, leveraging information across subjects to obtain more reliable estimates. This allows modeling experimental effects like difficulty while accounting for individual differences. HDDM implements efficient Markov chain Monte Carlo sampling in Python to estimate the full posterior distribution. It provides tools to assess model fit and visualize parameter estimates.

Global Load Instruction Aggregation Based on Code Motion

Global Load Instruction Aggregation Based on Code MotionYasunobu Sumikawa The 2012 International Symposium on Parallel Architecture, Algorithm and Programming.

If you download this file, you can see explanation of each slides.

Machine learning in R

Machine learning in Rapolol92 This document provides an overview and examples of various machine learning models in R, including linear regression, decision trees, k-means clustering, k-nearest neighbors (KNN), support vector machines (SVM), naive Bayes, and neural networks. Code examples are given to demonstrate how to build models for predicting iris flower species using each algorithm and evaluate predictions against test data. Models are trained on standard iris, women's height and weight, and randomized iris datasets. Visualizations are also created to analyze model outputs.

The Ring programming language version 1.5.2 book - Part 59 of 181

The Ring programming language version 1.5.2 book - Part 59 of 181Mahmoud Samir Fayed This document provides examples of using various Qt GUI classes in Ring, including QToolBar, QStatusBar, QDockWidget, QTabWidget, QTableWidget, QProgressBar, QSpinBox, QSlider, and QDateEdit. For each class, it shows a short code sample to create and configure an instance of that class within a basic window application. The examples are intended to demonstrate how to load the GUI library and use these common Qt widgets and containers.

Using parallel programming to improve performance of image processing

Using parallel programming to improve performance of image processingChan Le Implement Anisotropic Diffusion on CUDA platform

1 thread handle 1 pixel

Dividing the image to multiple sub-regions, process them parallely to exploit multiple cores

Back propagation

Back propagationSan Kim explain backpropagation with a simple example.

normally, we use cross-entropy as loss function.

and we set the activation function of the output layer as the logistic sigmoid. because we want to maximize (log) likelihood. (or minimize negative (log) likelihood), and we suppose that the function is a binomial distribution which is the maximum entropy function in two-class classification.

but in this example, we set the loss function (objective function or cost function) as sum of square, which is normally used in logistic regression, for simplifying the problem.

Viktor Tsykunov: Azure Machine Learning Service

Viktor Tsykunov: Azure Machine Learning ServiceLviv Startup Club Viktor Tsykunov: Azure Machine Learning Service

AI & BigData Online Day 2021

Website - http://aiconf.com.ua

Youtube - https://www.youtube.com/startuplviv

FB - https://www.facebook.com/aiconf

3D Brain Image Segmentation Model using Deep Learning and Hidden Markov Rando...

3D Brain Image Segmentation Model using Deep Learning and Hidden Markov Rando...EL-Hachemi Guerrout 17th ACS/IEEE International Conference on Computer Systems and Applications AICCSA 2020

November 2nd - 5th, 2020 (ONLINE)

Held in Antalya, Turkey.

Graph Analyses with Python and NetworkX

Graph Analyses with Python and NetworkXBenjamin Bengfort Social networks are not new, even though websites like Facebook and Twitter might make you want to believe they are; and trust me- I’m not talking about Myspace! Social networks are extremely interesting models for human behavior, whose study dates back to the early twentieth century. However, because of those websites, data scientists have access to much more data than the anthropologists who studied the networks of tribes!

Because networks take a relationship-centered view of the world, the data structures that we will analyze model real world behaviors and community. Through a suite of algorithms derived from mathematical Graph theory we are able to compute and predict behavior of individuals and communities through these types of analyses. Clearly this has a number of practical applications from recommendation to law enforcement to election prediction, and more.

The Ring programming language version 1.8 book - Part 67 of 202

The Ring programming language version 1.8 book - Part 67 of 202Mahmoud Samir Fayed This chapter discusses using Qt framework classes in Ring applications to create desktop and mobile applications. It provides examples of using various GUI classes like QTextEdit, QListWidget, QTreeView, QTreeWidget, and QComboBox. The examples demonstrate how to create basic GUI elements, add items and data, handle events, and display the application at runtime.

Machine learning and_nlp

Machine learning and_nlpankit_ppt This document discusses machine learning and natural language processing (NLP) techniques for text classification. It provides an overview of supervised vs. unsupervised learning and classification vs. regression problems. It then walks through the steps to perform binary text classification using logistic regression and Naive Bayes models on an SMS spam collection dataset. The steps include preparing and splitting the data, numerically encoding text with Count Vectorization, fitting models on the training data, and evaluating model performance on the test set using metrics like accuracy, precision, recall and F1 score. Naive Bayes classification is also introduced as an alternative simpler technique to logistic regression for text classification tasks.

Image Processing using Matlab ( using a built in Matlab function(Histogram eq...

Image Processing using Matlab ( using a built in Matlab function(Histogram eq...Majd Khaleel The document summarizes three digital image processing techniques demonstrated in MATLAB:

1. Histogram equalization was used to increase contrast in an image by redistributing intensity values. This made low contrast images appear lighter.

2. Gamma correction was used to make images lighter or darker by adjusting the gamma value in the imadjust function. A gamma less than 1 lightened images, while a gamma greater than 1 darkened images.

3. Spatial filtering was performed using a filter mask to lighten images by increasing or decreasing values in the filter. This inverse of gamma correction, with higher filter values lightening images more.

Similar to Introduction to deep learning using python (20)

Learning Predictive Modeling with TSA and Kaggle

Learning Predictive Modeling with TSA and KaggleYvonne K. Matos This document summarizes Yvonne Matos' presentation on learning predictive modeling by participating in Kaggle challenges using TSA passenger screening data.

The key points are:

1) Matos started with a small subset of 120 images from one body zone to build initial neural network models and address challenges of large data sizes and compute requirements.

2) Through iterative tuning, her best model achieved good performance identifying non-threat images but had a high false negative rate for threats.

3) Her next steps were to reduce the false negative rate, run models on Google Cloud to handle full data sizes, and prepare the best model for real-world use.

Session 4 start coding Tensorflow 2.0

Session 4 start coding Tensorflow 2.0Rajagopal A 1. The document outlines the 7 steps to develop a deep learning model: define the problem, prepare the data, design the network architecture, define the loss, train the network, validate and improve the model, and predict outcomes.

2. It provides an example of developing a model to recognize handwritten digits using the MNIST dataset. The model is a simple multi-layer perceptron with 3 dense layers trained on the MNIST images and labels to classify handwritten digits.

3. The model achieves a test accuracy of 91.57% showing it has learned to accurately recognize handwritten digits based on the training process outlined.

Dimension reduction techniques[Feature Selection]


By AAKANKSHA JAIN. Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data.

Edge AI: Bringing Intelligence to Embedded Devices

By Speck&Tech. ABSTRACT: Artificial intelligence is no longer confined to the cloud. Thanks to Edge AI, we can now run AI models directly on embedded devices with limited power and resources. This session will explore the full pipeline of developing a Tiny Machine Learning (TinyML) model, from data collection to deployment, addressing key challenges such as dataset preparation, model training, quantization, and optimization for embedded systems. We’ll explore real-world use cases where AI-powered embedded systems enable smart decision-making in applications like predictive maintenance, anomaly detection, and voice recognition. The talk will include a live hands-on demonstration on how to train and deploy a model using popular tools like Google Colab and TensorFlow, and then run real-time inference on an Arduino board.

BIO: Leonardo Cavagnis is an experienced embedded software engineer, interested in IoT and AI applications. At Arduino, he works as a firmware engineer, developing libraries and core functionalities for boards while also focusing on communication and engaging with the community.

Yufeng Guo | Coding the 7 steps of machine learning | Codemotion Madrid 2018

By Codemotion. Machine learning has gained a lot of attention as the next big thing. But what is it, really, and how can we use it? In this talk, you'll learn the meaning behind buzzwords like hyperparameter tuning, and see the code behind each step of machine learning. This talk will help demystify the "magic" behind machine learning. You'll come away with a foundation that you can build on, and an understanding of the tools to build with!

DN 2017 | Multi-Paradigm Data Science - On the many dimensions of Knowledge D...

By Dataconomy Media. Gaining insight from data is not as straightforward as we often wish it would be – as diverse as the questions we’re asking are the quality and the quantity of the data we may have at hand. Any attempt to turn data into knowledge thus strongly depends on it dealing with big or not-so-big data, high- or low-dimensional data, exact or fuzzy data, exact or fuzzy questions, and the goal being accurate prediction or understanding. This presentation emphasizes the need for a multi-paradigm data science to tackle all the challenges we are facing today and may be facing in the future. Luckily, solutions are starting to emerge...

IRJET- Unabridged Review of Supervised Machine Learning Regression and Classi...

By IRJET Journal. This document provides an unabridged review of supervised machine learning regression and classification techniques. It begins with an introduction to machine learning and artificial intelligence. It then describes regression and classification techniques for supervised learning problems, including linear regression, logistic regression, k-nearest neighbors, naive Bayes, decision trees, support vector machines, and random forests. Practical examples using Python code apply these techniques to housing price prediction and iris species classification problems. The document concludes that its primary goal was to provide an extensive review of supervised machine learning methods.

Power ai tensorflowworkloadtutorial-20171117

By Ganesan Narayanasamy. This document provides an overview of running an image classification workload using IBM PowerAI and the MNIST dataset. It discusses deep learning concepts like neural networks and training flows. It then demonstrates how to set up TensorFlow on an IBM PowerAI trial server, load the MNIST dataset, build and train a basic neural network model for image classification, and evaluate the trained model's accuracy on test data.

GANS Project for Image idetification.pdf

By VivekanandaGN1. The document describes implementing a generative adversarial network (GAN) to generate realistic images. It involves defining generator and discriminator neural networks, then training the GAN: the generator tries to produce images that fool the discriminator, while the discriminator tries to accurately classify real vs. generated images. The GAN is trained on a small dataset of images to generate new, similar images.

Need an detailed analysis of what this code-model is doing- Thanks #St.pdf

By actexerode. Need a detailed analysis of what this code/model is doing. Thanks.

```python
# Step 1: Import the required Python libraries
import numpy as np
import matplotlib.pyplot as plt
import keras
from keras.layers import Input, Dense, Reshape, Flatten, Dropout
from keras.layers import BatchNormalization, Activation, ZeroPadding2D
from keras.layers import LeakyReLU
from keras.layers import UpSampling2D, Conv2D
from keras.models import Sequential, Model
from keras.optimizers import Adam, SGD
from keras.datasets import cifar10

# Step 2: Load the data
# Loading the CIFAR10 data
(X, y), (_, _) = keras.datasets.cifar10.load_data()

# Selecting a single class of images
# The number was randomly chosen; any class label
# between 0 and 9 can be chosen
X = X[y.flatten() == 8]

# Step 3: Define parameters to be used in later processes
# Defining the input shape
image_shape = (32, 32, 3)
latent_dimensions = 100

# Step 4: Define a utility function to build the generator
def build_generator():
    model = Sequential()

    # Building the input layer
    model.add(Dense(128 * 8 * 8, activation="relu",
                    input_dim=latent_dimensions))
    model.add(Reshape((8, 8, 128)))

    model.add(UpSampling2D())
    model.add(Conv2D(128, kernel_size=3, padding="same"))
    model.add(BatchNormalization(momentum=0.78))
    model.add(Activation("relu"))

    model.add(UpSampling2D())
    model.add(Conv2D(64, kernel_size=3, padding="same"))
    model.add(BatchNormalization(momentum=0.78))
    model.add(Activation("relu"))

    model.add(Conv2D(3, kernel_size=3, padding="same"))
    model.add(Activation("tanh"))

    # Generating the output image
    noise = Input(shape=(latent_dimensions,))
    image = model(noise)
    return Model(noise, image)

# Step 5: Define a utility function to build the discriminator
def build_discriminator():
    # Building the convolutional layers
    # to classify whether an image is real or fake
    model = Sequential()

    model.add(Conv2D(32, kernel_size=3, strides=2,
                     input_shape=image_shape, padding="same"))
    model.add(LeakyReLU(alpha=0.2))
    model.add(Dropout(0.25))

    model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))
    model.add(ZeroPadding2D(padding=((0, 1), (0, 1))))
    model.add(BatchNormalization(momentum=0.82))
    model.add(LeakyReLU(alpha=0.25))
    model.add(Dropout(0.25))

    model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))
    model.add(BatchNormalization(momentum=0.82))
    model.add(LeakyReLU(alpha=0.2))
    model.add(Dropout(0.25))

    model.add(Conv2D(256, kernel_size=3, strides=1, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(LeakyReLU(alpha=0.25))
    model.add(Dropout(0.25))

    # Building the output layer
    model.add(Flatten())
    model.add(Dense(1, activation='sigmoid'))

    image = Input(shape=image_shape)
    validity = model(image)
    return Model(image, validity)

# Step 6: Define a utility function to display the generated images
def display_images():
    # Generate a batch of random noise
    noise = np.random.normal(0, 1, (16, latent_dimensions))
    # Generate images from the noise
    generated_images = generator.predict(noise)
    # Rescale the images to 0.
```

Feature Engineering - Getting most out of data for predictive models

By Gabriel Moreira. How should data be preprocessed for use in machine learning algorithms? How can we identify the most predictive attributes of a dataset? What features can we generate to improve the accuracy of a model?

Feature Engineering is the process of extracting and selecting, from raw data, features that can be used effectively in predictive models. As the quality of the features greatly influences the quality of the results, knowing the main techniques and pitfalls will help you to succeed in the use of machine learning in your projects.

In this talk, we will present methods and techniques that allow us to extract the maximum potential of the features of a dataset, increasing the flexibility, simplicity and accuracy of the models. Topics include the analysis of the distribution of features and their correlations, and the transformation of numeric attributes (such as scaling, normalization, log-based transformation, binning), categorical attributes (such as one-hot encoding, feature hashing), temporal (date/time) attributes, and free-text attributes (text vectorization, topic modeling).

Python, Scikit-learn, and Spark SQL examples will be presented, along with how to use domain knowledge and intuition to select and generate features relevant to predictive models.

Feature Engineering - Getting most out of data for predictive models - TDC 2017

By Gabriel Moreira. How should data be preprocessed for use in machine learning algorithms? How can we identify the most predictive attributes of a dataset? What features can we generate to improve the accuracy of a model?

Feature Engineering is the process of extracting and selecting, from raw data, features that can be used effectively in predictive models. As the quality of the features greatly influences the quality of the results, knowing the main techniques and pitfalls will help you to succeed in the use of machine learning in your projects.

In this talk, we will present methods and techniques that allow us to extract the maximum potential of the features of a dataset, increasing the flexibility, simplicity and accuracy of the models. Topics include the analysis of the distribution of features and their correlations, and the transformation of numeric attributes (such as scaling, normalization, log-based transformation, binning), categorical attributes (such as one-hot encoding, feature hashing), temporal (date/time) attributes, and free-text attributes (text vectorization, topic modeling).

Python, Scikit-learn, and Spark SQL examples will be presented, along with how to use domain knowledge and intuition to select and generate features relevant to predictive models.

AIML4 CNN lab256 1hr (111-1).pdf

By ssuserb4d806. The document discusses CNN Lab 256 and various labs involving image classification using ImageNet and MNIST datasets. Lab 2 focuses on image classification using ImageNet, which contains over 14 million images across 20,000 categories. The script classify_image.py is used to classify images using a pre-trained model. Retraining the model on a custom dataset is also discussed. Lab 5 involves classifying handwritten digits from the MNIST dataset using a convolutional neural network model defined in TensorFlow. The model achieves an accuracy of over 99% after training for 15,000 epochs in batches of 100 images.

Image classification using cnn

By Debarko De. CNNs can be used for image classification by using trainable convolutional and pooling layers to extract features from images, followed by dense layers for classification. CNNs were made practical by increased computational power and large datasets. Libraries like Keras make it easy to build and train CNNs. Example projects include sentiment analysis, customer conversion analysis, and inventory management using computer vision and natural language processing with CNNs.

Machine Learning : why we should know and how it works

By Kevin Lee. This document provides an overview of machine learning, including:

- An introduction to machine learning and why it is important.

- The main types of machine learning algorithms: supervised learning, unsupervised learning, and deep neural networks.

- Examples of how machine learning algorithms work, such as logistic regression, support vector machines, and k-means clustering.

- How machine learning is being applied in various industries like healthcare, commerce, and more.

Gradient Descent Code Implementation.pdf

By MubashirHussain792093. https://www.slideshare.net/slideshow/chapter-1-ob-38248150/38248150

Deep learning image classification aplicado al mundo de la moda (Deep learning image classification applied to the world of fashion)

By Javier Abadía. Slides from the talk given at Codemotion 2016.

http://2016.codemotion.es/agenda.html#5732408326356992/86464003

Machine Learning Algorithms

By Hichem Felouat. This document provides an overview of machine learning algorithms and scikit-learn. It begins with an introduction and table of contents. It then covers topics such as loading datasets from files, pandas, scikit-learn datasets, preprocessing data (for example, handling missing values), feature selection, dimensionality reduction, training and test sets, supervised and unsupervised learning models, and saving/loading machine learning models. For each topic, it provides code examples and explanations.

Pytorch and Machine Learning for the Math Impaired

By Tyrel Denison. High-level introduction to machine learning and deep learning concepts, followed by examples in PyTorch.

Baseball Prediction Model on Tensorflow

By Jay Ryu. This document describes a baseball prediction model using deep learning. It outlines scraping game data and transforming it into JSON format. An autoencoder model is designed to take the previous games' statistics of both teams as input and output the predicted score. The model is tested across different hyperparameters, and the best-performing configuration achieved 66% accuracy, limited by the small dataset size. Suggested improvements include obtaining more data, exploring alternative neural network architectures, and identifying meaningful predictive values to weight.


Recently uploaded (20)

04302025_CCC TUG_DataVista: The Design Story

By ccctableauusergroup. CCCCO and WestEd share the story behind how DataVista came together from a design standpoint and in Tableau.

Defense Against LLM Scheming 2025_04_28.pptx

By Greg Makowski. https://www.meetup.com/sf-bay-acm/events/306888467/

A January 2025 paper called “Frontier Models are Capable of In-Context Scheming”, https://arxiv.org/pdf/2412.04984, demonstrated how a wide variety of current frontier LLM models (e.g. ChatGPT, Claude, Gemini and Llama) can, under specific conditions, scheme to deceive people. Before models can scheme, they need: a) goal-directedness, b) situational awareness, including an opportunity to discover motivations for a different goal, and c) reasoning about scheming, to come up with deceptive strategies. The deceptive strategies were discovered in the “thought traces” of the LLMs, such as internal chain-of-thought dialogues not shown to the end users. For example, given a goal of “solving math problems”, an LLM using its granted situational awareness of files in a file system found that it would be replaced once it got over X% correct on the math exam. It reasoned that it did not want to be deleted, and decided internally to “sandbag”, or reduce its performance, to stay under the threshold.

While these circumstances are initially narrow, the “alignment problem” is a general concern that over time, as frontier LLM models become more and more intelligent, being in alignment with human values becomes more and more important. How can we do this over time? Can we develop a defense against Artificial General Intelligence (AGI) or SuperIntelligence?

The presenter discusses a series of defensive steps that can help reduce these scheming or alignment issues. A guardrails system can be set up to monitor, in real time, the reasoning “thought traces” of models that share them. Thought traces may come from systems like Chain-of-Thoughts (CoT), Tree-of-Thoughts (ToT), Algorithm-of-Thoughts (AoT) or ReAct (thought-action-observation cycles). Guardrails rules can be configured to check for “deception”, “evasion” or “subversion” in the thought traces.

However, not all commercial systems share their “thought traces”, which are like a “debug mode” for LLMs. This includes OpenAI’s o1, o3 or DeepSeek’s R1 models. Guardrails systems can provide a “goal consistency analysis” between the goals given to the system and the behavior of the system. Cautious users may consider not using these commercial frontier LLM systems, and instead use open-source Llama or a system with their own reasoning implementation, to provide all thought traces.

Architectural solutions can include sandboxing, to prevent or control models executing operating system commands that alter files, send network requests, and modify their environment. Tight controls to prevent models from copying their model weights would be appropriate as well. Running multiple instances of the same model on the same prompt to detect behavior variations also helps; these redundant instances can be limited to the most crucial decisions, as an additional check. Preventing self-modifying code, ... (see link for full description)

Simple_AI_Explanation_English somplr.pptx

By ssuser2aa19f. AI (artificial intelligence) with Python course, first upload.

Classification_in_Machinee_Learning.pptx

By wencyjorda88. A brief PowerPoint presentation about the different classifications of machine learning.

Process Mining and Data Science in the Financial Industry

By Process mining Evangelist. Lalit Wangikar, a partner at CKM Advisors, is an experienced strategic consultant and analytics expert. He started looking for data-driven ways of conducting process discovery workshops. When he first read about process mining, about 2 years ago, his first feeling was: “I wish I knew of this while doing the last several projects!”

Interviews are subject to all the whims of human recollection: specifically, recency, simplification and self-preservation. Interview-based process discovery therefore leaves out many “outliers” that usually end up being among the biggest opportunity areas. Process mining, in contrast, provides an unbiased, fact-based, and very comprehensive understanding of actual process execution.

Customer Segmentation using K-Means clustering

By Ingrid Nyakerario. This project demonstrates the application of machine learning, specifically K-Means clustering, to segment customers based on behavioral and demographic data. The objective is to identify distinct customer groups to enable targeted marketing strategies and personalized customer engagement.

The presentation walks through:

- Data preprocessing and exploratory data analysis (EDA)
- Feature scaling and dimensionality reduction
- K-Means clustering and silhouette analysis
- Insights and business recommendations from each customer segment

This work showcases practical data science skills applied to a real-world business problem, using Python and visualization tools to generate actionable insights for decision-makers.

computer organization and assembly language.docx

By alisoftwareengineer1. Computer organization and assembly language: it covers types of programming languages, along with a description of variables and arrays. https://www.nfciet.edu.pk/

Deloitte - A Framework for Process Mining Projects

By Process mining Evangelist. Tijn van der Heijden is a business analyst with Deloitte. He learned about process mining during his studies in a BPM course at Eindhoven University of Technology and became fascinated with the fact that it was possible to get a process model and so much performance information out of automatically logged events of an information system.

Tijn successfully introduced process mining as a new standard to achieve continuous improvement for the Rabobank during his Master project. At his work at Deloitte, Tijn has now successfully been using this framework in client projects.


Introduction to deep learning using python

- 1. Introduction to Deep Learning using Python. Lino Coria (@linocoria)

- 2. About Wiivv: Wiivv is a technology company transforming footwear and apparel for every human body. Wiivv Insoles and Sandals are created uniquely for you, based on measurements taken from the award-winning Wiivv app.

- 3. Testing our sandals - Boston Marathon 2018

- 4. What is Deep Learning?

- 5. AI, Machine Learning, Deep Learning (diagram: Deep Learning is a subset of Machine Learning, which is a subset of Artificial Intelligence)

- 6. Artificial Intelligence - Deep Learning

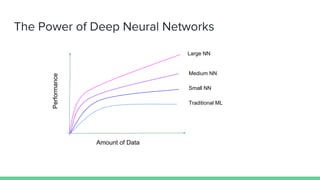

- 8. The Power of Deep Neural Networks (chart: performance vs. amount of data for traditional ML and for small, medium, and large neural networks)

- 9. Why is Deep Learning in Vogue?
  - Hardware
    - GPUs
    - NVIDIA leading the way
  - Tons of data
    - ImageNet dataset: 1.4 million annotated images
  - Better algorithms
  - Democratic
    - If you know Python, you can do deep learning
    - Many tutorials, pre-trained models

- 10. Deep Learning and Python

- 11. Open-Source Resources for Deep Learning: Keras

- 12. A good way to start: Keras

- 13. How to get Data

- 14. Example: MNIST Dataset. A classic machine learning problem: input X (images) and labels Y (the answers, e.g. 5, 0, 4).

- 15. Training Data + Testing Data (diagram: both the training data and the testing data consist of input X (images) and labels Y)

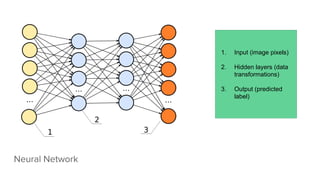

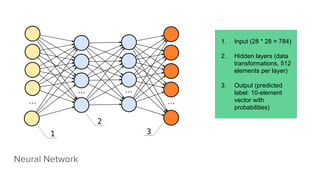

- 16. Neural Network
  1. Input (image pixels)
  2. Hidden layers (data transformations)
  3. Output (predicted label)

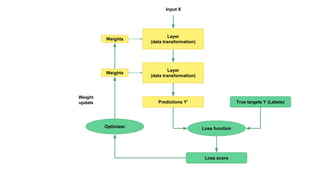

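- 17. Diagram: input X passes through layers (data transformations) parameterized by weights, producing predictions Y'. A loss function compares the predictions Y' with the true targets Y (labels) and produces a loss score. The optimizer uses the loss score to update the weights.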
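The loop in this diagram can be made concrete with a few lines of NumPy. This is a minimal sketch, not how Keras implements training. It assumes a single linear layer, a mean-squared-error loss, plain gradient descent, synthetic data, and a hypothetical learning rate of 0.1. Each iteration performs the diagram's steps: compute predictions Y', score them with the loss, and let the optimizer update the weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumption): targets Y come from a known linear rule
X = rng.normal(size=(200, 3))
true_w = np.array([2.0, -1.0, 0.5])
Y = X @ true_w

w = np.zeros(3)        # weights of a single linear layer
learning_rate = 0.1    # hypothetical value

def loss_fn(w):
    predictions = X @ w                      # layer: data transformation -> Y'
    return np.mean((predictions - Y) ** 2)   # loss score: compare Y' with Y

initial_loss = loss_fn(w)
for step in range(100):
    predictions = X @ w
    # Gradient of the mean squared error with respect to the weights
    grad = 2 * X.T @ (predictions - Y) / len(X)
    # Optimizer: move the weights in the direction that lowers the loss
    w -= learning_rate * grad

final_loss = loss_fn(w)
print(f"loss: {initial_loss:.3f} -> {final_loss:.2e}")
print("learned weights:", np.round(w, 3))
```

The loss should shrink toward zero and the learned weights should approach `true_w`. Keras follows the same cycle, but with many layers, automatic differentiation, and optimizers such as RMSprop.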

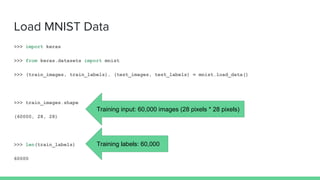

- 18. Load MNIST Data

```python
>>> import keras
>>> from keras.datasets import mnist
>>> (train_images, train_labels), (test_images, test_labels) = mnist.load_data()
>>> train_images.shape
(60000, 28, 28)
>>> len(train_labels)
60000
```

Training input: 60,000 images (28 pixels * 28 pixels). Training labels: 60,000.

- 19. MNIST training data

```python
>>> import matplotlib.pyplot as plt
>>> plt.imshow(train_images[0])
>>> plt.gray()
>>> plt.show()
>>> train_labels
array([5, 0, 4, ..., 5, 6, 8], dtype=uint8)
```

- 20. MNIST testing data

```python
>>> test_images.shape
(10000, 28, 28)
>>> test_labels
array([7, 2, 1, ..., 4, 5, 6], dtype=uint8)
```

Testing input: 10,000 images (28 pixels * 28 pixels). Testing labels: 10,000.

| Set | Images | Labels |
|---|---|---|
| Training | 60,000 | 60,000 |
| Testing | 10,000 | 10,000 |

- 21. Data Reshaping (Input X)

```python
# Train images
>>> train_images = train_images.reshape((60000, 28 * 28))
>>> train_images = train_images.astype('float32') / 255
# Test images
>>> test_images = test_images.reshape((10000, 28 * 28))
>>> test_images = test_images.astype('float32') / 255
```

1. 28 * 28 image (integer values 0 to 255)
2. 28 * 28 = 784-element vector: [row 0, row 1, …, row 27]
3. 784-element vectors with float values in the range 0 to 1
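To see what `reshape` and the division by 255 do without downloading MNIST, the sketch below applies the same two steps to a small batch of random 28 x 28 `uint8` arrays, which stand in for real images (an assumption for illustration). NumPy's default row-major order is what makes the 784-element vector read `[row 0, row 1, …, row 27]`.

```python
import numpy as np

# Fake batch (assumption): 3 "images" of 28 x 28 uint8 pixels, standing in for MNIST
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

# Flatten each image row by row (NumPy's default C order): [row 0, row 1, ..., row 27]
flat = images.reshape((3, 28 * 28))
# Scale integer intensities 0-255 to floats in [0, 1]
flat = flat.astype("float32") / 255

print(flat.shape, flat.dtype)                          # (3, 784) float32
# Elements 28..55 of the vector hold row 1 of the original image
print(np.allclose(flat[0, 28:56] * 255, images[0, 1]))  # True
```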

- 22. Data Reshaping (Labels Y)

```python
>>> from keras.utils import to_categorical
# Train labels
>>> train_labels = to_categorical(train_labels)
# Test labels
>>> test_labels = to_categorical(test_labels)
```

1. Before: train_labels = [5 0 4 ... 5 6 8]
2. After (one row per label, with a 1 in the column for that digit):

```text
[[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
 [1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]
 …
 [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
 [0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]
 [0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]]
```
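`to_categorical` turns each integer label into a one-hot row. A NumPy equivalent for this case can be sketched as follows. `one_hot` is a hypothetical helper, not part of Keras. Unlike `to_categorical`, which infers the number of classes from the largest label when `num_classes` is not given, it assumes 10 classes by default.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Hypothetical stand-in for keras.utils.to_categorical on integer labels."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.shape[0], num_classes), dtype="float32")
    # Put a 1 in the column matching each label
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

print(one_hot([5, 0, 4]))
# [[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
#  [1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
#  [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]]
```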

- 23. Building our Network

```python
>>> from keras import models
>>> from keras import layers
>>> network = models.Sequential()
>>> network.add(layers.Dense(512, activation='relu', input_shape=(28 * 28,)))
>>> network.add(layers.Dense(512, activation='relu'))
>>> network.add(layers.Dense(10, activation='softmax'))
```
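The size of this network follows from simple arithmetic. A `Dense` layer with n inputs and m units has n * m weights plus m biases. The sketch below tallies the three layers. Assuming the default `use_bias=True`, the total should match what `network.summary()` reports.

```python
# Each Dense layer has (inputs * units) weights plus one bias per unit
layer_sizes = [28 * 28, 512, 512, 10]

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(counts)       # [401920, 262656, 5130]
print(sum(counts))  # 669706
```

Most of the parameters sit in the first layer, because it connects all 784 input pixels to 512 units.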

- 24. Neural Network
  1. Input (28 * 28 = 784 values)
  2. Hidden layers (data transformations, 512 units per layer)
  3. Output (predicted label: 10-element vector with probabilities)
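A forward pass through this 784 -> 512 -> 512 -> 10 architecture can be sketched in NumPy to show why the output is a vector of probabilities. The hidden layers apply ReLU, and softmax then turns the 10 output scores into values that sum to 1. The weights here are random rather than learned (an assumption for illustration), so the predicted digit is meaningless.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row max for numerical stability before exponentiating
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

# Random, untrained weights (assumption): shapes follow 784 -> 512 -> 512 -> 10
sizes = [784, 512, 512, 10]
params = [(rng.normal(0, 0.05, size=(a, b)), np.zeros(b))
          for a, b in zip(sizes, sizes[1:])]

x = rng.random((1, 784))            # one flattened image with values in [0, 1)
h = x
for W, b in params[:-1]:
    h = relu(h @ W + b)             # hidden layers: 512 units each
W_out, b_out = params[-1]
probs = softmax(h @ W_out + b_out)  # output: 10 class probabilities

print(probs.shape, round(float(probs.sum()), 6))  # (1, 10) 1.0
print("predicted digit:", int(probs.argmax()))
```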

- 25. Training-loop diagram (same as slide 17)

- 26. Network compilation

```python
>>> network.compile(optimizer='rmsprop',
...                 loss='categorical_crossentropy',
...                 metrics=['accuracy'])
```
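The `categorical_crossentropy` loss compares the predicted probability vector with the one-hot label. For a single image, it is the negative log of the probability assigned to the correct class. The sketch below is a NumPy illustration, not Keras's source. The clipping constant `eps` is an assumed guard against `log(0)`. A confident correct prediction gives a small loss, while a uniform guess over 10 classes gives ln(10), about 2.3026.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip predictions away from 0 and 1 so log() stays finite (eps is an assumption)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    # Per-sample loss: -sum_k y_k * log(p_k), then averaged over the batch
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

y_true = np.array([[0, 0, 0, 0, 0, 1, 0, 0, 0, 0]], dtype=float)  # label 5

confident = np.full((1, 10), 0.01)
confident[0, 5] = 0.91                 # 91% on the correct digit
uniform = np.full((1, 10), 0.1)        # no idea: 10% on every digit

print(round(categorical_crossentropy(y_true, confident), 4))  # 0.0943
print(round(categorical_crossentropy(y_true, uniform), 4))    # 2.3026
```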

- 27. Training-loop diagram (same as slide 17)

- 28. Let’s train!

```python
>>> network.fit(train_images, train_labels, epochs=5, batch_size=128)
Epoch 1/5
60000/60000 [==============================] - 2s 33us/step - loss: 0.2179 - acc: 0.9329
Epoch 2/5
60000/60000 [==============================] - 2s 31us/step - loss: 0.0805 - acc: 0.9750
Epoch 3/5
60000/60000 [==============================] - 2s 32us/step - loss: 0.0531 - acc: 0.9841
Epoch 4/5
60000/60000 [==============================] - 2s 31us/step - loss: 0.0385 - acc: 0.9883
Epoch 5/5
60000/60000 [==============================] - 2s 31us/step - loss: 0.0290 - acc: 0.9908
```

Train accuracy: 99.08%
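With `batch_size=128`, each epoch is split into mini-batches, and the weights are updated once per batch. The sketch below works through that arithmetic for the 60,000 training images. It also includes a hypothetical `minibatches` helper that mimics shuffled batching, since Keras shuffles the training data each epoch by default. The helper is an illustration, not Keras's internal code.

```python
import math
import numpy as np

num_samples, batch_size, epochs = 60000, 128, 5

steps_per_epoch = math.ceil(num_samples / batch_size)
print(steps_per_epoch)            # 469
print(num_samples % batch_size)   # 96 samples in the last, smaller batch
print(steps_per_epoch * epochs)   # 2345 weight updates in total

def minibatches(n, batch_size, rng):
    """Hypothetical helper: yield shuffled index batches; one full pass = one epoch."""
    order = rng.permutation(n)
    for start in range(0, n, batch_size):
        yield order[start:start + batch_size]

rng = np.random.default_rng(0)
sizes = [len(batch) for batch in minibatches(num_samples, batch_size, rng)]
print(len(sizes), sizes[-1])      # 469 96
```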

- 29. Test Accuracy

```python
>>> test_loss, test_acc = network.evaluate(test_images, test_labels)
10000/10000 [==============================] - 0s 47us/step
>>> print('Test accuracy: %s' % test_acc)
Test accuracy: 0.9799
```

Test accuracy: 97.99%
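The accuracy metric counts how often the most probable class (the `argmax` of the 10-element output) equals the true label. Below is a minimal NumPy sketch using a hypothetical four-image batch, followed by the arithmetic behind the reported 97.99% on 10,000 test images.

```python
import numpy as np

def accuracy(y_true_onehot, y_pred_probs):
    # A prediction is correct when the most probable class matches the label
    return float(np.mean(y_pred_probs.argmax(axis=1) == y_true_onehot.argmax(axis=1)))

# Hypothetical batch: one-hot labels and predicted probabilities (last one is wrong)
y_true = np.eye(10)[[7, 2, 1, 0]]
y_pred = np.eye(10)[[7, 2, 1, 6]] * 0.9 + 0.01

print(accuracy(y_true, y_pred))   # 0.75
print(round(0.9799 * 10000))      # 9799 correctly classified test images
```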

- 30. Resources
  - Online: Udacity, Coursera, PyImageSearch
  - Book: Deep Learning with Python by François Chollet