Introduction to elasticsearch

Introduction to several aspects of elasticsearch: full-text search, scaling, aggregations and centralized logging. Talk given at an internal meetup at a bank in Singapore on 18.11.2016.

![Indexing](https://image.slidesharecdn.com/introduction-to-elasticsearch-online-161119023116/85/Introduction-to-elasticsearch-8-320.jpg)

```
POST /library/book
{
  "title": "Elasticsearch in Action",
  "author": [ "Radu Gheorghe",
              "Matthew Lee Hinman",
              "Roy Russo" ],
  "published": "2015-06-30T00:00:00.000Z",
  "publisher": {
    "name": "Manning",
    "country": "USA"
  },
  "tags": [ "Computer Science", "Development" ]
}
```
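Because the request is a POST without an id in the path, Elasticsearch generates the document id itself. As a sketch of issuing the same request from code, the Python below builds it with only the standard library. It assumes a node listening at `http://localhost:9200` (the default HTTP port) and sends nothing unless you uncomment the last lines; `build_index_request` and `ES_URL` are hypothetical names, not part of any Elasticsearch client.

```python
import json
import urllib.request

# Assumption: a local node on the default HTTP port.
ES_URL = "http://localhost:9200"


def build_index_request(index, doc_type, doc):
    """Build (but do not send) a POST that indexes `doc` with an auto-generated id."""
    body = json.dumps(doc).encode("utf-8")
    return urllib.request.Request(
        f"{ES_URL}/{index}/{doc_type}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


book = {
    "title": "Elasticsearch in Action",
    "author": ["Radu Gheorghe", "Matthew Lee Hinman", "Roy Russo"],
    "published": "2015-06-30T00:00:00.000Z",
    "publisher": {"name": "Manning", "country": "USA"},
    "tags": ["Computer Science", "Development"],
}

req = build_index_request("library", "book", book)

# To actually send it against a running node:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["_id"])  # the generated document id
```

The `/library/book` path uses the mapping type `book`, as in the Elasticsearch versions current when this talk was given; mapping types were removed in later versions, which index into `/library/_doc` instead.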

![Parameter Search](https://image.slidesharecdn.com/introduction-to-elasticsearch-online-161119023116/85/Introduction-to-elasticsearch-9-320.jpg)

```
GET /library/book/_search?q=elasticsearch
```

Response (excerpt):

```
{
  [...]
  "hits": {
    "hits": [
      {
        "_index": "library",
        "_type": "book",
        "_source": {
          "title": "Elasticsearch in Action",
          [...]
```
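The book is returned because one of its fields, the title, contains the term `elasticsearch`. Full-text search of this kind rests on an inverted index that maps each analyzed term to the documents containing it. The toy Python sketch below illustrates that idea under simplifying assumptions: `analyze` only lowercases and splits on non-alphanumeric characters (a rough stand-in for Elasticsearch's standard analyzer, which does more), all leaf values of a document go into one term list, query terms are combined with OR (the query-string default), and there is no relevance scoring. `analyze`, `field_values` and `InvertedIndex` are hypothetical names, and the second book is made up for illustration.

```python
import re
from collections import defaultdict


def analyze(text):
    """Rough stand-in for an analyzer: lowercase, split on non-alphanumerics."""
    return [token for token in re.split(r"[^0-9a-z]+", text.lower()) if token]


def field_values(value):
    """Yield every leaf value of a JSON-like document as a string."""
    if isinstance(value, dict):
        for nested in value.values():
            yield from field_values(nested)
    elif isinstance(value, list):
        for item in value:
            yield from field_values(item)
    else:
        yield str(value)


class InvertedIndex:
    """Toy inverted index: analyzed term -> set of ids of documents containing it."""

    def __init__(self):
        self.postings = defaultdict(set)

    def add(self, doc_id, doc):
        # Index all leaf values together, similar in spirit to a catch-all field.
        for text in field_values(doc):
            for term in analyze(text):
                self.postings[term].add(doc_id)

    def search(self, query):
        # OR semantics: a document matches if it contains any query term.
        matches = set()
        for term in analyze(query):
            matches |= self.postings.get(term, set())
        return sorted(matches)  # sorted ids only; no relevance scoring here


index = InvertedIndex()
index.add("1", {"title": "Elasticsearch in Action",
                "publisher": {"name": "Manning", "country": "USA"},
                "tags": ["Computer Science", "Development"]})
index.add("2", {"title": "A hypothetical second book", "tags": ["Development"]})
print(index.search("elasticsearch"))  # ['1']
```

Looking a term up is a single dictionary access rather than a scan over every document, which is what keeps this kind of search fast as the collection grows.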

![Terms-Aggregation](https://image.slidesharecdn.com/introduction-to-elasticsearch-online-161119023116/85/Introduction-to-elasticsearch-34-320.jpg)

```
"aggregations": {
  "common-tags": {
    "doc_count_error_upper_bound": 0,
    "sum_other_doc_count": 0,
    "buckets": [
      {
        "key": "Development",
        "doc_count": 2
      },
      {
        "key": "Computer Science",
        "doc_count": 1
      }]
[...]
```
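The `common-tags` result groups the books by tag and counts the documents in each bucket, much like `GROUP BY` with `COUNT` in SQL. The minimal in-memory Python sketch below reproduces that output shape. It is not how Elasticsearch computes it: Elasticsearch works per shard and merges the shard results, which is where a non-zero `doc_count_error_upper_bound` can come from. Assumptions: the aggregated field is `tags`; the second book is hypothetical (the slide shows only the result, and a `Development` count of 2 implies at least one other book with that tag); `terms_aggregation` is a hypothetical helper.

```python
from collections import Counter


def terms_aggregation(docs, field, size=10):
    """Bucket documents by each distinct value of `field` and count them."""
    counts = Counter()
    for doc in docs:
        values = doc.get(field, [])
        if isinstance(values, str):
            values = [values]
        # A document is counted once per distinct value, even if a value repeats.
        counts.update(set(values))
    # Order by doc_count descending; break ties by key so output is deterministic.
    ordered = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return {
        # Exact counts: all data is in one place, so there is no per-shard error.
        "doc_count_error_upper_bound": 0,
        # Sum of the doc counts of buckets beyond the first `size`.
        "sum_other_doc_count": sum(count for _, count in ordered[size:]),
        "buckets": [{"key": key, "doc_count": count} for key, count in ordered[:size]],
    }


books = [
    {"title": "Elasticsearch in Action", "tags": ["Computer Science", "Development"]},
    {"title": "A hypothetical second book", "tags": ["Development"]},  # hypothetical
]
result = terms_aggregation(books, "tags")
print(result["buckets"])
# [{'key': 'Development', 'doc_count': 2}, {'key': 'Computer Science', 'doc_count': 1}]
```

A document with several tags lands in several buckets, so the bucket counts can add up to more than the number of documents.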


1. Toyota's production system and product development system emphasize continuous process flow, problem solving, visual controls, and a learning organization to drive quality and innovation.

2. Principles from Toyota, the Toyota Production System, and Eliyahu Goldratt's Theory of Constraints can be applied beyond manufacturing to areas like software development.

3. Analogies will be drawn between manufacturing concepts like value streams, constraints, and critical chains, and how they relate to software development processes and problem solving. The presentation aims to provide simple thinking tools to identify and address constraints in software development lif

Wh questions

Wh questionsannalouie1 This document provides a summary of wh- questions and their functions:

1) Wh- questions ask for specific information that cannot be answered with a simple yes or no. They include who, what, where, when, why, and how.

2) Questions using "who" ask about people, "what" asks about things, "where" asks about places, and "why" asks for a reason.

3) Contractions are often used in wh- questions in informal speech, such as "Where's" instead of "Where is." Answers to wh- questions are usually short but can also be longer explanations.

Ad


Introduction to elasticsearch

- 6. Installation

  ```shell
  # download archive
  wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.0.0.zip
  unzip elasticsearch-5.0.0.zip
  # on windows: elasticsearch.bat
  elasticsearch-5.0.0/bin/elasticsearch
  ```

- 7. Access via HTTP

  ```shell
  curl -XGET "http://localhost:9200"
  ```

  ```json
  {
    "name" : "LI8ZN-t",
    "cluster_name" : "elasticsearch",
    "cluster_uuid" : "UvbMAoJ8TieUqugCGw7Xrw",
    "version" : {
      "number" : "5.0.0",
      "build_hash" : "253032b",
      "build_date" : "2016-10-26T04:37:51.531Z",
      "build_snapshot" : false,
      "lucene_version" : "6.2.0"
    },
    "tagline" : "You Know, for Search"
  }
  ```
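Any HTTP client can send the same request. Below is a minimal Python sketch that uses only the standard library and assumes a node at `http://localhost:9200`, as above. `fetch_root` and `parse_version` are hypothetical helper names. Only the parsing step runs here, and it runs on the response shown on the slide, so no live node is needed.

```python
import json
import urllib.request


def fetch_root(base_url: str = "http://localhost:9200") -> dict:
    """GET the root endpoint and decode the JSON body (needs a running node)."""
    with urllib.request.urlopen(base_url) as response:
        return json.loads(response.read().decode("utf-8"))


def parse_version(root: dict) -> tuple:
    """Return (Elasticsearch version, Lucene version) from a root response."""
    version = root["version"]
    return version["number"], version["lucene_version"]


# The response from the slide, trimmed to the fields used here.
sample = json.loads("""
{
  "name": "LI8ZN-t",
  "cluster_name": "elasticsearch",
  "version": {"number": "5.0.0", "lucene_version": "6.2.0"},
  "tagline": "You Know, for Search"
}
""")
print(parse_version(sample))  # ('5.0.0', '6.2.0')
```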

- 8. Indexing

  ```
  POST /library/book
  {
    "title": "Elasticsearch in Action",
    "author": [ "Radu Gheorghe", "Matthew Lee Hinman", "Roy Russo" ],
    "published": "2015-06-30T00:00:00.000Z",
    "publisher": {
      "name": "Manning",
      "country": "USA"
    },
    "tags": [ "Computer Science", "Development" ]
  }
  ```
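Elasticsearch stores the JSON above unchanged in `_source`. For searching, though, it indexes inner objects under flattened, dotted field names and treats arrays as multi-valued fields, which is why later queries can address `publisher.name`. The hypothetical `flatten` helper below approximates that view for illustration. It is not Elasticsearch code.

```python
def flatten(document: dict) -> dict:
    """Map dotted field names to lists of values (illustrative approximation)."""
    fields = {}

    def add(name: str, value) -> None:
        if isinstance(value, dict):
            # inner object: extend the dotted path, e.g. publisher -> publisher.name
            for key, sub_value in value.items():
                add(f"{name}.{key}", sub_value)
        elif isinstance(value, list):
            # array: every element becomes another value of the same field
            for item in value:
                add(name, item)
        else:
            fields.setdefault(name, []).append(value)

    for key, value in document.items():
        add(key, value)
    return fields


book = {
    "title": "Elasticsearch in Action",
    "author": ["Radu Gheorghe", "Matthew Lee Hinman", "Roy Russo"],
    "published": "2015-06-30T00:00:00.000Z",
    "publisher": {"name": "Manning", "country": "USA"},
    "tags": ["Computer Science", "Development"],
}

for field, values in flatten(book).items():
    print(field, values)
```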

- 9. Parameter Search

  ```
  GET /library/book/_search?q=elasticsearch
  {
    [...]
    "hits": {
      "hits": [
        {
          "_index": "library",
          "_type": "book",
          "_source": {
            "title": "Elasticsearch in Action",
            [...]
  ```
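Here is a small Python sketch of the URI search above. `search_url` (hypothetical) encodes the `q` parameter, and `titles` follows the `hits.hits[]._source` path shown in the response. The response dict is hand-written and trimmed to the fields visible on the slide.

```python
import urllib.parse


def search_url(base_url: str, index: str, doc_type: str, query: str) -> str:
    """Build a URI search URL such as /library/book/_search?q=elasticsearch."""
    return f"{base_url}/{index}/{doc_type}/_search?" + urllib.parse.urlencode({"q": query})


def titles(search_response: dict) -> list:
    """Collect the _source.title of every hit in a _search response body."""
    return [hit["_source"]["title"] for hit in search_response["hits"]["hits"]]


# Hand-written response, trimmed to the fields visible on the slide.
response = {
    "hits": {
        "hits": [
            {
                "_index": "library",
                "_type": "book",
                "_source": {"title": "Elasticsearch in Action"},
            }
        ]
    }
}

print(search_url("http://localhost:9200", "library", "book", "elasticsearch"))
# http://localhost:9200/library/book/_search?q=elasticsearch
print(titles(response))  # ['Elasticsearch in Action']
```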

- 10. Search via Query DSL

  ```
  POST /library/book/_search
  {
    "query": {
      "bool": {
        "must": {
          "match": { "title": "elasticsearch" }
        },
        "filter": {
          "term": { "publisher.name": "manning" }
        }
      }
    }
  }
  ```
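Below is the same bool query built in Python with the standard library. The sketch only constructs the `urllib.request.Request`, and `bool_search_request` is a hypothetical name. Passing the result to `urllib.request.urlopen` would need a running node. Clauses under `must` are scored. Clauses under `filter` only include or exclude documents, do not affect the score, and can be cached.

```python
import json
import urllib.request


def bool_search_request(base_url: str, title_text: str, publisher: str) -> urllib.request.Request:
    """Build (but do not send) the slide's bool query as a POST request."""
    body = {
        "query": {
            "bool": {
                # scored full text condition on the analyzed title
                "must": {"match": {"title": title_text}},
                # non-scoring exact condition on the publisher name
                "filter": {"term": {"publisher.name": publisher}},
            }
        }
    }
    return urllib.request.Request(
        url=f"{base_url}/library/book/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = bool_search_request("http://localhost:9200", "elasticsearch", "manning")
print(request.get_method(), request.full_url)
# POST http://localhost:9200/library/book/_search
```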

- 11. Language Specific Search

  ```
  POST /library/book/_search
  {
    "query": {
      "match": { "tags": "development" }
    }
  }
  ```

- 12. Language Specific Search

  ```
  POST /library/book/_search
  {
    "query": {
      "match": { "tags": "developing" }
    }
  }
  ```

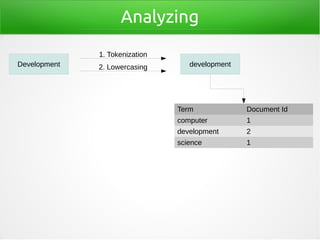

- 13. Analyzing

  Input: Computer Science, Development

  1. Tokenization

  | Term | Document Id |
  |------|-------------|
  | computer | 1 |
  | development | 2 |
  | science | 1 |

- 14. Analyzing

  Input: Computer Science, Development

  1. Tokenization
  2. Lowercasing

  | Term | Document Id |
  |------|-------------|
  | computer | 1 |
  | development | 2 |
  | science | 1 |

- 15. Analyzing

  1. Tokenization
  2. Lowercasing

  Search term: Development → development

  | Term | Document Id |
  |------|-------------|
  | computer | 1 |
  | development | 2 |
  | science | 1 |

- 16. Analyzing

  1. Tokenization
  2. Lowercasing

  Search term: Developing → developing (no such term in the index)

  | Term | Document Id |
  |------|-------------|
  | computer | 1 |
  | development | 2 |
  | science | 1 |

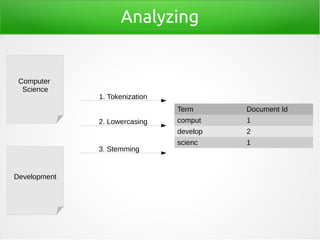

- 17. Analyzing

  Input: Computer Science, Development

  1. Tokenization
  2. Lowercasing
  3. Stemming

  | Term | Document Id |
  |------|-------------|
  | comput | 1 |
  | develop | 2 |
  | scienc | 1 |

- 18. Analyzing

  1. Tokenization
  2. Lowercasing
  3. Stemming

  Search term: Developing → develop (matches the indexed term develop)

  | Term | Document Id |
  |------|-------------|
  | comput | 1 |
  | develop | 2 |
  | scienc | 1 |
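The analysis chain and inverted index above can be sketched in Python. This is a toy. Tokenization is a `\w+` regex, and stemming uses a hand-picked suffix list (`TOY_SUFFIXES`, hypothetical) chosen only to reproduce the terms on the slides. Elasticsearch's `english` analyzer uses a Porter stemmer and also removes stop words and possessives. The design point the sketch does show is this: the query must pass through the same chain as the indexed text, otherwise `developing` never meets `development`.

```python
import re
from collections import defaultdict

# Hypothetical suffix list picked only to reproduce the slide's terms;
# it is NOT the Porter stemmer used by the english analyzer.
TOY_SUFFIXES = ("ment", "ing", "er", "e")


def strip_suffix(token: str) -> str:
    """Remove the first matching suffix, keeping at least three characters."""
    for suffix in TOY_SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def analyze(text: str, stem: bool = False) -> list:
    tokens = re.findall(r"\w+", text)                        # 1. tokenization
    tokens = [token.lower() for token in tokens]             # 2. lowercasing
    if stem:
        tokens = [strip_suffix(token) for token in tokens]   # 3. stemming (toy)
    return tokens


def build_index(docs: dict, stem: bool = False) -> dict:
    """Inverted index: term -> set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in analyze(text, stem):
            index[term].add(doc_id)
    return dict(index)


def search(index: dict, query: str, stem: bool = False) -> set:
    """Analyze the query with the same chain, then union the posting sets."""
    hits = set()
    for term in analyze(query, stem):
        hits |= index.get(term, set())
    return hits


docs = {1: "Computer Science", 2: "Development"}
plain = build_index(docs)
stemmed = build_index(docs, stem=True)
print(sorted(plain))                             # ['computer', 'development', 'science']
print(search(plain, "Developing"))               # set()
print(sorted(stemmed))                           # ['comput', 'develop', 'scienc']
print(search(stemmed, "Developing", stem=True))  # {2}
```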

- 19. Mapping

  ```
  PUT /library/book/_mapping
  {
    "book": {
      "properties": {
        "title": {
          "type": "text",
          "analyzer": "english"
        }
      }
    }
  }
  ```

- 20. Full Text Search Features
  - Analyzer, preconfigured or custom
  - Relevance
  - Paging, sorting
  - Highlighting, auto completion, ...
  - Faceting using Aggregations
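Since Elasticsearch 5.0 (Lucene 6), relevance is computed with BM25 by default. Below is a simplified per-term sketch using Lucene's default parameters `k1 = 1.2` and `b = 0.75`. Real scores also depend on lossy field-length encoding, boosts, and how multiple query terms are combined, so these numbers will not match Elasticsearch exactly.

```python
import math


def bm25(tf: int, doc_len: int, avg_doc_len: float, doc_freq: int, doc_count: int,
         k1: float = 1.2, b: float = 0.75) -> float:
    """BM25 score of one query term in one document field (simplified)."""
    if tf == 0:
        return 0.0
    # Rare terms (small doc_freq) get a higher inverse document frequency.
    idf = math.log(1 + (doc_count - doc_freq + 0.5) / (doc_freq + 0.5))
    # Fields longer than average are penalized; b controls how strongly.
    length_norm = 1 - b + b * doc_len / avg_doc_len
    # Term frequency saturates instead of growing linearly; k1 controls the curve.
    return idf * tf * (k1 + 1) / (tf + k1 * length_norm)


# A match in a short title outranks the same match in a long one.
print(bm25(tf=1, doc_len=3, avg_doc_len=5, doc_freq=2, doc_count=10) >
      bm25(tf=1, doc_len=12, avg_doc_len=5, doc_freq=2, doc_count=10))  # True
```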

- 21. Recap
  - Java-based search server
  - Communication via HTTP and JSON
  - Document-based storage
  - Search using the Query DSL
  - Inverted index

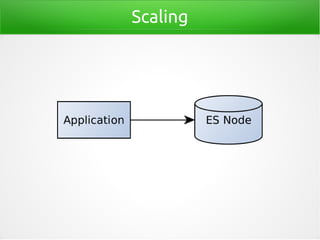

- 22. Scaling

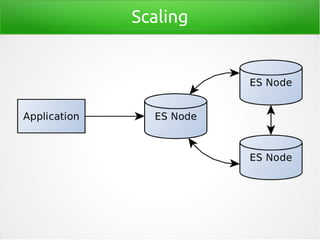

- 23. Scaling

- 24. Scaling

- 25. Scaling

- 26. Scaling

- 27. Scaling

- 28. Scaling

- 29. Recap
  - Nodes form a cluster
  - Data is distributed using shards
  - Replicas handle request load and provide fault tolerance
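The following sketch shows how a document is assigned to a shard and how copies are spread over nodes. Elasticsearch routes with `shard = hash(_routing) % number_of_primary_shards`, where the routing value defaults to the document `_id`. The sketch uses `zlib.crc32` in place of Elasticsearch's Murmur3 hash. The round-robin `allocate` function (hypothetical) only illustrates the rule that a primary and its replicas never share a node; the real allocator works differently. Because the modulo depends on the shard count, the number of primary shards cannot simply be changed on an existing index.

```python
import zlib


def shard_for(doc_id: str, number_of_primary_shards: int) -> int:
    """Route a document: hash(routing value) % number of primary shards.

    Elasticsearch uses Murmur3 on the routing value (the _id by default);
    zlib.crc32 stands in here only because it ships with Python.
    """
    return zlib.crc32(doc_id.encode("utf-8")) % number_of_primary_shards


def allocate(number_of_shards: int, number_of_replicas: int, nodes: list) -> dict:
    """Place primaries (P) and replicas (R) round-robin across the nodes.

    Copies of one shard always land on different nodes. With too few nodes
    this sketch raises, whereas Elasticsearch leaves replicas unassigned.
    """
    if number_of_replicas + 1 > len(nodes):
        raise ValueError("need at least number_of_replicas + 1 nodes")
    layout = {node: [] for node in nodes}
    for shard in range(number_of_shards):
        for copy in range(number_of_replicas + 1):
            node = nodes[(shard + copy) % len(nodes)]
            layout[node].append(("P" if copy == 0 else "R") + str(shard))
    return layout


print(allocate(3, 1, ["node-1", "node-2", "node-3"]))
# {'node-1': ['P0', 'R2'], 'node-2': ['R0', 'P1'], 'node-3': ['R1', 'P2']}
print(shard_for("some-book-id", 3))  # an int in range(3)
```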

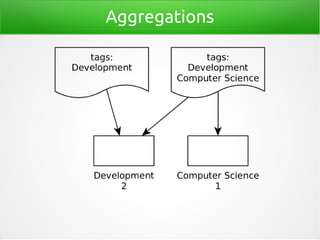

- 30. Aggregations

- 31. Aggregations
  - Information on data
  - Faceting
  - Search applications and analytics

- 32. Aggregations

- 33. Terms-Aggregation

  ```
  POST /library/book/_search
  {
    "size": 0,
    "aggs": {
      "common-tags": {
        "terms": { "field": "tags.keyword" }
      }
    }
  }
  ```

- 34. Terms-Aggregation

  ```
  "aggregations": {
    "common-tags": {
      "doc_count_error_upper_bound": 0,
      "sum_other_doc_count": 0,
      "buckets": [
        { "key": "Development", "doc_count": 2 },
        { "key": "Computer Science", "doc_count": 1 }
      ]
  [...]
  ```
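Below is a local Python sketch of what the terms aggregation computes. It assumes a keyword-style field where each distinct value forms one bucket, and `terms_aggregation` is a hypothetical helper. The first document uses the tags of the book indexed earlier; the second is invented for the example. Ties are broken by key so the output is deterministic. On a single shard the counts are exact. `doc_count_error_upper_bound` only becomes relevant when per-shard top lists are merged, and this sketch does not model that.

```python
from collections import Counter


def terms_aggregation(docs: list, field: str, size: int = 10) -> dict:
    """Count documents per distinct value of a keyword field.

    Each document counts once per distinct value; buckets are sorted by
    doc_count (descending), then by key.
    """
    counts = Counter()
    for doc in docs:
        values = doc.get(field, [])
        if not isinstance(values, list):
            values = [values]
        counts.update(set(values))
    ordered = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return {
        # documents in buckets that did not make it into the top `size`
        "sum_other_doc_count": sum(count for _, count in ordered[size:]),
        "buckets": [{"key": key, "doc_count": count} for key, count in ordered[:size]],
    }


books = [
    {"tags": ["Computer Science", "Development"]},  # the book from the indexing slide
    {"tags": ["Development"]},                       # invented second document
]
print(terms_aggregation(books, "tags"))
# {'sum_other_doc_count': 0, 'buckets': [{'key': 'Development', 'doc_count': 2}, {'key': 'Computer Science', 'doc_count': 1}]}
```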

- 36. Metric-Aggregations
  - Calculate one or more values
  - Often on numeric fields
  - Stats, Percentiles, Min, Max, Sum, Avg, ...

- 37. Stats-Aggregation

  ```
  GET /library/book/_search
  {
    "aggs": {
      "published_stats": {
        "stats": { "field": "published" }
      }
    }
  }
  ```

- 38. Stats-Aggregation

  ```
  "aggregations": {
    "published_stats": {
      "count": 5,
      "min": 1419292800000,
      "max": 1445990400000,
      "avg": 1440547200000,
      "sum": 7202736000000,
      "min_as_string": "2014-12-23T00:00:00.000Z",
      "max_as_string": "2015-10-28T00:00:00.000Z",
      "avg_as_string": "2015-08-26T00:00:00.000Z",
      "sum_as_string": "2198-03-31T00:00:00.000Z"
    }
  }
  ```
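Date fields are stored internally as milliseconds since the epoch. The stats aggregation therefore works on plain numbers and only formats them as dates in the `*_as_string` fields, which is also why `sum_as_string` shows a meaningless date in 2198. The helpers `to_millis`, `as_string` and `date_stats` below are hypothetical. The sketch runs on three example dates: the `published` value from the indexing slide plus the min and max from the response above.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_millis(value: str) -> int:
    """Parse a UTC timestamp like 2015-06-30T00:00:00.000Z into epoch millis."""
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%S.%fZ").replace(tzinfo=timezone.utc)
    return (parsed - EPOCH) // timedelta(milliseconds=1)


def as_string(millis: float) -> str:
    """Format epoch millis back into the same ISO-8601 layout."""
    moment = EPOCH + timedelta(milliseconds=millis)
    return moment.strftime("%Y-%m-%dT%H:%M:%S.") + f"{moment.microsecond // 1000:03d}Z"


def date_stats(values: list) -> dict:
    """count/min/max/avg/sum over dates, computed on the numeric millis."""
    millis = [to_millis(v) for v in values]
    stats = {
        "count": len(millis),
        "min": min(millis),
        "max": max(millis),
        "avg": sum(millis) / len(millis),
        "sum": sum(millis),
    }
    for name in ("min", "max", "avg", "sum"):
        stats[f"{name}_as_string"] = as_string(stats[name])
    return stats


stats = date_stats([
    "2014-12-23T00:00:00.000Z",
    "2015-06-30T00:00:00.000Z",
    "2015-10-28T00:00:00.000Z",
])
print(stats["avg_as_string"])     # 2015-06-07T00:00:00.000Z
print(as_string(7202736000000))   # 2198-03-31T00:00:00.000Z, the slide's sum
```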

- 39. Combine Aggregations
  - Aggregations can be combined
  - Even more detailed view of data
  - Tags per publishing date range
  - Tags per author
  - ...
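Aggregations are combined by nesting one inside another. The sketch below covers the "tags per author" case. `TAGS_PER_AUTHOR` is a request body that assumes an `author.keyword` sub-field exists, just as `tags.keyword` does on the terms aggregation slide under default dynamic mapping. `tags_per_author` (hypothetical) simulates the nested buckets locally on invented documents with placeholder author names.

```python
from collections import defaultdict

# Request body for "tags per author" (assumes an author.keyword sub-field).
TAGS_PER_AUTHOR = {
    "size": 0,
    "aggs": {
        "authors": {
            "terms": {"field": "author.keyword"},
            # sub-aggregation: runs once inside every author bucket
            "aggs": {"author-tags": {"terms": {"field": "tags.keyword"}}},
        }
    },
}


def tags_per_author(docs: list) -> dict:
    """Local simulation: outer bucket per author, inner doc counts per tag."""
    nested = defaultdict(lambda: defaultdict(int))
    for doc in docs:
        for author in sorted(set(doc.get("author", []))):
            for tag in sorted(set(doc.get("tags", []))):
                nested[author][tag] += 1
    return {author: dict(tags) for author, tags in nested.items()}


books = [  # invented sample documents
    {"author": ["Author A", "Author B"], "tags": ["Computer Science", "Development"]},
    {"author": ["Author B"], "tags": ["Development"]},
]
print(tags_per_author(books)["Author B"])  # {'Computer Science': 1, 'Development': 2}
```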

- 40. Recap
  - Aggregations provide a new view of the data
  - Faceting
  - Basis for visualization

- 42. Centralized Logging
  - Centralize logs of applications
  - Centralize logs of machines
  - No machine access needed for developers
  - Easy searching
  - Real-time analysis
  - Visualization

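- 46. Logstash-Config

  ```
  input {
    # tail the Apache access log
    file {
      path => "/var/log/apache2/access.log"
    }
  }
  filter {
    # split each line into fields such as clientip, verb, request and response
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }
    }
  }
  output {
    # since Logstash 2.x the elasticsearch output talks HTTP (replaces elasticsearch_http)
    elasticsearch {
      hosts => ["localhost:9200"]
    }
  }
  ```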
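A regular expression can approximate what the grok filter does with `%{COMBINEDAPACHELOG}`. The sketch below is a stand-in only. The field names follow the grok pattern, but the real pattern covers more edge cases, and the log line is invented. As with grok's default behaviour, lines that do not match get the `_grokparsefailure` tag. `to_event` is a hypothetical name.

```python
import re

# Approximation of grok's COMBINEDAPACHELOG; field names follow that pattern.
COMBINED_APACHE_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>[A-Z]+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def to_event(line: str) -> dict:
    """Turn one access-log line into an event dict, similar to the grok filter."""
    event = {"message": line}
    match = COMBINED_APACHE_LOG.match(line)
    if match:
        event.update(match.groupdict())
    else:
        # grok tags events it cannot parse
        event["tags"] = ["_grokparsefailure"]
    return event


line = ('127.0.0.1 - - [18/Nov/2016:10:15:32 +0800] '
        '"GET /library/index.html HTTP/1.1" 200 2326 '
        '"http://example.com/" "Mozilla/5.0"')
event = to_event(line)
print(event["verb"], event["request"], event["response"])  # GET /library/index.html 200
```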

- 47. Kibana

- 48. Kibana

- 49. Recap
  - Ingestion, enrichment and storage of log events
  - Countless inputs in Logstash
  - Centralization
  - Visualization
  - Analysis

- 50. Accessing Elasticsearch
  - Lots of clients available
  - Access via HTTP
  - Sniffing
  - Java
    - Transport Client, RestClient
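With sniffing, the client asks the cluster which nodes it has and then spreads requests across them, instead of sending everything to one fixed address. In this simplified Python sketch, `sniff_hosts` (hypothetical) reads `http.publish_address` from a `GET _nodes/http` response, and `itertools.cycle` handles round-robin selection. The response is hand-written. Real clients also re-sniff periodically and deal with failed nodes.

```python
import itertools


def sniff_hosts(nodes_http_response: dict) -> list:
    """Extract HTTP publish addresses from a GET _nodes/http response body."""
    return sorted(
        node["http"]["publish_address"]
        for node in nodes_http_response["nodes"].values()
        if "http" in node  # nodes with HTTP disabled are skipped
    )


# Hand-written example response, trimmed to the fields used here.
response = {
    "nodes": {
        "aBc1": {"name": "node-1", "http": {"publish_address": "10.0.0.1:9200"}},
        "dEf2": {"name": "node-2", "http": {"publish_address": "10.0.0.2:9200"}},
    }
}

hosts = itertools.cycle(sniff_hosts(response))
print([next(hosts) for _ in range(3)])
# ['10.0.0.1:9200', '10.0.0.2:9200', '10.0.0.1:9200']
```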

- 51. There's a lot more!
  - Different full text search features
  - Lots of aggregations
  - Geodata
  - Percolator

- 52. More Information

- 53. More Information
  - http://elastic.co
  - Elasticsearch: The Definitive Guide
    - https://www.elastic.co/guide/en/elasticsearch/guide/master/index.html
  - Elasticsearch in Action
    - https://www.manning.com/books/elasticsearch-in-action
  - http://blog.florian-hopf.de