Introduction to Machine Learning

- 1. Introduction to machine learning: Wake up being a data scientist one day, by Oleksandr Khryplyvenko

- 2. My path to DS • milestones from 2002 to 2016: code, work, Linux, Master's in CS, ML, Ph.D. student • I use ML for (order matters): work, research

- 3. Required skills • +1 yr: programming, basic linear algebra, using existing models, high-level ML frameworks (Keras)

- 4. Required skills • +1 yr: programming, basic linear algebra, using existing models, high-level ML frameworks (Keras) • +2 yrs: low-level ML frameworks (TF), basic mathematical analysis, basic statistics, basic information theory, implementing models from papers, enhancing existing models

- 5. Required skills • +1 yr: programming, basic linear algebra, using existing models, high-level ML frameworks (Keras) • +2 yrs: low-level ML frameworks (TF), basic mathematical analysis, basic statistics, basic information theory, implementing models from papers, enhancing existing models • +4 yrs: advanced linear algebra, advanced mathematical analysis, advanced statistics, advanced information theory, creating new models, understanding patterns & laws hidden in data

- 6. Required skills • +1 yr: programming, basic linear algebra, using existing models, high-level ML frameworks (Keras) • +2 yrs: low-level ML frameworks (TF), basic mathematical analysis, basic statistics, basic information theory, implementing models from papers, enhancing existing models • +4 yrs: advanced linear algebra, advanced mathematical analysis, advanced statistics, advanced information theory, creating new models, understanding patterns & laws hidden in data • +life: creating new theories, Riemannian geometry, set theory, topology, abstract algebra

- 7. ML in a nutshell • ML is a set of tricks on data ("Magic (ML)")

- 8. Approaches

- 9. Trends • Deep learning • Reinforcement learning. Problems • One-shot learning • Imbalanced datasets

- 10. Ukraine & my experience • production: better to take and use existing solutions • in production: if you don't have time for analysis, just try different approaches • research: an example of digging into RL • in UA, science is dying. But if you want to learn, you'll find a way to learn

- 11. Cook your data • choose models suitable for the dataset size • check how balanced the dataset is (skewed, normal, contains all the classes you want to learn) • use public datasets and pretrained models • if the values you gather for variable X are bad and you can gather values of variable Y instead, switch to Y • most algorithms require normalization: you can't compare bitter and orange without normalization • properly initialize free parameters (if needed)
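The normalization and balance checks above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming z-score standardization (one common choice among several) and hypothetical features on very different scales; the statistics come from the training set only, so the test data is transformed the same way.

```python
import numpy as np

def standardize(train, test):
    """Z-score each feature using mean/std computed on the training set only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return (train - mean) / std, (test - mean) / std

def class_balance(labels):
    """Fraction of samples per class, to spot skewed datasets."""
    classes, counts = np.unique(labels, return_counts=True)
    return dict(zip(classes.tolist(), (counts / counts.sum()).tolist()))

# Hypothetical features: interest in ML (0..1) and hours spent on ML (0..1000)
x_train = np.array([[0.7, 10.0], [0.1, 900.0], [0.5, 300.0]])
x_test = np.array([[0.8, 20.0]])
train_z, test_z = standardize(x_train, x_test)
print(train_z.mean(axis=0), train_z.std(axis=0))  # approximately [0, 0] and [1, 1]

print(class_balance(np.array([0, 0, 0, 0, 1])))  # {0: 0.8, 1: 0.2}
```

After standardization both features contribute on a comparable scale, which matters for distance-based and gradient-based methods alike.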

- 12. 3 main questions • Which entity do you want to teach? • What should be taught? • How should it be taught?

- 13. 3 main questions • model • cost function: - regression - classification - clustering • teaching algorithm: - backpropagation (1st-order, 2nd-order methods) - hypothesis testing (statistics) - genetic algorithms …

- 14. 3 main questions (example) • model: DQN • cost function: MSE(target Q*, agent Q*) • teaching algorithm: backpropagation
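To make the DQN cost concrete, here is a NumPy sketch of an MSE between target and agent Q-values. It assumes the standard DQN target r + γ·max_a' Q(s', a') with no bootstrapping on terminal steps; the slide only names the MSE, so the target formula, the batch, and the function names are illustrative assumptions.

```python
import numpy as np

def dqn_targets(rewards, next_q, dones, gamma=0.99):
    """Assumed Bellman targets: r + gamma * max_a' Q(s', a'), zero bootstrap when done."""
    return rewards + gamma * next_q.max(axis=1) * (1.0 - dones)

def dqn_mse(q_values, actions, targets):
    """MSE between the agent's Q(s, a) for the actions taken and the targets."""
    chosen = q_values[np.arange(len(actions)), actions]
    return np.mean((targets - chosen) ** 2)

# Hypothetical batch: 2 transitions, 3 possible actions
q_values = np.array([[1.0, 2.0, 0.5], [0.0, 1.0, 3.0]])  # agent's Q(s, .)
next_q = np.array([[1.5, 0.5, 1.0], [2.0, 2.0, 2.0]])    # Q(s', .) used for the target
rewards = np.array([1.0, 0.0])
dones = np.array([0.0, 1.0])  # the second transition ends the episode
actions = np.array([1, 2])

targets = dqn_targets(rewards, next_q, dones, gamma=0.9)
loss = dqn_mse(q_values, actions, targets)
print(targets, loss)
```

The loss is then minimized with backpropagation, matching the third answer on the slide.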

- 15. Parametric models • depend on parameters • changing the parameters changes the model • nonlinear models can implement complex logic (you are what you interact with) • [diagram: input (X) → model (W) → output]

- 16. Parametric models: linear regression. Training set (inputs X1, X2; output Y1):

  | X1: your interest in ML | X2: time spent on ML | Y1: your ML level |
  |---|---|---|
  | 0.7 | 1 | 8 |
  | -0.1 | 10 | 3 |

- 17. Linear regression: introducing free parameters • $0.7\,w_1 + 1 \cdot w_2 + b = 8$ and $-0.1\,w_1 + 10\,w_2 + b = 3$, where $w_1$, $w_2$, $b$ are the free parameters • generally the training set gives more equations than there are free parameters, so $WX + b = Y$ has no exact solution • you introduce the hypothesis that if you linearly combine unseen inputs, you'll get plausible outputs

- 18. What is the trick behind free parameters? (the main trick of parametric ML) • W = known output | known input • unknown output = unknown input · W | unknown input = unknown input · (known output | known input) | unknown input • if your model captures the right distribution, unknown output ~ known output • there is a similarity with Bayes; that's why you need statistics ;) • information obtained from seen data lets you solve tasks on unseen data the way you solved them on seen data

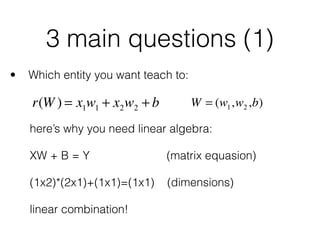

- 19. 3 main questions (1) • Which entity do you want to teach? Here's why you need linear algebra: $XW + b = Y$ (a matrix equation) with dimensions $(1 \times 2)(2 \times 1) + (1 \times 1) = (1 \times 1)$. It's a linear combination! $r(W) = x_1 w_1 + x_2 w_2 + b$, $W = (w_1, w_2, b)$
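The matrix equation and its dimensions map directly onto NumPy. A minimal sketch using the training inputs from the slides and hypothetical weight values; stacking all samples as rows of X evaluates $r(W)$ for every sample at once.

```python
import numpy as np

# Training inputs from the slides: [interest in ML, time spent on ML]
X = np.array([[0.7, 1.0],
              [-0.1, 10.0]])   # shape (m, 2), one sample per row
W = np.array([[0.5], [-0.2]])  # shape (2, 1); hypothetical weights
b = 1.0                        # scalar bias, broadcast to every row

def r(X, W, b):
    """Linear model XW + b, i.e. x1*w1 + x2*w2 + b for each row of X."""
    return X @ W + b           # (m x 2)(2 x 1) + (1 x 1) -> (m x 1)

out = r(X, W, b)
print(out.shape)  # (2, 1)
print(out.ravel())
```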

- 20. 3 main questions (2) • What should be taught? You want your system's output to be as close as possible to the target (labeled) output for EACH vector in the dataset: • 1 sample (SGD): $\frac{1}{2}(r(W) - y)^2$ • batch of m samples (batch GD): $\frac{1}{2m}\sum_{i=1}^{m}(r_i(W) - y_i)^2$
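Both cost functions written out in Python, as a direct transcription of the two formulas; the prediction and target values are hypothetical. Note that the batch cost is simply the average of the per-sample costs.

```python
import numpy as np

def sample_loss(pred, y):
    """Single-sample (SGD) cost: 1/2 * (r(W) - y)^2."""
    return 0.5 * (pred - y) ** 2

def batch_loss(preds, ys):
    """Batch cost over m samples: 1/(2m) * sum_i (r_i(W) - y_i)^2."""
    preds, ys = np.asarray(preds), np.asarray(ys)
    return np.sum((preds - ys) ** 2) / (2 * len(ys))

print(sample_loss(7.0, 8.0))               # 0.5
print(batch_loss([7.0, 5.0], [8.0, 3.0]))  # (1 + 4) / 4 = 1.25
```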

- 21. 3 main questions (3) • How should it be taught? (that's why you need some math analysis) $\frac{\partial}{\partial w_i}\,\frac{1}{2}(r(W) - y)^2 = \frac{\partial}{\partial w_i}\,\frac{1}{2}(x_1 w_1 + x_2 w_2 + b - y)^2 = (r(W) - y)\,x_i$, so $w_i = w_i - (r(W) - y)\,x_i$ ???
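The derivative $(r(W) - y)\,x_i$ can be checked numerically. This sketch treats the bias as a third weight whose input is the constant 1 (so its gradient is $(r(W) - y) \cdot 1$), uses the first training sample from the slides with hypothetical starting weights, and compares the analytic gradient with central finite differences.

```python
import numpy as np

def loss(w, x, y):
    """1/2 (r(W) - y)^2 with W = (w1, w2, b) and x augmented with 1 for b."""
    return 0.5 * (np.dot(x, w) - y) ** 2

def analytic_grad(w, x, y):
    """dLoss/dw_i = (r(W) - y) * x_i; for b the input x_i is the constant 1."""
    return (np.dot(x, w) - y) * x

def numeric_grad(w, x, y, eps=1e-6):
    """Central finite differences, used only to check the analytic formula."""
    g = np.zeros_like(w)
    for i in range(len(w)):
        step = np.zeros_like(w)
        step[i] = eps
        g[i] = (loss(w + step, x, y) - loss(w - step, x, y)) / (2 * eps)
    return g

w = np.array([0.1, 0.1, 0.0])  # hypothetical (w1, w2, b)
x = np.array([0.7, 1.0, 1.0])  # first training sample plus a 1 for the bias
y = 8.0
print(analytic_grad(w, x, y))
print(np.allclose(analytic_grad(w, x, y), numeric_grad(w, x, y)))  # True
```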

- 22. 3 main questions (3) • How should it be taught? (that's why you need some math analysis) [Figure: the cost $\frac{1}{2}(r(W) - y)^2$ plotted against $W$, annotated with the gradient $(r(W) - y)\,x_i$ and $w_i = 0.1$]

- 23. 3 main questions (3) • How should it be taught? $w_i = w_i - (r(W) - y)\,x_i$. But if you move your free parameters all the way in the current sample's direction, your network will fit this sample well, but other samples badly!!! Solution: $w_i = w_i - \eta\,(r(W) - y)\,x_i$, where $\eta \in (0, 1)$ is the learning rate (a hyperparameter)
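A minimal SGD loop with the learning rate $\eta$, on the two training samples from the slides (bias folded in as a third weight with constant input 1). The specific $\eta$ values and epoch counts are assumptions chosen for illustration: a small step drives the cost toward zero, while a step that is too large overshoots on the sample with the large input (time spent = 10) and the cost blows up.

```python
import numpy as np

# Training set from the slides; each input gets a trailing 1 for the bias b
X = np.array([[0.7, 1.0, 1.0],
              [-0.1, 10.0, 1.0]])
Y = np.array([8.0, 3.0])

def batch_loss(w):
    """1/(2m) * sum of squared errors over the whole training set."""
    return np.mean((X @ w - Y) ** 2) / 2

def sgd(eta, epochs):
    """Plain SGD: w_i <- w_i - eta * (r(W) - y) * x_i, one sample at a time."""
    w = np.zeros(3)
    for _ in range(epochs):
        for x, y in zip(X, Y):
            w -= eta * (x @ w - y) * x
    return w

print(batch_loss(np.zeros(3)))                 # cost before training
print(batch_loss(sgd(eta=0.01, epochs=1000)))  # close to zero
print(batch_loss(sgd(eta=0.05, epochs=20)))    # step too large: the cost explodes
```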

- 24. 3 main questions (3) • How should it be taught? This is a simple optimizer (SGD), $w_i = w_i - \eta\,(r(W) - y)\,x_i$, but there are many more. You may use precise information about the error curvature (2nd-order optimization) [1], or try to simulate 2nd-order optimization for faster convergence [2]
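As one example of the faster first-order methods that [2] covers, here is momentum in the form v ← γv + η∇, w ← w − v, compared with plain gradient descent. The ill-conditioned quadratic, step size and γ are hypothetical choices: the cost is steep along w2 and flat along w1, so plain descent crawls along w1 while momentum accumulates speed there.

```python
import numpy as np

# Hypothetical ill-conditioned cost: f(w) = 0.5 * (w1^2 + 50 * w2^2)
curvature = np.array([1.0, 50.0])

def grad(w):
    return curvature * w

def gradient_descent(w, eta=0.03, steps=100):
    for _ in range(steps):
        w = w - eta * grad(w)
    return w

def momentum(w, eta=0.03, gamma=0.9, steps=100):
    """Momentum: v <- gamma * v + eta * grad(w); w <- w - v."""
    v = np.zeros_like(w)
    for _ in range(steps):
        v = gamma * v + eta * grad(w)
        w = w - v
    return w

start = np.array([1.0, 1.0])
print(np.linalg.norm(gradient_descent(start)))  # about 0.048: slow along flat w1
print(np.linalg.norm(momentum(start)))          # smaller distance to the minimum
```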

- 25. Training

- 26. Regularization • There is always some noise in data • so you do regularization • lots of techniques: - L1 - L2 - ensembles - dropout - batch-normalization …
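L2 regularization from the list adds a penalty λ‖w‖² to the squared error (in gradient terms, an extra 2λw in every update, often called weight decay). A minimal sketch of the closed-form L2-regularized least squares (ridge) solution; the synthetic noisy data and the λ grid are assumptions, and the bias term is omitted for brevity. Larger λ pulls the weights toward zero, which keeps the model from fitting the noise too closely.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Minimize ||Xw - y||^2 + lam * ||w||^2 (no bias term, for brevity)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 1.0])
y = X @ true_w + rng.normal(scale=0.5, size=20)  # hypothetical noisy targets

for lam in [0.0, 1.0, 10.0, 100.0]:
    w = ridge_fit(X, y, lam)
    print(lam, np.round(np.linalg.norm(w), 3))  # the weight norm shrinks as lam grows
```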

- 27. Back to linear regression In [3]: from sklearn import linear_model In [4]: regr = linear_model.LinearRegression() In [5]: x_train = [[0.7, 1], [-0.1, 10]] In [6]: x_test = [[0.8, 2]] In [7]: y_train = [8, 3] In [8]: y_test = [9] In [9]: regr.fit(x_train, y_train) Out[9]: LinearRegression(copy_X=True, fit_intercept=True, n_jobs=1, normalize=False) In [10]: regr.coef_ Out[10]: array([ 0.04899559, -0.55120039]) In [11]: regr.predict(x_test) Out[11]: array([ 7.45369917]) find what’s wrong?
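One likely answer to the quiz (our reading; the slide does not give its answer): the model has three free parameters (w1, w2, b) but only two training samples, so infinitely many parameter sets fit the training data exactly, and the data cannot decide what the model predicts for x_test. The NumPy sketch below fixes one weight to 0 and solves for the rest: both parameter sets reproduce y_train exactly, yet they disagree on x_test, and neither predicts the y_test of 9. The coefficients in Out[10] are consistent with scikit-learn choosing one particular (minimum-norm) solution among these.

```python
import numpy as np

# Slide data: 2 training samples, but 3 free parameters (w1, w2, b)
x_train = np.array([[0.7, 1.0], [-0.1, 10.0]])
y_train = np.array([8.0, 3.0])
x_test = np.array([0.8, 2.0])

def solve_with_fixed(index, value):
    """Fix weight `index` to `value`, then solve the remaining 2x2 system exactly."""
    free = 1 - index  # the other weight
    A = np.column_stack([x_train[:, free], np.ones(2)])  # columns: free weight, b
    rhs = y_train - value * x_train[:, index]
    w_free, b = np.linalg.solve(A, rhs)
    w = np.zeros(2)
    w[index], w[free] = value, w_free
    return w, b

for index in (0, 1):
    w, b = solve_with_fixed(index, 0.0)
    train_err = np.abs(x_train @ w + b - y_train).max()
    print(w, b, train_err, x_test @ w + b)  # zero training error, different predictions
```

More (and more varied) training samples than free parameters, as slide 17 assumes, is what turns this into a well-posed least-squares problem.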

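- 28. Linear regression is a simple linear neuron! [diagram: inputs x1, x2 weighted by w1, w2, plus bias b, give output Y1] • in the linear case, least squares can be used: it learns in 1 iteration (one closed-form solve) • for nonlinear models it can't be used; SGD is the most common choice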
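A sketch contrasting the two training routes on a linear neuron: a single least-squares solve versus many small SGD steps. The noise-free synthetic data with true parameters (2, −3, 1), the learning rate and the epoch count are assumptions; on such data both routes should land on the same weights.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(50, 2))
y = 2.0 * X[:, 0] - 3.0 * X[:, 1] + 1.0    # hypothetical noise-free linear target
Xb = np.column_stack([X, np.ones(len(X))])  # append 1s so b is the last weight

# Least squares: one linear-algebra solve, no iterations
w_ls, *_ = np.linalg.lstsq(Xb, y, rcond=None)

# SGD: many per-sample steps w <- w - eta * (r(W) - y) * x
w_sgd = np.zeros(3)
eta = 0.1
for _ in range(200):
    for i in rng.permutation(len(Xb)):
        w_sgd -= eta * (Xb[i] @ w_sgd - y[i]) * Xb[i]

print(np.round(w_ls, 4))   # recovers (2, -3, 1)
print(np.round(w_sgd, 4))  # converges to the same weights
```

For a nonlinear neuron there is no such closed-form solve, which is why the iterative route generalizes.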

- 29. Neural networks? • There are lots of types & variations of neural networks: MLP, RNN, CNN • They use the same ideas, concepts and mathematics you've just been introduced to, just a bit more complex. • So you can start your ML path right now!

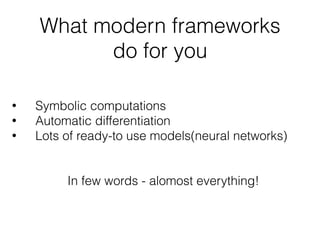

- 31. What modern frameworks do for you • Symbolic computations • Automatic differentiation • Lots of ready-to-use models (neural networks) • In a few words: almost everything!

- 32. Symbolic computations • You don't actually compute; you just say how to compute • You can think of it as metaprogramming • Symbolic computation shows how to get a symbolic (general, analytical) solution • By substituting numerical values for the variables, you obtain particular numerical solutions • Symbolic: c = a + b, given a = …, b = …; Numerical: 7 = 3 + 4. Benefits: - simpler automatic differentiation - easier parallelisation - differentiating a graph produces a graph, so you get higher-order derivatives for free (PROFIT!!!). When you make a new op in TF, you say how to symbolically compute its gradient with a single method: @ops.RegisterGradient("MyOP")
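The "say how to compute, then substitute values" idea, and the claim that differentiating a graph yields another graph, fit in a toy expression graph. This is a hypothetical pure-Python sketch (the `Node`, `evaluate` and `diff` names are ours, not TensorFlow's API) supporting only addition and multiplication.

```python
class Node:
    """Toy expression-graph node: it describes a computation and computes nothing."""
    def __init__(self, op, args=(), value=None):
        self.op = op        # "var", "const", "add" or "mul"
        self.args = args    # child nodes
        self.value = value  # variable name or constant value

    def __add__(self, other):
        return Node("add", (self, other))

    def __mul__(self, other):
        return Node("mul", (self, other))

def var(name):
    return Node("var", value=name)

def const(c):
    return Node("const", value=c)

def evaluate(node, env):
    """Substitute numbers for variables to get one numerical solution."""
    if node.op == "var":
        return env[node.value]
    if node.op == "const":
        return node.value
    left, right = (evaluate(arg, env) for arg in node.args)
    return left + right if node.op == "add" else left * right

def diff(node, name):
    """Symbolic derivative: returns a NEW graph, which can be differentiated again."""
    if node.op == "var":
        return const(1 if node.value == name else 0)
    if node.op == "const":
        return const(0)
    a, b = node.args
    if node.op == "add":
        return diff(a, name) + diff(b, name)
    return diff(a, name) * b + a * diff(b, name)  # product rule

a, b = var("a"), var("b")
c = a + b                                  # symbolic: nothing is computed yet
print(evaluate(c, {"a": 3, "b": 4}))       # numerical: 7

f = a * a * b                              # f = a^2 * b
df_da = diff(f, "a")                       # graph for 2ab
d2f_da2 = diff(df_da, "a")                 # graph for 2b: a higher-order derivative
print(evaluate(df_da, {"a": 3, "b": 4}))   # 24
print(evaluate(d2f_da2, {"a": 3, "b": 4})) # 8
```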

- 33. Symbolic computations. TF sample (TensorFlow 1.x API) [Figure: TensorBoard graph of (a-b)+cos(x), each node labeled with the device it was placed on, /cpu:0 or /gpu:0] import tensorflow as tf import numpy as np with tf.device('/cpu:0'): x = tf.constant(np.ones((100,100))) y = tf.cos(x) with tf.device('/gpu:0'): a = tf.constant(np.zeros((100,100))) b = tf.constant(np.ones((100,100))) result = a-b+y tf_session = tf.Session( config=tf.ConfigProto( log_device_placement=True ) ) writer = tf.summary.FileWriter( "/tmp/trainlogs2", tf_session.graph ) writer.close() # then run # tensorboard --logdir=/tmp/trainlogs2 in shell, # go to the location suggested by tensorboard, # `graphs` tab, click on each node/leaf, # and check where it has been placed

- 34. Automatic differentiation • Automatic differentiation is based on the chain rule (http://colah.github.io/posts/2015-08-Backprop/): $\frac{\partial E(f(w))}{\partial w} = \frac{\partial E(f(w))}{\partial f(w)}\,\frac{\partial f(w)}{\partial w}$ • But f(w) may not depend on w directly; it may depend on g(w)… • In TF it's much more convenient than in Torch or Theano • You can think of a TF op as a Torch layer (in terms of automatic differentiation) • It lets us compute the partial derivatives of the objective function with respect to every free parameter in one pass • It is efficient when the number of objective functions is small
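Reverse-mode automatic differentiation fits in a small class. In this hypothetical sketch (not TF's or Torch's implementation), every scalar records its parents and local derivatives; one backward pass applies the chain rule from the output to all inputs. Applied to the linear-regression cost from the earlier slides, a single pass yields $(r(W) - y)\,x_i$ for every free parameter at once.

```python
class Value:
    """Scalar that records how it was computed, for reverse-mode autodiff."""
    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self.parents = parents          # values this one was computed from
        self.local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def __sub__(self, other):
        return self + (other * -1.0)

    def backward(self):
        """One reverse pass: chain rule from this output back to every input."""
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)  # topological order: parents before children
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node.parents, node.local_grads):
                parent.grad += local * node.grad

w1, w2, b = Value(0.1), Value(0.1), Value(0.0)  # hypothetical starting weights
x1, x2, y = 0.7, 1.0, 8.0                       # first training sample from the slides
err = w1 * x1 + w2 * x2 + b - y                 # r(W) - y
E = err * err * 0.5                             # 1/2 (r(W) - y)^2
E.backward()
print(w1.grad, w2.grad, b.grad)  # (r(W) - y) * x_i for each parameter
```

One backward pass serves all parameters because there is a single scalar objective, which is the "small number of objective functions" case from the slide.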

- 36. Non-parametric models • depend on a metric (a distance function between 2 objects) • depend on hyperparameters (number of classes, number of neighbors, etc.) • if you don't know how to choose a hyperparameter value, you fail • if you do know how to choose it, you may perform even better than with parametric models
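k-nearest neighbors is a classic non-parametric model that depends on exactly the two things named above: a metric and a hyperparameter (the number of neighbors k). A minimal sketch, assuming Euclidean distance as the default metric and a hypothetical two-cluster dataset; nothing is "trained", since the stored data itself is the model.

```python
import numpy as np
from collections import Counter

def euclidean(a, b):
    return np.linalg.norm(a - b)

def knn_predict(train_x, train_y, query, k=3, metric=euclidean):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = [metric(x, query) for x in train_x]
    nearest = np.argsort(distances)[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]

train_x = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                    [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]])
train_y = np.array(["a", "a", "a", "b", "b", "b"])
print(knn_predict(train_x, train_y, np.array([0.15, 0.1]), k=3))  # a
print(knn_predict(train_x, train_y, np.array([1.0, 0.95]), k=3))  # b
```

A poor choice of k or of the metric degrades the predictions directly, which is the "if you don't know how to choose a hyperparameter value, you fail" point.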

- 37. ML branches (by type of supervision) • Supervised: use when you have labeled datasets • Unsupervised: use when you have unlabeled datasets • Reinforcement: use when you have to interact with an environment

- 38. Starter links • http://openclassroom.stanford.edu/MainFolder/CoursePage.php?course=MachineLearning • [1] http://andrew.gibiansky.com/blog/machine-learning/hessian-free-optimization/ • [2] http://sebastianruder.com/optimizing-gradient-descent/index.html#momentum
