Introduction to Microsoft Hadoop


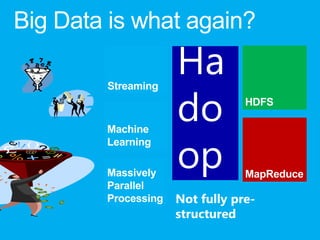

This is the slide deck from the June meeting of the Boise Web Technologies Group. Cindy Gross from Microsoft presented on Hadoop.






• Seamless Integration with i.MX 8M Plus and i.MX 95 – Toradex SMARC solutions leverage NXP’s i.MX 8 M Plus and i.MX 95 SoCs, delivering power efficiency and AI-ready performance.

• Secure and Reliable – With Secure Boot, over-the-air (OTA) updates, and LTS kernel support, Toradex ensures industrial-grade security and longevity.

• Containerized Workflows for AI & IoT – Support for Docker, ROS, and real-time Linux enables scalable AI, ML, and IoT applications.

• Strong Ecosystem & Developer Support – Toradex offers comprehensive documentation, developer tools, and dedicated support, accelerating time-to-market.

With Toradex’s Linux support for SMARC, developers get a scalable, secure, and high-performance solution for industrial, medical, and AI-driven applications.

Do you have a specific project or application in mind where you're considering SMARC? We can help with Free Compatibility Check and help you with quick time-to-market

For more information: https://www.toradex.com/computer-on-modules/smarc-arm-family

Increasing Retail Store Efficiency How can Planograms Save Time and Money.pptx

Increasing Retail Store Efficiency How can Planograms Save Time and Money.pptxAnoop Ashok In today's fast-paced retail environment, efficiency is key. Every minute counts, and every penny matters. One tool that can significantly boost your store's efficiency is a well-executed planogram. These visual merchandising blueprints not only enhance store layouts but also save time and money in the process.

Generative Artificial Intelligence (GenAI) in Business

Generative Artificial Intelligence (GenAI) in BusinessDr. Tathagat Varma My talk for the Indian School of Business (ISB) Emerging Leaders Program Cohort 9. In this talk, I discussed key issues around adoption of GenAI in business - benefits, opportunities and limitations. I also discussed how my research on Theory of Cognitive Chasms helps address some of these issues

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...Noah Loul Artificial intelligence is changing how businesses operate. Companies are using AI agents to automate tasks, reduce time spent on repetitive work, and focus more on high-value activities. Noah Loul, an AI strategist and entrepreneur, has helped dozens of companies streamline their operations using smart automation. He believes AI agents aren't just tools—they're workers that take on repeatable tasks so your human team can focus on what matters. If you want to reduce time waste and increase output, AI agents are the next move.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and educations, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungen

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungenpanagenda Webinar Recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-und-verwaltung-von-multiuser-umgebungen/

HCL Nomad Web wird als die nächste Generation des HCL Notes-Clients gefeiert und bietet zahlreiche Vorteile, wie die Beseitigung des Bedarfs an Paketierung, Verteilung und Installation. Nomad Web-Client-Updates werden “automatisch” im Hintergrund installiert, was den administrativen Aufwand im Vergleich zu traditionellen HCL Notes-Clients erheblich reduziert. Allerdings stellt die Fehlerbehebung in Nomad Web im Vergleich zum Notes-Client einzigartige Herausforderungen dar.

Begleiten Sie Christoph und Marc, während sie demonstrieren, wie der Fehlerbehebungsprozess in HCL Nomad Web vereinfacht werden kann, um eine reibungslose und effiziente Benutzererfahrung zu gewährleisten.

In diesem Webinar werden wir effektive Strategien zur Diagnose und Lösung häufiger Probleme in HCL Nomad Web untersuchen, einschließlich

- Zugriff auf die Konsole

- Auffinden und Interpretieren von Protokolldateien

- Zugriff auf den Datenordner im Cache des Browsers (unter Verwendung von OPFS)

- Verständnis der Unterschiede zwischen Einzel- und Mehrbenutzerszenarien

- Nutzung der Client Clocking-Funktion

Introduction to Microsoft Hadoop

- 1. INTRODUCTION TO HADOOP Cindy Gross | @SQLCindy | SQLCAT PM http://blogs.msdn.com/cindygross

- 4. SELECT deviceplatform, state, country FROM hivesampletable LIMIT 200;

- 19. Hadoop: The Definitive Guide by Tom White; SQL Server Sqoop http://bit.ly/rulsjX; JavaScript http://bit.ly/wdaTv6; Twitter https://twitter.com/#!/search/%23bigdata; Hive http://hive.apache.org; Excel to Hadoop via Hive ODBC http://tinyurl.com/7c4qjjj; Hadoop On Azure Videos http://tinyurl.com/6munnx2; Klout http://tinyurl.com/6qu9php; Microsoft Big Data http://microsoft.com/bigdata; Denny Lee http://dennyglee.com/category/bigdata/; Carl Nolan http://tinyurl.com/6wbfxy9; Cindy Gross http://tinyurl.com/SmallBitesBigData

- 21. INTRODUCTION TO HADOOP Cindy Gross | @SQLCindy | SQLCAT PM http://blogs.msdn.com/cindygross
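Hive turns a HiveQL statement into one or more MapReduce programs. As a rough illustration only, the Python sketch below mimics the map, shuffle, and reduce steps for a hypothetical aggregate over the slide 4 table (a count of rows per `deviceplatform`). The sample rows and all function names are assumptions made for this sketch; it is not the code Hive generates.

```python
from collections import defaultdict

# Hypothetical sample rows shaped like hivesampletable: (deviceplatform, state, country)
ROWS = [
    ("Android", "California", "United States"),
    ("iPhone OS", "Ontario", "Canada"),
    ("Android", "Texas", "United States"),
    ("Windows Phone", "Ontario", "Canada"),
    ("Android", "Ontario", "Canada"),
]


def map_phase(row):
    """Emit one (key, 1) pair per input row, keyed on the grouping column."""
    deviceplatform, _state, _country = row
    yield deviceplatform, 1


def shuffle(pairs):
    """Group emitted values by key, the step a framework performs between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Aggregate every value seen for one key (here, a row count)."""
    return key, sum(values)


def count_by_platform(rows):
    """Run map, shuffle, and reduce in sequence on a single machine."""
    pairs = (pair for row in rows for pair in map_phase(row))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(count_by_platform(ROWS))
```

On a real cluster the map and reduce functions would run in parallel on many nodes, each over its own slice of the data; here everything runs in one process so the data flow is easy to follow.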

Editor's Notes

- #4: Hadoop is part of NoSQL (Not Only SQL) and it’s a bit wild. You explore in/with Hadoop. You learn new things. You test hypotheses on unstructured jungle data. You eliminate noise. Then you take the best learnings and share them with the world via a relational or multidimensional database. Atomicity, consistency, isolation, durability (ACID) is used in relational databases to ensure immediate consistency. But what if eventual consistency is good enough? In stomps BASE: basically available, soft state, eventual consistency. Scale up or scale out? Pay up front or pay as you go? Which IT skills do you utilize?

- #5: Hive is a database that sits on top of Hadoop. HiveQL (HQL) generates (possibly multiple) MapReduce programs to execute the joins, filters, aggregates, etc. The language is very SQL-like, perhaps closer to MySQL but still very familiar.

- #6: Get your data from anywhere. There’s a data explosion and we can now use more of it than ever before. The HadoopOnAzure.com portal provides an easy interface to pull in data from sources including secure FTP, Amazon S3, Azure blob store, Azure Data Market. Use Sqoop to move data between Hadoop and SQL Server, PDW, SQL Azure. The Hive ODBC driver lets you display Hive data in Excel or apps.

- #7: Many equate big data to MapReduce and in particular Hadoop. However, other applications like streaming, machine learning, and PDW type systems can also be described as big data solutions. Big Data is unstructured, flows fast, has many formats, and/or has quickly changing formats. How big is “big” really depends on what is too big/complex for your environment (hardware, people, software, processes). It’s done by scaling out on commodity (low end enterprise level) hardware.

- #8: Big data solutions come from matching the right set of tools to the right set of problems (architectures are compositional, not monolithic). You need to select appropriate combinations of storage, analytics, and consumers.

- #9: For demo steps see: http://blogs.msdn.com/b/cindygross/archive/2012/05/07/load-data-from-the-azure-datamarket-to-hadoop-on-azure-small-bites-of-big-data.aspx

- #11: Big data is often described as problems that have one or more of the 3 (or 4) Vs: volume, velocity, variety, variability. Think about big data when you describe a problem with terms like tame the chaos, reduce the complexity, explore, I don’t know what I don’t know, unknown unknowns, unstructured, changing quickly, too much for what my environment can handle now, unused data.
  - Volume = more data than the current environment can handle with vertical scaling; need to make use of data that is currently too expensive to use
  - Velocity = small decision window compared to data change rate; ask how quickly you need to analyze and how quickly data arrives
  - Variety = many different formats that are expensive to integrate, probably from many data sources/feeds
  - Variability = many possible interpretations of the data

- #12: It’s not the hammer for every problem and it’s not the answer to every large store of data. It does not replace relational or multi-dimensional dbs, it’s a solution to a different sort of problem. It’s a new, specialized type of db for certain scenarios. It will feed other types of dbs.

- #13: Microsoft takes what is already there, makes it run on Windows, and offers the option of full control or simplification.
  - Hadoop in the cloud simplifies management
  - Hadoop on Windows lets you reuse existing skills
  - JavaScript opens up more hiring options
  - Hive ODBC Driver / Excel Addin lets you combine data, move data
  - Sqoop moves data: Linux based version to/from SQL available now, Windows based soon

- #14: Demo 2: Mashup
  1) Hive Pane
     a. Excel, blank worksheet, data
     b. Use your HadoopOnAzure cluster
     c. Object = Gender2007 or whatever table you pre-loaded in Hive (select * from gender2007 limit 200)
     d. KEY POINT = pulled data from multiple files across many nodes and displayed via ODBC in user friendly format, which is not easy in the Hadoop world
  2) PowerPivot
     a. KEY POINTS = uses local memory, pulls data from multiple data sources (structured and unstructured), can be stored/scheduled in Sharepoint, creates relationships to add value: MASHUP
     b. Excel file DeviceAnalysisByRegion.xlsx (worksheet with region/country data, relationship defined between Gender2007 country and this country data), click on PowerPivot tab and open blank tab
     c. Click on PowerPivot Window: show each tab is data from a different source, hivesampletable (Hadoop/unstructured) and regions (could be anything/structured)
     d. Click on diagram view: show relationships, rich value
     e. Pivot table.pivotchart.new
     f. Close hive query window
     g. Values = count of platform, axis = platform, zoom to selection
     h. Slicers Vertical = regions hierarchy
     i. Region = North America, country = Canada == Windows Phone jokes
     j. KEY Load to Sharepoint, schedule refreshes, use for Power View

- #15: Expand your audience of decision makers by making BI easier with self-service, visualization.
  - Our products interact and work together + one company for questions/issues
  - Use existing hardware, employees
  - Expand options for hiring/training/re-training with familiar tools
  - Familiar tools = less rampup time
  - Cloud = elasticity, easy scale up/down, pay for what you use
  - Easier to move data to/from HDFS

- #16: It’s about separating the signal from the noise so you have insight to make decisions to take action. Discover, explore, gain insight.

- #17: Familiar tools, new tools, ease of use

- #18: Take action! All the exploring doesn’t help if you don’t do something! Something might be starting another round of exploring, but eventually DO SOMETHING!
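The mashup demo in note #14 relates Hive rows to a separate region/country worksheet, then counts platforms with region and country slicers applied. A minimal Python sketch of that idea follows, assuming made-up sample rows and a made-up lookup table. The names `HIVE_ROWS`, `REGIONS`, and `platform_counts` are hypothetical and only stand in for what PowerPivot does through its relationships and slicers.

```python
from collections import Counter

# Hypothetical rows standing in for the Hive table: (deviceplatform, country)
HIVE_ROWS = [
    ("Android", "United States"),
    ("Windows Phone", "Canada"),
    ("iPhone OS", "Canada"),
    ("Android", "Germany"),
    ("Android", "Canada"),
]

# Hypothetical structured lookup standing in for the regions worksheet
REGIONS = {
    "United States": "North America",
    "Canada": "North America",
    "Germany": "Europe",
}


def platform_counts(hive_rows, regions, region=None, country=None):
    """Join rows to the region lookup on country, apply optional slicers, count platforms."""
    counts = Counter()
    for platform, row_country in hive_rows:
        row_region = regions.get(row_country)  # None when the country has no match
        if region is not None and row_region != region:
            continue
        if country is not None and row_country != country:
            continue
        counts[platform] += 1
    return counts


if __name__ == "__main__":
    print(platform_counts(HIVE_ROWS, REGIONS, region="North America"))
    print(platform_counts(HIVE_ROWS, REGIONS, region="North America", country="Canada"))
```

The join key is the country name, mirroring the relationship the demo defines between the Hive table's country column and the worksheet's country data.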