Introduction to Monitoring Tools for DevOps

Recommended

Centralized Logging System Using ELK Stack

By Rohit Sharma

The document discusses setting up a centralized logging system (CLS) using the ELK stack. The ELK stack consists of Logstash to capture and filter logs, Elasticsearch to index and store logs, and Kibana to visualize logs. Logstash agents on each server ship logs to Logstash, which filters and sends logs to Elasticsearch for indexing. Kibana queries Elasticsearch and presents logs through interactive dashboards. A CLS provides benefits like log analysis, auditing, compliance, and a single point of control. The ELK stack is an open-source solution that is scalable, customizable, and integrates with other tools.
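To make the Logstash filter step concrete, here is a minimal Python sketch of what such a filter does: it turns a raw log line into a structured document that could then be indexed in Elasticsearch. It is not Logstash itself. It assumes Apache-style access log lines, and the regular expression, the field names, and the parse_access_line helper are all hypothetical.

```python
import json
import re
from typing import Optional

# Hypothetical pattern for an Apache-style access log line; roughly the job a
# Logstash filter does before events are shipped to Elasticsearch.
ACCESS_LINE = re.compile(
    r'(?P<client>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def parse_access_line(line: str, host: str) -> Optional[dict]:
    """Turn one raw log line into a structured document, or None if it does not match."""
    match = ACCESS_LINE.match(line)
    if match is None:
        return None  # a real pipeline would usually tag the event as unparsed instead
    doc = match.groupdict()
    doc["status"] = int(doc["status"])
    doc["bytes"] = 0 if doc["bytes"] == "-" else int(doc["bytes"])
    doc["host"] = host  # records which server shipped the log
    return doc


if __name__ == "__main__":
    raw = '192.0.2.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326'
    print(json.dumps(parse_access_line(raw, host="web01"), indent=2))
```

In an actual ELK deployment this parsing is configured in Logstash rather than hand-written; the sketch only shows the shape of the transformation from raw text to an indexable document.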

Lesson_08_Continuous_Monitoring.pdf

By Minh Quân Đoàn. Here are the steps to install the Nagios monitoring tool:

1. Install prerequisite packages such as the Apache web server, PHP, and the GD library on the server.

2. Download the latest version of Nagios Core from the official website.

3. Extract the downloaded Nagios Core package.

4. Run the configure script (./configure), then run make all to compile the source code.

5. Run make install to install the Nagios binaries. Sample configuration files are installed by a separate target (make install-config), and the plugins come from the separate Nagios Plugins package.

6. Configure Nagios by editing nagios.cfg and defining the hosts and services to monitor.

7. Start and enable the Nagios process, for example with /etc/init.d/nagios start.

8. Access
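Each service defined in step 6 is checked by running a plugin: an executable whose exit code Nagios interprets as OK (0), WARNING (1), CRITICAL (2), or UNKNOWN (3), together with a one-line status message on standard output. The following is a minimal Python sketch of such a check, assuming a disk-usage check with hypothetical 80% and 90% thresholds. The names classify and check_disk_usage are illustrative only; the standard Nagios Plugins package ships its own disk check.

```python
import shutil
import sys
from typing import Tuple

# Nagios plugin convention: the process exit status tells Nagios the service state.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def classify(used_pct: float, warn_pct: float = 80.0, crit_pct: float = 90.0) -> int:
    """Map a usage percentage to a Nagios state (hypothetical default thresholds)."""
    if used_pct >= crit_pct:
        return CRITICAL
    if used_pct >= warn_pct:
        return WARNING
    return OK


def check_disk_usage(path: str, warn_pct: float = 80.0, crit_pct: float = 90.0) -> Tuple[int, str]:
    """Return (exit_code, one-line status) for the filesystem holding `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - cannot read {path}: {exc}"
    if usage.total == 0:
        return UNKNOWN, f"DISK UNKNOWN - {path} reports zero capacity"
    used_pct = 100.0 * usage.used / usage.total
    state = classify(used_pct, warn_pct, crit_pct)
    return state, f"DISK {LABELS[state]} - {used_pct:.1f}% used on {path}"


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "/"
    code, status_line = check_disk_usage(target)
    print(status_line)  # the status text Nagios records for the service
    sys.exit(code)      # the exit code Nagios maps to OK/WARNING/CRITICAL/UNKNOWN
```

Separating classify from the filesystem call keeps the threshold logic easy to test; a service definition would then reference a command that runs the script.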

What is Continuous Monitoring in DevOps.pdf

By flufftailshop. Continuous quality monitoring in DevOps is the process of identifying threats to the security and compliance rules of a software development cycle and architecture.

What is Continuous Monitoring in DevOps.pdf

By kalichargn70th171. Android platforms are ubiquitous today, and their user base is growing faster than ever. The increased use of Android devices has stirred competition in the market, urging every company to adopt the best possible testing strategies and solutions to build high-quality apps and create superior brand loyalty. Choosing the right testing framework is critical to any Android automation project.

Elasticsearch features and ecosystem

By Pavel Alexeev. A tour of the Elasticsearch ecosystem: its components and how they all work together, with real dashboard examples from the ELK deployment behind lesegais.ru/portal.

Internship msc cs

By Pooja Bhojwani. The document describes a travel agency management system that offers the following key features:

- Integrated travel agents located directly in companies to make reservations and issue tickets.

- An electronic booking system that is IATA approved along with state-of-the-art technology.

- Dedicated and bilingual staff that provide personalized service and account management for corporate travel needs.

- One-stop shopping for all travel arrangements along with corporate agreements with airlines.

Top 10 dev ops tools (1)

By yalini97. Get DevOps training in Chennai with real-time experts at Besant Technologies, OMR. We believe that learning DevOps through both practice and theory is the easiest way to understand the technology quickly. We designed this DevOps course to run from the basic level to the latest advanced level.

http://www.traininginsholinganallur.in/devops-training-in-chennai.html

What is Spinnaker? Spinnaker tutorial

By jeetendra mandal. Spinnaker is an open source continuous delivery platform that provides automated deployment capabilities for releasing software changes. It is designed to increase release velocity and reduce risk associated with updating applications. Spinnaker uses a microservices architecture and provides features like multicloud deployments, automated pipelines, deployment verification, and flexibility and extensibility through customization and extensions. It works by managing applications and their deployments through concepts like pipelines, stages, server groups, and deployment strategies.

Kubernetes Infra 2.0

By Deepak Sood. The document outlines an infrastructure 2.0 approach based on cloud native technologies. It advocates for infrastructure as code, test-driven deployments, open source tools, and seamless developer workflows. The approach uses microservices, containers, service meshes, and orchestration with Kubernetes. It recommends tools like Terraform, Jenkins, Kubernetes, Istio, Prometheus, Elasticsearch, and Airflow for infrastructure provisioning, CI/CD, container management, service mesh, monitoring, logging, and job scheduling. It also discusses Docker, data pipelines, and processes for onboarding new applications.

Triangle Devops Meetup 10/2015

By aspyker. A Triangle DevOps Meetup talk covering Netflix open source, cloud architecture, and what Andrew did in his first year as a senior software engineer in the cloud platform group.

Splunk metrics via telegraf

By Ashvin Pandey. The document discusses a Splunk user group meeting about using Telegraf to monitor metrics. The agenda includes an introduction to Telegraf architecture, how to connect Telegraf with Splunk, deploying Telegraf, and analyzing metrics with Splunk. Attendees are encouraged to join the Slack channel and ask questions during the session, and the slides and recording will later be posted online.

Infrastructure as Code & its Impact on DevOps

By Bahaa Al Zubaidi. Embrace Infrastructure as Code (IaC) in DevOps for faster, more secure, and more scalable deployments. Boost collaboration and efficiency while automating infrastructure with tools like Ansible, CloudFormation, and Azure Resource Manager.

Read more at https://bahaaalzubaidi.com/infrastructure-as-code-devops/

A Big Data Lake Based on Spark for BBVA Bank-(Oscar Mendez, STRATIO)

By Spark Summit. This document describes BBVA's implementation of a big data lake using Apache Spark for log collection, storage, and analytics. It discusses:

1) Using Syslog-ng for log collection from over 2,000 applications and devices, distributing logs to Kafka.

2) Storing normalized logs in HDFS and performing analytics using Spark, with outputs to analytics, compliance, and indexing systems.

3) Choosing Spark because it allows interactive, batch, and stream processing with one system using RDDs, SQL, streaming, and machine learning.

CV_RishabhDixit

By Rishabh Dixit. Rishabh Dixit is a DevOps Engineer with over 2 years of experience in build engineering, build management, software configuration management, and process automation. He has skills in technologies like Git, Jira, Jenkins, Apache, Tomcat, PostgreSQL, RabbitMQ, Elasticsearch, and Linux. His responsibilities include setting up continuous integration pipelines, managing Jenkins nodes, PostgreSQL administration, Tomcat configuration, load balancing with Nginx and HAProxy, application monitoring with New Relic and PagerDuty, and release support. He is looking to leverage his skills and experience in a challenging DevOps role.

the tooling of a modern and agile oracle dba

By BertrandDrouvot. This presentation discusses tools for automating database operations and monitoring databases. It introduces Ansible for automation, and Telegraf, InfluxDB, Grafana, Logstash, Elasticsearch, Kibana, and Kapacitor for collecting, storing, and visualizing metrics, logs, and alerts. The presentation includes a live demo of using these tools to provision an Oracle database, create performance dashboards, generate alerts, and view logs and audits. The goal is to provide a modern, agile approach to database administration.

2015 03-16-elk at-bsides

By Jeremy Cohoe. Jeremy Cohoe presented on using the ELK (Elasticsearch, Logstash, Kibana) stack for log analysis. He began with an overview of ELK and its components: Logstash parses logs, Elasticsearch is the database, and Kibana provides the GUI. Cohoe then demonstrated using ELK to monitor 802.11 client probes with a software defined radio and to parse Flex pager signals. Finally, he discussed implementing ELK in production for a Linux central syslog system, including scaling out with Redis, common plugins, and cluster monitoring tools.

Devops

By JyothirmaiG4. DevOps is an approach that aims to increase an organization's ability to deliver applications and services at high velocity by combining cultural philosophies, practices, and tools that align development and operations teams. Under a DevOps model, development and operations teams work closely together across the entire application lifecycle, from development through deployment to operations. They use automation, monitoring, and collaboration tools to accelerate delivery while improving quality and security. Popular DevOps tools include Git, Jenkins, Puppet, Chef, Ansible, Docker, and Nagios.

Deep Dive Into Elasticsearch: Establish A Powerful Log Analysis System With E...

By Tyler Nguyen. This talk takes a deep look at the Elastic Stack, the next evolution of the ELK Stack: how to build a powerful log analysis system with it, and how a self-managed cluster compares in specifications with the Elastic Stack offered as SaaS by cloud providers.

Graylog

By Knoldus Inc. Graylog is a leading centralized log management solution for capturing, storing, and enabling real-time analysis of terabytes of machine data.

Co 4, session 2, aws analytics services

By m vaishnavi. AWS offers several analytics services to help process and provide insights from data. These include Amazon Athena for interactive querying of data stored in S3 using SQL, Amazon EMR for processing large amounts of data using Hadoop and other open source tools, Amazon CloudSearch for setting up a search solution easily, and Amazon Kinesis for collecting, processing, and analyzing real-time data. Other services are Amazon Redshift for data warehousing, Amazon QuickSight for interactive dashboards, AWS Glue for ETL jobs, and AWS Lake Formation for securing data lakes.

Netflix Cloud Architecture and Open Source

By aspyker. A presentation on the Netflix cloud architecture and NetflixOSS open source, given at the All Things Open 2015 conference in Raleigh on 2015/10/19.

Talkbits service architecture and deployment

By Open-IT. The document discusses the architecture and deployment of the Talkbits service. It describes how the service is packaged as a single executable JAR file along with installation and init scripts. It also covers how the service implements logging through SLF4J and Logback and sends logs to Loggly for aggregation. Metrics are collected via CodaHale and exposed via Jolokia for monitoring. Fabric is used for environment provisioning and deployment across tagged Amazon instances. Monitoring is handled by Datadog, which collects various metrics through its agent.

Relay: The Next Leg, Eric Sorenson, Puppet

By Puppet. This document discusses Relay, a new project that aims to provide a complete solution for continuously deploying applications and infrastructure. Relay will orchestrate actions across existing tools and services by listening to cloud events and triggers from services. It will use a taxonomy of reusable, modular workflow steps that can be combined to build workflows as code in YAML. The document provides examples of step types like trigger steps, action steps, and query steps. It also outlines Relay's integration ecosystem and upcoming release timeline.

Stay productive_while_slicing_up_the_monolith

By Markus Eisele. The document discusses strategies for transitioning from monolithic architectures to microservice architectures. It outlines some of the challenges of maintaining large monolithic applications and reasons for modernizing, such as handling more data and needing faster changes. It then covers microservice design principles and best practices, including service decomposition, distributed systems strategies, and reactive design. Finally, it introduces Lagom as a framework for building reactive microservices on the JVM and outlines its key components and development environment.

Ananth_Ravishankar

By ananth R. Ananth Ravishankar has over 9 years of experience developing web and distributed applications using Java/J2EE technologies. He has expertise in all phases of the software development lifecycle and experience working with agile methodologies. Ananth has worked on projects in various domains including retail, investment banking, and media research. He is proficient in technologies such as Java, JSP, Servlets, Struts, Hadoop, Hive, and relational databases.

Splunk

By Deep Mehta. Splunk is a time-series data platform that handles the three V's of data (volume, velocity, and variety) very well. It collects, indexes, and allows searching and analysis of data. Splunk can collect data from files, directories, network ports, programs/scripts, and databases. It breaks data down into searchable events and builds a high-performance index. This allows users to search, manipulate, and visualize data in reports, charts, and dashboards. Splunk can analyze structured, unstructured, and multistructured data from various sources like logs, networks, clicks, and more.
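The idea of breaking data into searchable events and indexing them can be illustrated with an inverted index. The following is a conceptual Python sketch only, not Splunk's implementation: it assumes one event per non-empty line and simple lowercase word tokenization, and the functions split_events, build_index, and search are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def split_events(raw: str) -> List[str]:
    """Break raw machine data into events; this sketch assumes one event per non-empty line."""
    return [line.strip() for line in raw.splitlines() if line.strip()]


def build_index(events: List[str]) -> Dict[str, Set[int]]:
    """Build an inverted index: each lowercase term maps to the ids of events containing it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for event_id, event in enumerate(events):
        for term in re.findall(r"\w+", event.lower()):
            index[term].add(event_id)
    return index


def search(index: Dict[str, Set[int]], events: List[str], *terms: str) -> List[str]:
    """Return events that contain every term (AND semantics), in their original order."""
    if not terms:
        return list(events)
    # Look up each term's posting set; an unknown term yields an empty set.
    postings = [index.get(term.lower(), set()) for term in terms]
    matching = set.intersection(*postings)
    return [events[i] for i in sorted(matching)]


if __name__ == "__main__":
    raw = (
        "2023-10-10 ERROR db timeout\n"
        "2023-10-10 INFO login ok\n"
        "\n"
        "2023-10-11 ERROR disk full\n"
    )
    events = split_events(raw)
    index = build_index(events)
    print(search(index, events, "error", "disk"))  # ['2023-10-11 ERROR disk full']
```

Because a lookup only touches the posting sets for the query terms, search cost depends on how many events match rather than on scanning every stored line, which is the general reason indexed search scales to large volumes of machine data.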

Python Conditional_Statements_and_Functions

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified)

Web programming using python frameworks.

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified)


More from Puneet Kumar Bhatia (MBA, ITIL V3 Certified) (20)

Azure Fundamentals (AZ-900) presentation.

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). Azure Fundamentals (AZ-900).

Azure - Basic concepts and overview.pptx

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). Azure basic concepts.

Cloud Computing basics - an overview.pptx

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). Cloud computing overview.

Ansible as configuration management tool for devops

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). Ansible.

Introduction to Monitoring Tools for DevOps

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). Monitoring tools.

Introduction to Devops and its applications

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). DevOps is a solution that brings together development and operations teams to address challenges in the traditional approach, where developers want fast changes while operations values stability. DevOps provides benefits like accelerated time-to-market through continuous integration, delivery, and deployment across the application lifecycle. It also allows for technological innovation, business agility, and infrastructure flexibility. Major companies like Apple, Amazon, and eBay introduced DevOps teams to reduce release cycles from months to weeks. DevOps is a journey that requires new skills to be fully realized.

Container Orchestration using kubernetes

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). This document provides an overview of Kubernetes concepts including:

- Kubernetes architecture with masters running control plane components like the API server, scheduler, and controller manager, and nodes running pods and node agents.

- Key Kubernetes objects like pods, services, deployments, statefulsets, jobs and cronjobs that define and manage workloads.

- Networking concepts like services for service discovery, and ingress for external access.

- Storage with volumes, persistentvolumes, persistentvolumeclaims and storageclasses.

- Configuration with configmaps and secrets.

- Authentication and authorization using roles, rolebindings and serviceaccounts.

It also discusses Kubernetes installation with minikube, and common networking and deployment
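As a concrete illustration of the Deployment object listed above, here is a minimal Python sketch that builds a Deployment manifest as a dictionary and prints it as JSON, which kubectl apply -f accepts as well as YAML. The deployment_manifest helper, the name web, and the nginx:1.25 image are hypothetical placeholders.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment; the selector must match the Pod template labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,  # desired number of Pods kept running
            "selector": {"matchLabels": dict(labels)},  # which Pods this Deployment manages
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Save the output as web.json and apply it with: kubectl apply -f web.json
    print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```

Generating the manifest in code is only one option; most teams keep such manifests as YAML files under version control, but the required fields are the same.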

Containerization using docker and its applications

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). This document discusses Docker, containers, and how Docker addresses challenges with complex application deployment. It provides examples of how Docker has helped companies reduce deployment times and improve infrastructure utilization. Key points covered include:

- Docker provides a platform to build, ship and run distributed applications using containers.

- Containers allow for decoupled services, fast iterative development, and scaling applications across multiple environments like development, testing, and production.

- Docker addresses the complexity of deploying applications with different dependencies and targets by using a standardized "container system" analogous to intermodal shipping containers.

- Companies using Docker have seen benefits like reducing deployment times from 9 months to 15 minutes and improving infrastructure utilization.

Containerization using docker and its applications

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). This document discusses Docker, containers, and containerization. It begins by explaining why containers and Docker have become popular, noting that modern applications are increasingly decoupled services that require fast, iterative development and deployment to multiple environments. It then discusses how deployment has become complex with diverse stacks, frameworks, databases, and targets. Docker addresses this problem by providing a standardized way to package applications into containers that are portable and can run anywhere. The document provides examples of results organizations have seen from using Docker, such as significantly reduced deployment times and increased infrastructure efficiency. It also covers Docker concepts like images, containers, the Dockerfile, and Docker Compose.

Java Microservices_64 Hours_Day wise plan (002).pdf

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). This 16-day program teaches Java developers how to build microservices using Spring Boot. Participants will learn microservice architecture and design patterns, how to create microservices from scratch using Spring Boot, how to secure microservices, and how to deploy microservices on Docker containers to the cloud. Hands-on labs and exercises are included to help developers build RESTful APIs, integrate SQL and NoSQL databases, implement inter-microservice communication, and deploy a sample mini-project.

Java Microservices_64 Hours_Day wise plan (002).pdf

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). This 16-day program teaches Java developers how to build microservices using Spring Boot. Participants will learn microservice architecture and design patterns, how to create microservices from scratch using Spring Boot, how to secure microservices, and how to deploy microservices on Docker containers to the cloud. Hands-on labs are included to build REST APIs, integrate SQL and NoSQL databases, implement inter-microservice communication, and deploy a sample project using microservices techniques.

Aws interview questions

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). AWS is a cloud computing platform that provides on-demand computing resources and services. The key components of AWS include Route 53 (DNS), S3 (storage), EC2 (computing), EBS (storage volumes), and CloudWatch (monitoring). S3 provides scalable object storage, while EC2 allows users to launch virtual servers called instances. An AMI is a template used to launch EC2 instances that defines the OS, apps, and server configuration. Security best practices for EC2 include using IAM for access control and opening only the required ports using security groups.

Changing paradigm in job market

By Puneet Kumar Bhatia (MBA, ITIL V3 Certified). The document discusses how the future job market is changing due to new technologies. It notes that while technology can increase efficiency, many workers may become unemployed unless they update their skills. It outlines several trends that will impact work, such as the rise of contract work and the importance of digital skills and analytics. Critical skills gaps are identified in both technical and management areas. Emerging in-demand jobs are listed, like VR/AR architects and data scientists. The conclusion emphasizes that workers must enhance their skills through continuous learning to adapt to an automated future job market.

Kaizen08

Kaizen08Puneet Kumar Bhatia (MBA, ITIL V3 Certified) Kaizen is a Japanese philosophy of continuous improvement involving everyone in an organization. It is based on the idea that all processes can always be improved. Key aspects of Kaizen include focusing on processes, not individuals, using tools like visual controls and charts to identify problems and track improvements, and emphasizing small, incremental changes. Kaizen was influential in Japan's manufacturing success and aims to continuously challenge the status quo through team-based problem solving.

Writing first-hudson-plugin

Writing first-hudson-pluginPuneet Kumar Bhatia (MBA, ITIL V3 Certified) The document provides instructions for a hands-on lab on creating a Hudson plugin. The lab includes exercises on:

1) Creating a skeleton Hudson plugin project using Maven.

2) Building and running the plugin project in NetBeans to see it installed and functioning on a test Hudson server.

3) Exploring how the plugin extends the "Builder" extension point to add a custom "HelloBuilder" that prints a message.

Ad

Recently uploaded (20)

Buckeye Dreamin' 2023: De-fogging Debug Logs

Buckeye Dreamin' 2023: De-fogging Debug LogsLynda Kane Slide Deck from Buckeye Dreamin' 2023: De-fogging Debug Logs which went over how to capture and read Salesforce Debug Logs

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value- driven proprietary service offering here.

AI and Data Privacy in 2025: Global Trends

AI and Data Privacy in 2025: Global TrendsInData Labs In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in the today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Automation Hour 1/28/2022: Capture User Feedback from Anywhere

Automation Hour 1/28/2022: Capture User Feedback from AnywhereLynda Kane Slide Deck from Automation Hour 1/28/2022 presentation Capture User Feedback from Anywhere presenting setting up a Custom Object and Flow to collection User Feedback in Dynamic Pages and schedule a report to act on that feedback regularly.

Mobile App Development Company in Saudi Arabia

Mobile App Development Company in Saudi ArabiaSteve Jonas EmizenTech is a globally recognized software development company, proudly serving businesses since 2013. With over 11+ years of industry experience and a team of 200+ skilled professionals, we have successfully delivered 1200+ projects across various sectors. As a leading Mobile App Development Company In Saudi Arabia we offer end-to-end solutions for iOS, Android, and cross-platform applications. Our apps are known for their user-friendly interfaces, scalability, high performance, and strong security features. We tailor each mobile application to meet the unique needs of different industries, ensuring a seamless user experience. EmizenTech is committed to turning your vision into a powerful digital product that drives growth, innovation, and long-term success in the competitive mobile landscape of Saudi Arabia.

Semantic Cultivators : The Critical Future Role to Enable AI

Semantic Cultivators : The Critical Future Role to Enable AIartmondano By 2026, AI agents will consume 10x more enterprise data than humans, but with none of the contextual understanding that prevents catastrophic misinterpretations.

Automation Dreamin': Capture User Feedback From Anywhere

Automation Dreamin': Capture User Feedback From AnywhereLynda Kane Slide Deck from Automation Dreamin' 2022 presentation Capture User Feedback from Anywhere

Buckeye Dreamin 2024: Assessing and Resolving Technical Debt

Buckeye Dreamin 2024: Assessing and Resolving Technical DebtLynda Kane Slide Deck from Buckeye Dreamin' 2024 presentation Assessing and Resolving Technical Debt. Focused on identifying technical debt in Salesforce and working towards resolving it.

Dev Dives: Automate and orchestrate your processes with UiPath Maestro

Dev Dives: Automate and orchestrate your processes with UiPath MaestroUiPathCommunity This session is designed to equip developers with the skills needed to build mission-critical, end-to-end processes that seamlessly orchestrate agents, people, and robots.

📕 Here's what you can expect:

- Modeling: Build end-to-end processes using BPMN.

- Implementing: Integrate agentic tasks, RPA, APIs, and advanced decisioning into processes.

- Operating: Control process instances with rewind, replay, pause, and stop functions.

- Monitoring: Use dashboards and embedded analytics for real-time insights into process instances.

This webinar is a must-attend for developers looking to enhance their agentic automation skills and orchestrate robust, mission-critical processes.

👨🏫 Speaker:

Andrei Vintila, Principal Product Manager @UiPath

This session streamed live on April 29, 2025, 16:00 CET.

Check out all our upcoming Dev Dives sessions at https://community.uipath.com/dev-dives-automation-developer-2025/.

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptx

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptxJustin Reock Building 10x Organizations with Modern Productivity Metrics

10x developers may be a myth, but 10x organizations are very real, as proven by the influential study performed in the 1980s, ‘The Coding War Games.’

Right now, here in early 2025, we seem to be experiencing YAPP (Yet Another Productivity Philosophy), and that philosophy is converging on developer experience. It seems that with every new method we invent for the delivery of products, whether physical or virtual, we reinvent productivity philosophies to go alongside them.

But which of these approaches actually work? DORA? SPACE? DevEx? What should we invest in and create urgency behind today, so that we don’t find ourselves having the same discussion again in a decade?

Role of Data Annotation Services in AI-Powered Manufacturing

Role of Data Annotation Services in AI-Powered ManufacturingAndrew Leo From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...TrustArc Most consumers believe they’re making informed decisions about their personal data—adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner 𝙄𝙨 𝘼𝙄 𝙟𝙪𝙨𝙩 𝙝𝙮𝙥𝙚? 𝙊𝙧 𝙞𝙨 𝙞𝙩 𝙩𝙝𝙚 𝙜𝙖𝙢𝙚 𝙘𝙝𝙖𝙣𝙜𝙚𝙧 𝙮𝙤𝙪𝙧 𝙗𝙪𝙨𝙞𝙣𝙚𝙨𝙨 𝙣𝙚𝙚𝙙𝙨?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know 𝗵𝗼𝘄.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

tecnologias de las primeras civilizaciones.pdf

tecnologias de las primeras civilizaciones.pdffjgm517 descaripcion detallada del avance de las tecnologias en mesopotamia, egipto, roma y grecia.

Hands On: Create a Lightning Aura Component with force:RecordData

Hands On: Create a Lightning Aura Component with force:RecordDataLynda Kane Slide Deck from the 3/26/2020 virtual meeting of the Cleveland Developer Group presentation on creating a Lightning Aura Component using force:RecordData.

2025-05-Q4-2024-Investor-Presentation.pptx

2025-05-Q4-2024-Investor-Presentation.pptxSamuele Fogagnolo Cloudflare Q4 Financial Results Presentation

Splunk Security Update | Public Sector Summit Germany 2025

Splunk Security Update | Public Sector Summit Germany 2025Splunk Splunk Security Update

Sprecher: Marcel Tanuatmadja

Ad

Introduction to Monitoring Tools for DevOps

- 1. Continuous Monitoring Continuous Monitoring is the ability of an organization to detect, report, respond to, contain, and mitigate attacks that occur in its infrastructure.

- 2. Types of Monitoring Depending on how complex your monitoring needs are, many different services are available to help you monitor your applications at various levels. Some commonly used types of monitoring are: • Real-Time Monitoring: perform real-time, continuous monitoring of business processes and data analytics, for example Splunk. • Application Performance Monitoring: fully manage and monitor the performance of an application, for example AppDynamics and Scout. • Infrastructure Monitoring: SolarWinds, Nagios, Zabbix. • Log Monitoring: Sumo Logic, Splunk, ELK Stack.

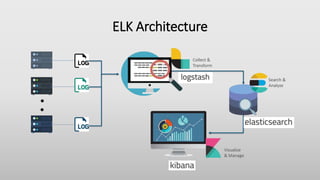

- 3. ELK Stack

- 4. ELK Stack • Popularly known as the ELK Stack and recently rebranded as the Elastic Stack, it is a powerful collection of three open source tools: Elasticsearch, Logstash, and Kibana. • These three products are most commonly used together for log analysis in different IT environments. With the ELK Stack you can perform centralized logging, which helps identify problems with web servers or applications. It lets you search all the logs in a single place and identify issues spanning multiple servers by correlating their logs within a specific time frame.
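
To make "correlating logs across servers within a time frame" concrete, here is a minimal Python sketch of the same idea done in memory, without the ELK Stack. The server names, timestamps, and messages are hypothetical; in a real deployment Elasticsearch performs this kind of time-window search over the indexed logs.

```python
from datetime import datetime, timedelta
from heapq import merge

# Hypothetical log entries from two web servers: (timestamp, host, message),
# each list already sorted by timestamp.
WEB1 = [
    (datetime(2024, 1, 1, 10, 0, 5), "web1", "GET /checkout 200"),
    (datetime(2024, 1, 1, 10, 2, 30), "web1", "GET /checkout 502"),
]
WEB2 = [
    (datetime(2024, 1, 1, 10, 2, 31), "web2", "upstream timeout"),
    (datetime(2024, 1, 1, 10, 9, 0), "web2", "GET /health 200"),
]


def correlate(streams, center, window):
    """Return entries from all streams within +/- window of center, in time order."""
    lo, hi = center - window, center + window
    # heapq.merge lazily interleaves already-sorted inputs into one sorted stream.
    return [entry for entry in merge(*streams) if lo <= entry[0] <= hi]


if __name__ == "__main__":
    incident = datetime(2024, 1, 1, 10, 2, 30)
    for ts, host, message in correlate([WEB1, WEB2], incident, timedelta(seconds=30)):
        print(ts.isoformat(), host, message)
```

Looking 30 seconds around the 502 on web1 surfaces the upstream timeout logged by web2 one second later, which is exactly the cross-server view centralized logging is meant to give.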

- 6. Logstash Logstash is the data collection pipeline tool. It is the first component of the ELK Stack: it collects data inputs and feeds them to Elasticsearch. It can collect various types of data from different sources at the same time and make them available immediately for further use.
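
As a rough illustration of the input, filter, and output stages that a Logstash pipeline is built from, the sketch below reads raw lines, parses them into structured fields, and serializes each event as JSON. It is a conceptual analogue in plain Python, not Logstash code: the regular expression stands in for a grok pattern for the Apache common log format, and the `_parsefailure` tag is a hypothetical name.

```python
import json
import re

# Stand-in for a grok pattern: Apache common log format (hypothetical, simplified).
COMMON_LOG = re.compile(
    r'(?P<clientip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) \S+" (?P<response>\d{3}) (?P<bytes>\d+|-)'
)


def inputs(lines):
    """Input stage: one raw event per line."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def filters(events):
    """Filter stage: parse the raw message into fields, tag lines that do not match."""
    for event in events:
        match = COMMON_LOG.match(event["message"])
        if match:
            event.update(match.groupdict())
            event["response"] = int(event["response"])
        else:
            event["tags"] = ["_parsefailure"]
        yield event


def outputs(events):
    """Output stage: serialize each event as a JSON document, as an indexer would receive it."""
    return [json.dumps(event, sort_keys=True) for event in events]


if __name__ == "__main__":
    raw = ['127.0.0.1 - - [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326']
    for doc in outputs(filters(inputs(raw))):
        print(doc)
```

Keeping the stages as generators mirrors the streaming nature of a pipeline: each event flows through all stages before the next one is read.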

- 7. Elasticsearch Elasticsearch is a NoSQL database based on the Lucene search engine, and it exposes RESTful APIs that use JSON as the data exchange format. It is a highly flexible, distributed search and analytics engine. It offers simple deployment, high reliability, and easy management, and it scales horizontally. It supports advanced queries for detailed analysis and stores all the data centrally so that documents can be searched quickly.
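
Because Elasticsearch is driven through a REST API with JSON bodies, a search can be issued with nothing but the Python standard library. This sketch assumes a local instance at http://localhost:9200 with security disabled, indices following the `logstash-*` naming pattern, and documents with `message` and `@timestamp` fields as Logstash typically produces; adjust these for your setup.

```python
import json
import urllib.request


def build_search(text, start, end, size=50):
    """Query DSL body: full-text match on `message`, filtered to an @timestamp range."""
    return {
        "size": size,
        "query": {
            "bool": {
                "must": [{"match": {"message": text}}],
                # Filter clauses do not affect scoring and can be cached by Elasticsearch.
                "filter": [{"range": {"@timestamp": {"gte": start, "lte": end}}}],
            }
        },
        "sort": [{"@timestamp": {"order": "asc"}}],
    }


def search(base_url, index, body, timeout=10):
    """POST the body to <base_url>/<index>/_search and return the parsed JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Usage against a running instance: `search("http://localhost:9200", "logstash-*", build_search("error", "now-15m", "now"))["hits"]["hits"]` returns the matching documents from the last 15 minutes, using Elasticsearch date math for the range.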

- 8. Kibana Kibana is a data visualization tool. It is used to visualize Elasticsearch documents and gives developers immediate insight into them. Kibana dashboards provide interactive diagrams, geospatial data, timelines, and graphs to visualize complex queries run against Elasticsearch. With Kibana you can create and save custom graphs for your specific needs.
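
A typical Kibana timeline chart shows how many documents fall into each time interval, and those counts come from Elasticsearch aggregations. The sketch below computes the same per-interval counts in memory and also shows the kind of `date_histogram` aggregation body involved, assuming Elasticsearch 7.2 or later (where `fixed_interval` is available). The aggregation name, field, and 5-minute interval are illustrative choices.

```python
from collections import Counter
from datetime import datetime

# Kind of aggregation request behind a time-series chart
# (Elasticsearch 7.2+ syntax; "events_over_time" is an arbitrary name).
AGG_BODY = {
    "size": 0,
    "aggs": {
        "events_over_time": {
            "date_histogram": {"field": "@timestamp", "fixed_interval": "5m"}
        }
    },
}


def date_histogram(timestamps, minutes):
    """Count timestamps per bucket of `minutes` (must divide 60), sorted by bucket start."""
    if minutes <= 0 or 60 % minutes:
        raise ValueError("minutes must be a positive divisor of 60")
    counts = Counter()
    for ts in timestamps:
        # Floor each timestamp to the start of its bucket within the hour.
        start = ts.replace(minute=ts.minute - ts.minute % minutes, second=0, microsecond=0)
        counts[start] += 1
    return sorted(counts.items())


if __name__ == "__main__":
    events = [datetime(2024, 1, 1, 10, 1), datetime(2024, 1, 1, 10, 4, 59),
              datetime(2024, 1, 1, 10, 7)]
    for start, count in date_histogram(events, 5):
        print(start.strftime("%H:%M"), "#" * count)
```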

- 9. ELK Configuration… Elasticsearch and Logstash run on the JVM (Kibana is a Node.js application), so before installing them, verify that the JDK is properly configured: check that a standard JDK 1.8 installation is present and that JAVA_HOME and PATH are set. Elasticsearch • Download the latest version of Elasticsearch from the download page and unzip it into any folder. • Run bin\elasticsearch.bat from a command prompt. • By default, it starts at http://localhost:9200
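
Once Elasticsearch is running, a GET request to its root URL returns a small JSON document describing the node, including `cluster_name` and `version.number`. A minimal check, assuming the default http://localhost:9200 with security disabled (recent releases enable TLS and authentication by default, which this sketch does not handle):

```python
import json
import urllib.request


def fetch_info(base_url="http://localhost:9200", timeout=5):
    """GET the root endpoint and return the decoded JSON description of the node."""
    with urllib.request.urlopen(base_url + "/", timeout=timeout) as response:
        return json.load(response)


def describe(info):
    """Summarize the root-endpoint response as 'cluster_name (version)'."""
    return f'{info["cluster_name"]} ({info["version"]["number"]})'


if __name__ == "__main__":
    try:
        print("Elasticsearch is up:", describe(fetch_info()))
    except (OSError, ValueError, KeyError) as exc:  # refused, timed out, or unexpected reply
        print("Elasticsearch is not reachable:", exc)
```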

- 10. ELK Configuration… Kibana • Download the latest distribution from the download page and unzip it into any folder. • Open config/kibana.yml in an editor and set elasticsearch.url to point at your Elasticsearch instance. Since we use the local instance, just uncomment elasticsearch.url: "http://localhost:9200" (in Kibana 7.0 and later this setting is named elasticsearch.hosts). • Run bin\kibana.bat from a command prompt. • Once started, Kibana listens on its default port, 5601, and the Kibana UI is available at http://localhost:5601. Logstash • Download the latest distribution from the download page and unzip it into any folder. • Create a logstash.conf file following the configuration instructions; we will return to the exact configuration during the demo. • Run bin/logstash -f logstash.conf to start Logstash.
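
After starting all three tools, a quick way to confirm each one is listening is a plain TCP connection test. Ports 9200 (Elasticsearch) and 5601 (Kibana) come from the slides; 9600, the default port of Logstash's monitoring API, is an addition not covered here. A successful connection shows only that something accepted it, not that the service is healthy.

```python
import socket

# Default ports; adjust if your configuration changes them.
SERVICES = {"elasticsearch": 9200, "kibana": 5601, "logstash-api": 9600}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        state = "up" if is_listening("localhost", port) else "down"
        print(f"{name:15} {port:5} {state}")
```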

- 12. Nagios

- 13. What is Nagios? • Nagios is used for continuous monitoring of systems, applications, services, and business processes in a DevOps culture. In the event of a failure, Nagios can alert technical staff to the problem, allowing them to begin remediation before outages affect business processes, end users, or customers. With Nagios, you don’t have to explain why an unseen infrastructure outage affected your organization’s bottom line.

- 14. Nagios Architecture • Nagios is built on a server/agent architecture. • Usually, a Nagios server runs on one host on the network, and plugins interact with the local host and all the remote hosts that need to be monitored. • The plugins send their results back to the scheduler, and the results are displayed in a GUI.
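
Nagios plugins follow a simple contract: a plugin is an executable that prints a line of status text and reports the result through its exit code (0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN). The sketch below is a hypothetical disk-space plugin written against that contract; the 20%/10% thresholds and the checked path are assumptions for illustration, and production setups normally use the bundled check_disk plugin instead.

```python
import shutil

# Exit codes defined by the Nagios plugin API.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def evaluate(free_pct, warn_pct, crit_pct):
    """Map a free-space percentage to (exit code, status line); less free space is worse."""
    if free_pct < crit_pct:
        state = CRITICAL
    elif free_pct < warn_pct:
        state = WARNING
    else:
        state = OK
    return state, f"DISK {LABELS[state]} - {free_pct:.1f}% free"


def main(path="/", warn_pct=20.0, crit_pct=10.0):
    """Check one filesystem, print the status line, and return the plugin exit code."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        print(f"DISK UNKNOWN - {exc}")
        return UNKNOWN
    state, line = evaluate(100.0 * usage.free / usage.total, warn_pct, crit_pct)
    print(line)  # this text appears as the service's status information in Nagios
    return state
```

To run it as a plugin (for example as a hypothetical check_disk_free.py), add `import sys` and end the script with `if __name__ == "__main__": sys.exit(main())` so that Nagios receives the exit code.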

- 15. Nagios Remote Plugin Executor (NRPE) • The check_nrpe plugin resides on the local monitoring machine. • The NRPE daemon runs on the remote Linux/Unix machine. • The monitoring host and the remote host communicate over an SSL (Secure Sockets Layer) connection, as shown in the diagram above.
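
On the monitoring server, check_nrpe is called with the remote host and a command name (for example `check_nrpe -H <remote-host> -c check_load`), and the NRPE daemon runs only commands pre-defined as `command[name]=...` entries in its nrpe.cfg. The sketch below models that lookup; the config excerpt and plugin paths are hypothetical, and it does not implement the NRPE network protocol.

```python
import shlex

# Hypothetical excerpt of nrpe.cfg on the remote host.
SAMPLE_NRPE_CFG = """
# commands the monitoring server may request with check_nrpe -c <name>
command[check_users]=/usr/local/nagios/libexec/check_users -w 5 -c 10
command[check_load]=/usr/local/nagios/libexec/check_load -w 15,10,5 -c 30,25,20
"""


def parse_commands(text):
    """Map each command[<name>]=<command line> entry to its argument list."""
    commands = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("command[") and "]=" in line:
            name, cmdline = line[len("command["):].split("]=", 1)
            commands[name] = shlex.split(cmdline)
    return commands


def resolve(commands, requested):
    """Only names defined in the config may be run; anything else is rejected."""
    if requested not in commands:
        raise KeyError(f"command '{requested}' is not defined")
    return commands[requested]


if __name__ == "__main__":
    print(resolve(parse_commands(SAMPLE_NRPE_CFG), "check_load"))
```

The allow-list design is the point: the monitoring server can name a check, but it cannot send arbitrary command lines to the remote machine.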

- 16. Nagios configuration files • The main configuration file is “nagios.cfg” in the etc directory. It loads the object definition files through cfg_file directives:
    cfg_file=contactgroups.cfg
    cfg_file=contacts.cfg
    cfg_file=dependencies.cfg
    cfg_file=escalations.cfg
    cfg_file=hostgroups.cfg
    cfg_file=hosts.cfg
    cfg_file=services.cfg
    cfg_file=timeperiods.cfg
  • These directives work much like #include statements, allowing you to split the configuration across several files.
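
The cfg_file mechanism is easy to inspect programmatically. This sketch lists the object files a main nagios.cfg pulls in. Resolving relative paths against the main file's directory is an assumption of the sketch, as is the sample location `/usr/local/nagios/etc` (common for source installs); it also ignores the related cfg_dir directive.

```python
import posixpath

# Hypothetical excerpt of a main nagios.cfg.
SAMPLE_MAIN_CFG = """\
# Object configuration files
cfg_file=/usr/local/nagios/etc/objects/commands.cfg
cfg_file=hosts.cfg
#cfg_file=escalations.cfg
log_file=/usr/local/nagios/var/nagios.log
"""


def included_files(main_cfg_text, base_dir):
    """Return the files named by cfg_file= lines, resolving relative paths against base_dir."""
    files = []
    for raw in main_cfg_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and commented-out directives are ignored
        key, sep, value = line.partition("=")
        if sep and key.strip() == "cfg_file":
            files.append(posixpath.normpath(posixpath.join(base_dir, value.strip())))
    return files


if __name__ == "__main__":
    for path in included_files(SAMPLE_MAIN_CFG, "/usr/local/nagios/etc"):
        print(path)
```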

- 17. Hosts.cfg
    define host{
        use                     generic-host          ; Name of host template
        host_name               server1               ; name of computer
        alias                   server1.localdomain   ; canonical name
        address                 10.0.0.1              ; ip address
        check_command           check-host-alive      ; defined in commands.cfg
        max_check_attempts      10                    ; used when check fails
        notification_interval   60                    ; how long between notification events
        notification_period     24x7                  ; defined in timeperiods.cfg
        notification_options    d,u,r
    }
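
Object definitions like the host above are `directive value` pairs inside `define <type>{ ... }` blocks, with `;` starting a comment. A minimal parser for that layout is below; it is enough for simple files like these but does not cover everything Nagios accepts (for example escaped semicolons, or resolving template inheritance via `use`).

```python
import re

# Matches "define <type>{ ... }" blocks; assumes values never contain '}'.
DEFINE_BLOCK = re.compile(r"define\s+(\w+)\s*\{(.*?)\}", re.DOTALL)

SAMPLE = """
define host{
    use                 generic-host    ; Name of host template
    host_name           server1         ; name of computer
    address             10.0.0.1        ; ip address
    max_check_attempts  10
}
"""


def parse_objects(text):
    """Return a list of (object_type, {directive: value}) pairs."""
    objects = []
    for obj_type, body in DEFINE_BLOCK.findall(text):
        directives = {}
        for raw in body.splitlines():
            line = raw.split(";", 1)[0].strip()  # ';' starts an inline comment
            if not line or line.startswith("#"):
                continue
            parts = line.split(None, 1)  # directive name, then the rest of the line
            directives[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
        objects.append((obj_type, directives))
    return objects


if __name__ == "__main__":
    for obj_type, fields in parse_objects(SAMPLE):
        print(obj_type, fields["host_name"], fields["address"])
```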

- 18. Services.cfg
    define service{
        use                     generic-service   ; template
        host_name               server1           ; defined in hosts.cfg
        service_description     PING
        is_volatile             0
        check_period            24x7
        max_check_attempts      3
        normal_check_interval   5
        retry_check_interval    1
        contact_groups          peoplewhocare     ; defined in contactgroups.cfg
        notification_interval   60
        notification_period     24x7
        notification_options    c,r
        check_command           check_ping!100.0,20%!500.0,60%
    }
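
The check_command line packs arguments after `!` separators: `check_ping!100.0,20%!500.0,60%` passes `100.0,20%` as $ARG1$ (warning) and `500.0,60%` as $ARG2$ (critical), each a round-trip average in milliseconds plus a packet-loss percentage. This relies on a check_ping command in commands.cfg that maps $ARG1$ to -w and $ARG2$ to -c, as in the sample configuration shipped with Nagios Core. The sketch below splits the arguments and applies a simplified version of the threshold logic; the real plugin's exact boundary handling may differ.

```python
def split_check_command(check_command):
    """'check_ping!A!B' -> ('check_ping', ['A', 'B']); args become $ARG1$, $ARG2$, ..."""
    name, *args = check_command.split("!")
    return name, args


def parse_threshold(arg):
    """'100.0,20%' -> (round-trip average in ms, packet loss in percent)."""
    rta, loss = arg.split(",")
    return float(rta), float(loss.rstrip("%"))


def ping_state(rta_ms, loss_pct, warn, crit):
    """Simplified model: CRITICAL if either critical limit is exceeded, then WARNING, else OK."""
    if rta_ms > crit[0] or loss_pct > crit[1]:
        return "CRITICAL"
    if rta_ms > warn[0] or loss_pct > warn[1]:
        return "WARNING"
    return "OK"


if __name__ == "__main__":
    name, (warn_arg, crit_arg) = split_check_command("check_ping!100.0,20%!500.0,60%")
    warn, crit = parse_threshold(warn_arg), parse_threshold(crit_arg)
    for rta, loss in [(30.0, 0.0), (150.0, 0.0), (30.0, 80.0)]:
        print(name, rta, loss, ping_state(rta, loss, warn, crit))
```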

- 19. Nagios Web UI

- 20. Thanks