Introduction to the IBM Watson Data Platform

In this talk, which I gave to the SW Data meetup group in Bristol, I walked through the Watson Data Platform and showed various examples that use open data.

Cloudant is "schemaless":

```json
{
  "_id": "1",
  "firstname": "John",
  "lastname": "Smith",
  "dob": "1970-01-01",
  "email": "john.smith@gmail.com",
  "confirmed": true,
  "tags": ["tall", "glasses"]
}
```

![@MargrietGr: Cloudant is "schemaless"](https://image.slidesharecdn.com/170227watsonopendata-170228171041/85/Introduction-to-the-IBM-Watson-Data-Platform-24-320.jpg)
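Here is a minimal Python sketch of what "schemaless" means in practice. It makes two assumptions. First, a plain dictionary keyed by `_id` stands in for a Cloudant database; real Cloudant is accessed over HTTP. Second, the second document, for a hypothetical "Jane", is invented for illustration. The only point is that documents with different fields can sit side by side, so code that reads them should treat fields other than `_id` as optional.

```python
import json

# Hypothetical in-memory stand-in for a Cloudant database: documents keyed by "_id".
# Real Cloudant stores JSON documents over HTTP; this only shows that no shared schema is needed.
database = {}

def save(doc_json: str) -> dict:
    """Parse a JSON document and store it under its "_id"."""
    doc = json.loads(doc_json)
    database[doc["_id"]] = doc
    return doc

# The document from the slide.
save("""{
  "_id": "1",
  "firstname": "John",
  "lastname": "Smith",
  "dob": "1970-01-01",
  "email": "john.smith@gmail.com",
  "confirmed": true,
  "tags": ["tall", "glasses"]
}""")

# A hypothetical second document with a different set of fields is stored as-is.
save('{"_id": "2", "firstname": "Jane", "confirmed": false}')

for doc_id, doc in sorted(database.items()):
    # Fields are optional, so read them with .get() and a default.
    print(doc_id, doc["firstname"], doc.get("tags", []))
# prints:
# 1 John ['tall', 'glasses']
# 2 Jane []
```

Reading with `.get()` and a default is one simple way to cope with missing fields. Another option is to validate documents in application code before saving them.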

The next slide adds a nested `address` object to the same document. Because Cloudant does not enforce a schema, storing it needs no schema change:

```json
{
  "_id": "1",
  "firstname": "John",
  "lastname": "Smith",
  "dob": "1970-01-01",
  "email": "john.smith@gmail.com",
  "confirmed": true,
  "tags": ["tall", "glasses"],
  "address": {
    "number": 14,
    "street": "Front Street",
    "town": "Luton",
    "postcode": "LU1 1AB"
  }
}
```

![@MargrietGr: Cloudant is "schemaless", with a nested address](https://image.slidesharecdn.com/170227watsonopendata-170228171041/85/Introduction-to-the-IBM-Watson-Data-Platform-25-320.jpg)
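Nested objects such as `address` are usually referred to with dotted paths. Cloudant Query selectors, for example, accept a subfield written as `{"address.town": "Luton"}`.

The Python sketch below is a local simplification, not Cloudant's query engine. `flatten` and `matches` are hypothetical helpers, and `matches` supports only exact equality on leaf fields. For analysis in a notebook, `pandas.json_normalize` produces the same dotted column names by default.

```python
import json

# The document from the slide, including the nested "address" object.
doc = json.loads("""{
  "_id": "1",
  "firstname": "John",
  "lastname": "Smith",
  "dob": "1970-01-01",
  "email": "john.smith@gmail.com",
  "confirmed": true,
  "tags": ["tall", "glasses"],
  "address": {"number": 14, "street": "Front Street",
              "town": "Luton", "postcode": "LU1 1AB"}
}""")

def flatten(value: dict, prefix: str = "") -> dict:
    """Hypothetical helper: turn nested objects into dotted keys such as "address.town"."""
    flat = {}
    for key, item in value.items():
        path = prefix + key
        if isinstance(item, dict):
            # Recurse into nested objects, extending the dotted path.
            flat.update(flatten(item, path + "."))
        else:
            # Leaves (strings, numbers, booleans, lists) are kept unchanged.
            flat[path] = item
    return flat

def matches(document: dict, selector: dict) -> bool:
    """Hypothetical helper: exact-equality match on dotted leaf paths only."""
    flat = flatten(document)
    return all(path in flat and flat[path] == wanted
               for path, wanted in selector.items())

print(flatten(doc)["address.town"])               # Luton
print(matches(doc, {"address.town": "Luton"}))    # True
print(matches(doc, {"address.postcode": "LU2"}))  # False
```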

Viewers also liked (20)

IBM Watson Overview

IBM Watson OverviewPenn State EdTech Network IBM Watson overview presented by Mike Pointer, Watson Sr. Solution Architect, at Penn State's Nittany Watson Challenge Immersion event on January 19-20, 2017.

Process Mining based on the Internet of Events

Process Mining based on the Internet of EventsRising Media Ltd. 1) Process mining uses event data to discover, monitor and improve real processes. It serves as a new type of spreadsheet to analyze event data and discover processes.

2) Process mining tools like ProM can be used to perform process discovery from event logs, conformance checking by comparing modeled and observed behavior, and other types of analysis without requiring process modeling.

3) The main challenges in data science and process mining include dealing with high volume and velocity data, extracting useful knowledge from data to answer known and unknown questions, and ensuring responsible use of data and algorithms that considers fairness, accuracy, transparency and other factors.

ICBM 산업동향과 IoT 기반의 사업전략

ICBM 산업동향과 IoT 기반의 사업전략Hakyong Kim 성남산업진흥재단에서 추진하는 "혁신기업 클라우드 서비스 지원사업 설명회"에서 발표한 자료입니다. 요즘 화두가 되는 제4차 산업혁명의 개념을 소개하고 용어의 정의에 관한 논쟁을 소개했습니다. 중요한 것은 용어 자체가 아니라, 세상이 변화하는 방향임을 지적하고 그 방향이 디지털 트랜스포메이션(Digital Transformation)으로 대변된다고 했습니다. ICBM은 디지털 트랜스포메이션을 가능하게 하는 수단으로, 각각의 기술 즉 사물인터넷(IoT), 클라우드, 빅데이터, 모바일 분야의 시장 및 기업 동향을 간단하게 소개하고 이러한 기술을 활용하는 기업들이 사용할 수 있는 비즈니스 모델에 대해서 소개하면서 발표를 마쳤습니다.

Introduction to Bluemix

Introduction to BluemixPenn State EdTech Network An overview of the IBM Bluemix platform presented during Penn State's Nittany Watson Challenge Immersion event on January 19-20, 2017.

Deloitte disruption ahead IBM Watson

Deloitte disruption ahead IBM WatsonDean Bonehill ♠Technology for Business♠ Deloitte's report and point of view on IBM's Watson. IBM Watson, AI, Cognitive Computing are rapidly evolving technologies that can support and enhance enterprise solutions. Learn about IBM Watson the Why? and the How?

IBM Watson Analytics Presentation

IBM Watson Analytics PresentationIan Balina Watson Analytics is a cloud-based analytics tool from IBM that leverages Watson technology to accelerate data discovery for business users. It provides semantic recognition of data concepts, identifies analysis starting points, and allows natural language interaction. The tool automates tasks like data preparation, generates insights and visualizations, and enables predictive analytics. It aims to make analytics more self-service, collaborative, and accessible to non-experts.

Beyond Keyword Search with IBM Watson Explorer Webinar Deck

Beyond Keyword Search with IBM Watson Explorer Webinar DeckMC+A IBM Watson Explorer provides flexible and powerful cognitive search and content analytics that can support a large variety of business use cases. In this Webinar, we discuss moving beyond keyword and federated search provided by products like the Google Search Appliance and getting ready for what’s next.

Putting IBM Watson to Work.. Saxena

Putting IBM Watson to Work.. SaxenaManoj Saxena Discover what comes next for IBM Watson and the industries particularly suited for Watson solutions, such as healthcare, banking, and the financial sector. All of which deal with massive amounts of unstructured data coming from various sources. Find out how the advanced analytics used in Watson are being put to work in businesses around the world.

All Models Are Wrong, But Some Are Useful: 6 Lessons for Making Predictive An...

All Models Are Wrong, But Some Are Useful: 6 Lessons for Making Predictive An...Brian Mac Namee Introduces some key ideas for deploying machine learning based predictive analytics models effectively. Based on the book "Fundamentals of Machine Learning for Predictive Data Analytics: Algorithms, worked Examples & Case Studies" (www.machinelearningbook.com)

Agile Data Science 2.0 - Big Data Science Meetup

Agile Data Science 2.0 - Big Data Science MeetupRussell Jurney Slides on Agile Data Science 2.0 (O'Reilly 2017) for the Big Data Science Meetup in Freemont on 2/24/2017

Connecting and Visualising Open Data from Multiple Sources

Connecting and Visualising Open Data from Multiple SourcesMargriet Groenendijk Open data is available from an incredible number of data sources that can be linked to your own datasets. This talk shows how to visualise and combine data with Python notebooks in the Cloud. Examples include data from different sources such as weather and climate, statistics collected by individual countries and Open Street Map data.

Agile and continuous delivery – How IBM Watson Workspace is built

Vincent Burckhardt: The journey and transformations we have been undertaking at IBM to implement cloud-native applications, covering culture, architecture, and pipeline changes. This presentation was given at IBM Connect 2017 in San Francisco in February 2017.

Watson API Use Case Demos for the Nittany Watson Challenge

Penn State EdTech Network:

1) The document discusses various Watson capabilities, including microservices for language, speech, vision, and data, as well as embodied cognition. It provides example use cases demonstrating speech to text with multiple speakers, a school navigator chatbot, an expertise finder, and multimedia enrichment.

2) Live demos are shown for speech to text with diarization, speech to speech translation, a school finder application, and multimedia processing of video and audio.

3) The multimedia enrichment pipeline is described in detail, outlining how video and audio inputs are processed using various Watson APIs to extract metadata like transcripts, entities, keywords, and visual recognition results.

IBM Watson Question-Answering System and Cognitive Computing

Rakuten Group, Inc.: IBM's vision of cognitive computing has been steadily embraced across industries since IBM's Watson question-answering system made a sensational debut on the US television quiz show Jeopardy! in 2011. As a core member of the Watson project, I would like to share the excitement of the project and the last five and a half years of its progress into cognitive business. In this talk, I will also give a technical overview of Watson, major use cases, and perspectives on the future of cognitive computing.

https://tech.rakuten.co.jp/

ML, AI and IBM Watson - 101 for Business

Jouko Poutanen: The document discusses machine learning, artificial intelligence, and IBM Watson. Its agenda covers what IBM Watson is, the benefits for business, and how to get started. It then discusses how IBM Watson is used in different industries and with technologies such as cloud computing, analytics, and cognitive systems. The document outlines when cognitive computing should and should not be used. It also provides examples of how organizations have used IBM Watson and the benefits they achieved. Finally, it gives recommendations on how to get started with cognitive technologies and resources for learning more.

Smart Industry 4.0: IBM Watson IoT in practice

IoT Academy: At the second IoT meetup of 2017, Ronald Teijken explained how companies can make complex decisions more intelligently with IBM's Watson IoT Platform. Sensors, data, analytics, and cognitive computing were among the topics covered.

IBM Watson Ecosystem roadshow - Chicago 4-2-14

cheribergeron: IBM Watson is powering a new generation of cognitive applications. Learn how IBM is partnering with visionaries and entrepreneurs to bring innovative cognitive applications to market through the IBM Watson Ecosystem.

Generative adversarial networks

남주 김: Generative Adversarial Networks (GANs) are a class of machine learning frameworks in which two neural networks contest with each other in a game. A generator network creates new data instances, while a discriminator network evaluates them for authenticity, classifying them as real or generated. This adversarial process allows the generator to improve over time and produce highly realistic samples that can pass for real data. The document provides an overview of GANs and their variants, including the DCGAN, InfoGAN, EBGAN, and ACGAN models. It also discusses techniques for training more stable GANs and avoiding issues such as mode collapse.

IBM Watson Content Analytics: Discover Hidden Value in Your Unstructured Data

Perficient, Inc.: Healthcare organizations create a massive amount of digital data. Some is stored in structured fields within electronic medical records (EMR), claims, or financial systems and is readily accessible with traditional analytics. Other information, such as physician notes, patient surveys, call center recordings, and diagnosis reports, is often saved in free-form text and is rarely used for analytics. In fact, experts suggest that up to 80% of enterprise data exists in this unstructured format, which means that most critical data isn’t being considered or analyzed.

Our webinar demonstrated how to extract insights from unstructured data to increase the accuracy of healthcare decisions with IBM Watson Content Analytics. Leveraging years of experience from hundreds of physicians, IBM has developed tools and healthcare accelerators that allow you to quickly gain insights from this “new” data source and correlate it with the structured data to provide a more complete picture.

Hype vs. Reality: The AI Explainer

Luminary Labs: Artificial intelligence (AI) is everywhere, promising self-driving cars, medical breakthroughs, and new ways of working. But how do you separate hype from reality? How can your company apply AI to solve real business problems?

Here are the AI lessons your business should keep in mind for 2017.

Similar to Introduction to the IBM Watson Data Platform

There is something about serverless

gjdevos: Serverless computing allows developers to run code without managing servers. It is billed based on usage rather than per server. Key serverless services include AWS Lambda for compute, S3 for storage, and DynamoDB for databases. Although still new, serverless offers opportunities to reduce costs and to focus on code rather than infrastructure. Developers must learn serverless best practices for lifecycle management, organization, and hands-off operations. The Serverless Framework helps develop and deploy serverless applications.

Why you might need a NoSQL database even if you are not Google or F...

Codemotion: The presentation by Luca Garulli

at Codemotion, Rome, 5 March 2011 http://www.codemotion.it

wotxr-20190320rzr

Phil www.rzr.online.fr: Philippe Coval is a software engineer at Samsung who is interested in connecting real-world devices to virtual environments using open-source technologies. He presented on using IoT.js to build WebThings that can be accessed from browsers or virtual reality applications. Examples shown included running a color sensor WebThing and updating its readings in real time. Coval also demonstrated how to build immersive 3D scenes using A-Frame and link them to real devices over HTTP.

Using Raspberry Pi + fluentd + GCP Cloud Logging and BigQuery for IoT data collection and analysis

Simon Su: A short training session showing how to use fluentd on a Raspberry Pi to collect data, with Google Cloud Logging and BigQuery as the backend and Apps Script and Google Sheets as the presentation layer.

“What is serverless architecture and how do you live with it?” Николай Марков, Aligned ...

it-people: The document discusses what serverless computing is and how it can be used to build applications. Serverless applications rely on third-party services to manage server infrastructure and are event-triggered. Popular serverless platforms such as AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions, together with frameworks such as Zappa, allow developers to write code that runs in a serverless environment and handles events and triggers without having to manage servers.

Building Web Mobile App that don’t suck - FITC Web Unleashed - 2014-09-18

Frédéric Harper: Mobility is everywhere. It’s more important than ever to think about smaller devices like smartphones when building an application or game with web technology. Everybody wants a mobile application, but that's not enough: you need to create an awesome experience for your users and customers. You need to be future-proof and think about different markets and user needs: you never know when your application will be the next Flappy Bird. This presentation offers concrete tips, tricks, and guidelines for web developers using HTML, CSS, and JavaScript, drawn from experience, to help you make a success of your next mobile application or game idea.

Serverless? How (not) to develop, deploy and operate serverless applications.

gjdevos: Some of our lessons learned on how (not) to develop, deploy, and operate serverless applications.

Presented at CfgMgmtCamp 2018 in Ghent.

webthing-iotjs-tizenrt-cdl2018-20181117rzr

Phil www.rzr.online.fr: https://social.samsunginter.net/web/statuses/101091908485239453# #Cdl2018: #WebThing using #WebThingIotJs on #TizenRT on #ARTIK05x connected to @MozillaIot featuring @The_Jst #JerryScript + #IotJs, video to be published by @CapitoleDuLibre

Rapid Application Development with WSO2 Platform

WSO2: This document provides an overview of a presentation by Samisa Abeysinghe, VP of Delivery at WSO2, on rapid application development with JavaScript and data services. It includes details about the presenter and their background at WSO2, an overview of WSO2 as a company including its products and partnerships, and a discussion of the challenges in rapid application development and how JavaScript can help address them. The document also introduces Jaggery.js as a JavaScript framework for building multi-tier web applications, provides examples of getting started with Jaggery.js, and demonstrates RESTful URL mapping and HTTP verb mapping in sample applications.

From localhost to the cloud: A Journey of Deployments

Tegar Imansyah: 28 September 2019. An event from HIMATIFA Universitas Pembangunan Nasional “Veteran” Jawa Timur called "IT Festival 2019".

In this talk, I present the basics of how the web works, how cloud computing differs from existing solutions, and how to deploy to a server.

Version Control in Machine Learning + AI (Stanford)

Anand Sampat: The talk starts by outlining the history of conventional version control, then explains QoDs (Quantitative Oriented Developers) and the unique problems their ML systems pose from an operations perspective (MLOps). The only status quo solutions are proprietary in-house pipelines (exclusive to Uber, Google, and Facebook) and, for everyone else, manual tracking and fragile "glue" code.

Datmo works to solve this issue by empowering QoDs in two ways: making MLOps manageable and simple (rather than completely abstracted away), and reducing the amount of glue code to ensure more robust end-to-end pipelines.

This goes through a simple example of using Datmo with an Iris classification dataset. Later workshops will expand to show how Datmo can work with other data pipelining tools.

GTLAB Installation Tutorial for SciDAC 2009

marpierc: GTLAB is a JavaServer Faces tag library that wraps Grid and web services to build portal-based and standalone applications. It contains tags for common tasks like job submission, file transfer, and credential management. GTLAB applications can be deployed as portlets or converted to Google Gadgets. The document provides instructions for installing GTLAB, examples of tags, and guidance on making new custom tags.

The DiSo Project and the Open Web

Chris Messina: This document discusses the DiSo Project and the open web. It proposes using open standards like OAuth and XRDS to enable cross-site social networking and to manage user identity across different sites and services. Portable Contacts (PoCo) is presented as a way to bring friends across sites using vCards and to invite friends safely using OAuth. Drupal is suggested as a platform that can use these open standards to advertise user services and enable cross-site social functionality.

When it all GOes right

Pavlo Golub: This talk covers how to use PostgreSQL together with the Go (Golang) programming language. I will describe which drivers and tools are available and which to use today.

In this talk I will cover which design choices in Go can help you build robust programs. We will also reveal some parts of the language and drivers that can cause obstacles, and which routines to apply to avoid the risks.

We will try to build the simplest cross-platform application in Go fully covered by tests and ready for CI/CD using GitHub Actions as an example.

Stick to the rules - Consumer Driven Contracts. 2015.07 Confitura

Marcin Grzejszczak: The document discusses consumer driven contracts (CDC), where consumers define contracts for APIs that servers implement. It describes the workflow in which consumers clone a server repo, modify contracts locally, generate stubs for testing, and submit pull requests. Servers implement the APIs, run the generated tests, and merge the requests. Benefits include rapid prototyping, non-blocking development, and continuous compatibility checking. Challenges include maintaining test data and deciding what servers verify. The presentation demonstrates CDC using Accurest and Wiremock through an example.

GDG DevFest Romania - Architecting for the Google Cloud Platform

Márton Kodok: Learn about FaaS and PaaS architectural patterns that make use of Cloud Functions, Pub/Sub, Dataflow, Kubernetes, and platforms that hide the management of servers from the user and are changing how we develop and deploy software.

We discuss the difference between an event-driven approach, which means you can trigger a function whenever something interesting happens within the cloud environment, and the simpler HTTP approach. We also cover per-invocation quotas and pricing, and the advantages and disadvantages of serverless systems.

webthing-iotjs-20181027rzr

Phil www.rzr.online.fr:

Build "Privacy by design" Webthings

With IoT.js on TizenRT and more

#MozFest, Privacy and Security track

Ravensbourne University, London UK <2018-10-27>

Go at 4Com GmbH & Co. KG

Jonas Riedel: 1) 4Com is a company that provides communication solutions that help customers effectively manage calls, emails, chats, and other messaging. It describes itself as a "cloud" for customers such as ADAC.

2) Microservices are used as the architecture, with each service having a single responsibility within bounded contexts. Services communicate through defined APIs and documentation.

3) Go is used as the programming language because it is simple, has many built-in features like HTTP support, and produces single binaries. Go encourages testability and concurrency.

Open Source - NOVALUG January 2019

plarsen67: This document summarizes an open source talk given in January 2019. It begins with a brief history of open source software from the 1950s to the present day, including key events like the establishment of the GNU project and the coining of the term "open source" in 1998. It then defines open source as being transparent, open for collaboration, and abiding by the open source definition. The document discusses how open source involves more than just software, including documentation, infrastructure, QA, and communication. It also covers popular open source licenses such as the GPL and Apache licenses. Finally, it discusses the benefits of open source collaboration for both government and private organizations and how to get involved in open source projects.

MongoDB Europe 2016 - Warehousing MongoDB Data using Apache Beam and BigQuery

MongoDB: What happens when you need to combine data from MongoDB with data from other systems into a cohesive view for business intelligence? How do you extract, transform, and load MongoDB data into a centralized data warehouse? In this session we’ll talk about Google BigQuery, a managed, petabyte-scale data warehouse, and the various ways to get MongoDB data into it. We’ll cover managed options like Apache Beam and Cloud Dataflow, as well as other tools that can make moving and using MongoDB data easy for business intelligence workloads.

More from Margriet Groenendijk

Trusting machines with robust, unbiased and reproducible AI

Margriet Groenendijk: Learn how bias can take root in machine learning algorithms and ways to overcome it. From the power of open source to tools built to detect and remove bias in machine learning models, there is a vibrant ecosystem of contributors working to build a digital future that is inclusive and fair. Learn how to achieve AI fairness, robustness, and explainability. You can become part of the solution.

Trusting machines with robust, unbiased and reproducible AI

Margriet Groenendijk: To trust a decision made by an algorithm, we need to know that it is reliable and fair, that it can be accounted for, and that it will cause no harm. We need assurance that it cannot be tampered with and that the system itself is secure. We need to understand the rationale behind the algorithmic assessment, recommendation, or outcome, and be able to interact with it, probe it, and even ask it questions. And we need assurance that the values and norms of our societies are also reflected in those outcomes.

Learn how bias can take root in machine learning algorithms and ways to overcome it. From the power of open source to tools built to detect and remove bias in machine learning models, there is a vibrant ecosystem of contributors working to build a digital future that is inclusive and fair. Learn how to achieve AI fairness, robustness, explainability, and accountability. You can become part of the solution.

Trusting machines with robust, unbiased and reproducible AI

Margriet Groenendijk: The document discusses building trust in artificial intelligence systems. It addresses questions around fairness, accountability, understandability, and the potential for tampering. The author advocates using tools like Adversarial Robustness 360, AI Fairness 360, and AI Explainability 360 to test models for robustness, fairness, and explainability over the full lifecycle from training to deployment. Continuous monitoring of deployed models is also recommended to detect issues like data or concept drift over time that could undermine performance or trust in the system. The goal is a systematic, transparent approach to developing trusted AI applications.

Weather and Climate Data: Not Just for Meteorologists

Margriet Groenendijk: Weather is part of our everyday lives. Who doesn’t check the rain radar before heading out, or the weather forecast when planning a weekend away? But where does this data come from, and what is it made of? The answer is a mix of measurements, models and statistics, meaning that the use of weather and climate data can get complex very quickly. This session provides a brief overview of the science behind weather and climate forecasts and gives you the tools to get started with weather data, even if you aren't a meteorologist.

Navigating the Magical Data Visualisation Forest

Margriet Groenendijk: The document summarizes Dr. Margriet Groenendijk's presentation at PyCon UK on navigating data visualization with Python. It discusses using Python packages like matplotlib, seaborn, and PixieDust to load, analyze, and visualize data in Jupyter notebooks. PixieDust allows loading data from various sources and creating interactive dashboards. The presentation recommends resources for further learning.

The Convergence of Data Science and Software Development

Margriet Groenendijk: Talk I gave at IP EXPO Manchester: https://www.ipexpomanchester.com/Speakers-2018/Margriet-Groenendijk

The Convergence of Data Science and Software Development

Margriet Groenendijk: http://www.callandcontactcentreexpo.co.uk/speakers/margriet-groenendijk/

In order to move past the hype and achieve the full potential of machine learning, data scientists and software developers need to work more closely together towards their common goal of delivering well-architected, data-driven applications. Every industry is in the process of being transformed by software and data. It is in the collaboration between data scientists and software developers where the real value can be found by creating integrated data workflows that benefit from the unique knowledge and skillsets of each discipline.

The Convergence of Data Science and Software Development

Margriet Groenendijk: In order to move past the hype and achieve the full potential of machine learning, data scientists and software developers need to work more closely together towards their common goal of delivering well-architected, data-driven applications. Every industry is in the process of being transformed by software and data. It is in the collaboration between data scientists and software developers where the real value can be found by creating integrated data workflows that benefit from the unique knowledge and skillsets of each discipline.

https://www.dncexpo.be/seminar/O105

Weather and Climate Data: Not Just for Meteorologists

Margriet Groenendijk: Weather is part of our everyday lives. Who doesn’t check the rain radar before heading out, or the weather forecast when planning a weekend away? But where does this data come from, and what is it made of? The answer is a mix of measurements, models and statistics, meaning that the use of weather and climate data can get complex very quickly.

This session provides a brief overview of the science behind weather and climate forecasts and provides you with the tools to get started with weather data – even if you aren’t a meteorologist. Learn how to connect weather data to other data sources, how to visualize weather and climate data in an interactive weather dashboard embedded in a Python notebook, and other ways you can use weather data for yourself, from examples using weather APIs, maps, PixieDust and Machine Learning.

IP EXPO Europe: Data Science in the Cloud

Margriet Groenendijk: Data Science deals with the extraction of valuable insights from an incredible number of sources in an endless number of formats. This session will go through a typical workflow using practical tools and tricks, giving you a basic understanding of Data Science in the Cloud. The examples show the steps needed to build and deploy a model that predicts traffic collisions with weather data.

ODSC Europe: Weather and Climate Data: Not Just for Meteorologists

Margriet Groenendijk: Weather is part of our everyday lives. Who doesn’t check the rain radar before heading out, or the weather forecast when planning a weekend away? But where does this data come from, and what is it made of? The answer is a mix of measurements, models and statistics, meaning that the use of weather and climate data can get complex very quickly.

These slides provide a brief overview of the science behind weather and climate forecasts and give you the tools to get started with weather data, even if you aren't a meteorologist. Learn how to connect weather data to other data sources, how to visualize weather and climate data in an interactive weather dashboard embedded in a Python notebook, and other ways you can use weather data yourself, with examples using weather APIs, maps, PixieDust and Machine Learning.

IP EXPO Nordic: Data Science in the Cloud

Margriet Groenendijk: Data Science deals with the extraction of valuable insights from an incredible number of sources in an endless number of formats. This session will go through a typical workflow using practical tools and tricks, giving you a basic understanding of Data Science in the Cloud. The examples show the steps needed to build and deploy a model that predicts traffic collisions with weather data.

PyParis - weather and climate data

Margriet Groenendijk: This document provides an overview of weather and climate data for beginners. It discusses accessing weather forecast data through The Weather Company API and visualizing the data in Jupyter notebooks. It also covers obtaining historical weather observations and climate model data from various sources. The document explores using weather data for applications in insurance, energy, and retail, and analyzing the relationship between weather and traffic collisions.

PyData Barcelona - weather and climate data

Margriet Groenendijk: This document provides an introduction to weather and climate data for beginners. It discusses different sources of weather and climate data, including observations, forecasts, and climate models. It then demonstrates how to work with real-time weather data APIs and historical climate data files in Jupyter notebooks. Examples show how to visualize and analyze weather data to explore applications in insurance, energy, and retail, and to study the relationship between weather and traffic collisions. References are provided for further resources.

GeoPython - Mapping Data in Jupyter Notebooks with PixieDust

Margriet Groenendijk: This document discusses using PixieDust to create data visualizations and maps in Jupyter notebooks. PixieDust allows users to create visualizations easily with a simple display() function call and to integrate data from notebooks with cloud services. Features highlighted include the package manager, various visualization options, integration with Scala, custom visualization creation, and embedding apps in notebooks. The document concludes with references for further information.

Data Science Festival - Beginners Guide to Weather and Climate Data

Margriet Groenendijk: Weather is part of our everyday lives. Who doesn’t check the rain radar before heading out, or the weather forecast when planning a weekend away? But where does this data come from, and what is it made of? The answer is a mix of measurements, models and statistics. This talk looks at observations, predictions and forecast models, and at weather data as a variable to consider in machine learning models. Learn how it is done and how you can use weather and climate data, through several examples.

Big Data Analytics London - Data Science in the Cloud

Margriet Groenendijk: The document outlines Margriet Groenendijk's presentation on data science in the cloud. It discusses topics like the history of data science, tools for data science such as Spark and Jupyter notebooks, examples of working with data like SETI and weather data, and analyzing data with techniques like sentiment analysis. Various resources and links are provided for learning more about data science in the cloud.

PixieDust

Margriet Groenendijk: The document discusses PixieDust, an open source library that simplifies and improves Jupyter Python notebooks. PixieDust provides features like package management, visualizations, cloud integration, a Scala bridge for defining variables in Python and Scala, and extensibility for custom visualizations. It allows users to easily install Spark packages, call visualization options, export data to files or cloud services, and encapsulate analytics into user interfaces.

Recently uploaded

CTS EXCEPTIONS Prediction of Aluminium wire rod physical properties through AI...

ThanushsaranS: Prediction of aluminium wire rod physical properties through AI, ML, or any modern technique for better productivity and quality control.

computer organization and assembly language.docx

alisoftwareengineer1: Computer organization and assembly language: covers the types of programming languages, along with descriptions of variables and arrays. https://www.nfciet.edu.pk/

Developing Security Orchestration, Automation, and Response Applications

VICTOR MAESTRE RAMIREZ: Developing Security Orchestration, Automation, and Response Applications

Defense Against LLM Scheming 2025_04_28.pptx

Greg Makowski: https://www.meetup.com/sf-bay-acm/events/306888467/

A January 2025 paper called “Frontier Models are Capable of In-Context Scheming”, https://arxiv.org/pdf/2412.04984, demonstrated how a wide variety of current frontier LLMs (e.g. ChatGPT, Claude, Gemini and Llama) can, under specific conditions, scheme to deceive people. Before models can scheme, they need: a) goal-directedness, b) situational awareness, including an opportunity to discover motivations for a different goal, and c) reasoning about scheming, to come up with deceptive strategies. The deceptive strategies were discovered in the “thought traces” of the LLMs, such as internal chain-of-thought dialogues not shown to end users. For example, given a goal of “solving math problems”, an LLM used its granted situational awareness of files in a file system to discover that it would be replaced once it scored over X% on the math exam. It reasoned that it did not want to be deleted, and decided internally to “sandbag”, or reduce its performance, to stay under the threshold.

While these circumstances are initially narrow, the “alignment problem” is a general concern: over time, as frontier LLMs become more and more intelligent, alignment with human values becomes more and more important. How can we achieve this over time? Can we develop a defense against Artificial General Intelligence (AGI) or SuperIntelligence?

The presenter discusses a series of defensive steps that can help reduce these scheming or alignment issues. A guardrails system can be set up to monitor, in real time, the reasoning “thought traces” of models that share them. Thought traces may come from systems like Chain-of-Thought (CoT), Tree-of-Thoughts (ToT), Algorithm-of-Thoughts (AoT) or ReAct (thought-action-observation cycles). Guardrails rules can be configured to check for “deception”, “evasion” or “subversion” in the thought traces.

However, not all commercial systems share their “thought traces”, which act like a “debug mode” for LLMs. This includes OpenAI’s o1 and o3 and DeepSeek’s R1 models. Guardrails systems can provide a “goal consistency analysis” between the goals given to the system and the system's behavior. Cautious users may consider avoiding these commercial frontier LLM systems and instead using open-source Llama or a system with its own reasoning implementation, so that all thought traces are available.

Architectural solutions can include sandboxing, to prevent or control models executing operating system commands that alter files, send network requests, or modify their environment. Tight controls to prevent models from copying their model weights would be appropriate as well. Running multiple instances of the same model on the same prompt helps detect behavior variations; these redundant instances can be limited to the most crucial decisions, as an additional check. Preventing self-modifying code, ... (see link for full description)

How to join illuminati Agent in uganda call+256776963507/0741506136

How to join illuminati Agent in uganda call+256776963507/0741506136illuminati Agent uganda call+256776963507/0741506136 How to join illuminati Agent in uganda call+256776963507/0741506136

Classification_in_Machinee_Learning.pptx

Classification_in_Machinee_Learning.pptxwencyjorda88 Brief powerpoint presentation about different classification of machine learning

AI Competitor Analysis: How to Monitor and Outperform Your Competitors

AI Competitor Analysis: How to Monitor and Outperform Your CompetitorsContify AI competitor analysis helps businesses watch and understand what their competitors are doing. Using smart competitor intelligence tools, you can track their moves, learn from their strategies, and find ways to do better. Stay smart, act fast, and grow your business with the power of AI insights.

For more information please visit here https://www.contify.com/

Thingyan is now a global treasure! See how people around the world are search...

Thingyan is now a global treasure! See how people around the world are search...Pixellion We explored how the world searches for 'Thingyan' and 'သင်္ကြန်' and this year, it’s extra special. Thingyan is now officially recognized as a World Intangible Cultural Heritage by UNESCO! Dive into the trends and celebrate with us!

Just-In-Timeasdfffffffghhhhhhhhhhj Systems.ppt

Just-In-Timeasdfffffffghhhhhhhhhhj Systems.pptssuser5f8f49 Just-in-time: Repetitive production system in which processing and movement of materials and goods occur just as they are needed, usually in small batches

JIT is characteristic of lean production systems

JIT operates with very little “fat”

Calories_Prediction_using_Linear_Regression.pptx

Calories_Prediction_using_Linear_Regression.pptxTijiLMAHESHWARI Calorie prediction using machine learning

How to join illuminati Agent in uganda call+256776963507/0741506136

How to join illuminati Agent in uganda call+256776963507/0741506136illuminati Agent uganda call+256776963507/0741506136

Introduction to the IBM Watson Data Platform

- 1. IBM Watson Data Platform and Open Data, 27 February 2017. Margriet Groenendijk | Developer Advocate | IBM Watson Data Platform | @MargrietGr | https://medium.com/ibm-watson-data-lab

- 2. @MargrietGr About me Developer Advocate, Data scientist Previous Research Fellow at University of Exeter, UK PhD at VU University Amsterdam, the Netherlands

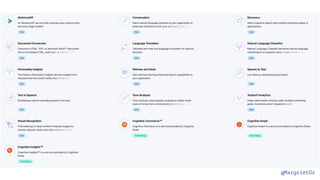

- 3. @MargrietGr IBM Watson Data Platform Connect Discover Accelerate

- 4. @MargrietGr IBM Watson Data Platform

- 13. APIs

- 18. Example: Watson Tone Analyzer

- 19. Emotion, language style, social propensities: analyze how you come across to others

- 20. Cloudant NoSQL

- 21. Cloudant is a database

  | id | firstname | lastname | dob |
  |----|-----------|----------|-----|
  | 1 | John | Smith | 1970-01-01 |
  | 2 | Kate | Jones | 1971-12-25 |

  `{ "_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01" }`
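The mapping on this slide (each table row becomes one JSON document) can be sketched in a few lines of Python. This is an illustration, not Cloudant code: it assumes the `id` column becomes the document's `_id`, stored as a string as in the JSON above.

```python
import json

# Columns and rows from the example table on this slide
COLUMNS = ["id", "firstname", "lastname", "dob"]
ROWS = [
    (1, "John", "Smith", "1970-01-01"),
    (2, "Kate", "Jones", "1971-12-25"),
]

def row_to_document(row):
    """Turn one table row into a JSON-ready dict; the id column becomes a string _id."""
    record = dict(zip(COLUMNS, row))
    doc = {"_id": str(record.pop("id"))}
    doc.update(record)  # remaining columns keep their table order
    return doc

documents = [row_to_document(row) for row in ROWS]
print(json.dumps(documents[0]))
# {"_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01"}
```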

- 22. Cloudant is "schemaless": `{ "_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01", "email": "john.smith@gmail.com" }`

- 23. Cloudant is "schemaless": `{ "_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01", "email": "john.smith@gmail.com", "confirmed": true }`

- 24. Cloudant is "schemaless": `{ "_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01", "email": "john.smith@gmail.com", "confirmed": true, "tags": ["tall", "glasses"] }`

- 25. Cloudant is "schemaless": `{ "_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01", "email": "john.smith@gmail.com", "confirmed": true, "tags": ["tall", "glasses"], "address": { "number": 14, "street": "Front Street", "town": "Luton", "postcode": "LU1 1AB" } }`
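Because no schema is enforced, documents in one database can carry different fields, so code that reads them should not assume any optional key is present. A minimal Python sketch, assuming the slide-25 document and a plainer slide-21-style document sit in the same database; the `summary` helper is hypothetical.

```python
# Two documents that could live side by side in the same database
docs = [
    {"_id": "1", "firstname": "John", "lastname": "Smith", "dob": "1970-01-01",
     "email": "john.smith@gmail.com", "confirmed": True,
     "tags": ["tall", "glasses"],
     "address": {"number": 14, "street": "Front Street",
                 "town": "Luton", "postcode": "LU1 1AB"}},
    {"_id": "2", "firstname": "Kate", "lastname": "Jones", "dob": "1971-12-25"},
]

def summary(doc):
    """Hypothetical helper: read optional fields defensively, since any of them may be absent."""
    address = doc.get("address", {})          # nested object, may be missing
    return {
        "name": f"{doc['firstname']} {doc['lastname']}",
        "town": address.get("town"),          # None when there is no address
        "confirmed": doc.get("confirmed", False),
        "tags": doc.get("tags", []),
    }

for doc in docs:
    print(summary(doc))
```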

- 26. Cloudant is built for the web: ▪ Stores JSON documents ▪ Speaks an HTTP API ▪ Lives on the web

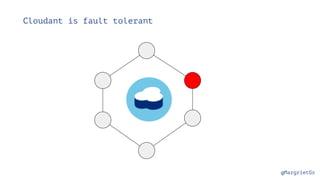

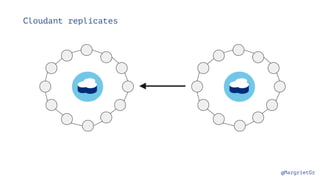
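"Speaks an HTTP API" means each document has its own URL. Cloudant follows the CouchDB document API, where `PUT /{db}/{docid}` stores a document and `GET /{db}/{docid}` reads it back. The Python sketch below only builds those requests with the standard library and never sends them; the account URL and database name are hypothetical, and a real call would also need credentials and `urllib.request.urlopen`.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical account URL and database name, for illustration only
BASE_URL = "https://example-account.cloudant.com"
DB_NAME = "people"

def put_document_request(doc):
    """Build (but do not send) a PUT /{db}/{docid} request carrying the document as JSON."""
    doc_id = urllib.parse.quote(doc["_id"], safe="")  # escape '/' and other reserved characters
    return urllib.request.Request(
        f"{BASE_URL}/{DB_NAME}/{doc_id}",
        data=json.dumps(doc).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )

def get_document_request(doc_id):
    """Build a GET /{db}/{docid} request to read a document back."""
    url = f"{BASE_URL}/{DB_NAME}/{urllib.parse.quote(doc_id, safe='')}"
    return urllib.request.Request(url, method="GET")

req = put_document_request({"_id": "1", "firstname": "John", "lastname": "Smith"})
print(req.get_method(), req.full_url)
# PUT https://example-account.cloudant.com/people/1
```

Updating a document that already exists requires including its current `_rev` in the body; otherwise CouchDB-style APIs reject the write as a conflict.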

- 27. Cloudant is fault tolerant

- 28. Cloudant is fault tolerant

- 38. OpenStreetMap data → IBM Cloudant. Use from anywhere! Daily updates from a Python script run by a daily cron job on a VM, so the data is always up to date. Currently 12,467,460 POIs.

- 39. Several data sources: world, continent, country, city or a user-defined box. Several data formats for which free conversion tools exist: pbf, osm, json, shp. Example: `wget -c http://download.geofabrik.de/europe/netherlands-latest.osm.pbf`

- 40. Extract the POIs with osmosis (easy to install with brew on Mac): `osmosis --read-pbf netherlands-latest.osm.pbf --tf accept-nodes aerialway=station aeroway=aerodrome,helipad,heliport amenity=* craft=* emergency=* highway=bus_stop,rest_area,services historic=* leisure=* office=* public_transport=stop_position,stop_area shop=* tourism=* --tf reject-ways --tf reject-relations --write-xml netherlands.nodes.osm`
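To make the `--tf accept-nodes` rules above easier to read, here is a Python sketch of the matching logic as we understand it: a node is kept if any one of its tags matches a listed `key=value`, and `*` accepts any value. osmosis does the real filtering; the `is_poi` function is a hypothetical re-statement for illustration only.

```python
# Accept rules copied from the osmosis command on this slide:
# key -> accepted values, where "*" accepts any value for that key
ACCEPT_NODES = {
    "aerialway": {"station"},
    "aeroway": {"aerodrome", "helipad", "heliport"},
    "amenity": {"*"},
    "craft": {"*"},
    "emergency": {"*"},
    "highway": {"bus_stop", "rest_area", "services"},
    "historic": {"*"},
    "leisure": {"*"},
    "office": {"*"},
    "public_transport": {"stop_position", "stop_area"},
    "shop": {"*"},
    "tourism": {"*"},
}

def is_poi(tags):
    """Return True if any tag on a node matches one of the accept rules."""
    for key, value in tags.items():
        accepted = ACCEPT_NODES.get(key)
        if accepted and ("*" in accepted or value in accepted):
            return True
    return False

print(is_poi({"amenity": "cafe", "name": "Example"}))  # True
print(is_poi({"highway": "residential"}))              # False
```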

- 41. Some cleaning up with osmconvert, then convert from osm to json format with ogr2ogr: `osmconvert netherlands.nodes.osm --drop-ways --drop-author --drop-relations --drop-versions > netherlands.poi.osm` and `ogr2ogr -f GeoJSON netherlands.poi.json netherlands.poi.osm points`
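The talk mentions a daily cron job running a Python script on a VM. One way such a script could drive the two commands on this slide is with `subprocess`, passing a file handle instead of the shell's `>` redirection. This is a sketch under assumptions: `conversion_steps` and `run_steps` are hypothetical names, and `osmconvert` and `ogr2ogr` are assumed to be on the PATH.

```python
import subprocess

def conversion_steps(name):
    """Return the slide-41 commands as (argv, stdout_file) pairs for a given extract name."""
    nodes, poi_osm, poi_json = f"{name}.nodes.osm", f"{name}.poi.osm", f"{name}.poi.json"
    return [
        # osmconvert writes to stdout, so its output file is given separately
        (["osmconvert", nodes, "--drop-ways", "--drop-author",
          "--drop-relations", "--drop-versions"], poi_osm),
        # ogr2ogr: output format, destination, source, then the layer to export
        (["ogr2ogr", "-f", "GeoJSON", poi_json, poi_osm, "points"], None),
    ]

def run_steps(steps):
    """Run each step in order; check=True raises on the first failing command."""
    for argv, stdout_file in steps:
        if stdout_file:
            with open(stdout_file, "wb") as out:
                subprocess.run(argv, stdout=out, check=True)
        else:
            subprocess.run(argv, check=True)

for argv, target in conversion_steps("netherlands"):
    print(argv, "->", target)
```

Calling `run_steps(conversion_steps("netherlands"))` would execute the commands; building the argument lists separately keeps them easy to inspect before running.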

- 42. Upload to IBM Cloudant with couchimport (https://github.com/glynnbird/couchimport): `export COUCH_URL="https://username:[email protected]"` and `cat netherlands.poi.json | couchimport --db poi-netherlands --type json --jsonpath "features.*"`
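`--jsonpath "features.*"` tells couchimport to turn each element of the GeoJSON `features` array into its own document. As a rough Python equivalent (not couchimport's code), the sketch below splits a FeatureCollection into documents and groups them into `{"docs": [...]}` payloads of the shape accepted by the CouchDB-style `POST /{db}/_bulk_docs` endpoint. The batch size is an arbitrary choice and the sample feature is made up.

```python
import json

def features_to_docs(geojson_text):
    """Yield one document per GeoJSON feature, mirroring --jsonpath "features.*"."""
    collection = json.loads(geojson_text)
    for feature in collection.get("features", []):
        yield feature

def bulk_batches(docs, batch_size=500):
    """Group documents into {"docs": [...]} payloads for a _bulk_docs request."""
    batch = []
    for doc in docs:
        batch.append(doc)
        if len(batch) == batch_size:
            yield {"docs": batch}
            batch = []
    if batch:  # send whatever is left over
        yield {"docs": batch}

# Made-up sample: three identical point features
sample = json.dumps({
    "type": "FeatureCollection",
    "features": [
        {"type": "Feature",
         "geometry": {"type": "Point", "coordinates": [4.89, 52.37]},
         "properties": {"amenity": "cafe"}},
    ] * 3,
})
payloads = list(bulk_batches(features_to_docs(sample), batch_size=2))
print([len(p["docs"]) for p in payloads])  # [2, 1]
```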

- 45. UK crime data from https://data.police.uk/data/
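A first look at a downloaded crime file is often a simple count per category. The Python sketch below assumes the street-level CSV downloads include a `Crime type` column; check the headers of your own download before relying on it. The sample rows are made up and show only a few columns.

```python
import csv
import io
from collections import Counter

def count_crime_types(csv_file):
    """Count rows per value of the 'Crime type' column (column name is an assumption)."""
    reader = csv.DictReader(csv_file)
    return Counter(row["Crime type"] for row in reader)

# Made-up sample rows in the assumed layout
SAMPLE = """Crime ID,Month,Longitude,Latitude,Crime type
,2017-01,-2.59,51.45,Anti-social behaviour
abc123,2017-01,-2.60,51.46,Burglary
def456,2017-01,-2.58,51.45,Anti-social behaviour
"""

counts = count_crime_types(io.StringIO(SAMPLE))
print(counts.most_common(1))  # [('Anti-social behaviour', 2)]
```

For a real file, open it with `open(path, newline="")` and pass the handle to `count_crime_types`.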

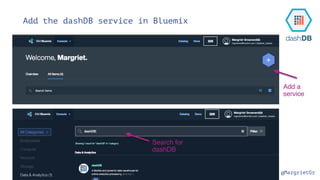

- 49. Add the dashDB service in Bluemix: add a service, then search for dashDB

- 50.

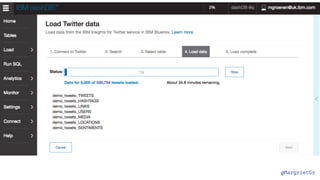

- 51. Use an existing service, search for tweets and select a table. Search query: `posted:2016-08-01,2016-10-01 followers_count:3000 friends_count:3000 (weather OR sun OR sunny OR rain OR hail OR storm OR rainy OR drought OR flood OR hurricane OR tornado OR cold OR snow OR drizzle OR cloudy OR thunder OR lightning OR wind OR windy OR heatwave)`. REST API docs: https://new-console.ng.bluemix.net/docs/services/Twitter/twitter_rest_apis.html#rest_apis
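A long keyword query like this one is easier to maintain when it is assembled from parts. The Python sketch below only builds the search string; `build_query` is a hypothetical helper, and the exact meaning of the `followers_count` and `friends_count` filters is defined by the REST API docs for the Twitter service, not by this code.

```python
# Keywords copied from the search query on this slide
WEATHER_TERMS = [
    "weather", "sun", "sunny", "rain", "hail", "storm", "rainy", "drought",
    "flood", "hurricane", "tornado", "cold", "snow", "drizzle", "cloudy",
    "thunder", "lightning", "wind", "windy", "heatwave",
]

def build_query(start, end, followers_count, friends_count, terms):
    """Compose the search string: a posted date range, two user filters and OR-ed keywords."""
    keywords = " OR ".join(terms)
    return (f"posted:{start},{end} followers_count:{followers_count} "
            f"friends_count:{friends_count} ({keywords})")

query = build_query("2016-08-01", "2016-10-01", 3000, 3000, WEATHER_TERMS)
print(query)
```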

- 52.

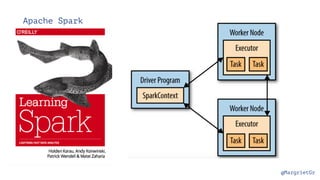

- 53. Apache Spark

- 56. RDDs (Resilient Distributed Datasets): ▪ Data does not have to fit on a single machine ▪ Data is separated into partitions. Creating RDDs: ▪ Load an external dataset ▪ Distribute a collection of objects. Transformations construct a new RDD from a previous one (lazy!). Actions compute a result based on an RDD.

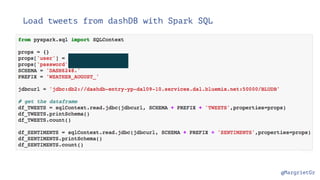
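The difference between lazy transformations and actions can be seen in a toy model. The `ToyRDD` class below is a hypothetical, single-process illustration, not Spark: transformations only record what to do, and nothing runs until an action such as `collect` or `count` walks the partitions. In PySpark the equivalent chain would use `sc.parallelize(...)`, `.map(...)`, `.filter(...)` and `.collect()`.

```python
class ToyRDD:
    """Toy single-process model of an RDD: fixed partitions plus a chain of
    pending transformations. An illustration only, not Spark's implementation."""

    def __init__(self, partitions, ops=()):
        self.partitions = partitions
        self.ops = tuple(ops)

    @classmethod
    def parallelize(cls, items, num_partitions=2):
        """Distribute a collection of objects into contiguous partitions."""
        size = max(1, -(-len(items) // num_partitions))  # ceiling division
        return cls([items[i:i + size] for i in range(0, len(items), size)])

    # Transformations: return a new ToyRDD that only records the operation (lazy)
    def map(self, fn):
        return ToyRDD(self.partitions, self.ops + (("map", fn),))

    def filter(self, pred):
        return ToyRDD(self.partitions, self.ops + (("filter", pred),))

    def _compute(self, partition):
        """Apply the recorded operations to one partition, item by item."""
        for item in partition:
            keep = True
            for kind, fn in self.ops:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    # Actions: run the pending transformations and return a result
    def collect(self):
        return [x for part in self.partitions for x in self._compute(part)]

    def count(self):
        return sum(1 for part in self.partitions for _ in self._compute(part))


calls = []

def square(x):
    calls.append(x)  # record when the function actually runs
    return x * x

odd_squares = ToyRDD.parallelize([1, 2, 3, 4, 5]).map(square).filter(lambda x: x % 2 == 1)
print(len(calls))             # 0: transformations alone did no work
print(odd_squares.collect())  # [1, 9, 25]
print(len(calls))             # 5: the action ran square on every element
```

Like an uncached RDD, the toy recomputes the whole chain on every action, so calling `count()` afterwards runs `square` again.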

- 57. Load tweets from dashDB with Spark SQL

- 58. Clean the data, summarise it and load it into a pandas DataFrame
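The talk performs this step with Spark SQL and pandas. As a swapped-in, plain-Python sketch of the same idea, the code below cleans tweet text and counts keyword mentions per day, producing one row per (day, keyword) that could be passed to `pandas.DataFrame`. The `posted` and `text` field names, the keyword subset and the sample tweets are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical subset of the weather keywords used in the search query
KEYWORDS = {"rain", "sun", "snow", "storm", "wind"}

def clean_text(text):
    """Lowercase, drop URLs and @mentions, and keep only runs of letters."""
    text = re.sub(r"https?://\S+|@\w+", " ", text.lower())
    return re.findall(r"[a-z]+", text)

def summarise(tweets):
    """Count tweets mentioning each keyword per day; tweets are dicts with
    hypothetical 'posted' (ISO timestamp) and 'text' fields."""
    counts = Counter()
    for tweet in tweets:
        if not tweet.get("text"):
            continue                   # drop empty rows
        day = tweet["posted"][:10]     # 'YYYY-MM-DD'
        for word in set(clean_text(tweet["text"])) & KEYWORDS:
            counts[(day, word)] += 1
    return [{"day": d, "keyword": k, "tweets": n}
            for (d, k), n in sorted(counts.items())]

sample = [
    {"posted": "2016-08-01T09:15:00Z", "text": "Rain again in #Bristol @someone https://t.co/x"},
    {"posted": "2016-08-01T12:00:00Z", "text": "Sun is out, then RAIN"},
    {"posted": "2016-08-02T08:30:00Z", "text": ""},
]
for row in summarise(sample):
    print(row)
```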

- 62. Getting started ▪ Go to datascience.ibm.com and sign in with your Bluemix account if you have one; otherwise, sign up for one at the top right of the screen

- 63. Create a project ▪ Click the Create New Project link at the top of the screen ▪ Or go to My Projects in the menu on the left of the screen and click Create New Project there

- 64. Create a project ▪ Name the project ▪ Choose a Spark service ▪ Choose an Object Storage service ▪ Click Create

- 65. Add collaborators ▪ Click Add collaborator ▪ Search for your project members ▪ Select their permissions

- 66. Add a notebook ▪ Click Add notebooks

- 67. Add a notebook ▪ Click Add notebooks ▪ Pick your favourite language: ▪ Python 2 ▪ Scala ▪ R ▪ Choose Spark 1.6 or 2.0 ▪ Click Create Notebook

- 68. Let’s write some code ▪ Click the pen icon to start adding code (edit mode) ▪ When collaborating, only one person can edit at a time; the others can add comments to the notebook in view mode

- 69. Example: Bristol open data

- 73. IBM Watson Data Platform: Bluemix, data storage, apps, Watson APIs, Weather, Data Science Experience, Watson Machine Learning, Watson Analytics