Introduction to word embeddings with Python

This document discusses word embeddings and techniques for generating them, including the word2vec and GloVe models. Word2vec trains a shallow neural network on large amounts of text to learn vector representations of words. It has two architectures: CBOW, which predicts a word from its surrounding context, and skip-gram, which predicts the surrounding words from a given word. GloVe learns word vectors from global word-word co-occurrence statistics gathered over a corpus, which helps represent rare words. The document provides examples of using word embeddings and discusses how they can be applied to tasks like generating hashtags and modeling user interests.
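To make the difference between the two architectures concrete, here is a minimal sketch of how training examples could be extracted from a tokenized sentence. The function names `skipgram_pairs` and `cbow_examples` and the `window` parameter are illustrative assumptions, not taken from the slides or from any library.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram view: each (center, context) pair is one training example."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW view: the whole context window is used to predict the center word."""
    examples = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, center))
    return examples


if __name__ == "__main__":
    sentence = "the quick brown fox jumps".split()
    for pair in skipgram_pairs(sentence, window=1):
        print("skip-gram:", pair)
    for example in cbow_examples(sentence, window=1):
        print("cbow:", example)
```

Real implementations add details this sketch leaves out, such as randomly shrinking the window and discarding very frequent words.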

![credits: [x]](https://image.slidesharecdn.com/introductiontowordembeddingswithpython1-150915104728-lva1-app6892/85/Introduction-to-word-embeddings-with-Python-15-320.jpg)

![here comes word2vec: Distributed Representations of Words and Phrases and their Compositionality, Mikolov et al: [paper]](https://image.slidesharecdn.com/introductiontowordembeddingswithpython1-150915104728-lva1-app6892/85/Introduction-to-word-embeddings-with-Python-24-320.jpg)

![word2vec Explained: Deriving Mikolov et al.’s Negative-Sampling Word-Embedding Method, Goldberg and Levy, 2014 [arxiv]](https://image.slidesharecdn.com/introductiontowordembeddingswithpython1-150915104728-lva1-app6892/85/Introduction-to-word-embeddings-with-Python-38-320.jpg)

![deep walk: DeepWalk: Online Learning of Social Representations [link]](https://image.slidesharecdn.com/introductiontowordembeddingswithpython1-150915104728-lva1-app6892/85/Introduction-to-word-embeddings-with-Python-67-320.jpg)

![predicting hashtags. interesting read: #TAGSPACE: Semantic Embeddings from Hashtags [link]](https://image.slidesharecdn.com/introductiontowordembeddingswithpython1-150915104728-lva1-app6892/85/Introduction-to-word-embeddings-with-Python-69-320.jpg)

Recommended

Word Embeddings - Introduction

Christian Perone: The document provides an introduction to word embeddings and two related techniques: Word2Vec and Word Mover's Distance. Word2Vec is an algorithm that produces word embeddings by training a neural network on a large corpus of text, with the goal of producing dense vector representations of words that encode semantic relationships. Word Mover's Distance is a method for calculating the semantic distance between documents based on the embedded word vectors, allowing comparison of documents with different words but similar meanings. The document explains these techniques and provides examples of their applications and properties.

word2vec, LDA, and introducing a new hybrid algorithm: lda2vec

Christopher Moody (Data Day 2016)

Standard natural language processing (NLP) is a messy and difficult affair. It requires teaching a computer about English-specific word ambiguities as well as the hierarchical, sparse nature of words in sentences. At Stitch Fix, word vectors help computers learn from the raw text in customer notes. Our systems need to identify a medical professional when she writes that she 'used to wear scrubs to work', and distill 'taking a trip' into a Fix for vacation clothing. Applied appropriately, word vectors are dramatically more meaningful and more flexible than current techniques and let computers peer into text in a fundamentally new way. I'll try to convince you that word vectors give us a simple and flexible platform for understanding text as I discuss word2vec and LDA and introduce our hybrid algorithm, lda2vec.

word2vec - From theory to practice

hen_drik: My talk at the Stockholm Natural Language Processing Meetup. I explained how word2vec is implemented and how to use it in Python with gensim. When words are represented as points in space, the spatial distance between words describes a similarity between these words. In this talk, I explore how to use this in practice and how to visualize the results (using t-SNE).

Word embeddings in deep learning workshop

iwan_rg: This document provides an introduction to word embeddings in deep learning. It defines word embeddings as vectors of real numbers that represent words, where similar words have similar vector representations. Word embeddings are needed because they allow words to be treated as numeric inputs for machine learning algorithms. The document outlines different types of word embeddings, including frequency-based methods like count vectors and co-occurrence matrices, and prediction-based methods like CBOW and skip-gram models from Word2Vec. It also discusses tools for generating word embeddings like Word2Vec, GloVe, and fastText. Finally, it provides a tutorial on implementing Word2Vec in Python using Gensim.

Word Embedding to Document distances

Ganesh Borle: From Word Embeddings To Document Distances

We present the Word Mover’s Distance (WMD), a novel distance function between text documents. Our work is based on recent results in word embeddings that learn semantically meaningful representations for words from local cooccurrences in sentences. The WMD distance measures the dissimilarity between two text documents as the minimum amount of distance that the embedded words of one document need to “travel” to reach the embedded words of another document. We show that this distance metric can be cast as an instance of the Earth Mover’s Distance, a well studied transportation problem for which several highly efficient solvers have been developed. Our metric has no hyperparameters and is straight-forward to implement. Further, we demonstrate on eight real world document classification data sets, in comparison with seven state-of-the-art baselines, that the WMD metric leads to unprecedented low k-nearest neighbor document classification error rates.
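The exact WMD needs a transportation (Earth Mover's Distance) solver, which is beyond a short example. As a rough illustration of the "travel" idea, the sketch below computes the relaxed lower bound in which every word simply moves all of its weight to the nearest word of the other document. The toy vectors and the names `one_sided_cost` and `relaxed_wmd` are assumptions made for illustration; this is not the full WMD.

```python
import math
from collections import Counter


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def one_sided_cost(doc_a, doc_b, vectors):
    """Move each word of doc_a entirely to its nearest word in doc_b."""
    weights = Counter(doc_a)
    total = sum(weights.values())
    targets = set(doc_b)
    return sum(
        (count / total) * min(euclidean(vectors[w], vectors[t]) for t in targets)
        for w, count in weights.items()
    )


def relaxed_wmd(doc_a, doc_b, vectors):
    """Lower bound on WMD: the larger of the two one-sided costs."""
    return max(one_sided_cost(doc_a, doc_b, vectors),
               one_sided_cost(doc_b, doc_a, vectors))


if __name__ == "__main__":
    # Hypothetical 2-d embeddings, chosen by hand for the example.
    toy = {
        "obama": [0.0, 0.0], "president": [0.0, 1.0],
        "speaks": [3.0, 0.0], "greets": [3.0, 1.0],
    }
    print(relaxed_wmd(["obama", "speaks"], ["president", "greets"], toy))
```

The sketch assumes every word has a vector; a practical version would first drop out-of-vocabulary words and stop words.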

A Simple Introduction to Word Embeddings

Bhaskar Mitra: In information retrieval there is a long history of learning vector representations for words. In recent times, neural word embeddings have gained significant popularity for many natural language processing tasks, such as word analogy and machine translation. The goal of this talk is to introduce basic intuitions behind these simple but elegant models of text representation. We will start our discussion with classic vector space models and then make our way to recently proposed neural word embeddings. We will see how these models can be useful for analogical reasoning as well as applied to many information retrieval tasks.

Word Embeddings, why the hype ?

Hady Elsahar: Continuous representations of words and documents, recently referred to as word embeddings, have demonstrated large advances in many natural language processing tasks.

In this presentation we will provide an introduction to the most common methods of learning these representations, as well as the methods used to build them before the recent advances in deep learning, such as dimensionality reduction on the word co-occurrence matrix.

Moreover, we will present the continuous bag-of-words model (CBOW), one of the most successful models for word embeddings and one of the core models in word2vec, and take a brief look at other models that build representations for other tasks, such as knowledge base embeddings.

Finally, we will motivate the potential of using such embeddings for many tasks that could be of importance for the group, such as semantic similarity, document clustering and retrieval.

Word2Vec

hyunyoung Lee: This is for a seminar in an NLP (Natural Language Processing) lab about what word2vec is and how to embed words in a vector space.

(Kpi summer school 2015) word embeddings and neural language modeling

Serhii Havrylov: https://github.com/sergii-gavrylov/ShowAndTell

https://github.com/sergii-gavrylov/theano-tutorial-AACIMP2015

Yoav Goldberg: Word Embeddings What, How and Whither

MLReview: This document discusses word embeddings and how they work. It begins by explaining how the author became an expert in distributional semantics without realizing it. It then discusses how word2vec works, specifically skip-gram models with negative sampling. The key points are that word2vec is learning word and context vectors such that related words and contexts have similar vectors, and that this is implicitly factorizing the word-context pointwise mutual information matrix. Later sections discuss how hyperparameters are important to word2vec's success and provide critiques of common evaluation tasks like word analogies that don't capture true semantic similarity. The overall message is that word embeddings are fundamentally doing the same thing as older distributional semantic models through matrix factorization.
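For readers unfamiliar with the matrix mentioned here, the following sketch computes pointwise mutual information, PMI(w, c) = log(P(w, c) / (P(w) P(c))), from a list of observed (word, context) pairs. The function name `pmi_table` and the toy pairs are illustrative assumptions. The sketch only builds the matrix entries and does not perform any factorization.

```python
import math
from collections import Counter


def pmi_table(pairs):
    """Map each observed (word, context) pair to its PMI value."""
    pair_counts = Counter(pairs)
    word_counts = Counter(w for w, _ in pairs)
    context_counts = Counter(c for _, c in pairs)
    total = len(pairs)
    table = {}
    for (w, c), n in pair_counts.items():
        # P(w, c) / (P(w) * P(c)) simplifies to n * total / (#w * #c).
        table[(w, c)] = math.log(n * total / (word_counts[w] * context_counts[c]))
    return table


if __name__ == "__main__":
    toy_pairs = [("a", "x"), ("a", "x"), ("b", "y"), ("b", "x")]
    for pair, value in sorted(pmi_table(toy_pairs).items()):
        print(pair, round(value, 4))
```

Pairs that never co-occur have a PMI of minus infinity, which is why count-based methods often clip negative values to zero (positive PMI) before factorizing.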

word embeddings and applications to machine translation and sentiment analysis

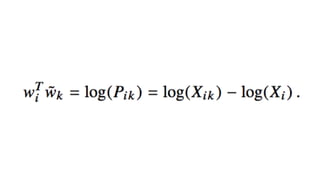

Mostapha Benhenda: This document provides an overview of word embeddings and their applications. It discusses how word embeddings represent words as vectors such that similar words have similar vectors. Applications discussed include machine translation, sentiment analysis, and convolutional neural networks. It also provides an example of the GloVe algorithm for creating word embeddings, which involves building a co-occurrence matrix from text and factorizing the matrix to obtain word vectors.

Tomáš Mikolov - Distributed Representations for NLP

Machine Learning Prague: The document discusses word embedding techniques, specifically Word2vec. It introduces the motivation for distributed word representations and describes the Skip-gram and CBOW architectures. Word2vec produces word vectors that encode linguistic regularities, with simple examples showing words with similar relationships have similar vector offsets. Evaluation shows Word2vec outperforms previous methods, and its word vectors are now widely used in NLP applications.
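The "similar vector offsets" idea can be sketched with a few hand-made vectors. The values in `TOY_VECTORS` are invented for illustration and are not learned embeddings; the helper names `cosine` and `analogy` are likewise assumptions.

```python
import math

# Hypothetical 3-d vectors; dimensions loosely mean (royalty, male, female).
TOY_VECTORS = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "apple": [0.2, 0.2, 0.2],
}


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def analogy(a, b, c, vectors):
    """Answer 'a is to b as c is to ?' using the offset b - a + c."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # The query words themselves are excluded from the candidates.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))


if __name__ == "__main__":
    print(analogy("man", "king", "woman", TOY_VECTORS))
```

With learned embeddings the offset rarely lands exactly on a word, so the nearest neighbour by cosine similarity is taken as the answer.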

word2vec, LDA, and introducing a new hybrid algorithm: lda2vec

Christopher Moody: This document summarizes the lda2vec model, which combines aspects of word2vec and LDA. Word2vec learns word embeddings based on local context, while LDA learns document-level topic mixtures. Lda2vec models words based on both their local context and global document topic mixtures to leverage both approaches. It represents documents as mixtures over sparse topic vectors similar to LDA to maintain interpretability. This allows it to predict words based on local context and global document content.

Using Text Embeddings for Information Retrieval

Bhaskar Mitra: Neural text embeddings provide dense vector representations of words and documents that encode various notions of semantic relatedness. Word2vec models typical similarity by representing words based on neighboring context words, while models like latent semantic analysis encode topical similarity through co-occurrence in documents. Dual embedding spaces can separately model both typical and topical similarities. Recent work has applied text embeddings to tasks like query auto-completion, session modeling, and document ranking, demonstrating their ability to capture semantic relationships between text beyond just words.

Word2Vec

mohammad javad hasani: This document provides an overview of Word2Vec, a neural network model for learning word embeddings developed by researchers led by Tomas Mikolov at Google in 2013. It describes the goal of reconstructing word contexts, different word embedding techniques like one-hot vectors, and the two main Word2Vec models - Continuous Bag of Words (CBOW) and Skip-Gram. These models map words to vectors in a neural network and are trained to predict words from contexts or predict contexts from words. The document also discusses Word2Vec parameters, implementations, and other applications that build upon its approach to word embeddings.

Word2vec algorithm

Andrew Koo: Word2vec works by using documents to train a neural network model to learn word vectors that encode the words' semantic meanings. It trains the model to predict a word's context by learning vector representations of words. The approach described then represents each sentence as the average of its word vectors, builds a similarity matrix between sentences, and scores the sentences with PageRank to identify important summary sentences.
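The summarization pipeline described above can be sketched in a few functions. This is a simplified illustration under stated assumptions, not the deck's implementation: the helper names, the damping factor of 0.85, and the fixed number of power iterations are all choices made for this example.

```python
import math


def mean_vector(words, vectors):
    """Average the vectors of the known words; zeros if none are known."""
    dim = len(next(iter(vectors.values())))
    known = [vectors[w] for w in words if w in vectors]
    if not known:
        return [0.0] * dim
    return [sum(v[k] for v in known) / len(known) for k in range(dim)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity_matrix(sentences, vectors):
    means = [mean_vector(s, vectors) for s in sentences]
    return [[cosine(a, b) for b in means] for a in means]


def pagerank(sim, damping=0.85, iterations=50):
    """Power iteration on the row-normalised similarity graph."""
    n = len(sim)
    rows = []
    for i in range(n):
        # Ignore self-similarity and negative similarities.
        weights = [max(sim[i][j], 0.0) if j != i else 0.0 for j in range(n)]
        total = sum(weights)
        rows.append([w / total for w in weights] if total else [1.0 / n] * n)
    rank = [1.0 / n] * n
    for _ in range(iterations):
        rank = [(1 - damping) / n
                + damping * sum(rank[i] * rows[i][j] for i in range(n))
                for j in range(n)]
    return rank
```

Sentences with the highest scores would be selected for the summary; a sentence that is dissimilar to all the others receives a low score.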

Word2vec slide(lab seminar)

Jinpyo Lee: #Korean

These are presentation slides I put together after reading the word2vec papers.

Some details are omitted because there is still much that I do not understand.

Criticism and feedback are welcome.

Word2vec ultimate beginner

Sungmin Yang: word2vec beginner.

vector space, distributional semantics, word embedding, vector representation for word, word vector representation, sparse and dense representation, vector representation, Google word2vec, tensorflow

Neural Text Embeddings for Information Retrieval (WSDM 2017)

Bhaskar Mitra: The document describes a tutorial on using neural networks for information retrieval. It discusses an agenda for the tutorial that includes fundamentals of IR, word embeddings, using word embeddings for IR, deep neural networks, and applications of neural networks to IR problems. It provides context on the increasing use of neural methods in IR applications and research.

Representation Learning of Vectors of Words and Phrases

Felipe Moraes: A talk about representation learning using word vectors such as Word2Vec and Paragraph Vector. It also introduces neural network language models (NNLMs) and presents some of their applications, such as sentiment analysis and information retrieval.

Word2Vec: Learning of word representations in a vector space - Di Mitri & Her...

Daniele Di Mitri: This document discusses the Word2Vec model for learning word representations. It outlines some limitations of classic NLP techniques, such as treating words as atomic units. Word2Vec uses a neural network model to learn vector representations of words in a way that captures semantic and syntactic relationships. Specifically, it describes the skip-gram and negative sampling techniques used to efficiently train the model on large amounts of unlabeled text data. Applications mentioned include machine translation and dimensionality reduction.

GDG Tbilisi 2017. Word Embedding Libraries Overview: Word2Vec and fastText

rudolf eremyan: This presentation compares different word embedding models and libraries, such as word2vec and fastText, describing their differences and showing their pros and cons.

Word2vec: From intuition to practice using gensim

Edgar Marca: Edgar Marca gives a presentation on word2vec and its applications. He begins by introducing word2vec, a model for learning word embeddings from raw text. Word2vec maps words to vectors in a continuous vector space, where semantically similar words are mapped close together. Next, he discusses how word2vec can be used for applications like understanding Trump supporters' tweets, making restaurant recommendations, and generating song playlists. In closing, he emphasizes that pre-trained word2vec models can be used when data is limited, but results vary based on the specific dataset.

A summary of (Deep) Neural Networks in NLP and Text Mining

君 廖: The document discusses the use of deep neural networks in NLP and text mining. It provides an overview of key developments in deep learning for natural language processing, including word embeddings using Word2Vec, convolutional neural networks for modeling sentences and documents, and applications such as machine translation, relation classification and topic modeling. The document also discusses parameter tuning for deep learning models.

Word2vec and Friends

Bruno Gonçalves: The document discusses word embedding techniques such as word2vec. It explains that word2vec represents words as vectors in a way that preserves semantic meaning, such that words with similar meanings have similar vector representations. It describes how word2vec uses a neural network model to learn vector representations of words from a large corpus by predicting nearby words given a target word. The document provides details on how word2vec is trained using gradient descent to minimize the cross-entropy error. It also discusses extensions like skip-gram, continuous bag-of-words, negative sampling and hierarchical softmax.

Word representations in vector space

Abdullah Khan Zehady

- The document discusses neural word embeddings, which represent words as dense real-valued vectors in a continuous vector space. This allows words with similar meanings to have similar vector representations.

- It describes how neural network language models like skip-gram and CBOW can be used to efficiently learn these word embeddings from unlabeled text data in an unsupervised manner. Techniques like hierarchical softmax and negative sampling help reduce computational complexity.

- The learned word embeddings show meaningful syntactic and semantic relationships between words and allow performing analogy and similarity tasks without any supervision during training.

Tutorial on word2vec

Leiden University: General background and conceptual explanation of word embeddings (word2vec in particular). Mostly aimed at linguists, but also understandable for non-linguists.

Leiden University, 23 March 2018

Skip gram and cbow

hyunyoung Lee: This is a presentation about what skip-gram and CBOW are, given at a seminar of the Natural Language Processing Labs.

- How to build word vectors using skip-gram and CBOW.

Lda2vec text by the bay 2016 with notes

Christopher Moody: lda2vec with presenter notes

Without notes:

http://www.slideshare.net/ChristopherMoody3/lda2vec-text-by-the-bay-2016

Word embeddings

Shruti kar: Word embeddings are a technique for converting words into vectors of numbers so that they can be processed by machine learning algorithms. Words with similar meanings are mapped to similar vectors in the vector space. There are two main types of word embedding models: count-based models that use co-occurrence statistics, and prediction-based models like CBOW and skip-gram neural networks that learn embeddings by predicting nearby words. Word embeddings allow words with similar contexts to have similar vector representations, and have applications such as document representation.

Similar to Introduction to word embeddings with Python (20)

Paper dissected glove_ global vectors for word representation_ explained _ ...

Nikhil Jaiswal: This document summarizes and explains the GloVe model for generating word embeddings. GloVe aims to capture word meaning in vector space while taking advantage of global word co-occurrence counts. Unlike word2vec, GloVe learns embeddings based on a co-occurrence matrix rather than streaming sentences. It trains vectors so their differences predict co-occurrence ratios. The document outlines the key steps in building GloVe, including data preparation, defining the prediction task, deriving the GloVe equation, and comparisons to word2vec.
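As a rough sketch of the data preparation and objective, the code below builds distance-weighted co-occurrence counts and evaluates the weighted squared error that GloVe minimizes for a single word pair. The 1/d distance weighting and the defaults `x_max=100` and `alpha=0.75` follow the GloVe paper; the function names and the toy usage are assumptions for illustration, and no training loop is included.

```python
import math
from collections import defaultdict


def cooccurrence(tokens, window=2):
    """Symmetric co-occurrence counts; a pair d tokens apart adds 1/d."""
    counts = defaultdict(float)
    for i, word in enumerate(tokens):
        for d in range(1, window + 1):
            j = i + d
            if j < len(tokens):
                counts[(word, tokens[j])] += 1.0 / d
                counts[(tokens[j], word)] += 1.0 / d
    return dict(counts)


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Down-weights rare pairs and caps the influence of frequent ones."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_pair_loss(w, w_ctx, b, b_ctx, x):
    """Weighted squared error between w . w_ctx + biases and log(x)."""
    dot = sum(a * c for a, c in zip(w, w_ctx))
    return glove_weight(x) * (dot + b + b_ctx - math.log(x)) ** 2


if __name__ == "__main__":
    counts = cooccurrence("the cat sat on the mat".split(), window=2)
    print(counts[("the", "cat")], counts[("cat", "on")])
```

Summing `glove_pair_loss` over all pairs with a non-zero count gives the full objective, which is then minimized with respect to the vectors and biases.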

Vectorization In NLP.pptx

Chode Amarnath: Vectorization is the process of converting words into numerical representations. Common techniques include bag-of-words which counts word frequencies, and TF-IDF which weights words based on frequency and importance. Word embedding techniques like Word2Vec and GloVe generate vector representations of words that encode semantic and syntactic relationships. Word2Vec uses the CBOW and Skip-gram models to predict words from contexts to learn embeddings, while GloVe uses global word co-occurrence statistics from a corpus. These pre-trained word embeddings can then be used for downstream NLP tasks.
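A minimal sketch of the two count-based techniques mentioned above follows. It assumes whitespace tokenization and the plain variant idf = log(N / df); libraries often use smoothed variants, so their numbers will differ. The function names are illustrative.

```python
import math
from collections import Counter


def bag_of_words(doc):
    """Count how often each lower-cased token appears in a document."""
    return Counter(doc.lower().split())


def tf_idf(docs):
    """Return one {term: weight} dict per document."""
    bags = [bag_of_words(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for bag in bags for term in bag)
    weighted = []
    for bag in bags:
        total = sum(bag.values())
        weighted.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in bag.items()
        })
    return weighted


if __name__ == "__main__":
    corpus = ["the cat sat", "the dog sat", "the cat ran"]
    for weights in tf_idf(corpus):
        print(weights)
```

A term that occurs in every document, such as "the" in this toy corpus, receives a weight of zero because log(N / df) is zero.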

Query Understanding

Query UnderstandingEoin Hurrell, PhD Talk given at the 6th Irish NLP Meetup on query understanding using conceptual slices and word embeddings.

https://www.meetup.com/NLP-Dublin/events/237998517/

Word_Embeddings.pptx

Word_Embeddings.pptxGowrySailaja The document discusses word embeddings, which learn vector representations of words from large corpora of text. It describes two popular methods for learning word embeddings: continuous bag-of-words (CBOW) and skip-gram. CBOW predicts a word based on surrounding context words, while skip-gram predicts surrounding words from the target word. The document also discusses techniques like subsampling frequent words and negative sampling that improve the training of word embeddings on large datasets. Finally, it outlines several applications of word embeddings, such as multi-task learning across languages and embedding images with text.

Lda and it's applications

Lda and it's applicationsBabu Priyavrat LDA (latent Dirichlet allocation) is a probabilistic model for topic modeling that represents documents as mixtures of topics and topics as mixtures of words. It uses the Dirichlet distribution to model the probability of topics occurring in documents and words occurring in topics. LDA can be represented as a Bayesian network. It has been used for applications like identifying topics in sentences and documents. Python packages like NLTK, Gensim, and Stopwords can be used for preprocessing text and building LDA models.

Michael Alcorn, Sr. Software Engineer, Red Hat Inc. at MLconf SF 2017

Michael Alcorn, Sr. Software Engineer, Red Hat Inc. at MLconf SF 2017MLconf This document provides an overview of representation learning techniques used at Red Hat, including word2vec, doc2vec, url2vec, and customer2vec. Word2vec is used to learn word embeddings from text, while doc2vec extends it to learn embeddings for documents. Url2vec and customer2vec apply the same technique to learn embeddings for URLs and customer accounts based on browsing behavior. These embeddings can be used for tasks like search, troubleshooting, and data-driven customer segmentation. Duplicate detection is another application, where title and content embeddings are compared. Representation learning is also explored for baseball players to model player value.

Designing, Visualizing and Understanding Deep Neural Networks

Designing, Visualizing and Understanding Deep Neural Networksconnectbeubax The document discusses different approaches for representing the semantics and meaning of text, including propositional models that represent sentences as logical formulas and vector-based models that embed texts in a high-dimensional semantic space. It describes word embedding models like Word2vec that learn vector representations of words based on their contexts, and how these embeddings capture linguistic regularities and semantic relationships between words. The document also discusses how composition operations can be performed in the vector space to model the meanings of multi-word expressions.

NLP_guest_lecture.pdf

NLP_guest_lecture.pdfSoha82 This document provides an overview of natural language processing (NLP). It discusses how NLP systems have achieved shallow matching to understand language but still have fundamental limitations in deep understanding that requires context and linguistic structure. It also describes technologies like speech recognition, text-to-speech, question answering and machine translation. It notes that while text data may seem superficial, language is complex with many levels of structure and meaning. Corpus-based statistical methods are presented as one approach in NLP.

Subword tokenizers

Subword tokenizersHa Loc Do A brief literature review on language-agnostic tokenization, covering state of the art algorithms: BPE and Unigram model. This slide is part of a weekly sharing activity.

[Emnlp] what is glo ve part ii - towards data science![[Emnlp] what is glo ve part ii - towards data science](https://cdn.slidesharecdn.com/ss_thumbnails/emnlpwhatisglovepartii-towardsdatascience-200228052050-thumbnail.jpg?width=560&fit=bounds)

![[Emnlp] what is glo ve part ii - towards data science](https://cdn.slidesharecdn.com/ss_thumbnails/emnlpwhatisglovepartii-towardsdatascience-200228052050-thumbnail.jpg?width=560&fit=bounds)

![[Emnlp] what is glo ve part ii - towards data science](https://cdn.slidesharecdn.com/ss_thumbnails/emnlpwhatisglovepartii-towardsdatascience-200228052050-thumbnail.jpg?width=560&fit=bounds)

![[Emnlp] what is glo ve part ii - towards data science](https://cdn.slidesharecdn.com/ss_thumbnails/emnlpwhatisglovepartii-towardsdatascience-200228052050-thumbnail.jpg?width=560&fit=bounds)

[Emnlp] what is glo ve part ii - towards data scienceNikhil Jaiswal GloVe is a new model for learning word embeddings from co-occurrence matrices that combines elements of global matrix factorization and local context window methods. It trains on the nonzero elements in a word-word co-occurrence matrix rather than the entire sparse matrix or individual context windows. This allows it to efficiently leverage statistical information from the corpus. The model produces a vector space with meaningful structure, as shown by its performance of 75% on a word analogy task. It outperforms related models on similarity tasks and named entity recognition. The full paper describes GloVe's global log-bilinear regression model and how it addresses drawbacks of previous models to encode linear directions of meaning in the vector space.

Explanations in Dialogue Systems through Uncertain RDF Knowledge Bases

Explanations in Dialogue Systems through Uncertain RDF Knowledge BasesDaniel Sonntag We implemented a generic dialogue shell that can be configured for and applied to domain-specific dialogue applications. The dialogue system works robustly for a new domain when the application backend can automatically infer previously unknown knowledge (facts) and provide explanations for the inference steps involved. For this purpose, we employ URDF, a query engine for uncertain and potentially inconsistent RDF knowledge bases. URDF supports rule-based, first-order predicate logic as used in OWL-Lite and OWL-DL, with simple and effective top-down reasoning capabilities. This mechanism also generates explanation graphs. These graphs can then be displayed in the GUI of the dialogue shell and help the user understand the underlying reasoning processes. We believe that proper explanations are a main factor for increasing the level of user trust in end-to-end human-computer interaction systems.

Semantic Web: From Representations to Applications

Semantic Web: From Representations to ApplicationsGuus Schreiber This document discusses semantic web representations and applications. It provides an overview of the W3C Web Ontology Working Group and Semantic Web Best Practices and Deployment Working Group, including their goals and key issues addressed. Examples of semantic web applications are also described, such as using ontologies to integrate information from heterogeneous cultural heritage sources.

Language Modelling in Natural Language Processing-Part II.pdf

Language Modelling in Natural Language Processing-Part II.pdfDeptii Chaudhari This presenation explains the concepts related to Word2Vec models and Vector Sematics.

SNLI_presentation_2

SNLI_presentation_2Viral Gupta This document discusses natural language inference and summarizes the key points as follows:

1. The document describes the problem of natural language inference, which involves classifying the relationship between a premise and hypothesis sentence as entailment, contradiction, or neutral. This is an important problem in natural language processing.

2. The SNLI dataset is introduced as a collection of half a million natural language inference problems used to train and evaluate models.

3. Several approaches for solving the problem are discussed, including using word embeddings, LSTMs, CNNs, and traditional bag-of-words models. Results show LSTMs and CNNs achieve the best performance.

New word analogy corpus

New word analogy corpusLukáš Svoboda The document introduces a new word analogy corpus for the Czech language to evaluate word embedding models. It contains over 22,000 semantic and syntactic analogy questions across various categories. The authors experiment with Word2Vec (CBOW and Skip-gram) and GloVe models on the Czech Wikipedia corpus. Their results show that CBOW generally performs best, with accuracy improving as vector dimension and training epochs increase. Performance is better on syntactic tasks than semantic ones. The new Czech analogy corpus allows further exploration of how word embeddings represent semantics and syntax for highly inflected languages.

AINL 2016: Nikolenko

AINL 2016: NikolenkoLidia Pivovarova This document provides an overview of deep learning techniques for natural language processing. It begins with an introduction to distributed word representations like word2vec and GloVe. It then discusses methods for generating sentence embeddings, including paragraph vectors and recursive neural networks. Character-level models are presented as an alternative to word embeddings that can handle morphology and out-of-vocabulary words. Finally, some general deep learning approaches for NLP tasks like text generation and word sense disambiguation are briefly outlined.

Introduction to word embeddings with Python

- 1. Introduction to word embeddings. Pavel Kalaidin, @facultyofwonder. Moscow Data Fest, September 12th, 2015

- 5. лойс

- 6. "good stuff, лойс"; "лойс for the song"; "on principle, I won't give a лойс"; "mutual лойсы"; "лойс, if you agree". What is the meaning of лойс?

- 8. кек

- 9. "кек, or what?"; "кек)))))))"; "well, you're a кек". What is the meaning of кек?

- 12. simple and flexible platform for understanding text and probably not messing up

- 13. one-hot encoding? 1 0 0 0 0 0 0 0 0 0 ... 0 (a single 1, zeros everywhere else)
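
A minimal sketch of why one-hot vectors are a weak starting point, assuming a tiny hypothetical vocabulary (the words below are illustrative, not from the talk). Every pair of distinct words has a dot product of zero, so the encoding carries no notion of similarity.

```python
def one_hot(word, vocab):
    """Return a list of len(vocab) zeros with a single 1 at the word's index."""
    index = {w: i for i, w in enumerate(vocab)}
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec


def dot(u, v):
    """Plain dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical toy vocabulary, for illustration only.
vocab = ["cat", "dog", "car", "truck"]
cat = one_hot("cat", vocab)
dog = one_hot("dog", vocab)

print(cat)            # one position per vocabulary word
print(dot(cat, dog))  # distinct words never overlap
print(dot(cat, cat))  # a word only matches itself
```

The vector length grows with the vocabulary, which is the "big, sparse" problem the following slides return to.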

- 14. co-occurrence matrix (recall: word-document co-occurrence matrix for LSA)

- 15. credits: [x]

- 16. from entire document to window (length 5-10)
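
Moving from whole documents to a sliding window can be sketched as below. This is an illustrative counter, not the speaker's code; the function name `cooccurrence_counts` and the toy sentence are assumptions, and a small window is used so the output stays readable.

```python
from collections import Counter


def cooccurrence_counts(tokens, window=5):
    """Count how often each (word, context_word) pair falls within `window` positions."""
    counts = Counter()
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                counts[(word, tokens[j])] += 1
    return counts


# Hypothetical toy corpus.
tokens = "the cat sat on the mat".split()
counts = cooccurrence_counts(tokens, window=2)

print(counts[("cat", "sat")])
print(counts[("cat", "mat")])  # too far apart for window=2
```

Because the window is symmetric, the resulting counts are symmetric too: the count for `(a, b)` equals the count for `(b, a)`.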

- 17. still seems suboptimal -> big, sparse, etc.

- 18. lower dimensions, we want dense vectors (say, 25-1000)

- 19. How?

- 22. lots of memory?

- 23. idea: directly learn low-dimensional vectors

- 24. here comes word2vec: Distributed Representations of Words and Phrases and their Compositionality, Mikolov et al. [paper]

- 25. idea: instead of capturing co-occurrence counts, predict surrounding words

- 26. Two models: CBOW (predicting the word given its context) and skip-gram (predicting the context given a word). Explained in great detail here, so we'll skip it for now. Also see: word2vec Parameter Learning Explained, Rong [paper]
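
The difference between the two models is easiest to see in the training examples each one consumes. The sketch below only builds those examples (it does not train anything); the helper names and the toy sentence are assumptions made for illustration.

```python
def context_of(tokens, i, window):
    """Words within `window` positions of index i, excluding position i itself."""
    lo = max(0, i - window)
    hi = min(len(tokens), i + window + 1)
    return [tokens[j] for j in range(lo, hi) if j != i]


def skipgram_pairs(tokens, window=2):
    """(center, context_word) pairs: skip-gram predicts each context word from the center."""
    return [(center, ctx)
            for i, center in enumerate(tokens)
            for ctx in context_of(tokens, i, window)]


def cbow_examples(tokens, window=2):
    """(context_words, center) examples: CBOW predicts the center from its whole context."""
    return [(context_of(tokens, i, window), center)
            for i, center in enumerate(tokens)]


# Hypothetical toy sentence.
tokens = "we want dense vectors".split()

for pair in skipgram_pairs(tokens, window=1):
    print(pair)
for example in cbow_examples(tokens, window=1):
    print(example)
```

Skip-gram produces one example per (center, context word) pair, while CBOW produces one example per position.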

- 28. CBOW: several times faster than skip-gram, slightly better accuracy for frequent words. Skip-gram: works well with small amounts of data, represents rare words and phrases well.

- 29. Examples?

- 36. $W_{\text{woman}} - W_{\text{man}} = W_{\text{queen}} - W_{\text{king}}$ (classic example)
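
The classic example can be reproduced with vector arithmetic plus a nearest-neighbour search. The vectors below are hand-made two-dimensional toys chosen so the relation holds exactly; they are an assumption for illustration, not learned embeddings, and the `analogy` helper is hypothetical.

```python
import math


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def analogy(a, b, c, vectors):
    """Return the word closest to vec(b) - vec(a) + vec(c), excluding the query words."""
    target = add(sub(vectors[b], vectors[a]), vectors[c])
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


# Hand-made toy vectors (not learned): first axis ~ royalty, second axis ~ gender.
vectors = {
    "king": [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man": [0.0, 1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}

print(analogy("man", "king", "woman", vectors))
```

In gensim, the library the slides name, a comparable query on trained vectors is available through `most_similar(positive=[...], negative=[...])`.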

- 38. word2vec Explained: Deriving Mikolov et al.’s Negative-Sampling Word-Embedding Method, Goldberg and Levy, 2014 [arxiv]

- 39. all done with gensim: github.com/piskvorky/gensim/

- 40. ...failing to take advantage of the vast amount of repetition in the data

- 41. so back to co-occurrences

- 42. GloVe, for Global Vectors. Pennington et al., 2014: nlp.stanford.edu/pubs/glove.pdf

- 43. Ratios seem to cancel noise

- 44. The gist: model ratios with vectors
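
The ratio idea can be written out as follows. This is a sketch in the notation of the GloVe paper (Pennington et al., 2014), not a transcription of the slides: $X_{ik}$ counts how often word $k$ appears in the context of word $i$, $w_i$ is a word vector, and $\tilde{w}_k$ is a context vector.

```latex
\begin{aligned}
P_{ik} &= \frac{X_{ik}}{X_i}, \qquad X_i = \sum_k X_{ik} \\
F(w_i, w_j, \tilde{w}_k) &= \frac{P_{ik}}{P_{jk}} \\
F\big((w_i - w_j)^\top \tilde{w}_k\big) &= \frac{P_{ik}}{P_{jk}} \\
w_i^\top \tilde{w}_k &= \log P_{ik} = \log X_{ik} - \log X_i \qquad (\text{taking } F = \exp)
\end{aligned}
```

The second line asks a function of three vectors to reproduce the ratio, the third restricts it to depend only on the difference $w_i - w_j$ projected onto the context vector, and the last follows from choosing $F = \exp$.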

- 45. The model

- 49. recall:

- 51. Restoring symmetry, part 2

- 52. Least squares problem it is now
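
For reference, the least squares problem in the GloVe paper is a weighted regression on log co-occurrence counts. In the sketch below, $X_{ij}$ is the co-occurrence count, $w_i$ and $\tilde{w}_j$ are word and context vectors, $b_i$ and $\tilde{b}_j$ are their biases, and $V$ is the vocabulary size. The values $x_{\max} = 100$ and $\alpha = 3/4$ are those reported in the paper, not taken from these slides.

```latex
J = \sum_{i,j=1}^{V} f(X_{ij}) \left( w_i^\top \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij} \right)^2,
\qquad
f(x) =
\begin{cases}
(x / x_{\max})^{\alpha} & \text{if } x < x_{\max} \\
1 & \text{otherwise}
\end{cases}
```

Since $f(0) = 0$, only the nonzero entries of the co-occurrence matrix contribute to the sum.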

- 53. SGD->AdaGrad
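
A minimal sketch of the AdaGrad update, which scales each coordinate's step by the accumulated squared gradients for that coordinate. The toy objective $(x - 3)^2$, the learning rate, and the helper name `adagrad_step` are assumptions chosen to keep the example self-contained; this is not the GloVe training loop.

```python
import math


def adagrad_step(params, grads, cache, lr=0.05, eps=1e-8):
    """Update params in place; cache accumulates squared gradients per coordinate."""
    for i, g in enumerate(grads):
        cache[i] += g * g
        params[i] -= lr * g / (math.sqrt(cache[i]) + eps)


# Toy problem: minimise (x - 3)^2 starting from x = 0.
params, cache = [0.0], [0.0]
history = []
for _ in range(100):
    grads = [2.0 * (params[0] - 3.0)]  # derivative of (x - 3)^2
    adagrad_step(params, grads, cache, lr=0.5)
    history.append(params[0])

print(history[0], history[-1])
```

Coordinates that receive large or frequent gradients get smaller effective learning rates over time, while rarely updated coordinates keep larger ones.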

- 54. ok, Python code

- 56. two sets of vectors (input and context) + bias: average/sum/drop
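
One reading of "average/sum/drop" is that the two learned vector sets are merged into a single embedding per word by averaging them, summing them, or dropping the context set. The sketch below follows that reading; the `combine` helper and the toy vectors are assumptions. The GloVe paper itself reports using the sum.

```python
def combine(word_vecs, context_vecs, how="sum"):
    """Merge input and context vectors into one embedding per word."""
    combined = {}
    for word, w in word_vecs.items():
        c = context_vecs[word]
        if how == "sum":
            combined[word] = [a + b for a, b in zip(w, c)]
        elif how == "average":
            combined[word] = [(a + b) / 2 for a, b in zip(w, c)]
        elif how == "drop":
            combined[word] = list(w)  # keep only the input vectors
        else:
            raise ValueError(f"unknown strategy: {how}")
    return combined


# Hypothetical toy vectors, for illustration only.
word_vecs = {"cat": [1.0, 2.0], "dog": [0.0, 4.0]}
context_vecs = {"cat": [3.0, 0.0], "dog": [2.0, 2.0]}

print(combine(word_vecs, context_vecs, how="sum"))
print(combine(word_vecs, context_vecs, how="average"))
```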

- 57. complexity $|V|^2$

- 65. Abusing models

- 67. deep walk: DeepWalk: Online Learning of Social Representations [link]

- 68. user interests. Paragraph vectors: cs.stanford.edu/~quocle/paragraph_vector.pdf

- 69. predicting hashtags. Interesting read: #TAGSPACE: Semantic Embeddings from Hashtags [link]

- 70. RusVectōrēs: distributional semantic models for Russian: ling.go.mail.ru/dsm/en/

- 72. corpus matters

- 73. building block for bigger models ╰(*´︶`*)╯

- 74. </slides>