
ISTQB: Test Automation Engineer

- 1. ISTQB Certified Tester Advanced Level Syllabus: Test Automation Engineer, summarized in about 10 minutes (Sadaaki Emura)

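- 2. Purpose of Test Automation
  - WHY automate?
    - Improve test efficiency
    - Increase test coverage
    - Reduce test cost
    - Enable performance testing
    - Speed up test execution
    - Run test (release) cycles more often
    - Interestingly, "improve quality" is not stated directly as a goal!
  - WHERE is automation suitable?
    - Building the test environment (creating test data, etc.)
    - Executing tests
    - Comparing test results with expected values (validation)
    - Reporting the results
    - Automating test environment setup saves more time than you might expect!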
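
The four WHERE activities above can be sketched as a tiny pipeline. This is a minimal illustration, not something taken from the syllabus. The in-memory "database", the `transfer` function standing in for the system under test, and all other names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    name: str
    expected: int
    actual: int

    @property
    def passed(self) -> bool:
        return self.expected == self.actual


def set_up_test_data() -> dict[str, int]:
    # 1. Build the test environment: hypothetical in-memory account balances
    return {"alice": 100, "bob": 50}


def transfer(db: dict[str, int], src: str, dst: str, amount: int) -> None:
    # Hypothetical system under test: moves money between two accounts
    db[src] -= amount
    db[dst] += amount


def run_tests() -> list[Result]:
    db = set_up_test_data()
    # 2. Execute the test
    transfer(db, "alice", "bob", 30)
    # 3. Compare actual values with expected values (validation)
    return [
        Result("alice balance", 70, db["alice"]),
        Result("bob balance", 80, db["bob"]),
    ]


def report(results: list[Result]) -> str:
    # 4. Report the results, one line per check plus a summary line
    lines = [
        f"{'PASS' if r.passed else 'FAIL'} {r.name}: expected={r.expected} actual={r.actual}"
        for r in results
    ]
    passed = sum(r.passed for r in results)
    lines.append(f"{passed}/{len(results)} passed")
    return "\n".join(lines)


if __name__ == "__main__":
    print(report(run_tests()))
```

Every step here is a plain function. In a real project, step 1 is often the one worth automating first, as the slide notes.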

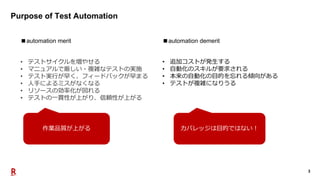

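- 3. Purpose of Test Automation
  - Merits of automation (the quality of the work improves)
    - More test cycles can be run
    - Tests that are hard or complex to run manually become feasible
    - Tests run faster, so feedback arrives sooner
    - Human execution errors are eliminated
    - Resources are used more efficiently
    - Tests are more consistent, which makes them more reliable
  - Demerits of automation
    - Additional costs are incurred
    - Automation skills are required
    - Teams tend to lose sight of the original purpose of automation (coverage is not the goal!)
    - Tests can become complex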

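- 4. Success Factors in Test Automation (things to keep in mind when introducing test automation)
  - The automation approach differs by test level (unit, integration, ...).
  - The automation architecture differs by system architecture (interfaces, development language, ...).
  - The automation setup differs by system complexity (including external integrations).
  - Figure (V-model): Unit Test, Integration Test, GUI; example tools: PHPUnit, Selenium, Appium, SoapUI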

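- 5. Design for Testability and Automation
  - What is testability?
    - Observability: the system provides interfaces from which its state can be determined, so a machine can judge pass/fail.
    - Controllability: the system provides interfaces (APIs, objects) through which it can be operated, so a machine can drive it.
    - Clearly defined architecture: those interfaces can be uniquely identified, so a machine can locate what to operate.
  - Figure: a GUI with Button A, Button B, Button C and the message "Process is success!", annotated: easy to validate, operable, objects uniquely identifiable.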
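
Here is a minimal sketch of what the three testability properties give a machine. It uses a hypothetical `DemoApp` class in place of a real GUI:

- unique element ids stand for the clearly defined architecture;
- a `click` method provides controllability;
- a `status_text` method provides observability.

```python
class DemoApp:
    """Hypothetical application under test that exposes testability interfaces."""

    def __init__(self) -> None:
        self._status = ""
        # Clearly defined architecture: each operable element has a unique id
        self._actions = {
            "button-a": lambda: self._set_status("Process is success!"),
            "button-b": lambda: self._set_status("Process B done"),
            "button-c": lambda: self._set_status(""),
        }

    def _set_status(self, text: str) -> None:
        self._status = text

    # Controllability: a machine can operate the system through this interface
    def click(self, element_id: str) -> None:
        try:
            action = self._actions[element_id]
        except KeyError:
            raise LookupError(f"no element with id {element_id!r}") from None
        action()

    # Observability: a machine can read the system state through this interface
    def status_text(self) -> str:
        return self._status


def check_button_a() -> bool:
    # An automated check: operate via the id, then judge pass/fail from the observed state
    app = DemoApp()
    app.click("button-a")
    return app.status_text() == "Process is success!"


if __name__ == "__main__":
    print("PASS" if check_button_a() else "FAIL")
```

The same idea applies with a real GUI tool. In Selenium, for example, a stable `id` attribute lets a script locate an element with `driver.find_element(By.ID, "button-a")`; the id value here is hypothetical.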

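- 6. The Generic Test Automation Architecture: structure of a test automation architecture
  - Test Generation Layer (manual design, test models)
    - Design test cases manually
    - Generate or acquire test data
    - Generate test cases automatically from models
  - Test Definition Layer (test conditions, test cases, test procedures, test data, libraries)
    - Define test cases, test data, and procedures
    - Define the test scripts that execute the test cases
    - Use test libraries (keyword-driven)
  - Test Execution Layer (test execution, test logging, test reporting)
    - Execute test cases automatically
    - Log test case execution
    - Report test results
  - Test Adaptation Layer (GUI, API, protocols, database, simulator)
    - Control the test harness
    - Monitor the system
    - Simulate or emulate the system
  - Note: most automation is designed and implemented starting from the lower layers, but the overall picture must still be taken into account.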
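
Here is one way to picture the four gTAA layers in code, as a hedged sketch. The layer boundaries follow the slide, but every class and function name (`Adapter`, `SimulatorAdapter`, `CaseSpec`, `generate_cases`, `Executor`) is hypothetical. The "model" is just the rule that `double(x)` must equal `2 * x`.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


# Test Adaptation Layer: hides how the SUT is reached (GUI, API, DB, simulator, ...)
class Adapter(ABC):
    @abstractmethod
    def call(self, operation: str, arg: int) -> int:
        ...


class SimulatorAdapter(Adapter):
    """Hypothetical simulator standing in for the real SUT."""

    def call(self, operation: str, arg: int) -> int:
        if operation == "double":
            return arg * 2
        raise ValueError(f"unknown operation {operation!r}")


# Test Definition Layer: test cases are plain data
@dataclass
class CaseSpec:
    name: str
    operation: str
    arg: int
    expected: int


# Test Generation Layer: derive test cases from a (trivial) model
def generate_cases(inputs: list[int]) -> list[CaseSpec]:
    # Model assumption: "double" must return 2 * x for every input x
    return [CaseSpec(f"double({x})", "double", x, 2 * x) for x in inputs]


# Test Execution Layer: run the cases, log each one, and summarize
@dataclass
class Executor:
    adapter: Adapter
    log: list[str] = field(default_factory=list)

    def run(self, cases: list[CaseSpec]) -> dict[str, int]:
        summary = {"passed": 0, "failed": 0}
        for case in cases:
            actual = self.adapter.call(case.operation, case.arg)
            ok = actual == case.expected
            summary["passed" if ok else "failed"] += 1
            verdict = "PASS" if ok else "FAIL"
            self.log.append(f"{case.name}: expected={case.expected} actual={actual} {verdict}")
        return summary


if __name__ == "__main__":
    executor = Executor(SimulatorAdapter())
    print(executor.run(generate_cases([0, 1, -3])))
    print("\n".join(executor.log))
```

The execution layer only talks to the `Adapter` interface. Replacing the simulator with a GUI or API adapter would therefore leave the upper layers unchanged, which is the point of separating the layers.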

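- 7. The Generic Test Automation Architecture: activities for designing a test automation architecture
  1. Clarify the goals (requirements) of automation → which test processes, levels, and types?
  2. Compare automation approaches
  3. Decide the level of abstraction (keyword-driven) → consider maintainability, extensibility, etc.
  4. Decide how to connect to the system → clarify the triggers that drive the system
  5. Consider the test environment
  6. Estimate the automation effort
  7. Identify what is needed to operate the automation → usability, reporting, feedback features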

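- 8. Approaches for Automating Test Cases: main automation scripting approaches

| Method | Concept | Pros | Cons |
|---|---|---|---|
| Capture & playback | Records the steps a user performs and replays them (e.g. Selenium IDE) | Usable for GUIs and APIs; easy | Low maintainability; cannot be implemented until the system exists |
| Linear scripting | Similar to capture & playback: the user performs the operations and the steps are output as a script (e.g. Ranorex) | Easy; no programming skill needed (though it helps) | Scripts grow with every test procedure; scripts are hard to read without programming skills |
| Structured scripting | Linear scripting plus libraries | Libraries of common functions improve maintainability | High initial cost; programming skills required |
| Data-driven | Separates data from processing; scripts are reused with data as input | Effective for testing many input variations | Test data must additionally be managed |
| Keyword-driven | Scripts that non-automation engineers can understand; processing is hidden inside keywords | Better maintainability; non-engineers can work with the tests | High scripting effort to implement the keywords |
| Process-driven | A higher-level form of keyword-driven scripting | Test cases can be defined to follow workflows | The processes under test can become harder to understand |
| Model-driven | Tests are defined by models abstracted from the scripts | Lets you focus on the essence of testing | Modeling skills are required |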
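
The data-driven and keyword-driven rows of the table can be combined in a small sketch. The steps stay fixed while each data row drives one run. The following are invented for illustration:

- the keyword names;
- the shopping-cart context;
- the `${name}` placeholder syntax, which loosely resembles Robot Framework variables.

```python
from typing import Callable


# Keyword library: each keyword hides its implementation from the test author
def open_cart(ctx: dict) -> None:
    ctx["cart"] = {}


def add_item(ctx: dict, name: str, qty: str) -> None:
    ctx["cart"][name] = ctx["cart"].get(name, 0) + int(qty)


def total_items_should_be(ctx: dict, expected: str) -> None:
    actual = sum(ctx["cart"].values())
    if actual != int(expected):
        raise AssertionError(f"expected {expected} items, got {actual}")


KEYWORDS: dict[str, Callable[..., None]] = {
    "Open Cart": open_cart,
    "Add Item": add_item,
    "Total Items Should Be": total_items_should_be,
}

# Keyword-driven test: readable steps; ${...} arguments are filled from a data row
TEST_STEPS = [
    ("Open Cart",),
    ("Add Item", "${item}", "${qty}"),
    ("Add Item", "${item}", "1"),
    ("Total Items Should Be", "${total}"),
]

# Data-driven: the same steps run once per row
TEST_DATA = [
    {"item": "apple", "qty": "2", "total": "3"},
    {"item": "pear", "qty": "0", "total": "1"},
    {"item": "plum", "qty": "5", "total": "6"},
]


def substitute(arg: str, row: dict[str, str]) -> str:
    # Replace a ${name} placeholder with the row's value; other args pass through
    if arg.startswith("${") and arg.endswith("}"):
        return row[arg[2:-1]]
    return arg


def run_keyword_test(steps: list[tuple], row: dict[str, str]) -> None:
    ctx: dict = {}
    for keyword, *args in steps:
        KEYWORDS[keyword](ctx, *(substitute(a, row) for a in args))


def run_data_driven(steps: list[tuple], data: list[dict[str, str]]) -> list[bool]:
    results = []
    for row in data:
        try:
            run_keyword_test(steps, row)
            results.append(True)
        except AssertionError:
            results.append(False)
    return results


if __name__ == "__main__":
    print(run_data_driven(TEST_STEPS, TEST_DATA))
```

Adding a new variation means adding a row to `TEST_DATA`, without touching the steps. Adding a new operation means writing one keyword, which matches the "high keyword scripting effort" con in the table.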

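- 9. Test Automation Solution Development: test automation activities are equivalent to a development project, so consider the following:
  - Test automation has its own SDLC.
  - Keep compatibility with the system under test (SUT) → e.g. compatibility with development tools.
  - Stay in sync with the SUT version → when the SUT changes, update the automation too.
  - Plan for reuse of the test automation → design for maintainability.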
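
For "stay in sync with the SUT version", one lightweight option is to have the suite declare which SUT versions it targets. The suite then stops before running against anything else. This sketch assumes the SUT reports a dotted version string and that matching on major.minor is the policy. `SUPPORTED_SUT_VERSIONS` and the helper names are hypothetical.

```python
SUPPORTED_SUT_VERSIONS = ("2.3", "2.4")  # hypothetical versions this suite was written for


class IncompatibleSUTError(RuntimeError):
    """Raised when the suite is run against a SUT version it does not target."""


def major_minor(version: str) -> tuple[int, int]:
    parts = [int(p) for p in version.split(".")]
    if len(parts) < 2:
        raise ValueError(f"expected at least major.minor, got {version!r}")
    return parts[0], parts[1]


def check_compatibility(sut_version: str, supported: tuple[str, ...] = SUPPORTED_SUT_VERSIONS) -> bool:
    # Policy assumption: the suite matches the SUT if major.minor is one it supports
    return major_minor(sut_version) in {major_minor(v) for v in supported}


def ensure_compatible(sut_version: str, supported: tuple[str, ...] = SUPPORTED_SUT_VERSIONS) -> None:
    # Fail fast before running any test, so failures are not blamed on the SUT
    if not check_compatibility(sut_version, supported):
        raise IncompatibleSUTError(
            f"suite supports {', '.join(supported)}, but the SUT reports {sut_version}"
        )


if __name__ == "__main__":
    ensure_compatible("2.4.1")  # passes silently
    print(check_compatibility("2.5.0"))
```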

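- 10. Selection of Test Automation Approach and Planning of Deployment/Rollout: typical flow when introducing automation for the first time (pilot → deploy)
  - Select a project to automate (neither too large nor too small)
  - Implement the automation
  - Measure the effect of the automation
  - Roll out to other projects
  - Extend to (development and release) processes, guidelines, and training
  - Make automation metrics visible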

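- 11. Risk Assessment and Mitigation Strategies: risks arising from introducing automation, and how to address them
  - Delayed project releases
  - Securing automation engineer resources
  - Delayed introduction of automation
  - Risk that the test automation fails
  - Execution errors in different environments
  - Automated test errors when new features are released
  - Securing resources and schedule
  - Accounting for automated test execution time
  - Updating the automation to keep up with the system
  - Running tests and checking performance before updates
  - Testing both new and existing features with automation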

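- 12. Test Automation Maintenance: types of test automation maintenance
  - Improving test coverage (assuring various test types and versions)
  - Fixing defects in the test automation (caused by system changes, etc.)
  - Improving test automation performance (execution time, etc.)
  - Keeping up with new standards, requirements, laws, etc.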

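- 13. Selection of Test Automation System Metrics: metrics for monitoring the test automation strategy and its effectiveness and efficiency
  - External metrics: measure the impact of test automation on other activities
    - Automation ROI (effort saved by automation, effort to build and maintain it, etc.)
    - Automation processing time (performance)
    - Automation accuracy (failure rate, false negatives, false positives, etc.)
  - Internal metrics: measure the effectiveness and efficiency of the test automation itself
    - Automation scripting effort
    - Defect density of the automation scripts
    - Automation processing efficiency (performance)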
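
The ROI and accuracy metrics can be made concrete with simple formulas. This sketch makes these assumptions:

- ROI = (manual effort saved - automation cost) / automation cost, where cost = build effort + maintenance effort.
- False positive has the ISTQB meaning: a failure is reported but no defect exists.
- False negative has the ISTQB meaning: the test passed although a defect is present.
- The shares are computed over all runs, which is a simplification.

```python
from dataclasses import dataclass


@dataclass
class RunOutcome:
    reported_fail: bool   # what the automated test reported
    defect_present: bool  # ground truth established by analysis afterwards


def roi(manual_hours_per_run: float, runs: int, build_hours: float, maintenance_hours: float) -> float:
    # Assumed definition: (effort saved - automation cost) / automation cost
    cost = build_hours + maintenance_hours
    if cost <= 0:
        raise ValueError("automation cost must be positive")
    saved = manual_hours_per_run * runs
    return (saved - cost) / cost


def accuracy_metrics(outcomes: list[RunOutcome]) -> dict[str, float]:
    n = len(outcomes)
    if n == 0:
        raise ValueError("no outcomes")
    failures = sum(o.reported_fail for o in outcomes)
    # False positive: a failure was reported but no defect exists
    fp = sum(o.reported_fail and not o.defect_present for o in outcomes)
    # False negative: the test passed although a defect is present
    fn = sum(not o.reported_fail and o.defect_present for o in outcomes)
    # All three values are shares of all runs (a simplification)
    return {
        "failure_rate": failures / n,
        "false_positive_share": fp / n,
        "false_negative_share": fn / n,
    }


if __name__ == "__main__":
    # 2 h of manual work per run, 50 runs, 40 h to build, 20 h to maintain
    print(roi(2.0, 50, 40.0, 20.0))
```

With these numbers, 100 hours are saved against 60 hours of cost, so `roi` returns about 0.67. A negative value would mean the automation has not yet paid for itself.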

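- 14. Test Automation Logging and Reporting
  - Automation logs include the following and are used for various kinds of analysis:
    - Current status and results of the automation
    - Detailed logs of executed steps, screenshots, and test data (especially on errors)
    - System-side logs (crash dumps, stack traces, etc.)
  - Prepare report formats suited to each recipient:
    - Content of the reports: include the information needed to analyze test failures
    - Publishing the reports: include the information needed to know whether the test run succeeded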
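
Below is a minimal sketch of step-level logging with failure evidence, plus two report formats for different recipients. It uses Python's standard `logging` module. `take_screenshot` is a hypothetical stand-in that only builds a file name; a real GUI tool would capture an image.

```python
import logging
from dataclasses import dataclass, field
from typing import Callable, Optional

logger = logging.getLogger("test_automation")


@dataclass
class StepResult:
    test: str
    step: str
    passed: bool
    data: dict = field(default_factory=dict)
    screenshot: Optional[str] = None


def take_screenshot(test: str, step: str) -> str:
    # Hypothetical: a real GUI tool would save an image; here we only build a file name
    return f"{test}-{step}.png".replace(" ", "_")


def run_step(test: str, step: str, action: Callable[..., None], data: dict) -> StepResult:
    logger.debug("start %s / %s data=%s", test, step, data)
    try:
        action(**data)
    except Exception as exc:
        # On failure, keep the evidence needed for analysis: screenshot and test data
        shot = take_screenshot(test, step)
        logger.error("FAIL %s / %s: %s (screenshot=%s, data=%s)", test, step, exc, shot, data)
        return StepResult(test, step, False, data, shot)
    logger.info("PASS %s / %s", test, step)
    return StepResult(test, step, True, data)


def detailed_report(results: list[StepResult]) -> str:
    # For failure analysis: every failed step with its data and screenshot
    return "\n".join(
        f"{r.test} / {r.step}: FAIL data={r.data} screenshot={r.screenshot}"
        for r in results
        if not r.passed
    )


def summary_report(results: list[StepResult]) -> str:
    # For publishing: just enough to tell whether the run succeeded
    failed = sum(not r.passed for r in results)
    return f"{len(results) - failed} passed, {failed} failed"


def check_positive(value: int) -> None:
    # Hypothetical check used as a test step
    if value <= 0:
        raise ValueError(f"{value} is not positive")


if __name__ == "__main__":
    logging.basicConfig(level=logging.DEBUG)
    results = [
        run_step("login", "enter id", check_positive, {"value": 1}),
        run_step("login", "enter pw", check_positive, {"value": -1}),
    ]
    print(summary_report(results))
    print(detailed_report(results))
```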

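- 15. Criteria for Automation
  - It is advisable to set criteria for deciding which manual tests to automate:
    - ROI (frequency of use, automation effort)
    - Skill level (tool support, ability to follow the test process, maturity)
    - Readiness of the test automation environment (sustainability of the automation environment, controllability of the SUT)
  - Before measuring the benefit of introducing test automation, the following issues must be addressed:
    - Team structure (training, clearly defined roles)
    - Test environment (tool availability, data accuracy)
    - Testability (in cooperation with development)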

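- 16. Identify Steps Needed to Implement Automation within Regression Testing: points to consider when automating regression tests
  - Test execution frequency and duration (regular functional checks through repeated execution, fast feedback)
  - Duplicate test execution (review manual tests that are executed redundantly)
  - Test dependencies (pre-processing, post-processing, etc.)
  - Coverage definition (make clear what the automation covers)
  - Validity of the manual tests (do not automate an incorrect manual test flow)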
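
Test dependencies are usually handled by giving every test its own pre- and post-processing, so that tests can run in any order. Here is a sketch using the standard `unittest` `setUp`/`tearDown` hooks. `InMemoryUserStore` is a hypothetical system under test.

```python
import unittest


class InMemoryUserStore:
    """Hypothetical system under test."""

    def __init__(self) -> None:
        self.users: set[str] = set()

    def add(self, name: str) -> None:
        if name in self.users:
            raise ValueError("duplicate user")
        self.users.add(name)

    def remove(self, name: str) -> None:
        self.users.discard(name)


class UserStoreRegressionTests(unittest.TestCase):
    def setUp(self) -> None:
        # Pre-processing: each test gets a fresh fixture, so no test depends on another
        self.store = InMemoryUserStore()
        self.store.add("alice")

    def tearDown(self) -> None:
        # Post-processing: clean up whatever the test created
        self.store.users.clear()

    def test_add_new_user(self) -> None:
        self.store.add("bob")
        self.assertEqual(self.store.users, {"alice", "bob"})

    def test_duplicate_user_rejected(self) -> None:
        with self.assertRaises(ValueError):
            self.store.add("alice")

    def test_remove_user(self) -> None:
        self.store.remove("alice")
        self.assertEqual(self.store.users, set())


if __name__ == "__main__":
    unittest.main()
```

Because `setUp` rebuilds the state every time, `test_remove_user` running first cannot break `test_duplicate_user_rejected`.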

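- 17. Verifying Automated Test Environment Components, Test Suite: what to do to keep the automation environment and the executed test suite valid
  1. Set up the test environment, automate the setup itself, and make sure it works in different environments
  2. Know where the test scripts work correctly and where they fail
  3. Document how to configure the test automation, etc.
  4. Test the test automation itself
  5. Keep the test suite correct and at the latest version
  6. Repeated test runs should, as a rule, produce the same results
  7. Keep verification results and logs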
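
Item 6 can be checked mechanically by running the same test several times and comparing the outcomes. In this sketch, `check_repeatability` and the simulated flaky test are hypothetical. A real suite would also reset the environment between runs.

```python
import itertools
from collections import Counter
from typing import Callable


def check_repeatability(test: Callable[[], bool], runs: int = 5) -> tuple[bool, Counter]:
    """Run the same test several times; it is repeatable if every run gives the same outcome."""
    outcomes = Counter(test() for _ in range(runs))
    return len(outcomes) == 1, outcomes


def stable_test() -> bool:
    # Hypothetical deterministic test
    return sum([1, 2, 3]) == 6


# Hypothetical flaky test: alternates between pass and fail on each call
_flaky_outcomes = itertools.cycle([True, False])


def flaky_test() -> bool:
    return next(_flaky_outcomes)


if __name__ == "__main__":
    print(check_repeatability(stable_test))
    print(check_repeatability(flaky_test, runs=4))
```

The returned `Counter` is worth keeping with the logs (item 7), since it shows how often a flaky test failed.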

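- 18. Continuous Improvement: continuously improving the automation in the following ways benefits the project
  1. Script improvements (shared functions, no hard-coding, automatic error recovery)
  2. Execution time improvements (avoid duplicate processing, split processing)
  3. Implement pre- and post-processing (for highly common steps)
  4. Added value of automation (reporting, logs, integration with other systems)
  5. Check the impact of changes (on execution and performance)
  6. Keep up with system changes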
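
Item 1 (no hard-coding, automatic error recovery) might look like the sketch below. Settings come from a single config mapping, and a failing step is retried after a recovery action. All names and config keys are hypothetical, and `https://staging.example.test` is a placeholder URL.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# No hard-coding: environment-specific values live in one place (hypothetical keys)
CONFIG = {"base_url": "https://staging.example.test", "retries": 3, "retry_wait_s": 0.0}


def with_recovery(action: Callable[[], T], recover: Callable[[], None], retries: int, wait_s: float) -> T:
    """Run `action`; if it raises, run `recover` and try again, up to `retries` attempts in total."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for attempt in range(1, retries + 1):
        try:
            return action()
        except Exception:
            if attempt == retries:
                raise  # out of attempts: surface the original error
            recover()
            time.sleep(wait_s)
    raise RuntimeError("unreachable")


if __name__ == "__main__":
    attempts = {"n": 0}

    def open_login_page() -> str:
        # Hypothetical step that fails on its first attempt
        attempts["n"] += 1
        if attempts["n"] == 1:
            raise ConnectionError("page not ready")
        return f"{CONFIG['base_url']}/login opened"

    print(with_recovery(open_login_page, lambda: print("recovering: reload page"),
                        CONFIG["retries"], CONFIG["retry_wait_s"]))
```

Retries should be logged and kept few. Otherwise automatic recovery can hide real defects or flaky tests.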