Keras CNN Pre-trained Deep Learning models for Flower Recognition

Using a pre-trained Keras CNN model to recognize 17 different types of flowers. The reported accuracy is high: 98.53%.
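Below is a minimal sketch of this kind of transfer learning in Keras. The summary does not say which pre-trained network the slides use, so MobileNetV2 with ImageNet weights is an assumption. The input size, dropout rate and optimizer are assumptions as well. Only the 17 output classes come from the slides. The pre-trained convolutional base is frozen, and only a new softmax head is trained.

```python
import tensorflow as tf

NUM_CLASSES = 17  # the slides classify 17 flower types


def build_flower_classifier(input_shape=(224, 224, 3), weights="imagenet"):
    """Frozen pre-trained backbone plus a small trainable softmax head (a sketch)."""
    # MobileNetV2 is an assumption; other tf.keras.applications backbones work similarly.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # keep the pre-trained features fixed during training

    inputs = tf.keras.Input(shape=input_shape)
    # MobileNetV2 expects pixels in [-1, 1]; map them from [0, 255].
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)  # run BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # integer labels 0..16
        metrics=["accuracy"],
    )
    return model
```

To train the model, you could load a hypothetical `flowers/` directory that has one subfolder per class. Use `tf.keras.utils.image_dataset_from_directory("flowers/", image_size=(224, 224))`, which yields integer labels that match the sparse loss, and then call `model.fit`. Freezing the base is the usual first stage. A common follow-up is to unfreeze some top layers and train them with a low learning rate.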

Recommended

project ppt.pptx

By GYamini22. This document outlines a project to develop a system for detecting motorcyclists who are violating helmet laws using image processing and convolutional neural networks. The system is designed to detect motorbikes, determine whether the rider is wearing a helmet, and, if not, extract and recognize the license plate number. The document includes sections on the abstract, introduction, objectives, system analysis, specification, design (including UML diagrams), modules, inputs/outputs, and conclusion.

Data Augmentation

By Md Tajul Islam. The document discusses data augmentation techniques for improving machine learning models. It begins with definitions of data augmentation and reasons for using it, such as enlarging datasets and preventing overfitting. Examples of data augmentation for images, text, and audio are provided. The document then demonstrates how to perform data augmentation for natural language processing tasks like text classification. It shows an example of augmenting a movie review dataset and evaluating a text classifier. Pros and cons of data augmentation are discussed, along with key takeaways about using it to boost the performance of models trained on small datasets.
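The summary mentions augmenting a movie review dataset for text classification but does not list the exact operations. The following is a hypothetical sketch of two simple text augmentations in the spirit of "easy data augmentation" (EDA): random swap and random deletion. The function names, probabilities and sample sentence are illustrative only.

```python
import random


def random_swap(words, n_swaps, rng):
    """Swap two randomly chosen positions, n_swaps times."""
    words = list(words)  # copy so the caller's list is not mutated
    for _ in range(n_swaps):
        if len(words) < 2:
            break
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words


def random_deletion(words, p, rng):
    """Drop each word with probability p, but never return an empty sentence."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the augmented copies are reproducible
    review = "the movie was surprisingly good".split()
    print(" ".join(random_swap(review, 1, rng)))
    print(" ".join(random_deletion(review, 0.2, rng)))
```

Each augmented sentence keeps the label of the review it came from. That is how such operations enlarge a small labeled dataset.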

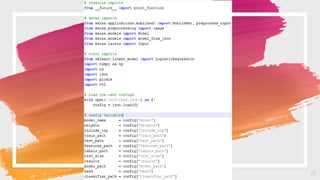

Vehicle Number Plate Recognition System

By prashantdahake. This document presents a seminar on a vehicle number plate recognition system by Prashant Dahake. The system uses image processing techniques to identify vehicles from their number plates in order to increase security and reduce crime. It works by capturing an image of a vehicle, extracting the license plate, recognizing the numbers on the plate, and identifying the vehicle from a database stored on a PC. The system utilizes a series of image processing technologies, including OCR, to recognize plates more accurately than previous neural network-based methods. It was implemented in Matlab and tested on real images.

Convolution Neural Network (CNN)

By Suraj Aavula. This presentation explains CNNs using the image classification problem and was prepared from the perspective of understanding computer vision and its applications. I have tried to explain CNNs as simply as possible, based on my own understanding. The presentation gives beginners a brief overview of the CNN architecture and its different layers, with an example. Please refer to the references on the last slide for a better understanding of how CNNs work. I have also discussed some (not all) types of CNN and the applications of computer vision.

Computer Vision - Real Time Face Recognition using Open CV and Python

By Akash Satamkar. This project creates face datasets, trains on them, and performs face detection and recognition with a machine learning algorithm, using a laptop's webcam.

Automatic Attendance system using Facial Recognition

By Nikyaa7. It is a biometric-based app that is gradually evolving into a universal biometric solution, requiring virtually zero effort from the user compared with other biometric options.

Lecture 8 (Stereo imaging) (Digital Image Processing)

By VARUN KUMAR. This document discusses stereo imaging and camera calibration. It explains the mathematical modeling of the stereo imaging process using transformation matrices to relate camera and world coordinates. Camera calibration is introduced as a way to estimate the parameters of the transformation matrix for a given camera setup. Stereo correspondence, meaning the matching of image points between the two images, is also covered, along with an example of how stereo imaging geometry can be used to solve the correspondence problem.
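Once a correspondence is found, stereo geometry turns it into depth. For a rectified pair (parallel cameras with the same focal length), the common relation is Z = f * B / (x_left - x_right), where f is the focal length in pixels and B is the baseline. This sketch assumes that convention. The lecture's own derivation via transformation matrices may measure Z from a different origin. The names and numbers are illustrative.

```python
def depth_from_disparity(focal_px, baseline_m, x_left_px, x_right_px):
    """Depth (metres) of a point seen at x_left_px / x_right_px in a rectified pair."""
    disparity = x_left_px - x_right_px  # horizontal shift between the two views
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity


if __name__ == "__main__":
    # Illustrative values: 700 px focal length, 10 cm baseline, 35 px disparity.
    print(depth_from_disparity(700.0, 0.10, 420.0, 385.0))
```

Depth is inversely proportional to disparity. Nearby points shift a lot between the two views, and distant points barely shift. This is why correspondence errors matter most for far-away points.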

Artificial Intelligence, Machine Learning and Deep Learning

By Sujit Pal. Slides for the talk Abhishek Sharma and I gave at the Gennovation tech talks (https://gennovationtalks.com/) at Genesis. The talk was part of outreach for the Deep Learning Enthusiasts meetup group in San Francisco. My part of the talk is covered in slides 19-34.

Semi-Supervised Learning

By Lukas Tencer. A review presentation about semi-supervised techniques in machine learning. The presentation was given as part of the Montreal Data series.

“An Introduction to Data Augmentation Techniques in ML Frameworks,” a Present...

By Edge AI and Vision Alliance. For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2021/09/an-introduction-to-data-augmentation-techniques-in-ml-frameworks-a-presentation-from-amd/

Rajy Rawther, PMTS Software Architect at AMD, presents the “Introduction to Data Augmentation Techniques in ML Frameworks” tutorial at the May 2021 Embedded Vision Summit.

Data augmentation is a set of techniques that expand the diversity of data available for training machine learning models by generating new data from existing data. This talk introduces different types of data augmentation techniques as well as their uses in various training scenarios.

Rawther explores some built-in augmentation methods in popular ML frameworks like PyTorch and TensorFlow. She also discusses some common tips and tricks for randomly selecting augmentation parameters so that the model does not overfit to a particular dataset.

automatic number plate recognition

By Sairam Taduvai. Automatic number plate recognition (ANPR) uses optical character recognition on images to read vehicle registration plates. It has seven elements: cameras, illumination, frame grabbers, computers, software, hardware, and databases. ANPR detects vehicles, captures plate images, and processes the images to recognize plates. It has advantages like improving safety and reducing crime. Applications include parking, access control, tolling, border control, and traffic monitoring.

Transfer Learning

By Hichem Felouat. The document discusses transfer learning and building complex models using Keras and TensorFlow. It provides examples of using the functional API to build models with multiple inputs and outputs. It also discusses reusing pretrained layers from models like ResNet, Xception, and VGG to perform transfer learning for new tasks with limited labeled data. The recommended approach is to freeze the pretrained layers at first and then train the entire model.

Hidden Markov Model & Its Application in Python

By Abhay Dodiya. This document provides an overview of Hidden Markov Models (HMMs), including:

- The three main elements of HMMs - forward-backward algorithm for evaluation, Baum-Welch algorithm for learning parameters, and Viterbi algorithm for decoding states.

- An example of using HMM for weather prediction with two states (sunny, rainy) and three observations (walk, shop, travel).

- How HMMs can be applied in Python to model stock market returns using a Gaussian model with daily NIFTY index data over 10 years.
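Here is a minimal Viterbi sketch for the two-state weather example, to make the decoding step concrete. The summary does not give the probabilities, so the numbers below are made up for illustration. The state and observation names follow the summary.

```python
STATES = ("Rainy", "Sunny")
START_P = {"Rainy": 0.6, "Sunny": 0.4}  # illustrative, not from the slides
TRANS_P = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
EMIT_P = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "travel": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "travel": 0.1},
}


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, most likely hidden-state sequence) for the observations."""
    # best[t][s] = (probability of the best path ending in state s at step t, predecessor)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        column = {}
        for s in states:
            # keep only the predecessor that maximizes the path probability into s
            prob, from_state = max((prev[p][0] * trans_p[p][s], p) for p in states)
            column[s] = (prob * emit_p[s][obs], from_state)
        best.append(column)
    # choose the most probable final state, then follow the back-pointers
    prob, last = max((best[-1][s][0], s) for s in states)
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return prob, path


if __name__ == "__main__":
    print(viterbi(["walk", "shop", "travel"], STATES, START_P, TRANS_P, EMIT_P))
```

With these numbers, the observations walk, shop, travel decode to Sunny, Rainy, Rainy with a path probability of about 0.01344. The dynamic program keeps one best path per state at each step, so it runs in time linear in the sequence length. Enumerating every possible state sequence would take exponential time.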

Facial emotion detection on babies' emotional face using Deep Learning.

By Takrim Ul Islam Laskar.

Phase 1:

- Face detection.

- Facial landmark detection.

Phase 2:

- Neural network training and testing.

- Validation and implementation.

Phase 1 has been completed successfully.

Automated attendance system based on facial recognition

By Dhanush Kasargod. A MATLAB-based system that automatically takes attendance in a classroom using a camera. This was our final-year project in the Electronics and Communications Engineering course. I have uploaded the entire MATLAB code to mathworks.com, and the full report will be available on my academia.edu page. I would be delighted to hear from you.

Facial Emotion Recognition: A Deep Learning approach

By AshwinRachha. Neural networks lie at the apogee of machine learning algorithms. Given a large dataset, and thanks to their automatic feature selection and extraction, convolutional neural networks are second to none, and neural networks can be very effective in classification problems.

Facial emotion recognition is a technology that helps companies and individuals evaluate customers and optimize their products and services through the most relevant and pertinent feedback.

Edge linking in image processing

By VARUN KUMAR. This document discusses image segmentation techniques, specifically linking edge points through local and global processing. Local processing links edge-detected pixels within a neighborhood that are similar in gradient strength and direction. Global processing uses the Hough transform to link edge points into lines. It maps points in the image space to a parameter space, either slope-intercept or polar coordinates. Thresholding in parameter space identifies coherent lines composed of edge points. The Hough transform allows finding lines even if there are gaps or other defects in the detected edge points.
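The global-processing step can be sketched as a simple Hough accumulator in polar form, rho = x*cos(theta) + y*sin(theta). Each edge point votes for every (theta, rho) line that passes through it. The bin with the most votes is taken as the dominant line. This is a minimal sketch, not the lecture's implementation. It assumes 1-degree theta steps and rho rounded to whole pixels, and the sample points are invented.

```python
import math
from collections import Counter


def hough_votes(points, theta_step_deg=1):
    """Accumulate votes in (theta in degrees, rho in pixels) parameter space."""
    votes = Counter()
    for x, y in points:
        for theta_deg in range(0, 180, theta_step_deg):
            theta = math.radians(theta_deg)
            # normal (polar) form of a line through (x, y)
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(theta_deg, rho)] += 1
    return votes


# Edge points on the line y = 5 with a gap at 40 <= x < 55, plus two stray points.
POINTS = [(x, 5) for x in range(100) if not 40 <= x < 55] + [(10, 40), (70, 3)]

if __name__ == "__main__":
    (theta, rho), count = hough_votes(POINTS).most_common(1)[0]
    print(f"strongest line: theta={theta} deg, rho={rho}, votes={count}")
```

The strongest bin is theta = 90 degrees, rho = 5, which is the line y = 5, with 85 votes. Votes are counted per parameter bin rather than along contiguous runs of pixels. As a result, the gap in the edge points does not prevent the line from being found.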

Object detection presentation

By AshwinBicholiya. The document describes a project that aims to develop a mobile application for real-time object and pose detection. The application will take a real-time image as input and output bounding boxes identifying the objects in the image, along with their classes. The methodology involves preprocessing the image and then using the YOLO framework for object classification and localization. The goal is high-accuracy detection that can be used for applications like vehicle counting and human activity recognition.

AUTOMATIC LICENSE PLATE RECOGNITION SYSTEM FOR INDIAN VEHICLE IDENTIFICATION ...

By Kuntal Bhowmick. Automatic license plate recognition (ANPR) is a practical application of image processing that identifies a vehicle by its number (license) plate. The aim is to design an efficient automatic vehicle identification system based on the vehicle license plate. The system is deployed at the entrance of a highly restricted area for security control. Examples include military zones and the areas around top government offices, such as Parliament or the Supreme Court.

It is worth mentioning that little research addresses automatic number plate recognition for Indian vehicles. In this paper, a new algorithm is presented for recognizing the number plates of Indian vehicles. The proposed algorithm consists of two major parts: plate region extraction and plate recognition. The number plate region is extracted from the vehicle image using image segmentation, and optical character recognition is used to recognize the characters. Finally, the resulting data is compared with records in a database to retrieve specific information such as the vehicle's owner, registration state, and address.

The performance of the proposed algorithm has been tested on real license plate images of Indian vehicles. Based on the experimental results, we noted that our algorithm shows superior performance, especially in the number plate recognition phase.

Presentation on FACE MASK DETECTION

By ShantaJha2. This document describes a minor project on developing a face mask detector using computer vision and deep learning techniques. The project aims to create a model, built with OpenCV and Keras/TensorFlow, that can detect faces with and without masks. A two-class classifier was trained on a mask/no-mask dataset and reached 99% accuracy. The fine-tuned MobileNetV2 model can accurately detect faces and identify whether masks are being worn, making it suitable for deployment on embedded systems.

Face detection

By AfsanaLaskar1. This document summarizes a student project on developing an image-based attendance system using face recognition. It was submitted by two students, Swarup Das and Somodeep Seal, to fulfill the requirements for a Bachelor of Technology degree. The project involved building a system that can automatically detect faces in images and identify students to mark attendance. It aimed to streamline the attendance process and reduce administrative work for faculty compared to traditional paper-based methods. The document includes sections on background, methodology, implementation, results and future work. It discusses using computer vision and machine learning algorithms like Haar cascade for face detection and recognition.

Object detection and Instance Segmentation

By Hichem Felouat. The document discusses object detection and instance segmentation models like YOLOv5, Faster R-CNN, EfficientDet, Mask R-CNN, and TensorFlow's object detection API. It provides information on labeling images with bounding boxes for training these models, including open-source and commercial annotation tools. The document also covers evaluating object detection models using metrics like mean average precision (mAP) and intersection over union (IoU). It includes an example of training YOLOv5 on a custom dataset.
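Intersection over union (IoU), one of the metrics mentioned, measures how well a predicted box overlaps a ground-truth box. A minimal sketch, assuming boxes are given as (x_min, y_min, x_max, y_max) corner coordinates:

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # width and height of the overlap rectangle (zero if the boxes do not overlap)
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap area 1, union area 7
```

A detection is typically counted as a true positive when its IoU with a ground-truth box exceeds a chosen threshold, such as 0.5. mAP is then computed from the resulting precision and recall values.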

Facial Expression Recognition System using Deep Convolutional Neural Networks.

By Sandeep Wakchaure. This presentation explains how deep convolutional neural networks are used in facial expression recognition.

Simple Introduction to AutoEncoder

By Jun Lang. The document introduces autoencoders, which are neural networks that compress an input into a lower-dimensional code and then reconstruct the output from that code. It discusses pre-training autoencoders layer by layer, without supervision, using restricted Boltzmann machines, before fine-tuning them to minimize the reconstruction error. Autoencoders can be used for several tasks:
- dimensionality reduction
- document retrieval, by compressing documents into codes
- data visualization, by compressing high-dimensional data points into 2D for plotting, with each category colored separately

Transfer Learning: An overview

By jins0618. Transfer learning aims to improve learning in a target domain by leveraging knowledge from a related source domain. It is useful when the target domain has limited labeled data. There are several approaches. Instance-based approaches reweight or resample source instances. Feature-based approaches learn a transformation that aligns features across domains. One feature-based technique is spectral feature alignment. It builds a graph of correlations between domain-specific features and the pivot features shared across domains, then applies spectral clustering to derive new shared features.

Face detection presentation slide

By Sanjoy Dutta. These slides were prepared to present our face detection project, highlighting the basic theory used and project details such as the goal and approach. Hope they are helpful.

Face Recognition Technology

By Shravan Halankar. Humans often use faces to recognize individuals, and advances in computing capability over the past few decades now enable similar recognition to be done automatically. Early facial recognition algorithms used simple geometric models. The recognition process has since matured into a science of sophisticated mathematical representations and matching processes. Major advancements and initiatives in the past 10 to 15 years have propelled facial recognition technology into the spotlight. Facial recognition can be used for both verification and identification.

Case study on deep learning

By HarshitBarde. This document provides an overview of deep learning, including:

- A brief history of deep learning from 1943 to the present day.

- An explanation of what deep learning is and how it works using neural networks similar to the human brain.

- Descriptions of common deep learning architectures like deep neural networks, deep belief networks, and recurrent neural networks.

- Examples of types of deep learning networks including feed forward neural networks and recurrent neural networks.

- Applications of deep learning in areas like computer vision, natural language processing, robotics, and more.

Hands On Intro to Node.js

By Chris Cowan. A hands-on journey to awesomeness. Learn the very basics of using Node.js with 5 basic exercises to get you started.

Deployment with ExpressionEngine

By Green Egg Media. An overview of deployment methods and procedures for ExpressionEngine 1 and 2.

Originally from the November 15th BostonEErs meeting.

Similar to Keras CNN Pre-trained Deep Learning models for Flower Recognition

Data_Processing_Program

By Neil Dahlqvist. The data processing program creates decision rules from a dataset using the ID3 algorithm. It cleanses data by removing inconsistencies and selects attributes using thresholds. The program builds an ID3 tree, which is visualized in a separate window. Users can prune the tree and generate rules, then test them with different testing tools. The program has menus and tools to process data, visualize results, and test rule accuracy.
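At each node, ID3 chooses the attribute with the highest information gain, which is the drop in label entropy after splitting on that attribute. Below is a minimal sketch of that criterion. The threshold-based attribute selection mentioned in the summary is not modeled, and the tiny dataset is invented.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(values, labels):
    """Entropy reduction from splitting `labels` by the attribute `values`."""
    total = len(labels)
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(value, []).append(label)
    # weighted average entropy of the subsets produced by the split
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


if __name__ == "__main__":
    play = ["yes", "yes", "no", "no"]
    print(information_gain(["sunny", "sunny", "rain", "rain"], play))  # splits perfectly
    print(information_gain(["hot", "mild", "hot", "mild"], play))      # carries no information
```

ID3 would split on the first attribute, with a gain of 1 bit, rather than the second, with a gain of 0 bits. It then recurses on each subset until the labels are pure or no attributes remain.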

Question IYou are going to use the semaphores for process sy.docx

By audeleypearl.

Question I

You are going to use semaphores for process synchronization. To do so, you are asked to develop a multithreaded producer-consumer program.

Assume that one thread (the producer, which we will call producer_thread) reads data (positive integers) entered by a user at the keyboard and stores it in an array (a dynamic array). (Assume that the array can hold all the numbers entered without overflow.)

Another thread (the consumer, which we will call consumer_thread) should read data from the array and write it to a file. This thread should run concurrently with the producer (producer_thread).

Your program should make sure that consumer_thread can read from the array only after producer_thread has stored new data. Both threads must stop, in a well-synchronized way, when the user enters a negative number.

A third thread (testing_thread) should read both the array data and the file data and display them on the screen, to verify that the consumer and producer have worked in a correctly synchronized fashion. This thread should not be synchronized with the other threads. Its only purpose is to test that consumer_thread is synchronized with producer_thread.

Provide your tutor with the source code as well as screen snapshots that show the work of the testing_thread.
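The semaphore handshake the assignment describes can be sketched in C++. This is one possible approach under stated assumptions, not a model answer: the assignment names no language, `Semaphore` is a hand-rolled counting semaphore built from a mutex and a condition variable (C++20 also offers `std::counting_semaphore`), and the hypothetical `runProducerConsumer` replaces the keyboard with an input vector and the output file with a returned vector. testing_thread is omitted.

```cpp
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Counting semaphore built from a mutex and a condition variable, so the sketch
// also compiles before C++20 (C++20 provides std::counting_semaphore directly).
class Semaphore {
public:
    explicit Semaphore(int initial = 0) : count_(initial) {}

    // V / signal: increment the count and wake one waiting thread.
    void release() {
        std::lock_guard<std::mutex> lock(mutex_);
        ++count_;
        cv_.notify_one();
    }

    // P / wait: block until the count is positive, then decrement it.
    void acquire() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return count_ > 0; });
        --count_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    int count_;
};

// Hypothetical driver: `input` stands in for the numbers typed at the keyboard
// and the returned vector stands in for the output file. A negative number
// stops both threads; if `input` has none, the producer appends -1 itself.
std::vector<int> runProducerConsumer(const std::vector<int>& input) {
    std::vector<int> shared;      // the shared dynamic array
    std::mutex arrayMutex;        // guards `shared` (push_back may reallocate)
    Semaphore itemsStored(0);     // items stored but not yet consumed
    std::vector<int> output;      // written only by the consumer

    auto store = [&](int value) {
        {
            std::lock_guard<std::mutex> lock(arrayMutex);
            shared.push_back(value);
        }
        itemsStored.release();    // tell the consumer new data is available
    };

    std::thread producer_thread([&] {
        for (int value : input) {
            store(value);
            if (value < 0) {
                return;           // negative number: stop producing
            }
        }
        store(-1);                // no negative in the input: send a stop marker
    });

    std::thread consumer_thread([&] {
        for (std::size_t i = 0;; ++i) {
            itemsStored.acquire();  // wait until item i has been stored
            int value;
            {
                std::lock_guard<std::mutex> lock(arrayMutex);
                value = shared[i];
            }
            if (value < 0) {
                return;             // stop marker reached
            }
            output.push_back(value);
        }
    });

    producer_thread.join();
    consumer_thread.join();
    return output;
}
```

The consumer can never get ahead of the producer: each `acquire()` consumes exactly one `release()`, so item `i` is read only after it has been stored. For example, `runProducerConsumer({3, 1, 4, -1, 5})` yields `{3, 1, 4}`.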


TM298_TMA_Q2/build.xml

Builds, tests, and runs the project TM298_TMA_Q2.

TM298_TMA_Q2/manifest.mf

Manifest-Version: 1.0

X-COMMENT: Main-Class will be added automatically by build

TM298_TMA_Q2/nbproject/build-impl.xml

...

Data Storage In Android

By Aakash Ugale. The document discusses various data storage options in Android, including shared preferences, internal storage, external storage, SQLite databases, and network/cloud storage. It explains how to use shared preferences to store private data in key-value pairs, how to read and write files in internal and external storage, and how to create and manage SQLite databases for structured data. It also lists some popular cloud storage providers.

Formats

By Lee Dohyup. The document discusses the internal structure of Python eggs. There are two main formats for Python eggs: .egg and .egg-info. The .egg format packages the code and resources into a single directory or zip file, while the .egg-info format places the metadata alongside the code and resources. Both formats can include Python code, resources, and metadata, but each is optimized for a different purpose, such as distribution, installation, or compatibility. Project metadata is stored in files like PKG-INFO, requires.txt, and entry_points.txt, which describe dependencies and plugins.

Data load utility

By Laxmi Kanth Kshatriya. This document provides tutorials for customizing the Data Load utility for various tasks related to loading data into WebSphere Commerce applications. The first tutorial demonstrates how to customize the utility to encrypt user password data when loading it from a CSV file into the database. It involves creating a CSV file with password data, configuring the utility, and extending the generic table loader to encrypt passwords. The second tutorial shows how to customize the utility to load data from an XML input file by creating an XML file and a custom data reader, and configuring the utility. The third tutorial demonstrates loading data into custom tables by configuring the utility, the custom tables, and the configuration files.

International Journal of Engineering Research and Development (IJERD)

By IJERD Editor. International Journal of Engineering Research and Development is an international, premier, peer-reviewed, open access engineering and technology journal promoting the discovery, innovation, advancement and dissemination of basic and transitional knowledge in engineering, technology and related disciplines.

Diploma ii cfpc- u-5.2 pointer, structure ,union and intro to file handling

By Rai University.

1) A file is a collection of stored information that can be accessed by a program. Files have names and extensions that identify the file type and contents.

2) To use a file, a program must open the file, read or write data, and then close the file. Files can be opened for input, output, or both.

3) The stream insertion and extraction operators << and >> are used to write data to and read data from files, just as they are used for screen input/output. A file must be opened successfully before its data can be accessed.

pointer, structure ,union and intro to file handling

By Rai University. This document discusses file handling in C++. It defines what a file is and how files are named. It explains how to open, read from, write to, and close files, covers file stream objects, and shows how to check for errors when opening, reading, or writing files. Functions and operators such as open(), close(), <<, >>, and eof() are explained with examples. Passing file streams to functions and more detailed error checking using stream state bits are also covered.

Oracle_Retail_Xstore_Suite_Install.pdf

By vamshikkrishna1. This document provides instructions for installing the Oracle Retail Xstore Software Suite, including:

1) Installing Oracle Database 12c PDB

2) Installing Java JRE and JDK

3) Creating certificates for Xcenter and Xstore mobile

4) Creating the Xcenter database by running SQL scripts

5) Installing the Xstore Office and POS software

Backup of Data Residing on DSS V6 with Backup Exec

By open-e. Backup of Data Residing on DSS V6 with Backup Exec. For more information go to: http://www.open-e.com

ExplanationThe files into which we are writing the date area called.pdf

By aquacare2008.

Explanation: The files into which we write data are called output files. If we want to write the results of a program in a user-required format, we can write them to these files.

2) When storing and retrieving data, a sequential access file is much like a VCR tape.

On floppy disks and hard disks, data can be stored and retrieved in random order, but this is not possible with VCR tapes, which must be read sequentially. This is the disadvantage of sequential access, and it is why hard disks are used to store data nowadays.

3) If you want to add data to a new file, what is the correct operation to perform?

Ans) Create a file.

4) To create an output file object, you would use what kind of type?

Ans) ofstream

Syntax: ofstream myfile;

myfile.open("D:\\bankbal.txt");

5) What do the following statements accomplish?

ofstream theFile;

theFile.open("myFile.txt", ios::app);

Ans) They open myFile.txt in append mode.

ios::app: when a file is opened in this mode, everything written to it is appended to the end of the file, i.e., the new content is added after the already existing content.

6) When a file is opened in output mode, the file pointer is positioned at the beginning of the file.

For both input files and output files, the pointer is positioned at the beginning of the file (unless a mode such as ios::app or ios::ate is used).

7) The is_open function returns what kind of data type?

Ans) bool

if (myfile.is_open()) is used to check whether the file was opened successfully.

8) In general, which of the following contains the least amount of data?

Ans) byte

A byte can store only a single character, a small number, or a single symbol, such as 'a', 7, or '#'.

9) To use an output file, the program must include fstream.

Explanation: to use either an input file or an output file, we have to include the fstream header, like #include <fstream> (older, pre-standard compilers used fstream.h).

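10) Which of the following is not true about files?

Ans) "istream, ostream, and iostream are derived from ifstream, ofstream, and fstream, respectively." This statement is false: the derivation runs the other way, since ifstream, ofstream, and fstream are derived from istream, ostream, and iostream.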

11) Compare and contrast the mode operators ios::in, ios::app, and ios::out. Provide a C++ code segment that illustrates the use of these mode operators.

Ans)

To open a file, we use the open() member function.

Syntax: open(filename, mode);

The first argument is the file name. The second argument, which is optional, is the mode in which the file is opened.

ios::in: use this mode to read data from a file. It is used for input operations.

Code:

ifstream file;

file.open("D:\\bank.txt"); or file.open("D:\\bank.txt", ios::in);

Here we read the data from the file named bank.txt on the D drive. The first form does not specify a mode; an ifstream opens the file in ios::in mode by default.

ios::out: use this mode to write data to a file. It is used for output operations.

Code:

ofstream myfile;

myfile.open("D:\\bankbal.txt"); or myfile.open("D:\\bankbal.txt", ios::out);

myfile <<”Hell.
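A minimal sketch of the three modes compared in question 11, assuming standard C++ `<fstream>` (the answers above use unqualified names such as `ofstream`, which need `using namespace std;`). The helper names `writeLines` and `readLines` are hypothetical. It shows that `ios::out` discards existing contents, `ios::app` writes at the end, and `ios::in` reads from the beginning.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Writes each string on its own line. With append == false the file is opened
// with std::ios::out (existing contents are discarded); with append == true it
// is opened with std::ios::app (every write goes to the end of the file).
// Returns false if the file could not be opened or a write failed.
bool writeLines(const std::string& path, const std::vector<std::string>& lines,
                bool append) {
    std::ofstream out(path, append ? std::ios::app : std::ios::out);
    if (!out.is_open()) {
        return false;
    }
    for (const std::string& line : lines) {
        out << line << '\n';
    }
    return static_cast<bool>(out);
}

// Reads the file line by line using std::ios::in (the default for ifstream).
// Returns an empty vector if the file cannot be opened.
std::vector<std::string> readLines(const std::string& path) {
    std::vector<std::string> lines;
    std::ifstream in(path, std::ios::in);
    if (!in.is_open()) {
        return lines;
    }
    std::string line;
    while (std::getline(in, line)) {
        lines.push_back(line);
    }
    return lines;
}
```

For example, writing `{"balance: 100"}` with `append == false` and then `{"balance: 250"}` with `append == true` leaves both lines in the file, while a later `ios::out` write replaces them.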

How do I get Main-java to compile- the program is incomplete - Need.docx

By Juliang56Parsonso.

How do I get Main.java to compile? The program is incomplete, and I need a complete program in Java. Thanks!

Class 3 Chapter 17 (Store data in a file)

Due: Wednesday by 8pm. Points: 10. Submission: a file upload. Available: Feb 8 at 7pm to Feb 15 at 8pm.

Program 3.1 Requirements

Write/Read Test Program using RandomAccessFile and Objects

*Note: you should use methods for the code; everything should not just be dumped into the main method.

Create a new binary data file using RandomAccessFile and populate it with integers from 0 to 100

Read the integer binary file and print it to the screen with 10 numbers on each line

Update the integer binary file so that all of the even numbers are 2

Read the integer binary file and print it to the screen with 10 numbers on each line

Create a new binary data file using RandomAccessFile and populate it with doubles from 0 to 100

Read the double binary file and print it to the screen with 10 numbers on each line

Update the double binary file so that all of the odd numbers are 1

Read the double binary file and print it to the screen with 10 numbers on each line

Create a new binary data file using RandomAccessFile and populate it with the Strings "Hello", "Good Morning", "Good Afternoon", "Good Evening"

Note: make all of the Strings the same size.

Read the String binary file and print each String on its own line

Update the String binary file so that the last String says "Good Bye"

Read the String binary file and print each String on its own line

Create a new binary data file using ObjectOutputStream

Use the Employee object from EmployeeManager Interface Part 1 to populate the file with the data below:

1, 100, "John", "Wayne", Employee.EmployeeType.CONTRACTOR

2, 1000.0, "Selina", "Kyle", Employee.EmployeeType.CONTRACTOR

Create a new binary data file using RandomAccessFile

Use the Employee object from EmployeeManager Interface Part 1 to populate the file with the data below:

1, 100, "John", "Wayne", Employee.EmployeeType.CONTRACTOR

2, 1000.0, "Selina", "Kyle", Employee.EmployeeType.CONTRACTOR

Example output:

0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10,

11, 12, 13, 14, 15, 16, 17, 18, 19, 20,

21, 22, 23, 24, 25, 26, 27, 28, 29, 30,

31, 32, 33, 34, 35, 36, 37, 38, 39, 40,

41, 42, 43, 44, 45, 46, 47, 48, 49, 50,

51, 52, 53, 54, 55, 56, 57, 58, 59, 60,

61, 62, 63, 64, 65, 66, 67, 68, 69, 70,

71, 72, 73, 74, 75, 76, 77, 78, 79, 80,

81, 82, 83, 84, 85, 86, 87, 88, 89, 90,

91, 92, 93, 94, 95, 96, 97, 98, 99, 100,

2, 1, 2, 3, 2, 5, 2, 7, 2, 9, 2,

11, 2, 13, 2, 15, 2, 17, 2, 19, 2,

21, 2, 23, 2, 25, 2, 27, 2, 29, 2,

31, 2, 33, 2, 35, 2, 37, 2, 39, 2,

41, 2, 43, 2, 45, 2, 47, 2, 49, 2,

51, 2, 53, 2, 55, 2, 57, 2, 59, 2,

61, 2, 63, 2, 65, 2, 67, 2, 69, 2,

71, 2, 73, 2, 75, 2, 77, 2, 79, 2,

81, 2, 83, 2, 85, 2, 87, 2, 89, 2,

91, 2, 93, 2, 95, 2, 97, 2, 99, 2,

0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0,

11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0, 18.0, 19.0, 20.0,

21.0, 22.0, 23.0, 24.0, 25.0, 26.0, 27.0, 28.0, 29.0.
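The key idea behind the integer steps is fixed-size records: when every record occupies the same number of bytes, record i starts at byte i × (record size), so the program can seek straight to it and overwrite it in place. The assignment requires Java's RandomAccessFile. To keep one language for the examples here, the sketch below is a C++ analogue (a swapped-in technique, not the required Java solution) using `std::fstream` with `seekg`/`seekp`. The function names are hypothetical, and values are written in native byte order, so the file is not byte-compatible with one produced by Java's big-endian `writeInt`.

```cpp
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Each record is one 4-byte integer, so record i starts at i * kRecordSize.
const std::streamoff kRecordSize = sizeof(std::int32_t);

// Creates (or truncates) the file and writes the integers first..last.
void createIntFile(const std::string& path, std::int32_t first, std::int32_t last) {
    std::ofstream out(path, std::ios::binary | std::ios::trunc);
    for (std::int32_t v = first; v <= last; ++v) {
        out.write(reinterpret_cast<const char*>(&v), sizeof v);
    }
}

// Reads every record back into a vector, in file order.
std::vector<std::int32_t> readIntFile(const std::string& path) {
    std::vector<std::int32_t> values;
    std::ifstream in(path, std::ios::binary);
    std::int32_t v;
    while (in.read(reinterpret_cast<char*>(&v), sizeof v)) {
        values.push_back(v);
    }
    return values;
}

// Updates records in place: every even value is overwritten with 2.
// The stream is opened for both reading and writing; seekg/seekp jump
// directly to record i (the role RandomAccessFile.seek plays in Java).
void setEvensToTwo(const std::string& path) {
    std::fstream file(path, std::ios::in | std::ios::out | std::ios::binary);
    std::int32_t value;
    for (std::streamoff i = 0;; ++i) {
        file.seekg(i * kRecordSize);                        // jump to record i
        if (!file.read(reinterpret_cast<char*>(&value), sizeof value)) {
            break;                                          // past the last record
        }
        if (value % 2 == 0) {
            const std::int32_t two = 2;
            file.seekp(i * kRecordSize);                    // back to record i
            file.write(reinterpret_cast<const char*>(&two), sizeof two);
        }
    }
}
```

After `createIntFile(path, 0, 100)` and `setEvensToTwo(path)`, `readIntFile(path)` returns 101 values in which every even number (including 0) has become 2, matching the second block of the example output. Printing ten values per line is left to the caller.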

Princeton's Maximum Entropy Tutorial

By Dashiell Cruz. This document provides a tutorial for using Princeton's Maximum Entropy (MaxEnt) software to create an environmental suitability map. The 11-step process includes: 1) converting species occurrence data to a CSV file, 2) downloading and installing MaxEnt, 3) importing environmental layers into GIS software, 4) standardizing the layers, 5) running MaxEnt to model species distributions based on the environmental variables, and 6) interpreting the results, which predict habitat suitability as a map in which red areas are the most suitable. The example application uses MaxEnt to predict where ancient Chacoan heritage ruins are likely to exist in the San Juan Basin.

Murach: How to use Entity Framework EF Core

By MahmoudOHassouna. How to use Entity Framework EF Core

Mary Delamater, Joel Murach - Murach's ASP.NET Core MVC-Mike Murach & Associates, Inc. (2020) (1)

Apache Kite

By Alwin James. The document discusses Apache Kite, which aims to make it easier to build data-oriented systems and applications on Hadoop. Kite provides APIs and utilities for defining and interacting with datasets in Hadoop through the Kite Data Module. These include capabilities for defining entities and schemas, creating and partitioning datasets, loading and viewing data, and managing the full lifecycle of a dataset from generation to deletion.

.NET Core, ASP.NET Core Course, Session 8

By Amin Mesbahi. This document provides an overview of configuration in ASP.NET Core, including:

- Configuration supports JSON, XML, INI, environment variables, command line arguments, and custom providers.

- The configuration system provides access to key-value settings from multiple sources in a hierarchical structure.

- Options patterns use classes to represent related settings that can be injected via IOptions and configured from appsettings.

- The Secret Manager tool can store secrets during development without checking them into source control.

Requiring your own files.pptx

By Lovely Professional University. Node.js uses asynchronous programming: all APIs of the Node.js library are asynchronous (i.e., non-blocking), so a Node.js-based server does not wait for an API to return data. After calling an API, the server moves on to the next one, and the notification mechanism of the Node.js Events module lets it receive the response from the earlier call.

Node.js is an open-source and cross-platform JavaScript runtime environment. It is a popular tool for almost any kind of project!

Node.js runs the V8 JavaScript engine, the core of Google Chrome, outside of the browser. This allows Node.js to be very performant.

A Node.js app runs in a single process, without creating a new thread for every request. Node.js provides a set of asynchronous I/O primitives in its standard library that prevent JavaScript code from blocking and generally, libraries in Node.js are written using non-blocking paradigms, making blocking behavior the exception rather than the norm.

When Node.js performs an I/O operation, like reading from the network, accessing a database or the filesystem, instead of blocking the thread and wasting CPU cycles waiting, Node.js will resume the operations when the response comes back.

This allows Node.js to handle thousands of concurrent connections with a single server without introducing the burden of managing thread concurrency, which could be a significant source of bugs.

Node.js has a unique advantage because millions of frontend developers that write JavaScript for the browser are now able to write the server-side code in addition to the client-side code without the need to learn a completely different language.

In Node.js the new ECMAScript standards can be used without problems, as you don't have to wait for all your users to update their browsers - you are in charge of deciding which ECMAScript version to use by changing the Node.js version, and you can also enable specific experimental features by running Node.js with flags.


More from Fatima Qayyum (18)

GPU Architecture NVIDIA (GTX GeForce 480)

By Fatima Qayyum. The GPU architecture of NVIDIA's GeForce 400 and GeForce 500 series, both of which use the same Fermi architecture.

DNS spoofing/poisoning Attack Report (Word Document)

By Fatima Qayyum. This document discusses DNS spoofing/poisoning attacks. It begins by explaining how DNS works, translating domain names to IP addresses. It then discusses different types of DNS attacks, focusing on DNS spoofing/poisoning. The document outlines how DNS spoofing occurs by tampering with DNS resolvers or using malicious DNS servers. It explains the goals of attackers, such as launching denial of service attacks or redirecting users to fake websites. The document also describes ways to exploit DNS spoofing through amplification attacks and gives recommendations for preventing DNS spoofing, such as checking and flushing DNS settings on Windows systems.

A Low-Cost IoT Application for the Urban Traffic of Vehicles, Based on Wirele...

By Fatima Qayyum. This document describes a low-cost IoT application that monitors urban traffic using wireless sensors and GSM technology. The system aims to address issues like traffic congestion, accidents, pollution and fuel consumption. It implements a distributed multilayer model using Arduino boards, laser sensors and a GSM module. Real-time traffic data is collected and stored in a database. Data mining techniques are then applied using the Pentaho platform to analyze traffic trends and patterns under different conditions. Functional and unit testing was performed to validate the system. The results from the deployment provide valuable traffic insights to help address congestion problems.

DNS spoofing/poisoning Attack

By Fatima Qayyum. This document discusses DNS spoofing attacks. It defines DNS as the internet's equivalent of a phone book that translates domain names to IP addresses. It describes several types of DNS attacks, including denial of service attacks and DNS amplification attacks. It explains how DNS spoofing works by introducing corrupt DNS data that causes the name server to return an incorrect IP address, diverting traffic to the attacker. The document also discusses ways to prevent DNS spoofing, such as using DNSSEC to add cryptographic signatures to DNS records and verifying responses.

Gamification of Internet Security by Next Generation CAPTCHAs

By Fatima Qayyum. The document discusses next-generation CAPTCHAs that use simple mini-games instead of text-based challenges to differentiate humans from bots. It describes four types of game CAPTCHAs that were tested: Bird Shooting, Connecting Dots, Duck Hunt, and Drag-and-Drop. A usability study found that the game CAPTCHAs took half the time to complete compared to other types and were easier for users to understand. The game CAPTCHAs also have security advantages over traditional text-based CAPTCHAs and don't require plugins to function across different browsers and devices.

Srs (Software Requirement Specification Document)

By Fatima Qayyum. This document provides an overview of an online food delivery system project. It describes using the Rational Unified Process (RUP) model to implement the system in an iterative and incremental way. Key elements include functional requirements like online ordering and payment, non-functional requirements like security, and UML diagrams to model the system. Testing strategies include unit, integration, system, and acceptance testing.

Stress management

By Fatima Qayyum. Description, types of stressors, types of stress, cost of stress, main causes of stress, stress control strategies, stress management approaches, and individual and organizational approaches.

Waterfall model

By Fatima Qayyum. The waterfall model is a classical life-cycle model that is widely known, understood, and used. It is a sequential model for developing software and is the easiest model to implement and use.

Artificial Intelligence presentation

By Fatima Qayyum. An Artificial Intelligence presentation about the latest technologies (robots: Stevie and Kuri)

Stevie: Elderly Care Robot

Kuri: Home helper friendly Robot

Subnetting

By Fatima Qayyum. Subnetting divides a single network into multiple subnets. Each subnet is treated as a separate network and can be a LAN or WAN. Subnetting converts host bits in the IP address into network bits, creating additional network identifiers from a single address block. Classes A, B, and C have default subnet masks of 255.0.0.0 (/8), 255.255.0.0 (/16), and 255.255.255.0 (/24). An example subnets the Class C network 192.168.1.0/24 into two /25 networks with 126 hosts each by using 1 host bit as a network bit; converting 2 host bits creates four /26 networks, each with 62 hosts.
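The subnetting arithmetic in this example (2^n subnets from n borrowed bits, 2^h − 2 usable hosts from h host bits) can be checked with a few lines of C++. This is a sketch with hypothetical function names; it assumes the usual convention of subtracting the network and broadcast addresses, which does not apply to /31 and /32.

```cpp
#include <cstdint>

// Packs a dotted-quad IPv4 address a.b.c.d into a 32-bit integer.
std::uint32_t ipv4(std::uint32_t a, std::uint32_t b, std::uint32_t c, std::uint32_t d) {
    return (a << 24) | (b << 16) | (c << 8) | d;
}

// Borrowing n host bits as network bits yields 2^n subnets.
std::uint64_t subnetCount(int borrowedBits) {
    return std::uint64_t{1} << borrowedBits;
}

// Usable hosts: 2^(host bits) addresses minus the network and broadcast
// addresses. Intended for prefixes /1 to /30.
std::uint64_t usableHosts(int prefixLength) {
    const int hostBits = 32 - prefixLength;
    return (std::uint64_t{1} << hostBits) - 2;
}

// Network address of subnet `index` when a block starting at `base` is cut
// into subnets of length `newPrefix`; each spans 2^(32 - newPrefix) addresses.
std::uint32_t subnetAddress(std::uint32_t base, int newPrefix, std::uint32_t index) {
    return base + (index << (32 - newPrefix));
}
```

For 192.168.1.0/24, borrowing 1 bit gives `subnetCount(1) == 2` and `usableHosts(25) == 126`, with the second /25 starting at 192.168.1.128; borrowing 2 bits gives four /26 subnets of 62 hosts, the last starting at 192.168.1.192.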

UNIX Operating System

By Fatima Qayyum. The Unix operating system is briefly discussed, including process management, memory management, and file management in UNIX.

Define & Undefine in SQL

By Fatima Qayyum. The document discusses the DEFINE and UNDEFINE commands in Oracle. The DEFINE command is used to define user variables and assign values to them, display the value of a specific variable, or display the values of all variables. The UNDEFINE command deletes variable definitions and can delete multiple variables in one command by listing them. A variable remains defined until it is undefined or until you exit SQL*Plus.

Security System using XOR & NOR

By Fatima Qayyum. This document describes a 4-bit password security system using XOR and NOR gates. The system uses 4 key code switches to hold the static password and 4 data entry switches to input a code. If the input code matches the static code, a green LED lights up and the lock opens. If the codes do not match, a red LED lights up as an alarm. The system is implemented on a breadboard using XOR and NOR gates, switches, LEDs, resistors and batteries according to the provided circuit diagram and truth tables. Photos show the system lighting the green LED when the codes match and the red LED when they do not.
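The comparator logic behind this security system can be modeled in software: an XOR gate outputs 1 when its two bits differ, so a NOR over the four XOR outputs is 1 only when no bit differs. The C++ below is a behavioral sketch under that assumption, with hypothetical function names, not a description of the actual breadboard wiring.

```cpp
#include <cstdint>

// XOR gate: 1 when the stored bit and the entered bit differ.
bool xorGate(bool a, bool b) { return a != b; }

// 4-input NOR gate: 1 only when every input is 0.
bool nor4(bool a, bool b, bool c, bool d) { return !(a || b || c || d); }

// Returns true (green LED, lock opens) when the entered code equals the stored
// code, false (red LED alarm) otherwise. Only the low 4 bits of each value are
// used, mirroring the four key code switches and four data entry switches.
bool codesMatch(std::uint8_t stored, std::uint8_t entered) {
    bool differs[4];
    for (int bit = 0; bit < 4; ++bit) {
        differs[bit] = xorGate((stored >> bit) & 1u, (entered >> bit) & 1u);
    }
    return nor4(differs[0], differs[1], differs[2], differs[3]);
}
```

For example, `codesMatch(0xA, 0xA)` is true, while `codesMatch(0xA, 0xB)` is false because bit 0 differs.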

Communication skills (English) 3

By Fatima Qayyum. This document provides guidance for teaching communication skills to middle school students through various listening, speaking, reading, and writing activities. It outlines methods and materials for activities that target listening skills through a passage and questions about hamburgers. Speaking activities include a word game and an activity in which students share two truths and a lie. Reading and writing activities provide passages to read and questions to answer, with the writing activities focusing on the structure and composition of effective writing. The document aims to enhance students' communication abilities in multiple areas through engaging exercises.

Creativity and arts presentation (1)

By Fatima Qayyum.

Creativity

Creative process

Attributes & features

Relevance to the arts

Difference between the arts and creativity

BCD Adder

By Fatima Qayyum. The document discusses a 4-bit binary-coded decimal (BCD) adder, which adds two 4-bit BCD digits and produces a 4-bit BCD sum. If the sum exceeds 9 in decimal, a carry is generated. The document also covers full adders, 7-segment displays, and the 74LS47 BCD-to-7-segment decoder/driver IC, and explains why 6 is added to BCD sums that exceed 9.
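The +6 correction works because 4 bits have 16 codes but BCD uses only 10 of them, so adding 6 (0110) skips the six unused codes 1010 to 1111 and moves the result into the next decade. A behavioral C++ sketch of one BCD adder stage follows. It is a software model with hypothetical names, not the gate-level circuit from the slides.

```cpp
#include <cstdint>

// Result of adding two BCD digits: one BCD output digit plus a decimal carry.
struct BcdSum {
    std::uint8_t digit;  // 0..9
    bool carry;          // true when the decimal sum is 10 or more
};

// One BCD adder stage: a plain binary add (what the 4-bit binary adder does),
// then the +6 correction whenever the binary result exceeds 9. Inputs are
// assumed to be valid BCD digits (0..9).
BcdSum bcdAddDigit(std::uint8_t a, std::uint8_t b, bool carryIn = false) {
    unsigned binary = a + b + (carryIn ? 1u : 0u);  // 0..19 for valid inputs
    const bool carry = binary > 9;
    if (carry) {
        binary += 6;  // skip the six unused codes 1010..1111
    }
    return BcdSum{static_cast<std::uint8_t>(binary & 0x0F), carry};
}
```

For 5 + 8 the binary sum is 13 (1101), which exceeds 9; adding 6 gives 19 (1 0011), so the output digit is 3 with a carry, i.e. decimal 13.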

World religion (Islam & Judaism)

By Fatima Qayyum. The document provides information about key similarities and differences between Islam and Judaism. It discusses their concepts of prayer, charity, marriage rituals, funerary practices, and holy days. Both religions believe in one God and in prophets, and both require prayer, charity, and burial of the deceased. However, they differ in the number of daily prayers, the direction of prayer, and the languages used. Charity is obligatory in Islam but optional in Judaism. Marriage and funeral rituals share some elements but also have differences. Their holy days, Jumu'ah and the Sabbath, involve rest and prayer but have distinctive rules and observances.

Communication Skills

By Fatima Qayyum. The document discusses various communication skills. It states that listening makes up 45% of communication, speaking 30%, reading 16%, and writing 9%. It provides tips for improving listening skills, such as listening actively and without distraction. It also discusses the importance of speaking skills and offers tips like speaking clearly. Additionally, it covers reading skills like skimming and scanning. Finally, it discusses writing skills and emphasizes choosing the appropriate format for the audience as well as avoiding grammatical errors. It includes some related classroom activities to practice these skills.


Recently uploaded (20)

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

By Alan Dix. Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However, more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and education, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

Heap, Types of Heap, Insertion and Deletion

By Jaydeep Kale. This PDF explains what a heap is, its types, insertion into and deletion from a heap, and heap sort.

Mobile App Development Company in Saudi Arabia

By Steve Jonas. EmizenTech is a globally recognized software development company, proudly serving businesses since 2013. With over 11 years of industry experience and a team of 200+ skilled professionals, we have successfully delivered 1200+ projects across various sectors. As a leading mobile app development company in Saudi Arabia, we offer end-to-end solutions for iOS, Android, and cross-platform applications. Our apps are known for their user-friendly interfaces, scalability, high performance, and strong security features. We tailor each mobile application to meet the unique needs of different industries, ensuring a seamless user experience. EmizenTech is committed to turning your vision into a powerful digital product that drives growth, innovation, and long-term success in the competitive mobile landscape of Saudi Arabia.

Rusty Waters: Elevating Lakehouses Beyond Spark

By carlyakerly1. Spark is a powerhouse for large datasets, but when it comes to smaller data workloads, its overhead can sometimes slow things down. What if you could achieve high performance and efficiency without the need for Spark?

At S&P Global Commodity Insights, having a complete view of global energy and commodities markets enables customers to make data-driven decisions with confidence and create long-term, sustainable value. 🌍

Explore delta-rs + CDC and how these open-source innovations power lightweight, high-performance data applications beyond Spark! 🚀

Cyber Awareness overview for 2025 month of security

By riccardosl1. Cyber awareness training educates employees on the risks associated with the internet and malicious emails.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

By TrustArc. Most consumers believe they’re making informed decisions about their personal data: adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent, yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

AI and Data Privacy in 2025: Global Trends

By InData Labs. In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Cybersecurity Identity and Access Solutions using Azure AD

By VICTOR MAESTRE RAMIREZ. Cybersecurity Identity and Access Solutions using Azure AD

Into The Box Conference Keynote Day 1 (ITB2025)

By Ortus Solutions, Corp. This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

tecnologias de las primeras civilizaciones.pdf (Technologies of the First Civilizations)

By fjgm517. A detailed description of the advancement of technology in Mesopotamia, Egypt, Rome, and Greece.

Technology Trends in 2025: AI and Big Data Analytics

Technology Trends in 2025: AI and Big Data AnalyticsInData Labs At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...SOFTTECHHUB I started my online journey with several hosting services before stumbling upon Ai EngineHost. At first, the idea of paying one fee and getting lifetime access seemed too good to pass up. The platform is built on reliable US-based servers, ensuring your projects run at high speeds and remain safe. Let me take you step by step through its benefits and features as I explain why this hosting solution is a perfect fit for digital entrepreneurs.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

2025-05-Q4-2024-Investor-Presentation.pptx

2025-05-Q4-2024-Investor-Presentation.pptxSamuele Fogagnolo Cloudflare Q4 Financial Results Presentation

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...Noah Loul Artificial intelligence is changing how businesses operate. Companies are using AI agents to automate tasks, reduce time spent on repetitive work, and focus more on high-value activities. Noah Loul, an AI strategist and entrepreneur, has helped dozens of companies streamline their operations using smart automation. He believes AI agents aren't just tools—they're workers that take on repeatable tasks so your human team can focus on what matters. If you want to reduce time waste and increase output, AI agents are the next move.

How analogue intelligence complements AI

How analogue intelligence complements AIPaul Rowe

Artificial Intelligence is providing benefits in many areas of work within the heritage sector, from image analysis, to ideas generation, and new research tools. However, it is more critical than ever for people, with analogue intelligence, to ensure the integrity and ethical use of AI. Including real people can improve the use of AI by identifying potential biases, cross-checking results, refining workflows, and providing contextual relevance to AI-driven results.

News about the impact of AI often paints a rosy picture. In practice, there are many potential pitfalls. This presentation discusses these issues and looks at the role of analogue intelligence and analogue interfaces in providing the best results to our audiences. How do we deal with factually incorrect results? How do we get content generated that better reflects the diversity of our communities? What roles are there for physical, in-person experiences in the digital world?

Big Data Analytics Quick Research Guide by Arthur Morgan

Big Data Analytics Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Linux Support for SMARC: How Toradex Empowers Embedded Developers

Linux Support for SMARC: How Toradex Empowers Embedded DevelopersToradex Toradex brings robust Linux support to SMARC (Smart Mobility Architecture), ensuring high performance and long-term reliability for embedded applications. Here’s how:

• Optimized Torizon OS & Yocto Support – Toradex provides Torizon OS, a Debian-based easy-to-use platform, and Yocto BSPs for customized Linux images on SMARC modules.

• Seamless Integration with i.MX 8M Plus and i.MX 95 – Toradex SMARC solutions leverage NXP’s i.MX 8 M Plus and i.MX 95 SoCs, delivering power efficiency and AI-ready performance.

• Secure and Reliable – With Secure Boot, over-the-air (OTA) updates, and LTS kernel support, Toradex ensures industrial-grade security and longevity.

• Containerized Workflows for AI & IoT – Support for Docker, ROS, and real-time Linux enables scalable AI, ML, and IoT applications.

• Strong Ecosystem & Developer Support – Toradex offers comprehensive documentation, developer tools, and dedicated support, accelerating time-to-market.

With Toradex’s Linux support for SMARC, developers get a scalable, secure, and high-performance solution for industrial, medical, and AI-driven applications.

Do you have a specific project or application in mind where you're considering SMARC? We can help with Free Compatibility Check and help you with quick time-to-market

For more information: https://www.toradex.com/computer-on-modules/smarc-arm-family

Keras CNN Pre-trained Deep Learning models for Flower Recognition

- 3. Steps
  - Step 1: Dataset Acquisition
  - Step 2: Training (Deep Learning)
  - Step 3: Testing

- 6. Data Acquisition
  - Download the FLOWERS17 dataset from http://www.robots.ox.ac.uk/~vgg/data/flowers/17/.
  - Unzip the file. All 1360 images are stored as *.jpg files in one single folder.
  - The FLOWERS17 dataset has 1360 images of 17 flower species, with 80 images per class.
  - To build our training dataset, create a master folder named dataset, with a train folder and a test folder inside it.
  - Inside the train folder, create 17 folders, one for each flower species label.
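The folder layout described above can be scripted. This is a minimal sketch, assuming the unzipped images are named image_0001.jpg to image_1360.jpg in a single folder, so that sorting the file names gives the dataset order, and that each consecutive block of 80 images belongs to one class. The class names and their order are passed in by the caller: the sketch does not know which block is which species, so check that against the dataset's own documentation. The function name organize_flowers17 is hypothetical.

```python
import shutil
from pathlib import Path

# FLOWERS17: 1360 images / 17 classes = 80 images per class.
IMAGES_PER_CLASS = 80


def organize_flowers17(jpg_dir, dataset_dir, class_names,
                       images_per_class=IMAGES_PER_CLASS):
    """Copy the flat image folder into dataset_dir/train/<class_name>/.

    Assumption (hypothetical helper): sorted file names follow dataset order,
    and every consecutive block of `images_per_class` images is one class.
    """
    jpg_dir = Path(jpg_dir)
    dataset_dir = Path(dataset_dir)

    # Zero-padded names (image_0001.jpg ...) sort lexicographically in numeric order.
    images = sorted(jpg_dir.glob("*.jpg"))
    expected = len(class_names) * images_per_class
    if len(images) != expected:
        raise ValueError(f"expected {expected} images, found {len(images)}")

    # The test folder is only created here; the slides do not say how it is filled.
    (dataset_dir / "test").mkdir(parents=True, exist_ok=True)

    for i, name in enumerate(class_names):
        class_dir = dataset_dir / "train" / name
        class_dir.mkdir(parents=True, exist_ok=True)
        block = images[i * images_per_class:(i + 1) * images_per_class]
        for img in block:
            shutil.copy2(img, class_dir / img.name)  # copy, keep the originals
    return dataset_dir
```

Copying rather than moving keeps the original jpg folder intact, so the script can be rerun with a corrected list of class names.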

- 10. 2. Data Training

- 11. Configuration File
  - This is the configuration (settings) file we use to provide inputs to our system.
  - It is a .json file, a simple key-value format for storing settings.
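Reading such a settings file takes only Python's standard json module. This is a minimal sketch, assuming the file is called conf.json (a hypothetical name) and that it holds the keys described on the following slide; the example values are illustrative, not taken from the original project. Note that JSON writes booleans as true/false and "no value" as null, which json.load turns into Python's True/False and None.

```python
import json

# Keys described on the configuration slides.
REQUIRED_KEYS = ("model", "weights", "include_top", "test_size", "seed", "num_classes")

# Illustrative values only (assumption), matching the slide's descriptions.
EXAMPLE_CONFIG = {
    "model": "mobilenet",
    "weights": "imagenet",
    "include_top": False,
    "test_size": 0.10,
    "seed": 9,
    "num_classes": 17,
}


def load_config(path):
    """Read the JSON settings file and sanity-check the keys the pipeline uses."""
    with open(path, "r", encoding="utf-8") as f:
        config = json.load(f)

    missing = [k for k in REQUIRED_KEYS if k not in config]
    if missing:
        raise KeyError(f"config is missing keys: {missing}")
    if not 0.10 <= config["test_size"] <= 0.90:
        raise ValueError("test_size must lie between 0.10 and 0.90")
    # Keras also accepts a path to a weights file; this sketch only allows the
    # two values mentioned on the slides ('imagenet' or null -> None).
    if config["weights"] not in ("imagenet", None):
        raise ValueError("weights must be 'imagenet' or null")
    return config
```

To create the file in the first place, json.dump(EXAMPLE_CONFIG, f, indent=2) on an open file writes the same key-value pairs in JSON syntax.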

- 12. The keys in the configuration file:
  1. model: the pre-trained network to use, here 'mobilenet'.
  2. weights: takes the value 'imagenet', as we are using weights pre-trained on ImageNet. You can also set it to null (None in Python) to start from randomly initialized weights.
  3. include_top: takes the value false, meaning the fully connected classification layers at the top of the network are left out, so we take features from an intermediate layer of the network instead of its final predictions.
  4. test_size: a value between 0.10 and 0.90, the fraction of the overall data held out for testing; the rest is used for training.
  5. seed: any integer value; fixing it reproduces the same results every time you run the code.
  6. num_classes: the number of classes (labels) in the image classification problem, 17 for FLOWERS17.
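The test_size and seed keys work together: the data is shuffled with a generator seeded by seed, and a test_size fraction is held out. The sketch below is a pure-Python stand-in written for illustration; it is not the project's code, and in a scikit-learn pipeline the usual call is train_test_split(..., test_size=..., random_state=...), which uses its own random generator and so produces a different shuffle. The function name seeded_train_test_split is hypothetical.

```python
import math
import random


def seeded_train_test_split(items, labels, test_size, seed):
    """Shuffle with a fixed seed, then hold out `test_size` of the data for testing.

    Hypothetical stand-in for scikit-learn's train_test_split; same idea,
    different random generator.
    """
    if not 0.0 < test_size < 1.0:
        raise ValueError("test_size must be a fraction between 0 and 1")
    if len(items) != len(labels):
        raise ValueError("items and labels must have the same length")

    indices = list(range(len(items)))
    rng = random.Random(seed)  # private generator: same seed -> same shuffle
    rng.shuffle(indices)

    # Round the held-out count up so a small test_size still yields test samples.
    n_test = math.ceil(len(items) * test_size)
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    train = ([items[i] for i in train_idx], [labels[i] for i in train_idx])
    test = ([items[i] for i in test_idx], [labels[i] for i in test_idx])
    return train, test
```

For example, with 1360 images and test_size 0.10, 136 images are held out for testing and 1224 remain for training; running it again with the same seed gives exactly the same split.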

- 15. Output

- 16. Output Files

- 19. Output
