Kerberos, Token and Hadoop


This deck introduces and illustrates the use cases, benefits and problems of deploying Kerberos on Hadoop, and shows how token support and TokenPreauth can help solve those problems. It also briefly introduces the Haox project, a Java client library for Kerberos.


TokenPreauth mechanism (cont’d)

- The client principal may or may not exist during token validation and ticket issuing
- `kinit -X token=[Your-Token]`; by default the token is read from `~/.kerbtoken`
- How the token is generated may be out of scope, left to the token authority
- Identity token -> Ticket Granting Ticket; access token -> Service Ticket
- The lifetime of a ticket derived from a token SHOULD fall within the token's validity time frame
- A ticket derived from a token may not be renewable

![Slide 20: TokenPreauth mechanism (cont’d)](https://image.slidesharecdn.com/kerberosonhadoop-141016043552-conversion-gate01/85/Kerberos-Token-and-Hadoop-20-320.jpg)
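The issuing rules on this slide can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Haox, MIT Kerberos or TokenPreauth API: the names `Token`, `Ticket`, `resolve_token_source`, `derive_ticket` and the `max_life` parameter are hypothetical. It shows an explicit `token=` value taking precedence over the `~/.kerbtoken` default, an identity token mapping to a TGT and an access token to a service ticket, the ticket end time being clamped so it never outlives the token, and the ticket being issued as non-renewable (the conservative reading of "may not be renewable"). Token parsing and signature verification are left to the token authority, as the slide suggests, and are not shown.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from pathlib import Path
from typing import Optional

# Default token location named on the slide; everything else here is hypothetical.
DEFAULT_TOKEN_CACHE = Path.home() / ".kerbtoken"


@dataclass(frozen=True)
class Token:
    kind: str             # hypothetical: "identity" or "access"
    subject: str          # principal asserted by the token authority
    not_before: datetime  # start of the token's validity window
    expires: datetime     # end of the token's validity window


@dataclass(frozen=True)
class Ticket:
    ticket_type: str      # "TGT" or "service"
    client: str
    start: datetime
    end: datetime
    renewable: bool


def resolve_token_source(explicit: Optional[str], cache: Path = DEFAULT_TOKEN_CACHE) -> str:
    """Mimic `kinit -X token=...`: an explicit value wins, else read the default file."""
    if explicit:
        return explicit
    return cache.read_text(encoding="utf-8").strip()


def derive_ticket(token: Token, now: datetime, max_life: timedelta) -> Ticket:
    """Issue a ticket from an already-validated token (sketch of the slide's rules)."""
    if not (token.not_before <= now < token.expires):
        raise ValueError("token is outside its validity window")
    # Identity token -> Ticket Granting Ticket; access token -> Service Ticket.
    ticket_type = {"identity": "TGT", "access": "service"}.get(token.kind)
    if ticket_type is None:
        raise ValueError(f"unknown token kind: {token.kind!r}")
    # Clamp the lifetime so the ticket stays within the token's time frame.
    end = min(now + max_life, token.expires)
    # Conservative choice: tickets derived from tokens are not renewable.
    return Ticket(ticket_type, token.subject, now, end, renewable=False)


if __name__ == "__main__":
    now = datetime(2014, 10, 16, 9, 0, tzinfo=timezone.utc)
    tok = Token("identity", "alice@EXAMPLE.COM",
                now - timedelta(minutes=5), now + timedelta(hours=2))
    tgt = derive_ticket(tok, now, max_life=timedelta(hours=10))
    print(tgt.ticket_type, tgt.end - tgt.start, tgt.renewable)  # -> TGT 2:00:00 False
```

Clamping with `min()` rather than rejecting a long `max_life` keeps the KDC's normal policy as an upper bound while still honoring the token's expiry, which is one plausible way to satisfy the "SHOULD be in the time frame of the token" rule.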




[English]Medium Inc Company Profile![[English]Medium Inc Company Profile](https://cdn.slidesharecdn.com/ss_thumbnails/enmediumcompanyintro-200828053602-thumbnail.jpg?width=560&fit=bounds)

![[English]Medium Inc Company Profile](https://cdn.slidesharecdn.com/ss_thumbnails/enmediumcompanyintro-200828053602-thumbnail.jpg?width=560&fit=bounds)

![[English]Medium Inc Company Profile](https://cdn.slidesharecdn.com/ss_thumbnails/enmediumcompanyintro-200828053602-thumbnail.jpg?width=560&fit=bounds)

![[English]Medium Inc Company Profile](https://cdn.slidesharecdn.com/ss_thumbnails/enmediumcompanyintro-200828053602-thumbnail.jpg?width=560&fit=bounds)

[English]Medium Inc Company ProfileJaeKwon9 Medium Inc. is an enterprise blockchain solution company based in South Korea. Products including MDL1.0 and MDL3.0 offer the industry's finest Hyperledger Fabric solution in terms of transaction speed and scalability.

Accumulo Summit 2015: Ambari and Accumulo: HDP 2.3 Upcoming Features [Sponsored]![Accumulo Summit 2015: Ambari and Accumulo: HDP 2.3 Upcoming Features [Sponsored]](https://cdn.slidesharecdn.com/ss_thumbnails/as2015-hwx-sponsored-talkrinaldibillie-150501220921-conversion-gate02-thumbnail.jpg?width=560&fit=bounds)

![Accumulo Summit 2015: Ambari and Accumulo: HDP 2.3 Upcoming Features [Sponsored]](https://cdn.slidesharecdn.com/ss_thumbnails/as2015-hwx-sponsored-talkrinaldibillie-150501220921-conversion-gate02-thumbnail.jpg?width=560&fit=bounds)

![Accumulo Summit 2015: Ambari and Accumulo: HDP 2.3 Upcoming Features [Sponsored]](https://cdn.slidesharecdn.com/ss_thumbnails/as2015-hwx-sponsored-talkrinaldibillie-150501220921-conversion-gate02-thumbnail.jpg?width=560&fit=bounds)

![Accumulo Summit 2015: Ambari and Accumulo: HDP 2.3 Upcoming Features [Sponsored]](https://cdn.slidesharecdn.com/ss_thumbnails/as2015-hwx-sponsored-talkrinaldibillie-150501220921-conversion-gate02-thumbnail.jpg?width=560&fit=bounds)

Accumulo Summit 2015: Ambari and Accumulo: HDP 2.3 Upcoming Features [Sponsored]Accumulo Summit Talk Abstract

The upcoming Hortonworks Data Platform (HDP) 2.3 includes significant additions to Accumulo, within the project itself and in its interactions with the larger Hadoop ecosystem.This session will cover high-level changes that improve usability, management and security of Accumulo. Administrators of Accumulo now have the ability to deploy, manage and dynamically configure Accumulo clusters using Apache Ambari.As a part of Ambari integration, the metrics system in Accumulo has been updated to use the standard “Hadoop Metrics2” metrics subsystem which provides native Ganglia and Graphite support as well as supporting the new Ambari Metrics System. On the security front,Accumulo was also improved to support client authentication via Kerberos, while earlier versions of Accumulo only supported Kerberos authentication for server processes.With these changes,Accumulo clients can authenticate solely using their Kerberos identity across the entire Hadoop cluster without the need to manage passwords.

Speakers

Billie Rinaldi Senior Member of Technical Staff, Hortonworks

Billie Rinaldi is a Senior Member of Technical Staff at Hortonworks, Inc., currently prototyping new features related to application monitoring and deployment in the Apache Hadoop ecosystem. Prior to August 2012, Billie engaged in big data science and research at the National Security Agency. Since 2008, she has been providing technical leadership regarding the software that is now Apache Accumulo. Billie is the VP of Apache Accumulo, the Accumulo Project Management Committee Chair, and a member of the Apache Software Foundation. She holds a Ph.D. in applied mathematics from Rensselaer Polytechnic Institute.

Josh Elser Member of Technical Staff, Hortonworks

Josh is a member of the engineering staff at Hortonworks. He is strong advocate for open source software and is an Apache Accumulo committer and PMC member. He is also a committer and PMC member of Apache Slider (incubating) and regularly contributes to other Apache projects in the Apache Hadoop ecosystem. He holds a Bachelor's degree in Computer Science from Rensselaer Polytechnic Institute.

System Design SpecificationsThere are various methods of pro.docx

System Design SpecificationsThere are various methods of pro.docxdeanmtaylor1545 System Design Specifications

There are various methods of protecting data-in-transit, also referred to as data-in-motion. However, the most significant vulnerability with cloud storage is not securing the data in transit; it is the security of the data at rest. Therefore, before transmitting data, it is essential to ensure the data is encrypted with tools such as VeraCrypt, which is a tool that enables the use of encrypted containers to protect data at rest.

Secure encryption must be used in order to maintain confidentiality and integrity when transmitting data between the cloud server and the client. Encryption will ensure that only users with the key that was used to encrypt the data will be able to decrypt the data and view the contents (Alsulami, Alharbi, & Monowar, 2015). One method of encryption would be a technique such as the hybrid cryptographic scheme shown in Figure 1.

Figure 1. Hybrid Cryptographic Scheme.

As we see in Figure 1, Alice is sending an encrypted message to Bob using the Hybrid Cryptographic Scheme, which utilizes a combination of Public Key Crypto, Secret Key Crypto, and a Hash Function. Alice’s Private Key and the Hash Function are used to creating a digital signature, and Bob’s public key is combined with a random session key and public key crypto to create the encrypted session key. Alice’s message and the random session key are used in conjunction with the hash function and secret key crypto to formulate the encrypted message.

The combination of the encrypted message and the encrypted session key becomes what is known as the digital envelope. The hash function is a one-way encryption algorithm that uses no key, but instead uses a fixed-length hash value that computes based upon the plaintext, which makes it impossible for both the contents and length of the plaintext to be recovered, thus providing a digital fingerprint to ensure the integrity of the file. Bob recovers the hash value by decrypting the digital signature with Alice's public key. Then Bob recovers the secret session key using his private key and decrypts the encrypted message. If the resultant hash value is different from the value supplied by Alice, then Bob knows that the message has been altered; if the hash values are the same, Bob can have confidence that the message he received is identical to the one that Alice sent (Kessler, 2019).

Now that the messages are encrypted, we will need to use a secure means of transmitting the messages from point A to point B. Various protocols can provide security, such as Hypertext Transfer Protocol Secure (HTTPS), which is a variant of HTTP that adds a layer of security through an SSL or TLS protocol connection (“What is HTTPS,” n.d.). SSL ensures that before communication is established between a client browser and a cloud server, an encrypted link is created between the two (“What is Secure Sockets Layer,” n.d.). TLS is more efficient and secure than SSL as it has stronger message authentication, key mat.

IRJET - Confidential Image De-Duplication in Cloud Storage

IRJET - Confidential Image De-Duplication in Cloud StorageIRJET Journal This document proposes a confidential image de-duplication system for cloud storage. It introduces a hybrid cloud architecture using both public and private clouds. To provide greater security, the private cloud employs tiered authentication. The system performs de-duplication by comparing hash values of files generated using MD5 and SHA algorithms, to detect duplicate files and reduce storage usage. It encrypts files using AES before storage in the cloud. The private cloud server manages encryption keys and performs de-duplication checks by comparing file hashes and contents. This allows detection of duplicate files while preserving data privacy through encryption.

Introduction to Blockchain and Hyperledger

Introduction to Blockchain and HyperledgerDev_Events Nitesh Thakrar, IT Software Architect,

IBM @niteshpthakrar and Benjamin Fuentes, Software

Architect and Developer, IBM, @benji_fuentes

This workshop will be in 3 stages:

1. A brief presentation on Blockchain and why

Hyperledger

2. A demo use case to explain the architecture and the code behind the demo

3. Finally, the attendees will create their own blockchain application on the cloud. The hands-on

will also invite them to use the appropriate APIs and event update a smart contract.Majority of

the time will be in doing the hands-on (step 3) so that the attendees are able to continue

developing their application after the event.Requirements: Attendees will need to bring their

laptops and be able to connect to wifi.

Hadoop and Big Data Security

Hadoop and Big Data SecurityChicago Hadoop Users Group This document discusses security challenges related to big data and Hadoop. It notes that as data grows exponentially, the complexity of managing, securing, and enforcing privacy restrictions on data sets increases. Organizations now need to control access to data scientists based on authorization levels and what data they are allowed to see. Mismanagement of data sets can be costly, as shown by incidents at AOL, Netflix, and a Massachusetts hospital that led to lawsuits and fines. The document then provides a brief history of Hadoop security, noting that it was originally developed without security in mind. It outlines the current Kerberos-centric security model and talks about some vendor solutions emerging to enhance Hadoop security. Finally, it provides guidance on developing security and privacy

Migrating Hundreds of Legacy Applications to Kubernetes - The Good, the Bad, ...

Migrating Hundreds of Legacy Applications to Kubernetes - The Good, the Bad, ...QAware GmbH CloudNativeCon North America 2017, Austin (Texas, USA): Talk by Josef Adersberger (@adersberger, CTO at QAware)

Abstract:

Running applications on Kubernetes can provide a lot of benefits: more dev speed, lower ops costs, and a higher elasticity & resiliency in production. Kubernetes is the place to be for cloud native apps. But what to do if you’ve no shiny new cloud native apps but a whole bunch of JEE legacy systems? No chance to leverage the advantages of Kubernetes? Yes you can!

We’re facing the challenge of migrating hundreds of JEE legacy applications of a major German insurance company onto a Kubernetes cluster within one year. We're now close to the finish line and it worked pretty well so far.

The talk will be about the lessons we've learned - the best practices and pitfalls we've discovered along our way. We'll provide our answers to life, the universe and a cloud native journey like:

- What technical constraints of Kubernetes can be obstacles for applications and how to tackle these?

- How to architect a landscape of hundreds of containerized applications with their surrounding infrastructure like DBs MQs and IAM and heavy requirements on security?

- How to industrialize and govern the migration process?

- How to leverage the possibilities of a cloud native platform like Kubernetes without challenging the tight timeline?

The Good, the Bad and the Ugly of Migrating Hundreds of Legacy Applications ...

The Good, the Bad and the Ugly of Migrating Hundreds of Legacy Applications ...Josef Adersberger

Running applications on Kubernetes can provide a lot of benefits: more dev speed, lower ops costs, and a higher elasticity & resiliency in production. Kubernetes is the place to be for cloud native apps. But what to do if you’ve no shiny new cloud native apps but a whole bunch of JEE legacy systems? No chance to leverage the advantages of Kubernetes? Yes you can!

We’re facing the challenge of migrating hundreds of JEE legacy applications of a major German insurance company onto a Kubernetes cluster within one year. We're now close to the finish line and it worked pretty well so far.

The talk will be about the lessons we've learned - the best practices and pitfalls we've discovered along our way. We'll provide our answers to life, the universe and a cloud native journey like:

- What technical constraints of Kubernetes can be obstacles for applications and how to tackle these?

- How to architect a landscape of hundreds of containerized applications with their surrounding infrastructure like DBs MQs and IAM and heavy requirements on security?

- How to industrialize and govern the migration process?

- How to leverage the possibilities of a cloud native platform like Kubernetes without challenging the tight timeline?

Keystone - Openstack Identity Service

Keystone - Openstack Identity Service Prasad Mukhedkar This document provides an overview of Keystone, the OpenStack identity service. It discusses key Keystone concepts like projects, domains, actors (users and groups), service catalogs, and identity providers. It also covers token types in Keystone including UUID, PKI, and Fernet tokens. The document outlines Keystone's architecture and APIs. It describes how tokens are used to authenticate to OpenStack services and how the service catalog provides endpoint information. Troubleshooting tips are also provided like checking Keystone logs and enabling debug output.

Enabling Web Apps For DoD Security via PKI/CAC Enablement (Forge.Mil case study)

Enabling Web Apps For DoD Security via PKI/CAC Enablement (Forge.Mil case study)Richard Bullington-McGuire

Ad


Kerberos, Token and Hadoop

- 1. Kerberos, Token and Hadoop. MIT Kerberos Day, Intel Big Data Technologies, [email protected]

- 2. Outline: 1. Kerberos and Hadoop; 2. Token and Hadoop; 3. Token and Kerberos; 4. Kerberos, Token and Hadoop; 5. Future work

- 4. When Hadoop added security: initially there was no authentication at all. Kerberos or SSL/TLS? Adding security should not impact performance much. Kerberos is used to authenticate users, GSSAPI/SASL is used between client and server, and encryption on the wire can be optional.

- 5. Kerberos authentication: end users to services, using passwords; services to services, using service credentials/keytabs; services to services on behalf of (delegating) users, using service credentials; MapReduce tasks to services on behalf of users, using delegation tokens.

- 7. Deploying Kerberos: provisioning service credentials/keytabs
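
Provisioning typically means one principal, and one keytab entry, per service per node. Hadoop configurations commonly write service principals with an `_HOST` placeholder (for example `nn/_HOST@EXAMPLE.COM`) that each daemon replaces with its own lower-cased fully qualified host name. The Java sketch below expands such patterns into the list of concrete principals an administrator would need to create before exporting keytabs; `PrincipalPlanner`, its methods and the sample realm are hypothetical, and the kadmin/keytab export step itself is not shown.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical planner: expands "service/_HOST@REALM" patterns into per-node principals. */
class PrincipalPlanner {

    /** Replaces the "_HOST" host component with the lower-cased FQDN; concrete principals pass through. */
    static String expand(String pattern, String fqdn) {
        int slash = pattern.indexOf('/');
        int at = pattern.indexOf('@');
        if (slash < 0 || at < slash) {
            throw new IllegalArgumentException("expected service/host@REALM: " + pattern);
        }
        String host = pattern.substring(slash + 1, at);
        if (!host.equals("_HOST")) {
            return pattern; // already bound to a specific host
        }
        return pattern.substring(0, slash + 1) + fqdn.toLowerCase(Locale.ROOT) + pattern.substring(at);
    }

    /** Every principal (and hence keytab entry) needed for the given services on the given nodes. */
    static List<String> plan(List<String> patterns, List<String> hosts) {
        List<String> principals = new ArrayList<>();
        for (String host : hosts) {
            for (String pattern : patterns) {
                principals.add(expand(pattern, host));
            }
        }
        return principals;
    }

    public static void main(String[] args) {
        List<String> principals = plan(
                List.of("nn/_HOST@EXAMPLE.COM", "HTTP/_HOST@EXAMPLE.COM"),
                List.of("Master1.Example.COM"));
        principals.forEach(System.out::println);
    }
}
```

Generating the list from patterns keeps the configuration identical across nodes, which matters when services are provisioned or relocated dynamically.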

- 8. Deploying Kerberos (cont'd)

- 9. Strengths: symmetric encryption and mutual authentication; flexible SASL QoP, from authentication up to privacy, with authentication by default; command-line (kinit, SSO) and browser (SPNEGO) access; mature, available on Linux/Windows and in J2SE.
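
The QoP levels behind "flexible SASL QoP" map onto the three standard SASL quality-of-protection tokens. As an illustration, the sketch below takes Hadoop-style protection names (`authentication`, `integrity`, `privacy`, as used by the `hadoop.rpc.protection` setting) and builds the property map that would be handed to `javax.security.sasl.Sasl.createSaslClient` or `createSaslServer`; only `privacy` (`auth-conf`) turns on wire encryption. The class name is hypothetical.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import javax.security.sasl.Sasl;

/** Hypothetical helper mapping Hadoop-style protection levels to SASL QOP properties. */
class QopSettings {

    /** Maps one protection level to the matching SASL QOP token. */
    static String toSaslQop(String rpcProtection) {
        switch (rpcProtection.trim().toLowerCase(Locale.ROOT)) {
            case "authentication":
                return "auth";      // authenticate only, no per-message protection
            case "integrity":
                return "auth-int";  // adds integrity protection of each message
            case "privacy":
                return "auth-conf"; // adds confidentiality, i.e. wire encryption
            default:
                throw new IllegalArgumentException("unknown protection level: " + rpcProtection);
        }
    }

    /** Builds SASL properties from a comma-separated list, keeping the preference order. */
    static Map<String, String> saslProps(String rpcProtectionList) {
        StringBuilder qop = new StringBuilder();
        for (String level : rpcProtectionList.split(",")) {
            if (qop.length() > 0) {
                qop.append(',');
            }
            qop.append(toSaslQop(level));
        }
        Map<String, String> props = new HashMap<>();
        props.put(Sasl.QOP, qop.toString()); // key is "javax.security.sasl.qop"
        return props;
    }

    public static void main(String[] args) {
        System.out.println(saslProps("privacy,authentication"));
    }
}
```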

- 10. Challenges: the Hadoop ecosystem is large and still evolving fast, and other authentication solutions are desired; a Hadoop cluster can be large and its traffic huge; services are dynamically provisioned and relocated on demand; applications run in containerized environments and can be automatically scheduled and relocated to other nodes; different deployment environments and scenarios bring different requirements.

- 11. Problems: Kerberos feature support in Java lags behind (PKINIT; S4U only added recently; etc.); no fine-grained authorization support; no strong delegation support in the Kerberos/Java stack; browser access via SPNEGO is inconvenient and limited, and workarounds that bypass Kerberos expose the internal delegation token; encryption is not enabled in SASL (via QoP) by default and may have a performance impact (benchmarking and optimization needed?); AES-256 isn't supported by Java by default; to just get things working, allow_weak_crypto is used; the kinit -R issue.
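
Whether AES-256 is usable depends on the JCE policy of the running JRE: older releases shipped a limited-strength policy that capped AES at 128 bits, while recent JDKs enable unlimited strength by default. A small check such as the one below (class name hypothetical) can tell a deployment whether `aes256-cts-hmac-sha1-96` keys in a keytab will be usable before keytabs are generated with AES-256 keys only.

```java
import java.security.NoSuchAlgorithmException;
import javax.crypto.Cipher;

/** Hypothetical check of the JCE policy for AES key sizes. */
class AesPolicyCheck {

    /** Largest AES key size (in bits) the installed policy allows; 0 if AES is unavailable. */
    static int maxAesKeyLength() {
        try {
            return Cipher.getMaxAllowedKeyLength("AES");
        } catch (NoSuchAlgorithmException e) {
            return 0;
        }
    }

    /** aes256-cts-hmac-sha1-96 needs 256-bit keys; with a limited policy it must be dropped. */
    static boolean aes256Allowed() {
        return maxAesKeyLength() >= 256;
    }

    public static void main(String[] args) {
        System.out.println("max AES key length: " + maxAesKeyLength());
        System.out.println(aes256Allowed()
                ? "AES-256 Kerberos enctypes usable"
                : "limited JCE policy: enable unlimited strength or remove aes256 enctypes");
    }
}
```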

- 12. Outline: 1. Kerberos and Hadoop; 2. Token and Hadoop; 3. Token and Kerberos; 4. Kerberos, Token and Hadoop; 5. Future work

- 13. Hadoop tokens: existing Hadoop tokens for internal authentication include the delegation token, job token, block access token, …

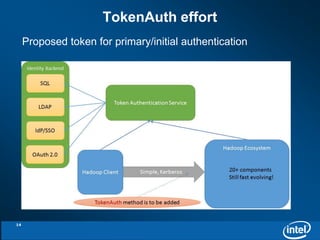
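
Hadoop's internal tokens avoid a KDC round trip by relying on a shared secret: the issuing service computes the token password as an HMAC of the serialized token identifier under a master key it holds, and later verifies a presented token by recomputing that HMAC. A minimal sketch of that idea, assuming HMAC-SHA256 and a made-up `owner|renewer|maxDate|sequence` identifier layout (loosely modelled on delegation token fields); it is not Hadoop's `SecretManager` API or wire format.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical shared-secret token manager: password = HMAC(masterKey, identifier). */
class SimpleTokenSecretManager {
    private final SecretKeySpec masterKey;

    SimpleTokenSecretManager(byte[] masterKeyBytes) {
        this.masterKey = new SecretKeySpec(masterKeyBytes, "HmacSHA256");
    }

    /** Returned with the identifier to the token holder (e.g. a MapReduce task). */
    byte[] createPassword(byte[] identifier) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(masterKey);
            return mac.doFinal(identifier);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** The service recomputes the HMAC locally; MessageDigest.isEqual compares in constant time. */
    boolean verify(byte[] identifier, byte[] presentedPassword) {
        return MessageDigest.isEqual(createPassword(identifier), presentedPassword);
    }

    /** Made-up identifier serialization for the sketch. */
    static byte[] identifier(String owner, String renewer, long maxDateMillis, int sequenceNumber) {
        String id = owner + "|" + renewer + "|" + maxDateMillis + "|" + sequenceNumber;
        return id.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        SimpleTokenSecretManager nn = new SimpleTokenSecretManager(
                "demo-master-key".getBytes(StandardCharsets.UTF_8));
        byte[] id = identifier("alice", "yarn", 1_700_000_000_000L, 42);
        byte[] password = nn.createPassword(id);
        System.out.println("valid: " + nn.verify(id, password));
        System.out.println("tampered: "
                + nn.verify(identifier("mallory", "yarn", 1_700_000_000_000L, 42), password));
    }
}
```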

- 14. TokenAuth effort: a proposed token for primary/initial authentication

- 15. Requirements: allow integrating third-party authentication solutions; help enforce fine-grained authorization; support for OAuth 2.0 tokens and workflows is desired for cloud deployment.

- 16. Challenges: involves great change across the ecosystem; may break existing applications built on the platform; overly complex: involving both Identity Tokens and Access Tokens, with their related services, makes the workflow quite complex (reinventing Kerberos?); big impact on performance or security: we either use TLS/SSL to protect tokens or don't protect them at all, and the former costs performance while the latter raises security concerns.

- 17. Outline: 1. Kerberos and Hadoop; 2. Token and Hadoop; 3. Token and Kerberos; 4. Kerberos, Token and Hadoop; 5. Future work

- 18. TokenPreauth mechanism: allows a user to authenticate to the KDC using third-party tokens instead of a password.

- 19. TokenPreauth mechanism (cont’d): defines the required token attribute values based on JWT, reusing existing attributes; supports Bearer Tokens and allows Holder-of-Key Tokens to be supported in the future; supports Identity Tokens (ID Tokens) and allows Access Tokens to be supported in the future.
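
A JWT in compact form is three base64url-encoded parts (`header.payload.signature`), and its registered claims such as `iss`, `sub`, `aud` and `exp` (RFC 7519) are the attributes a TokenPreauth-style KDC plugin would read. The sketch below only decodes the payload and pulls out claims with regular expressions, as a stand-in for a proper JSON parser; it performs no signature, issuer or audience validation, which a real implementation must do before trusting any claim. Class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical, validation-free claim extraction from a compact JWT. */
class JwtClaims {

    /** Decodes the middle (payload) part; base64url padding is optional for the JDK decoder. */
    static String payloadJson(String compactJwt) {
        String[] parts = compactJwt.split("\\.", -1);
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected header.payload.signature");
        }
        return new String(Base64.getUrlDecoder().decode(parts[1]), StandardCharsets.UTF_8);
    }

    /** Simplified lookup of a string claim such as "sub"; returns null if absent. */
    static String stringClaim(String json, String name) {
        Matcher m = Pattern.compile("\"" + Pattern.quote(name) + "\"\\s*:\\s*\"([^\"]*)\"").matcher(json);
        return m.find() ? m.group(1) : null;
    }

    /** Simplified lookup of a numeric claim such as "exp" (seconds since the epoch). */
    static Long numericClaim(String json, String name) {
        Matcher m = Pattern.compile("\"" + Pattern.quote(name) + "\"\\s*:\\s*(\\d+)").matcher(json);
        return m.find() ? Long.valueOf(m.group(1)) : null;
    }

    public static void main(String[] args) {
        Base64.Encoder enc = Base64.getUrlEncoder().withoutPadding();
        String header = enc.encodeToString("{\"alg\":\"none\"}".getBytes(StandardCharsets.UTF_8));
        String payload = enc.encodeToString(
                "{\"sub\":\"alice\",\"exp\":1700000000}".getBytes(StandardCharsets.UTF_8));
        String json = payloadJson(header + "." + payload + ".");
        System.out.println(stringClaim(json, "sub") + " until " + numericClaim(json, "exp"));
    }
}
```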

- 20. TokenPreauth mechanism (cont’d): the client principal may or may not exist when the token is validated and the ticket issued; kinit -X token=[Your-Token], which by default reads ~/.kerbtoken; how tokens are generated may be out of scope, left to the token authority; Identity Token -> Ticket Granting Ticket, Access Token -> Service Ticket; the lifetime of a ticket derived from a token SHOULD fall within the token's validity period; a ticket derived from a token may not be renewable.
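
The lifetime rule on this slide amounts to taking the earliest of the requested end time, the KDC's maximum ticket life and the token's expiry, after checking that the token is valid right now. The sketch also encodes the Identity Token -> TGT and Access Token -> Service Ticket mapping. `TokenTicketPolicy` and its inputs are hypothetical, and tickets are treated as non-renewable, so no renew-till time is computed.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical policy for tickets derived from tokens. */
class TokenTicketPolicy {

    enum TokenKind { IDENTITY, ACCESS }

    enum TicketKind { TGT, SERVICE_TICKET }

    /** Identity Token -> Ticket Granting Ticket, Access Token -> Service Ticket. */
    static TicketKind ticketFor(TokenKind kind) {
        return kind == TokenKind.IDENTITY ? TicketKind.TGT : TicketKind.SERVICE_TICKET;
    }

    /**
     * End time = earliest of (requested till, now + KDC max life, token expiry).
     * Rejects a token that is not yet valid or already expired.
     */
    static Instant ticketEndTime(Instant now, Instant requestedTill, Duration kdcMaxLife,
                                 Instant tokenNotBefore, Instant tokenExpiry) {
        if (now.isBefore(tokenNotBefore) || !now.isBefore(tokenExpiry)) {
            throw new IllegalArgumentException("token not valid at " + now);
        }
        Instant end = now.plus(kdcMaxLife);
        if (requestedTill.isBefore(end)) {
            end = requestedTill;
        }
        if (tokenExpiry.isBefore(end)) {
            end = tokenExpiry; // the ticket never outlives the token
        }
        return end;
    }

    public static void main(String[] args) {
        Instant now = Instant.now();
        Instant end = ticketEndTime(now, now.plus(Duration.ofDays(1)), Duration.ofHours(10),
                now.minusSeconds(30), now.plus(Duration.ofHours(2)));
        System.out.println(ticketFor(TokenKind.IDENTITY) + " valid until " + end);
    }
}
```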

- 21. Access Token profile: based on TokenPreauth, allows an Access Token to be used to request a Service Ticket directly in the AS exchange; should be useful for supporting the OAuth 2.0 web flow in a Kerberized resource server with backend services.

- 22. Why it matters: tokens and OAuth are widely used on the Internet, in the cloud and on mobile, and are increasingly popular; this allows Kerberized systems to be supported in the token world; it also allows Kerberized systems to integrate other authentication solutions through tokens and a Token Authority, without modifying existing code; it may help Kerberos evolve on both cloud and big data platforms; it makes extra sense for Hadoop, supporting tokens across the ecosystem without a performance impact.

- 23. How it is going: we're collaborating with MIT on standardization; initial drafts are under review by the MIT team and should be submitted to the KITTEN WG soon; a PoC targeting Hadoop is done.

- 24. Outline: 1. Kerberos and Hadoop; 2. Token and Hadoop; 3. Token and Kerberos; 4. Kerberos, Token and Hadoop; 5. Future work

- 25. Kerberos + Token for Hadoop: let’s combine all of these together

- 26. PoC: TokenPreauth plugin

- 27. PoC: Token authentication JAAS module

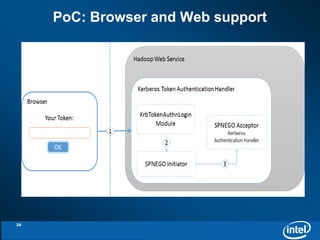
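
A token authentication JAAS module plugs into the standard `javax.security.auth.spi.LoginModule` contract: `login()` obtains and checks the credential, `commit()` attaches a principal to the `Subject`, and `abort()`/`logout()` undo it. The skeleton below is a hypothetical illustration rather than the PoC's code: it asks for the token through a `PasswordCallback` and delegates validation to a pluggable, hypothetical `TokenValidator` interface; `TokenPrincipal` is likewise invented for the sketch.

```java
import java.io.IOException;
import java.security.Principal;
import java.util.Map;
import javax.security.auth.Subject;
import javax.security.auth.callback.Callback;
import javax.security.auth.callback.CallbackHandler;
import javax.security.auth.callback.PasswordCallback;
import javax.security.auth.callback.UnsupportedCallbackException;
import javax.security.auth.login.FailedLoginException;
import javax.security.auth.login.LoginException;
import javax.security.auth.spi.LoginModule;

/** Hypothetical principal naming the user a token was issued to. */
final class TokenPrincipal implements Principal {
    private final String name;

    TokenPrincipal(String name) { this.name = name; }

    @Override public String getName() { return name; }
    @Override public boolean equals(Object o) {
        return o instanceof TokenPrincipal && ((TokenPrincipal) o).name.equals(name);
    }
    @Override public int hashCode() { return name.hashCode(); }
    @Override public String toString() { return "TokenPrincipal[" + name + "]"; }
}

/** Skeleton token LoginModule with a pluggable validation step. */
class TokenLoginModule implements LoginModule {

    /** Returns the authenticated user name, or null when the token is rejected. */
    interface TokenValidator { String validate(String token); }

    private final TokenValidator validator;
    private Subject subject;
    private CallbackHandler handler;
    private String user;              // set by a successful login()
    private TokenPrincipal principal; // set by commit()

    /** JAAS instantiates modules reflectively; this default rejects every token. */
    public TokenLoginModule() { this(token -> null); }

    TokenLoginModule(TokenValidator validator) { this.validator = validator; }

    @Override
    public void initialize(Subject subject, CallbackHandler handler,
                           Map<String, ?> sharedState, Map<String, ?> options) {
        this.subject = subject;
        this.handler = handler;
    }

    @Override
    public boolean login() throws LoginException {
        // Ask the application for the token through the standard PasswordCallback.
        PasswordCallback cb = new PasswordCallback("token: ", false);
        try {
            handler.handle(new Callback[] {cb});
        } catch (IOException | UnsupportedCallbackException e) {
            throw new LoginException("cannot obtain token: " + e.getMessage());
        }
        char[] token = cb.getPassword(); // a copy; the callback's own copy is cleared next
        cb.clearPassword();
        if (token == null) {
            throw new LoginException("no token supplied");
        }
        user = validator.validate(new String(token));
        if (user == null) {
            throw new FailedLoginException("token rejected");
        }
        return true;
    }

    @Override
    public boolean commit() {
        if (user == null) {
            return false; // login() did not succeed, nothing to add
        }
        principal = new TokenPrincipal(user);
        subject.getPrincipals().add(principal);
        return true;
    }

    @Override
    public boolean abort() { return logout(); }

    @Override
    public boolean logout() {
        if (principal != null) {
            subject.getPrincipals().remove(principal);
        }
        user = null;
        principal = null;
        return true;
    }
}
```

In an application such a module would be listed in a JAAS configuration entry and driven through `javax.security.auth.login.LoginContext`, which calls these methods in order.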

- 28. PoC: Browser and Web support

- 29. Next step: implement the mechanism and get it included in the next MIT Kerberos release, collaborating with the MIT team; or at least provide a plugin binary download and a source code repository for public use and review; build a complete Kerberos-based token solution for Hadoop.

- 30. Haox project: working on a first-class Java Kerberos client library that catches up with the latest Kerberos features and fills the gaps where Java lags (PKINIT, TokenPreauth). Repo: https://github.com/drankye/haox

- 31. Haox-asn1: a data-driven ASN.1 encoding/decoding framework. A simple example: the AuthorizationData type from RFC 4120.

- 32. Haox-asn1 (cont’d): a data-driven ASN.1 encoding/decoding framework. A simple example: the AuthorizationData type from RFC 4120.

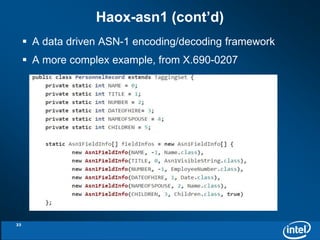
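
To make the AuthorizationData example concrete, the sketch below hand-encodes it in DER. RFC 4120 defines `AuthorizationData ::= SEQUENCE OF SEQUENCE { ad-type [0] Int32, ad-data [1] OCTET STRING }`, and the Kerberos ASN.1 module uses explicit tagging, so each field is wrapped in a constructed context tag (`0xA0`, `0xA1`). This is a plain illustration of the encoding, not Haox-asn1's data-driven API; the class name is hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.math.BigInteger;

/** Hypothetical hand-rolled DER encoder for Kerberos AuthorizationData. */
class AuthorizationDataDer {

    /** Tag-length-value with DER definite lengths (short form below 128, long form otherwise). */
    static byte[] tlv(int tag, byte[] value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(tag);
        int len = value.length;
        if (len < 0x80) {
            out.write(len);
        } else {
            byte[] lenBytes = BigInteger.valueOf(len).toByteArray();
            int off = lenBytes[0] == 0 ? 1 : 0;        // drop the sign byte
            out.write(0x80 | (lenBytes.length - off)); // number of length octets
            out.write(lenBytes, off, lenBytes.length - off);
        }
        out.write(value, 0, value.length);
        return out.toByteArray();
    }

    static byte[] concat(byte[]... parts) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (byte[] p : parts) {
            out.write(p, 0, p.length);
        }
        return out.toByteArray();
    }

    /** One element: SEQUENCE { [0] INTEGER ad-type, [1] OCTET STRING ad-data }. */
    static byte[] entry(int adType, byte[] adData) {
        // BigInteger.toByteArray gives the minimal two's-complement form DER requires.
        byte[] type = tlv(0xA0, tlv(0x02, BigInteger.valueOf(adType).toByteArray()));
        byte[] data = tlv(0xA1, tlv(0x04, adData));
        return tlv(0x30, concat(type, data));
    }

    /** The outer SEQUENCE OF. */
    static byte[] encode(int[] adTypes, byte[][] adData) {
        if (adTypes.length != adData.length) {
            throw new IllegalArgumentException("length mismatch");
        }
        byte[][] entries = new byte[adTypes.length][];
        for (int i = 0; i < adTypes.length; i++) {
            entries[i] = entry(adTypes[i], adData[i]);
        }
        return tlv(0x30, concat(entries));
    }

    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hex(encode(new int[] {1}, new byte[][] {{1, 2}})));
    }
}
```

For a single element with ad-type 1 (AD-IF-RELEVANT) and ad-data `01 02`, the encoder yields `30 0D 30 0B A0 03 02 01 01 A1 04 04 02 01 02`.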

- 33. Haox-asn1 (cont’d): a data-driven ASN.1 encoding/decoding framework. A more complex example, from X.690-0207.

- 34. Haox kerb-crypto: implements des, des3, rc4, aes and camellia encryption and the corresponding checksum types; interoperates with MIT Kerberos; independent of the Kerberos code in the JRE, but relies on JCE.
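
The encryption types listed here have registered numbers (RFC 3961, 3962, 4757 and 6803), and the single-DES types are the ones MIT Kerberos treats as weak, i.e. only permitted when `allow_weak_crypto = true` is set in krb5.conf. The enum below records that and filters a requested enctype list accordingly; the names and the filter are a hypothetical sketch, not Haox's kerb-crypto API.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical enctype table with a weak-crypto filter. */
enum EncType {
    DES_CBC_CRC(1, true),
    DES_CBC_MD5(3, true),
    DES3_CBC_SHA1_KD(16, false),
    AES128_CTS_HMAC_SHA1_96(17, false),
    AES256_CTS_HMAC_SHA1_96(18, false),
    RC4_HMAC(23, false),
    CAMELLIA128_CTS_CMAC(25, false),
    CAMELLIA256_CTS_CMAC(26, false);

    final int id;       // etype number carried in Kerberos messages
    final boolean weak; // single-DES: only usable with allow_weak_crypto

    EncType(int id, boolean weak) {
        this.id = id;
        this.weak = weak;
    }

    static EncType fromId(int id) {
        for (EncType e : values()) {
            if (e.id == id) {
                return e;
            }
        }
        throw new IllegalArgumentException("unsupported etype " + id);
    }

    /** Keeps the requested order but drops weak enctypes unless allow_weak_crypto is set. */
    static List<EncType> permitted(List<EncType> requested, boolean allowWeakCrypto) {
        List<EncType> result = new ArrayList<>();
        for (EncType e : requested) {
            if (allowWeakCrypto || !e.weak) {
                result.add(e);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(permitted(List.of(values()), false));
    }
}
```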

- 35. Haox status: ASN.1 (done); core spec types (done); crypto (done); AS client (in progress); preauth framework (in progress); PKINIT (in progress).

- 36. Future work: combine all of these efforts into a complete token solution for Hadoop; additionally, make Kerberos deployment easier and more ready-to-use, even for large Hadoop clusters; it's Intel's mission to make Hadoop security more enterprise-grade; we're also interested in evolving Kerberos for cloud platforms, in particular how Kerberized services and applications can be dynamically scheduled onto nodes and bootstrapped; we will investigate how Intel technologies such as TEE/TXT can help with all of this.

- 37. Trusted Execution Technology (TXT): establishes a root of trust by measuring hardware and pre-launch software components, and uses the result to: 1. run your workload and data on a trusted; 2. protect your workload and data; 3. avoid compromising security in the cloud; 4. provide sealed and secured storage.

- 38. Kerberos with TXT: with the secured storage provided by TXT, 1. protect the credential cache storing TGTs for Kerberos; 2. protect the token cache for Hadoop; 3. protect encryption keys for data; 4. protect the key store for management.

- 39. Kerberos with TXT (cont’d): with a secured token cache and trusted execution provided by TXT, TokenPreauth can be deployed with a host keytab/certificate.

- 40. Thanks! Your feedback is very welcome. Please contact [email protected] for updates.