Kinectic vision looking deep into depth

- 1. Looking Deep into Depth. Prof. Partha Pratim Das (partha.p.das@gm), Department of Computer Science & Engineering, Indian Institute of Technology Kharagpur. 18th December 2013. Tutorial: NCVPRIPG 2013

- 2. Natural Interaction
  - Understanding human nature
    - How we interact with other people
    - How we interact with the environment
    - How we learn
  - Interaction methods the user is familiar and comfortable with
    - Touch
    - Gesture
    - Voice
    - Eye Gaze

- 6. Natural User Interface
  - Revolutionary change in Human-Computer interaction
  - Interpret natural human communication
  - Computers communicate more like people
  - The user interface becomes invisible or looks and feels so real that it is no longer iconic

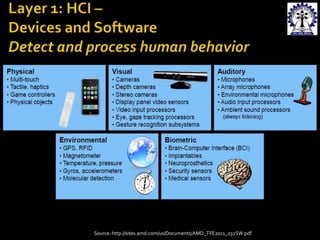

- 11. Factors for NUI Revolution

- 12. Factors for NUI Revolution
  - Sensors: detect human behavior
  - NUI middleware libraries: interpret human behavior
  - Cloud: extends NUI across multiple devices
  - Stack: Sensors, Libraries, Application, Cloud

- 14. Sensors
  - Touch
    - Mobile phones
    - Tablets and Ultrabooks
    - Portable audio players
    - Portable game consoles
  - Touch-less
    - RGB camera
    - Depth camera – Lidar, CamBoard nano
    - RGBD camera – Kinect, Asus Xtion pro, Carmine 1.08
    - Eye tracker – Tobii eye tracker
    - Voice recognizer – Kinect, Asus Xtion pro, Carmine 1.08

- 17. Kinect The most popular NUI Sensor

- 19. Games / Apps: Sports, Dance/Music, Fitness, Family, Action, Adventure, Videos. Examples: Xbox 360, Fitnect, Humanoid, Magic Mirror

- 20. Examples
  - Xbox 360
  - Fitnect – Virtual Fitting Room: http://www.openni.org/solutions/fitnect-virtual-fitting-room/ http://www.fitnect.hu/
  - Controlling a Humanoid Robot with Kinect: http://www.youtube.com/watch?v=_xLTI-b-cZU http://www.wimp.com/robotkinect/
  - Magic Mirror in Bathroom: http://www.extremetech.com/computing/94751-the-newyork-times-magic-mirror-will-bring-shopping-to-thebathroom

- 21. Low-cost sensor device for providing real-time depth, color and audio data.

- 22. How does Kinect support NUI?

- 25. Kinect for Windows architecture
  1. Kinect hardware - The hardware components, including the Kinect sensor and the USB hub through which the Kinect sensor is connected to the computer.
  2. Kinect drivers - Windows drivers for the Kinect, which support:
     - The microphone array as a kernel-mode audio device, accessible through the standard audio APIs in Windows.
     - Audio and video streaming controls (color, depth, and skeleton).
     - Device enumeration functions for using more than one Kinect.
  3. Audio and Video Components - Kinect NUI for skeleton tracking, audio, and color and depth imaging.
  4. DirectX Media Object (DMO) - For microphone array beamforming and audio source localization.
  5. Windows 7 standard APIs - The audio, speech, and media APIs and the Microsoft Speech SDK.

- 26. Hardware

- 28. Hardware components
  - RGB camera
  - Infrared (IR) emitter and an IR depth sensor: estimate depth
  - Multi-array microphone: four microphones for capturing sound
  - 3-axis accelerometer: configured for a 2g range, where g is the acceleration due to gravity; determines the current orientation of the Kinect

- 30. NUI API

- 31. The NUI API lets the user programmatically control and access four data streams:
  - Color Stream
  - Infrared Stream
  - Depth Stream
  - Audio Stream

- 32. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Color Basics - D2D; Infrared Basics - D2D; Depth Basics - D2D / Depth - D3D; Depth with Color - D3D; Kinect Explorer - D2D; Kinect Explorer - WPF

- 33. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Color Basics - D2D

- 34. Color stream formats
  - RGB: 640x480 at 30 fps; 1280x960 at 12 fps
  - YUV: 640x480 at 15 fps
  - Bayer: 640x480 at 30 fps; 1280x960 at 12 fps

  New features of SDK 1.6 onwards:
  - Low light (or brightly lit).
  - Use hue, brightness, or contrast to improve visual clarity.
  - Use gamma to adjust the way the display appears on certain hardware.

- 35. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Infrared Basics - D2D

- 36. The IR stream is the test pattern observed from both the RGB and IR cameras. Resolution: 640x480 at 30 fps.

- 37. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Depth Basics - D2D; Depth - D3D; Depth with Color - D3D

- 38. The depth data stream merges two separate types of data:
  - Depth data, in millimeters.
  - Player segmentation data. Each player segmentation value is an integer indicating the index of a unique player detected in the scene.

  The Kinect runtime processes depth data to identify up to six human figures in a segmentation map.
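A minimal sketch of how the two merged values can be separated again. It assumes the SDK v1 packing, in which the low 3 bits of each 16-bit depth pixel hold the player index and the remaining upper bits hold the depth in millimeters. The function names are illustrative and not part of the Kinect SDK.

```python
PLAYER_INDEX_BITS = 3  # assumed width of the player-index field (SDK v1 layout)
PLAYER_INDEX_MASK = (1 << PLAYER_INDEX_BITS) - 1


def pack_depth_pixel(depth_mm, player_index):
    """Build a packed pixel; used here only to create test data."""
    return (depth_mm << PLAYER_INDEX_BITS) | player_index


def unpack_depth_pixel(raw):
    """Split a packed depth pixel into (depth_mm, player_index)."""
    depth_mm = raw >> PLAYER_INDEX_BITS
    player_index = raw & PLAYER_INDEX_MASK  # 0 = no player, 1..6 = detected figure
    return depth_mm, player_index


raw_pixel = pack_depth_pixel(1500, 2)
depth_mm, player_index = unpack_depth_pixel(raw_pixel)
print(depth_mm, player_index)
```

Three bits are enough for the six figures mentioned above, with the value 0 left over for pixels that belong to no player.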

- 40. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Kinect Explorer - D2D; Kinect Explorer - WPF

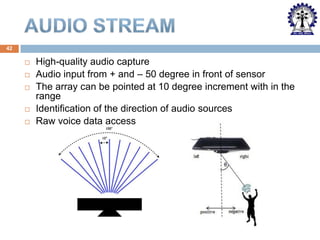

- 41. Audio stream
  - High-quality audio capture
  - Audio input from +50 to -50 degrees in front of the sensor
  - The array can be pointed in 10-degree increments within that range
  - Identification of the direction of audio sources
  - Raw voice data access
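The pointing behaviour can be illustrated with a small sketch. It enumerates the beam directions implied by the slide (10-degree steps between -50 and +50 degrees) and picks the beam closest to an estimated source direction. The helper `nearest_beam` is hypothetical; in the SDK the beam selection is done by the audio DMO.

```python
BEAM_LIMIT_DEG = 50  # from the slide: +/- 50 degrees in front of the sensor
BEAM_STEP_DEG = 10   # from the slide: 10-degree increments

BEAM_ANGLES_DEG = list(range(-BEAM_LIMIT_DEG, BEAM_LIMIT_DEG + 1, BEAM_STEP_DEG))


def nearest_beam(source_angle_deg):
    """Return the fixed beam direction closest to the estimated source angle."""
    clamped = max(-BEAM_LIMIT_DEG, min(BEAM_LIMIT_DEG, source_angle_deg))
    return min(BEAM_ANGLES_DEG, key=lambda beam: abs(beam - clamped))


print(len(BEAM_ANGLES_DEG), nearest_beam(23.0))
```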

- 43. In addition to the hardware capabilities, the Kinect software runtime implements:
  - Skeleton Tracking
  - Speech Recognition - Integration with the Microsoft Speech APIs.

- 45. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Skeleton Basics - D2D; Kinect Explorer - D2D; Kinect Explorer - WPF

- 46. Skeleton Tracking
  - Tracking Modes (Seated/Default)
  - Tracking Skeletons in Near Depth Range
  - Joint Orientation
  - Joint Filtering
  - Skeleton Tracking With Multiple Kinect Sensors

- 47. Speech Recognition - Integration with the Microsoft Speech APIs. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Speech Basics - D2D; Tic-Tac-Toe - WPF

- 48. Speech Recognition
  - The microphone array is an excellent input device for speech recognition.
  - Applications can use the Microsoft.Speech API for the latest acoustical algorithms.
  - Acoustic models have been created to allow speech recognition in several locales in addition to the default locale of en-US.

- 49. Face Tracking, Kinect Fusion, Kinect Interaction

- 50. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Face Tracking 3D - WPF; Face Tracking Basics - WPF; Face Tracking Visualization

- 51. Face Tracking
  - Input images: the Face Tracking SDK accepts Kinect color and depth images as input. The tracking quality may be affected by the image quality of these input frames.
  - Face tracking outputs:
    - Tracking status
    - 2D points
    - 3D head pose
    - Action Units (AUs)

  Source: http://msdn.microsoft.com/en-us/library/jj130970.aspx

- 53. 3D head pose. Source: http://msdn.microsoft.com/en-us/library/jj130970.aspx

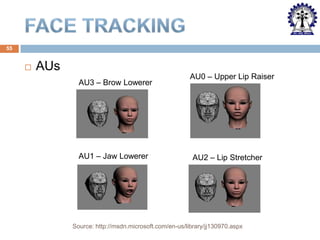

- 54. AUs
  - AU0 – Upper Lip Raiser
  - AU1 – Jaw Lowerer
  - AU2 – Lip Stretcher
  - AU3 – Brow Lowerer

  Source: http://msdn.microsoft.com/en-us/library/jj130970.aspx

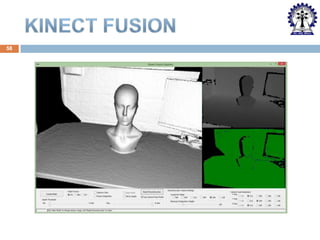

- 55. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Kinect Fusion Basics - D2D; Kinect Fusion Color Basics - D2D; Kinect Fusion Explorer - D2D

- 56. Kinect Fusion takes depth images from the Kinect camera, which have lots of missing data, and within a few seconds produces a realistic, smooth 3D reconstruction of a static scene as the Kinect sensor is moved around. From this, a point cloud or a 3D mesh can be produced.

- 58. Source: Kinect for Windows Developer Toolkit v1.8 http://www.microsoft.com/en- in/download/details.aspx?id=40276 App Examples: Interaction Gallery - WPF

- 59. Kinect Interaction provides:
  - Identification of up to 2 users, and identification and tracking of their primary interaction hand.
  - Detection services for the user's hand location and state.
  - Grip and grip release detection.
  - Press and scroll detection.
  - Information on the control targeted by the user.

- 61. Libraries: Matlab, OpenCV, PCL (Point Cloud Library), BoofCV Library, ROS

- 62. Matlab and OpenCV
  - Matlab is a high-level language and interactive environment for numerical computation, visualization, and programming.
  - Open Source Computer Vision (OpenCV) is a library of programming functions for real-time computer vision.
  - MATLAB and OpenCV functions perform numerical computation and visualization on video and depth data from the sensor.

  Source: Matlab – http://msdn.microsoft.com/enus/library/dn188693.aspx

- 63. Source: PCL –

- 64. ROS
  - Provides libraries and tools to help software developers create robot applications.
  - Provides hardware abstraction, device drivers, libraries, visualizers, message passing, package management, and more.
  - Provides additional RGB-D processing capabilities.

  Source: ROS –

- 65. BoofCV
  - Binary image processing
  - Image registration and model fitting
  - Interest point detection
  - Camera calibration
  - Provides RGB-D processing capabilities.

  Figures: depth image from Kinect sensor; 3D point cloud created from RGB & depth images. Source: BoofCV –

- 66. Applications

- 67. Gesture Recognition, Gait Detection, Activity Recognition

- 68. Gesture recognition work, by body part

  | Body Parts | Gestures | Applications | References |
  |---|---|---|---|
  | Fingers | 9 hand gestures for numbers 1 to 9 | Sudoku game | Ren et al. 2011 |
  | Hand, Fingers | Pointing gestures: pointing at objects or locations of interest | Real-time hand gesture interaction with the robot. Pointing gestures translated into goals for the robot. | Bergh et al. 2011 |
  | Arms | Lifting both arms to the front, to the side and upwards | Physical rehabilitation | Chang 2011 |
  | Hand | 10 hand gestures | HCI system. Experimental datasets include hand signed digits | Doliotis 2011 |

- 69. Gesture recognition work, by body part (continued)

  | Body Parts | Gestures | Applications | References |
  |---|---|---|---|
  | Hand and Head | Clap, Call, Greet, Yes, No, Wave, Clasp, Rest | HCI applications. Not built for any specific application | Biswas 2011 |
  | Single Hand | Grasp and Drop | Various HCI applications | Tang 2012 |
  | Right hand | Push, Hover (default), Left hand, Head, Foot or Knee | View and select recipes for cooking in the kitchen when hands are messy | Panger 2012 |
  | Hand, Arms | Right / Left-arm swing, Right / Left-arm push, Right / Left-arm back, Zoom-in/out | Various HCI applications. Not built for any specific application | Lai 2012 |

- 70. Gestures: left and right swipe; start presentation; end presentation; hand as a marker; circling gesture; push gesture. Image source: Kinect for Windows Human Interface Guidelines v1.5

- 71. Right swipe gesture paths [plots of example right swipe paths]

- 72. Consider an example: take a left swipe path [plot of a left swipe path]
  - The sequence of angles will be of the form [180, 170, 182, … , 170, 180, 185]
  - The corresponding quantized feature vector is [9, 9, 9, … , 9, 9, 9]
  - How does it distinguish left swipe from right swipe? Very straightforward!
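The angle-to-symbol step can be sketched as follows. The slides do not state the bin layout, so this sketch assumes 18 direction bins of 20 degrees each, centered on multiples of 20 degrees, which is one layout that maps the angles above to 9. The function names are illustrative.

```python
import math

BIN_WIDTH_DEG = 20               # assumption: 20-degree direction bins
NUM_BINS = 360 // BIN_WIDTH_DEG  # 18 bins, numbered 0..17


def path_angles(points):
    """Direction of travel, in degrees [0, 360), between consecutive 2D points."""
    angles = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        angles.append(math.degrees(math.atan2(y1 - y0, x1 - x0)) % 360)
    return angles


def quantize_angle(angle_deg):
    """Map an angle to the index of the nearest bin center."""
    return int(((angle_deg % 360) + BIN_WIDTH_DEG / 2) // BIN_WIDTH_DEG) % NUM_BINS


def quantize_path(angles_deg):
    return [quantize_angle(a) for a in angles_deg]


left_swipe = [(0.4, 0.1), (0.2, 0.1), (0.0, 0.1), (-0.2, 0.1)]
right_swipe = list(reversed(left_swipe))
print(quantize_path(path_angles(left_swipe)))
print(quantize_path(path_angles(right_swipe)))
```

Under this assumed layout a left swipe yields symbols near 9 and a right swipe yields symbols near 0, which is why the two are easy to tell apart.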

- 73. HMM model for right swipe (states L1, L2, L3, L4), applied to a real-time gesture
  - Returns likelihoods, which are then converted to probabilities by normalization, followed by (empirical) thresholding to classify gestures
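A minimal sketch of the normalize-then-threshold step, assuming each gesture's HMM has already produced a log-likelihood for the observed sequence. The threshold value of 0.8 is a placeholder, since the slides only say the threshold was chosen empirically, and `classify_gesture` is a hypothetical helper.

```python
import math


def classify_gesture(log_likelihoods, threshold=0.8):
    """Normalize per-gesture log-likelihoods to probabilities and threshold.

    log_likelihoods: dict mapping gesture name -> log-likelihood from its HMM.
    Returns (gesture or None, probabilities).
    """
    peak = max(log_likelihoods.values())
    # Subtract the peak before exponentiating to avoid numerical underflow.
    weights = {g: math.exp(ll - peak) for g, ll in log_likelihoods.items()}
    total = sum(weights.values())
    probabilities = {g: w / total for g, w in weights.items()}
    best = max(probabilities, key=probabilities.get)
    if probabilities[best] < threshold:
        return None, probabilities  # no gesture is confident enough
    return best, probabilities


label, probs = classify_gesture({"left swipe": -10.0, "right swipe": -20.0})
print(label)
```

Returning `None` below the threshold lets the application ignore movements that match no trained gesture well.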

- 74. Here are the results obtained by the implementation

  | | Correct gesture | Incorrect gesture |
  |---|---|---|
  | Correctly classified | 281 | 8 |
  | Wrongly classified | 19 | 292 |

  - Precision = 97.23 %
  - Recall = 93.66 %
  - F-measure = 95.41 %
  - Accuracy = 95.5 %
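The reported figures can be recomputed from the four counts. The sketch below assumes the reading that is consistent with the reported precision: 281 true positives, 8 false positives, 19 false negatives and 292 true negatives.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return (precision, recall, f_measure, accuracy) as fractions."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_measure = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f_measure, accuracy


precision, recall, f_measure, accuracy = classification_metrics(
    tp=281, fp=8, fn=19, tn=292
)
for name, value in [("Precision", precision), ("Recall", recall),
                    ("F-measure", f_measure), ("Accuracy", accuracy)]:
    print(f"{name} = {100 * value:.2f} %")
```

The results agree with the slide to within rounding in the last digit.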

- 75. Source:

- 76. (a) Fixed camera (b) Freely moving camera. Recognition rate – 97%

- 77. Source:

- 80. Additional libraries and APIs
  - Skeleton Tracking, KinectInteraction, Kinect Fusion, Face Tracking
  - OpenNI Middleware: NITE, 3D Hand, KSCAN3D, 3-D FACE

  Source:
  - NITE – http://www.openni.org/files/nite/
  - 3D Hand – http://www.openni.org/files/3d-hand-tracking-library/
  - 3D face – http://www.openni.org/files/3-d-face-modeling/
  - KSCAN3D – http://www.openni.org/files/kscan3d-2/

- 81. Evaluating a Dancer’s Performance using Kinect-based Skeleton Tracking: Joint Positions, Joint Velocity, 3D Flow Error. Source:

- 82. Kinect SDK vs. OpenNI
  - Kinect SDK
    - Better skeleton representation: 20-joint skeleton; joints are mobile
    - Higher frame rate: better for tracking actions involving rapid pose changes
    - Significantly better skeletal tracking algorithm (by Shotton, 2013): robust and fast tracking even in complex body poses
  - OpenNI
    - Simpler skeleton representation: 15-joint skeleton; fixed basic frame of [shoulder left : shoulder right : hip] triangle and [head : shoulder] vector
    - Lower frame rate: slower tracking of actions
    - Weak skeletal tracking

- 84. Depth Sensing

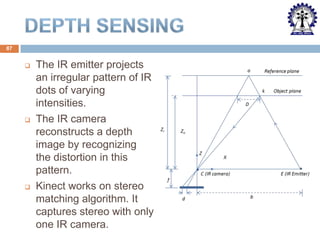

- 86. The IR emitter projects an irregular pattern of IR dots of varying intensities. The IR camera reconstructs a depth image by recognizing the distortion in this pattern. Kinect uses a stereo matching algorithm, but it captures stereo with only one IR camera.

- 87. Project a speckle pattern onto the scene, then infer depth from the deformation of the speckle pattern. Source: http://users.dickinson.edu/~jmac/selected-talks/kinect.pdf
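The link between pattern shift and depth can be sketched with the standard stereo triangulation relation z = f·b / d, treating the emitter and the IR camera as the two views. The focal length (580 pixels) and baseline (7.5 cm) below are commonly quoted approximations for the first Kinect and are assumptions, not values from these slides. The real sensor measures the shift relative to a stored reference pattern, which this simplified model ignores.

```python
def depth_from_disparity(disparity_px, focal_length_px=580.0, baseline_m=0.075):
    """Simplified triangulation: depth (m) from pattern shift (pixels).

    focal_length_px and baseline_m are assumed, approximate values.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


for disparity in (54.0, 29.0, 14.5):
    print(disparity, round(depth_from_disparity(disparity), 3))
```

Because depth is inversely proportional to the shift, a one-pixel error matters far more at long range than at short range, which is one reason the usable range of the sensor is limited.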

- 89. Skeleton tracking pipeline
  1. Capture depth image & remove background
  2. Infer body parts per pixel
  3. Cluster pixels to hypothesize body joint positions
  4. Fit model & track skeleton

  Source:

- 90. Inferring body parts per pixel
  - Highly varied training dataset: driving, dancing, kicking, running, navigating menus, etc. (500k images)
  - The pairs of depth and body part images are used for learning the classifier.
  - Randomized decision trees and forests are used to classify each pixel to a body part based on some depth image feature and threshold.
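The slide does not spell out the depth image feature. The sketch below assumes the depth-comparison feature described in the published work by Shotton et al.: the difference between the depths at two probe pixels, whose offsets are scaled by the inverse depth of the pixel being classified so that the feature is roughly depth invariant. The data layout and names are illustrative only.

```python
BACKGROUND_DEPTH = 1e6  # large constant for probes off the image or on background


def depth_at(depth, row, col):
    """Depth at (row, col); background or out-of-bounds reads as very far away."""
    if 0 <= row < len(depth) and 0 <= col < len(depth[0]) and depth[row][col] > 0:
        return depth[row][col]
    return BACKGROUND_DEPTH


def depth_feature(depth, pixel, offset_u, offset_v):
    """Depth difference between two probes with depth-normalized offsets."""
    row, col = pixel
    d = depth_at(depth, row, col)

    def probe(offset):
        return depth_at(depth,
                        row + int(round(offset[0] / d)),
                        col + int(round(offset[1] / d)))

    return probe(offset_u) - probe(offset_v)


def goes_left(depth, pixel, offset_u, offset_v, threshold):
    """One split node of a decision tree: compare the feature to a threshold."""
    return depth_feature(depth, pixel, offset_u, offset_v) < threshold


# Toy 5x5 depth map in meters: a surface at 2 m with a 3 m column on the right.
toy_depth = [[2.0, 2.0, 2.0, 2.0, 3.0] for _ in range(5)]
print(depth_feature(toy_depth, (2, 2), (0.0, 4.0), (0.0, -4.0)))
```

A tree applies a sequence of such threshold tests to each pixel, and the leaf it reaches stores a distribution over body parts.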

- 91. Find the joint position in each body part using a local mode-finding approach based on mean shift with a weighted Gaussian kernel. This process considers both the inferred body part probability at the pixel and the world surface area of the pixel.
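A minimal sketch of weighted mean shift on 3D points. It assumes each pixel's weight is its body part probability multiplied by the squared depth, as a stand-in for the world surface area of the pixel. The bandwidth, iteration count and function names are assumptions for illustration.

```python
import math


def pixel_weight(part_probability, depth_m):
    """Assumed weighting: class probability times squared depth (surface area proxy)."""
    return part_probability * depth_m ** 2


def mean_shift_mode(points, weights, start, bandwidth=0.1, iterations=50, tol=1e-6):
    """Move `start` to a local mode of the weighted Gaussian kernel density."""
    current = list(start)
    for _ in range(iterations):
        numer = [0.0, 0.0, 0.0]
        denom = 0.0
        for p, w in zip(points, weights):
            dist2 = sum((a - b) ** 2 for a, b in zip(p, current))
            k = w * math.exp(-dist2 / (2 * bandwidth ** 2))
            denom += k
            for i in range(3):
                numer[i] += k * p[i]
        if denom == 0.0:
            break
        updated = [n / denom for n in numer]
        shift = math.sqrt(sum((a - b) ** 2 for a, b in zip(updated, current)))
        current = updated
        if shift < tol:
            break
    return current


# Four pixels of one body part clustered near (0, 0, 2) plus one distant outlier.
pts = [(-0.01, 0.0, 2.0), (0.01, 0.0, 2.0), (0.0, -0.01, 2.0),
       (0.0, 0.01, 2.0), (1.0, 1.0, 3.0)]
wts = [pixel_weight(0.9, p[2]) for p in pts[:4]] + [pixel_weight(0.1, 3.0)]
joint = mean_shift_mode(pts, wts, start=(0.02, 0.0, 2.0))
print([round(c, 3) for c in joint])
```

Unlike a plain weighted average, the mode is barely affected by the distant outlier, which is the reason for using mode finding here.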

- 92. Input depth, inferred body parts, and inferred body joint positions (side view, top view, front view). No tracking or smoothing. Source: http://research.microsoft.com/pubs/145347/Kinect%20Slides%20CVPR2011.pptx

- 94. Pros and cons
  - PROS
    - Multimedia sensor
    - Ease of use
    - Low cost
    - Strong library support
  - CONS
    - 2.5D depth data
    - Limited field of view: 43° vertical, 57° horizontal
    - Limited range: 0.8 – 3.5 m
    - Uni-directional view
    - Depth shadows
    - Limited resolution
    - Missing depth values
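The field-of-view and range limits translate into a visible area at a given distance. A minimal sketch using the angles and range quoted above, where the function name `coverage_at` is illustrative:

```python
import math

H_FOV_DEG = 57.0   # horizontal field of view, from the slide
V_FOV_DEG = 43.0   # vertical field of view, from the slide
MIN_RANGE_M = 0.8  # depth range, from the slide
MAX_RANGE_M = 3.5


def coverage_at(distance_m):
    """Width and height (m) of the region seen at a given distance."""
    if not MIN_RANGE_M <= distance_m <= MAX_RANGE_M:
        raise ValueError("distance outside the sensor's depth range")
    width = 2 * distance_m * math.tan(math.radians(H_FOV_DEG / 2))
    height = 2 * distance_m * math.tan(math.radians(V_FOV_DEG / 2))
    return width, height


width, height = coverage_at(3.0)
print(round(width, 2), round(height, 2))
```

At 3 m the sensor sees a region a little over 3 m wide, which fits the room-size guidance given for a single Kinect.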

- 95. For a single Kinect, a minimum space of 10 feet by 10 feet (3 meters by 3 meters) is needed. Figures: side view, top view. Source: iPiSoft Wiki: http://wiki.ipisoft.com/User_Guide_for_Dual_Depth_Sensor_Configuration

- 96. Multiple Kinects: possible? Moving while capturing: possible? Source: http://www.youtube.com/watch?v=ttMHme2EI9I

- 98. 360° Reconstruction. Source: Huawei/3DLife ACM Multimedia Grand Challenge for 2013 http://mmv.eecs.qmul.ac.uk/mmgc2013/

- 99. Increases field of view Super Resolution Source: Maimone & Fuchs, 2012

- 100. IR interference noise. Source: Berger et al., 2011a

- 101. Individual capture, simultaneous capture, and their difference. Source: Lihi Zelnik-Manor & Roy Or–El, 2012: http://webee.technion.ac.il/~lihi/Teaching/2012_winter_048921/PPT/Roy.pdf

- 102. Estimate interference noise in two configurations: parallel and perpendicular

- 104. Perpendicular and parallel configurations. Source: Mallick et al.

- 105. Interference noise, single Kinect vs. two Kinects. Noise measures: Average Standard Deviation; Avg (dmax - dmin); % ZD Error; % Pixels with dmin = 0 & dmax > 0. Values are listed as scene (angle in degrees): single Kinect / two Kinects, grouped by noise measure.
  - Room (180): 6.92 / 10.93; Human (90): 4.01 / 8.25; Human (180): 4.01 / 7.40
  - Room (180): 329 / 639; Human (90): 206 / 624; Human (180): 206 / 408
  - Room (180): 89.15 / 185.71; Human (90): 58.26 / 168.91; Human (180): 58.26 / 118.65
  - Room (180): 8.08% / 17.52%; Human (90): 5.32% / 19.84%; Human (180): 5.32% / 11.87%

  Source: Mallick et al. 2014:

- 106. Multiplexing schemes for multiple Kinects
  - SDM: Space Division Multiplexing
    - Circular Placement
    - Axial and Diagonal Placement
    - Vertical Placement
  - TDM: Time Division Multiplexing
    - Mechanical Shutter (TDM-MS)
    - Electronic Shutter (TDM-ES)
    - Software Shutter (TDM-SS)
  - PDM: Pattern Division Multiplexing
    - Body-Attached Vibrators
    - Stand-Mounted Vibrators

  Source: Mallick et al.

- 107. Source: Caon et al., 2011

- 108. Source: Caon et al., 2011

- 109. Top view, side view. Source: iPiSoft Wiki: http://wiki.ipisoft.com/User_Guide_for_Dual_Depth_Sensor_Configuration

- 110. Source: Ahmed, 2012: http://www.mpiinf.mpg.de/~nahmed/casa2012.pdf

- 111. Top view, side view. Source: iPiSoft Wiki: http://wiki.ipisoft.com/User_Guide_for_Dual_Depth_Sensor_Configuration

- 112. Source: Tong et al. 2012

- 113. Moving shaft shutter: shutter open, shutter closed. Source: Kramer et al., 2011

- 114. A revolving disk shutter. Source: Schroder et al., 2011

- 115. 4-phase mechanical shuttering. Source: Berger et al., 2011b

- 116. Source: Faion et al., 2012: Toy train tracking

- 117. Microsoft's Kinect SDK v1.6 introduced a new API (KinectSensor.ForceInfraredEmitterOff) to control the IR emitter as a software shutter. This API works for Kinect for Windows only. Earlier, the IR emitter was always active when the camera was active. Using this API, the IR emitter can be turned off as needed. This is the most effective TDM scheme. However, no multi-Kinect application has yet been reported with TDM-SS.
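How a software shutter could drive time division multiplexing is sketched below as a pure simulation. It builds a round-robin schedule in which exactly one sensor's IR emitter is on in each frame slot. In a real setup each `False` entry would correspond to setting `KinectSensor.ForceInfraredEmitterOff` on that sensor from C#. The scheduling functions themselves are hypothetical and are not taken from any reported system.

```python
def emitter_schedule(num_sensors, num_frames):
    """Round-robin schedule: schedule[frame][sensor] is True if the emitter is on."""
    return [
        [sensor == frame % num_sensors for sensor in range(num_sensors)]
        for frame in range(num_frames)
    ]


def effective_frame_rate(num_sensors, base_fps=30.0):
    """Each sensor captures depth only in its own slot, so it gets base_fps / n."""
    return base_fps / num_sensors


schedule = emitter_schedule(num_sensors=3, num_frames=6)
for frame, slot in enumerate(schedule):
    print(frame, slot)
print(effective_frame_rate(3))
```

The schedule removes interference because the projected patterns never overlap in time, at the cost of dividing the frame rate among the sensors.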

- 118. Source: Butler et al., 2012: Shake’n’Sense

- 119. Source: Kainz et al., 2012: OmniKinect Setup

- 120. Comparison of multiplexing schemes

  | Quality Factor | SDM | TDM | PDM |
  |---|---|---|---|
  | Accuracy | Excellent | Bad | Very Good |
  | Scalability | Good (no overlaps) | Bad | Excellent |
  | Frame rate | 30 fps | 30/n fps | 30 fps |
  | Ease of Use | Very easy | Cumbersome shutters | Inconvenient vibrators |
  | Cost | Low | High | Moderate |
  | Limitations | Few configurations | Unstable | Blurred RGB |
  | Robustness | Change Geometry | Adjust Set-up | Quite robust |

- 122. Kinect versions
  - Kinect for Xbox 360 – Good for gaming
    - The gaming console of Xbox.
    - Costs around $100 without the Xbox
    - http://www.xbox.com/en-IN/Kinect
    - Kinect Windows SDK is partially supported for this
  - Kinect for Windows – Recommended for research
    - This is for Kinect app development.
    - Costs around $250
    - http://www.microsoft.com/en-us/kinectforwindows/
    - Kinect Windows SDK is fully supported for this

- 123. References
  1. Ren, Z., Meng, J., and Yuan, J. Depth camera based hand gesture recognition and its applications in human-computer-interaction. In Information, Communications and Signal Processing (ICICS) 2011 8th International Conference on (2011), pp. 1-5.
  2. Biswas, K. K., and Basu, S. K. Gesture recognition using Microsoft Kinect. In Proceedings of the 5th International Conference on Automation, Robotics and Applications (2011), pp. 100-103.
  3. Chang, Y.-J., Chen, S.-F., and Huang, J.-D. A Kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. Research in Developmental Disabilities (2011), 2566-2570.
  4. Doliotis, P., Stefan, A., McMurrough, C., Eckhard, D., and Athitsos, V. Comparing gesture recognition accuracy using color and depth information. In Proceedings of the 4th International Conference on PErvasive Technologies Related to Assistive Environments, PETRA '11 (2011).

- 124. References (continued)
  5. Lai, K., Konrad, J., and Ishwar, P. A gesture-driven computer interface using Kinect. In Image Analysis and Interpretation (SSIAI), 2012 IEEE Southwest Symposium on (2012), pp. 185-188.
  6. Panger, G. Kinect in the kitchen: testing depth camera interactions in practical home environments. In CHI '12 Extended Abstracts on Human Factors in Computing Systems (2012), pp. 1985-1990.
  7. Tang, M. Recognizing hand gestures with Microsoft’s Kinect. Department of Electrical Engineering, Stanford University.
  8. Ahmed, N. A system for 360 acquisition and 3D animation reconstruction using multiple RGB-D cameras. Unpublished article. 2012. URL: http://www.mpiinf.mpg.de/~nahmed/casa2012.pdf.

- 125. References (continued)
  9. Caon, M., Yue, Y., Tscherrig, J., Mugellini, E., and Khaled, O. A. Context-aware 3D gesture interaction based on multiple Kinects. In Ambient Computing, Applications, Services and Technologies, Proc. AMBIENT First Int'l. Conf. on, pages 7-12, 2011.
  10. Berger, K., Ruhl, K., Albers, M., Schroder, Y., Scholz, A., Kokemüller, J., Guthe, S., and Magnor, M. The capturing of turbulent gas flows using multiple Kinects. In Computer Vision Workshops, IEEE Int'l. Conf. on, pages 1108-1113, 2011a.
  11. Berger, K., Ruhl, K., Brümmer, C., Schröder, Y., Scholz, A., and Magnor, M. Markerless motion capture using multiple color-depth sensors. In Vision, Modeling and Visualization, Proc. 16th Int'l. Workshop on, pages 317-324, 2011b.
  12. Faion, F., Friedberger, S., Zea, A., and Hanebeck, U. D. Intelligent sensor scheduling for multi-Kinect-tracking. In Intelligent Robots and Systems (IROS), IEEE/RSJ Int'l. Conf. on, pages 3993-3999, 2012.

- 126. References (continued)
  13. Butler, A., Izadi, S., Hilliges, O., Molyneaux, D., Hodges, S., and Kim, D. Shake'n'Sense: Reducing interference for overlapping structured light depth cameras. In Human Factors in Computing Systems, Proc. ACM CHI Conf. on, pages 1933-1936, 2012.
  14. Kainz, B., Hauswiesner, S., Reitmayr, G., Steinberger, M., Grasset, R., Gruber, L., Veas, E., Kalkofen, D., Seichter, H., and Schmalstieg, D. OmniKinect: Real-time dense volumetric data acquisition and applications. In Virtual reality software and technology, Proc. VRST'12: 18th ACM symposium on, pages 25-32, 2012.
  15. Maimone, A. and Fuchs, H. Reducing interference between multiple structured light depth sensors using motion. In Virtual Reality, Proc. IEEE Conf. on, pages 51-54, 2012.
  16. Kramer, J., Burrus, N., Echtler, F., C., D. H., and Parker, M. Hacking the Kinect. Apress, 2012.

- 127. References (continued)
  17. Zelnik-Manor, L. & Or–El, R. Kinect Depth Map, Seminar in “Advanced Topics in Computer Vision” (048921 – Winter 2013). URL: http://webee.technion.ac.il/~lihi/Teaching/2012_winter_048921/PPT/Roy.pdf
  18. iPiSoft Wiki. User Guide for Dual Depth Sensor Configurations. Last accessed 17-Dec-2013. URL: http://wiki.ipisoft.com/User_Guide_for_Dual_Depth_Sensor_Configuration
  19. Tong, J., Zhou, J., Liu, L., Pan, Z., and Yan, H. Scanning 3D full human bodies using Kinects. Visualization and Computer Graphics, IEEE Transactions on, 18:643-650, 2012.
  20. Mallick, T., Das, P.P., Majumdar, A. Study of Interference Noise in Multi-Kinect Set-up. Accepted for presentation at VISAPP 2014: 9th Int’l Jt. Conf. on Computer Vision, Imaging and Computer Graphics Theory and Applications, Lisbon, Portugal, 5-8 Jan, 2014.

- 128. References (continued)
  21. Huawei/3DLife ACM Multimedia Grand Challenge for 2013. http://mmv.eecs.qmul.ac.uk/mmgc2013/
  22. Schroder, Y., Scholz, A., Berger, K., Ruhl, K., Guthe, S., and Magnor, M. Multiple Kinect studies. Technical Report 0915, ICG, 2011.

- 129. Tanwi Mallick, TCS Research Scholar, Department of Computer Science and Engineering, IIT Kharagpur, has prepared this presentation.

Editor's Notes

- #5: http://www.google.co.in/imgres?espv=210&es_sm=93&biw=1366&bih=624&tbm=isch&tbnid=wfnEl7vR6I9XMM:&imgrefurl=http://en.wikipedia.org/wiki/Natural_user_interface&docid=8iCRReaH83F3kM&imgurl=http://upload.wikimedia.org/wikipedia/en/7/7c/CLI-GUI-NUI.png&w=1310&h=652&ei=lt59Uv_fC4SRrAe7n4CQCQ&zoom=1&ved=1t:3588,r:3,s:0,i:92&iact=rc&page=1&tbnh=158&tbnw=318&start=0&ndsp=5&tx=235&ty=72

- #6: http://www.google.co.in/imgres?start=103&espv=210&es_sm=93&biw=1366&bih=624&tbm=isch&tbnid=WLHUUs3mnYzdiM:&imgrefurl=http://www.xtremelabs.com/2013/08/the-future-of-uiux-the-natural-user-interface/&docid=S2ZkYOXSFNHfBM&imgurl=http://www.xtremelabs.com/wp-content/uploads/2013/07/futureUIUX1.jpg&w=600&h=300&ei=y959UubRNJGyrAeZiIGICA&zoom=1&ved=1t:3588,r:3,s:100,i:13&iact=rc&page=7&tbnh=159&tbnw=318&ndsp=20&tx=211&ty=70

- #19: Full Body Gaming: Controller-free gaming means full body play. Kinect responds to how you move. So if you have to kick, then kick. If you have to jump, then jump. You already know how to play. All you have to do now is to get off the couch. It’s All About You: Once you wave your hand to activate the sensor, your Kinect will be able to recognize you and access your Avatar. Then you'll be able to jump in and out of different games, and show off and share your moves. Something For Everyone: Whether you're a gamer or not, anyone can play and have a blast. And with advanced parental controls, Kinect promises a gaming experience that's safe, secure and fun for everyone.

- #20: Simply step in front of the sensor and Kinect recognizes you and responds to your gestures.

- #26: Kinect hardware - The hardware components, including the Kinect sensor and the USB hub through which the Kinect sensor is connected to the computer. Kinect drivers - The Windows drivers for the Kinect, which are installed as part of the SDK setup process as described in this document. The Kinect drivers support: the Kinect microphone array as a kernel-mode audio device that you can access through the standard audio APIs in Windows; audio and video streaming controls for streaming audio and video (color, depth, and skeleton); device enumeration functions that enable an application to use more than one Kinect. Audio and Video Components - Kinect natural user interface for skeleton tracking, audio, and color and depth imaging. DirectX Media Object (DMO) - For microphone array beamforming and audio source localization. Windows 7 standard APIs - The audio, speech, and media APIs in Windows 7, as described in the Windows 7 SDK and the Microsoft Speech SDK. These APIs are also available to desktop applications in Windows 8.


- #38: There is only one color image stream available per sensor. The infrared image stream is provided by the color image stream, therefore, it is impossible to have a color image stream and an infrared image stream open at the same time on the same sensor.

- #43: The beamforming functionality supports 11 fixed beams, which range from -0.875 to 0.875 radians (approximately -50 to 50 degrees in 10-degree increments). Applications can use the DMO’s adaptive beamforming option, which automatically selects the optimal beam, or specify a particular beam. The DMO also includes a source localization algorithm, which estimates the source direction.

- #53: The Face Tracking SDK uses the Kinect coordinate system to output its 3D tracking results. The origin is located at the camera’s optical center (sensor), Z axis is pointing towards a user, Y axis is pointing up. The measurement units are meters for translation and degrees for rotation angles.

- #54: The Face Tracking SDK tracks the 87 2D points indicated in the following image (in addition to 13 points that aren’t shown in Figure 2 - Tracked Points):

- #55: The X, Y, and Z position of the user’s head are reported based on a right-handed coordinate system (with the origin at the sensor, Z pointed towards the user and Y pointed up; this is the same as the Kinect’s skeleton coordinate frame). Translations are in meters. The user’s head pose is captured by three angles: pitch, roll, and yaw.

- #56: The Face Tracking SDK results are also expressed in terms of weights of six AUs and 11 SUs, which are a subset of what is defined in the Candide3 model (http://www.icg.isy.liu.se/candide/). The SUs estimate the particular shape of the user’s head: the neutral position of their mouth, brows, eyes, and so on. The AUs are deltas from the neutral shape that you can use to morph targets on animated avatar models so that the avatar acts as the tracked user does. The Face Tracking SDK tracks the following AUs. Each AU is expressed as a numeric weight varying between -1 and +1.

- #61: http://msdn.microsoft.com/en-us/library/dn188673.aspx

- #64: Matlab – http://msdn.microsoft.com/en-us/library/dn188693.aspx OpenCV – http://msdn.microsoft.com/en-us/library/dn188694.aspx

- #65: http://pointclouds.org/documentation/ The Point Cloud Library (PCL) is a large scale, open project for point cloud processing. The PCL framework contains numerous algorithms including filtering, feature estimation, surface reconstruction, registration, model fitting and segmentation. These algorithms can be used, for example, to filter outliers from noisy data, stitch 3D point clouds together, segment relevant parts of a scene, extract keypoints and compute descriptors to recognize objects in the world based on their geometric appearance, and create surfaces from point clouds and visualize them, to name a few. PCL is cross-platform, and has been successfully compiled and deployed on Linux, MacOS, Windows, and Android. To simplify development, PCL is split into a series of smaller code libraries that can be compiled separately.

- #66: http://wiki.ros.org/openni_camera

- #67: http://boofcv.org/index.php?title=Tutorial_Kinect http://www.codeproject.com/Articles/293269/BoofCV-Real-Time-Computer-Vision-in-Java

- #77: http://www.serc.iisc.ernet.in/~venky/Papers/Kinect_gait.pdf

- #79: http://www.tnstate.edu/itmrl/documents/Team%20Activity%20Analysis.pdf

- #82: NITE - http://www.openni.org/files/nite/ 3D Hand - http://www.openni.org/files/3d-hand-tracking-library/ 3D face - http://www.openni.org/files/3-d-face-modeling/ KSCAN3D - http://www.openni.org/files/kscan3d-2/

- #83: http://doras.dcu.ie/16574/1/ACMGC_Doras.pdf

- #92: Highly varied training dataset allows the classifier to estimate body parts invariant to pose, body shape, clothing, etc. (500k images). The pairs of depth and body part images are used as fully labelled data for learning the classifier. Randomized decision trees and forests are used to classify each pixel to a body part based on some depth image feature and threshold.
