
Klug pacemaker the opensource high-availability_1.0_f

- 1. Pacemaker: The Open Source, High Availability Cluster
  - Kim, Donghyun, Research Institute for Software Technology ([email protected])
  - Saturday, July 23, 2016
  - Korea Linux User Group

- 2. `# whoami`
  - Systems and infrastructure geek; Enterprise Linux infrastructure engineer (Red Hat)
  - Work: technology research; technical support (troubleshooting, debugging, performance tuning); consulting (Linux: Red Hat, SUSE, OEL; virtualization; high availability)
  - Hobbies: travelling, drawing (cartoons). I love Linux ♥
  - Blog: http://rhlinux.tistory.com/
  - Café: http://cafe.naver.com/iamstrong
  - SNS: https://www.facebook.com/groups/korelnxuser
  - Event: the 3rd Nangongbullak ("Impregnable") Open Source Infrastructure Seminar

- 3. In this session
  - Pacemaker's story: the open source, high availability cluster
  - Overview of HA architectural components
  - Use case examples
  - Future endeavors

- 4. Pacemaker: The Open Source, High Availability Cluster

- 5. HA for Open Source Technology

- 6. "Mission Critical Linux"

- 7. High-Availability Clustering in the Open Source Ecosystem
  - Late 1990s: two completely independent attempts to build an open source high availability platform began: SUSE's "Linux HA" project and Red Hat's "Cluster Services".
  - 1998: the Linux-HA project introduced a new protocol called "Heartbeat"; heartbeat v1.0 was released later.
  - 1998-2007: Heartbeat, the old Linux-HA cluster manager (Alan Robertson).
  - 2000s: "Mission Critical Linux"; Sistina Software was founded ("Global File System").
  - 2002: Red Hat "Red Hat Cluster Manager" version 1 (RHEL 2.1).
  - 2003: SUSE's Lars Marowsky-Brée conceived of a new project called the "crm"; Red Hat purchased Sistina Software (GFS).
  - 2004: SUSE and Red Hat developers both attended the Cluster Summit; SUSE, in partnership with Oracle, released OCFS2.
  - 2005: "Heartbeat version 2" was released; Red Hat's cman + rgmanager (RHCS, Cluster Services version 2).
  - 2007: the two projects remained entirely separate until 2007, when, out of the Linux HA project, Pacemaker was born as a cluster resource manager that could take membership from and communicate via Red Hat's OpenAIS or SUSE's Heartbeat. The resource manager from Heartbeat 2.1.3 became a separate package called "Pacemaker".
  - 2008: SUSE and Red Hat developers met informally to discuss reusing some code (SUSE's CRM/Pacemaker, Red Hat's OpenAIS); Pacemaker version 0.6.0 was released with support for OpenAIS.
  - 2009: a new project, "Corosync", was announced.
  - 2010: Pacemaker version 1.1: CIB (Cluster Information Base, XML configuration), support for Red Hat's cman, SLES 11 SP1 (OpenAIS to Corosync), and Hawk, a web-based UI. The Heartbeat project reached version 3.
  - Pacemaker 1.1.10 was released with RHEL 6.5. Red Hat plans to support the existing cman/rgmanager approach until the end of the RHEL 6 lifecycle (2020).
  - Technical agreements among global vendors are broadening its reach.
  - Today, ClusterLabs is rapidly integrating the components that originated in the Heartbeat project with other solutions and evolving them.
  - Source: https://alteeve.ca/w/High-Availability_Clustering_in_the_Open_Source_Ecosystem

- 8. Open Source Project Progress
  - Diagram: components maintained by community developers (Linux-HA / ClusterLabs), Novell developers (SLES HA), and Red Hat developers (RHEL HA Add-on): pacemaker-mgmt, Hawk (GUI), booth, crmsh, pacemaker, rgmanager, luci, pcs_gui, pcs, resource-agents, Heartbeat, corosync, fence-agents, cman, cluster-glue.

- 9. Architectural Software Components
  - Corosync: messaging (framework) and membership service
  - Pacemaker: cluster resource manager
  - Resource Agents (RAs): configure, manage, and monitor the available services
  - Fencing devices: in Pacemaker, fencing is called STONITH
  - User interface:
    - crmsh (Cluster Resource Manager Shell) CLI tools and the Hawk web UI (SLES)
    - pcs (Pacemaker Configuration System) CLI tools and pcs_gui (RHEL)

- 10. More...
  - LVS (commonly managed with keepalived): kernel space, layer 4, IP + port
  - HAProxy: user space, layer 7, HTTP-based
  - Shared file system: OCFS2 / GFS2
  - Block device replication: DRBD, cLVM mirroring, Cluster MD RAID1

- 11. Pacemaker: the resource manager
  - Pacemaker is the cluster resource manager (the "Python-based, unified, scriptable cluster shell" is crmsh, one of its front ends)
    - Provides a high availability and load-balancing stack for the Linux platform
    - Interacts with applications through Resource Agents (RAs), so users decide the cluster resource policy themselves
    - Freedom to create, delete, and change Resource Agent configurations
    - Broadly meets the HA requirements of applications in many industries (public sector, securities/finance, telecommunications, etc.)
    - Fence agents are configured as resources, which makes them easy to manage
  - Monitors and controls resources:
    - systemd / LSB / OCF services
    - Cloned services: N+1, N+M, N nodes
    - Multi-state (master/slave, primary/secondary)
  - STONITH (Shoot The Other Node In The Head): fencing with power management

- 12. Pacemaker: Architecture Components (diagram, top to bottom)
  - Resource Agents: agent scripts; Open Cluster Framework (OCF) resource agents
  - Pacemaker: resource management (LRMd, STONITH, CRMd, CIB, PEngine)
  - Cluster abstraction layer
  - Corosync: membership, messaging, quorum

- 13. Pacemaker: High-Level Architecture (diagram of two cluster nodes, each with three layers)
  - Resources layer: Resource Agents (RAs) managing services (Apache, PostgreSQL, etc.)
  - Resource allocation layer: Cluster Resource Manager (CRM), Local Resource Manager (LRM), Policy Engine, and the Cluster Information Base (CIB, XML, replicated between the nodes)
  - Messaging / infrastructure layer: Corosync

- 14. Quick Overview of Components: CRMd
  - CRMd (Cluster Resource Management daemon)
    - Acts as the main controlling process
    - Routes all resource operations
    - Handles every action performed in the resource allocation layer
    - Maintains the Cluster Information Base (CIB)
    - Resources managed by CRMd can be queried by client systems and moved, instantiated, or changed as needed

- 15. Quick Overview of Components: CIB
  - CIB (Cluster Information Base)
    - Daemon that manages configuration information; the configuration is XML, held in memory
    - Synchronizes each node's configuration and status, as distributed by the DC (Designated Controller)
    - The CIB can be changed with the cibadmin command, or through crm shell or the pcs utility

- 16. Quick Overview of Components: PEngine
  - PEngine (PE, or Policy Engine)
    - A PE process runs on every node, but only the one on the DC [1] is active
    - Applies policies such as clones and domains according to the service environment and user requirements
    - Checks dependencies when moving resources to another cluster node
  - [1] DC = Designated Controller: the node whose CRMd has been elected to coordinate the cluster

- 17. Quick Overview of Components: LRMd
  - LRMd (Local Resource Management Daemon)
    - The interface between CRMd and each resource: passes CRMd's commands on to the agents
    - Invokes the node's own RAs (Resource Agents) on behalf of the CRM
    - Performs start / stop / monitor as directed by the CRM and reports the results back

- 18. Quick Overview of Components: Resource Agents (1/2)
  - RAs (Resource Agents) are a standardized interface defined for cluster resources
    - Provide start / stop / monitor scripts for a local resource
    - RAs are invoked by the LRM
  - Pacemaker supports three main types of RA:
    - LSB: Linux Standard Base "init scripts" (/etc/init.d/resource)
    - OCF: Open Cluster Framework (an extension of the LSB resource agent conventions). Resource type format: standard:provider:name (for example ocf:heartbeat:IPaddr2), with agents in /usr/lib/ocf/resource.d/heartbeat and /usr/lib/ocf/resource.d/pacemaker
    - STONITH resource agents
  - Terms: resource = service; clone = multiple instances of a resource; ms = master/slave instances of a resource
  - Many contributors publish agents on GitHub so they can be used in a wide range of application environments
  - Links: http://linux-ha.org/wiki/OCF_Resource_Agent, http://linux-ha.org/wiki/LSB_Resource_Agents, https://github.com/ClusterLabs/resource-agents
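To make the start / stop / monitor contract concrete, here is a minimal sketch of an OCF-style agent written as a POSIX shell script. It is hypothetical: the agent name `dummy-sketch`, its `state` parameter, and the state-file approach are illustrative assumptions (similar in spirit to the Dummy agent shipped with Pacemaker), and the OCF exit-code constants are defined inline rather than sourced from `ocf-shellfuncs`, as installed agents normally do.

```sh
#!/bin/sh
# Minimal OCF-style resource agent (hypothetical "dummy-sketch" agent).
# The "service" counts as running while a state file exists.

# OCF exit codes used here (installed agents get them from ocf-shellfuncs).
OCF_SUCCESS=0
OCF_ERR_UNIMPLEMENTED=3
OCF_ERR_CONFIGURED=6
OCF_NOT_RUNNING=7

# Pacemaker passes resource parameters as OCF_RESKEY_<name> variables.
# "state" is a hypothetical parameter; give it a default if it is unset.
: "${OCF_RESKEY_state:=${TMPDIR:-/tmp}/dummy-sketch.state}"

ra_meta_data() {
  cat <<'EOF'
<?xml version="1.0"?>
<!DOCTYPE resource-agent SYSTEM "ra-api-1.dtd">
<resource-agent name="dummy-sketch">
  <version>1.0</version>
  <shortdesc lang="en">State-file demo agent (sketch)</shortdesc>
  <parameters>
    <parameter name="state">
      <shortdesc lang="en">Path of the state file</shortdesc>
      <content type="string"/>
    </parameter>
  </parameters>
  <actions>
    <action name="start" timeout="20s"/>
    <action name="stop" timeout="20s"/>
    <action name="monitor" interval="10s" timeout="20s"/>
    <action name="meta-data" timeout="5s"/>
    <action name="validate-all" timeout="5s"/>
  </actions>
</resource-agent>
EOF
}

ra_validate() {
  # The state file's directory must exist; otherwise the configuration is wrong.
  [ -d "$(dirname "$OCF_RESKEY_state")" ] || return $OCF_ERR_CONFIGURED
  return $OCF_SUCCESS
}

ra_monitor() {
  # OCF_NOT_RUNNING (7) means "cleanly stopped", not "failed".
  [ -f "$OCF_RESKEY_state" ] && return $OCF_SUCCESS
  return $OCF_NOT_RUNNING
}

ra_start() {
  ra_monitor && return $OCF_SUCCESS   # start must be idempotent
  touch "$OCF_RESKEY_state"
  ra_monitor                          # report the real state after starting
}

ra_stop() {
  rm -f "$OCF_RESKEY_state"           # stopping a stopped resource still succeeds
  return $OCF_SUCCESS
}

ra_main() {
  case "$1" in
    meta-data)    ra_meta_data; return $OCF_SUCCESS ;;
    validate-all) ra_validate; return $? ;;
  esac
  ra_validate || return $?
  case "$1" in
    start)   ra_start ;;
    stop)    ra_stop ;;
    monitor) ra_monitor ;;
    *)       return $OCF_ERR_UNIMPLEMENTED ;;  # e.g. promote/demote
  esac
}

# Dispatch only when an action is passed on the command line.
if [ $# -gt 0 ]; then
  ra_main "$1"
  exit $?
fi
```

For example, `OCF_RESKEY_state=/tmp/demo.state sh dummy-sketch.sh start; echo $?` should print 0, and a following `monitor` should also return 0. An installed agent would normally live under a provider directory such as /usr/lib/ocf/resource.d/<provider>/ and be referenced as ocf:<provider>:<name>; the provider name is up to you.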

- 19. Quick Overview of Components: Resource Agents (2/2)
  - Basic functions provided by resource agents:
    - start / stop / monitor
    - validate-all: checks the resource configuration
    - meta-data: describes the resource agent itself (for GUIs and other tools)
  - Additional functions available through OCF resource agents:
    - promote: to master / primary
    - demote: to slave / secondary
    - notify: the cluster informs the agent in advance about events affecting the resource
    - reload: refreshes the resource's configuration
    - migrate_from / migrate_to: perform live migration of the resource
  - What resource scores mean:
    - Most resources have scores defined, but sometimes none is specified
    - Scores decide on which cluster node a resource runs
    - Highest score INFINITY (1,000,000); lowest score -INFINITY (-1,000,000)
    - A positive value means "can run" and a negative value "cannot run"; at +/-INFINITY, "can" becomes "must" (must run / must not run)
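The score rules above can be sketched as a small shell calculator. It assumes INFINITY = 1,000,000 and follows the INFINITY arithmetic described in the Pacemaker documentation (any value + INFINITY = INFINITY, any value - INFINITY = -INFINITY, INFINITY - INFINITY = -INFINITY, with finite sums capped at ±INFINITY). The `best_node` helper is a simplification for illustration: real placement also weighs stickiness, utilization, and Pacemaker's own tie-breakers.

```sh
#!/bin/sh
# Sketch of Pacemaker-style score arithmetic (assumes INFINITY = 1000000).
INF=1000000

# Add two scores with INFINITY semantics; -INFINITY always wins.
score_add() {
  if [ "$1" -le -$INF ] || [ "$2" -le -$INF ]; then echo "-$INF"; return; fi
  if [ "$1" -ge $INF ] || [ "$2" -ge $INF ]; then echo "$INF"; return; fi
  sum=$(( $1 + $2 ))
  # Finite sums are capped to the [-INFINITY, INFINITY] range.
  if [ "$sum" -gt $INF ]; then sum=$INF; fi
  if [ "$sum" -lt -$INF ]; then sum=-$INF; fi
  echo "$sum"
}

# Given node:score pairs, print the highest-scoring node that may run the
# resource (score >= 0). Prints nothing if no node is allowed.
# Ties go to the first node listed (a simplification).
best_node() {
  best= best_score=
  for pair in "$@"; do
    node=${pair%%:*}
    score=${pair#*:}
    [ "$score" -lt 0 ] && continue      # negative score: "cannot run" here
    if [ -z "$best" ] || [ "$score" -gt "$best_score" ]; then
      best=$node
      best_score=$score
    fi
  done
  echo "$best"
}
```

For example, `best_node "pcmk-ha1:$(score_add 2000 100)" "pcmk-ha2:$(score_add 2000 -1000000)"` prints `pcmk-ha1`: the -INFINITY on pcmk-ha2 overrides any positive preference there.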

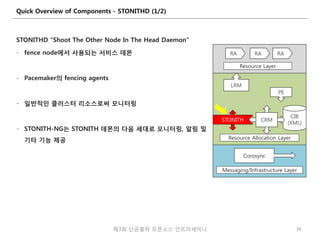

- 20. Quick Overview of Components: STONITHD (1/2)
  - STONITHD ("Shoot The Other Node In The Head" daemon)
    - Service daemon used to fence nodes
    - Runs Pacemaker's fencing agents
    - Fence devices are monitored like ordinary cluster resources
    - STONITH-NG, the next generation of the STONITH daemon, adds monitoring, notifications, and other features

- 21. Quick Overview of Components: STONITHD (2/2)
  - Application-level fencing can be configured
    - Pacemaker coordinates fencing directly: stonithd is used, not fenced
  - Fence devices most commonly used in practice:
    - APC PDU (networked power switch)
    - HP iLO, Dell DRAC, IBM IMM, IPMI appliances, etc.
    - KVM, Xen, VMware (software library)
    - Software-based SBD (the most widely used option in the SUSE world)
  - Required for data integrity:
    - The safest way to ensure resources can be moved to another node in the cluster
    - For a Linux HA cluster aimed at the "enterprise", fencing is mandatory, not optional

- 22. What is fencing?
  - A mechanism (I/O fencing) that protects data against, and guards against damage from, planned or unplanned system downtime
  - Situations shown in the diagram: kernel panic, system freeze, live hang / recovery

- 23. Quick Overview of Components: Corosync
  - An open source group messaging system typically used in clusters, cloud computing, and other high availability environments
  - The basic cluster infrastructure Pacemaker needs in order to work
  - Communication layer: messaging and membership
    - Totem single-ring ordering and membership protocol
    - Basic requirement: multicast is preferred over broadcast
    - Communicates over UDP/IP and InfiniBand networks
    - UDPU (UDP unicast; supported on RHEL 6.2 and later)
  - Corosync (OpenAIS); cman (RHEL 6 only)
  - Supports cluster file systems and cluster volume management (GFS2, OCFS2, cLVM2, etc.)
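The "partition with quorum" line in the status outputs later in this deck comes from this membership and quorum layer. As a rough sketch, assuming one vote per node and corosync votequorum's majority rule (quorum = expected_votes / 2 + 1, integer division) plus its two_node special case, the calculation looks like this. qdevice, wait_for_all, and auto_tie_breaker are not modeled, and the RHEL 6 clusters shown in this deck use cman, which has a similar two_node setting.

```sh
#!/bin/sh
# Sketch of majority quorum, assuming one vote per node (no qdevice).
# $1 = expected votes, $2 = 1 if two_node mode is enabled (default 0).
quorum_needed() {
  if [ "${2:-0}" = 1 ] && [ "$1" -eq 2 ]; then
    echo 1                 # two_node: a single surviving node keeps quorum
    return
  fi
  echo $(( $1 / 2 + 1 ))   # strict majority
}

# Succeeds if the votes present reach quorum.
# $1 = votes present, $2 = expected votes, $3 = two_node flag.
has_quorum() {
  [ "$1" -ge "$(quorum_needed "$2" "${3:-0}")" ]
}
```

In two_node mode both halves of a split can consider themselves quorate, which is why fencing is mandatory in two-node clusters; votequorum also enables wait_for_all automatically when two_node is set.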

- 24. Corosync Cluster Engine Architecture
  - Handle Database Manager: maps, in O(1) time, a unique 64-bit handle identifier to a memory address
  - Live Component Replacement: the plug-in system used by the Corosync cluster engine (LCR objects)
  - Object Database: an in-memory storage mechanism for the configuration engines and service engines
  - The Totem Stack: Totem Single Ring Ordering and Membership Protocol
  - Synchronization Engine: after a state change such as a node failure or a node joining, resynchronizes all service engines
  - Service Manager: responsible for loading and unloading plug-in service engines
  - Service Engines: cluster-wide services supplied by third-party products as needed (e.g. Pacemaker or CMAN)
  - Diagram components: Handle Database Manager, Live Component Replacement, Object Database, Logging System, Timers, The Totem Stack, Synchronization Engine, Service Manager, IPC Manager, Configuration Engines, Service Engines
  - Source: https://landley.net/kdocs/ols/2008/ols2008v1-pages-85-100.pdf

- 25. Quick Overview of Components: User Interface
  - The Pacemaker configuration system provides unified tools for configuring and managing a high availability cluster
  - Advantages:
    - Command-line tools
    - Make it easy to bootstrap a cluster and get your first cluster up and running
    - Let you set cluster options
    - Let you add, delete, and change resources and their interrelationships
  - crm shell: Cluster Resource Manager Shell (SLES)
  - pcs: Pacemaker Configuration System (Red Hat)
  - Web UIs: Hawk (SLES), pcs_gui (RHEL)

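- 26. Cluster File System on Shared Disk
  - A cluster file system lets cluster nodes access a block device shared between them at the same time
    - Uses distributed metadata and multiple journals to optimize operation in a cluster
    - To keep the file system consistent, GFS coordinates I/O through a lock manager
  - GFS (Global File System, Red Hat): a file system that interfaces directly with the VFS layer of the Linux kernel's file system interface
  - OCFS2 (Oracle Cluster File System, SLES): a general-purpose cluster file system developed by Oracle and integrated into the Linux kernel
    - Lets all nodes access the cluster file system concurrently and removes the need to manage raw devices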

- 27. Cluster Block Devices
  - DRBD (Distributed Replicated Block Device)
    - Network-based RAID 1 (mirroring)
    - High-performance data replication over the network (e.g. 10 Gb network bandwidth)
  - CLVM2 (Cluster LVM2)
    - A set of clustering extensions to LVM (locking_type = 3 in lvm.conf)
    - A daemon that distributes LVM metadata updates across the cluster
    - Used when more than one cluster node needs access to storage shared among the active nodes
    - However, data replication (mirroring) can be considerably slower
  - Cluster md RAID1 (cluster software RAID 1)
    - Software-RAID-based storage shared by multiple cluster nodes
    - Aims to deliver performance and reliability (RAID 1) in a cluster environment
    - Reference: https://lwn.net/Articles/674085/

- 28. Debugging Pacemaker
  - Configuration: /var/lib/pacemaker/cib/cib.xml
  - Cluster status: # pcs status (or crm_mon). Example of a failed action:
    * pgsqld_monitor_0 on pcmk-srv1 'master (failed)' (9): call=6, status=complete, exitreason='none', last-rc-change='Fri Jul 1 06:39:58 2016', queued=0ms, exec=484ms
  - System logs for debugging: /var/log/cluster/corosync.log; # journalctl -u pacemaker.service
  - crm_report: collects cluster-wide diagnostics, similar to sosreport. Example: crm_report -f "2016-07-21 13:05:00" unexplained-apache-failure
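When reading Failed Actions, it helps to split the operation key into resource, action, and interval, and to translate the return code. The sketch below assumes the line layout of the example above; the numeric codes follow the OCF resource agent API (9 is OCF_FAILED_MASTER, 7 is OCF_NOT_RUNNING), and an interval of 0 marks a one-time probe rather than a recurring monitor. The awk/sed parsing is only an illustration, not a supported interface: the text output of pcs status and crm_mon varies between versions.

```sh
#!/bin/sh
# Sketch: decode a "Failed Actions" line from pcs status / crm_mon.
# Assumed layout: "* <rsc>_<action>_<interval> on <node> '<text>' (<rc>): ..."

# Map an OCF exit code to its symbolic name.
ocf_rc_name() {
  case "$1" in
    0) echo OCF_SUCCESS ;;
    1) echo OCF_ERR_GENERIC ;;
    2) echo OCF_ERR_ARGS ;;
    3) echo OCF_ERR_UNIMPLEMENTED ;;
    4) echo OCF_ERR_PERM ;;
    5) echo OCF_ERR_INSTALLED ;;
    6) echo OCF_ERR_CONFIGURED ;;
    7) echo OCF_NOT_RUNNING ;;
    8) echo OCF_RUNNING_MASTER ;;
    9) echo OCF_FAILED_MASTER ;;
    *) echo UNKNOWN ;;
  esac
}

parse_failed_action() {
  key=$(printf '%s\n' "$1" | awk '{print $2}')    # e.g. pgsqld_monitor_0
  node=$(printf '%s\n' "$1" | awk '{print $4}')
  rc=$(printf '%s\n' "$1" | sed -n 's/.*(\([0-9][0-9]*\)):.*/\1/p')
  interval=${key##*_}        # last field: interval in ms (0 = probe)
  rest=${key%_*}
  case "$rest" in            # actions whose names contain "_"
    *_migrate_to)   action=migrate_to;   rsc=${rest%_migrate_to} ;;
    *_migrate_from) action=migrate_from; rsc=${rest%_migrate_from} ;;
    *)              action=${rest##*_};  rsc=${rest%_*} ;;
  esac
  echo "resource=$rsc action=$action interval=$interval node=$node rc=$rc ($(ocf_rc_name "$rc"))"
}
```

Applied to the example line above, it prints `resource=pgsqld action=monitor interval=0 node=pcmk-srv1 rc=9 (OCF_FAILED_MASTER)`: the initial probe found a failed master instance of pgsqld on pcmk-srv1.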

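- 29. Use case samples (1/3): evaluating PostgreSQL HA on Enterprise Linux
  - Why consider an open-source-based HA solution:
    - Escape dependence on specific vendors
    - More efficient operations by building in-house expertise
    - Lower hardware acquisition costs
    - Fewer staff needed to run the infrastructure
    - R&D that keeps pace with technology trends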

- 30. Architecture: PoC on a dedicated environment
  - Diagram: two nodes, each running High-Availability (Pacemaker), with NIC #1 (eth0, eth1), NIC #2 (eth2, eth3), bond0, HBA #1 (port 0), and HBA #2 (port 1)
  - bond0 connects through an L2 switch to the 1 Gb service / heartbeat network used by clients and application servers
  - The HBAs connect through a SAN switch to a shared volume on EMC CX300 SAN storage, accessed with device-mapper-multipath and fenced with fence_scsi (SDB)

- 31. PostgreSQL Active/Standby Status
    # pcs status
    Cluster name: pcmk-ha
    Last updated: Thu Jul 21 16:32:06 2016    Last change: Thu Jul 21 16:26:01 2016 by root via crmd on pcmk-ha2
    Stack: cman
    Current DC: pcmk-ha2 (version 1.1.14-8.el6-70404b0) - partition with quorum
    2 nodes and 12 resources configured
    Online: [ pcmk-ha1 pcmk-ha2 ]
    Full list of resources:
     fence1 (stonith:fence_scsi): Started pcmk-ha2
     fence2 (stonith:fence_scsi): Started pcmk-ha1
     Resource Group: pgsql
         lvm-archive (ocf::heartbeat:LVM): Started pcmk-ha1
         lvm-xlog (ocf::heartbeat:LVM): Started pcmk-ha1
         lvm-data (ocf::heartbeat:LVM): Started pcmk-ha1
         fs-archive (ocf::heartbeat:Filesystem): Started pcmk-ha1
         fs-xlog (ocf::heartbeat:Filesystem): Started pcmk-ha1
         fs-data (ocf::heartbeat:Filesystem): Started pcmk-ha1
         vip (ocf::heartbeat:IPaddr): Started pcmk-ha1
         pgsqld (ocf::heartbeat:pgsql): Started pcmk-ha1
    PCSD Status:
      pcmk-ha1: Online
      pcmk-ha2: Online

- 32. Detail: Configuration
  - HA-LVM is not just a recommendation; it is required!
    pcs resource create lvm-archive LVM volgrpname=pg_archive exclusive=true --group pgsql
    pcs resource create fs-archive Filesystem device="/dev/pg_archive/archive" directory="/archive" options="noatime,nodiratime" fstype="ext4" --group pgsql
    pcs resource create lvm-xlog LVM volgrpname=pg_xlog exclusive=true --group pgsql
    pcs resource create fs-xlog Filesystem device="/dev/pg_xlog/xlog" directory="/pg_xlog" options="noatime,nodiratime" fstype="ext4" --group pgsql
    pcs resource create lvm-data LVM volgrpname=pg_data exclusive=true --group pgsql
    pcs resource create fs-data Filesystem device="/dev/pg_data/data" directory="/data" options="noatime,nodiratime" fstype="ext4" --group pgsql
    pcs resource create vip ocf:heartbeat:IPaddr ip=xxx.xxx.xxx.xxx --group pgsql
  - HA Logical Volume Manager
    # vi /etc/lvm/lvm.conf
    volume_list = [ "vg1", "vg2/lvol1", "@tag1", "@*" ]
    (generic volume_list syntax; for HA-LVM, list only the volume groups that should be activated locally at boot and leave out the shared volume groups the cluster activates)
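A resource group such as pgsql is shorthand for a chain of ordering and colocation constraints between consecutive members: each member starts after, and runs on the same node as, the one before it, and the members stop in reverse order. The sketch below only prints the equivalent pcs commands for illustration (it executes nothing); the member names are taken from the commands on this slide.

```sh
#!/bin/sh
# Print the pairwise constraints that a Pacemaker group implies
# (illustration only: nothing is executed).
group_as_constraints() {
  prev=
  for rsc in "$@"; do
    if [ -n "$prev" ]; then
      # Start this member only after the previous one has started...
      echo "pcs constraint order start $prev then start $rsc"
      # ...and keep it on the same node as the previous member.
      echo "pcs constraint colocation add $rsc with $prev INFINITY"
    fi
    prev=$rsc
  done
}

# Stop order is the start order reversed.
group_stop_order() {
  out=
  for rsc in "$@"; do
    out="$rsc${out:+ $out}"
  done
  echo "$out"
}
```

For example, `group_stop_order lvm-archive fs-archive vip` prints `vip fs-archive lvm-archive`: the address goes away first, then the file system is unmounted, and only then is the volume group deactivated. A group stays the simpler way to express this; explicit constraints are mainly useful when members need different scores or only partial ordering.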

- 33. Use case samples (2/3): evaluating PostgreSQL Streaming Replication for HA
  - Why evaluate PostgreSQL streaming replication:
    - An architecture that needs no shared volume: WAL (transaction log) based replication, with a single master and multiple slaves
    - Free from issues such as shared-storage locking problems and split brain
    - Research into building redundancy for a variety of infrastructure environments
  - Diagram: the application/client writes (A) to and reads (A) from the primary/master, and reads (B) from the standby/slave; the master's WAL Sender streams to the standby's WAL Receiver (streaming replication)

- 34. PostgreSQL Master/Slave Status
    # pcs status
    Cluster name: passr
    Last updated: Fri Jul 1 09:03:06 2016    Last change: Fri Jul 1 08:44:55 2016 by root via cibadmin on pcmk-srv1
    Stack: cman
    Current DC: pcmk-srv1 (version 1.1.14-8.el6-70404b0) - partition with quorum
    2 nodes and 4 resources configured
    Online: [ pcmk-srv1 pcmk-srv2 ]
    Full list of resources:
     fence1 (stonith:fence_kvm): Started pcmk-srv2
     fence2 (stonith:fence_kvm): Started pcmk-srv1
     vip (ocf::heartbeat:IPaddr2): Started pcmk-srv1
     Master/Slave Set: pgsql-ha [pgsqld]
         Masters: [ pcmk-srv1 ]
         Slaves: [ pcmk-srv2 ]
    PCSD Status:
      pcmk-srv1: Online
      pcmk-srv2: Online

- 35. Detail: Configuration
  - vip
    # pcs resource create vip ocf:heartbeat:IPaddr2 ip=xxx.xxx.xxx.xxx cidr_netmask=24 op monitor interval=10s
  - pgsql
    # pcs -f pgsql.xml resource create pgsqld ocf:heartbeat:pgsqlms bindir=/postgres/9.5/bin pgdata=/pg_data/data95 op start timeout=60s op stop timeout=60s op reload timeout=20s op promote timeout=30s op demote timeout=120s op monitor interval=15s timeout=10s role="Master" op monitor interval=16s timeout=10s role="Slave" op notify timeout=60s op meta-data timeout=5s op validate-all timeout=5s
  - Resource constraints
    # pcs resource master pgsql-ha pgsqld meta is-managed=true notify=true clone-max=2 clone-node-max=1 interleave=true
    # pcs constraint colocation add vip with master pgsql-ha 2000
    # pcs constraint order promote pgsql-ha then start vip symmetrical=false
    # pcs constraint order demote pgsql-ha then stop vip symmetrical=false
    # pcs constraint order pgsqld then pgsql-ha symmetrical=false
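The pgsqld command above uses `pcs -f pgsql.xml`, which edits an offline copy of the CIB instead of the live cluster. A common pattern is to dump the live CIB with `pcs cluster cib`, stage every change against that file, and apply them all at once with `pcs cluster cib-push`. The sketch below only prints the commands unless APPLY=1 is set; it assumes that every step goes through the same file, that vip already exists in the live CIB before the dump, and it abbreviates the pgsqld operation list from this slide.

```sh
#!/bin/sh
# Stage Pacemaker changes in an offline CIB file, then push them at once.
# Dry run by default: commands are printed, and only executed when APPLY=1.

run() {
  echo "+ $*"
  [ "${APPLY:-0}" = 1 ] || return 0
  "$@" || { echo "failed: $*" >&2; exit 1; }
}

stage_paf() {
  f=${CIB_FILE:-pgsql.xml}
  run pcs cluster cib "$f"                          # copy the live CIB to a file
  run pcs -f "$f" resource create pgsqld ocf:heartbeat:pgsqlms \
      bindir=/postgres/9.5/bin pgdata=/pg_data/data95 \
      op monitor interval=15s timeout=10s role=Master \
      op monitor interval=16s timeout=10s role=Slave
  run pcs -f "$f" resource master pgsql-ha pgsqld \
      meta is-managed=true notify=true clone-max=2 clone-node-max=1 interleave=true
  run pcs -f "$f" constraint colocation add vip with master pgsql-ha 2000
  run pcs -f "$f" constraint order promote pgsql-ha then start vip symmetrical=false
  run pcs -f "$f" constraint order demote pgsql-ha then stop vip symmetrical=false
  run pcs cluster cib-push "$f"                     # apply everything in one step
}

if [ "${1:-}" = "--run" ]; then
  stage_paf
fi
```

Running `sh stage-paf.sh --run` prints the plan; `APPLY=1 sh stage-paf.sh --run` would execute it and stop at the first failing command. Staging offline means the cluster never sees a half-built configuration, such as a promotable resource without its constraints.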

- 36. Detail: Configuration (2/2): http://dalibo.github.io/PAF/Quick_Start-CentOS-6.html

- 37. Use case samples (3/3): SAP system replacement in an ERP environment
  - Diagram: two X3850 X6 SAP HANA appliances, each running RHEL 6 Update 6 with the High-Availability (Pacemaker) add-on and SAP HANA SPS 10, each fenced with fence_ipmilan
  - Networks as labeled: bond2 52.2.X.X/24, bond3 10.10.10.X/24, bond1 100.100.100.X/24; link labels: Service, Heartbeat, Replicated (10000 Mbps)
  - Clients / application servers connect over the service network

- 38. RHEL for SAP HANA (certified)
  - Automates the failover of SAP HANA System Replication
  - RHEL 6.5, 6.6, and 6.7 (as of Feb. 2016)
    - HANA version: up to SPS10 (SPS11 support added later as a new feature)
    - CPU architecture: Intel Ivy Bridge-EX, Haswell-EX
  - Uses the RHEL Pacemaker cluster stack, delivered as the RHEL HA Add-On product
  - Resource agents:
    - SAPHana: configures and manages SAP HANA System Replication
    - SAPHanaTopology: collects and reports system replication status
    - Requires the bundled package resource-agents-sap-hana
  - Configuration guide: https://access.redhat.com/sites/default/files/attachments/automated_sap_hana_sr_setup_guide_20150709.pdf

- 39. Failover Scenario: Primary Node Down
  - Diagram: two X3850 X6 SAP HANA appliances, each with its own data volume, linked by System Replication in "sync" mode; clients and application servers reach the primary through the VIP
  - SAPHana master/slave resource: master on the primary, slave on the secondary; SAPHanaTopology clone resource: one clone on each node
  - AUTOMATED_REGISTER=false is recommended

- 40. Failover Scenario: Secondary Node Takes Over
  - A hardware/software failure occurs on the primary
  - The VIP fails over, and the SAPHana resource on the secondary becomes master; SAPHanaTopology keeps running as a clone resource
  - System Replication "sync" mode; AUTOMATED_REGISTER=false is recommended

- 41. SAP HANA DB Master/Slave Status
    # pcs status
    Cluster name: hanacluster
    Last updated: Mon May 2 17:41:52 2016
    Last change: Mon May 2 17:41:24 2016
    Stack: cman
    Current DC: saphana-ha1 - partition with quorum
    Version: 1.1.11-97629de
    2 Nodes configured
    12 Resources configured
    Online: [ saphana-ha1 saphana-ha2 ]
    Full list of resources:
     fence1 (stonith:fence_ipmilan): Started saphana-ha2
     fence2 (stonith:fence_ipmilan): Started saphana-ha1
     rsc_vip_52_SAPHana_ONP_HDB00 (ocf::heartbeat:IPaddr): Started saphana-ha1
     Clone Set: bond2-monitor-clone [bond2-monitor]
         Started: [ saphana-ha1 saphana-ha2 ]
     rsc_vip_100_SAPHana_ONP_HDB00 (ocf::heartbeat:IPaddr): Started saphana-ha1
     Clone Set: bond1-monitor-clone [bond1-monitor]
         Started: [ saphana-ha1 saphana-ha2 ]
     Clone Set: rsc_SAPHanaTopology_ONP_HDB00-clone [rsc_SAPHanaTopology_ONP_HDB00]
         Started: [ saphana-ha1 saphana-ha2 ]
     Master/Slave Set: msl_rsc_SAPHana_ONP_HDB00 [rsc_SAPHana_ONP_HDB00]
         Masters: [ saphana-ha1 ]
         Slaves: [ saphana-ha2 ]

- 42. Detail: Configuration
  - VIP
    # pcs resource create rsc_vip_52_SAPHana_ONP_HDB00 ocf:heartbeat:IPaddr ip=xxx.xxx.xxxx.xxxx
    # pcs resource create bond2-monitor ethmonitor interface=bond2 --clone
    # pcs resource create rsc_vip_100_SAPHana_ONP_HDB00 ocf:heartbeat:IPaddr ip=xxxx.xxxx.xxxx.xxxx
    # pcs resource create bond1-monitor ethmonitor interface=bond1 --clone
  - SAPHanaTopology
    # pcs resource create rsc_SAPHanaTopology_ONP_HDB00 SAPHanaTopology SID=ONP InstanceNumber=00 op start timeout=600 op stop timeout=300 op monitor interval=10 timeout=600
    # pcs resource clone rsc_SAPHanaTopology_ONP_HDB00 meta is-managed=true clone-max=2 clone-node-max=1 interleave=true
  - SAPHana
    # pcs resource create rsc_SAPHana_ONP_HDB00 SAPHana SID=ONP InstanceNumber=00 PREFER_SITE_TAKEOVER=true DUPLICATE_PRIMARY_TIMEOUT=7200 AUTOMATED_REGISTER=false op start timeout=3600 op stop timeout=3600 op promote timeout=3600 op demote timeout=3600 op monitor interval=59 role="Master" timeout=700 op monitor interval=61 role="Slave" timeout=700
    # pcs resource master msl_rsc_SAPHana_ONP_HDB00 rsc_SAPHana_ONP_HDB00 meta is-managed=true notify=true clone-max=2 clone-node-max=1 interleave=true
  - Resource constraints
    # pcs constraint location msl_rsc_SAPHana_ONP_HDB00 rule score=-INFINITY ethmonitor-bond2 ne 1
    # pcs constraint location msl_rsc_SAPHana_ONP_HDB00 rule score=-INFINITY ethmonitor-bond1 ne 1
    # pcs constraint colocation add rsc_vip_xx_SAPHana_ONP_HDB00 with master msl_rsc_SAPHana_ONP_HDB00 2000
    # pcs constraint colocation add rsc_vip_xxx_SAPHana_ONP_HDB00 with master msl_rsc_SAPHana_ONP_HDB00 2000
    # pcs constraint order rsc_SAPHanaTopology_ONP_HDB00-clone then msl_rsc_SAPHana_ONP_HDB00 symmetrical=false
  - Recommendation: if resources must react to a bonding failover, add ethmonitor resources as shown above
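The two location rules above tie SAPHana to healthy bonds: ethmonitor sets a node attribute (ethmonitor-bond1, ethmonitor-bond2) to 1 while the interface is up, and each rule, as written without a role= option, gives the whole msl_rsc_SAPHana_ONP_HDB00 resource -INFINITY on any node where that attribute is not 1, so neither a master nor a slave instance may run there. The sketch below evaluates those rules per node, assuming both attributes are already set to 0 or 1 (the case of a missing attribute is not modeled); the helper names are hypothetical.

```sh
#!/bin/sh
# Sketch: evaluate "rule score=-INFINITY ethmonitor-bondN ne 1" on one node.
# Assumption: both ethmonitor attributes are set (1 = link up, 0 = link down).
INF=1000000

# $1 = ethmonitor-bond1 value, $2 = ethmonitor-bond2 value on a node.
rule_score() {
  score=0
  for link in "$1" "$2"; do
    if [ "$link" != 1 ]; then
      score=-$INF       # one failed bond is enough to ban the resource here
    fi
  done
  echo "$score"
}

# Print the nodes still allowed to run the SAPHana master/slave resource.
# Arguments: node:bond1:bond2 triples.
allowed_nodes() {
  for entry in "$@"; do
    node=${entry%%:*}
    rest=${entry#*:}
    if [ "$(rule_score "${rest%%:*}" "${rest#*:}")" -ge 0 ]; then
      echo "$node"
    fi
  done
}
```

For example, `allowed_nodes saphana-ha1:1:0 saphana-ha2:1:1` prints only `saphana-ha2`, so the master can end up only there. The VIPs are colocated with the master at a finite score of 2000, so they strongly prefer the master's node but are not strictly bound to it.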

- 43. Future endeavors (1/2)
  - Future development (in progress, or being integrated and updated)
    - Pacemaker Cloud
      - Provides high availability in cloud environments using monitoring, isolation, recovery, and notification
      - "Our developers have very high scale requirements. Our goal is multi-hundred assembly (virtual machine) deployments, with many thousands of deployments within a system."
      - https://www.openhub.net/p/pacemaker-cloud
    - Integration with other technologies
      - DeltaCloud: a REST-based (HATEOAS) cloud abstraction API; open source software that unifies the APIs of different IaaS providers so they can be managed together (freeing users from first-generation PaaS vendor lock-in)
      - Matahari: systems management and monitoring APIs (used with pacemaker-cloud)

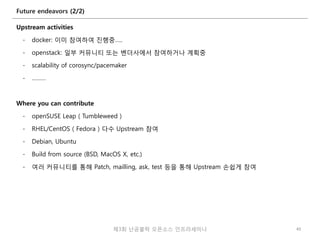

- 44. Future endeavors (2/2)
  - Upstream activities
    - Docker: already involved, work in progress
    - OpenStack: some communities and vendors are involved or planning to be
    - Scalability of corosync/pacemaker
    - ...
  - Where you can contribute
    - openSUSE Leap (Tumbleweed)
    - RHEL/CentOS (Fedora): many upstream contributions
    - Debian, Ubuntu
    - Build from source (BSD, macOS, etc.)
    - Getting involved upstream is easy through the communities: patches, mailing lists, questions, testing, and more

- 45. At the end...
  - What do you think high availability is? How have you been thinking about it?
  - No solution anywhere guarantees 99.9999999% availability, and there is no single best practice.
  - Only test! Test! TEST!!!

- 46. References
  - All *open-source* in the whole stack. Keep searching for more.
  - Configuring the Red Hat High Availability Add-On with Pacemaker: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/6/html-single/Configuring_the_Red_Hat_High_Availability_Add-On_with_Pacemaker/index.html
  - SAP on Red Hat Technical Documents: http://www.redhat.com/f/pdf/ha-sap-v1-6-4.pdf
  - Red Hat Reference Architecture Series: http://www.sistina.com/rhel/resource_center/reference_architecture.html
  - ClusterLabs: http://clusterlabs.org/doc/ and http://blog.clusterlabs.org/
  - OpenStack HA: http://www.slideshare.net/kenhui65/openstack-ha
  - High Availability on Linux - the SUSE way: https://tuna.moe/assets/slides/SFD2015/SUSE_HA_arch_overview.pdf


- 48. Appendix 1) Cluster creation

| Task | Pacemaker (pcs) command |
|---|---|
| Create cluster | # pcs cluster setup [--start] --name clustername node1 node2 ... |
| Start cluster | On one node: # pcs cluster start; on all cluster members: # pcs cluster start --all |
| Enable cluster | # pcs cluster enable --all |
| Stop cluster | On one node: # pcs cluster stop; on all cluster members: # pcs cluster stop --all |
| Add node to cluster | # pcs cluster node add node |
| Remove node from cluster | # pcs cluster node remove node |
| Show configured cluster nodes | # pcs cluster status |
| Show cluster configuration | # pcs config show |
| Sync cluster configuration | Propagation of the Pacemaker cib.xml file is automatic; to sync corosync.conf: # pcs cluster sync |

- 49. Appendix 2) Fencing, cluster resources, and resource groups

| Task | Pacemaker (pcs) command |
|---|---|
| Show fence agents | # pcs stonith list |
| Show fence agent options | # pcs stonith describe fenceagent |
| Create fence device | # pcs stonith create stonith_id stonith_device_type [stonith_device_options] |
| Configure backup fence device | # pcs stonith level add level node devices |
| Remove a fence device | # pcs stonith delete stonith_id |
| Modify a fence device | # pcs stonith update stonith_id stonith_device_options |
| Display configured fence devices | # pcs stonith show |
| List available resource agents | # pcs resource list |
| List options for a specific resource | # pcs resource describe resourcetype |
| Create resource | # pcs resource create resource_id resourcetype resource_options |
| Create resource groups | # pcs resource group add group_name resource_id1 [resource_id2] [...], or create the group on resource creation: # pcs resource create resource_id resourcetype resource_options --group group_name |
| Create resource to run on all nodes | # pcs resource create resource_id resourcetype resource_options --clone clone_options |
| Display configured resources | # pcs resource show [--full] |
| Order constraints (if not using service groups) | # pcs constraint order [action] resource_id1 then [action] resource_id2 |
| Location constraints | # pcs constraint location rsc prefers node[=score]; # pcs constraint location rsc avoids node[=score] |
| Colocation constraints | # pcs constraint colocation add source_resource with target_resource [score] |
| Modify a resource option | # pcs resource update resource_id resource_options |

- 50. Appendix 3) Troubleshooting

| Task | Pacemaker (pcs) command |
|---|---|
| Display current cluster and resource status | # pcs status |
| Display current version information | # pcs status |
| Stop and disable a cluster element | # pcs resource disable resource \| group |
| Enable a cluster element | # pcs resource enable resource \| group |
| Freeze a cluster element (the cluster no longer starts or stops it; recurring monitors keep running) | # pcs resource unmanage resource \| group |
| Unfreeze a cluster element (resume cluster management) | # pcs resource manage resource \| group |
| Disable Pacemaker resource management | # pcs property set maintenance-mode=true |
| Re-enable Pacemaker resource management | # pcs property set maintenance-mode=false |
| Disable a single node | # pcs cluster standby node |
| Re-enable a node | # pcs cluster unstandby node |
| Move a cluster element to another node | # pcs resource move groupname move_to_nodename (move_to_nodename remains the preferred node until "pcs resource clear" is executed) |

![Quick Overview of Components - PEngine

PEngine (PE or Policy Engine)

- PE프로세스는 각 노드에서 실행되지만, DC[1]에서만 활성화

- 여러 서비스환경에 따라 Clone 및 domain 등 사용자요구에 따라

정책 부여

- 다른 클러스터 노드로 리소스 전환시 의존성 확인

15

RA

Resource Layer

LRM

PE

Resource Allocation Layer

Messaging/Infrastructure Layer

Corosync

RA RA

CIB

(XML)

STONITH CRM

제3회 난공불락 오픈소스 인프라세미나

[1] DC = Designated Controller (master node)](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-16-320.jpg)

![PostgreSQL Active/Stanby Status

30제3회 난공불락 오픈소스 인프라세미나

# pcs status

Cluster name: pcmk-ha

Last updated: Thu Jul 21 16:32:06 2016 Last change: Thu Jul 21 16:26:01 2016 by root via crmd on pcmk-ha2

Stack: cman

Current DC: pcmk-ha2 (version 1.1.14-8.el6-70404b0) - partition with quorum

2 nodes and 12 resources configured

Online: [ pcmk-ha1 pcmk-ha2 ]

Full list of resources:

fence1 (stonith:fence_scsi): Started pcmk-ha2

fence2 (stonith:fence_scsi): Started pcmk-ha1

Resource Group: pgsql

lvm-archive (ocf::heartbeat:LVM): Started pcmk-ha1

lvm-xlog (ocf::heartbeat:LVM): Started pcmk-ha1

lvm-data (ocf::heartbeat:LVM): Started pcmk-ha1

fs-archive (ocf::heartbeat:Filesystem): Started pcmk-ha1

fs-xlog (ocf::heartbeat:Filesystem): Started pcmk-ha1

fs-data (ocf::heartbeat:Filesystem): Started pcmk-ha1

vip (ocf::heartbeat:IPaddr): Started pcmk-ha1

pgsqld (ocf::heartbeat:pgsql): Started pcmk-ha1

PCSD Status:

pcmk-ha1: Online

pcmk-ha2: Online](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-31-320.jpg)

![Detail : Configuration

제3회 난공불락 오픈소스 인프라세미나 31

HA-LVM 권고사항이 아닌 필수!

pcs resource create lvm-archive LVM volgrpname=pg_archive exclusive=true --group pgsql

pcs resource create fs-archive Filesystem device="/dev/pg_archive/archive" directory="/archive"

options="noatime,nodiratime" fstype="ext4" --group pgsql

pcs resource create lvm-xlog LVM volgrpname=pg_xlog exclusive=true --group pgsql

pcs resource create fs-xlog Filesystem device="/dev/pg_xlog/xlog" directory="/pg_xlog"

options="noatime,nodiratime" fstype="ext4" --group pgsql

pcs resource create lvm-data LVM volgrpname=pg_data exclusive=true --group pgsql

pcs resource create fs-data Filesystem device="/dev/pg_data/data" directory="/data"

options="noatime,nodiratime" fstype="ext4" --group pgsql

pcs resource create vip ocf:heartbeat:IPaddr ip=xxx.xxx.xxx.xxx --group pgsql

HA Logical Volume Manager

# vi /etc/lvm/lvm.conf

volume_list = [ "vg1", "vg2/lvol1", "@tag1", "@*" ]](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-32-320.jpg)

![PostgreSQL Master/Slave Status

33

# pcs status

Cluster name: passr

Last updated: Fri Jul 1 09:03:06 2016 Last change: Fri Jul 1 08:44:55 2016 by root via

cibadmin on pcmk-srv1

Stack: cman

Current DC: pcmk-srv1 (version 1.1.14-8.el6-70404b0) - partition with quorum

2 nodes and 4 resources configured

Online: [ pcmk-srv1 pcmk-srv2 ]

Full list of resources:

fence1 (stonith:fence_kvm): Started pcmk-srv2

fence2 (stonith:fence_kvm): Started pcmk-srv1

vip (ocf::heartbeat:IPaddr2): Started pcmk-srv1

Master/Slave Set: pgsql-ha [pgsqld]

Masters: [ pcmk-srv1 ]

Slaves: [ pcmk-srv2 ]

PCSD Status:

pcmk-srv1: Online

pcmk-srv2: Online

제3회 난공불락 오픈소스 인프라세미나](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-34-320.jpg)

![40

# pcs status

Cluster name: hanacluster

Last updated: Mon May 2 17:41:52 2016

Last change: Mon May 2 17:41:24 2016

Stack: cman

Current DC: saphana-ha1 - partition with quorum

Version: 1.1.11-97629de

2 Nodes configured

12 Resources configured

Online: [ saphana-ha1 saphana-ha2 ]

Full list of resources:

fence1 (stonith:fence_ipmilan): Started saphana-ha2

fence2 (stonith:fence_ipmilan): Started saphana-ha1

rsc_vip_52_SAPHana_ONP_HDB00 (ocf::heartbeat:IPaddr): Started saphana-ha1

Clone Set: bond2-monitor-clone [bond2-monitor]

Started: [ saphana-ha1 saphana-ha2 ]

rsc_vip_100_SAPHana_ONP_HDB00 (ocf::heartbeat:IPaddr): Started saphana-ha1

Clone Set: bond1-monitor-clone [bond1-monitor]

Started: [ saphana-ha1 saphana-ha2 ]

Clone Set: rsc_SAPHanaTopology_ONP_HDB00-clone [rsc_SAPHanaTopology_ONP_HDB00]

Started: [ saphana-ha1 saphana-ha2 ]

Master/Slave Set: msl_rsc_SAPHana_ONP_HDB00 [rsc_SAPHana_ONP_HDB00]

Masters: [ saphana-ha1 ]

Slaves: [ saphana-ha2 ]

SAP HANA DB Master/Slave Status

](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-41-320.jpg)
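In the SAP HANA cluster above, the bond monitors and `SAPHanaTopology` are clones that are expected to run on every online node. A sketch of a check for that, again assuming the pcs 0.9 text layout shown on the slide: the hypothetical helper `clones_not_on_all_online_nodes` prints each clone set that runs on fewer nodes than the `Online:` list, and prints nothing when every clone is fully started.

```shell
# Hypothetical helper: read `pcs status` text on stdin and print the name of
# every clone set whose "Started:" list does not cover all online nodes.
clones_not_on_all_online_nodes() {
    awk '
        # Report the current clone if it runs on fewer nodes than are online.
        function finish() {
            if (clone != "" && started != n_online) print clone
            clone = ""
        }
        NF == 0 { next }                       # ignore blank lines
        /^[[:space:]]*Online:/ {               # Online: [ node1 node2 ]
            for (i = 2; i <= NF; i++)
                if ($i != "[" && $i != "]") { online[$i] = 1; n_online++ }
            next
        }
        /Clone Set:|Master\/Slave Set:/ {      # a new set header closes the previous block
            finish()
            if ($1 == "Clone") { clone = $3; started = 0 }
            next
        }
        clone != "" && /^[[:space:]]*Started:/ {
            for (i = 2; i <= NF; i++)
                if ($i in online) started++    # count only nodes that are online
            next
        }
        clone != "" && /^[[:space:]]*Stopped:/ { next }
        clone != "" { finish() }               # any other line ends the clone block
        END { finish() }
    '
}
```

A fully stopped clone (only a `Stopped:` line) is also reported, because its started count stays at zero.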

![Appendix 1) CLUSTER CREATION

Task | Pacemaker command

Create cluster # pcs cluster setup [--start] --name clustername node1 node2 ...

Start cluster

Start on one node:

# pcs cluster start

Start on all cluster members:

# pcs cluster start --all

Enable cluster (start at boot) # pcs cluster enable --all

Stop cluster

Stop one node:

# pcs cluster stop

Stop on all cluster members:

# pcs cluster stop --all

Add node to cluster # pcs cluster node add node

Remove node from cluster # pcs cluster node remove node

Show configured cluster nodes # pcs cluster status

Show cluster configuration # pcs config show

Sync cluster configuration

Changes to the Pacemaker configuration (cib.xml) are propagated to all nodes automatically.

To sync corosync.conf to all nodes:

# pcs cluster sync

](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-48-320.jpg)
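The cluster-creation rows of the table can be chained into one script. This sketch assumes the pcs 0.9 syntax of the RHEL 6/7 era used throughout this talk; it adds the `pcs cluster auth` step that pcs 0.9 requires before `setup`, and the cluster and node names are placeholders. The hypothetical `run` wrapper prints each command instead of executing it when `DRY_RUN=1`.

```shell
# Hypothetical wrapper: print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Create and start a cluster named $1 on the nodes given as the remaining
# arguments, then enable it at boot (pcs 0.9 syntax, RHEL 6/7 era).
create_cluster() {
    local name=$1
    shift
    # pcs 0.9 must authenticate to pcsd on every node before setup;
    # this prompts for the hacluster user's password.
    run pcs cluster auth "$@" -u hacluster
    # --start also starts the cluster, replacing a separate "pcs cluster start --all".
    run pcs cluster setup --start --name "$name" "$@"
    run pcs cluster enable --all
    run pcs cluster status
}
```

In bash, `DRY_RUN=1 create_cluster mycluster node1 node2` lists the four commands without touching any node.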

![Appendix 2) FENCING & CLUSTER RESOURCES AND RESOURCE GROUPS

Task | Pacemaker command

Show fence agents # pcs stonith list

Show fence agent options # pcs stonith describe fenceagent

Create fence device # pcs stonith create stonith_id stonith_device_type [stonith_device_options]

Configure backup fence device # pcs stonith level add level node devices

Remove a fence device # pcs stonith delete stonith_id

Modify a fence device # pcs stonith update stonith_id stonith_device_options

Display configured fence devices # pcs stonith show

Task | Pacemaker command

List available resource agents # pcs resource list

List options for a specific resource # pcs resource describe resourcetype

Create resource # pcs resource create resource_id resource_type [resource_options]

Create resource groups

# pcs resource group add group_name resource_id1 [resource_id2] [...]

or create group on resource creation

# pcs resource create resource_id resource_type [resource_options] --group group_name

Create resource to run on all nodes # pcs resource create resource_id resource_type [resource_options] --clone [clone_options]

Display configured resources # pcs resource show [--full]

Configure resource constraints

Order constraints (if not using resource groups)

# pcs constraint order [action] resource_id1 then [action] resource_id2

Location constraints

# pcs constraint location rsc prefers node[=score]

# pcs constraint location rsc avoids node[=score]

Colocation constraints

# pcs constraint colocation add source_resource with target_resource [score]

Modify a resource option # pcs resource update resource_id resource_options

](https://image.slidesharecdn.com/klugpacemakertheopensourcehigh-availability1-161205080102/85/Klug-pacemaker-the-opensource-high-availability_1-0_f-49-320.jpg)
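Applied to the PostgreSQL Master/Slave cluster shown earlier, the colocation and order rows of the table could tie the floating IP to the master instance. A sketch, assuming pcs 0.9 syntax and the resource names `vip` and `pgsql-ha` from that `pcs status` output; `INFINITY` is an example score, and the same hypothetical `DRY_RUN` wrapper prints the commands instead of running them.

```shell
# Hypothetical wrapper: print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Keep VIP $1 on the node where Master/Slave set $2 is promoted
# (pcs 0.9 syntax; INFINITY is an example score).
pin_vip_to_master() {
    local vip=$1 ms=$2
    # Colocation: the VIP may run only with the master instance.
    run pcs constraint colocation add "$vip" with master "$ms" INFINITY
    # Order: promote the master before starting the VIP.
    run pcs constraint order promote "$ms" then start "$vip"
    # List the resulting constraints.
    run pcs constraint show
}
```

The `master` keyword restricts the colocation to the promoted instance of the set, and `promote ... then start` keeps the IP from coming up before promotion has completed.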
