Lecture 2: Scan Converting Lines

- 1. Computer Graphics: Line Drawing Algorithms Sudipta Mondal [email protected]

- 2. Contents: Graphics hardware; the problem of scan conversion; considerations; line equations; scan converting algorithms (a very simple solution, the DDA algorithm); conclusion.

- 3. Graphics Hardware: It’s worth taking a little look at how graphics hardware works before we go any further. How do things end up on the screen? (Images taken from Hearn & Baker, “Computer Graphics, C Version”.)

- 4. Architecture Of A Graphics System: [Figure: block diagram with the components CPU, Display Processor, System Memory, System Bus, Display Processor Memory, Frame Buffer, Video Controller and Monitor]

- 5. Output Devices: There is a range of output devices currently available: printers/plotters, cathode ray tube displays, plasma displays, LCD displays, 3 dimensional viewers, and virtual/augmented reality headsets. We will look briefly at some of the more common display devices.

- 6. Basic Cathode Ray Tube (CRT): Fire an electron beam at a phosphor-coated screen.

- 7. Raster Scan Systems: Draw one line at a time.

- 8. Colour CRT: An electron gun for each colour (red, green and blue).

- 9. Plasma-Panel Displays: Applying voltages to crossing pairs of conductors causes the gas (usually a mixture including neon) to break down into a glowing plasma of electrons and ions.

- 10. Liquid Crystal Displays: Light passing through the liquid crystal is twisted so it gets through the polarizer. A voltage is applied using the crisscrossing conductors to stop the twisting and turn pixels off.

- 11. The Problem Of Scan Conversion: A line segment in a scene is defined by the coordinate positions of the line end-points. [Figure: a line segment from (2, 2) to (7, 5) drawn on x and y axes]

- 12. The Problem (cont…): But what happens when we try to draw this on a pixel-based display? How do we choose which pixels to turn on?

- 13. Considerations: Two things to keep in mind. First, the line has to look good, which means avoiding jaggies. Second, it has to be lightning fast! How many lines need to be drawn in a typical scene? This is going to come back to bite us again and again.

- 14. Line Equations: Let’s quickly review the equations involved in drawing lines. The slope-intercept line equation is $y = m \cdot x + b$, where $m = \frac{y_{end} - y_0}{x_{end} - x_0}$ and $b = y_0 - m \cdot x_0$. [Figure: a line from $(x_0, y_0)$ to $(x_{end}, y_{end})$ on x and y axes]
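
The two formulas above can be sketched in a few lines of Python. The function name `slope_intercept` is purely illustrative, and exact fractions are used here (an assumption made for readability, not something the slides prescribe) so the results match the hand calculations later in the lecture.

```python
from fractions import Fraction

def slope_intercept(x0, y0, x_end, y_end):
    """Return (m, b) for the line through (x0, y0) and (x_end, y_end)."""
    if x_end == x0:
        # A vertical line has no finite slope, so y = m*x + b does not apply.
        raise ValueError("vertical line: slope is undefined")
    m = Fraction(y_end - y0, x_end - x0)  # m = (y_end - y0) / (x_end - x0)
    b = y0 - m * x0                       # b = y0 - m * x0
    return m, b

m, b = slope_intercept(2, 2, 7, 5)
print(m, b)  # 3/5 4/5
```

Vertical lines are rejected explicitly because the slope-intercept form cannot represent them.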

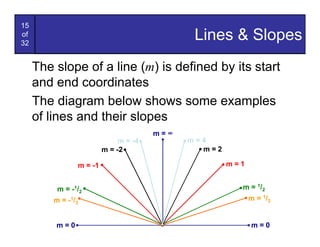

- 15. Lines & Slopes: The slope of a line (m) is defined by its start and end coordinates. The diagram shows some examples of lines and their slopes. [Figure: a fan of lines through one point with slopes $m = 0$, $\pm 1/3$, $\pm 1/2$, $\pm 1$, $\pm 2$, $\pm 4$ and $m = \infty$]

- 16. A Very Simple Solution: We could simply work out the corresponding y coordinate for each unit x coordinate. Let’s consider the following example. [Figure: a line from (2, 2) to (7, 5) on x and y axes]

- 17. A Very Simple Solution (cont…): [Figure: empty pixel grid, x from 0 to 7, y from 0 to 5]

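- 18. A Very Simple Solution (cont…): First work out m and b: $m = \frac{5 - 2}{7 - 2} = \frac{3}{5}$ and $b = 2 - \frac{3}{5} \cdot 2 = \frac{4}{5}$. Now for each x value work out the y value: $y(3) = \frac{3}{5} \cdot 3 + \frac{4}{5} = 2\tfrac{3}{5}$, $y(4) = \frac{3}{5} \cdot 4 + \frac{4}{5} = 3\tfrac{1}{5}$, $y(5) = \frac{3}{5} \cdot 5 + \frac{4}{5} = 3\tfrac{4}{5}$, $y(6) = \frac{3}{5} \cdot 6 + \frac{4}{5} = 4\tfrac{2}{5}$. [Figure: the line from (2, 2) to (7, 5)]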

- 19. A Very Simple Solution (cont…): Now just round off the results and turn on these pixels to draw our line: $y(3) = 2\tfrac{3}{5} \approx 3$, $y(4) = 3\tfrac{1}{5} \approx 3$, $y(5) = 3\tfrac{4}{5} \approx 4$, $y(6) = 4\tfrac{2}{5} \approx 4$. [Figure: pixel grid, x from 0 to 8, y from 0 to 7, with the rounded pixels turned on]
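
The very simple solution can be sketched as follows. This is a minimal illustration, not code from the lecture: the name `naive_line` is hypothetical, it assumes integer end-points with `x0 < x_end`, and it rounds with `floor(y + 0.5)` because Python's built-in `round` rounds halves to the nearest even number.

```python
import math

def naive_line(x0, y0, x_end, y_end):
    """Evaluate y = m*x + b at every unit x and round to the nearest pixel row."""
    m = (y_end - y0) / (x_end - x0)
    b = y0 - m * x0
    pixels = []
    for x in range(x0, x_end + 1):
        y = m * x + b                            # one multiplication per pixel
        pixels.append((x, math.floor(y + 0.5)))  # round half up
    return pixels

print(naive_line(2, 2, 7, 5))
# [(2, 2), (3, 3), (4, 3), (5, 4), (6, 4), (7, 5)]
```

The output agrees with the rounded values worked out by hand for the line from (2, 2) to (7, 5).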

- 20. A Very Simple Solution (cont…): However, this approach is just way too slow. In particular look out for two costs: the equation $y = m \cdot x + b$ requires the multiplication of m by x for every pixel, and the resulting y coordinates must then be rounded off. We need a faster solution.

- 21. A Quick Note About Slopes: In the previous example we chose to solve the slope-intercept line equation to give us the y coordinate for each unit x coordinate. What if we had done it the other way around? This gives us $x = \frac{y - b}{m}$, where $m = \frac{y_{end} - y_0}{x_{end} - x_0}$ and $b = y_0 - m \cdot x_0$.

- 22. A Quick Note About Slopes (cont…): Leaving out the details this gives us $x(3) = 3\tfrac{2}{3} \approx 4$ and $x(4) = 5\tfrac{1}{3} \approx 5$. We can see easily that this line doesn’t look very good! We choose which way to work out the line pixels based on the slope of the line. [Figure: pixel grid, x from 0 to 8, y from 0 to 7, showing the resulting line with gaps]
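
To see why the result looks poor, here is a small sketch that steps along y instead of x for the same shallow line. The function name `line_by_unit_y` is hypothetical, and the code assumes integer end-points, `y0 < y_end` and a non-zero, finite slope.

```python
import math

def line_by_unit_y(x0, y0, x_end, y_end):
    """Evaluate x = (y - b) / m at every unit y and round to the nearest column."""
    m = (y_end - y0) / (x_end - x0)
    b = y0 - m * x0
    pixels = []
    for y in range(y0, y_end + 1):
        x = (y - b) / m
        pixels.append((math.floor(x + 0.5), y))  # round half up
    return pixels

print(line_by_unit_y(2, 2, 7, 5))
# [(2, 2), (4, 3), (5, 4), (7, 5)]
```

Only four pixels are produced for a line that spans six columns, so columns 3 and 6 are left empty and the line appears broken.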

- 23. A Quick Note About Slopes (cont…): If the slope of a line is between -1 and 1 then we work out the y coordinates for a line based on its unit x coordinates. Otherwise we do the opposite: x coordinates are computed based on unit y coordinates. [Figure: the fan of lines with slopes $m = 0$, $\pm 1/3$, $\pm 1/2$, $\pm 1$, $\pm 2$, $\pm 4$ and $m = \infty$]
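
The rule above can be expressed without computing the slope at all, which also sidesteps division by zero for vertical lines. The helper name `driving_axis` is an illustrative assumption, as is the choice to treat a slope of exactly 1 or -1 as the unit-x case.

```python
def driving_axis(x0, y0, x_end, y_end):
    """Return "x" when the slope lies in [-1, 1], otherwise "y"."""
    dx = abs(x_end - x0)
    dy = abs(y_end - y0)
    # |m| <= 1 is the same condition as |dy| <= |dx|, with no division needed.
    return "x" if dy <= dx else "y"

print(driving_axis(2, 2, 7, 5))  # x
print(driving_axis(3, 2, 2, 7))  # y
```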

- 24. A Quick Note About Slopes (cont…): [Figure: pixel grid, x from 0 to 7, y from 0 to 5]

- 25. The DDA Algorithm: The digital differential analyzer (DDA) algorithm takes an incremental approach in order to speed up scan conversion: simply calculate $y_{k+1}$ based on $y_k$. The original differential analyzer was a physical machine developed by Vannevar Bush at MIT in the 1930s in order to solve ordinary differential equations.

- 26. The DDA Algorithm (cont…): Consider the list of points that we determined for the line in our previous example: $(2, 2)$, $(3, 2\tfrac{3}{5})$, $(4, 3\tfrac{1}{5})$, $(5, 3\tfrac{4}{5})$, $(6, 4\tfrac{2}{5})$, $(7, 5)$. Notice that as the x coordinates go up by one, the y coordinates simply go up by the slope of the line. This is the key insight in the DDA algorithm.
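
The observation can be checked with a short sketch that starts at (2, 2) and repeatedly adds the slope. Exact fractions are used here only so that the values match the list above digit for digit; that choice is an assumption for illustration, not part of the algorithm.

```python
from fractions import Fraction

m = Fraction(3, 5)   # slope of the line from (2, 2) to (7, 5)
y = Fraction(2)      # y coordinate of the first point
points = []
for x in range(2, 8):
    points.append((x, y))
    y += m           # one addition replaces the multiplication m * x

print([(x, str(y)) for x, y in points])
# [(2, '2'), (3, '13/5'), (4, '16/5'), (5, '19/5'), (6, '22/5'), (7, '5')]
```

Here 13/5, 16/5, 19/5 and 22/5 are the improper-fraction forms of the mixed numbers in the list.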

- 27. The DDA Algorithm (cont…): When the slope of the line is between -1 and 1, begin at the first point in the line and, by incrementing the x coordinate by 1, calculate the corresponding y coordinates as follows: $y_{k+1} = y_k + m$. When the slope is outside these limits, increment the y coordinate by 1 and calculate the corresponding x coordinates as follows: $x_{k+1} = x_k + \frac{1}{m}$.

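- 28. The DDA Algorithm (cont…): Again, the values calculated by the equations used by the DDA algorithm must be rounded to match pixel values. For slopes between -1 and 1, the point $(x_k, y_k)$ is plotted at $(x_k, \mathrm{round}(y_k))$ and the next point $(x_k + 1, y_k + m)$ at $(x_k + 1, \mathrm{round}(y_k + m))$. For steeper slopes, the point $(x_k, y_k)$ is plotted at $(\mathrm{round}(x_k), y_k)$ and the next point $(x_k + \frac{1}{m}, y_k + 1)$ at $(\mathrm{round}(x_k + \frac{1}{m}), y_k + 1)$.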
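
Putting the increment and rounding steps together, a DDA line routine might look like the sketch below. It follows the common textbook formulation in which the number of steps is the larger of |dx| and |dy|, so the driving axis always moves by exactly 1 (or -1) and the other axis by m or 1/m. The name `dda_line` and the `floor(v + 0.5)` rounding are assumptions for illustration, and integer end-points are assumed.

```python
import math

def dda_line(x0, y0, x_end, y_end):
    """Return the pixels of a line using incremental (DDA) stepping."""
    dx = x_end - x0
    dy = y_end - y0
    steps = max(abs(dx), abs(dy))  # unit steps along the driving axis
    if steps == 0:
        return [(x0, y0)]          # both end-points coincide
    x_inc = dx / steps             # +/-1 when |m| <= 1, otherwise 1/m (signed)
    y_inc = dy / steps             # m (signed) when |m| <= 1, otherwise +/-1
    x, y = float(x0), float(y0)
    pixels = []
    for _ in range(steps + 1):
        # Round both coordinates to the nearest pixel (round half up).
        pixels.append((math.floor(x + 0.5), math.floor(y + 0.5)))
        x += x_inc                 # only additions inside the loop
        y += y_inc
    return pixels

print(dda_line(2, 2, 7, 5))
# [(2, 2), (3, 3), (4, 3), (5, 4), (6, 4), (7, 5)]
```

The two divisions happen once, before the loop; each pixel then costs two additions and two roundings.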

- 29. DDA Algorithm Example: Let’s try out the following examples. [Figure: two lines on x and y axes, the first from (2, 2) to (7, 5) and the second from (3, 2) to (2, 7)]

- 30. DDA Algorithm Example (cont…): [Figure: empty pixel grid, x from 0 to 7, y from 2 to 7]
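
As a hedged worked trace of the second example, assume the line is drawn starting from (3, 2) towards (2, 7) and that halves round up. The slope is $m = \frac{7 - 2}{2 - 3} = -5$, which is outside the range -1 to 1, so y is incremented by 1 and x changes by $\frac{1}{m} = -\frac{1}{5}$ at each step:

```latex
\begin{array}{c|c|c|c}
k & y_k & x_k = x_{k-1} + \tfrac{1}{m} & \mathrm{round}(x_k) \\ \hline
0 & 2 & 3.0 & 3 \\
1 & 3 & 2.8 & 3 \\
2 & 4 & 2.6 & 3 \\
3 & 5 & 2.4 & 2 \\
4 & 6 & 2.2 & 2 \\
5 & 7 & 2.0 & 2
\end{array}
```

Under these assumptions the pixels turned on are (3, 2), (3, 3), (3, 4), (2, 5), (2, 6) and (2, 7).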

- 31. The DDA Algorithm Summary: The DDA algorithm is much faster than our previous attempt. In particular, there are no longer any multiplications involved. However, there are still two big issues: accumulation of round-off errors can make the pixelated line drift away from what was intended, and the rounding operations and floating point arithmetic involved are time consuming.
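
The round-off issue can be illustrated with a small experiment that compares a y value built up by repeated addition with the same value computed by one multiplication. The function name `accumulated_drift` is hypothetical. With double-precision floats the difference is tiny over a few steps; this sketch only shows that it is non-zero and says nothing about how large the drift gets on long lines or lower-precision hardware.

```python
def accumulated_drift(m, steps):
    """Return |(m added `steps` times) - (m * steps)| in floating point."""
    y_incremental = 0.0
    for _ in range(steps):
        y_incremental += m      # DDA-style accumulation
    y_direct = m * steps        # single multiplication for comparison
    return abs(y_incremental - y_direct)

print(accumulated_drift(0.1, 10) > 0.0)   # True: 0.1 is not exactly representable
print(accumulated_drift(0.5, 10) == 0.0)  # True: 0.5 is exactly representable
```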

- 32. Conclusion: In this lecture we took a very brief look at how graphics hardware works. Drawing lines to pixel-based displays is time consuming, so we need good ways to do it. The DDA algorithm is pretty good, but we can do better. Next time we’ll look at the Bresenham line algorithm and how to draw circles, fill polygons and perform anti-aliasing.