Lecture 10

- 2. Supervised Learning:
  - Classification: predict a discrete value (label) associated with a feature vector.
  - Regression: predict a real number associated with a feature vector, e.g., use linear regression to fit a curve to data.

- 3. Example:

- 5. Using Distance Matrix for Classification: The simplest approach is probably nearest neighbors.
  - Remember the training data.
  - When predicting the label of a new example:
    - Find the nearest example in the training data.
    - Predict the label associated with that example.
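The nearest-neighbor procedure can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's implementation: the function name `nearest_neighbor_label`, the toy data, and the choice of Euclidean distance are all assumptions, since the slides do not fix a distance metric.

```python
import math

def nearest_neighbor_label(training, query):
    """Return the label of the training example closest to `query`.

    `training` is a list of (feature_vector, label) pairs.
    Euclidean distance is an assumption; any distance measure could be used.
    """
    best_label = None
    best_dist = math.inf
    for features, label in training:
        dist = math.dist(features, query)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_dist = dist
            best_label = label
    return best_label

# Hypothetical toy data: two numeric features per example.
training = [
    ((1.0, 1.0), "A"),
    ((1.5, 2.0), "A"),
    ((5.0, 5.0), "B"),
    ((6.0, 4.5), "B"),
]

print(nearest_neighbor_label(training, (1.2, 1.4)))  # A
print(nearest_neighbor_label(training, (5.5, 5.0)))  # B
```

There is no training step here: "learning" only stores the examples, and each prediction scans all of them.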

- 9. Advantages and Disadvantages of KNN:
  - Advantages:
    - Learning is fast; there is no explicit training.
    - No theory required.
    - Easy to explain the method and the results.
  - Disadvantages:
    - Memory intensive, and predictions can take a long time.
    - No model to shed light on the process that generated the data.

- 10. Naïve Bayes Text Classification:
  - Why?
    - Learn which news articles are of interest.
    - Learn to classify web pages by category.
  - Basic intuition:
    - A simple (naïve) classification method based on Bayes' rule.
    - Relies on a very simple representation of documents: the bag of words.

- 11. Bag of words representation:
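A bag of words keeps how often each word occurs in a document and discards word order. The sketch below illustrates the idea on the test sentence from the movie-review example later in these notes. The helper name `bag_of_words` is hypothetical, and lowercasing plus whitespace splitting are simplifying assumptions.

```python
from collections import Counter

def bag_of_words(text):
    """Map a document to word counts, ignoring word order.

    Lowercasing and whitespace splitting are simplifying assumptions;
    a real tokenizer would also handle punctuation.
    """
    return Counter(text.lower().split())

bag = bag_of_words("I hated the poor acting")
reordered = bag_of_words("the acting I hated poor")

print(bag["hated"])      # 1
print(bag == reordered)  # True: word order is discarded
```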

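- 12. Naïve Bayes Text Classification:
  - Bayes' rule, for a document $d$ and class $c$: $P(c \mid d) = \dfrac{P(d \mid c)\,P(c)}{P(d)}$
  - Goal of the classifier: choose the most probable class for the document, $c^{*} = \arg\max_{c} P(c \mid d) = \arg\max_{c} P(d \mid c)\,P(c)$, since $P(d)$ is the same for every class.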

- 13. Learn to Classify Text using Naïve Bayes:
  - Target concept "Interesting?": Document → {+, -}
  - Represent each document by a vector of words, with one attribute per word position in the document.
  - Learning: use training examples to estimate P(+), P(-), P(doc|+), P(doc|-).
  - Naïve Bayes conditional independence assumption: $P(doc \mid v_j) = \prod_{i=1}^{\text{length}(doc)} P(a_i = w_k \mid v_j)$, where $P(a_i = w_k \mid v_j)$ is the probability that the word in position $i$ is $w_k$, given class $v_j$.

- 14. An example: Movie Review
  - Dictionary: 10 unique words <I, loved, the, movie, hated, a, great, good, poor, acting>

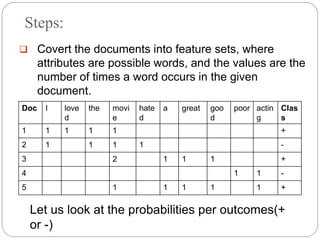

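- 15. Steps: Convert the documents into feature sets, where the attributes are the possible words and the values are the number of times each word occurs in the given document.

  | Doc | I | loved | the | movie | hated | a | great | good | poor | acting | Class |
  |-----|---|-------|-----|-------|-------|---|-------|------|------|--------|-------|
  | 1   | 1 | 1     | 1   | 1     |       |   |       |      |      |        | +     |
  | 2   | 1 |       | 1   | 1     | 1     |   |       |      |      |        | -     |
  | 3   |   |       |     | 2     |       | 1 | 1     | 1    |      |        | +     |
  | 4   |   |       |     |       |       |   |       |      | 1    | 1      | -     |
  | 5   |   |       |     | 1     |       | 1 | 1     | 1    |      | 1      | +     |

  Next, look at the probabilities per outcome (+ or -).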

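- 16. Naïve Bayes: documents with positive outcomes
  - P(+) = 3/5 = 0.6
  - Compute: P(I|+), P(loved|+), P(the|+), P(movie|+), P(a|+), P(great|+), P(good|+), P(acting|+)
  - Let $n$ be the total number of words in the (+) documents (here $n = 14$), and $n_k$ the number of times word $k$ occurs in the (+) documents.
  - Let $P(w_k \mid +) = \dfrac{n_k + 1}{n + |\text{vocabulary}|}$, with $|\text{vocabulary}| = 10$.

  | Doc | I | loved | the | movie | hated | a | great | good | poor | acting | Class |
  |-----|---|-------|-----|-------|-------|---|-------|------|------|--------|-------|
  | 1   | 1 | 1     | 1   | 1     |       |   |       |      |      |        | +     |
  | 3   |   |       |     | 2     |       | 1 | 1     | 1    |      |        | +     |
  | 5   |   |       |     | 1     |       | 1 | 1     | 1    |      | 1      | +     |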
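The add-one (Laplace) estimate for the positive class can be checked with a short sketch. The per-word totals below are summed over the positive documents (1, 3, 5). The names `positive_counts` and `smoothed_probs` are hypothetical and chosen only for illustration.

```python
# Word totals over the positive documents (1, 3, 5); words that never
# occur in a positive document get a count of 0.
positive_counts = {
    "I": 1, "loved": 1, "the": 1, "movie": 4, "hated": 0,
    "a": 2, "great": 2, "good": 2, "poor": 0, "acting": 1,
}

def smoothed_probs(counts):
    """Add-one estimate: P(w|class) = (n_k + 1) / (n + |vocabulary|)."""
    n = sum(counts.values())   # total words in the class (14 here)
    vocab_size = len(counts)   # 10 dictionary words
    return {w: (n_k + 1) / (n + vocab_size) for w, n_k in counts.items()}

p_pos = smoothed_probs(positive_counts)
print(round(p_pos["movie"], 4))  # 0.2083
print(round(p_pos["poor"], 4))   # 0.0417
```

Adding 1 to every count keeps unseen words such as "poor" from getting probability zero, which would otherwise force the whole product for that class to zero.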

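- 17. Naïve Bayes: smoothed word probabilities for the positive class

  | Word   | Probability given + |
  |--------|---------------------|
  | I      | 0.0833              |
  | loved  | 0.0833              |
  | the    | 0.0833              |
  | movie  | 0.2083              |
  | hated  | 0.0417              |
  | a      | 0.1250              |
  | great  | 0.1250              |
  | good   | 0.1250              |
  | poor   | 0.0417              |
  | acting | 0.0833              |

  Now, the documents with the negative class, where P(-) = 2/5 = 0.4 and $n = 6$:

  | Doc | I | loved | the | movie | hated | a | great | good | poor | acting | Class |
  |-----|---|-------|-----|-------|-------|---|-------|------|------|--------|-------|
  | 2   | 1 |       | 1   | 1     | 1     |   |       |      |      |        | -     |
  | 4   |   |       |     |       |       |   |       |      | 1    | 1      | -     |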

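- 18. Smoothed word probabilities for the negative class:

  | Word   | Probability given - |
  |--------|---------------------|
  | I      | 0.1250              |
  | loved  | 0.0625              |
  | the    | 0.1250              |
  | movie  | 0.1250              |
  | hated  | 0.1250              |
  | a      | 0.0625              |
  | great  | 0.0625              |
  | good   | 0.0625              |
  | poor   | 0.1250              |
  | acting | 0.1250              |

  Now classify a new sentence with respect to the training samples. Test document: "I hated the poor acting".
  - If $v_j = +$: P(+) × P(I|+) × P(hated|+) × P(the|+) × P(poor|+) × P(acting|+) = 6.03 × 10^(-7)
  - If $v_j = -$: P(-) × P(I|-) × P(hated|-) × P(the|-) × P(poor|-) × P(acting|-) = 1.22 × 10^(-5)

  The score for the negative class is larger, so the test document is classified as negative (-).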
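The final comparison can be reproduced with a short sketch that uses the priors and smoothed word probabilities from the example, written as exact fractions (for instance 2/24 ≈ 0.0833 and 2/16 = 0.1250). The names `prior`, `cond`, `score`, and `log_score` are hypothetical. The log-space variant is not part of the slides; it is shown only as a common way to avoid numerical underflow on longer documents.

```python
import math

# Priors and smoothed word probabilities from the example, restricted to
# the words of the test document.
prior = {"+": 3 / 5, "-": 2 / 5}
cond = {
    "+": {"I": 2 / 24, "hated": 1 / 24, "the": 2 / 24, "poor": 1 / 24, "acting": 2 / 24},
    "-": {"I": 2 / 16, "hated": 2 / 16, "the": 2 / 16, "poor": 2 / 16, "acting": 2 / 16},
}

def score(words, label):
    """Unnormalized posterior: P(label) times the product of P(word|label)."""
    result = prior[label]
    for w in words:
        result *= cond[label][w]
    return result

def log_score(words, label):
    """Same quantity in log space (an addition to the slides, not their method)."""
    return math.log(prior[label]) + sum(math.log(cond[label][w]) for w in words)

test_doc = "I hated the poor acting".split()
scores = {label: score(test_doc, label) for label in prior}
predicted = max(scores, key=scores.get)

print(scores)     # roughly 6.03e-07 for "+", 1.22e-05 for "-"
print(predicted)  # -
```

Both scoring functions rank the classes the same way, because the logarithm is monotonic.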