Lecture - 10 Transformer Model, Motivation to Transformers, Principles, and Design of Transformer model


Learn about the limitations of earlier deep sequence models such as RNNs, GRUs, and LSTMs, and the evolution of the attention model into the Transformer model introduced in the paper "Attention Is All You Need". This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the first half of 2024.

Recommended

[Paper Reading] Attention is All You Need

Daiki Tanaka: The document summarizes the "Attention Is All You Need" paper, which introduced the Transformer model for natural language processing. The Transformer uses attention mechanisms rather than recurrent or convolutional layers, allowing for more parallelization. It achieved state-of-the-art results in machine translation tasks using techniques like multi-head attention, positional encoding, and beam search decoding. The paper demonstrated the Transformer's ability to draw global dependencies between input and output with a constant number of sequential operations.

240318_JW_labseminar[Attention Is All You Need].pptx

thanhdowork: This document describes the Transformer, a novel neural network architecture based solely on attention mechanisms rather than recurrent or convolutional layers. The Transformer uses stacked encoder and decoder blocks with multi-head self-attention and feed-forward layers to achieve state-of-the-art results in machine translation tasks. Key aspects of the Transformer include multi-head attention to jointly attend to information from different representation subspaces, positional encoding to embed positional information, and an attention mask to prevent positions from attending to subsequent positions. The Transformer achieves superior performance compared to RNN-based models on translation benchmarks, with fewer parameters and computation that can be fully parallelized.

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

gohyunwoong: Review material on the state-of-the-art NLP models Transformer and BERT. It also reviews many other models, including Word2Vec, ELMo, and GPT.

Reference 1: Kim Dong Ha (https://www.youtube.com/watch?v=xhY7m8QVKjo)

Reference 2: Raimi Karim (https://towardsdatascience.com/attn-illustrated-attention-5ec4ad276ee3)

05-transformers.pdf

ChaoYang81: The document discusses Transformers and sequence-to-sequence learning. It provides an overview of:

- The encoder-decoder architecture of sequence-to-sequence models including self-attention.

- Key components of the Transformer model including multi-head self-attention, positional encodings, and residual connections.

- Training procedures like label smoothing and techniques used to improve Transformer performance like adding feedforward layers and stacking multiple Transformer blocks.

Demystifying NLP Transformers: Understanding the Power and Architecture behin...

NILESH VERMA: In this SlideShare presentation, we delve into the intricate world of NLP Transformers, exploring their underlying architecture and uncovering their immense power in Natural Language Processing (NLP). Join us as we demystify the complexities and provide a comprehensive overview of how Transformers revolutionize tasks such as machine translation, sentiment analysis, question answering, and more. Gain valuable insights into the transformer model, attention mechanisms, self-attention, and the transformer encoder-decoder structure. Whether you're an NLP enthusiast or a beginner, this presentation will equip you with a solid foundation to comprehend and harness the potential of NLP Transformers.

From_seq2seq_to_BERT

Huali Zhao: A paper of observations on the history of how encoder-decoder frameworks, along with the attention family, have evolved.

Language Model Basics - Components of a Generic Attention Mechanism

cniclsh1:

- We want to give you free AWS credits! Make sure to do the “AWS Quiz” on Canvas by next Friday to give us your account number.

- Homework 1 is coming out next Tuesday.

- Piazza is now up. Find the link on Canvas.

attention is all you need.pdf attention is all you need.pdfattention is all y...

Amit Ranjan

240122_Attention Is All You Need (2017 NIPS)2.pptx

thanhdowork: This document discusses the Transformer model for natural language processing. It describes how the Transformer is divided into an encoder and decoder. The encoder takes a sentence as input and generates a context vector representing the sentence. The decoder takes the context vector and generates an output sentence. Self-attention allows the model to learn relationships between all tokens in a sentence in parallel rather than sequentially. Key details of the encoder, encoder blocks, and scaled dot product attention calculation are provided.

15_NEW-2020-ATTENTION-ENC-DEC-TRANSFORMERS-Lect15.pptx

NibrasulIslam: The document summarizes key topics from a lecture on computational linguistics, including encoder-decoder networks, attention mechanisms, and transformers. It discusses how encoder-decoder networks can be used for machine translation by generating a target sequence conditioned on the encoded source sequence. It also explains how attention allows the decoder to attend to different parts of the encoded source at each time step. Finally, it provides a high-level overview of transformers, noting that they replace recurrence in the encoder-decoder architecture with self-attention, which enables parallelization.

Natural Language Processing Advancements By Deep Learning: A Survey

Rimzim Thube: This document provides an overview of advancements in natural language processing through deep learning techniques. It describes several deep learning architectures used for NLP tasks, including multi-layer perceptrons, convolutional neural networks, recurrent neural networks, auto-encoders, and generative adversarial networks. It also summarizes applications of these techniques to common NLP problems such as part-of-speech tagging, parsing, named entity recognition, sentiment analysis, machine translation, question answering, and text summarization.

Deep Learning to Text

Jian-Kai Wang: This document discusses the evolution of deep learning models for natural language processing tasks from RNNs to Transformers. It provides an overview of sequence-to-sequence models, attention mechanisms, and how Transformer models use multi-head attention and feedforward networks. The document also covers BERT and how it represents language by pre-training bidirectional representations from unlabeled text.

Attention is all you need (UPC Reading Group 2018, by Santi Pascual)

Universitat Politècnica de Catalunya: Slides reviewing the following paper.

Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. "Attention is all you need." In Advances in Neural Information Processing Systems, pp. 6000-6010. 2017.

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder and decoder configuration. The best performing such models also connect the encoder and decoder through an attention mechanism. We propose a novel, simple network architecture based solely on an attention mechanism, dispensing with recurrence and convolutions entirely. Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train. Our single model with 165 million parameters achieves 27.5 BLEU on English-to-German translation, improving over the existing best ensemble result by over 1 BLEU. On English-to-French translation, we outperform the previous single state-of-the-art model by 0.7 BLEU, achieving a BLEU score of 41.1.

Transformers and BERT with SageMaker

Suman Debnath: The document provides an overview of Transformers and BERT models for natural language processing tasks. It explains that Transformers use self-attention mechanisms to overcome limitations of RNNs in capturing long-term dependencies. The encoder-decoder architecture is described, with the encoder generating representations and the decoder generating target sequences. Key aspects like multi-head attention, positional encoding, and pre-training are summarized. The document details how BERT is pretrained using masked language modeling and next sentence prediction to learn contextual representations. It shows how BERT can then be fine-tuned for downstream tasks like sentiment analysis and named entity recognition.

Transformer Zoo

Grigory Sapunov: A talk on Transformers at GDG DevParty, 27.06.2020.

Link to Google Slides version: https://docs.google.com/presentation/d/1N7ayCRqgsFO7TqSjN4OWW-dMOQPT5DZcHXsZvw8-6FU/edit?usp=sharing

Transformer_tutorial.pdf

fikki11: This document provides a tutorial and survey on attention mechanisms, transformers, BERT, and GPT. It first explains attention in sequence-to-sequence models, including the basic sequence-to-sequence model without attention and with attention. It then explains self-attention and how it allows for computing attention over sequences. The document goes on to describe transformers, which are composed solely of attention modules without recurrence. It explains the encoder and decoder components of transformers, including positional encoding, multi-head attention, and masked multi-head attention. Finally, it introduces BERT and GPT, which are stacks of transformer encoders and decoders, respectively, and explains their characteristics and workings.

Attention is All You Need (Transformer)

Jeong-Gwan Lee: The document summarizes the Transformer neural network model proposed in the paper "Attention is All You Need". The Transformer uses self-attention mechanisms rather than recurrent or convolutional layers. It achieves state-of-the-art results in machine translation by allowing the model to jointly attend to information from different representation subspaces. The key components of the Transformer include multi-head self-attention layers in the encoder and masked multi-head self-attention layers in the decoder. Self-attention allows the model to learn long-range dependencies in sequence data more effectively than RNNs.

NLP using transformers

Arvind Devaraj: This document discusses neural network models for natural language processing tasks like machine translation. It describes how recurrent neural networks (RNNs) were used initially but had limitations in capturing long-term dependencies and parallelization. The encoder-decoder framework addressed some issues but still lost context. Attention mechanisms allowed focusing on relevant parts of the input and using all encoded states. Transformers replaced RNNs entirely with self-attention and encoder-decoder attention, allowing parallelization while generating a richer representation capturing word relationships. This revolutionized NLP tasks like machine translation.

encoder-decoder for large language model

ShrideviS7: An **encoder** transforms input data into a compressed, abstract representation, capturing essential features. A **decoder** then reconstructs the original or target data from this encoded representation, often used in machine translation, image generation, and sequence-to-sequence tasks.

encoder and decoder for language modelss

ShrideviS7: An **encoder** transforms input data into a compressed, abstract representation, capturing essential features. A **decoder** then reconstructs the original or target data from this encoded representation, often used in machine translation, image generation, and sequence-to-sequence tasks.

DotNet 2019 | Pablo Doval - Recurrent Neural Networks with TF2.0

Plain Concepts: This document provides an overview of recurrent neural networks and their applications. It discusses how RNNs can remember previous inputs through feedback loops and internal states. Long short-term memory networks are presented as an improvement over standard RNNs in dealing with long-term dependencies. The document also introduces word embeddings to map words to vectors, and transformers which provide an alternative to RNNs using self-attention. Code examples of RNNs in TensorFlow 2.0 are also shown.

NLP Project: Machine Comprehension Using Attention-Based LSTM Encoder-Decoder...

Eugene Nho: Machine comprehension remains a challenging open area of research. While many question answering models have been explored for existing datasets, little work has been done with the newly released MS MARCO dataset, which mirrors reality much more closely and poses many unique challenges. We explore an end-to-end neural architecture with attention mechanisms for comprehending relevant information and generating text answers for MS MARCO.

Monotonic Multihead Attention review

June-Woo Kim: Review of Ma, Xutai, et al. "Monotonic Multihead Attention." International Conference on Learning Representations, 2020.

How to build a GPT model.pdf

StephenAmell4: GPT stands for Generative Pre-trained Transformer, the first generalized language model in NLP. Previously, language models were only designed for single tasks like text generation, summarization or classification.

Improving neural question generation using answer separation

NAVER Engineering: Neural question generation (NQG) is the task of generating a question from a given passage with deep neural networks. Previous NQG models suffer from a problem that a significant proportion of the generated questions include words in the question target, resulting in the generation of unintended questions. In this paper, we propose answer-separated seq2seq, which better utilizes the information from both the passage and the target answer. By replacing the target answer in the original passage with a special token, our model learns to identify which interrogative word should be used. We also propose a new module termed keyword-net, which helps the model better capture the key information in the target answer and generate an appropriate question. Experimental results demonstrate that our answer separation method significantly reduces the number of improper questions which include answers. Consequently, our model significantly outperforms previous state-of-the-art NQG models.

Lecture 11 - Advance Learning Techniques

Maninda Edirisooriya: Learn End-to-End Learning, Multi-Task Learning, Transfer Learning and Meta Learning. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the first half of 2024.

Lecture 9 - Deep Sequence Models, Learn Recurrent Neural Networks (RNN), GRU ...

Maninda Edirisooriya: Learn Recurrent Neural Networks (RNN), GRU and LSTM networks and their architecture. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the first half of 2024.

More from Maninda Edirisooriya (20)

Extra Lecture - Support Vector Machines (SVM), a lecture in subject module St...

Maninda Edirisooriya: Support Vector Machines are one of the main tools in the classical Machine Learning toolbox. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the second half of 2023.

Lecture 11 - KNN and Clustering, a lecture in subject module Statistical & Ma...

Maninda Edirisooriya: This lesson covers the supervised ML technique K-Nearest Neighbor and unsupervised clustering techniques. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the second half of 2023.

Lecture 10 - Model Testing and Evaluation, a lecture in subject module Statis...

Maninda Edirisooriya: Model Testing and Evaluation is a lesson where you learn how to train variations of ML models and evaluate them to select the best one. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the second half of 2023.

Lecture 9 - Decision Trees and Ensemble Methods, a lecture in subject module ...

Maninda Edirisooriya: Decision Trees and Ensemble Methods are a different class of Machine Learning algorithms. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the second half of 2023.

Lecture 8 - Feature Engineering and Optimization, a lecture in subject module...

Lecture 8 - Feature Engineering and Optimization, a lecture in subject module...Maninda Edirisooriya This lesson covers the core data science related content required for applying ML. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Lecture 7 - Bias, Variance and Regularization, a lecture in subject module St...

Lecture 7 - Bias, Variance and Regularization, a lecture in subject module St...Maninda Edirisooriya Bias and Variance are the deepest concepts in ML which drives the decision making of a ML project. Regularization is a solution for the high variance problem. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Lecture 6 - Logistic Regression, a lecture in subject module Statistical & Ma...

Lecture 6 - Logistic Regression, a lecture in subject module Statistical & Ma...Maninda Edirisooriya Logistic Regression is the first non-linear classification ML algorithm taught in this course. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Lecture 5 - Gradient Descent, a lecture in subject module Statistical & Machi...

Lecture 5 - Gradient Descent, a lecture in subject module Statistical & Machi...Maninda Edirisooriya Gradient Descent is the most commonly used learning algorithm for learning, including Deep Neural Networks with Back Propagation. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Lecture 4 - Linear Regression, a lecture in subject module Statistical & Mach...

Lecture 4 - Linear Regression, a lecture in subject module Statistical & Mach...Maninda Edirisooriya Simplest Machine Learning algorithm or one of the most fundamental Statistical Learning technique is Linear Regression. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Lecture 3 - Exploratory Data Analytics (EDA), a lecture in subject module Sta...

Lecture 3 - Exploratory Data Analytics (EDA), a lecture in subject module Sta...Maninda Edirisooriya Exploratory Data Analytics (EDA) is a data Pre-Processing, manual data summarization and visualization related discipline which is an earlier phase of data processing. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Lecture 2 - Introduction to Machine Learning, a lecture in subject module Sta...

Lecture 2 - Introduction to Machine Learning, a lecture in subject module Sta...Maninda Edirisooriya Introduction to Statistical and Machine Learning. Explains basics of ML, fundamental concepts of ML, Statistical Learning and Deep Learning. Recommends the learning sources and techniques of Machine Learning. This was one of the lectures of a full course I taught in University of Moratuwa, Sri Lanka on 2023 second half of the year.

Analyzing the effectiveness of mobile and web channels using WSO2 BAM

Analyzing the effectiveness of mobile and web channels using WSO2 BAMManinda Edirisooriya This document summarizes a presentation about using WSO2 BAM to analyze the effectiveness of mobile and web channels for e-commerce. It discusses how both channels have advantages and are growing in popularity for business applications. WSO2 BAM is presented as a solution to monitor usage patterns of both channels, including user behavior, interactions, and preferences. A demo is shown of WSO2 BAM monitoring an online ticket booking system with both a web app and mobile app to analyze and compare usage of each channel.

WSO2 BAM - Your big data toolbox

WSO2 BAM - Your big data toolboxManinda Edirisooriya WSO2 BAM is a big data analytics and monitoring tool that provides scalable data flow, storage, and processing. It allows users to publish data, analyze and summarize it using Hadoop clusters and Cassandra storage, and then visualize the results. The document discusses WSO2 BAM's architecture and configuration options. It also describes its out-of-the-box monitoring and analytics solutions for services, APIs, applications, and platforms.

Training Report

Training ReportManinda Edirisooriya The document provides an overview of the training organization Zone24x7. It describes Zone24x7 as a technological company that provides hardware and software solutions. It details Zone24x7's organizational structure, products and services, partners and clients, and an assessment of its current position including strengths, weaknesses and suggestions. The training experience involved working on various software development projects at Zone24x7 to gain exposure to tools, technologies and company practices.

GViz - Project Report

GViz - Project ReportManinda Edirisooriya The document is a final project report submitted by four students for their Bachelor's degree. It presents the Geo-Data Visualization Framework (GViz) developed as part of the project. The framework enables visualization of geospatial data on the web using existing JavaScript APIs and libraries. It describes the design and implementation of GViz over multiple iterations to address common challenges in visualizing geographic data.

Mortivation

MortivationManinda Edirisooriya The presentation I created for presenting the books read, in mentoring program in University of Moratuwa.

Hafnium impact 2008

Hafnium impact 2008Maninda Edirisooriya This document provides an overview of a remotely operated toy car project. It outlines the main requirements, functionality, features, implementation challenges, production process, and marketing plan. The key requirements are for the car to be operated remotely via a computer and wireless camera. Functionality includes transmitting control signals from the computer to a receiver and microcontroller in the car. Challenges include minimizing circuit size and integrating components. The production process involves specialized team roles, programming, and interfacing. Marketing targets children and emphasizes the affordable price and attractive design.

ChatCrypt

ChatCryptManinda Edirisooriya This document describes an encryption Chrome extension for online chat. The extension encrypts chat text using 128-bit AES encryption with a common password between users. It was created to provide a cheap, private chat solution without third parties analyzing conversations or filtering keywords. The extension works by encrypting text on one end, sending the encrypted ciphertext over the network, and decrypting it on the other end. It sets passwords by hashing them to generate an encryption key and encrypts/decrypts text by breaking it into blocks and applying the AES cipher. The document demonstrates how to use the extension for encrypted chat and discusses its limitations, such as an inability to send emojis or a key sharing mechanism.

Lecture - 10 Transformer Model, Motivation to Transformers, Principles, and Design of Transformer model

- 1. DA 5330 – Advanced Machine Learning Applications Lecture 10 – Transformers Maninda Edirisooriya [email protected]

- 2. Limitations of RNN Models • Computation is slow for longer sequences, because the dependencies between timesteps prevent the computation from being done in parallel • With a large number of timesteps the backpropagation depth increases, which worsens the Vanishing Gradient and Exploding Gradient problems • As information from the history is passed on as a hidden state vector, the amount of information is limited by the size of that vector • As the information passed from the history is updated at each timestep, the history is forgotten after a number of timesteps

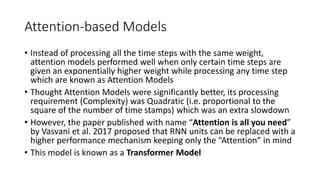

- 3. Attention-based Models • Instead of processing all the time steps with the same weight, models performed well when, while processing any time step, only certain time steps were given a much higher weight; these are known as Attention Models • Though Attention Models were significantly better, their processing requirement (complexity) was quadratic (i.e. proportional to the square of the number of time steps), which was an extra slowdown • However, the paper “Attention Is All You Need” by Vaswani et al. (2017) proposed that RNN units can be replaced with a higher-performance mechanism built only around “Attention” • This model is known as the Transformer Model

- 4. Transformer Model Architecture Encoder Decoder

- 5. Transformer Model • The original paper defined this model (with both Encoder and Decoder) for Natural Language Translation • However, some later models used the Encoder and the Decoder independently for different tasks Source: https://pub.aimind.so/unraveling-the-power-of-language-models-understanding-llms-and-transformer-variants-71bfc42e0b21

- 6. Encoder Only (Autoencoding) Models • Only the Encoder of the Transformer is used • Pre-trained with Masked Language Modeling • Some random tokens of the input sequence are masked • The model tries to predict the missing (masked) tokens to reconstruct the original sequence • This process learns the Bidirectional Context of the tokens in a sequence (the probability of a token given the tokens on both its left and its right) • Used in applications like Sentence Classification for Sentiment Analysis and token-level operations like Named Entity Recognition • BERT and RoBERTa are some examples
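
The masking step described above can be sketched in a few lines. This is only an illustration of how masked inputs and their prediction targets are paired: the `[MASK]` symbol, the `mask_tokens` helper and the masking probability are assumptions made for this sketch, not details taken from the lecture.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol used only in this sketch


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly hide tokens.

    Returns (masked_tokens, labels), where labels holds the original token
    at every masked position and None everywhere else.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model sees the mask symbol
            labels.append(tok)    # and must predict the original token
        else:
            masked.append(tok)
            labels.append(None)   # no prediction needed at this position
    return masked, labels


tokens = "the cat sat on the mat".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
```

Because the model sees the unmasked tokens on both sides of each mask, the prediction can use context from the left and from the right.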

- 7. Decoder Only (Autoregressive) Models • Only the Decoder of the Transformer is used • Pre-trained with Causal Language Modeling • The last token of the input sequence is masked • The model tries to predict that last token to reconstruct the original sequence • Also known as a Full Language Model • This process learns the Unidirectional Context of the tokens in a sequence (the probability of the next token given the tokens to its left) • Used in applications like Text Generation • GPT and BLOOM are some examples
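
A small sketch of the training pairs this objective implies: every prefix of a sequence becomes a context, and the token that follows it becomes the target. The helper name `next_token_pairs` and the example sentence are assumptions for illustration.

```python
def next_token_pairs(tokens):
    """Return (left_context, target) pairs.

    Each token after the first is predicted only from the tokens to its left.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


pairs = next_token_pairs(["<SOS>", "the", "cat", "sat", "<EOS>"])
for context, target in pairs:
    print(context, "->", target)
```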

- 8. Encoder Decoder (Sequence-to-Sequence) Models • Use both the Encoder and the Decoder of the Transformer • The pre-training objective may depend on the requirement. In the T5 model: • In the Encoder, some random spans of tokens in the input sequence are each masked with a unique placeholder token, added to the vocabulary, known as a Sentinel token • This process is known as Span Corruption • The Decoder tries to predict the missing (masked) tokens auto-regressively to reconstruct the original sequence, replacing the Sentinel tokens • Used in applications like Translation, Summarization and Question Answering • T5 and BART are some examples
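
Span Corruption can be illustrated with a toy helper that takes the spans to hide as explicit index pairs. The function name `span_corrupt`, the sentinel names and the example sentence are assumptions for this sketch; a real pre-training pipeline samples the spans randomly and may format the target differently.

```python
def span_corrupt(tokens, spans):
    """Replace each span with a sentinel token.

    spans: sorted, non-overlapping (start, end) index pairs, end exclusive.
    Returns (encoder_input, decoder_target).
    """
    enc, dec = [], []
    pos = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"     # illustrative sentinel name
        enc.extend(tokens[pos:start])    # keep the text before the span
        enc.append(sentinel)             # hide the span in the encoder input
        dec.append(sentinel)             # the target announces which span follows
        dec.extend(tokens[start:end])    # then spells out the hidden tokens
        pos = end
    enc.extend(tokens[pos:])
    return enc, dec


tokens = "Thank you for inviting me to your party last week".split()
enc, dec = span_corrupt(tokens, [(2, 4), (8, 9)])
```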

- 9. Encoder – Input and Embedding • The input is the sequence of tokens (words in the case of Natural Language Processing (NLP)) • Each input token is converted to a vector using Input Embedding (Word Embedding in the case of NLP)
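
As a rough sketch, an embedding is a lookup table from tokens to vectors. In a trained model the vectors are learned parameters; here they are random numbers, and the vocabulary, the `d_model` size and the helper name are assumptions chosen for illustration.

```python
import random


def build_embedding(vocab, d_model, seed=0):
    """Create a toy embedding table: one d_model-sized vector per token."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-0.1, 0.1) for _ in range(d_model)] for tok in vocab}


vocab = ["<SOS>", "<EOS>", "the", "cat", "sat"]
table = build_embedding(vocab, d_model=4)

# The input sequence becomes a matrix X with one row (vector) per token.
sentence = ["the", "cat", "sat"]
X = [table[tok] for tok in sentence]
```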

- 10. Encoder – Input and Embedding Source: https://www.youtube.com/watch?v=bCz4OMemCcA

- 11. Encoder – Positional Encoding Source: https://www.youtube.com/watch?v=bCz4OMemCcA
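
The slide only names Positional Encoding. As a hedged illustration, the sketch below uses the sinusoidal scheme from the original Transformer paper, where even dimensions use a sine and odd dimensions use a cosine of a position-dependent angle. That the lecture uses this particular variant is an assumption, and the function name is hypothetical.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding: one d_model-sized vector per position."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):            # i walks over the even dimensions
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)          # even dimension: sine
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)  # odd dimension: cosine
    return pe


pe = positional_encoding(seq_len=3, d_model=4)
# Each row would be added element-wise to the embedding of the token at that position.
```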

- 12. Encoder – Input and Embedding Source: https://www.youtube.com/watch?v=bCz4OMemCcA

- 13. Encoder – Multi-Head Attention • Multi-Head Attention applies multiple similar operations, each known as Single-Head Attention or simply Attention: $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$ • The type of attention used here is known as Self Attention, where each token attends to all the tokens in the input sequence • For the Encoder we take Q = K = V = X

- 14. Self Attention Source: https://jalammar.github.io/illustrated-transformer/ • The Self Attention formula is inspired by querying a data store, where Q is the query that is matched against the keys K, and V holds the actual values • $QK^T$ is a measure of the similarity between Q and K • $\sqrt{d_k}$ is used to scale the similarity scores, where $d_k$ is the dimensionality of K • Softmax turns the scaled scores into weights that sum to 1, giving most of the attention to the largest scores • Finally, the normalized similarity is used to weight V, resulting in the Attention
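
The steps above (similarity, scaling, softmax, weighting V) can be sketched with plain Python lists. This assumes the standard scaled dot-product form, which divides the scores by the square root of d_k; the helper names and the tiny input matrix are made up for illustration.

```python
import math


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention; returns (output, attention_weights)."""
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_T)                                   # similarity of each query to each key
    weights = [softmax([s / math.sqrt(d_k) for s in row])     # scale, then normalize per query
               for row in scores]
    return matmul(weights, V), weights                        # weighted sum of the values


# Self Attention in the Encoder: Q = K = V = X (three tokens, two dimensions each).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(X, X, X)
```

Each row of `weights` says how much one token attends to every token in the sequence, including itself.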

- 16. Encoder – Multi-Head Attention • When Single-Head Attention is defined as $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$ • each head of Multi-Head Attention is defined as $\mathrm{head}_i(Q, K, V) = \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V)$ • i.e. we can have an arbitrary number of heads, where parameter weight matrices have to be defined for Q, K and V for every head • Multi-Head is defined as $\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \mathrm{head}_2, \ldots, \mathrm{head}_h)W^O$ • i.e. MultiHead is the concatenation of all the heads multiplied by another parameter matrix $W^O$
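
A minimal sketch of the Multi-Head definition, assuming scaled dot-product attention for each head. The weight matrices are random stand-ins for learned parameters, and the sizes (`d_model = 4`, two heads) and helper names are assumptions for illustration.

```python
import math
import random


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in matmul(Q, K_T)]
    return matmul(weights, V)


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def multi_head(Q, K, V, heads, W_O):
    """heads: list of (W_q, W_k, W_v) projection matrices, one triple per head."""
    outs = [attention(matmul(Q, W_q), matmul(K, W_k), matmul(V, W_v))
            for W_q, W_k, W_v in heads]
    # Concatenate the head outputs token by token, then project with W_O.
    concat = [[v for head in outs for v in head[t]] for t in range(len(Q))]
    return matmul(concat, W_O)


rng = random.Random(0)
d_model, h = 4, 2
d_k = d_model // h
heads = [tuple(random_matrix(d_model, d_k, rng) for _ in range(3)) for _ in range(h)]
W_O = random_matrix(h * d_k, d_model, rng)
X = random_matrix(3, d_model, rng)       # three tokens
out = multi_head(X, X, X, heads, W_O)    # Self Attention: Q = K = V = X
```

The output has the same shape as the input (one `d_model`-sized vector per token), which is what allows the residual addition in the next step.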

- 17. Encoder – Add & Normalization • The input given to the multi-head attention is added to its output as a Residual connection (remember ResNet?) • Then the result is Layer Normalized • Layer Norm is similar to Batch Norm, but instead of normalizing across the items in the batch (or the minibatch), normalization happens across the values in the layer
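
The Add & Normalization step can be sketched as follows. The learned scale and shift parameters that Layer Norm usually carries are left out to keep the example short, and the input numbers and helper names are made up for illustration.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize one token's vector to zero mean and (nearly) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x, sublayer_out):
    """Residual addition followed by Layer Norm, applied per token (row)."""
    return [layer_norm([a + b for a, b in zip(x_row, s_row)])
            for x_row, s_row in zip(x, sublayer_out)]


x = [[1.0, 2.0, 3.0, 4.0], [2.0, 0.0, 2.0, 0.0]]                  # input to the attention block
attn_out = [[0.5, -0.5, 0.5, -0.5], [1.0, 1.0, 1.0, 1.0]]         # its output (toy values)
y = add_and_norm(x, attn_out)
```

Note that the statistics are computed over the values of a single token, so the result does not depend on the other items in the batch.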

- 18. Decoder – Masked Multi-Head Attention • The Multi-Head Attention mechanism in the Decoder is the same as in the Encoder • The Decoder's first attention block is a masked Self Attention over the Decoder input • In the attention block that follows it, however, only the query Q is received from the previous layer • K and V are received from the Encoder output • Here, K and V contain the context-related information required to process Q, which is generated only from the input to the Decoder

- 19. Masking the Multi-Head Attention Source: https://www.youtube.com/watch?v=bCz4OMemCcA • The model must not see the tokens to the right of the current position in the sequence • Therefore, the softmax output for those positions should be zero • For that, all the attention scores to the right of the diagonal are replaced with minus infinity before the Softmax is applied
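
The masking rule can be checked on a small score matrix. In this sketch, row i holds the scores of token i against every token j, and every entry with j > i (to the right of the diagonal) is set to minus infinity before the softmax. The all-zero scores and helper names are assumptions for illustration.

```python
import math


def softmax(row):
    m = max(row)                                   # finite, since the diagonal is never masked
    exps = [math.exp(x - m) for x in row]          # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


def causal_mask(scores):
    """Replace every score to the right of the diagonal with minus infinity."""
    n = len(scores)
    return [[scores[i][j] if j <= i else -math.inf for j in range(n)]
            for i in range(n)]


scores = [[0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0]]
weights = [softmax(row) for row in causal_mask(scores)]
# The first token can only attend to itself, the second to the first two, and so on.
```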

- 20. Training a Transformer Source: https://www.youtube.com/watch?v=bCz4OMemCcA • The vocabulary has special tokens: • <SOS> for the Start of the Sentence • <EOS> for the End of the Sentence • The Encoder output is given to the Decoder (as K and V) so that it can translate the input into Italian • A Linear layer maps the Decoder output to the vocabulary size • The Softmax layer outputs, for all positions in one timestep, the probabilities over the tokens of the vocabulary • Cross Entropy loss is used
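
The last three bullets (Linear layer, Softmax, Cross Entropy) can be sketched for a single training example. The five-token vocabulary, the decoder outputs and the weight matrix `W` are all made-up numbers for illustration, not values from the lecture.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def linear(x, W):
    """Map one decoder output vector (size d_model) to vocabulary-sized logits."""
    return [sum(xi * w for xi, w in zip(x, col)) for col in zip(*W)]


vocab = ["<SOS>", "<EOS>", "ti", "amo", "molto"]     # toy vocabulary (assumption)
decoder_out = [[0.5, -0.2, 0.1],                     # one vector per target position
               [0.0, 0.3, -0.4],
               [0.2, 0.2, 0.2]]
W = [[0.1, -0.3, 0.4, 0.0, 0.2],                     # d_model x vocabulary size
     [0.0, 0.5, -0.1, 0.3, -0.2],
     [-0.4, 0.1, 0.2, 0.6, 0.0]]
targets = ["ti", "amo", "<EOS>"]                     # expected token at each position

# All positions are scored at once, which is why training takes a single timestep.
probs = [softmax(linear(x, W)) for x in decoder_out]
losses = [-math.log(p[vocab.index(t)]) for p, t in zip(probs, targets)]
loss = sum(losses) / len(losses)                     # mean Cross Entropy over positions
```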

- 21. Making Inferences with a Transformer • Unlike in training, during inference a transformer needs one timestep to generate each token • This is because the generated token has to be used as input to generate the next token
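
The token-by-token loop can be sketched as greedy decoding. The `toy_model` function is a hypothetical stand-in for a trained Transformer: it simply returns a canned next token for a given prefix, so that the loop itself can be run.

```python
def greedy_decode(next_token_fn, max_len=10):
    """Generate one token per timestep until <EOS> or max_len is reached."""
    output = ["<SOS>"]
    while len(output) < max_len:
        token = next_token_fn(output)   # the model sees everything generated so far
        output.append(token)            # the new token becomes part of the next input
        if token == "<EOS>":
            break
    return output


CANNED = ["ti", "amo", "molto", "<EOS>"]   # made-up output for illustration


def toy_model(prefix):
    """Hypothetical stand-in for a trained decoder: returns the next canned token."""
    return CANNED[len(prefix) - 1]


result = greedy_decode(toy_model)
```

Each call to `next_token_fn` corresponds to one timestep, so generating four tokens here takes four passes through the model.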

- 22. Questions?