Lecture on Deep Learning

A lecture I conducted on deep learning concepts and applications. It covers FNNs, CNNs, simple RNNs, and LSTM networks in detail, and ends with a hands-on session on deep learning using Keras and scikit-learn.

Recommended

Deep Learning

By Roshan Chettri. This document provides an overview of deep learning in neural networks. It defines deep learning as using artificial neural networks with multiple levels that learn higher-level concepts from lower-level ones. It describes how deep learning networks have many layers that build improved feature spaces, with earlier layers learning simple features that are combined in later layers. Deep learning networks are categorized as unsupervised, supervised, or hybrid. Common deep learning architectures like deep neural networks, deep belief networks, convolutional neural networks, and deep Boltzmann machines are also described. The document explains why GPUs are useful for deep learning due to their throughput-oriented design that speeds up model training.

Neural networks

By Geethika Ramani Ravinutala. This document provides an overview of neural networks, including their history, components, connection types, learning methods, applications, and comparison to conventional computers. It discusses how biological neurons inspired the development of artificial neurons and neural networks. The key components of biological and artificial neurons are described. Connection types in neural networks include static feedforward and dynamic feedback connections. Learning methods include supervised, unsupervised, and reinforcement learning. Applications span mobile computing, forecasting, character recognition, and more. Neural networks learn by example rather than requiring explicitly programmed algorithms.

Methods for interpreting and understanding deep neural networks

By KIMMINHA3. The paper "Methods for interpreting and understanding deep neural networks" by G. Montavon et al. was presented at ICASSP 2017.

It has since been cited more than 1,370 times, so it offers a lot to study for anyone working with deep learning technology.

Neural Networks Ver1

By ncct.

Deep Belief Networks

By Hasan H Topcu. DBNs are graphical models that learn to extract a deep hierarchical representation of the training data.

Deep learning lecture - part 1 (basics, CNN)

By SungminYou. This presentation is a lecture based on the Deep Learning book (Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. MIT Press, 2016). It contains the basics of deep learning and theory about convolutional neural networks.

Image processing by manish myst, ssgbcoet

By Manish Myst. This document discusses image and speech processing. It provides an overview of image processing techniques including dithering, erosion, dilation, opening, and closing. These techniques are used to manipulate digital images by modifying pixels at image boundaries or within images. The document also discusses using speech recognition to improve human-computer interfaces and synchronization of image and speech processing.

Convolutional neural network from VGG to DenseNet

By SungminYou. This document summarizes recent developments in convolutional neural networks (CNNs) for image recognition, including residual networks (ResNets) and densely connected convolutional networks (DenseNets). It reviews CNN structure and components like convolution, pooling, and ReLU. ResNets address degradation problems in deep networks by introducing identity-based skip connections. DenseNets connect each layer to every other layer to encourage feature reuse, addressing vanishing gradients. The document outlines the structures of ResNets and DenseNets and their advantages over traditional CNNs.

CNN

By Ukjae Jeong.

1. The document discusses the history and development of convolutional neural networks (CNNs) for computer vision tasks like image classification.

2. Early CNN models from 2012 included AlexNet which achieved breakthrough results on ImageNet classification. Later models improved performance through increased depth like VGGNet in 2014.

3. Recent models like ResNet in 2015 and DenseNet in 2016 addressed the degradation problem of deeper networks through shortcut connections, achieving even better results on image classification tasks. New regularization techniques like Dropout, Batch Normalization, and DropBlock have helped training of deeper CNNs.

Introduction to Convolutional Neural Networks

By Hannes Hapke. This document provides an introduction to machine learning using convolutional neural networks (CNNs) for image classification. It discusses how to prepare image data, build and train a simple CNN model using Keras, and optimize training using GPUs. The document outlines steps to normalize image sizes, convert images to matrices, save data formats, and assemble a CNN in Keras, including layers, compilation, and fitting. It provides resources for learning more about CNNs and deep learning frameworks like Keras and TensorFlow.

Efficient Neural Network Architecture for Image Classfication

By Yogendra Tamang. The document outlines the objectives, methodology, and work accomplished for a project involving designing an efficient convolutional neural network architecture for image classification. The objectives were to classify images using CNNs and design an effective CNN architecture. The methodology involved designing convolution and pooling layers, and using gradient descent to train the network. Work accomplished included GPU configuration, designing CNN architectures for CIFAR-10 and MNIST datasets, and tracking training loss, validation loss, and accuracy over epochs.

Handwritten Digit Recognition(Convolutional Neural Network) PPT

By RishabhTyagi48. This is a presentation on handwritten digit recognition using convolutional neural networks. Convolutional neural networks give better results than conventional artificial neural networks.

Digit recognition using mnist database

By btandale. The document discusses using a convolutional neural network to recognize handwritten digits from the MNIST database. It describes training a CNN on the MNIST training dataset, consisting of 60,000 examples, to classify images of handwritten digits from 0-9. The CNN architecture uses two convolutional layers followed by a flatten layer and a fully connected layer with softmax activation. The model achieves high accuracy on the MNIST test set. However, the document notes that the model may struggle with color images or images with more complex backgrounds compared to the simple black and white MNIST digits. Improving preprocessing and adapting the model for more complex real-world images is suggested for future work.

Convolutional Neural Network and RNN for OCR problem.

By Vishal Mishra. This document presents a thesis on using sequence-to-sequence learning with deep learning techniques for optical character recognition. The author aims to convert images of mathematical equations into LaTeX representations. Convolutional neural networks, recurrent neural networks, long short-term memory networks, and attention models are discussed as approaches. Details are provided on the architecture and workings of CNNs, RNNs, and LSTMs. The thesis will propose a model and discuss results and future work.

Convolutional neural network

By Ferdous ahmed. Convolutional neural networks (CNNs) are a type of neural network used for image recognition tasks. CNNs use convolutional layers that apply filters to input images to extract features, followed by pooling layers that reduce the dimensionality. The extracted features are then fed into fully connected layers for classification. CNNs are inspired by biological processes and are well-suited for computer vision tasks like image classification, detection, and segmentation.

Using Multi-layered Feed-forward Neural Network (MLFNN) Architecture as Bidir...

By IOSR Journals. This document presents a method for using a multi-layered feed-forward neural network (MLFNN) architecture as a bidirectional associative memory (BAM) for function approximation. It proposes applying the backpropagation algorithm in two phases, first in the forward direction and then in the backward direction, which allows the MLFNN to work like a BAM. Simulation results show that this two-phase backpropagation algorithm achieves convergence faster than standard backpropagation when approximating the sine function, demonstrating that the MLFNN architecture is better suited for function approximation when trained this way.

RNN and its applications

By Sungjoon Choi. Basics of RNNs and their applications, with the following papers:

- Generating Sequences With Recurrent Neural Networks, 2013

- Show and Tell: A Neural Image Caption Generator, 2014

- Show, Attend and Tell: Neural Image Caption Generation with Visual Attention, 2015

- DenseCap: Fully Convolutional Localization Networks for Dense Captioning, 2015

- Deep Tracking: Seeing Beyond Seeing Using Recurrent Neural Networks, 2016

- Robust Modeling and Prediction in Dynamic Environments Using Recurrent Flow Networks, 2016

- Social LSTM: Human Trajectory Prediction in Crowded Spaces, 2016

- DESIRE: Distant Future Prediction in Dynamic Scenes with Interacting Agents, 2017

- Predictive State Recurrent Neural Networks, 2017

Artificial Neural Networks: Pointers

By Fariz Darari. Featuring pointers for single-layer and multi-layer neural networks, gradient descent, and backpropagation. These slides are an introduction; for an in-depth explanation of deep learning, please consult other slides.

Image classification with Deep Neural Networks

By Yogendra Tamang. This document discusses image classification using deep neural networks. It provides background on image classification and convolutional neural networks. The document outlines techniques like activation functions, pooling, dropout and data augmentation to prevent overfitting. It summarizes a paper on ImageNet classification using CNNs with multiple convolutional and fully connected layers. The paper achieved state-of-the-art results on ImageNet in 2010 and 2012 by training CNNs on a large dataset using multiple GPUs.

From neural networks to deep learning

By Viet-Trung TRAN. The document discusses the history and development of artificial neural networks and deep learning. It describes early neural network models like perceptrons from the 1950s and their use of weighted sums and activation functions. It then explains how additional developments led to modern deep learning architectures like convolutional neural networks and recurrent neural networks, which use techniques such as hidden layers, backpropagation, and word embeddings to learn from large datasets.

Cnn method

By AmirSajedi1. This document provides an overview of convolutional neural networks (CNNs). It describes CNNs as a type of deep learning model used in computer vision tasks. The key components of a CNN include convolutional layers that extract features, pooling layers that reduce spatial size, and fully-connected layers at the end for classification. Convolutional layers apply learnable filters in a local receptive field, while pooling layers perform downsampling. The document outlines common CNN building blocks, such as layer types and hyperparameters like stride and padding, and provides examples to illustrate how CNNs work.
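
The stride and padding hyperparameters mentioned in this summary determine the spatial size of each layer's output. The following is a minimal sketch, not taken from the slides: the function name `conv_output_size` and the example sizes are illustrative assumptions. It applies the standard relation floor((n + 2p - k) / s) + 1 for an input of width n, kernel width k, padding p, and stride s.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window along one axis.

    Hypothetical helper for illustration; it assumes the same padding on
    both sides and no dilation.
    """
    if stride < 1:
        raise ValueError("stride must be at least 1")
    if kernel_size > input_size + 2 * padding:
        raise ValueError("kernel does not fit inside the padded input")
    return (input_size + 2 * padding - kernel_size) // stride + 1


# Assumed example: a 28-pixel-wide input (the width of an MNIST digit).
after_conv = conv_output_size(28, kernel_size=3)                    # no padding
after_same = conv_output_size(28, kernel_size=5, padding=2)         # size preserved
after_pool = conv_output_size(28, kernel_size=2, stride=2)          # 2x2 pooling
after_stride = conv_output_size(32, kernel_size=3, stride=2, padding=1)
print(after_conv, after_same, after_pool, after_stride)
```

The same relation covers pooling layers, which is why a 2x2 pooling window with stride 2 halves the width in this example.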

Overview of Convolutional Neural Networks

By ananth. In this presentation we discuss the convolution operation, the architecture of a convolutional neural network, and different layers such as pooling. This presentation draws heavily from A. Karpathy's Stanford course CS231n.

Neural networks

By HarshitGupta367.

- The document presents a neural network model for recognizing handwritten digits. It uses a dataset of 20x20 pixel grayscale images of digits 0-9.

- The proposed neural network has an input layer of 400 nodes, a hidden layer of 25 nodes, and an output layer of 10 nodes. It is trained using backpropagation to classify images.

- The model achieves an accuracy of over 96.5% on test data after 200 iterations of training, outperforming a logistic regression model which achieved 91.5% accuracy. Future work could involve classifying more complex natural images.
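
The 400-25-10 layout described in this summary can be made concrete with a short sketch. This is an illustration under stated assumptions rather than the deck's own code: the summary does not name the activation function or the weight initialisation, so the sigmoid units, the random weights, and the helper names `count_parameters`, `init_weights`, and `forward` are all hypothetical.

```python
import math
import random

# Layer sizes from the summary: 400 inputs (20x20 pixels), 25 hidden, 10 outputs.
LAYER_SIZES = [400, 25, 10]


def count_parameters(layer_sizes):
    """Weights plus one bias per unit for a fully connected network."""
    return sum((fan_in + 1) * fan_out
               for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]))


def init_weights(layer_sizes, seed=0):
    """Small random weights (an assumption); row[0] of each unit is its bias."""
    rng = random.Random(seed)
    return [[[rng.uniform(-0.1, 0.1) for _ in range(fan_in + 1)]
             for _ in range(fan_out)]
            for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:])]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(pixels, weights):
    """Forward pass: each layer applies an affine map followed by a sigmoid."""
    activations = list(pixels)
    for layer in weights:
        activations = [
            sigmoid(row[0] + sum(w * a for w, a in zip(row[1:], activations)))
            for row in layer
        ]
    return activations


weights = init_weights(LAYER_SIZES)
scores = forward([0.5] * 400, weights)  # a flat grey image as dummy input
print(count_parameters(LAYER_SIZES), len(scores))
```

With untrained weights the ten output scores are meaningless; backpropagation, as the summary notes, is what adjusts them so that the largest score identifies the digit.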

Digit recognition

By btandale. Handwritten digit recognition uses convolutional neural networks to recognize handwritten digits from images. The MNIST dataset, containing 60,000 training images and 10,000 test images of handwritten digits, is used to train models. Convolutional neural network architectures for this task typically involve convolutional layers to extract features, followed by flatten and dense layers to classify digits. When trained on the MNIST dataset, convolutional neural networks can accurately recognize handwritten digits in test images.

Neural network

By Facebook. This is a presentation on neural networks that is easy and simple to understand. It is intended for students.

Deep learning

By Rouyun Pan. This document provides an overview of deep learning concepts including neural networks, regression and classification, convolutional neural networks, and applications of deep learning such as housing price prediction. It discusses techniques for training neural networks including feature extraction, cost functions, gradient descent, and regularization. The document also reviews deep learning frameworks and notable deep learning models like AlexNet that have achieved success in tasks such as image classification.

Introduction to Neural networks (under graduate course) Lecture 1 of 9

By Randa Elanwar. Undergraduate course content:

Introduction and a historical review

Neural network concepts

Basic models of ANN

Linearly separable functions

Non Linearly separable functions

NN Learning techniques

Associative networks

Mapping networks

Spatiotemporal Network

Stochastic Networks

Artificial neural networks

By ernj. Artificial neural networks are computational models inspired by biological neural networks. They are composed of artificial neurons that are connected and communicate with each other. Each neuron receives inputs, performs simple computations, and transmits outputs. The connections between neurons are associated with adaptive weights that are adjusted during learning. Neural networks can be trained to perform complex tasks like pattern recognition, prediction, and classification. They have many applications in business including data mining, resource allocation, and prediction.

Introduction to deep learning

By Junaid Bhat. Deep learning (also known as deep structured learning or hierarchical learning) is the application of artificial neural networks (ANNs) that contain more than one hidden layer to learning tasks. Deep learning is part of a broader family of machine learning methods based on learning data representations, as opposed to task-specific algorithms. Learning can be supervised, partially supervised or unsupervised.

Similar to Lecture on Deep Learning (20)

Deep Learning

Deep LearningPierre de Lacaze This presentation is Part 2 of my September Lisp NYC presentation on Reinforcement Learning and Artificial Neural Nets. We will continue from where we left off by covering Convolutional Neural Nets (CNN) and Recurrent Neural Nets (RNN) in depth.

Time permitting I also plan on having a few slides on each of the following topics:

1. Generative Adversarial Networks (GANs)

2. Differentiable Neural Computers (DNCs)

3. Deep Reinforcement Learning (DRL)

Some code examples will be provided in Clojure.

After a very brief recap of Part 1 (ANN & RL), we will jump right into CNN and their appropriateness for image recognition. We will start by covering the convolution operator. We will then explain feature maps and pooling operations and then explain the LeNet 5 architecture. The MNIST data will be used to illustrate a fully functioning CNN.

Next we cover Recurrent Neural Nets in depth and describe how they have been used in Natural Language Processing. We will explain why gated networks and LSTM are used in practice.

Please note that some exposure or familiarity with Gradient Descent and Backpropagation will be assumed. These are covered in the first part of the talk for which both video and slides are available online.

A lot of material will be drawn from the new Deep Learning book by Goodfellow & Bengio as well as Michael Nielsen's online book on Neural Networks and Deep Learning as well several other online resources.

Bio

Pierre de Lacaze has over 20 years industry experience with AI and Lisp based technologies. He holds a Bachelor of Science in Applied Mathematics and a Master’s Degree in Computer Science.

https://www.linkedin.com/in/pierre-de-lacaze-b11026b/

DSRLab seminar Introduction to deep learning

DSRLab seminar Introduction to deep learningPoo Kuan Hoong Deep learning is a subfield of machine learning that has shown tremendous progress in the past 10 years. The success can be attributed to large datasets, cheap computing like GPUs, and improved machine learning models. Deep learning primarily uses neural networks, which are interconnected nodes that can perform complex tasks like object recognition. Key deep learning models include Restricted Boltzmann Machines (RBMs), Deep Belief Networks (DBNs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs). CNNs are commonly used for computer vision tasks while RNNs are well-suited for sequential data like text or time series. Deep learning provides benefits like automatic feature learning and robustness, but also has weaknesses such

Artificial Neural Network in Medical Diagnosis

Artificial Neural Network in Medical DiagnosisAdityendra Kumar Singh This document provides an overview of artificial neural networks. It begins with definitions of artificial neural networks and how they are analogous to biological neural networks. It then discusses the basic structure of artificial neural networks, including different types of networks like feedforward, recurrent, and convolutional networks. Key concepts in artificial neural networks like neurons, weights, forward/backward propagation, and overfitting/underfitting are also explained. The document concludes with limitations of neural networks and references.

Deep Learning: Application & Opportunity

Deep Learning: Application & OpportunityiTrain This document provides an overview of deep learning, including its history, algorithms, tools, and applications. It begins with the history and evolution of deep learning techniques. It then discusses popular deep learning algorithms like convolutional neural networks, recurrent neural networks, autoencoders, and deep reinforcement learning. It also covers commonly used tools for deep learning and highlights applications in areas such as computer vision, natural language processing, and games. In the end, it discusses the future outlook and opportunities of deep learning.

Neural

NeuralArchit Rastogi Neural networks are computing systems inspired by biological neural networks in the brain. They are composed of interconnected artificial neurons that process information using a connectionist approach. Neural networks can be used for applications like pattern recognition, classification, prediction, and filtering. They have the ability to learn from and recognize patterns in data, allowing them to perform complex tasks. Some examples of neural network applications discussed include face recognition, handwritten digit recognition, fingerprint recognition, medical diagnosis, and more.

A Survey of Convolutional Neural Networks

A Survey of Convolutional Neural NetworksRimzim Thube Convolutional neural networks (CNNs) are widely used for tasks like image classification, object detection, and face recognition. CNNs extract features from data using convolutional structures and are inspired by biological visual perception. Early CNNs include LeNet for handwritten text recognition and AlexNet which introduced ReLU and dropout to improve performance. Newer CNNs like VGGNet, GoogLeNet, ResNet and MobileNets aim to improve accuracy while reducing parameters. CNNs require activation functions, loss functions, and optimizers to learn from data during training. They have various applications in domains like computer vision, natural language processing and time series forecasting.

Artificial neural network

Artificial neural networknainabhatt2 - An artificial neural network (ANN) is a computational model inspired by biological neural networks in the brain. ANNs contain interconnected nodes that can learn relationships and patterns from data using a process similar to biological learning.

- The basic ANN architecture consists of an input layer, hidden layers, and an output layer. Information flows from the input to output layers through the hidden layers as the network learns.

- There are different types of ANNs that vary in their structure and learning methods, including multilayer perceptrons, convolutional neural networks, and recurrent neural networks. ANNs can perform tasks using supervised, unsupervised, or reinforcement learning.

- ANNs have many applications including face recognition, ridesharing, handwriting

Artificial Neural Network

Artificial Neural NetworkNainaBhatt1 - An artificial neural network (ANN) is a computational model inspired by biological neural networks in the brain. ANNs contain interconnected nodes that can learn relationships and patterns from data through a process of training.

- The basic ANN architecture includes an input layer, hidden layers, and an output layer. Information flows from the input to the output layers through the hidden layers as the network learns.

- There are different types of ANNs that vary in their structure and learning methods, including multilayer perceptrons, convolutional neural networks, and recurrent neural networks. ANNs can perform tasks like face recognition, prediction, and classification through supervised, unsupervised, or reinforcement learning.

- While ANNs have advantages like fault tolerance

Deep learning from a novice perspective

Deep learning from a novice perspectiveAnirban Santara This is a set of slides that introduces the layman to Deep Learning and also presents a road-map for further studies.

Deep Neural Networks (D1L2 Insight@DCU Machine Learning Workshop 2017)

Deep Neural Networks (D1L2 Insight@DCU Machine Learning Workshop 2017)Universitat Politècnica de Catalunya https://github.com/telecombcn-dl/dlmm-2017-dcu

Deep learning technologies are at the core of the current revolution in artificial intelligence for multimedia data analysis. The convergence of big annotated data and affordable GPU hardware has allowed the training of neural networks for data analysis tasks which had been addressed until now with hand-crafted features. Architectures such as convolutional neural networks, recurrent neural networks and Q-nets for reinforcement learning have shaped a brand new scenario in signal processing. This course will cover the basic principles and applications of deep learning to computer vision problems, such as image classification, object detection or text captioning.

DL.pdf

DL.pdfssuserd23711 This document provides an overview of convolutional neural networks (CNNs). It explains that CNNs are a type of neural network that has been successfully applied to analyzing visual imagery. The document then discusses the motivation and biology behind CNNs, describes common CNN architectures, and explains the key operations of convolution, nonlinearity, pooling, and fully connected layers. It provides examples of CNN applications in computer vision tasks like image classification, object detection, and speech recognition. Finally, it notes several large tech companies that utilize CNNs for features like automatic tagging, photo search, and personalized recommendations.

Convolutional Neural Network and Its Applications

Convolutional Neural Network and Its ApplicationsKasun Chinthaka Piyarathna In machine learning, a convolutional neural network is a class of deep, feed-forward artificial neural networks that have successfully been applied fpr analyzing visual imagery.

1.Introduction to Artificial Neural Networks.pptx

1.Introduction to Artificial Neural Networks.pptxsalahidddin 1.Introduction to Artificial Neural Networks.pptx

1.Introduction to Artificial Neural Networks.pptx

1.Introduction to Artificial Neural Networks.pptxsalahidddin 1.Introduction to Artificial Neural Networks.pptx

Towards better analysis of deep convolutional neural networks

Towards better analysis of deep convolutional neural networks曾 子芸 更好的理解分析深度卷積神經網路(Towards Better Analysis of Deep Convolutional Neural Networks )

這篇文章提出了一個視覺化分析系統--CNNVis,以便支持機器學習專家更好的理解、分析、設計深度卷積神經網路。

Lec 1-2-3-intr.

Lec 1-2-3-intr.Taymoor Nazmy 1. The document describes an introductory course on neural networks. It includes information on topics covered, textbooks, assignments, and report topics.

2. The main topics covered are comprehensive introduction, learning algorithms, and types of neural networks. Report topics include the McCulloch-Pitts model, applications of neural networks, and various learning algorithms.

3. The document also provides background information on biological neural networks and the basic components and functioning of artificial neural networks at a high level.

intro-to-cnn-April_2020.pptx

intro-to-cnn-April_2020.pptxssuser3aa461 This document provides an overview of convolutional neural networks (CNNs) and describes a research study that used a two-dimensional heterogeneous CNN (2D-hetero CNN) for mobile health analytics. The study developed a 2D-hetero CNN model to assess fall risk using motion sensor data from 5 sensor locations on participants. The model extracts low-level local features using convolutional layers and integrates them into high-level global features to classify fall risk. The 2D-hetero CNN was evaluated against feature-based approaches and other CNN architectures and performed ablation analysis.

Neural networks and deep learning

Neural networks and deep learningRADO7900 Neural networks and deep learning are machine learning techniques inspired by the human brain. Neural networks consist of interconnected nodes that process input data and pass signals to other nodes. The main types discussed are artificial neural networks (ANNs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs). ANNs can learn nonlinear relationships between inputs and outputs. CNNs are effective for image processing by learning relevant spatial features. RNNs capture sequential dependencies in data like text. Deep learning uses neural networks with many layers to learn complex patterns in large datasets.

Deep Neural Networks (D1L2 Insight@DCU Machine Learning Workshop 2017)

Deep Neural Networks (D1L2 Insight@DCU Machine Learning Workshop 2017)Universitat Politècnica de Catalunya

Lecture on Deep Learning

- 1. Deep Learning. Presentation by: Yasas Senarath, Research Assistant, University of Moratuwa. Lecturer in charge: Dr. Uthayasanker Thayasivam

- 2. Overview: 1. Artificial Neural Network Basics 2. Introduction to Deep Learning 3. Convolutional Neural Networks (CNNs) 4. Recurrent Neural Networks (RNNs) 5. Practical Session

- 3. Artificial Neural Network Basics

- 4. Artificial Neural Network • Computational model based on the structure and functions of biological neural networks (Figures: the structure of a single artificial neuron; the structure of a basic biological neuron)

- 5. A Neuron - Function • Receiving information: the processing unit obtains the information as inputs x1, x2, ..., xn. • Weighting: each input is weighted by its corresponding weight, with the weights denoted w0, w1, w2, ..., wn. • Activation: an activation function f is applied to z, the sum of all the weighted inputs. • Output: an output y is generated depending on z. (Figure: the structure of a single artificial neuron)
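The four steps on this slide can be sketched as a few lines of NumPy. This is a minimal illustration, not code from the lecture: it assumes `w0` acts as a bias term (the slide lists it among the weights without saying so), and the sigmoid activation and the example values are arbitrary choices.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation: squashes z into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def neuron_output(x, w, w0, f=sigmoid):
    """Forward pass of one neuron: y = f(w0 + sum_i w_i * x_i).

    Treating w0 as a bias is an assumption made for this sketch.
    """
    z = w0 + np.dot(w, x)  # weighted sum of the inputs plus bias
    return f(z)

# Hypothetical inputs and weights, chosen so that z == 0
x = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -1.0, 0.5])
w0 = 0.0
y = neuron_output(x, w, w0)
```

With these values the weighted sum is zero, so the sigmoid returns 0.5.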

- 6. Activation Function • Threshold Function • Sigmoid Function • Hyperbolic Tangent Function • Rectified Linear Units
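For reference, the four activation functions named on this slide can be written in NumPy as follows. The slide gives no formulas, so these are the standard textbook definitions; placing the threshold at zero is an assumption.

```python
import numpy as np

def threshold(z):
    """Step function: 1 where z >= 0, else 0 (threshold at 0 is assumed)."""
    return np.where(z >= 0, 1.0, 0.0)

def sigmoid(z):
    """Logistic function with outputs in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    """Hyperbolic tangent with outputs in (-1, 1)."""
    return np.tanh(z)

def relu(z):
    """Rectified linear unit: max(0, z) element-wise."""
    return np.maximum(0.0, z)

z = np.array([-2.0, 0.0, 2.0])
```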

- 7. Feedforward Neural Network • Connections between the nodes do not form a cycle (Figure: the structure of a fully connected 3-layer neural network)
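A forward pass through a fully connected network with an input layer, one hidden layer, and an output layer might look like the sketch below. The layer sizes, the random weights, and the ReLU hidden activation are all assumptions made for illustration; data flows strictly from input to output, with no cycles.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

# Hypothetical layer sizes: 4 inputs -> 5 hidden units -> 3 outputs
W1 = rng.normal(size=(5, 4))
b1 = np.zeros(5)
W2 = rng.normal(size=(3, 5))
b2 = np.zeros(3)

def forward(x):
    """Input -> hidden -> output, with no feedback connections."""
    h = relu(W1 @ x + b1)   # hidden layer activations
    return W2 @ h + b2      # raw output scores

out = forward(np.ones(4))
```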

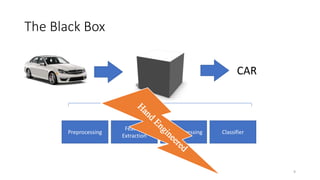

- 9. The Black Box (Diagram labels: CAR, Preprocessing, Feature Extraction, Post Processing, Classifier)

- 10. Issues with Hand-Engineered Features • Most critical for accuracy • Most time-consuming in development • What is the best feature? • What is next? Keep on crafting better features? • Let’s learn feature representation directly from data.

- 11. Learning Features and Classifier Together • A non-linear mapping that takes raw pixels directly to labels • How to build? • By combining simple building blocks (i.e. layers in a neural network) (Figure: Option 1 vs. Option 2. Which is better? Option 2 is better.)

- 12. Intuition behind Deep Neural Nets • Each layer has parameters subject to learning • Composition makes a highly non-linear system • In case of classification, the final layer outputs a probability distribution over categories. (Diagram labels: A Layer, Final Layer)
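One common way for a final layer to produce a probability distribution over categories is the softmax function. The slide does not name the function used, so softmax here is an assumption, and the example scores are made up.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

# Hypothetical raw scores for three categories
probs = softmax(np.array([2.0, 1.0, 0.1]))
```

The largest score receives the largest probability, and the ordering of the scores is preserved.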

- 13. Training a Deep Neural Network • Compute loss on small batches (forward propagation) • Compute gradient w.r.t. parameters • Use gradient to update parameters (Diagram labels: X, y1, y, Error; Number of Hidden Units, Number of Hidden Layers, Type of Layer, Loss Function)
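The three training steps can be sketched with mini-batch gradient descent. To keep the gradient short enough to write by hand, this sketch trains a single sigmoid unit (logistic regression) rather than a deep network; the synthetic data, learning rate, batch size, and epoch count are all hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic toy data (hypothetical): label is 1 when x0 + x1 > 0
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

w = np.zeros(2)
b = 0.0
lr = 0.5          # learning rate (assumed value)
batch_size = 20   # mini-batch size (assumed value)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_fn(w, b, X, y):
    """Mean binary cross-entropy."""
    p = sigmoid(X @ w + b)
    eps = 1e-12
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

initial_loss = loss_fn(w, b, X, y)

for epoch in range(20):
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], y[idx]
        p = sigmoid(xb @ w + b)              # 1. forward pass on a small batch
        grad_w = xb.T @ (p - yb) / len(idx)  # 2. gradient w.r.t. parameters
        grad_b = np.mean(p - yb)
        w -= lr * grad_w                     # 3. update parameters
        b -= lr * grad_b

final_loss = loss_fn(w, b, X, y)
accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1.0))
```

In a deep network the gradient step is computed by backpropagation through all layers, but the loop structure stays the same.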

- 14. Types of Layers • Dense Layer (activation=ReLU) • Convolutional Layer in Convolutional Neural Network (CNN) • Recurrent Neural Network (RNN) Layer • Simple RNN cells • LSTM cell • GRU cell

- 15. Convolutional Neural Networks (CNNs) • AKA ConvNets • Regular Neural Nets don’t scale well • 3D volumes of neurons • Depth • Height • Width • Mainly used in • Image Processing • Natural Language Processing

- 16. Consider an Image… Example: 1000 x 1000 image, 1 M hidden units: 10^6 inputs x 10^6 hidden units = 10^12 (1 trillion) parameters to optimize!

- 17. Reduce connections to local regions Example: 1000 x 1000 image, 1 M hidden units, filter size 10 x 10: 10^6 hidden units x 100 weights each = 100 M parameters

- 18. Reuse the same kernel everywhere • Why? Because interesting features (edges) can occur anywhere in the image • Share the same parameters across different locations • Convolution with learned kernels
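Parameter sharing can be illustrated by sliding one small kernel over every position of an image. In this sketch the kernel is hand-picked rather than learned, the toy image is made up, and, like most deep learning libraries, the function computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # The same kernel weights are reused at every location (i, j)
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy image with a vertical edge: left half 0, right half 1
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Hand-picked kernel that responds to a left-to-right intensity increase
kernel = np.array([[-1.0, 1.0]])
response = conv2d_valid(image, kernel)
```

The response is non-zero only in the column where the edge sits, in every row, even though the kernel has just two parameters.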

- 19. Convolutional Neural Nets Learn Multiple Filters Example: 1000 x 1000 image, 100 filters, filter size 10 x 10: 10 K parameters
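The parameter counts for the three 1000 x 1000 examples (fully connected, locally connected, convolutional) can be checked with plain arithmetic. Bias terms are ignored here, which is an assumption; the variable names are illustrative.

```python
pixels = 1000 * 1000        # inputs in a 1000 x 1000 image
hidden_units = 1_000_000    # 1 M hidden units
filter_size = 10 * 10       # weights in one 10 x 10 filter
num_filters = 100

# Every hidden unit connected to every pixel
fully_connected = pixels * hidden_units

# Every hidden unit connected to its own 10 x 10 patch (no sharing)
locally_connected = hidden_units * filter_size

# 100 filters, each shared across all image locations
convolutional = num_filters * filter_size
```

Going from full connectivity to shared filters cuts the count from 10^12 to 10^8 to 10^4 weights.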

- 20. Handling Multiple Channels • An image may contain multiple channels • E.g. a 3-channel (R, G, B) image • A separate k x k filter is applied to each channel (3 filters in total)
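A multi-channel convolution can be sketched as follows. The slide only says that each channel gets its own k x k filter; summing the per-channel responses into a single feature map is the usual convention in CNN libraries and is an assumption here, as are the toy shapes and values.

```python
import numpy as np

def conv2d_multichannel(image, filters):
    """image: (C, H, W); filters: (C, k, k), one k x k filter per channel.

    Per-channel responses are summed into one feature map (assumed convention).
    """
    _, h, w = image.shape
    _, k, _ = filters.shape
    out = np.zeros((h - k + 1, w - k + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[:, i:i + k, j:j + k] * filters)
    return out

# Hypothetical 3-channel 4 x 4 image and 2 x 2 filters, all ones
rgb = np.ones((3, 4, 4))
filters = np.ones((3, 2, 2))
feature_map = conv2d_multichannel(rgb, filters)
```

Each output value here is 3 channels x 2 x 2 weights = 12.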

- 21. Translation Invariance • Assume we are going to make an eye detector • Problem: how to make the detection robust to the exact eye location?

- 22. Translation Invariance • Solution: use pooling (max / average) on the filter responses • Provides robustness to the exact spatial location of features • Also sub-samples the image, allowing the next layer to look at larger spatial regions
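Max pooling's robustness to small shifts can be seen in a short sketch. The 2 x 2 window, the non-overlapping stride, and the toy "filter response" maps are assumptions chosen for illustration.

```python
import numpy as np

def max_pool2d(x, size=2):
    """Non-overlapping max pooling over size x size windows."""
    h, w = x.shape
    h_out, w_out = h // size, w // size
    x = x[:h_out * size, :w_out * size]  # drop rows/cols that do not fit
    return x.reshape(h_out, size, w_out, size).max(axis=(1, 3))

# The same feature detected at two slightly different positions
a = np.zeros((4, 4))
a[0, 0] = 1.0
b = np.zeros((4, 4))
b[1, 1] = 1.0

pooled_a = max_pool2d(a)
pooled_b = max_pool2d(b)
```

Both inputs pool to the same 2 x 2 map, so a one-pixel shift inside a pooling window does not change what the next layer sees. The output is also half the size in each dimension, which is the sub-sampling effect.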

- 23. Summary of Complete CNN • All of this together constitutes one layer. • Pooling and normalization are optional • Stack them up and train just like multilayer neural nets • Multiple Conv layers can be used to learn high-level features • Final layer is usually a fully connected neural net with output size == number of classes

- 24. Recurrent neural network (RNN) • Considers sequence • Used in Forecasting • Applications • Language Modelling • Machine Translation • Conversation Bots • Image Description • Image Search

- 25. Structure of RNN • Performs the same task for every element of a sequence, with the output depending on the previous computations • Has a “memory” which captures information about what has been calculated so far (Figure: an unrolled recurrent neural network)

- 26. A Simple RNN • Performs the same task for every element of a sequence, with the output depending on the previous computations (Figure: unrolled RNN)
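"The same task for every element" means the same weights are applied at each time step while a hidden state carries information forward. A minimal NumPy sketch, assuming the common tanh formulation h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); the sizes, random weights, and input sequence are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # hypothetical sizes

# One set of weights, reused at every time step
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

def rnn_forward(inputs):
    """Unroll the RNN over a sequence and return every hidden state."""
    h = np.zeros(hidden_size)  # the "memory" starts empty
    states = []
    for x_t in inputs:
        # New state depends on the current input and the previous state
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

sequence = rng.normal(size=(5, input_size))
states = rnn_forward(sequence)
```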

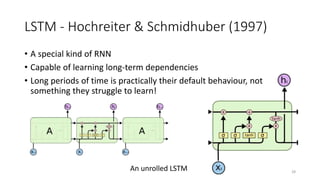

- 27. The Problem of Long-Term Dependencies • Consider a language model trying to predict the next word based on the previous ones • Larger gap => the RNN is unable to learn the dependency • Theoretically this should be possible, but in practice simple RNNs are not capable of learning long-term dependencies • Examples: “The clouds are in the sky”; “I grew up in France … I speak fluent French”

- 28. LSTM - Hochreiter & Schmidhuber (1997) • A special kind of RNN • Capable of learning long-term dependencies • Remembering information for long periods of time is practically their default behaviour, not something they struggle to learn! (Figure: an unrolled LSTM)
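The slide gives no equations, so the sketch below uses the commonly taught LSTM formulation with forget, input, and output gates and a separate cell state. The gate ordering inside the stacked weight matrix, the sizes, and the random weights are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4*H, H+D) and b has shape (4*H,), with rows stacked as
    [forget, input, candidate, output] (an assumed layout).
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0:H])            # forget gate: how much old cell state to keep
    i = sigmoid(z[H:2 * H])        # input gate: how much new information to write
    g = np.tanh(z[2 * H:3 * H])    # candidate values for the cell state
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much cell state to expose
    c = f * c_prev + i * g         # cell state carries the long-term memory
    h = o * np.tanh(c)             # hidden state passed to the next step
    return h, c

rng = np.random.default_rng(0)
D, H = 3, 4  # hypothetical input and hidden sizes
W = rng.normal(scale=0.1, size=(4 * H, H + D))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(6, D)):
    h, c = lstm_step(x_t, h, c, W, b)
```

Because the cell state is updated additively (`f * c_prev + i * g`), information can pass through many steps largely unchanged when the forget gate stays close to 1.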

- 29. LSTM Modes and examples

- 30. Example Model (Image Captioning)

- 31. Practical Session • See https://online.mrt.ac.lk/mod/folder/view.php?id=65448 • Follow instructions in Moodle to get started using Colab • Then follow the instructions in Python Notebook

- 32. Resources 1. http://cs231n.github.io/convolutional-networks/ 2. http://www.cs.umd.edu/~djacobs/CMSC733/CNN.pdf 3. http://colah.github.io/posts/2015-08-Understanding-LSTMs/ 4. https://wiki.tum.de/display/lfdv/Artificial+Neural+Networks

Editor's Notes

- #16: Similar to what we discussed in the past few slides. However, the traditional deep network, in which each unit takes all the outputs of the previous layer as input, is not scalable. Images, which are one of the things that led to the implementation of this network, have a very complex learning curve and are large as well; that is, the traditional neural networks are

- #17: These are neurons

- #28: In the second sentence, recent information suggests that the next word is probably the name of a language, but if we want to narrow down which language, we need the context of France, from further back. It’s entirely possible for the gap between the relevant information and the point where it is needed to become very large.