lecture_13_jiajun.pdf Generative models GAN

- 1. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 1 Lecture 13: Generative Models

- 2. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Administrative 2 ● A3 is out. Due May 25. ● Milestone was due May 10th ○ Read website page for milestone requirements. ○ Need to Finish data preprocessing and initial results by then. ● Midterm and A2 grades will be out this week

- 3. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Supervised vs Unsupervised Learning 3 Supervised Learning Data: (x, y) x is data, y is label Goal: Learn a function to map x -> y Examples: Classification, regression, object detection, semantic segmentation, image captioning, etc.

- 4. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Supervised vs Unsupervised Learning 4 Supervised Learning Data: (x, y) x is data, y is label Goal: Learn a function to map x -> y Examples: Classification, regression, object detection, semantic segmentation, image captioning, etc. Cat Classification This image is CC0 public domain

- 5. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Supervised vs Unsupervised Learning 5 Supervised Learning Data: (x, y) x is data, y is label Goal: Learn a function to map x -> y Examples: Classification, regression, object detection, semantic segmentation, image captioning, etc. Image captioning A cat sitting on a suitcase on the floor Caption generated using neuraltalk2 Image is CC0 Public domain.

- 6. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Supervised vs Unsupervised Learning 6 Supervised Learning Data: (x, y) x is data, y is label Goal: Learn a function to map x -> y Examples: Classification, regression, object detection, semantic segmentation, image captioning, etc. DOG, DOG, CAT This image is CC0 public domain Object Detection

- 7. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Supervised vs Unsupervised Learning 7 Supervised Learning Data: (x, y) x is data, y is label Goal: Learn a function to map x -> y Examples: Classification, regression, object detection, semantic segmentation, image captioning, etc. Semantic Segmentation GRASS, CAT, TREE, SKY

- 8. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 8 Unsupervised Learning Data: x Just data, no labels! Goal: Learn some underlying hidden structure of the data Examples: Clustering, dimensionality reduction, feature learning, density estimation, etc. Supervised vs Unsupervised Learning

- 9. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 9 Unsupervised Learning Data: x Just data, no labels! Goal: Learn some underlying hidden structure of the data Examples: Clustering, dimensionality reduction, density estimation, etc. Supervised vs Unsupervised Learning K-means clustering This image is CC0 public domain

- 10. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 10 Unsupervised Learning Data: x Just data, no labels! Goal: Learn some underlying hidden structure of the data Examples: Clustering, dimensionality reduction, density estimation, etc. Supervised vs Unsupervised Learning Principal Component Analysis (Dimensionality reduction) This image from Matthias Scholz is CC0 public domain 3-d 2-d

- 11. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 11 Unsupervised Learning Data: x Just data, no labels! Goal: Learn some underlying hidden structure of the data Examples: Clustering, dimensionality reduction, density estimation, etc. Supervised vs Unsupervised Learning 2-d density estimation 2-d density images left and right are CC0 public domain 1-d density estimation Figure copyright Ian Goodfellow, 2016. Reproduced with permission. Modeling p(x)

- 12. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Unsupervised Learning Data: x Just data, no labels! Goal: Learn some underlying hidden structure of the data Examples: Clustering, dimensionality reduction, density estimation, etc. 12 Supervised vs Unsupervised Learning Supervised Learning Data: (x, y) x is data, y is label Goal: Learn a function to map x -> y Examples: Classification, regression, object detection, semantic segmentation, image captioning, etc.

- 13. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Modeling 13 Training data ~ pdata (x) Objectives: 1. Learn pmodel (x) that approximates pdata (x) 2. Sampling new x from pmodel (x) Given training data, generate new samples from same distribution learning pmodel (x) sampling

- 14. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Modeling 14 Training data ~ pdata (x) Given training data, generate new samples from same distribution learning sampling Formulate as density estimation problems: - Explicit density estimation: explicitly define and solve for pmodel (x) - Implicit density estimation: learn model that can sample from pmodel (x) without explicitly defining it. pmodel (x)

- 15. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Why Generative Models? 15 - Realistic samples for artwork, super-resolution, colorization, etc. - Learn useful features for downstream tasks such as classification. - Getting insights from high-dimensional data (physics, medical imaging, etc.) - Modeling physical world for simulation and planning (robotics and reinforcement learning applications) - Many more ... FIgures from L-R are copyright: (1) Alec Radford et al. 2016; (2) Phillip Isola et al. 2017. Reproduced with authors permission (3) BAIR Blog.

- 16. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Taxonomy of Generative Models 16 Generative models Explicit density Implicit density Direct Tractable density Approximate density Markov Chain Variational Markov Chain Variational Autoencoder Boltzmann Machine GSN GAN Figure copyright and adapted from Ian Goodfellow, Tutorial on Generative Adversarial Networks, 2017. Fully Visible Belief Nets - NADE - MADE - PixelRNN/CNN - NICE / RealNVP - Glow - Ffjord

- 17. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Taxonomy of Generative Models 17 Generative models Explicit density Implicit density Direct Tractable density Approximate density Markov Chain Variational Markov Chain Variational Autoencoder Boltzmann Machine GSN GAN Figure copyright and adapted from Ian Goodfellow, Tutorial on Generative Adversarial Networks, 2017. Today: discuss 3 most popular types of generative models today Fully Visible Belief Nets - NADE - MADE - PixelRNN/CNN - NICE / RealNVP - Glow - Ffjord

- 18. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 18 PixelRNN and PixelCNN (A very brief overview)

- 19. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 19 Fully visible belief network (FVBN) Likelihood of image x Explicit density model Joint likelihood of each pixel in the image

- 20. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 20 Fully visible belief network (FVBN) Use chain rule to decompose likelihood of an image x into product of 1-d distributions: Explicit density model Likelihood of image x Probability of i’th pixel value given all previous pixels Then maximize likelihood of training data

- 21. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Then maximize likelihood of training data 21 Fully visible belief network (FVBN) Use chain rule to decompose likelihood of an image x into product of 1-d distributions: Explicit density model Likelihood of image x Probability of i’th pixel value given all previous pixels Complex distribution over pixel values => Express using a neural network!

- 22. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 22 Recurrent Neural Network x1 RNN x2 x2 RNN x3 x3 RNN x4 ... xn- 1 RNN xn h1 h2 h3 h0

- 23. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 PixelRNN 23 Generate image pixels starting from corner Dependency on previous pixels modeled using an RNN (LSTM) [van der Oord et al. 2016]

- 24. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 PixelRNN 24 Generate image pixels starting from corner Dependency on previous pixels modeled using an RNN (LSTM) [van der Oord et al. 2016]

- 25. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 PixelRNN 25 Generate image pixels starting from corner Dependency on previous pixels modeled using an RNN (LSTM) [van der Oord et al. 2016]

- 26. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 PixelRNN 26 Generate image pixels starting from corner Dependency on previous pixels modeled using an RNN (LSTM) [van der Oord et al. 2016] Drawback: sequential generation is slow in both training and inference!

- 27. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 PixelCNN 27 [van der Oord et al. 2016] Still generate image pixels starting from corner Dependency on previous pixels now modeled using a CNN over context region (masked convolution) Figure copyright van der Oord et al., 2016. Reproduced with permission.

- 28. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 PixelCNN 28 [van der Oord et al. 2016] Still generate image pixels starting from corner Dependency on previous pixels now modeled using a CNN over context region (masked convolution) Figure copyright van der Oord et al., 2016. Reproduced with permission. Training is faster than PixelRNN (can parallelize convolutions since context region values known from training images) Generation is still slow: For a 32x32 image, we need to do forward passes of the network 1024 times for a single image

- 29. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generation Samples 29 Figures copyright Aaron van der Oord et al., 2016. Reproduced with permission. 32x32 CIFAR-10 32x32 ImageNet

- 30. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 30 PixelRNN and PixelCNN Improving PixelCNN performance - Gated convolutional layers - Short-cut connections - Discretized logistic loss - Multi-scale - Training tricks - Etc… See - Van der Oord et al. NIPS 2016 - Salimans et al. 2017 (PixelCNN++) Pros: - Can explicitly compute likelihood p(x) - Easy to optimize - Good samples Con: - Sequential generation => slow

- 31. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Taxonomy of Generative Models 31 Generative models Explicit density Implicit density Direct Tractable density Approximate density Markov Chain Variational Markov Chain Variational Autoencoder Boltzmann Machine GSN GAN Figure copyright and adapted from Ian Goodfellow, Tutorial on Generative Adversarial Networks, 2017. Fully Visible Belief Nets - NADE - MADE - PixelRNN/CNN - NICE / RealNVP - Glow - Ffjord

- 32. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 32 Variational Autoencoders (VAE)

- 33. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 33 PixelRNN/CNNs define tractable density function, optimize likelihood of training data: So far...

- 34. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 So far... 34 Variational Autoencoders (VAEs) define intractable density function with latent z: Cannot optimize directly, derive and optimize lower bound on likelihood instead No dependencies among pixels, can generate all pixels at the same time! PixelRNN/CNNs define tractable density function, optimize likelihood of training data:

- 35. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 So far... 35 Variational Autoencoders (VAEs) define intractable density function with latent z: Cannot optimize directly, derive and optimize lower bound on likelihood instead No dependencies among pixels, can generate all pixels at the same time! Why latent z? PixelRNN/CNNs define tractable density function, optimize likelihood of training data:

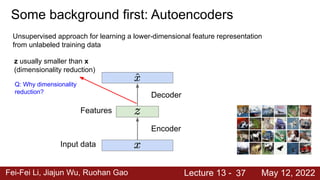

- 36. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 36 Unsupervised approach for learning a lower-dimensional feature representation from unlabeled training data Encoder Input data Features Decoder

- 37. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 37 Input data Features Unsupervised approach for learning a lower-dimensional feature representation from unlabeled training data z usually smaller than x (dimensionality reduction) Q: Why dimensionality reduction? Decoder Encoder

- 38. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 38 Input data Features Unsupervised approach for learning a lower-dimensional feature representation from unlabeled training data z usually smaller than x (dimensionality reduction) Decoder Encoder Q: Why dimensionality reduction? A: Want features to capture meaningful factors of variation in data

- 39. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 39 Encoder Input data Features How to learn this feature representation? Train such that features can be used to reconstruct original data “Autoencoding” - encoding input itself Decoder Reconstructed input data Reconstructed data Encoder: 4-layer conv Decoder: 4-layer upconv Input data

- 40. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 40 Encoder Input data Features Decoder Reconstructed data Input data Encoder: 4-layer conv Decoder: 4-layer upconv L2 Loss function: Train such that features can be used to reconstruct original data Doesn’t use labels!

- 41. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 41 Encoder Input data Features Decoder Reconstructed input data After training, throw away decoder

- 42. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 42 Encoder Input data Features Classifier Predicted Label Fine-tune encoder jointly with classifier Loss function (Softmax, etc) Encoder can be used to initialize a supervised model plane dog deer bird truck Train for final task (sometimes with small data) Transfer from large, unlabeled dataset to small, labeled dataset.

- 43. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Some background first: Autoencoders 43 Encoder Input data Features Decoder Reconstructed input data Autoencoders can reconstruct data, and can learn features to initialize a supervised model Features capture factors of variation in training data. But we can’t generate new images from an autoencoder because we don’t know the space of z. How do we make autoencoder a generative model?

- 44. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 44 Variational Autoencoders Probabilistic spin on autoencoders - will let us sample from the model to generate data!

- 45. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 45 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Assume training data is generated from the distribution of unobserved (latent) representation z Probabilistic spin on autoencoders - will let us sample from the model to generate data! Sample from true conditional

- 46. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 46 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Assume training data is generated from the distribution of unobserved (latent) representation z Probabilistic spin on autoencoders - will let us sample from the model to generate data! Sample from true conditional Intuition (remember from autoencoders!): x is an image, z is latent factors used to generate x: attributes, orientation, etc.

- 47. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 47 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x.

- 48. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 48 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x. How should we represent this model?

- 49. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 49 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x. How should we represent this model? Choose prior p(z) to be simple, e.g. Gaussian. Reasonable for latent attributes, e.g. pose, how much smile.

- 50. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 50 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x. How should we represent this model? Choose prior p(z) to be simple, e.g. Gaussian. Reasonable for latent attributes, e.g. pose, how much smile. Conditional p(x|z) is complex (generates image) => represent with neural network Decoder network

- 51. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 51 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x. How to train the model? Decoder network

- 52. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 52 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x. How to train the model? Learn model parameters to maximize likelihood of training data Decoder network

- 53. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 53 Sample from true prior Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders Sample from true conditional We want to estimate the true parameters of this generative model given training data x. How to train the model? Learn model parameters to maximize likelihood of training data Q: What is the problem with this? Intractable! Decoder network

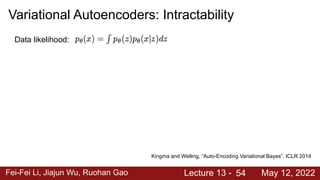

- 54. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 54 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood:

- 55. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 55 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood: Simple Gaussian prior ✔

- 56. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 56 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood: Decoder neural network ✔ ✔

- 57. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 57 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood: Intractable to compute p(x|z) for every z! �� ✔ ✔

- 58. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 58 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood: Intractable to compute p(x|z) for every z! �� ✔ ✔ Monte Carlo estimation is too high variance

- 59. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 59 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood: �� ✔ ✔ Posterior density: Intractable data likelihood

- 60. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 60 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Variational Autoencoders: Intractability Data likelihood: Solution: In addition to modeling pθ (x|z), learn qɸ (z|x) that approximates the true posterior pθ (z|x). Will see that the approximate posterior allows us to derive a lower bound on the data likelihood that is tractable, which we can optimize. Variational inference is to approximate the unknown posterior distribution from only the observed data x Posterior density also intractable:

- 61. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 61 Variational Autoencoders

- 62. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 62 Variational Autoencoders Taking expectation wrt. z (using encoder network) will come in handy later

- 63. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 63 Variational Autoencoders

- 64. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 64 Variational Autoencoders

- 65. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 65 Variational Autoencoders

- 66. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 66 Variational Autoencoders The expectation wrt. z (using encoder network) let us write nice KL terms

- 67. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 67 Variational Autoencoders This KL term (between Gaussians for encoder and z prior) has nice closed-form solution! pθ (z|x) intractable (saw earlier), can’t compute this KL term :( But we know KL divergence always >= 0. Decoder network gives pθ (x|z), can compute estimate of this term through sampling (need some trick to differentiate through sampling).

- 68. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 68 Variational Autoencoders We want to maximize the data likelihood This KL term (between Gaussians for encoder and z prior) has nice closed-form solution! pθ (z|x) intractable (saw earlier), can’t compute this KL term :( But we know KL divergence always >= 0. Decoder network gives pθ (x|z), can compute estimate of this term through sampling.

- 69. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 69 Variational Autoencoders Tractable lower bound which we can take gradient of and optimize! (pθ (x|z) differentiable, KL term differentiable) We want to maximize the data likelihood

- 70. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 70 Variational Autoencoders Tractable lower bound which we can take gradient of and optimize! (pθ (x|z) differentiable, KL term differentiable) Decoder: reconstruct the input data Encoder: make approximate posterior distribution close to prior

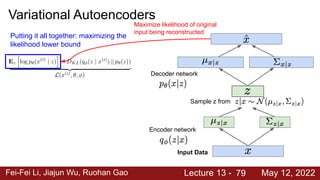

- 71. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 71 Variational Autoencoders Putting it all together: maximizing the likelihood lower bound

- 72. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 72 Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound Let’s look at computing the KL divergence between the estimated posterior and the prior given some data

- 73. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 73 Encoder network Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound

- 74. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 74 Encoder network Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound Make approximate posterior distribution close to prior Have analytical solution

- 75. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 75 Encoder network Sample z from Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound Make approximate posterior distribution close to prior Not part of the computation graph!

- 76. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 76 Encoder network Sample z from Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound Reparameterization trick to make sampling differentiable: Sample

- 77. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 77 Encoder network Sample z from Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound Reparameterization trick to make sampling differentiable: Sample Part of computation graph Input to the graph

- 78. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 78 Encoder network Decoder network Sample z from Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound

- 79. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 79 Encoder network Decoder network Sample z from Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound Maximize likelihood of original input being reconstructed

- 80. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 80 Encoder network Decoder network Sample z from Input Data Variational Autoencoders Putting it all together: maximizing the likelihood lower bound For every minibatch of input data: compute this forward pass, and then backprop!

- 81. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 81 Variational Autoencoders: Generating Data! Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Sample from true prior Sample from true conditional Decoder network Our assumption about data generation process

- 82. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 82 Variational Autoencoders: Generating Data! Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014 Sample from true prior Sample from true conditional Decoder network Our assumption about data generation process Decoder network Sample z from Sample x|z from Now given a trained VAE: use decoder network & sample z from prior!

- 83. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 83 Decoder network Sample z from Sample x|z from Variational Autoencoders: Generating Data! Use decoder network. Now sample z from prior! Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014

- 84. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 84 Decoder network Sample z from Sample x|z from Variational Autoencoders: Generating Data! Use decoder network. Now sample z from prior! Data manifold for 2-d z Vary z1 Vary z2 Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014

- 85. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 85 Variational Autoencoders: Generating Data! Vary z1 Vary z2 Degree of smile Head pose Diagonal prior on z => independent latent variables Different dimensions of z encode interpretable factors of variation Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014

- 86. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 86 Variational Autoencoders: Generating Data! Vary z1 Vary z2 Degree of smile Head pose Diagonal prior on z => independent latent variables Different dimensions of z encode interpretable factors of variation Also good feature representation that can be computed using qɸ (z|x)! Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014

- 87. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 87 Variational Autoencoders: Generating Data! 32x32 CIFAR-10 Labeled Faces in the Wild Figures copyright (L) Dirk Kingma et al. 2016; (R) Anders Larsen et al. 2017. Reproduced with permission.

- 88. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Variational Autoencoders 88 Probabilistic spin to traditional autoencoders => allows generating data Defines an intractable density => derive and optimize a (variational) lower bound Pros: - Principled approach to generative models - Interpretable latent space. - Allows inference of q(z|x), can be useful feature representation for other tasks Cons: - Maximizes lower bound of likelihood: okay, but not as good evaluation as PixelRNN/PixelCNN - Samples blurrier and lower quality compared to state-of-the-art (GANs) Active areas of research: - More flexible approximations, e.g. richer approximate posterior instead of diagonal Gaussian, e.g., Gaussian Mixture Models (GMMs), Categorical Distributions. - Learning disentangled representations.

- 89. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Taxonomy of Generative Models 89 Generative models Explicit density Implicit density Direct Tractable density Approximate density Markov Chain Variational Markov Chain Variational Autoencoder Boltzmann Machine GSN GAN Figure copyright and adapted from Ian Goodfellow, Tutorial on Generative Adversarial Networks, 2017. Fully Visible Belief Nets - NADE - MADE - PixelRNN/CNN - NICE / RealNVP - Glow - Ffjord

- 90. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 90 Generative Adversarial Networks (GANs)

- 91. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 So far... 91 VAEs define intractable density function with latent z: Cannot optimize directly, derive and optimize lower bound on likelihood instead PixelRNN/CNNs define tractable density function, optimize likelihood of training data:

- 92. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 So far... VAEs define intractable density function with latent z: Cannot optimize directly, derive and optimize lower bound on likelihood instead 92 What if we give up on explicitly modeling density, and just want ability to sample? PixelRNN/CNNs define tractable density function, optimize likelihood of training data:

- 93. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 So far... VAEs define intractable density function with latent z: Cannot optimize directly, derive and optimize lower bound on likelihood instead 93 What if we give up on explicitly modeling density, and just want ability to sample? GANs: not modeling any explicit density function! PixelRNN/CNNs define tractable density function, optimize likelihood of training data:

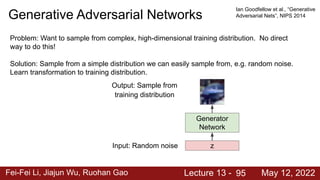

- 94. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Adversarial Networks 94 Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Problem: Want to sample from complex, high-dimensional training distribution. No direct way to do this! Solution: Sample from a simple distribution we can easily sample from, e.g. random noise. Learn transformation to training distribution.

- 95. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Problem: Want to sample from complex, high-dimensional training distribution. No direct way to do this! Solution: Sample from a simple distribution we can easily sample from, e.g. random noise. Learn transformation to training distribution. Generative Adversarial Networks 95 Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 z Input: Random noise Generator Network Output: Sample from training distribution

- 96. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Problem: Want to sample from complex, high-dimensional training distribution. No direct way to do this! Solution: Sample from a simple distribution we can easily sample from, e.g. random noise. Learn transformation to training distribution. Generative Adversarial Networks 96 z Input: Random noise Generator Network Output: Sample from training distribution Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 But we don’t know which sample z maps to which training image -> can’t learn by reconstructing training images

- 97. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Problem: Want to sample from complex, high-dimensional training distribution. No direct way to do this! Solution: Sample from a simple distribution we can easily sample from, e.g. random noise. Learn transformation to training distribution. Generative Adversarial Networks 97 z Input: Random noise Generator Network Output: Sample from training distribution Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 But we don’t know which sample z maps to which training image -> can’t learn by reconstructing training images Objective: generated images should look “real”

- 98. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Problem: Want to sample from complex, high-dimensional training distribution. No direct way to do this! Solution: Sample from a simple distribution we can easily sample from, e.g. random noise. Learn transformation to training distribution. Generative Adversarial Networks 98 z Input: Random noise Generator Network Output: Sample from training distribution Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 But we don’t know which sample z maps to which training image -> can’t learn by reconstructing training images Discriminator Network Real? Fake? Solution: Use a discriminator network to tell whether the generate image is within data distribution (“real”) or not gradient

- 99. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 99 Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014

- 100. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 100 z Random noise Generator Network Discriminator Network Fake Images (from generator) Real Images (from training set) Real or Fake Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Fake and real images copyright Emily Denton et al. 2015. Reproduced with permission. Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images

- 101. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 101 z Random noise Generator Network Discriminator Network Fake Images (from generator) Real Images (from training set) Real or Fake Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Fake and real images copyright Emily Denton et al. 2015. Reproduced with permission. Generator learning signal Discriminator learning signal Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images

- 102. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 102 Train jointly in minimax game Minimax objective function: Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images Generator objective Discriminator objective

- 103. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 103 Train jointly in minimax game Minimax objective function: Discriminator output for real data x Discriminator output for generated fake data G(z) Discriminator outputs likelihood in (0,1) of real image Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images

- 104. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 104 Train jointly in minimax game Minimax objective function: Discriminator output for real data x Discriminator output for generated fake data G(z) Discriminator outputs likelihood in (0,1) of real image Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images

- 105. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 105 Train jointly in minimax game Minimax objective function: Discriminator output for real data x Discriminator output for generated fake data G(z) Discriminator outputs likelihood in (0,1) of real image Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images

- 106. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 106 Train jointly in minimax game Minimax objective function: Discriminator output for real data x Discriminator output for generated fake data G(z) Discriminator outputs likelihood in (0,1) of real image - Discriminator (θd ) wants to maximize objective such that D(x) is close to 1 (real) and D(G(z)) is close to 0 (fake) - Generator (θg ) wants to minimize objective such that D(G(z)) is close to 1 (discriminator is fooled into thinking generated G(z) is real) Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Discriminator network: try to distinguish between real and fake images Generator network: try to fool the discriminator by generating real-looking images

- 107. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 107 Minimax objective function: Alternate between: 1. Gradient ascent on discriminator 2. Gradient descent on generator Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014

- 108. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 108 Minimax objective function: Alternate between: 1. Gradient ascent on discriminator 2. Gradient descent on generator In practice, optimizing this generator objective does not work well! Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 When sample is likely fake, want to learn from it to improve generator (move to the right on X axis).

- 109. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 109 Minimax objective function: Alternate between: 1. Gradient ascent on discriminator 2. Gradient descent on generator In practice, optimizing this generator objective does not work well! Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 When sample is likely fake, want to learn from it to improve generator (move to the right on X axis). But gradient in this region is relatively flat! Gradient signal dominated by region where sample is already good

- 110. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 110 Minimax objective function: Alternate between: 1. Gradient ascent on discriminator 2. Instead: Gradient ascent on generator, different objective Instead of minimizing likelihood of discriminator being correct, now maximize likelihood of discriminator being wrong. Same objective of fooling discriminator, but now higher gradient signal for bad samples => works much better! Standard in practice. Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 High gradient signal Low gradient signal

- 111. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 111 Putting it together: GAN training algorithm Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014

- 112. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 112 Putting it together: GAN training algorithm Some find k=1 more stable, others use k > 1, no best rule. Followup work (e.g. Wasserstein GAN, BEGAN) alleviates this problem, better stability! Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Arjovsky et al. "Wasserstein gan." arXiv preprint arXiv:1701.07875 (2017) Berthelot, et al. "Began: Boundary equilibrium generative adversarial networks." arXiv preprint arXiv:1703.10717 (2017)

- 113. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Training GANs: Two-player game 113 Generator network: try to fool the discriminator by generating real-looking images Discriminator network: try to distinguish between real and fake images z Random noise Generator Network Discriminator Network Fake Images (from generator) Real Images (from training set) Real or Fake After training, use generator network to generate new images Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Fake and real images copyright Emily Denton et al. 2015. Reproduced with permission.

- 114. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Adversarial Nets 114 Nearest neighbor from training set Generated samples Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Figures copyright Ian Goodfellow et al., 2014. Reproduced with permission.

- 115. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Adversarial Nets 115 Nearest neighbor from training set Generated samples (CIFAR-10) Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014 Figures copyright Ian Goodfellow et al., 2014. Reproduced with permission.

- 116. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Adversarial Nets: Convolutional Architectures 116 Radford et al, “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016 Generator is an upsampling network with fractionally-strided convolutions Discriminator is a convolutional network

- 117. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 117 Radford et al, ICLR 2016 Samples from the model look much better! Generative Adversarial Nets: Convolutional Architectures

- 118. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 118 Radford et al, ICLR 2016 Interpolating between random points in latent space Generative Adversarial Nets: Convolutional Architectures

- 119. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Generative Adversarial Nets: Interpretable Vector Math 119 Smiling woman Neutral woman Neutral man Samples from the model Radford et al, ICLR 2016

- 120. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 120 Smiling woman Neutral woman Neutral man Samples from the model Average Z vectors, do arithmetic Radford et al, ICLR 2016 Generative Adversarial Nets: Interpretable Vector Math

- 121. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 121 Smiling woman Neutral woman Neutral man Smiling Man Samples from the model Average Z vectors, do arithmetic Radford et al, ICLR 2016 Generative Adversarial Nets: Interpretable Vector Math

- 122. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 122 Glasses man No glasses man No glasses woman Woman with glasses Radford et al, ICLR 2016 Generative Adversarial Nets: Interpretable Vector Math

- 123. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 123 https://github.com/hindupuravinash/the-gan-zoo See also: https://github.com/soumith/ganhacks for tips and tricks for trainings GANs 2017: Explosion of GANs “The GAN Zoo”

- 124. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 124 Better training and generation LSGAN, Zhu 2017. Wasserstein GAN, Arjovsky 2017. Improved Wasserstein GAN, Gulrajani 2017. Progressive GAN, Karras 2018. 2017: Explosion of GANs

- 125. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 2017: Explosion of GANs 125 CycleGAN. Zhu et al. 2017. Source->Target domain transfer Many GAN applications Pix2pix. Isola 2017. Many examples at https://phillipi.github.io/pix2pix/ Reed et al. 2017. Text -> Image Synthesis

- 126. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 2019: BigGAN 126 Brock et al., 2019

- 127. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Scene graphs to GANs Specifying exactly what kind of image you want to generate. The explicit structure in scene graphs provides better image generation for complex scenes. 127 Johnson et al. Image Generation from Scene Graphs, CVPR 2019 Figures copyright 2019. Reproduced with permission.

- 128. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 HYPE: Human eYe Perceptual Evaluations hype.stanford.edu Zhou, Gordon, Krishna et al. HYPE: Human eYe Perceptual Evaluations, NeurIPS 2019 128 Figures copyright 2019. Reproduced with permission.

- 129. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Summary: GANs 129 Don’t work with an explicit density function Take game-theoretic approach: learn to generate from training distribution through 2-player game Pros: - Beautiful, state-of-the-art samples! Cons: - Trickier / more unstable to train - Can’t solve inference queries such as p(x), p(z|x) Active areas of research: - Better loss functions, more stable training (Wasserstein GAN, LSGAN, many others) - Conditional GANs, GANs for all kinds of applications

- 130. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Summary 130 Autoregressive models: PixelRNN, PixelCNN Van der Oord et al, “Conditional image generation with pixelCNN decoders”, NIPS 2016 Variational Autoencoders Kingma and Welling, “Auto-encoding variational bayes”, ICLR 2013 Generative Adversarial Networks (GANs) Goodfellow et al, “Generative Adversarial Nets”, NIPS 2014

- 131. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Useful Resources on Generative Models CS 236: Deep Generative Models (Stanford) CS 294-158 Deep Unsupervised Learning (Berkeley) 131

- 132. Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022 Next: Self-Supervised Learning 132

![Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022

PixelRNN

23

Generate image pixels starting from corner

Dependency on previous pixels modeled

using an RNN (LSTM)

[van der Oord et al. 2016]](https://image.slidesharecdn.com/lecture13jiajun-241102134159-c60ab04d/85/lecture_13_jiajun-pdf-Generative-models-GAN-23-320.jpg)

![Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022

PixelRNN

24

Generate image pixels starting from corner

Dependency on previous pixels modeled

using an RNN (LSTM)

[van der Oord et al. 2016]](https://image.slidesharecdn.com/lecture13jiajun-241102134159-c60ab04d/85/lecture_13_jiajun-pdf-Generative-models-GAN-24-320.jpg)

![Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022

PixelRNN

25

Generate image pixels starting from corner

Dependency on previous pixels modeled

using an RNN (LSTM)

[van der Oord et al. 2016]](https://image.slidesharecdn.com/lecture13jiajun-241102134159-c60ab04d/85/lecture_13_jiajun-pdf-Generative-models-GAN-25-320.jpg)

![Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022

PixelRNN

26

Generate image pixels starting from corner

Dependency on previous pixels modeled

using an RNN (LSTM)

[van der Oord et al. 2016]

Drawback: sequential generation is slow

in both training and inference!](https://image.slidesharecdn.com/lecture13jiajun-241102134159-c60ab04d/85/lecture_13_jiajun-pdf-Generative-models-GAN-26-320.jpg)

![Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022

PixelCNN

27

[van der Oord et al. 2016]

Still generate image pixels starting from

corner

Dependency on previous pixels now

modeled using a CNN over context region

(masked convolution)

Figure copyright van der Oord et al., 2016. Reproduced with permission.](https://image.slidesharecdn.com/lecture13jiajun-241102134159-c60ab04d/85/lecture_13_jiajun-pdf-Generative-models-GAN-27-320.jpg)

![Fei-Fei Li, Jiajun Wu, Ruohan Gao Lecture 13 - May 12, 2022

PixelCNN

28

[van der Oord et al. 2016]

Still generate image pixels starting from

corner

Dependency on previous pixels now

modeled using a CNN over context region

(masked convolution)

Figure copyright van der Oord et al., 2016. Reproduced with permission.

Training is faster than PixelRNN

(can parallelize convolutions since context region

values known from training images)

Generation is still slow:

For a 32x32 image, we need to do forward passes of

the network 1024 times for a single image](https://image.slidesharecdn.com/lecture13jiajun-241102134159-c60ab04d/85/lecture_13_jiajun-pdf-Generative-models-GAN-28-320.jpg)

![Glary Utilities Pro 5.157.0.183 Crack + Key Download [Latest]](https://cdn.slidesharecdn.com/ss_thumbnails/artificialintelligence17-250529071922-ef6fe98e-thumbnail.jpg?width=560&fit=bounds)