Ad

Lexical analysis compiler design to read and study

- 2. Lexical Analyzer • Functions • Grouping input characters into tokens • Stripping out comments and white spaces • Correlating error messages with the source program • Issues (why separating lexical analysis from parsing) • Simpler design • Compiler efficiency • Compiler portability (e.g. Linux to Win)

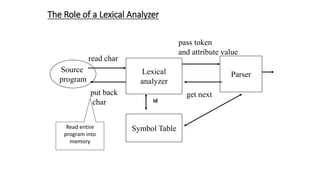

- 3. The Role of a Lexical Analyzer Lexical analyzer Parser Source program read char put back char pass token and attribute value get next Symbol Table Read entire program into memory id

- 4. Lexical Analysis • What do we want to do? Example: if (i == j) Z = 0; else Z = 1; • The input is just a string of characters: t if (i == j) n t t z = 0;n t else n t t z = 1; • Goal: Partition input string into substrings • Where the substrings are tokens

- 8. What’s a Token? • A syntactic category • In English: • noun, verb, adjective, … • In a programming language: • Identifier, Integer, Keyword, Whitespace,

- 9. What are Tokens For? • Classify program substrings according to role • Output of lexical analysis is a stream of tokens . . .which is input to the parser • Parser relies on token distinctions • An identifier is treated differently than a keyword

- 10. Tokens • Tokens correspond to sets of strings. • Identifier: strings of letters or digits, starting with a letter • Integer: a non-empty string of digits • Keyword: “else” or “if” or “begin” or … • Whitespace: a non-empty sequence of blanks, newlines, and tabs

- 11. Typical Tokens in a PL • Symbols: +, -, *, /, =, <, >, ->, … • Keywords: if, while, struct, float, int, … • Integer and Real (floating point) literals 123, 123.45 • Char (string) literals • Identifiers • Comments • White space

- 13. Tokens, Patterns and Lexemes • Pattern: A rule that describes a set of strings • Token: A set of strings in the same pattern • Lexeme: The sequence of characters of a token Token Sample Lexemes Pattern if if if id abc, n, count,… letters+digit NUMBER 3.14, 1000 numerical constant ; ; ;

- 17. Token Attribute • E = C1 ** 10 Token Attribute ID Index to symbol table entry E = ID Index to symbol table entry C1 ** NUM 10

- 18. Lexical Error and Recovery • Error detection • Error reporting • Error recovery • Delete the current character and restart scanning at the next character • Delete the first character read by the scanner and resume scanning at the character following it. • How about runaway strings and comments?

- 20. Specification of Tokens • Regular expressions are an important notation for specifying lexeme patterns. While they cannot express all possible patterns, they are very effective in specifying those types of patterns that we actually need for tokens.

- 21. Strings and Languages • An alphabet is any finite set of symbols such as letters, digits, and punctuation. • The set {0,1) is the binary alphabet • If x and y are strings, then the concatenation of x and y is also string, denoted xy, For example, if x = dog and y = house, then xy = doghouse. • The empty string is the identity under concatenation; that is, for any string s, ES = SE = s. • A string over an alphabet is a finite sequence of symbols drawn from that alphabet. • In language theory, the terms "sentence" and "word" are often used as synonyms for "string." • |s| represents the length of a string s, Ex: banana is a string of length 6 • The empty string, is the string of length zero.

- 22. Strings and Languages (cont.) • A language is any countable set of strings over some fixed alphabet. • Let L = {A, . . . , Z}, then{“A”,”B”,”C”, “BF”…,”ABZ”,…] is consider the language defined by L • Abstract languages like , the empty set, or {},the set containing only the empty string, are languages under this definition.

- 23. Terms for Parts of Strings

- 24. Operations on Languages Example: Let L be the set of letters {A, B, . . . , Z, a, b, . . . , z ) and let D be the set of digits {0,1,.. .9). L and D are, respectively, the alphabets of uppercase and lowercase letters and of digits. other languages can be constructed from L and D, using the operators illustrated above

- 26. Operations on Languages (cont.) 1. L U D is the set of letters and digits - strictly speaking the language with 62 (52+10) strings of length one, each of which strings is either one letter or one digit. 2. LD is the set of 520 strings of length two, each consisting of one letter followed by one digit.(10×52). Ex: A1, a1,B0,etc 3. L4 is the set of all 4-letter strings. (ex: aaba, bcef) 4. L* is the set of all strings of letters, including e, the empty string. 5. L(L U D)* is the set of all strings of letters and digits beginning with a letter. 6. D+ is the set of all strings of one or more digits.

- 27. Regular Expressions • The standard notation for regular languages is regular expressions. • Atomic regular expression: • Compound regular expression:

- 28. Cont. larger regular expressions are built from smaller ones. Let r and s are regular expressions denoting languages L(r) and L(s), respectively. 1. (r) | (s) is a regular expression denoting the language L(r) U L(s). 2. (r) (s) is a regular expression denoting the language L(r) L(s) . 3. (r) * is a regular expression denoting (L (r)) * . 4. (r) is a regular expression denoting L(r). This last rule says that we can add additional pairs of parentheses around expressions without changing the language they denote. for example, we may replace the regular expression (a) | ((b) * (c)) by a| b*c.

- 29. Examples

- 31. Regular Definition • C identifiers are strings of letters, digits, and underscores. The regular definition for the language of C identifiers. • LetterA | B | C|…| Z | a | b | … |z| - • digit 0|1|2 |… | 9 • id letter( letter | digit )* • Unsigned numbers (integer or floating point) are strings such as 5280, 0.01234, 6.336E4, or 1.89E-4. The regular definition • digit 0|1|2 |… | 9 • digits digit digit* • optionalFraction .digits | • optionalExponent ( E( + |- | ) digits ) | • number digits optionalFraction optionalExponent

- 33. RECOGNITION OF TOKENS •The patterns for the given tokens: •Given the grammar of branching statement: The terminals of the grammar, which are if, then, else, relop, id, and number, are the names of tokens as used by the lexical analyzer. The lexical analyzer also has the job of stripping out whitespace, by recognizing the "token" ws defined by:

- 34. Tokens, their patterns, and attribute values

- 36. Recognition of Tokens: Transition Diagram Ex :RELOP = < | <= | = | <> | > | >= 0 1 5 6 2 3 4 7 8 start < = = = > > other other return(relop,LE) return(relop,NE) return(relop,LT) return(relop,GE) return(relop,GT) return(relop,EQ) # # # indicates input retraction

- 39. Recognition of Identifiers • Ex2: ID = letter(letter | digit) * 9 10 11 start letter return(id) # indicates input retraction other # letter or digit Transition Diagram:

- 40. Mapping transition diagrams into C code 9 10 11 start letter return(id) other letter or digit switch (state) { case 9: if (isletter( c) ) state = 10; else state = failure(); break; case 10: c = nextchar(); if (isletter( c) || isdigit( c) ) state = 10; else state 11 case 11: retract(1); insert(id); return;

- 41. Recognition of Reserved Words •Install the reserved words in the symbol table initially. A field of the symbol- table entry indicates that these strings are never ordinary identifiers, and tells which token they represent. •Create separate transition diagrams for each keyword; the transition diagram for the reserved word then

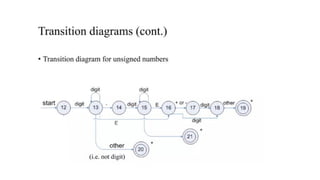

- 42. The transition diagram for token number Multiple accepting state Accepting integer e.g. 12 Accepting float e.g. 12.31 Accepting float e.g. 12.31E4

- 43. Finite Automata • Transition diagram is finite automation • Nondeterministic Finite Automation (NFA) • A set of states • A set of input symbols • A transition function, move(), that maps state-symbol pairs to sets of states. • A start state S0 • A set of states F as accepting (Final) states.

- 44. Example 0 1 3 start a 2 b b a b The set of states = {0,1,2,3} Input symbol = {a,b} Start state is S0, accepting state is S3

![Strings and Languages (cont.)

• A language is any countable set of strings over some fixed alphabet.

• Let L = {A, . . . , Z}, then{“A”,”B”,”C”, “BF”…,”ABZ”,…] is consider the

language defined by L

• Abstract languages like , the empty set, or

{},the set containing only the empty string, are languages under this definition.](https://image.slidesharecdn.com/lexicalanalysis-250418075922-667fcd38/85/Lexical-analysis-compiler-design-to-read-and-study-22-320.jpg)