Lexical Analyzer Implementation

Lexical analysis involves breaking input text into tokens. It is implemented using regular expressions to specify token patterns and a finite automaton to recognize tokens in the input stream. Lex is a tool that allows specifying a lexical analyzer by defining regular expressions for tokens and actions to perform on each token. It generates code to simulate the finite automaton for token recognition. The generated lexical analyzer converts the input stream into tokens by matching input characters to patterns defined in the Lex source program.
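The following minimal Python sketch mimics the matching behavior of a Lex-generated scanner. It is written by hand and tries each pattern in turn rather than simulating one combined automaton. The token names and patterns in `TOKEN_SPEC` are hypothetical. It applies the two conflict rules Lex uses: prefer the longest match, and on a tie prefer the rule listed first.

```python
import re
from typing import List, Tuple

# Hypothetical token specification: (token name, pattern).
# As in Lex, the longest match wins; on a tie, the rule listed first wins,
# which is why the keyword IF must come before the general ID rule.
TOKEN_SPEC: List[Tuple[str, str]] = [
    ("IF", r"if"),
    ("ID", r"[A-Za-z_][A-Za-z0-9_]*"),
    ("NUM", r"[0-9]+"),
    ("RELOP", r"<=|>=|==|<|>"),
    ("ASSIGN", r"="),
    ("WS", r"[ \t\n]+"),
]
COMPILED = [(name, re.compile(pattern)) for name, pattern in TOKEN_SPEC]


def tokenize(text: str) -> List[Tuple[str, str]]:
    """Convert text into (token, lexeme) pairs using longest-match rules."""
    tokens: List[Tuple[str, str]] = []
    pos = 0
    while pos < len(text):
        best_name, best_len = None, 0
        for name, regex in COMPILED:
            m = regex.match(text, pos)
            # Only a strictly longer match replaces the current best,
            # so earlier rules win ties.
            if m and m.end() - pos > best_len:
                best_name, best_len = name, m.end() - pos
        if best_name is None:
            raise ValueError(f"unexpected character {text[pos]!r} at offset {pos}")
        if best_name != "WS":  # whitespace is matched but not returned
            tokens.append((best_name, text[pos:pos + best_len]))
        pos += best_len
    return tokens


print(tokenize("if iffy <= 42"))
# [('IF', 'if'), ('ID', 'iffy'), ('RELOP', '<='), ('NUM', '42')]
```

With these rules, `if` becomes the keyword `IF` but `iffy` becomes an `ID`, because the longer match wins. The loop tries every pattern at each position instead of joining them into one alternation, since Python's `re` alternation takes the first alternative that matches rather than the longest.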

Recommended

Lexical analysis - Compiler Design

By Muhammed Afsal Villan. The document discusses lexical analysis in compilers. It describes how the lexical analyzer reads source code characters and divides them into tokens. Regular expressions are used to specify patterns for token recognition, and a finite state automaton is built from these patterns to recognize them. Lexical analysis is the first phase of compilation; it separates the input into tokens for the parser.

Lex

By BBDITM LUCKNOW. Lex is a program generator designed for lexical processing of character input streams. It works by translating a table of regular expressions and corresponding program fragments provided by the user into a program. This program then reads an input stream, partitions it into strings matching the given expressions, and executes the associated program fragments in order. Flex is a fast lexical analyzer generator that is an alternative to Lex. It generates scanners that recognize lexical patterns in text based on pairs of regular expressions and C code provided by the user.

Lexical analyzer

By Ashwini Sonawane. The document discusses the different phases of a compiler, including lexical analysis, syntax analysis, semantic analysis, intermediate code generation, code optimization, and code generation. It provides details on each phase and the techniques involved. The overall structure of a compiler is given as taking a source program through various representations until target machine code is generated. Key terms related to compilers like tokens, lexemes, and parsing techniques are also introduced.

A Role of Lexical Analyzer

By Archana Gopinath. Topics: Lexical Analysis, Tokens, Patterns, Lexemes, Example pattern, Stages of a Lexical Analyzer, Regular expressions to the lexical analysis, Implementation of Lexical Analyzer, Lexical analyzer: use as generator.

1.Role lexical Analyzer

By Radhakrishnan Chinnusamy. The document discusses the role and process of a lexical analyzer in compiler design. A lexical analyzer groups input characters into lexemes and produces a sequence of tokens as output for the syntax analyzer. It strips out comments and whitespace, correlates error messages with line numbers, and interacts with the symbol table. Separating lexical from syntactic analysis improves compiler efficiency and portability and allows a simpler parser design.
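The bookkeeping described here (skipping comments and whitespace, tracking line numbers, handing tokens to the parser on request) can be sketched as follows. The `Lexer` class, its `next_token()` method, the `//` comment syntax, and the three token kinds are all assumptions made for illustration.

```python
from typing import Callable, NamedTuple, Optional


class Token(NamedTuple):
    kind: str     # hypothetical kinds: ID, NUM, OP
    lexeme: str
    line: int     # source line, kept for error reporting


class Lexer:
    """Pull-style scanner: the parser calls next_token() whenever it needs a token."""

    def __init__(self, source: str) -> None:
        self.src = source
        self.pos = 0
        self.line = 1

    def _skip_whitespace_and_comments(self) -> None:
        while self.pos < len(self.src):
            ch = self.src[self.pos]
            if ch == "\n":
                self.line += 1
                self.pos += 1
            elif ch in " \t\r":
                self.pos += 1
            elif self.src.startswith("//", self.pos):
                # Skip to the newline; the loop then counts that newline.
                end = self.src.find("\n", self.pos)
                self.pos = len(self.src) if end == -1 else end
            else:
                return

    def _scan_while(self, pred: Callable[[str], bool]) -> None:
        while self.pos < len(self.src) and pred(self.src[self.pos]):
            self.pos += 1

    def next_token(self) -> Optional[Token]:
        """Return the next token, or None at end of input."""
        self._skip_whitespace_and_comments()
        if self.pos >= len(self.src):
            return None
        start, ch = self.pos, self.src[self.pos]
        if ch.isalpha() or ch == "_":
            self._scan_while(lambda c: c.isalnum() or c == "_")
            kind = "ID"
        elif ch.isdigit():
            self._scan_while(str.isdigit)
            kind = "NUM"
        else:
            self.pos += 1  # any other character is treated as a one-character operator
            kind = "OP"
        return Token(kind, self.src[start:self.pos], self.line)
```

Because the parser pulls one token at a time, the scanner never has to tokenize the whole file up front, and each token carries the line number to quote in error messages.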

Lexical Analysis - Compiler design

By Aman Sharma. The document discusses the role and implementation of a lexical analyzer. It can be summarized as follows:

1. A lexical analyzer scans source code, groups characters into lexemes, and produces tokens which it returns to the parser upon request. It handles tasks like removing whitespace and expanding macros.

2. It implements buffering techniques to efficiently scan large inputs and uses transition diagrams to represent patterns for matching tokens.

3. Regular expressions are used to specify patterns for tokens, and flex is a commonly used tool that generates lexical analyzers from these specifications.

02. chapter 3 lexical analysis

By raosir123. The document discusses lexical analysis in compiler design. It covers the role of the lexical analyzer, tokenization, and representation of tokens using finite automata. Regular expressions are used to formally specify patterns for tokens. A lexical analyzer generator converts these specifications into a finite state machine (FSM) implementation to recognize tokens in the input stream. The FSM is typically a deterministic finite automaton (DFA) for efficiency, even though a nondeterministic finite automaton (NFA) may require fewer states.
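A DFA-based recognizer is usually driven by a transition table. This Python sketch hard-codes a hypothetical three-state DFA for identifiers and unsigned integers; `TRANSITIONS`, `ACCEPTING`, and `run_dfa` are illustrative names, not the output of any particular generator. It keeps moving while transitions exist and remembers the last accepting position, which is how a table-driven scanner finds the longest lexeme.

```python
from typing import Dict, Optional, Tuple

# Hypothetical DFA: state 0 = start, 1 = inside an identifier, 2 = inside a number.
# TRANSITIONS[state][input class] -> next state; a missing entry means "no move".
TRANSITIONS: Dict[int, Dict[str, int]] = {
    0: {"letter": 1, "digit": 2},
    1: {"letter": 1, "digit": 1},
    2: {"digit": 2},
}
ACCEPTING: Dict[int, str] = {1: "ID", 2: "NUM"}


def char_class(ch: str) -> str:
    """Map a character to the input class used by the transition table."""
    if ch == "_" or (ch.isascii() and ch.isalpha()):
        return "letter"
    if ch.isascii() and ch.isdigit():
        return "digit"
    return "other"


def run_dfa(text: str, pos: int) -> Optional[Tuple[str, int]]:
    """Return (token kind, end offset) of the longest lexeme starting at pos, or None."""
    state = 0
    last_accept: Optional[Tuple[str, int]] = None
    i = pos
    while i < len(text):
        nxt = TRANSITIONS[state].get(char_class(text[i]))
        if nxt is None:
            break  # no transition: stop and fall back to the last accepting state
        state, i = nxt, i + 1
        if state in ACCEPTING:
            last_accept = (ACCEPTING[state], i)
    return last_accept
```

For example, `run_dfa("42abc", 0)` stops after `42` because state 2 has no move on a letter, and returns `("NUM", 2)`.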

Lexical Analysis - Compiler Design

By Akhil Kaushik. Lexical analysis is the first phase of compilation. It reads source code characters and divides them into tokens by recognizing patterns using finite automata. It separates tokens, enters identifiers into a symbol table, and eliminates unnecessary characters. Tokens are passed to the parser along with line numbers for error handling. An input buffer improves efficiency by reading source code into memory in blocks rather than character by character from secondary storage. Lexical analysis groups character sequences into lexemes, which are then classified as tokens based on patterns.
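Block-wise input buffering can be sketched with a pair of pointers, `lexeme_begin` and `forward`. This is a simplified single-buffer variant, not the textbook two-half buffer with sentinels; the `InputBuffer` class, the `words` helper, and the block size are hypothetical. When `forward` runs past the buffered text, the next block is read and the text before the current lexeme is discarded, so a lexeme may span a block boundary.

```python
import io
from typing import List, TextIO


class InputBuffer:
    """Block-buffered input with lexeme_begin/forward pointers (simplified sketch)."""

    def __init__(self, stream: TextIO, block_size: int = 4096) -> None:
        self.stream = stream
        self.block_size = block_size  # arbitrary choice for this sketch
        self.buf = ""
        self.lexeme_begin = 0  # start of the lexeme being scanned
        self.forward = 0       # next character to examine

    def _fill(self) -> bool:
        """Read one more block, dropping text before lexeme_begin. False at end of input."""
        block = self.stream.read(self.block_size)
        if not block:
            return False
        self.buf = self.buf[self.lexeme_begin:] + block
        self.forward -= self.lexeme_begin
        self.lexeme_begin = 0
        return True

    def peek(self) -> str:
        """Return the character at forward, or '' at end of input."""
        while self.forward >= len(self.buf):
            if not self._fill():
                return ""
        return self.buf[self.forward]

    def advance(self) -> None:
        self.forward += 1

    def take_lexeme(self) -> str:
        """Return buf[lexeme_begin:forward] and start the next lexeme at forward."""
        lexeme = self.buf[self.lexeme_begin:self.forward]
        self.lexeme_begin = self.forward
        return lexeme


def words(buffer: InputBuffer) -> List[str]:
    """Hypothetical helper: split space-separated words using the buffer."""
    result: List[str] = []
    while (ch := buffer.peek()) != "":
        if ch == " ":
            buffer.advance()
            buffer.take_lexeme()  # discard the blank
            continue
        while buffer.peek() not in ("", " "):
            buffer.advance()
        result.append(buffer.take_lexeme())
    return result


# A block size of 4 forces lexemes such as "alpha" to span block boundaries.
print(words(InputBuffer(io.StringIO("alpha beta gamma"), block_size=4)))
# ['alpha', 'beta', 'gamma']
```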

Lecture 01 introduction to compiler

By Iffat Anjum. This document provides an introduction to compilers, including:

- What compilers are and their role in translating programs to machine code

- The main phases of compilation: lexical analysis, syntax analysis, semantic analysis, code generation, and optimization

- Key concepts like tokens, parsing, symbol tables, and intermediate representations

- Related software tools like preprocessors, assemblers, loaders, and linkers

Compiler Construction introduction

By Rana Ehtisham Ul Haq. The document discusses the basics of compiler construction. It begins by defining key terms like compilers, source and target languages. It then describes the main phases of compilation as lexical analysis, syntax analysis, semantic analysis, intermediate code generation, code optimization and machine code generation. It also discusses symbol tables, compiler tools and generations of programming languages.

Lexical analyzer generator lex

By Anusuya123. LEX is a tool that allows users to specify a lexical analyzer by defining patterns for tokens using regular expressions. The LEX compiler transforms these patterns into a transition diagram and generates C code. It takes a LEX source program as input and compiles it to produce lex.yy.c, which is then compiled with a C compiler to generate an executable that takes an input stream and returns a sequence of tokens. LEX programs have declarations, translation rules that map patterns to actions, and optional auxiliary functions. The actions are fragments of C code that execute when a pattern is matched.

Error detection recovery

By Tech_MX. The document discusses error detection and recovery in compilers. It describes how compilers should detect various types of errors and attempt to recover from them to continue processing the program. It covers lexical, syntactic and semantic errors and different strategies compilers can use for error recovery like insertion, deletion or replacement of tokens. It also discusses properties of good error reporting and handling shift-reduce conflicts.

Type checking compiler construction Chapter #6

By Daniyal Mughal. The document discusses type checking in programming languages. It defines type checking as verifying that each operation respects the language's type system, ensuring operands are of appropriate types and number. The document outlines the type checking process, including identifying available types and language constructs with types. It also discusses static and dynamic type checking, type systems, type expressions, type conversion, coercions, overloaded functions, and polymorphic functions.

Introduction to Compiler Construction

By Sarmad Ali. A compiler translates a program written in a source language into a semantically equivalent program written in a target language. It also reports to its users the presence of errors in the source program.

Syntax Analysis in Compiler Design

By MAHASREEM. Syntax analysis is the second phase of compilation, after lexical analysis. The parser checks whether the input string follows the rules and structure of the formal grammar and builds a parse tree to represent its syntactic structure. If the input string can be derived from the start symbol using the grammar's productions, it is syntactically correct; otherwise, an error is reported. Parsers use error-recovery strategies such as panic mode, phrase-level recovery, and global correction to handle syntax errors and continue parsing. Context-free grammars, whose productions define the syntax rules, are commonly used. Derivations show the step-by-step application of productions to generate the input string from the start symbol.

Compiler design

By Thakur Ganeshsingh Thakur. This document discusses compiler design and how compilers work. It begins with prerequisites and definitions of compilers and their origins. It then describes the architecture of compilers, including lexical analysis, parsing, semantic analysis, code optimization, and code generation. It explains how compilers translate high-level code into machine-executable code. In conclusion, it summarizes that compilers translate code without changing its meaning and aim to produce efficient code. References for further reading on compiler design principles are also provided.

Compiler Design

By Mir Majid. This document provides information about the CS416 Compiler Design course, including the instructor details, prerequisites, textbook, grading breakdown, course outline, and an overview of the major parts and phases of a compiler. The course will cover topics such as lexical analysis, syntax analysis using top-down and bottom-up parsing, semantic analysis using attribute grammars, intermediate code generation, code optimization, and code generation.

Syntax analysis

By Akshaya Arunan. The document discusses syntax analysis and parsing. It defines a syntax analyzer as creating the syntactic structure of a source program in the form of a parse tree. A syntax analyzer, also called a parser, checks if a program satisfies the rules of a context-free grammar and produces the parse tree if it does, or error messages otherwise. It describes top-down and bottom-up parsing methods and how parsers use grammars to analyze syntax.

Compiler Design

By Dr. Jaydeep Patil. This presentation covers:

- Introduction to compilers: design issues, passes, phases, symbol table

- Preliminaries: memory management, operating system support for compilers, compiler support for garbage collection

- Lexical analysis: tokens, regular expressions, the process of lexical analysis, block schematic, automatic construction of a lexical analyzer using LEX, LEX features and specification

Syntax Directed Definition and its applications

By ShivanandManjaragi2. A syntax-directed definition (SDD) is a context-free grammar with attributes and semantic rules. Attributes are associated with grammar symbols and rules are associated with productions. An SDD can be evaluated on a parse tree to compute attribute values at each node. There are two types of attributes: synthesized attributes depend on child nodes, while inherited attributes depend on parent or sibling nodes. The order of evaluation is determined by a dependency graph showing the flow of information between attribute instances.
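Evaluating synthesized attributes amounts to a post-order walk of the parse tree. This Python sketch uses a hypothetical desk-calculator SDD (E -> E + T | T, T -> T * F | F, F -> digit, with rules such as E.val = E1.val + T.val); the `Node` class, `evaluate`, and `factor` are illustrative names. Because every attribute here is synthesized, visiting children before their parent already respects the dependency graph.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    symbol: str                                   # grammar symbol labelling the node
    children: List["Node"] = field(default_factory=list)
    lexval: Optional[int] = None                  # lexical value, set on 'digit' leaves only
    val: Optional[int] = None                     # synthesized attribute


def evaluate(node: Node) -> Optional[int]:
    """Compute node.val bottom-up: children first, then the node's own semantic rule."""
    for child in node.children:
        evaluate(child)
    if not node.children:
        # Leaf: a digit token carries its lexical value; '+' and '*' carry none.
        node.val = node.lexval
    elif len(node.children) == 3:
        # E -> E1 + T  { E.val = E1.val + T.val }
        # T -> T1 * F  { T.val = T1.val * F.val }
        left, op, right = node.children
        if op.symbol == "+":
            node.val = left.val + right.val
        else:
            node.val = left.val * right.val
    else:
        # E -> T, T -> F, F -> digit  { copy the single child's val }
        node.val = node.children[0].val
    return node.val


def factor(n: int) -> Node:
    """Build the subtree F -> digit for a single digit n."""
    return Node("F", [Node("digit", lexval=n)])


# Parse tree for 3 * 5 + 4 under the hypothetical grammar.
tree = Node("E", [
    Node("E", [Node("T", [Node("T", [factor(3)]), Node("*"), factor(5)])]),
    Node("+"),
    Node("T", [factor(4)]),
])
print(evaluate(tree))  # 19
```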

Language processing system.pdf

By RakibRahman19. The document provides an introduction to compilers and language processors. It discusses:

- A compiler translates a program written in one language (the source language) into an equivalent program in another language (the target language). Compilers detect and report errors during translation.

- An interpreter appears to directly execute the operations in a source program on supplied inputs, rather than producing a translated target program.

- Programs produced by a compiler usually run faster than the same programs run under an interpreter, while interpreters can provide better error diagnostics because they execute statements one at a time. Java combines compilation and interpretation through bytecode.

- The key differences between compilers and interpreters lie in how they translate programs, whether they generate intermediate code, translation and execution speed, and memory usage.

Compiler design syntax analysis

By Richa Sharma. This document discusses syntax analysis in compiler design. It begins by explaining that the lexer takes a string of characters as input and produces a string of tokens as output, which is then input to the parser. The parser takes the string of tokens and produces a parse tree of the program. Context-free grammars are introduced as a natural way to describe the recursive structure of programming languages. Derivations and parse trees are discussed as ways to parse strings based on a grammar. Issues like ambiguity and left recursion in grammars are covered, along with techniques like left factoring that can be used to transform grammars.
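Eliminating immediate left recursion follows the standard rewrite of A -> A α | β into A -> β A' and A' -> α A' | ε. The Python sketch below applies it to a grammar stored as a dict of alternatives; the representation, the function name, and the convention of naming the new nonterminal with a trailing apostrophe are assumptions. It handles only immediate, not indirect, left recursion.

```python
from typing import Dict, List

# Hypothetical grammar representation: nonterminal -> list of alternatives,
# each alternative a list of symbols; [] stands for an epsilon alternative.
Grammar = Dict[str, List[List[str]]]


def remove_immediate_left_recursion(grammar: Grammar, a: str) -> Grammar:
    """Rewrite A -> A alpha | beta as A -> beta A' and A' -> alpha A' | epsilon."""
    alphas = [alt[1:] for alt in grammar[a] if alt and alt[0] == a]
    betas = [alt for alt in grammar[a] if not alt or alt[0] != a]
    if not alphas:
        return dict(grammar)  # A is not immediately left-recursive
    a_prime = a + "'"  # assumes this name is not already used in the grammar
    result = dict(grammar)  # shallow copy; the input grammar is not modified
    result[a] = [beta + [a_prime] for beta in betas]
    result[a_prime] = [alpha + [a_prime] for alpha in alphas] + [[]]
    return result


g = {"E": [["E", "+", "T"], ["T"]], "T": [["id"]]}
rewritten = remove_immediate_left_recursion(g, "E")
print(rewritten["E"], rewritten["E'"])
```

For E -> E + T | T this produces E -> T E' and E' -> + T E' | ε, a form a top-down parser can use without looping forever.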

Compiler Design Unit 1

By Jena Catherine Bel D. This document provides an overview of compilers, including their history, components, and construction. It discusses the need for compilers to translate high-level programming languages into machine-readable code. The key phases of a compiler are described as scanning, parsing, semantic analysis, intermediate code generation, optimization, and code generation. Compiler construction relies on tools like scanner and parser generators.

Language for specifying lexical Analyzer

By Archana Gopinath. The document discusses the role and process of lexical analysis using LEX. LEX is a tool that generates a lexical analyzer from regular expression rules. A LEX source program consists of auxiliary definitions for tokens and translation rules that match regular expressions to actions. The lexical analyzer created by LEX reads input one character at a time, finds the longest matching prefix, executes the corresponding action, and places the token in a buffer.

Sets and disjoint sets union123

By Ankita Goyal. The document discusses disjoint set data structures and union-find algorithms. Disjoint set data structures track partitions of elements into separate, non-overlapping sets. Union-find algorithms perform two operations on these data structures: find, to determine which set an element belongs to; and union, to combine two sets into a single set. The document describes array-based representations of disjoint sets and algorithms for the union and find operations, including a weighted union algorithm that keeps trees relatively balanced by attaching the smaller tree to the root of the larger tree.
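The weighted union described above can be sketched in Python with parent and size arrays. `DisjointSets` is a hypothetical name, and the sketch deliberately omits path compression to stay close to the description.

```python
from typing import List


class DisjointSets:
    """Array-based disjoint sets over elements 0..n-1 with weighted union."""

    def __init__(self, n: int) -> None:
        self.parent: List[int] = list(range(n))  # every element starts as its own root
        self.size: List[int] = [1] * n           # tree sizes; meaningful at roots only

    def find(self, x: int) -> int:
        """Follow parent links to the root, which names x's set."""
        while self.parent[x] != x:
            x = self.parent[x]
        return x

    def union(self, a: int, b: int) -> bool:
        """Merge the sets containing a and b; return False if already merged."""
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return False
        if self.size[ra] < self.size[rb]:
            ra, rb = rb, ra                      # make ra the root of the larger tree
        self.parent[rb] = ra                     # attach the smaller tree under it
        self.size[ra] += self.size[rb]
        return True
```

With weighted union, a tree holding k elements has height at most log2 k, so `find` runs in O(log n) time even without path compression.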

Code Optimization

By Akhil Kaushik. This document discusses various techniques for optimizing computer code, including:

1. Local optimizations that improve performance within basic blocks, such as constant folding, propagation, and elimination of redundant computations.

2. Global optimizations that analyze control flow across basic blocks, such as common subexpression elimination.

3. Loop optimizations that improve the performance of loops, such as moving loop-invariant computations out of the loop and eliminating induction variables.

4. Machine-dependent optimizations like peephole optimizations that replace instructions with more efficient alternatives.

The goal of optimizations is to improve speed and efficiency while preserving program meaning and correctness. Optimizations can occur at multiple stages of development and compilation.
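Constant folding, the first local optimization listed, can be sketched over a tiny expression tree. The tuple representation `(op, left, right)`, with integers for constants and strings for variables, is an assumption for illustration; only `+`, `-` and `*` are supported, so folding can never fail with a division by zero.

```python
import operator
from typing import Tuple, Union

# Hypothetical expression tree: an int is a constant, a str is a variable,
# and a tuple (op, left, right) is a binary operation.
Expr = Union[int, str, Tuple[str, "Expr", "Expr"]]

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fold(e: Expr) -> Expr:
    """Replace every operation whose operands are both constants by its value."""
    if not isinstance(e, tuple):
        return e  # constants and variables are left unchanged
    op, left, right = e
    left, right = fold(left), fold(right)  # fold subtrees first (bottom-up)
    if isinstance(left, int) and isinstance(right, int):
        return OPS[op](left, right)
    return (op, left, right)


print(fold(("+", ("*", 2, 3), "x")))  # ('+', 6, 'x')
```

The sketch does not reassociate, so `("+", ("+", "x", 2), 3)` is left as is; folding that into `x + 5` would need an additional algebraic rewrite.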

1.8. equivalence of finite automaton and regular expressions

By Sampath Kumar S. This document discusses Thompson's construction algorithm for converting regular expressions to equivalent nondeterministic finite automata (NFAs). It explains the rules for converting the operators in regular expressions, such as empty strings, symbols, union, concatenation, and Kleene star, into NFA transitions and states. Examples of converting regular expressions to NFAs are provided for practice. Thompson's construction allows regular expressions to be matched against strings using the resulting NFA.
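Thompson's construction can be sketched in Python as follows. To keep the example short, the regular expression is given as a hypothetical tuple-based syntax tree instead of being parsed from text, and concatenation links the two fragments with an ε-edge rather than merging states (an equivalent, common variant). Matching simulates the NFA by tracking the ε-closure of the set of current states.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical regex syntax tree:
#   ("sym", c) | ("eps",) | ("cat", r, s) | ("alt", r, s) | ("star", r)
Regex = tuple


class NFA:
    def __init__(self) -> None:
        # edges[state] = list of (label, target); label None is an epsilon move
        self.edges: Dict[int, List[Tuple[Optional[str], int]]] = {}

    def new_state(self) -> int:
        s = len(self.edges)
        self.edges[s] = []
        return s

    def add(self, src: int, label: Optional[str], dst: int) -> None:
        self.edges[src].append((label, dst))


def build(nfa: NFA, r: Regex) -> Tuple[int, int]:
    """Thompson's construction: return (start, accept) of the fragment for r."""
    kind = r[0]
    if kind in ("sym", "eps"):
        start, accept = nfa.new_state(), nfa.new_state()
        nfa.add(start, r[1] if kind == "sym" else None, accept)
        return start, accept
    if kind == "cat":
        s1, a1 = build(nfa, r[1])
        s2, a2 = build(nfa, r[2])
        nfa.add(a1, None, s2)          # link the fragments with an epsilon edge
        return s1, a2
    if kind == "alt":
        start, accept = nfa.new_state(), nfa.new_state()
        for sub in (r[1], r[2]):
            s, a = build(nfa, sub)
            nfa.add(start, None, s)
            nfa.add(a, None, accept)
        return start, accept
    if kind == "star":
        start, accept = nfa.new_state(), nfa.new_state()
        s, a = build(nfa, r[1])
        nfa.add(start, None, s)        # enter the loop
        nfa.add(start, None, accept)   # or skip it (zero repetitions)
        nfa.add(a, None, s)            # repeat
        nfa.add(a, None, accept)       # or leave
        return start, accept
    raise ValueError(f"unknown regex node {kind!r}")


def epsilon_closure(nfa: NFA, states: Set[int]) -> Set[int]:
    """All states reachable from `states` using epsilon moves only."""
    closure, stack = set(states), list(states)
    while stack:
        for label, t in nfa.edges[stack.pop()]:
            if label is None and t not in closure:
                closure.add(t)
                stack.append(t)
    return closure


def matches(r: Regex, text: str) -> bool:
    """Simulate the Thompson NFA for r on the whole of text."""
    nfa = NFA()
    start, accept = build(nfa, r)
    current = epsilon_closure(nfa, {start})
    for ch in text:
        moved = {t for s in current for label, t in nfa.edges[s] if label == ch}
        current = epsilon_closure(nfa, moved)
    return accept in current


# (a|b)*ab
AB = ("cat", ("star", ("alt", ("sym", "a"), ("sym", "b"))), ("cat", ("sym", "a"), ("sym", "b")))
```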

Regular Expressions To Finite Automata

By International Institute of Information Technology (I²IT). It is a well-established fact that each regular expression can be transformed into a nondeterministic finite automaton.

Module4 lex and yacc.ppt

By ProddaturNagaVenkata. Lex and Yacc are compiler-writing tools that are used to specify lexical tokens and context-free grammars. Lex is used to specify lexical tokens and their processing order, while Yacc is used to specify context-free grammars for LALR(1) parsing. Both tools have a long history in computing and were originally developed in the early days of Unix on minicomputers. Lex reads a specification of lexical patterns and actions and generates a C program that implements a lexical analyzer. The generated C program defines a function called yylex() that scans the input and returns the recognized tokens.

Compiler Design_Lexical Analysis phase.pptx

By RushaliDeshmukh2. Topics:

- The role of the lexical analyzer

- Specification of tokens

- Finite state machines

- From a regular expression to an NFA

- Convert NFA to DFA

- Transforming grammars and regular expressions

- Transforming automata to grammars

- Language for specifying lexical analyzers

Similar to Lexical Analyzer Implementation

Ch 2.pptx

By woldu2. This document provides an overview of lexical analysis, which is the first phase of a compiler. It discusses what lexical analysis involves, defines important terms like tokens and lexemes, and describes how regular expressions are used to specify patterns for token recognition. Finite state automata are used to implement the recognition of tokens from patterns. The document also introduces Lexical Analyzer Generator (Lex) as a tool for automatically generating scanners/lexers from token specifications written as regular expressions. Key aspects of the Lex tool like its structure and use of rules/actions are outlined.

Compier Design_Unit I_SRM.ppt

By Apoorv Diwan. This document provides an overview of a compiler design course, including prerequisites, textbook, course outline, and introductions to key compiler concepts. The course outline covers topics such as lexical analysis, syntax analysis, parsing techniques, semantic analysis, intermediate code generation, code optimization, and code generation. Compiler design involves translating a program from a source language to a target language. Key phases of compilation include lexical analysis, syntax analysis, semantic analysis, intermediate code generation, code optimization, and code generation. Parsing techniques can be top-down or bottom-up.

LexicalAnalysis in Compiler design .pt

LexicalAnalysis in Compiler design .ptSannidhanapuharika Lexical analysis is a process that breaks down a text into tokens, which are the smallest units of code that have meaning. It is a fundamental step in natural language processing (NLP) and is also the first phase of a compiler.

Compiler Design

Compiler DesignAnujashejwal The document defines different phases of a compiler and describes Lexical Analysis in detail. It discusses:

1) A compiler converts a high-level language to machine language through front-end and back-end phases including Lexical Analysis, Syntax Analysis, Semantic Analysis, Intermediate Code Generation, Code Optimization and Code Generation.

2) Lexical Analysis scans the source code and groups characters into tokens by removing whitespace and comments. It identifies tokens like identifiers, keywords, operators etc.

3) A lexical analyzer generator like Lex takes a program written in the Lex language and produces a C program that acts as a lexical analyzer.

CD UNIT-1.3 LEX PPT.pptx

CD UNIT-1.3 LEX PPT.pptxVamsiReddyHere The document discusses compiler construction tools and lexical analysis. It provides details about Lex, a tool that generates lexical analyzers from regular expressions. Lex takes a source program written in the Lex language and generates a C program that implements a lexical analyzer as a finite state machine. The generated analyzer reads an input stream and returns a stream of recognized tokens.

Lexicalanalyzer

LexicalanalyzerRoyalzig Luxury Furniture - Lexical analyzer reads source program character by character to produce tokens. It returns tokens to the parser one by one as requested.

- A token represents a set of strings defined by a pattern and has a type and attribute to uniquely identify a lexeme. Regular expressions are used to specify patterns for tokens.

- A finite automaton can be used as a lexical analyzer to recognize tokens. Non-deterministic finite automata (NFA) and deterministic finite automata (DFA) are commonly used, with DFA being more efficient for implementation. Regular expressions for tokens are first converted to NFA and then to DFA.

Lexicalanalyzer

LexicalanalyzerRoyalzig Luxury Furniture - Lexical analyzer reads source program character by character to produce tokens. It returns tokens to the parser one by one as requested.

- A token represents a set of strings defined by a pattern and has a type and attribute to uniquely identify a lexeme. Regular expressions are used to specify patterns for tokens.

- A finite automaton can be used as a lexical analyzer to recognize tokens. Non-deterministic finite automata (NFA) and deterministic finite automata (DFA) are commonly used, with DFA being more efficient for implementation. Regular expressions for tokens are first converted to NFA and then to DFA.

11700220036.pdf

11700220036.pdfSouvikRoy149 The document discusses the differences between compilers and interpreters. It states that a compiler translates an entire program into machine code in one pass, while an interpreter translates and executes code line by line. A compiler is generally faster than an interpreter, but is more complex. The document also provides an overview of the lexical analysis phase of compiling, including how it breaks source code into tokens, creates a symbol table, and identifies patterns in lexemes.

Lexical analysis - Compiler Design

Lexical analysis - Compiler DesignKuppusamy P Role of Lexical Analyzer, Specify the tokens, Recognize the tokens, Lexical analyzer generator, Finite automata,

Design of lexical analyzer generator

Regular Expression to Finite Automata

Regular Expression to Finite AutomataArchana Gopinath The document provides information about regular expressions and finite automata. It discusses how regular expressions are used to describe programming language tokens. It explains how regular expressions map to languages and the basic operations used to build regular expressions like concatenation, alternation, and Kleene closure. The document also discusses deterministic finite automata (DFAs), non-deterministic finite automata (NFAs), and algorithms for converting regular expressions to NFAs and DFAs. It covers minimizing DFAs and using finite automata for lexical analysis in scanners.

Compier Design_Unit I.ppt

Compier Design_Unit I.pptsivaganesh293 The document discusses the phases of a compiler and their functions. It describes:

1) Lexical analysis converts the source code to tokens by recognizing patterns in the input. It identifies tokens like identifiers, keywords, and numbers.

2) Syntax analysis/parsing checks that tokens are arranged according to grammar rules by constructing a parse tree.

3) Semantic analysis validates the program semantics and performs type checking using the parse tree and symbol table.

Compier Design_Unit I.ppt

Compier Design_Unit I.pptsivaganesh293 The document discusses the phases of a compiler:

1) Lexical analysis scans the source code and converts it to tokens which are passed to the syntax analyzer.

2) Syntax analysis/parsing checks the token arrangements against the language grammar and generates a parse tree.

3) Semantic analysis checks that the parse tree follows the language rules by using the syntax tree and symbol table, performing type checking.

4) Intermediate code generation represents the program for an abstract machine in a machine-independent form like 3-address code.

Lexical

Lexicalbaran19901990 The document discusses lexical analysis, which is the first stage of syntax analysis for programming languages. It covers terminology, using finite automata and regular expressions to describe tokens, and how lexical analyzers work. Lexical analyzers extract lexemes from source code and return tokens to the parser. They are often implemented using finite state machines generated from regular grammar descriptions of the lexical patterns in a language.

Unit2 Toc.pptx

Unit2 Toc.pptxviswanath kani This document discusses regular languages and finite automata. It begins by defining regular languages and expressions, and describing the equivalence between non-deterministic finite automata (NFAs) and deterministic finite automata (DFAs). It then discusses converting between regular expressions, NFAs with epsilon transitions, NFAs without epsilon transitions, and DFAs. The document provides examples of regular expressions and conversions between different representations. It concludes by describing the state elimination, formula, and Arden's methods for converting a DFA to a regular expression.

Pcd question bank

Pcd question bank Sumathi Gnanasekaran This document describes the syllabus for the course CS2352 Principles of Compiler Design. It includes 5 units covering lexical analysis, syntax analysis, intermediate code generation, code generation, and code optimization. The objectives of the course are to understand and implement a lexical analyzer, parser, code generation schemes, and optimization techniques. It lists a textbook and references for the course and provides a brief description of the topics to be covered in each unit.

Compiler design Project

Compiler design ProjectDushyantSharma146 The document discusses a compiler design project to convert BNF rules into YACC form and generate an abstract syntax tree. It provides background information on BNF notation and its use in compiler design. It also describes the input and output files used in YACC, including definition, rule, and auxiliary routine parts of the input file and y.tab.c and y.tab.h output files. The document concludes with an overview of syntax directed translation in compiler design for carrying out semantic analysis by augmenting the grammar with attributes.

Ch3.ppt

Ch3.pptMDSayem35 The document discusses lexical analysis and lexical analyzer generators. It begins by explaining that lexical analysis separates a program into tokens, which simplifies parser design and implementation. It then covers topics like token attributes, patterns and lexemes, regular expressions for specifying patterns, converting regular expressions to nondeterministic finite automata (NFAs) and then deterministic finite automata (DFAs). The document provides examples and algorithms for these conversions to generate a lexical analyzer from token specifications.

Ad



Lexical Analyzer Implementation

- 1. Akhil Kaushik, Asstt. Prof., CE Deptt., TIT Bhiwani: Lexical Analysis - Implementation

- 3. Tokens

- 4. Tokens

- 5. Tokens -> RE • Specification of Tokens: The patterns corresponding to a token are generally specified using a compact notation known as regular expressions. • Regular expressions over an alphabet Σ are built by combining members of that alphabet with operators such as union, concatenation and closure. • A regular expression r denotes a set of strings L(r); L(r) is called a regular set or regular language, and it may be infinite.

- 6. RegEx A regular expression is defined as follows:- • The basic regular expression a denotes the set {a}, where a ∈ Σ; that is, L(a) = {a}. • The regular expression ε denotes the set {ε}. • If r and s are regular expressions denoting the sets L(r) and L(s), then: (r) | (s) denotes L(r) ∪ L(s); (r)(s) denotes L(r)L(s); (r)* denotes (L(r))*; and (r) denotes L(r).

- 7. RegEx

- 8. RegEx
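The inductive definition above maps directly onto a recursive function. The sketch below is illustrative only, not the author's method: it represents a regular expression as a small tree of the five cases (symbol, ε, union, concatenation, star) and decides whether s ∈ L(r) by tracking the *set* of input positions reachable after matching each subexpression. The names `Re`, `step`, and `in_language` are hypothetical, and positions are stored as bits of a 64-bit word, so this assumes strings shorter than 64 characters.

```c
#include <stdint.h>
#include <string.h>

/* Node kinds mirror the definition: a, ε, (r)|(s), (r)(s), (r)*. */
typedef enum { SYM, EPS, ALT, CAT, STAR } Kind;

typedef struct Re {
    Kind kind;
    char sym;                 /* used only when kind == SYM */
    const struct Re *l, *r;   /* children; STAR uses only l */
} Re;

/* Given the set of start positions `starts` (bit i = position i in s),
   return the set of positions reachable after matching re.
   Assumes strlen(s) < 64 so every position fits in one 64-bit word. */
static uint64_t step(const Re *re, const char *s, size_t n, uint64_t starts) {
    uint64_t out = 0, cur, next;
    switch (re->kind) {
    case SYM:                                   /* L(a) = {a} */
        for (size_t i = 0; i < n; i++)
            if ((starts >> i & 1) && s[i] == re->sym)
                out |= (uint64_t)1 << (i + 1);
        return out;
    case EPS:                                   /* L(ε) = {ε}: consume nothing */
        return starts;
    case ALT:                                   /* L(r) ∪ L(s) */
        return step(re->l, s, n, starts) | step(re->r, s, n, starts);
    case CAT:                                   /* L(r)L(s) */
        return step(re->r, s, n, step(re->l, s, n, starts));
    case STAR:                                  /* (L(r))*: iterate to a fixed point */
        cur = starts;
        for (;;) {
            next = cur | step(re->l, s, n, cur);
            if (next == cur) return cur;
            cur = next;
        }
    }
    return 0;
}

/* s ∈ L(re) iff matching re from position 0 can end exactly at position n.
   Returns 1 or 0, or -1 if s is too long for this sketch. */
int in_language(const Re *re, const char *s) {
    size_t n = strlen(s);
    if (n >= 64) return -1;
    return (int)(step(re, s, n, 1) >> n & 1);
}
```

For example, building the tree for (a|b)*abb from `Re` nodes, `in_language` accepts "abb" and "aababb" but rejects "ab" and the empty string. The star case terminates because the position set only grows and is bounded by the string length.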

- 9. Token Recognition • Finite automata are recognizers that can identify the tokens occurring in the input stream. • A finite automaton (FA) consists of: – a finite set of states – a set of transitions (or moves) between states, each labelled with an input symbol (or ε in an NFA) – a special start state – a set of final, or accepting, states
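As a concrete illustration of these four components, the following sketch encodes a deterministic finite automaton for identifiers, letter (letter | digit)*, as a transition table. It is a hypothetical hand-written example, not code generated by Lex: the state names, the three character classes, and the extra dead state (used to make the transition function total) are assumptions of this sketch.

```c
#include <ctype.h>

/* Character classes (table columns). */
enum { C_LETTER, C_DIGIT, C_OTHER, NCLASS };
/* States (table rows): start, inside an identifier (accepting), dead. */
enum { S_START, S_IDENT, S_DEAD, NSTATE };

/* Transition function delta[state][class]. */
static const int delta[NSTATE][NCLASS] = {
    /*            letter   digit    other  */
    [S_START] = { S_IDENT, S_DEAD,  S_DEAD },
    [S_IDENT] = { S_IDENT, S_IDENT, S_DEAD },
    [S_DEAD]  = { S_DEAD,  S_DEAD,  S_DEAD },
};

/* Set of final (accepting) states. */
static const int accepting[NSTATE] = { [S_IDENT] = 1 };

static int char_class(unsigned char c) {
    if (isalpha(c)) return C_LETTER;
    if (isdigit(c)) return C_DIGIT;
    return C_OTHER;
}

/* Run the DFA from the start state over the whole string and
   accept iff it halts in a final state. */
int is_identifier(const char *s) {
    int state = S_START;
    for (; *s; s++)
        state = delta[state][char_class((unsigned char)*s)];
    return accepting[state];
}
```

With this table, "x1" and "count" are accepted while "1x", "a_b", and the empty string are rejected. Keeping the automaton in a table rather than in nested `if` statements is what makes it practical for a tool to generate.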

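- 14. Input Buffering • Buffer Pairs: Because of the time taken to process characters and the large number of characters that must be processed, specialized buffering techniques have been developed to reduce the overhead required to process a single input character. • The buffer is split into two halves of N characters each, which are reloaded alternately; a sentinel character placed at the end of each half lets a single test detect both the end of a half and the end of the input.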
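A minimal sketch of a buffer pair with sentinels follows. It is an assumption-laden illustration rather than the author's code: `BufferPair`, `bp_init`, and `bp_next` are hypothetical names, the NUL character is used as the sentinel (so the input is assumed never to contain a NUL byte), and the half size `HALF` is kept tiny so that reloads actually happen on short inputs.

```c
#include <stdio.h>

#define HALF 4          /* chars per half; real scanners use a disk block or more */
#define SENTINEL '\0'   /* assumption: the input never contains a NUL byte */

typedef struct {
    char buf[2 * HALF + 2];  /* [half 1][sentinel][half 2][sentinel] */
    char *forward;           /* next character to hand out */
    FILE *in;
} BufferPair;

/* Fill one half and terminate it with the sentinel.
   A short read leaves the sentinel inside the half, marking end of input. */
static void load(BufferPair *b, char *half) {
    size_t got = fread(half, 1, HALF, b->in);
    half[got] = SENTINEL;
}

void bp_init(BufferPair *b, FILE *in) {
    b->in = in;
    load(b, b->buf);
    b->forward = b->buf;
}

/* Return the next input character, or EOF. In the common case only one
   comparison (against the sentinel) is made per character. */
int bp_next(BufferPair *b) {
    for (;;) {
        char c = *b->forward;
        if (c != SENTINEL) {
            b->forward++;
            return (unsigned char)c;
        }
        if (b->forward == b->buf + HALF) {                 /* end of first half */
            load(b, b->buf + HALF + 1);
            b->forward = b->buf + HALF + 1;
        } else if (b->forward == b->buf + 2 * HALF + 1) {  /* end of second half */
            load(b, b->buf);
            b->forward = b->buf;
        } else {
            return EOF;                                    /* sentinel inside a half */
        }
    }
}
```

Reading "hello, world" through this buffer yields the same 12 characters, with the halves reloaded three times along the way. A real scanner also keeps a lexeme-begin pointer and must not reload the half that pointer is still in; that bookkeeping is omitted here, so this sketch cannot protect lexemes longer than one half.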

- 15. Language to Specify LA • Lex allows one to specify a lexical analyzer by writing regular expressions that describe the patterns for tokens. • The input notation for the Lex tool is referred to as the Lex language, and the tool itself is the Lex compiler. • Behind the scenes, the Lex compiler transforms the input patterns into a transition diagram and generates code, in a file called lex.yy.c, that simulates this transition diagram.

- 16. Language to Specify LA • Creating LA with Lex:-

- 17. Language to Specify LA In simple words:- • Lex converts a Lex source program into a lexical analyzer. • The lexical analyzer converts the input stream into tokens.

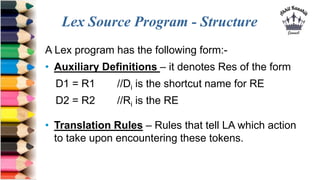

- 18. Lex Source Program - Structure A Lex program has the following form:- • Auxiliary Definitions: regular definitions of the form D1 = R1, D2 = R2, …, where each Di is a shorthand name for the regular expression Ri. • Translation Rules: rules that tell the LA which action to take upon encountering each token.

- 19. Lex Source Program - Structure Auxiliary Definitions:- • D1 (letter) = A | B | C | … | Z | a | b | … | z (R1) • D2 (digit) = 0 | 1 | 2 | … | 9 (R2) • D3 (identifier) = letter (letter | digit)* • D4 (integer) = digit+ • D5 (sign) = + | - • D6 (signed-integer) = sign integer
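To show what these regular definitions mean operationally, the sketch below hand-codes each one as a C function that returns the length of the longest prefix of its input matching that definition (0 for no match). This is only an illustration of the definitions as written on the slide, in particular D6 requires a sign; the function names are hypothetical and are not part of Lex.

```c
#include <ctype.h>
#include <stddef.h>

/* D1 and D2 as character predicates. */
static int is_letter(char c) { return isalpha((unsigned char)c) != 0; }
static int is_digit(char c)  { return isdigit((unsigned char)c) != 0; }

/* D3: identifier = letter (letter | digit)* */
size_t match_identifier(const char *s) {
    if (!is_letter(s[0])) return 0;
    size_t n = 1;
    while (is_letter(s[n]) || is_digit(s[n])) n++;
    return n;
}

/* D4: integer = digit+  (returns 0 if there is no digit) */
size_t match_integer(const char *s) {
    size_t n = 0;
    while (is_digit(s[n])) n++;
    return n;
}

/* D6: signed-integer = sign integer, with D5: sign = + | - */
size_t match_signed_integer(const char *s) {
    if (s[0] != '+' && s[0] != '-') return 0;
    size_t n = match_integer(s + 1);
    return n ? n + 1 : 0;   /* a lone sign is not a signed integer */
}
```

For instance, `match_identifier("abc12+x")` returns 5 and `match_signed_integer("-42x")` returns 3, while `match_signed_integer("42")` returns 0 because D6 as defined requires the sign. Note how D6 reuses D4 exactly as the regular definition reuses the name `integer`.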

- 20. Lex Source Program - Structure Translation Rules:- • Each translation rule has the form Pi {Actioni}, where Actioni is the code executed when pattern Pi matches. • Each pattern is a regular expression, which may use the regular definitions of the declaration section. • The actions are fragments of code, typically written in C. • Ex: for 'keyword' -> begin {return 1;} • Ex: for 'variable' -> identifier {install(); return 6;}
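The rule form Pi {Actioni} can be mimicked in plain C as a table of (pattern, action) pairs. The sketch below reproduces the two example rules from the slide (begin returns 1; identifier calls install() and returns 6). Everything else is assumed for illustration: `install` here is a hypothetical toy symbol table, patterns are hand-written prefix matchers instead of compiled regular expressions, and the selection policy (longest match, ties going to the rule listed first) follows how Lex resolves competing rules.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical symbol table used by install(). */
static char symtab[16][32];
static int nsyms = 0;

static void install(const char *lexeme, size_t len) {
    if (nsyms < 16 && len < 32) {
        memcpy(symtab[nsyms], lexeme, len);
        symtab[nsyms][len] = '\0';
        nsyms++;
    }
}

/* Pattern: returns the length of the matched prefix, 0 if no match. */
typedef size_t (*Pattern)(const char *s);
/* Action: receives the lexeme and returns the token code. */
typedef int (*Action)(const char *lexeme, size_t len);

static size_t pat_begin(const char *s) { return strncmp(s, "begin", 5) == 0 ? 5 : 0; }
static size_t pat_ident(const char *s) {          /* letter (letter | digit)* */
    if (!isalpha((unsigned char)s[0])) return 0;
    size_t n = 1;
    while (isalnum((unsigned char)s[n])) n++;
    return n;
}

/* begin      {return 1;} */
static int act_begin(const char *l, size_t n) { (void)l; (void)n; return 1; }
/* identifier {install(); return 6;} */
static int act_ident(const char *l, size_t n) { install(l, n); return 6; }

/* Rules in the order they appear in the Lex program. */
static const struct { Pattern p; Action a; } rules[] = {
    { pat_begin, act_begin },
    { pat_ident, act_ident },
};

/* Pick the rule with the longest match (strict '>' keeps the earlier rule on
   ties), run its action, and return the token code; -1 if nothing matches. */
int apply_rules(const char *s, size_t *len) {
    size_t best = 0, best_rule = 0;
    for (size_t i = 0; i < sizeof rules / sizeof rules[0]; i++) {
        size_t n = rules[i].p(s);
        if (n > best) { best = n; best_rule = i; }
    }
    *len = best;
    return best ? rules[best_rule].a(s, best) : -1;
}
```

On "begin x" both patterns match five characters, so the earlier keyword rule wins and returns 1; on "beginner" the identifier rule matches more characters, so the lexeme is installed and 6 is returned.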

- 21. Lex Source Program - Structure

- 22. Lex Source Program - Structure

- 23. Lex Source Program - Structure

- 24. Implementation of LA • Lex takes a Lex program as input and generates an LA as output. • A Lex program is a collection of patterns (REs) and their corresponding actions. • The patterns represent the tokens to be recognized by the generated LA. • For each pattern, a corresponding NFA is constructed.

- 25. Implementation of LA • For n patterns there are n NFAs. • A new start state is added and connected by ε-transitions to the start state of every NFA, combining them into a single NFA. • A final state of the i-th NFA indicates that it has found its own token Pi. • The combined NFA is converted into a DFA. • A final state of the DFA indicates which token has been found.

- 26. Implementation of LA • If none of the DFA states reached while reading the input contains a final state of any NFA, no pattern matches and an error is reported. • If a final DFA state contains final states of more than one NFA, the pattern that appears first in the translation rules has priority.
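The steps on the last two slides can be sketched for the two example patterns P1 = begin and P2 = letter (letter | digit)*. In this hypothetical illustration, a new start state 0 has ε-edges to the start of each pattern's NFA, and instead of building the whole DFA table in advance, the sketch performs the subset construction on the fly: each set of NFA states it visits is one DFA state. It keeps reading while the set is non-empty, remembers the last point where the set contained an NFA final state (longest match), breaks ties by the lower pattern index (the rule listed first), and reports an error (returns 0) if no final state is ever reached. The state numbering, labels, and function names are assumptions of this sketch.

```c
#include <ctype.h>
#include <stddef.h>
#include <stdint.h>

enum { NSTATES = 9 };
enum { NONE, LIT, LETTER, ALNUM };   /* edge label kinds */

typedef struct {
    int kind; char ch;  /* label of the single outgoing edge (LIT uses ch) */
    int to;             /* target of that edge */
    int eps[2];         /* ε-successors, -1 if unused */
    int pattern;        /* i if this is the final state of Pi, else 0 */
} NState;

/* Combined NFA: P1 = begin (states 1..6), P2 = letter(letter|digit)* (7..8). */
static const NState nfa[NSTATES] = {
    /* 0 */ { NONE,   0,  0, { 1,  7}, 0 },   /* new start state */
    /* 1 */ { LIT,   'b', 2, {-1, -1}, 0 },
    /* 2 */ { LIT,   'e', 3, {-1, -1}, 0 },
    /* 3 */ { LIT,   'g', 4, {-1, -1}, 0 },
    /* 4 */ { LIT,   'i', 5, {-1, -1}, 0 },
    /* 5 */ { LIT,   'n', 6, {-1, -1}, 0 },
    /* 6 */ { NONE,   0,  0, {-1, -1}, 1 },   /* final for P1 */
    /* 7 */ { LETTER, 0,  8, {-1, -1}, 0 },
    /* 8 */ { ALNUM,  0,  8, {-1, -1}, 2 },   /* final for P2 */
};

static int edge_ok(const NState *st, unsigned char c) {
    switch (st->kind) {
    case LIT:    return c == (unsigned char)st->ch;
    case LETTER: return isalpha(c) != 0;
    case ALNUM:  return isalnum(c) != 0;
    default:     return 0;
    }
}

/* ε-closure of a set of NFA states (bit i = state i). */
static uint32_t closure(uint32_t set) {
    uint32_t prev;
    do {
        prev = set;
        for (int i = 0; i < NSTATES; i++)
            if (set >> i & 1)
                for (int k = 0; k < 2; k++)
                    if (nfa[i].eps[k] >= 0) set |= 1u << nfa[i].eps[k];
    } while (set != prev);
    return set;
}

/* States reachable from `set` on one edge labelled c. */
static uint32_t nfa_move(uint32_t set, unsigned char c) {
    uint32_t out = 0;
    for (int i = 0; i < NSTATES; i++)
        if ((set >> i & 1) && edge_ok(&nfa[i], c)) out |= 1u << nfa[i].to;
    return out;
}

/* Lowest-numbered pattern with a final state in `set` (first rule wins), 0 if none. */
static int best_pattern(uint32_t set) {
    int best = 0;
    for (int i = 0; i < NSTATES; i++)
        if ((set >> i & 1) && nfa[i].pattern && (!best || nfa[i].pattern < best))
            best = nfa[i].pattern;
    return best;
}

/* Recognize one token at s. Returns the pattern index of the longest match
   and stores its length in *len, or returns 0 (error) if nothing matches. */
int next_token(const char *s, size_t *len) {
    uint32_t set = closure(1u << 0);
    int found = 0;
    *len = 0;
    for (size_t i = 0; set != 0; i++) {
        int p = best_pattern(set);
        if (p) { found = p; *len = i; }   /* remember the last accepting point */
        if (s[i] == '\0') break;
        set = closure(nfa_move(set, (unsigned char)s[i]));
    }
    return found;
}
```

After reading "begin", the current set {6, 8} contains final states of both NFAs, so P1 is reported because it comes first; on "beginner" scanning continues past that point and P2 wins as the longer match, and "42" reaches no final state, which is the error case. A generator such as Lex would instead precompute these sets into a DFA table, but the decisions made at each state are the same.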

- 27. CONTACT ME AT: Akhil Kaushik, [email protected], 9416910303. THANK YOU !!!