Linked Data Spaces, Data Portability & Access

A presentation on data access and portability through personally controlled points of presence on Linked Data networks, called Data Spaces.

Recommended

Solving Real Problems Using Linked Data

By Kingsley Uyi Idehen. How Linked Data provides a federated, platform-independent solution to challenges associated with:

1. Identity

2. Data Access & Integration

3. Precision Find.

R2 microsoft ado.net data services datasheet

By Klaudiia Jacome. Microsoft ADO.NET Data Services enables the creation of REST-based data services that can expose data from any source and be consumed by rich internet applications. It provides libraries and client components to quickly build data services that present data using a uniform URI syntax and standard HTTP operations. These data services can then power interactive experiences when combined with technologies like AJAX and Silverlight.

Enterprise Information Integration

By Sharbani Bhattacharya. Enterprise Information Integration (EII) is a process that provides a single interface and data representation to make heterogeneous data sources appear as a single homogeneous source. EII faces challenges because data can be stored in various formats across different systems. Technologies like ADO.NET and JDBC help access these different data sources. EII architecture supports various data sources, SQL queries across sources, and views of integrated data. EII aims to integrate both structured and unstructured enterprise information for uses like reporting, data warehousing, and applications. Commercial tools like WebSphere Studio and WebSphere Information Integrator provide EII capabilities.

Data Federation/EII Uses And Abuses

By mark madsen. As the need for "right-time" information becomes more prevalent, organizations are looking to new information delivery methods such as Enterprise Information Integration (EII). It's more than a distributed query, but where do vendors over-promise and under-deliver? This presentation will explain EII, how it's different from other integration technologies, and the underlying mechanisms. The goal is to outline areas where EII is a good fit and areas where other tools may be more appropriate.

CIS14: Is the Cloud Ready for Enterprise Identity and Security Requirements?

By CloudIDSummit. The cloud provides scalability and flexibility but also poses security challenges for enterprises with strict requirements. The presentation discusses security needs such as privacy, compliance, authentication, authorization, and access controls. Advanced techniques such as attribute-based access control policies and metadata tagging are needed to enable fine-grained security. Standards-based solutions can help meet enterprise needs and facilitate secure collaboration while enabling migration of workloads to the cloud.

Common Data Service – A Business Database!

By Pedro Azevedo. In this session I tried to explain to the SQL community what Common Data Service is: a new database, or only a service that lets power users create applications.

Basic concepts of xml

By HelpWithAssignment.com. This presentation covers the basic concepts of XML (eXtensible Markup Language) and explains why they matter. XML is one of the most popular markup languages today.

Writing Code To Interact With Enterprise Search

By Corey Roth. Slides from my talk at SharePoint Saturday Ozarks 2009 about writing code to interact with MOSS Enterprise Search.

Introduction To Enterprise Search Tulsa Tech Fest 2009

By Corey Roth. This document introduces enterprise search and its key components. It discusses how enterprise search allows indexing and querying of documents from multiple sources within an organization. The main components covered are content sources, crawled properties, managed properties, scopes, and the search center. The document also outlines some drawbacks of earlier enterprise search offerings and new features in SharePoint 2010 and FAST Search Server 2010.

Microsoft Entity Framework

By Mahmoud Tolba. Microsoft Entity Framework is an object-relational mapper that allows developers to work with relational data as domain-specific objects, and provides automated CRUD operations. It supports various databases and provides a rich query capability through LINQ. Compared to LINQ to SQL, Entity Framework has a full provider model, supports multiple modeling techniques, and receives continuing support. The Entity Framework architecture includes components like the entity data model, LINQ to Entities, Entity SQL, and ADO.NET data providers. Code First allows defining models and mapping directly through code.

Data Services Platform - FiveSdigital

By Five Splash Infotech Pvt. Ltd. Fivesdigital provides a Data Services Platform for accessing centralized data in a single database or multiple databases, with the ability to segment the user base by any attributes ingested in the platform.

Document management and collaboration system

By Som Imaging Informatics Pvt. Ltd. i-doc is an enterprise-grade document management system for searching, managing, viewing, sharing, and archiving large volumes of documents and data secured in digital format in a unified web- and cloud-based repository. It addresses every stage of the document life cycle with features such as rights-based access control, versioning, retrieval, document auditing, workflow creation, and collaboration. It aims to minimize the hassles of hard-copy management in an office environment, with an interface that supports storage of all document and multimedia formats and seamless integration with other BPM systems.

Azure for IaaS - Global Windows Azure Bootcamp (GWAB)

By Loryan Strant. A presentation on Windows Azure as a platform for Infrastructure as a Service.

Presented in Melbourne, Australia as part of the Global Windows Azure Bootcamp in April 2013.

Directory Introduction

By Aidy Tificate. Identity management solutions require specific skills to deploy successfully. This presentation will help you start building some of them.

Encryption in Microsoft 365 - session for CollabDays UK - Bletchley Park

By Albert Hoitingh. During my session at the National Museum of Computing I described the encryption options and pitfalls when using Microsoft 365.

Data Loss Prevention in Office 365

By CloudFronts Technologies LLP. In this webinar, we will walk through Data Loss Prevention in Office 365. We will see how to create a DLP policy with labels as a condition. We will also go through document fingerprinting in Exchange Online DLP and DLP reports.

Modern Workplace Conference 2022 - Paris Microsoft Information Protection Dem...

By Albert Hoitingh. In this session, I went into every nook and cranny of Microsoft Information Protection. Labels, auto-classification, and PowerShell were all part of this session.

Hybrid Identity Management with SharePoint and Office 365 - Antonio Maio

By AntonioMaio2. Strong identity management is the foundation of any organization's security strategy. With the many online services available and constant public reports of massive identity theft, businesses and consumers are becoming increasingly concerned with protecting identities and the information they contain. In business, these identities represent our employees, our partners, and our clients. Moving into a hybrid environment with SharePoint on premises and Office 365 can pose challenges in how you protect those identities and enable easy access to cloud-based services. This topic will discuss key considerations and the many options available for implementing a strong identity management strategy in a hybrid environment, so that organizations can work securely with on-premises resources and Office 365.

Entity framework1

By Girish Gowda. The document discusses the ADO.NET Entity Framework. It describes how the Entity Framework provides a graphical representation of database relationships to make them easier to understand. It uses XML files to define conceptual, storage, and mapping models. These models define how the database appears to applications, how it is stored, and how the two are mapped. The Entity Framework supports database first, design first, and code first approaches. Lambda expressions and LINQ to Entities are used to query data. Advantages include easy CRUD operations and relationship management.

SPSTC18 Laying Down the Law - Governing Your Data in O365

By David Broussard. Have you ever wanted to tell your users "I am the LAW!" when they ask why they have to tag a file in SharePoint? This session looks at what governance is, why it's important, why our data is like laundry, and what tools Microsoft gives us to help you rein in your users and lay down the law!

Solving Real Problems Using Linked Data

By rumito. The document discusses using linked data to solve problems of identity and data access/integration. It describes linked data as data that is accessible over HTTP and implicitly associated with metadata. It then outlines problems around identity, such as repeating credentials across different apps/enterprises. The solution proposed is assigning individuals HTTP-based IDs and binding IDs to certificates and profiles. Problems of data silos across different databases and apps are also described, with the solution being to generate conceptual views over heterogeneous sources using middleware and RDF.

Linked Data Planet Key Note

By rumito. The document discusses the concepts of linked data, how it can be created and deployed from various data sources, and how it can be exploited. Linked data allows accessing data on the web by reference using HTTP-based URIs and RDF, forming a giant global graph. It can be generated from existing web pages, services, databases and content, and deployed using a linked data server. Exploiting linked data allows discovery, integration and conceptual interaction across silos of heterogeneous data on the web and in enterprises.

Data Portability And Data Spaces 2

By Kingsley Uyi Idehen. The document discusses data portability and linked data spaces. It argues that data should belong to individuals rather than applications to avoid lock-in. Data portability allows data to be accessed across applications through standard formats and by reference using identifiers. This helps address issues of information overload and the rise of individualized real-time enterprises as people use multiple applications. The document presents an example data portability platform called ODS that exposes individual data through shared ontologies, allows SPARQL querying, and generates RDF from various data sources.

Data Portability And Data Spaces

By rumito. The document discusses data portability and linked data spaces. It argues that data should belong to individuals rather than applications to avoid lock-in. Data portability allows data mobility across applications through standard formats and by linking data. The Open Data Spaces (ODS) platform is presented as an example that exposes individual data through shared ontologies, makes it accessible via SPARQL, and generates RDF from different data sources to promote data portability.

ArcReady - Architecting For The Cloud

By Microsoft ArcReady. For our next ArcReady, we will explore a topic on everyone's mind: cloud computing. Several companies in the industry have announced cloud computing services. In October 2008 at the Professional Developers Conference, Microsoft announced the next phase of our Software + Services vision: the Azure Services Platform. The Azure Services Platform provides a wide range of internet services that can be consumed from either on-premises environments or the internet.

Session 1: Cloud Services

In our first session we will explore the current state of cloud services. We will then look at how applications should be architected for the cloud and explore a reference application deployed on Windows Azure. We will also look at the services that can be built for on-premises applications using .NET Services, and address some of the concerns that enterprises have about cloud services, such as regulatory and compliance issues.

Session 2: The Azure Platform

In our second session we will take a slightly different look at cloud-based services by exploring Live Mesh and Live Services. Live Mesh is a data synchronization client that has a rich API to build applications on. Live Services are a collection of APIs that can be used to create rich applications for your customers. Live Services are based on internet standard protocols and data formats.

Azure Platform

By Wes Yanaga. The document discusses Microsoft's Windows Azure cloud computing platform. It provides an overview of the platform's infrastructure, services, and pricing models. The key points are:

1. Windows Azure provides infrastructure and services for building applications and storing data in the cloud. It offers compute, storage, database, and connectivity services.

2. The platform's infrastructure includes globally distributed data centers housing servers in shipping containers for high density.

3. Services include SQL Azure, storage, content delivery, queues, and an app development platform. Pricing models are consumption-based or via subscriptions.

Data Mesh Part 4 Monolith to Mesh

By Jeffrey T. Pollock. This is Part 4 of the GoldenGate series on Data Mesh - a series of webinars helping customers understand how to move off of old-fashioned monolithic data integration architecture and get ready for more agile, cost-effective, event-driven solutions. The Data Mesh is a kind of Data Fabric that emphasizes business-led data products running on event-driven streaming architectures, serverless, and microservices based platforms. These emerging solutions are essential for enterprises that run data-driven services on multi-cloud, multi-vendor ecosystems.

Join this session to get a fresh look at Data Mesh; we'll start with core architecture principles (vendor agnostic) and transition into detailed examples of how Oracle's GoldenGate platform is providing capabilities today. We will discuss essential technical characteristics of a Data Mesh solution, and the benefits that business owners can expect by moving IT in this direction. For more background on Data Mesh, Part 1, 2, and 3 are on the GoldenGate YouTube channel: https://www.youtube.com/playlist?list=PLbqmhpwYrlZJ-583p3KQGDAd6038i1ywe

Webinar Speaker: Jeff Pollock, VP Product (https://www.linkedin.com/in/jtpollock/)

Mr. Pollock is an expert technology leader for data platforms, big data, data integration and governance. Jeff has been CTO at California startups and a senior exec at Fortune 100 tech vendors. He is currently Oracle VP of Products and Cloud Services for Data Replication, Streaming Data and Database Migrations. While at IBM, he was head of all Information Integration, Replication and Governance products. Previously, Jeff was an independent architect for the US Defense Department, VP of Technology at Cerebra, and CTO of Modulant. He has been engineering artificial intelligence based data platforms since 2001. As a business consultant, Mr. Pollock was a Head Architect at Ernst & Young's Center for Technology Enablement. Jeff is also the author of "Semantic Web for Dummies" and "Adaptive Information," a frequent keynote speaker at industry conferences, an author for books and industry journals, formerly a contributing member of W3C and OASIS, and an engineering instructor with UC Berkeley's Extension for object-oriented systems, software development process and enterprise architecture.

Identity 2.0 and User-Centric Identity

By Oliver Pfaff. This document discusses identity management concepts including Identity 2.0, user-centric identity, and how these apply to web services. It provides an overview and comparison of OpenID and Windows CardSpace as examples of user-centric identity solutions. It also summarizes an eFA project for federating access to medical records across health providers in Germany.

Making the Conceptual Layer Real via HTTP based Linked Data

By Kingsley Uyi Idehen. A presentation that addresses the pros and cons of approaches to making conceptual models concrete. It covers HTTP-based Linked Data and the RDF data model as a new mechanism for conceptual-model-oriented data access and integration.

SharePoint Fest Denver - SharePoint 2010 Integration and Interoperability: Wh...

By Richard Harbridge. The document provides an overview of SharePoint 2010 integration and interoperability capabilities. It discusses business data challenges and how SharePoint addresses interoperability through its user interface, identity, search, and data access platforms. It describes Business Connectivity Services (BCS) and how it enables external content types, external columns, and external lists. It also covers limitations of BCS and demonstrations of its capabilities.

Similar to Linked Data Spaces, Data Portability & Access

SharePoint Fest Denver - SharePoint 2010 Integration and Interoperability: Wh...

SharePoint Fest Denver - SharePoint 2010 Integration and Interoperability: Wh...Richard Harbridge SharePoint 2010 provides strong interoperability capabilities through its business connectivity services (BCS) which allow external data to be accessed and used within SharePoint. The BCS uses external content types to describe external data structures and metadata and make the data accessible through external lists, web parts, Office applications and search. It supports various external data sources through connectors that can retrieve and write data without custom code, improving integration with external systems.

SQL Server Data Services

SQL Server Data ServicesEduardo Castro This document discusses building applications with SQL Data Services and Windows Azure. It provides an agenda that introduces SQL Data Services architecture, describes SDS application architectures, and how to scale out with SQL Data Services. It also discusses the SQL Data Services network topology and performance considerations for accessing SDS from applications.

SharePoint 2010 Integration and Interoperability: What you need to know

SharePoint 2010 Integration and Interoperability: What you need to knowRichard Harbridge There are challenges with disparate business data systems that cause issues. SharePoint 2010 provides important interoperability capabilities as a UI, identity, search, and data access platform through features like BCS. BCS allows external data to be surfaced in SharePoint as external lists and used in Office applications. It utilizes external content types and connectivity tools in SharePoint Designer and Visual Studio. However, there are also limitations to be aware of with BCS and external lists.

SharePoint 2010 Integration and Interoperability - SharePoint Saturday Hartford

SharePoint 2010 Integration and Interoperability - SharePoint Saturday HartfordRichard Harbridge SharePoint 2010 provides improved interoperability and integration with external business data systems through features like the Business Connectivity Services (BCS) and external content types. The BCS allows SharePoint to act as a platform for user interfaces, identity, search, and accessing external data without special effort. It provides connectors and tools for integrating systems like SQL, SAP, and Oracle into SharePoint as external lists and integrating the external data into Office applications and search.

Arc Ready Cloud Computing

Arc Ready Cloud ComputingPhilip Wheat The document discusses architecting applications for the cloud using Microsoft technologies. It provides an overview of Microsoft's Azure platform, including hosting applications on Azure infrastructure as a service (IaaS) or platform as a service (PaaS). It also discusses using Azure storage services like tables, queues and blobs to build scalable cloud applications.

SharePoint Integration and Interoperability

SharePoint Integration and InteroperabilityRichard Harbridge The document provides an overview of Business Connectivity Services (BCS) in SharePoint 2010. It discusses how BCS addresses challenges of integrating back-end data and provides external data in a centrally managed way. It describes external content types, external columns, external lists, and tools for building BCS solutions. It also covers limitations of using BCS and external lists, such as lack of support for workflows, alerts, and item-level permissions on external lists.

Cloud Modernization and Data as a Service Option

Cloud Modernization and Data as a Service OptionDenodo Watch here: https://bit.ly/36tEThx

The current data landscape is fragmented, not just in location but also in terms of shape and processing paradigms. Cloud has become a key component of modern architecture design. Data lakes, IoT, NoSQL, SaaS, etc. coexist with relational databases to fuel the needs of modern analytics, ML and AI. Exploring and understanding the data available within your organization is a time-consuming task. Dealing with bureaucracy, different languages and protocols, and the definition of ingestion pipelines to load that data into your data lake can be complex. And all of this without even knowing if that data will be useful at all.

Attend this session to learn:

- How dynamic data challenges and the speed of change requires a new approach to data architecture – one that is real-time, agile and doesn’t rely on physical data movement.

- Learn how logical data architecture can enable organizations to transition data faster to the cloud with zero downtime and ultimately deliver faster time to insight.

- Explore how data as a service and other API management capabilities is a must in a hybrid cloud environment.

Introduction to Azure Cloud Storage

Introduction to Azure Cloud StorageGanga R Jaiswal The document provides an overview of Windows Azure cloud storage. It discusses cloud computing fundamentals and models including infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS). It introduces Windows Azure storage services including blobs, tables, queues, and files. It describes features like data replication, storage objects, and durability options. It also provides instructions for using the Azure management portal and C++ SDK to interact with Azure storage.

Shibboleth Guided Tour Webinar

Shibboleth Guided Tour WebinarJohn Lewis The Shibboleth® System is a standards based, open source software package for web single sign-on across or within organizational boundaries. It allows sites to make informed authorization decisions for individual access of protected online resources in a privacy-preserving manner.

* Get an overview of the technical basics of Shibboleth.

* Learn about the two primary parts to the Shibboleth system.

* Review the numerous services and options of Shibboleth.

* See a live demo of Shibboleth in action.

Best Practices Integration And Interoperability

Best Practices Integration And InteroperabilityAllinConsulting Overview of SharePoint Integration and Operability Best Praxtices coving approaches and llimitations.

Linked Data Spaces, Data Portability & Access

- 1. Linked Data Spaces, Data Portability & Access © 2008 OpenLink Software, All rights reserved.

- 2. What’s The Problem?
  - We need to free data from the tyranny of application lock-in (silo busting!)

- 3. Problem Origins
  - Overemphasis on applications at the expense of their underlying:
    - Database Engines and Data Models (Relational, Hierarchical, Graph)
    - Data Access Mechanisms (HTTP based Linked Data, SQL CLIs, Web Services)
    - Data Query Mechanisms (SPARQL, SQL, XQuery/XPath, etc.)

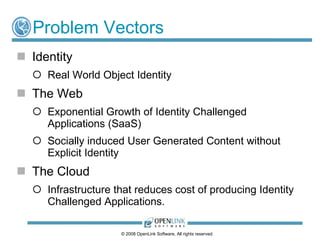

- 4. Problem Vectors
  - Identity
    - Real World Object Identity
  - The Web
    - Exponential Growth of Identity-Challenged Applications (SaaS)
    - Socially induced User Generated Content without Explicit Identity
  - The Cloud
    - Infrastructure that reduces the cost of producing Identity-Challenged Applications.

- 5. Aspects of Data Portability
  - Data Mobility (Import/Export) via standard data formats
    - RSS, Atom, OPML
    - Microformats (XFN, hCard, hCalendar, Microdata)
    - RDF Model Data Representation Formats
      - RDFa
      - JSON
      - N3 / Turtle
      - XML: RDF/XML, TriX, TriG, and others.
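
The point of slide 5, that the same RDF statements can move between representation formats, can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not how ODS or Virtuoso serialize data. The WebID comes from slide 11; the choice of FOAF properties, the helper names `to_ntriples` and `to_json`, and the JSON layout are assumptions made for the example (the JSON shape is ad hoc, not a standard RDF serialization).

```python
import json

# WebID taken from slide 11; the FOAF terms below are illustrative choices.
WEBID = "http://kingsley.idehen.name/dataspace/person/kidehen#this"
FOAF = "http://xmlns.com/foaf/0.1/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# Each triple is (subject IRI, predicate IRI, (kind, value)),
# where kind is "iri" or "literal".
triples = [
    (WEBID, RDF_TYPE, ("iri", FOAF + "Person")),
    (WEBID, FOAF + "name", ("literal", "Kingsley Uyi Idehen")),
]


def to_ntriples(triples):
    """Serialize the triples as N-Triples, one statement per line."""
    lines = []
    for subject, predicate, (kind, value) in triples:
        if kind == "iri":
            obj = f"<{value}>"
        else:
            # Escape backslashes and double quotes inside plain literals.
            escaped = value.replace("\\", "\\\\").replace('"', '\\"')
            obj = f'"{escaped}"'
        lines.append(f"<{subject}> <{predicate}> {obj} .")
    return "\n".join(lines)


def to_json(triples):
    """Serialize the same triples as a nested JSON object (ad hoc layout)."""
    graph = {}
    for subject, predicate, (kind, value) in triples:
        graph.setdefault(subject, {}).setdefault(predicate, []).append(
            {"type": kind, "value": value}
        )
    return json.dumps(graph, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(to_ntriples(triples))
    print(to_json(triples))
```

Both outputs carry the same two statements; only the notation changes, which is what makes import and export across applications possible.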

- 6. Aspects of Data Access
  - (C)reate (R)ead (U)pdate (D)elete (CRUD) oriented Data Access by Reference
    - Relational Database APIs
      - Generic: ODBC, JDBC, ADO.NET, OLE-DB
      - Database Specific: Oracle, SQL Server, DB2, MySQL, etc.
    - Web of Linked Data (Federated Graph Database)
      - RESTful access to Data Items (Objects or Entities) & Their Containers.
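
One common way to read "RESTful CRUD access by reference" from slide 6 is to map each CRUD operation onto an HTTP method applied to the data item's URL. The sketch below only builds the requests and never sends them. The method mapping is a widely used convention rather than something the slides specify, and the item URL, the `build_request` helper, and the Turtle payload are hypothetical.

```python
import urllib.request

# Conventional CRUD-to-HTTP mapping (an assumption; the slides do not fix one).
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",
    "delete": "DELETE",
}


def build_request(operation, item_url, body=None):
    """Build (but do not send) an HTTP request for one CRUD operation."""
    method = CRUD_TO_HTTP[operation]
    headers = {"Accept": "text/turtle"}
    data = None
    if body is not None:
        data = body.encode("utf-8")
        headers["Content-Type"] = "text/turtle"
    return urllib.request.Request(item_url, data=data, headers=headers, method=method)


if __name__ == "__main__":
    # Hypothetical data item URL, used purely for illustration.
    item = "http://example.org/dataspace/items/42"
    payload = '<http://example.org/dataspace/items/42> <http://purl.org/dc/terms/title> "Note" .'
    for op in ("create", "read", "update", "delete"):
        req = build_request(op, item, payload if op in ("create", "update") else None)
        print(op, "->", req.get_method(), req.full_url)
```

Because the item is addressed by its URL, any HTTP client can perform these operations without an application-specific API.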

- 7. Why is This Important?
  - Your Data belongs to You!
  - Information Overload is here!
    - User Generated Content is growing exponentially
    - Web Application Silos are on the rise courtesy of Web-based and Cloud-hosted SaaS solutions
    - Still only 24 hrs in a day!
  - You are an Individual
    - Web Individuality is no longer a trivial pursuit
    - No Human is an Island (Ubuntu!).

- 8. How We Bust Data Silos
  - Use platforms that engender Federated Identity via:
    - Personal HTTP Identifiers (WebIDs or Personal URIs)
    - WebIDs bound to X.509 Certificates
    - Association of WebIDs with accounts in existing Data Silos (Web 2.0 Apps & Services)
    - Exposure of Linked Data Spaces built on shared schemas / ontologies.

- 9. How Web-Scale Data Access Should Work
  - You Get Yourself a WebID via an Identity Service Provider:
    - self-hosted
    - 3rd-party hosted Profile as a Service (PaaS) solution
  - Associate Your WebID with existing Silo-Accounts: Blogs, Wikis, Social-Networks, Bookmarks, Photo Galleries, Discussions, etc.
  - Associate Your WebID with existing OpenID and OAuth services.
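
The "associate your WebID with existing silo accounts" step can be pictured as a small profile document that links the WebID to each account. The sketch below generates such a document in Turtle using the FOAF `foaf:account` property. The account URLs and the `profile_turtle` helper are hypothetical, and the layout is only one plausible shape for such a profile, not the document ODS actually produces. The helper assumes the name contains no double quotes.

```python
def profile_turtle(webid, name, account_urls):
    """Return a Turtle profile linking a WebID to online account URLs.

    Assumes `name` contains no double quotes or backslashes.
    """
    statements = ["a foaf:Person", f'foaf:name "{name}"']
    statements += [f"foaf:account <{url}>" for url in account_urls]
    body = " ;\n    ".join(statements)
    return (
        "@prefix foaf: <http://xmlns.com/foaf/0.1/> .\n\n"
        f"<{webid}>\n    {body} .\n"
    )


if __name__ == "__main__":
    # WebID from slide 11; the account URLs are made up for illustration.
    print(
        profile_turtle(
            "http://kingsley.idehen.name/dataspace/person/kidehen#this",
            "Kingsley Uyi Idehen",
            [
                "http://blog.example.org/kidehen",
                "http://photos.example.com/people/kidehen",
            ],
        )
    )
```

Once the links live in the profile, any agent that dereferences the WebID can discover the associated accounts without asking each silo separately.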

- 10. Personal Data Space Example using ODS
  - You Get Yourself a WebID via one of the following ODS instance forms:
    - a self-hosted instance (via an Amazon EC2 AMI or Hosting Services Provider)
    - a shared SaaS service (MyOpenLink.NET)
  - Associate Your WebID with existing Silos:
    - Use the ODS Profile Page to identify online accounts and home pages for Blogs, Wikis, Social-Networks, Bookmarks, Photo Galleries, Discussions, etc.
  - Your ODS Account is Your WebID and OpenID, with OAuth upstreaming (pushback) to associated accounts.

- 11. An ODS Based Personal Profile Page
  - WebID: <http://kingsley.idehen.name/dataspace/person/kidehen#this>

- 12. © 2008 OpenLink Software, All rights reserved.

- 13. Linked Profile Data Spaces

- 14. Some ODS Specific Features
  - Every Data Item has a Unique HTTP scheme Identifier
  - Linked Data Spaces are based on shared schemas: SIOC, FOAF, GoodRelations, Dublin Core, SKOS, Music Ontology, Bibliographic Ontology, Annotea, etc.
  - Upstreaming (pushback) via Publishing Protocols
  - Linked Data Spaces are traversable via HTTP user agents
  - All Data is SPARQL Accessible.
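
"All Data is SPARQL Accessible" means, in practice, that a client can send a query to a SPARQL endpoint over HTTP. Under the SPARQL Protocol, a query can be passed as the `query` parameter of a GET request. The sketch below only constructs such a URL. The endpoint address and the `sparql_get_url` helper are hypothetical, and the query is an illustrative one about the WebID from slide 11.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def sparql_get_url(endpoint, query):
    """Build a SPARQL Protocol GET URL carrying the query in `query=`."""
    return endpoint + "?" + urlencode({"query": query})


# Illustrative query: list properties and values of the WebID from slide 11.
QUERY = (
    "SELECT ?p ?o WHERE { "
    "<http://kingsley.idehen.name/dataspace/person/kidehen#this> ?p ?o "
    "} LIMIT 10"
)

if __name__ == "__main__":
    # Hypothetical endpoint; substitute the endpoint of a real data space.
    url = sparql_get_url("http://example.org/sparql", QUERY)
    print(url)
    # Decoding the URL again recovers the original query text.
    print(parse_qs(urlsplit(url).query)["query"][0])
```

The resulting URL can be fetched with any HTTP user agent, which is also how the "traversable via HTTP user agents" feature and SPARQL access fit together.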

- 15. Additional Information
  - Live Instances: http://myopenlink.net/ods
  - Open Source Edition Information: http://ods.openlinksw.com/wiki/ODS/