LinuxCon NA 2016: When Containers and Virtualization Do - and Don’t - Work Together

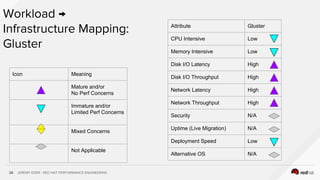

This talk proposes a workload classification technique to help users decide what mix of infrastructure best suits their application's needs.

Recommended

OSCON 2017: To contain or not to contain

Jeremy Eder: I propose a method of choosing the optimal platform based on workload characterization. Learn the differences between containers and virtualization, where they complement each other, and how the two should (and shouldn't) be used together.

KubeCon NA, Seattle, 2016: Performance and Scalability Tuning Kubernetes for...

Jeremy Eder: Learn tips and tricks on how to best configure and tune your container infrastructure for maximum performance and scale. The Performance Engineering Group at Red Hat is responsible for performance of the complete container portfolio, including Docker, RHEL Atomic, Kubernetes and OpenShift. We will share:

- Latest performance features in OpenShift, Docker and RHEL Atomic, with tips and tricks on how to best configure and tune your system for maximum performance and scale

- Latest performance and scale test results, using RHEL Atomic, OpenvSwitch, Cockpit multi-server container management

- DevOps, Agile approach to performance analysis of OpenShift, Kubernetes, Docker and RHEL Atomic

- Test harness code and example scripts

Audience

The audience is anyone interested in deploying containers to run performance sensitive workloads, as well as architecting highly scalable distributed systems for hosting those workloads. This includes workloads that require NUMA awareness, direct hardware access and kernel-bypass I/O.

Red Hat Summit 2017: Wicked Fast PaaS: Performance Tuning of OpenShift and D...

Jeremy Eder: This document summarizes performance tuning techniques for OpenShift 3.5 and Docker 1.12. It discusses optimizing components like etcd, container storage, routing, metrics and logging. It also describes tools for testing OpenShift scalability through cluster loading, traffic generation and concurrent operations. Specific techniques mentioned include using etcd 3.1, using overlay2 storage, and moving image metadata to the registry.

CEPH DAY BERLIN - CEPH ON THE BRAIN!

Ceph Community: How does Ceph perform when used in high-performance computing? This talk will cover a year of running Ceph on a (small) Cray supercomputer. I will describe how Ceph was configured to perform in an all-NVMe configuration, and the process of analysis and optimisation of the configuration. I'll also give details on the efforts underway to adapt Ceph's messaging to run over high performance network fabrics, and how this work could become the next frontier in the battle against storage performance bottlenecks.

Cephfs - Red Hat Openstack and Ceph meetup, Pune 28th november 2015

bipin kunal: The document summarizes a meetup about Red Hat Openstack and Ceph held on November 28th, 2015 in Pune. It discusses the challenges of distributed filesystems like scalability, performance and stateful clients. It then explains how Ceph addresses these challenges through its POSIX interface, scalable data and metadata, and support for stateful clients. The rest of the document describes the architecture and components of Ceph, how it stores data and metadata in RADOS objects, and how clients interact with the MDS through sessions to perform lookups and manage file metadata. It also provides instructions for mounting CephFS using the kernel driver or FUSE.

Ceph Tech Talk: Ceph at DigitalOcean

Ceph Community: DigitalOcean uses Ceph for block and object storage backing for their cloud services. They operate 37 production Ceph clusters running Nautilus and one on Luminous, storing over 54 PB of data across 21,500 OSDs. They deploy and manage Ceph clusters using Ansible playbooks and containerized Ceph packages, and monitor cluster health using Prometheus and Grafana dashboards. Upgrades can be challenging due to potential issues uncovered and slow performance on HDD backends.

Protecting the Galaxy - Multi-Region Disaster Recovery with OpenStack and Ceph

Sean Cohen: IT organizations require a disaster recovery strategy addressing outages with loss of storage, or extended loss of availability at the primary site. Applications need to rapidly migrate to the secondary site and transition with little or no impact to their availability. This talk will cover the various architectural options and levels of maturity in OpenStack services for building multi-site configurations using the Mitaka release. We’ll present the latest capabilities for Volume, Image and Object Storage with Ceph as the backend storage solution, and look at the future developments the OpenStack and Ceph communities are driving to improve and simplify the relevant use cases.

Slides from OpenStack Austin Summit 2016 session: http://alturl.com/hpesz

NantOmics

Ceph Community: This document discusses Nantomics' use of Ceph for genomics data storage and processing. Key points:

- Nantomics generates large amounts of genomic data from testing and research that needs to be reliably stored and accessed for processing and analysis.

- Ceph is used to provide scalable object, block, and POSIX storage across multiple clusters totaling over 40 petabytes. This replaces more expensive cloud storage and proprietary solutions.

- Benefits of Ceph include lower costs, guaranteed performance, flexibility, and avoiding vendor lock-in. Managing Ceph also requires less than 0.25 FTE of effort through automation.

- While Ceph provides many advantages, tuning large clusters and troubleshooting

CEPH DAY BERLIN - DEPLOYING CEPH IN KUBERNETES WITH ROOK

Ceph Community: Rook is a cloud native orchestrator for deploying storage systems within Kubernetes. This presentation will highlight the benefits and go into the details of using Rook to set up a Ceph cluster. In addition, I will also show how to set up Prometheus and Grafana to monitor Ceph in this environment.

2021.06. Ceph Project Update

Ceph Community: The document provides an agenda and overview of the Ceph Project Update and Ceph Month event in June 2021. Some key points:

- Ceph is open source software that provides scalable, reliable distributed storage across commodity hardware.

- Ceph Month will include weekly sessions on topics like RADOS, RGW, RBD, and CephFS to promote interactive discussion.

- The Ceph Foundation is working on projects to improve documentation, training materials, and lab infrastructure for testing and development.

Supercomputing by API: Connecting Modern Web Apps to HPC

OpenStack

Audience Level

Intermediate

Synopsis

The traditional user experience for High Performance Computing (HPC) centers around the command line, and the intricacies of the underlying hardware. At the same time, scientific software is moving towards the cloud, leveraging modern web-based frameworks, allowing rapid iteration, and a renewed focus on portability and reproducibility. This software still has need for the huge scale and specialist capabilities of HPC, but leveraging these resources is hampered by variation in implementation between facilities. Differences in software stack, scheduling systems and authentication all get in the way of developers who would rather focus on the research problem at hand. This presentation reviews efforts to overcome these barriers. We will cover container technologies, frameworks for programmatic HPC access, and RESTful APIs that can deliver this as a hosted solution.

Speaker Bio

Dr. David Perry is Compute Integration Specialist at The University of Melbourne, working to increase research productivity using cloud and HPC. David chairs Australia’s first community-owned wind farm, Hepburn Wind, and is co-founder/CTO of BoomPower, delivering simpler solar and battery purchasing decisions for consumers and NGOs.

Ceph on Windows

Ceph Community: Ceph, an open source distributed storage system, has been ported to run natively on Windows Server to provide improved performance over the iSCSI gateway and better integration into the Windows ecosystem. Key components like librbd and librados have been ported to Windows and a new WNBD kernel driver implements fast communication with Linux-based Ceph OSDs. This allows access to RBD block devices and CephFS file systems from Windows with comparable performance to Linux.

RGW Beyond Cloud: Live Video Storage with Ceph - Shengjing Zhu, Yiming Xie

Ceph Community: This document discusses using Ceph and its RGW storage gateway to store live video streams. It outlines why Ceph/RGW was chosen, including its distribution, erasure coding, and CDN friendliness. It then describes the design and implementation of the live video system, including an API gateway, Nginx RTMP server integrated with RGW via librgw, and handling authorization. Some problems encountered are also mentioned.

Ceph RBD Update - June 2021

Ceph Community: The document summarizes new features and updates in Ceph's RBD block storage component. Key points include: improved live migration support using external data sources; built-in LUKS encryption; up to 3x better small I/O performance; a new persistent write-back cache; snapshot quiesce hooks; kernel messenger v2 and replica read support; and initial RBD support on Windows. Future work planned for Quincy includes encryption-formatted clones, cache improvements, usability enhancements, and expanded ecosystem integration.

G1: To Infinity and Beyond

ScyllaDB: G1 has been around for quite some time now and since JDK 9 it is the default garbage collector in OpenJDK. The community working on G1 is big and the contributions over the last few years have made a significant impact on the overall performance. This talk will focus on some of these features and how they have improved G1 in various ways, including smaller memory footprint and shorter P99 pause times. We will also take a brief look at what features we have lined up for the future.

Manila, an update from Liberty, OpenStack Summit - Tokyo

Sean Cohen: Manila is a community-driven project that presents the management of file shares (e.g. NFS, CIFS, HDFS) as a core service to OpenStack. Manila currently works with a variety of storage platforms, as well as a reference implementation based on a Linux NFS server.

Manila is exploding with new features, use cases, and deployers. In this session, we'll give an update on the new capabilities added in the Liberty release:

• Integration with OpenStack Sahara

• Migration of shares across different storage back-ends

• Support for availability zones (AZs) and share replication across these AZs

• The ability to grow and shrink file shares on demand

• New mount automation framework

• and much more…

We will also give a quick look at what's coming up in the Mitaka release, with a Share Replication demo.

CephFS Update

Ceph Community: The document summarizes updates to CephFS in the Pacific release, including improvements to usability, performance, ecosystem integration, multi-site capabilities, and quality. Key updates include MultiFS now being stable, MDS autoscaling, cephfs-top for performance monitoring, scheduled snapshots, NFS gateway support, feature bits for compatibility checking, and improved testing coverage. Performance improvements include ephemeral pinning, capability management optimizations, and asynchronous operations. Multi-site replication between clusters is now possible with snapshot-based mirroring.

NVIDIA GTC 2018: Spectre/Meltdown Impact on High Performance Workloads

Jeremy Eder: This document summarizes a presentation given by Jeremy Eder from Red Hat Performance Engineering about the impact of the Spectre and Meltdown vulnerabilities on high performance workloads. It discusses performance data showing the impact on tasks like TensorFlow training on GPUs. It also provides suggestions for managing the performance impact, such as enabling transparent huge pages and PCID, and disabling specific kernel patches if needed. A list of additional reference links is also included.

Object Compaction in Cloud for High Yield

ScyllaDB: In file systems, large sequential writes are more beneficial than small random writes, and hence many storage systems implement a log structured file system. In the same way, the cloud favors large objects over small objects. Cloud providers place throttling limits on PUTs and GETs, so it takes significantly longer to upload a batch of small objects than a single large object of the aggregate size. Moreover, there are per-PUT calls associated with uploading smaller objects.

At Netflix, many media assets and their associated metadata are generated and pushed to the cloud.

We would like to propose a strategy to compact these small objects into larger blobs before uploading them to the cloud. We will discuss how to select relevant smaller objects, and how to manage the indexing of these objects within the blob, along with the changes needed for reads, overwrites and deletes.

Finally, we will showcase the potential impact of such a strategy on Netflix assets in terms of cost and performance.

Red Hat Summit 2018 5 New High Performance Features in OpenShift

Jeremy Eder: This document introduces 5 new high-performance features in Red Hat OpenShift Container Platform to support critical, latency-sensitive workloads. It describes CPU pinning, huge pages, device plugins for GPUs and other hardware, extended resources, and sysctl support. Demo sections show how these features allow workloads to consume exclusive CPUs, huge pages, GPUs, and configure kernel parameters in OpenShift pods. The roadmap discusses expanding support for NUMA, co-located device scheduling, and the Kubernetes resource API.

RBD: What will the future bring? - Jason Dillaman

Ceph Community: Cephalocon APAC 2018

March 22-23, 2018 - Beijing, China

Jason Dillaman, Red Hat Principal Software Engineer

Red Hat Ceph Storage Roadmap: January 2016

Red_Hat_Storage: Attendees of Red Hat Storage Day New York on 1/19/16 heard Red Hat's plans for its storage portfolio.

Why you’re going to fail running java on docker!

Red Hat Developers: This document discusses running Java applications in Docker containers and some of the challenges that can cause Java applications to fail when run this way. It begins by listing some big wins of using Docker for developers, such as portability and consistency across environments. It then discusses some potential cons, such as Docker not being a true virtual machine and portability issues. The document provides an overview of containers versus virtualization and the history of containers. It identifies specific challenges for Java applications, which see all host system resources rather than being constrained to container limits. Workarounds for memory and CPU limitations in Java are presented. The document emphasizes the importance of configuration for Java applications in containers.

Ceph QoS: How to support QoS in distributed storage system - Taewoong Kim

Ceph Community: This document discusses supporting quality of service (QoS) in distributed storage systems like Ceph. It describes how SK Telecom has contributed to QoS support in Ceph, including an algorithm called dmClock that controls I/O request scheduling according to administrator-configured policies. It also details an outstanding I/O-based throttling mechanism to measure and regulate load. Finally, it discusses challenges like queue depth that can be addressed by increasing the number of scheduling threads, and outlines plans to improve and test Ceph's QoS features.

Ceph Block Devices: A Deep Dive

joshdurgin: Ceph is an open source distributed storage system designed for scalability and reliability. Ceph's block device, RADOS block device (RBD), is widely used to store virtual machines, and is the most popular block storage used with OpenStack.

In this session, you'll learn how RBD works, including how it:

* Uses RADOS classes to make access easier from user space and within the Linux kernel.

* Implements thin provisioning.

* Builds on RADOS self-managed snapshots for cloning and differential backups.

* Increases performance with caching of various kinds.

* Uses watch/notify RADOS primitives to handle online management operations.

* Integrates with QEMU, libvirt, and OpenStack.

Openstack Summit HK - Ceph defacto - eNovance

eNovance: Sébastien Han presented on Ceph and its integration with OpenStack. Ceph is an open source distributed storage system that is well-suited for OpenStack deployments due to its self-managing capabilities and ability to scale storage resources easily. The integration between Ceph and OpenStack has improved significantly in recent OpenStack releases like Havana, with features like Cinder backup to Ceph and the ability to boot Nova instances using RBD images. Further integration work is planned for upcoming releases to fully leverage Ceph's capabilities.

Practical CephFS with nfs today using OpenStack Manila - Ceph Day Berlin - 12...

TomBarron: Slides of my presentation at Ceph Day Berlin 2018 on using OpenStack Manila to orchestrate NFS shares backed by CephFS.

OpenNebulaConf 2016 - Budgeting: the Ugly Duckling of Cloud computing? by Mat...

OpenNebula Project: This document discusses budgeting for cloud computing resources at the Leibniz Supercomputing Centre (LRZ). It provides an introduction and outline, then describes the LRZ's compute cloud setup and growing user base. It proposes a cost function for budgeting based on resources like cores, RAM, and storage space. The document outlines plans for budgeting including hardware classes, user classes, and pre-paid models to avoid budget overflows. It describes the current budgeting implementation and next steps to update OpenNebula and focus on security.

Red Hat Virtual Infrastructure Storage

Red_Hat_Storage: Modernize your datacenter with open, converged virtualization and storage. Learn how Red Hat Virtual Infrastructure Storage enables organizations to build efficient, resilient, and agile virtualization and storage infrastructures across private, public, and hybrid clouds. www.redhat.com/liberate

Station 1

Simon Goodwin: The document describes the 8 step process of HIV replication within a host cell: 1) The virus fuses with the cell's membrane. 2) Reverse transcriptase catalyzes the synthesis of complementary DNA strands from the viral RNA genome. 3) The double-stranded viral DNA incorporates into the cell's DNA as a provirus. 4) The proviral genes are then transcribed into RNA. 5) This RNA serves as mRNA to produce new HIV proteins and genomes for the next viral generation. 6) New capsids assemble around the genomes and reverse transcriptase. 7) The new viruses then bud off from the host cell.

Ad

More Related Content

What's hot (20)

CEPH DAY BERLIN - DEPLOYING CEPH IN KUBERNETES WITH ROOK

CEPH DAY BERLIN - DEPLOYING CEPH IN KUBERNETES WITH ROOKCeph Community Rook is a cloud native orchestrator for deploying storage systems within Kubernetes. This presentation will highlight the benefits and goes into the details of using Rook to set up a Ceph cluster. In addition, I will also show how to set up Prometheus and Grafana to monitor Ceph in this environment.

2021.06. Ceph Project Update

2021.06. Ceph Project UpdateCeph Community The document provides an agenda and overview of the Ceph Project Update and Ceph Month event in June 2021. Some key points:

- Ceph is open source software that provides scalable, reliable distributed storage across commodity hardware.

- Ceph Month will include weekly sessions on topics like RADOS, RGW, RBD, and CephFS to promote interactive discussion.

- The Ceph Foundation is working on projects to improve documentation, training materials, and lab infrastructure for testing and development.

Supercomputing by API: Connecting Modern Web Apps to HPC

Supercomputing by API: Connecting Modern Web Apps to HPCOpenStack Audience Level

Intermediate

Synopsis

The traditional user experience for High Performance Computing (HPC) centers around the command line, and the intricacies of the underlying hardware. At the same time, scientific software is moving towards the cloud, leveraging modern web-based frameworks, allowing rapid iteration, and a renewed focus on portability and reproducibility. This software still has need for the huge scale and specialist capabilities of HPC, but leveraging these resources is hampered by variation in implementation between facilities. Differences in software stack, scheduling systems and authentication all get in the way of developers who would rather focus on the research problem at hand. This presentation reviews efforts to overcome these barriers. We will cover container technologies, frameworks for programmatic HPC access, and RESTful APIs that can deliver this as a hosted solution.

Speaker Bio

Dr. David Perry is Compute Integration Specialist at The University of Melbourne, working to increase research productivity using cloud and HPC. David chairs Australia’s first community-owned wind farm, Hepburn Wind, and is co-founder/CTO of BoomPower, delivering simpler solar and battery purchasing decisions for consumers and NGOs.

Ceph on Windows

Ceph on WindowsCeph Community Ceph, an open source distributed storage system, has been ported to run natively on Windows Server to provide improved performance over the iSCSI gateway and better integration into the Windows ecosystem. Key components like librbd and librados have been ported to Windows and a new WNBD kernel driver implements fast communication with Linux-based Ceph OSDs. This allows access to RBD block devices and CephFS file systems from Windows with comparable performance to Linux.

RGW Beyond Cloud: Live Video Storage with Ceph - Shengjing Zhu, Yiming Xie

RGW Beyond Cloud: Live Video Storage with Ceph - Shengjing Zhu, Yiming XieCeph Community This document discusses using Ceph and its RGW storage gateway to store live video streams. It outlines why Ceph/RGW was chosen, including its distribution, erasure coding, and CDN friendliness. It then describes the design and implementation of the live video system, including an API gateway, Nginx RTMP server integrated with RGW via librgw, and handling authorization. Some problems encountered are also mentioned.

Ceph RBD Update - June 2021

Ceph RBD Update - June 2021Ceph Community The document summarizes new features and updates in Ceph's RBD block storage component. Key points include: improved live migration support using external data sources; built-in LUKS encryption; up to 3x better small I/O performance; a new persistent write-back cache; snapshot quiesce hooks; kernel messenger v2 and replica read support; and initial RBD support on Windows. Future work planned for Quincy includes encryption-formatted clones, cache improvements, usability enhancements, and expanded ecosystem integration.

G1: To Infinity and Beyond

G1: To Infinity and BeyondScyllaDB G1 has been around for quite some time now and since JDK 9 it is the default garbage collector in OpenJDK. The community working on G1 is big and the contributions over the last few years have made a significant impact on the overall performance. This talk will focus on some of these features and how they have improved G1 in various ways, including smaller memory footprint and shorter P99 pause times. We will also take a brief look at what features we have lined up for the future.

Manila, an update from Liberty, OpenStack Summit - Tokyo

Manila, an update from Liberty, OpenStack Summit - TokyoSean Cohen Manila is a community-driven project that presents the management of file shares (e.g. NFS, CIFS, HDFS) as a core service to OpenStack. Manila currently works with a variety of storage platforms, as well as a reference implementation based on a Linux NFS server.

Manila is exploding with new features, use cases, and deployers. In this session, we'll give an update on the new capabilities added in the Liberty release:

• Integration with OpenStack Sahara

• Migration of shares across different storage back-ends

• Support for availability zones (AZs) and share replication across these AZs

• The ability to grow and shrink file shares on demand

• New mount automation framework

• and much more…

As well as provide a quick look of whats coming up in Mitaka release with Share Replication demo

CephFS Update

CephFS UpdateCeph Community The document summarizes updates to CephFS in the Pacific release, including improvements to usability, performance, ecosystem integration, multi-site capabilities, and quality. Key updates include MultiFS now being stable, MDS autoscaling, cephfs-top for performance monitoring, scheduled snapshots, NFS gateway support, feature bits for compatibility checking, and improved testing coverage. Performance improvements include ephemeral pinning, capability management optimizations, and asynchronous operations. Multi-site replication between clusters is now possible with snapshot-based mirroring.

NVIDIA GTC 2018: Spectre/Meltdown Impact on High Performance Workloads

NVIDIA GTC 2018: Spectre/Meltdown Impact on High Performance WorkloadsJeremy Eder This document summarizes a presentation given by Jeremy Eder from Red Hat Performance Engineering about the impact of the Spectre and Meltdown vulnerabilities on high performance workloads. It discusses performance data showing the impact on tasks like TensorFlow training on GPUs. It also provides suggestions for managing the performance impact, such as enabling transparent huge pages and PCID, and disabling specific kernel patches if needed. A list of additional reference links is also included.

Object Compaction in Cloud for High Yield

Object Compaction in Cloud for High YieldScyllaDB In file systems, large sequential writes are more beneficial than small random writes, and hence many storage systems implement a log structured file system. In the same way, the cloud favors large objects more than small objects. Cloud providers place throttling limits on PUTs and GETs, and so it takes significantly longer time to upload a bunch of small objects than a large object of the aggregate size. Moreover, there are per-PUT calls associated with uploading smaller objects.

In Netflix, a lot of media assets and their relevant metadata is generated and pushed to cloud.

We would like to propose a strategy to compact these small objects into larger blobs before uploading them to Cloud. We will discuss how to select relevant smaller objects, and manage the indexing of these objects within the blob along with modification in reads, overwrites and deletes.

Finally, we would showcase the potential impact of such a strategy on Netflix assets in terms of cost and performance.

Red Hat Summit 2018 5 New High Performance Features in OpenShift

Red Hat Summit 2018 5 New High Performance Features in OpenShiftJeremy Eder This document introduces 5 new high-performance features in Red Hat OpenShift Container Platform to support critical, latency-sensitive workloads. It describes CPU pinning, huge pages, device plugins for GPUs and other hardware, extended resources, and sysctl support. Demo sections show how these features allow workloads to consume exclusive CPUs, huge pages, GPUs, and configure kernel parameters in OpenShift pods. The roadmap discusses expanding support for NUMA, co-located device scheduling, and the Kubernetes resource API.

RBD: What will the future bring? - Jason Dillaman

RBD: What will the future bring? - Jason DillamanCeph Community Cephalocon APAC 2018

March 22-23, 2018 - Beijing, China

Jason Dillaman, Red Hat Principal Software Engineer

Red Hat Ceph Storage Roadmap: January 2016

Red Hat Ceph Storage Roadmap: January 2016Red_Hat_Storage Attendees of Red Hat Storage Day New York on 1/19/16 heard Red Hat's plans for its storage portfolio.

Why you’re going to fail running java on docker!

Why you’re going to fail running java on docker!Red Hat Developers This document discusses running Java applications on Docker containers and some of the challenges that can cause Java applications to fail when run this way. It begins by listing some big wins of using Docker for developers, such as portability and consistency across environments. It then discusses some potential cons, such as Docker not being a true virtual machine and portability issues. The document provides an overview of containers versus virtualization and the history of containers. It identifies specific challenges for Java applications related to seeing all host system resources rather than being constrained to container limits. Workarounds for memory and CPU limitations in Java are presented. The document emphasizes the importance of configuration for Java applications in containers.

Ceph QoS: How to support QoS in distributed storage system - Taewoong Kim

Ceph QoS: How to support QoS in distributed storage system - Taewoong KimCeph Community This document discusses supporting quality of service (QoS) in distributed storage systems like Ceph. It describes how SK Telecom has contributed to QoS support in Ceph, including an algorithm called dmClock that controls I/O request scheduling according to administrator-configured policies. It also details an outstanding I/O-based throttling mechanism to measure and regulate load. Finally, it discusses challenges like queue depth that can be addressed by increasing the number of scheduling threads, and outlines plans to improve and test Ceph's QoS features.

Ceph Block Devices: A Deep Dive

Ceph Block Devices: A Deep Divejoshdurgin Ceph is an open source distributed storage system designed for scalability and reliability. Ceph's block device, RADOS block device (RBD), is widely used to store virtual machines, and is the most popular block storage used with OpenStack.

In this session, you'll learn how RBD works, including how it:

* Uses RADOS classes to make access easier from user space and within the Linux kernel.

* Implements thin provisioning.

* Builds on RADOS self-managed snapshots for cloning and differential backups.

* Increases performance with caching of various kinds.

* Uses watch/notify RADOS primitives to handle online management operations.

* Integrates with QEMU, libvirt, and OpenStack.

Openstack Summit HK - Ceph defacto - eNovance

Openstack Summit HK - Ceph defacto - eNovanceeNovance Sébastien Han presented on Ceph and its integration with OpenStack. Ceph is an open source distributed storage system that is well-suited for OpenStack deployments due to its self-managing capabilities and ability to scale storage resources easily. The integration between Ceph and OpenStack has improved significantly in recent OpenStack releases like Havana, with features like Cinder backup to Ceph and the ability to boot Nova instances using RBD images. Further integration work is planned for upcoming releases to fully leverage Ceph's capabilities.

Practical CephFS with nfs today using OpenStack Manila - Ceph Day Berlin - 12...

By TomBarron. Slides of my presentation at Ceph Day Berlin 2018 on using OpenStack Manila to orchestrate NFS shares backed by CephFS.

OpenNebulaConf 2016 - Budgeting: the Ugly Duckling of Cloud computing? by Mat...

By OpenNebula Project. This document discusses budgeting for cloud computing resources at the Leibniz Supercomputing Centre. It provides an introduction and outline, and describes the LRZ's compute cloud setup and growing user base. It proposes a cost function for budgeting based on resources like cores, RAM, and storage space. The document outlines plans for budgeting including hardware classes, user classes, and pre-paid models to avoid budget overflows. It describes the current budgeting implementation and next steps to update OpenNebula and focus on security.

Viewers also liked (14)

Red Hat Virtual Infrastructure Storage

By Red_Hat_Storage. Modernize your datacenter with open, converged virtualization and storage. Learn how Red Hat Virtual Infrastructure Storage enables organizations to build efficient, resilient, and agile virtualization and storage infrastructures across private, public, and hybrid clouds. www.redhat.com/liberate

Station 1

By Simon Goodwin. The document describes the 8-step process of HIV replication within a host cell: 1) The virus fuses with the cell's membrane. 2) Reverse transcriptase catalyzes the synthesis of complementary DNA strands from the viral RNA genome. 3) The double-stranded viral DNA incorporates into the cell's DNA as a provirus. 4) The proviral genes are then transcribed into RNA. 5) This RNA serves as mRNA to produce new HIV proteins and genomes for the next viral generation. 6) New capsids assemble around the genomes and reverse transcriptase. 7) The new viruses then bud off from the host cell.

OpenShift on OpenStack with Kuryr

By Antoni Segura Puimedon. Integrating OpenShift with Neutron networking when running inside of OpenStack Nova instances.

Demo recording: https://youtu.be/UKZryuTH4B0

Red Hat Storage Day Boston - OpenStack + Ceph Storage

By Red_Hat_Storage.

- Red Hat OpenStack Platform delivers an integrated and production-ready OpenStack cloud platform built on Red Hat's hardened OpenStack infrastructure, which is co-engineered with Red Hat Enterprise Linux.

- Ceph is an open-source, massively scalable software-defined storage that provides a single, efficient, and unified storage platform on clustered commodity hardware. Ceph is flexible and can provide block, object, and file-level storage for OpenStack.

- Architectures using OpenStack and Ceph include hyperconverged infrastructure which co-locates compute and storage on the same machines, and multi-site configurations with replicated Ceph storage across sites for disaster recovery.

Monoclonal Antibody Production via Hybridoma Technology

By Kathryn Howard. Monoclonal antibodies are identical antibodies that bind to a single antigen or epitope. They are produced through hybridoma technology which involves fusing antibody-producing spleen cells with myeloma tumor cells to create immortalized cell lines that secrete the desired antibody. The hybridoma cells are screened using techniques like ELISA or FACS to identify clones that secrete antibodies targeting the antigen of interest. Selected clones are grown in culture medium or injected into mice to produce antibody-rich ascites fluid for harvesting large amounts of monoclonal antibodies.

Red Hat Storage Day Dallas - Why Software-defined Storage Matters

By Red_Hat_Storage. This document discusses the evolution of storage from traditional appliances to software-defined storage. It notes that many IT decision makers find current storage capabilities inadequate and unable to handle emerging workloads. Traditional appliances face issues like vendor lock-in, lack of flexibility, and high costs. Public cloud storage is more scalable but still has complexity and limitations. The document then introduces software-defined storage as an open solution with standardized platforms that addresses these issues through increased cost efficiency, provisioning speed, and deployment options with less vendor lock-in and skill requirements. It describes Red Hat's portfolio of Ceph and Gluster open source software-defined storage solutions and their target use cases.

Genomic and c dna library

By Promila Sheoran. Genomic and cDNA libraries are constructed to isolate genes of interest from organisms. Genomic libraries contain total chromosomal DNA while cDNA libraries contain mRNA from specific cell types. DNA is digested and ligated into vectors to clone fragments. Libraries are screened using probes and PCR to identify clones containing genes of interest. cDNA libraries are useful for studying eukaryotic gene expression as they contain mRNA from specific cells. Thousands of clones may need to be screened to have high probability of isolating a particular gene fragment.

Dna library lecture-Gene libraries and screening

By Abdullah Abobakr. This document discusses gene libraries and screening procedures. It begins by explaining what genomic and cDNA libraries are. It then provides details on creating genomic libraries, including purifying genomic DNA, fragmenting it, and cloning the fragments into vectors. Creating cDNA libraries involves isolating mRNA, synthesizing cDNA, and ligating the cDNA to vectors. The size of libraries needed to ensure coverage of genomes is calculated. Lambda phage is described as a commonly used vector that can accept inserts up to 23kb in size. The processes of packaging recombinant DNA into lambda phage particles and creating lambda phage libraries are outlined.

Monoclonal antibody production

By SrilaxmiMenon. Monoclonal antibodies are identical antibodies produced by one type of immune cell that are clones of a single parent cell. They are produced using hybridoma technology which involves fusing antibody producing B cells from an immunized animal with myeloma tumor cells to create a hybridoma cell line. This hybridoma cell line is capable of indefinite division in culture while producing the same monoclonal antibody. The monoclonal antibodies are then purified from the culture supernatant and have various diagnostic and therapeutic applications such as cancer treatment.

Hybridoma technology and application for monoclonal antibodies

By Jagphool Chauhan. Monoclonal antibodies are identical antibodies produced by a single clone of B cells or hybridoma cells. They are produced through the fusion of myeloma cells with spleen cells from immunized mice. Georges Köhler and Cesar Milstein were the first to produce monoclonal antibodies using this hybridoma technique in 1975, for which they received the Nobel Prize in 1984. Monoclonal antibodies have many important applications in medicine, including pregnancy testing and the diagnosis, imaging, and treatment of diseases like cancer and infections.

BPF: Tracing and more

By Brendan Gregg. Video: https://www.youtube.com/watch?v=JRFNIKUROPE . Talk for linux.conf.au 2017 (LCA2017) by Brendan Gregg, about Linux enhanced BPF (eBPF). Abstract:

A world of new capabilities is emerging for the Linux 4.x series, thanks to enhancements that have been included in Linux to the Berkeley Packet Filter (BPF): an in-kernel virtual machine that can execute user space-defined programs. It is finding uses for security auditing and enforcement, enhancing networking (including eXpress Data Path), and performance observability and troubleshooting. Many new open source tools that use BPF have been written in the past 12 months for performance analysis. Tracing superpowers have finally arrived for Linux!

For its use with tracing, BPF provides the programmable capabilities to the existing tracing frameworks: kprobes, uprobes, and tracepoints. In particular, BPF allows timestamps to be recorded and compared from custom events, allowing latency to be studied in many new places: kernel and application internals. It also allows data to be efficiently summarized in-kernel, including as histograms. This has allowed dozens of new observability tools to be developed so far, including measuring latency distributions for file system I/O and run queue latency, printing details of storage device I/O and TCP retransmits, investigating blocked stack traces and memory leaks, and a whole lot more.

This talk will summarize BPF capabilities and use cases so far, and then focus on its use to enhance Linux tracing, especially with the open source bcc collection. bcc includes BPF versions of old classics, and many new tools, including execsnoop, opensnoop, funccount, ext4slower, and more (many of which I developed). Perhaps you'd like to develop new tools, or use the existing tools to find performance wins large and small, especially when instrumenting areas that previously had zero visibility. I'll also summarize how we intend to use these new capabilities to enhance systems analysis at Netflix.

Gene transfer (2)

By Mandvi Shandilya. This document discusses various techniques for gene transfer, including natural methods like conjugation, transformation, and transduction, as well as artificial methods like microinjection, biolistics, calcium phosphate and liposome mediated transfer, and electroporation. It provides details on how each method works, such as how conjugation involves transfer of DNA between bacteria via sex pili, and how electroporation uses electrical pulses to create pores in cell membranes to allow DNA entry. The document also summarizes screening and applications of transgenic techniques.

Gene transfer technologies

By Manoj Kumar Tekuri. Gene transfer technologies can be used to treat diseases by inserting therapeutic genes into cells. There are viral and non-viral methods of gene transfer. Viral methods use viruses like retroviruses, adenoviruses, and adeno-associated viruses to efficiently deliver genes. Non-viral methods include mechanical techniques like electroporation, microinjection, and biolistics (gene gun), as well as chemical methods like liposomes, calcium phosphate, and polyethylene glycol. Each method has advantages and limitations for different applications in research and potential gene therapy.

Complementary DNA (cDNA) Libraries

By Ramesh Pothuraju. This document discusses different strategies for cloning DNA fragments from complex sources like genomic DNA or cDNA. There are two major approaches - cell-based cloning, which divides the DNA into fragments that are cloned to create a library, and directly amplifying target sequences using PCR. The document focuses on cDNA library construction, explaining that cDNA libraries reveal gene expression profiles. It describes early cDNA cloning methods and their limitations, as well as improved directional and non-directional cloning techniques. Finally, it discusses various screening methods for identifying clones of interest from cDNA libraries, including colony hybridization, plaque lifts and immunological screening.

Similar to LinuxCon NA 2016: When Containers and Virtualization Do - and Don’t - Work Together (20)

NVIDIA GTC 2018: Enabling GPU-as-a-Service Providers with Red Hat OpenShift

By Jeremy Eder. Jeremy Eder of Red Hat discusses enabling GPU-as-a-Service using Red Hat OpenShift. He outlines how OpenShift abstracts away infrastructure, provides high performance features like GPU support, and enables containerization of software. Eder then demonstrates how to deploy GPU workloads on OpenShift using device plugins, extended resources, and optimized configurations.

A Single Platform to Run All The Things - Kubernetes for the Enterprise - London

By VMware Tanzu.

Ed Hoppitt

EMEA Lead Applications Transformation, VMware

28th March 2018

AMD EPYC 7002 Launch World Records

By AMD. AMD's 2nd Generation EPYC processors are #1 in performance across industry standard benchmarks, holding 80+ world records to date.

Tame that Beast

By DataWorks Summit/Hadoop Summit. This document discusses improving the reliability and availability of Hadoop clusters. It notes that while Hadoop is taking on more database-like features, the uptime of many Hadoop clusters and lack of SLAs is still an afterthought. It proposes separating computing and storage to improve availability like cloud Hadoop offerings do. It also suggests building KPIs and monitoring around Hadoop clusters similar to how many companies monitor data warehouses. Centralizing Hadoop infrastructure management into a "Big Data as a Service" model is presented as another way to improve reliability.

IBM Edge2015 Las Vegas

By Filipe Miranda. This document provides an overview of Red Hat products and technologies for IBM Power Systems and IBM zSystems platforms. It discusses Red Hat Enterprise Linux offerings that are optimized for these platforms, including features like support for big-endian mode, Linux containers, multipath I/O, and more. It also summarizes Red Hat's overall approach including their open source development model and enterprise Linux lifecycles.

Presentazione PernixData @ VMUGIT UserCon 2015

By VMUG IT. The document discusses PernixData's solutions for optimizing storage performance, management, and cost in data centers. PernixData's software provides VM-aware storage intelligence that accelerates I/O performance by placing storage close to applications using server flash and RAM. This allows organizations to turn any shared storage infrastructure into a high-performance all-flash array without disruption. PernixData's solutions have helped many customers optimize strategic operations, maximize efficiency, and save substantial costs on hardware and operations.

RackN Physical Layer Automation Innovation

By rhirschfeld. Short presentation about how RackN is creating bare metal data center automation for enterprise and edge infrastructure at the most basic level.

Includes a video of Rob giving the presentation

Pro sphere customer technical

By solarisyougood. ProSphere is a storage management solution from EMC that provides:

- End-to-end visibility of storage performance and capacity across sites

- Monitoring and alerting on capacity utilization and storage infrastructure

- Reports and dashboards on capacity, configuration, and performance to improve planning and reduce costs

AMD EPYC World Records

By AMD. This document provides information on AMD EPYC processors and their world record performance achievements. It lists numerous world records held by EPYC processors in areas such as single and dual socket configurations, database and analytics workloads, HPC, virtualization, Java applications, and more. A total of 93 world records are claimed as of October 28, 2020. The reader is directed to AMD's website for full details on all world records.

Sviluppo IoT - Un approccio standard da Nerd ad Impresa, prove pratiche di Me...

By Codemotion. Codemotion Rome 2015 - Years spent watching technologies and trends emerge and grow help us understand how the Internet of Things has matured for the enterprise market. The talk, which includes an overview of current and future IoT trends, centers on developing solutions based on rising industrial standards (e.g. Z-Wave), highlighting the inevitable advantages and limits of adopting an industrial methodology: only an industrial approach can deliver the real leap in quality needed to offer effective products for a double-digit market.

Building Efficient Edge Nodes for Content Delivery Networks

By Rebekah Rodriguez. Supermicro, Intel®, and Varnish are delivering an optimized CDN solution built with the Intel Xeon-D processor in a Supermicro Superserver running Varnish Enterprise. This solution delivers strong performance in a compact form factor with low idle power and excellent performance per watt.

Join Supermicro, Intel, and Varnish experts as they discuss their collaboration and how their respective technologies work together to improve the performance and lower the TCO of an edge caching server.

EMC ScaleIO Overview

By walshe1. ScaleIO is software that creates a server-based storage area network (SAN) using local storage drives. It provides elastic scaling of capacity and performance on demand across server nodes. Data is distributed across nodes for high performance parallelism. Additional servers and storage can be added non-disruptively to scale out the system.

Presentazione SimpliVity @ VMUGIT UserCon 2015

By VMUG IT. Hyperconverged Infrastructure Deep Dive: The What, How-To and Why Now? - Giampiero Petrosi, Solutions Architect, Simplivity

EMC Unified Analytics Platform. Gintaras Pelenis

By Lietuvos kompiuterininkų sąjunga. The document discusses EMC's Greenplum unified analytics platform. It highlights challenges with current data warehousing solutions in keeping up with growing amounts of data from diverse sources. The Greenplum platform aims to easily scale analytics to large amounts of data, rapidly ingest data from different sources, provide high-performance parallel processing, and support high user concurrency. It achieves this through its massively parallel processing architecture and scale-out design on commodity hardware.

Dell emc - The Changing IT Landscape

By VITO - Securitas. This document discusses the changing IT landscape and Dell's infrastructure portfolio options. It provides an overview of Dell's server, storage, networking and data protection solutions. Key points include:

- Applications are driving business value but require underlying infrastructure to run on. Dell provides a variety of infrastructure building blocks.

- Dell's server portfolio ranges from large hyperscale solutions to small home office towers. Storage solutions include all-flash, hybrid and scale-out options.

- Dell's converged and hyper-converged infrastructure solutions like VxRail and XC Series provide standardized, modular options for consolidating and running workloads.

- Dell's vision is to provide open, software-defined, and disaggregated solutions

Why Pay for Open Source Linux? Avoid the Hidden Cost of DIY

By Enterprise Management Associates. This document discusses the benefits of paying for enterprise open source software like Red Hat Linux over building it yourself or using unpaid community versions. It argues that paid solutions provide support, security updates, and consistency that save time and reduce costs compared to unpaid alternatives. Data from an IDC study is presented showing organizations that used Red Hat saved 35% on operations costs over 3 years and achieved a 368% ROI within 5 months compared to unpaid solutions.

Transforming your Business with Scale-Out Flash: How MongoDB & Flash Accelera...

By MongoDB. Transforming your Business with Scale-Out Flash: How MongoDB & Flash Accelerate Application Performance [1:40 pm - 2:00 pm]

MongoDB lets you build next-generation applications that require new levels of performance and latency. Flash has become a critical component to meeting these needs and this session will focus on how to best leverage Flash in a MongoDB deployment, covering key best practices and approaches. Armed with these best practices, as your environment scales, the on-going management of Flash within a traditional DAS architecture may still introduce some fundamental challenges. In addition, we will introduce EMC’s XtremIO platform which fully automates and offloads this overhead, allowing MongoDB administrators and architects to focus on driving new capabilities into their applications, all while scaling infinitely. In addition, key features like data-reduction, agile copy services, and free encryption extend the value of Flash well beyond what can be done with traditional DAS architectures.

Sparc solaris servers

By solarisyougood. The document discusses Oracle's SPARC servers and Solaris operating system. It highlights the new SPARC T4 servers as providing up to 5x faster performance than previous T3 servers. It also promotes the SPARC SuperCluster as the fastest general purpose platform, capable of outperforming IBM and HP systems. Oracle positions its SPARC/Solaris products as the best foundation for enterprise cloud computing and engineered to work optimally with Oracle software.

Dr Training V1 07 17 09 Rev Four 4

By Ricoh. This document summarizes concepts related to disaster recovery including objectives, concepts, targets, risks, opportunities, and solutions. The objectives are to review disaster recovery basics, explore customer business risks, and discover opportunities through awareness services and technology. Concepts discussed include disaster recovery, business continuity, availability, recovery time objectives, and costs of downtime. Target audiences are those with mission critical applications. Business risks include various physical events, user errors, hardware/software failures, and security threats. Opportunities discussed include Ricoh consulting and managed services as well as partner solutions for networking, hosting, storage, and data protection. Specific disaster recovery deep dives focus on solutions from SonicWALL, Dell, and IBM that can be combined

Building Hadoop-as-a-Service with Pivotal Hadoop Distribution, Serengeti, & I...

By EMC. Hadoop has made it into the enterprise mainstream as Big Data technology. But, what about Hadoop as a private or public cloud service on a shared infrastructure? This session looks at a Hadoop solution with virtualization, shared storage, and multi-tenancy, and discusses how service providers can use Pivotal Hadoop Distribution, Isilon, and Serengeti to offer Hadoop-as-a-Service.

After this session you will be able to:

Objective 1: Understand Hadoop and its deployment challenges.

Objective 2: Understand the EMC HDaaS solution architecture and the use cases it addresses.

Objective 3: Understand Pivotal Hadoop Distribution, Serengeti and Isilon's Hadoop features.

Recently uploaded (20)

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

By TrustArc. Most consumers believe they’re making informed decisions about their personal data: adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...

By SOFTTECHHUB. I started my online journey with several hosting services before stumbling upon AI EngineHost. At first, the idea of paying one fee and getting lifetime access seemed too good to pass up. The platform is built on reliable US-based servers, ensuring your projects run at high speeds and remain safe. Let me take you step by step through its benefits and features as I explain why this hosting solution is a perfect fit for digital entrepreneurs.

Procurement Insights Cost To Value Guide.pptx

By Jon Hansen. Procurement Insights, with its integrated Historic Procurement Industry Archives, serves as a powerful complement, not a competitor, to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value-driven proprietary service offering here.

DevOpsDays Atlanta 2025 - Building 10x Development Organizations.pptx

By Justin Reock. Building 10x Organizations with Modern Productivity Metrics

10x developers may be a myth, but 10x organizations are very real, as proven by the influential study performed in the 1980s, ‘The Coding War Games.’

Right now, here in early 2025, we seem to be experiencing YAPP (Yet Another Productivity Philosophy), and that philosophy is converging on developer experience. It seems that with every new method we invent for the delivery of products, whether physical or virtual, we reinvent productivity philosophies to go alongside them.

But which of these approaches actually work? DORA? SPACE? DevEx? What should we invest in and create urgency behind today, so that we don’t find ourselves having the same discussion again in a decade?

Semantic Cultivators : The Critical Future Role to Enable AI

By artmondano. By 2026, AI agents will consume 10x more enterprise data than humans, but with none of the contextual understanding that prevents catastrophic misinterpretations.

Drupalcamp Finland – Measuring Front-end Energy Consumption

By Exove. How to measure web front-end energy consumption using Firefox Profiler. Presented at DrupalCamp Finland on April 25th, 2025.

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...

By Noah Loul. Artificial intelligence is changing how businesses operate. Companies are using AI agents to automate tasks, reduce time spent on repetitive work, and focus more on high-value activities. Noah Loul, an AI strategist and entrepreneur, has helped dozens of companies streamline their operations using smart automation. He believes AI agents aren't just tools; they're workers that take on repeatable tasks so your human team can focus on what matters. If you want to reduce time waste and increase output, AI agents are the next move.

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungen

By panagenda. Webinar Recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-und-verwaltung-von-multiuser-umgebungen/

HCL Nomad Web is hailed as the next generation of the HCL Notes client and offers numerous advantages, such as eliminating the need for packaging, distribution, and installation. Nomad Web client updates are installed "automatically" in the background, which significantly reduces administrative effort compared to traditional HCL Notes clients. However, troubleshooting in Nomad Web poses unique challenges compared to the Notes client.

Join Christoph and Marc as they demonstrate how the troubleshooting process in HCL Nomad Web can be simplified to ensure a smooth and efficient user experience.

In this webinar, we will explore effective strategies for diagnosing and resolving common problems in HCL Nomad Web, including:

- Accessing the console

- Locating and interpreting log files

- Accessing the data folder in the browser's cache (using OPFS)

- Understanding the differences between single-user and multi-user scenarios

- Using the Client Clocking feature

AI and Data Privacy in 2025: Global Trends

By InData Labs. In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

- AI and data privacy: Key findings

- Statistics on AI data privacy in today’s world

- Tips on how to overcome data privacy challenges

- Benefits of AI data security investments

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Quantum Computing Quick Research Guide by Arthur Morgan

By Arthur Morgan. This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

tecnologias de las primeras civilizaciones.pdf

By fjgm517. Detailed description of the advance of technologies in Mesopotamia, Egypt, Rome, and Greece.

What is Model Context Protocol(MCP) - The new technology for communication bw...

By Vishnu Singh Chundawat. The MCP (Model Context Protocol) is a framework designed to manage context and interaction within complex systems. This SlideShare presentation will provide a detailed overview of the MCP Model, its applications, and how it plays a crucial role in improving communication and decision-making in distributed systems. We will explore the key concepts behind the protocol, including the importance of context, data management, and how this model enhances system adaptability and responsiveness. Ideal for software developers, system architects, and IT professionals, this presentation will offer valuable insights into how the MCP Model can streamline workflows, improve efficiency, and create more intuitive systems for a wide range of use cases.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

Linux Support for SMARC: How Toradex Empowers Embedded Developers

Linux Support for SMARC: How Toradex Empowers Embedded DevelopersToradex Toradex brings robust Linux support to SMARC (Smart Mobility Architecture), ensuring high performance and long-term reliability for embedded applications. Here’s how:

• Optimized Torizon OS & Yocto Support – Toradex provides Torizon OS, a Debian-based easy-to-use platform, and Yocto BSPs for customized Linux images on SMARC modules.

• Seamless Integration with i.MX 8M Plus and i.MX 95 – Toradex SMARC solutions leverage NXP’s i.MX 8 M Plus and i.MX 95 SoCs, delivering power efficiency and AI-ready performance.

• Secure and Reliable – With Secure Boot, over-the-air (OTA) updates, and LTS kernel support, Toradex ensures industrial-grade security and longevity.

• Containerized Workflows for AI & IoT – Support for Docker, ROS, and real-time Linux enables scalable AI, ML, and IoT applications.

• Strong Ecosystem & Developer Support – Toradex offers comprehensive documentation, developer tools, and dedicated support, accelerating time-to-market.

With Toradex’s Linux support for SMARC, developers get a scalable, secure, and high-performance solution for industrial, medical, and AI-driven applications.

Do you have a specific project or application in mind where you're considering SMARC? We can help with Free Compatibility Check and help you with quick time-to-market

For more information: https://www.toradex.com/computer-on-modules/smarc-arm-family

HCL Nomad Web – Best Practices and Managing Multiuser Environments

HCL Nomad Web – Best Practices and Managing Multiuser Environmentspanagenda Webinar Recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-and-managing-multiuser-environments/

HCL Nomad Web is heralded as the next generation of the HCL Notes client, offering numerous advantages such as eliminating the need for packaging, distribution, and installation. Nomad Web client upgrades will be installed “automatically” in the background. This significantly reduces the administrative footprint compared to traditional HCL Notes clients. However, troubleshooting issues in Nomad Web present unique challenges compared to the Notes client.

Join Christoph and Marc as they demonstrate how to simplify the troubleshooting process in HCL Nomad Web, ensuring a smoother and more efficient user experience.

In this webinar, we will explore effective strategies for diagnosing and resolving common problems in HCL Nomad Web, including

- Accessing the console

- Locating and interpreting log files

- Accessing the data folder within the browser’s cache (using OPFS)

- Understand the difference between single- and multi-user scenarios

- Utilizing Client Clocking

Into The Box Conference Keynote Day 1 (ITB2025)

Into The Box Conference Keynote Day 1 (ITB2025)Ortus Solutions, Corp This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

Special Meetup Edition - TDX Bengaluru Meetup #52.pptxshyamraj55 We’re bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

Cyber Awareness overview for 2025 month of security

Cyber Awareness overview for 2025 month of securityriccardosl1 Cyber awareness training educates employees on risk associated with internet and malicious emails

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In France

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In Francechb3 The latest updates on Manifest's pre-seed stage progress.

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat

LinuxCon NA 2016: When Containers and Virtualization Do - and Don’t - Work Together

- 1. JEREMY EDER - RED HAT PERFORMANCE ENGINEERING WHEN CONTAINERS AND VIRTUALIZATION DO - AND DON’T - WORK TOGETHER Jeremy Eder, Sr Principal Performance Engineer LinuxCon/ContainerCon NA 2016

- 2. Agenda
  - Technology Trends
  - Container and VM Technical Overview
  - Performance Data Round-up
  - Workload Classification

- 3. Why listen to me...
  - Co-team lead for container performance and scale team at Red Hat.
  - Architect of Red Hat “tuned” project.
  - Authored many blogs and whitepapers on container performance, tuning for high frequency trading.

- 4. But really, don’t listen to me: Listen to your apps.

- 5. Key Technology Trends

- 6. Red Hat Container Solutions (stack diagram)
  - Service catalog (language runtimes, middleware, databases, …)
  - Self-service
  - Application lifecycle management (CI/CD)
  - Build automation
  - Deployment automation
  - Containers
  - Networking, security, storage, registry, logs & metrics
  - Infrastructure automation & Cockpit
  - Container orchestration & cluster management (Kubernetes)
  - Management solutions: CloudForms, Satellite, Ansible
  - Developer solutions: Developer Studio, CDK, Tools
  - Red Hat Enterprise Linux, container runtime & packaging (Docker), Atomic Host

- 7. Containers are an OS Technology (diagram: traditional OS vs. containers)

- 8. It’s all about the workloads...
  - Some don’t care where they run
    - Batch workloads
  - Some care greatly
    - Security, Isolation
    - Uptime
    - Performance
    - Proximity/Locality to data

- 9. What is a workload? Subsystems

- 10. What is a workload? Business Requirements

- 12. CONTAINERS AND VIRTUALIZATION: PERFORMANCE DATA ROUND-UP

- 13. Network Latency and Throughput

- 14. Virt Performance of Large “Expensive” Apps :-)

- 15. RHEL7 + Containerized Solarflare OpenOnload

- 16. Network Function Virtualization (NFV) Throughput and Packets/sec (RHEL7.x+DPDK)

- 17. Speedups for Virtual Machines

- 18. What is “tuned”? Tuning profile delivery mechanism

- 19. Tuned Profiles throughout Red Hat Products
  - RHEL7 Desktop/Workstation: balanced
  - RHEL6/7 KVM Host, Guest: virtual-host/guest
  - Red Hat Storage: rhs-high-throughput, virt
  - RHEL Atomic: atomic-host, atomic-guest
  - RHEL7 Server/HPC: throughput-performance
  - RHEV: virtual-host
  - RHEL OSP (compute node): virtual-host
  - OpenShift: openshift-master, node

- 20. Tuned Profiles
  - throughput-performance: governor, energy_perf_bias, c/pstates, readaheads, kernel.sched_min/wakeup_granularity_ns, vm.dirty_background/ratio, vm.swappiness
  - virtual-guest: vm.dirty_ratio, vm.swappiness
  - atomic-openshift-node: avc_cache_threshold, nf_conntrack_hashsize, kernel.pid_max, net.netfilter.nf_conntrack_max
  - Diagram labels: VM/Cloud, Bare Metal
  - future: tcp_fastopen, multiqueue virtio, limitnofile=N for node, pty_max=N, RFS?

- 21. KVM vs Container Performance (HP results)
  - Distributed Environment
    - Java application server
    - Internet Message Access Protocol (IMAP) server
    - Batch server
  - http://h20195.www2.hpe.com/V2/getpdf.aspx/4AA6-2761ENW.pdf

- 22. Workload → Infrastructure Mapping (color-coded table)
  - Columns: Workload, Bare Metal, Containers, Virt
  - Rows: CPU Intensive, Memory Intensive, Disk I/O Latency, Disk I/O Throughput, Network Latency, Network Throughput, Security, Uptime (Live Migration), Deployment Speed, Alternative OS
  - Color Meaning: Mature, No Perf Concerns; Immature, Limited Perf Concerns; Difficult/Impossible (currently)

- 23. Workload → Infrastructure Mapping: Build Farm
  - CPU Intensive: High
  - Memory Intensive: High
  - Disk I/O Latency: Low
  - Disk I/O Throughput: High
  - Network Latency: Low
  - Network Throughput: High
  - Security: Low
  - Uptime (Live Migration): N/A
  - Deployment Speed: High
  - Alternative OS: N/A
  - Icon Meaning: Mature and/or No Perf Concerns; Immature and/or Limited Perf Concerns; Mixed Concerns; Not Applicable

- 24. Workload → Infrastructure Mapping: memcached
  - CPU Intensive: Medium
  - Memory Intensive: Medium
  - Disk I/O Latency: Low
  - Disk I/O Throughput: Low
  - Network Latency: High
  - Network Throughput: High
  - Security: N/A
  - Uptime (Live Migration): N/A
  - Deployment Speed: Low
  - Alternative OS: N/A
  - Icon Meaning: Mature and/or No Perf Concerns; Immature and/or Limited Perf Concerns; Mixed Concerns; Not Applicable

- 25. Workload → Infrastructure Mapping: Stock Trading
  - CPU Intensive: High
  - Memory Intensive: High
  - Disk I/O Latency: Low
  - Disk I/O Throughput: Low
  - Network Latency: High
  - Network Throughput: High
  - Security: Low
  - Uptime (Live Migration): N/A
  - Deployment Speed: N/A
  - Alternative OS: N/A
  - Icon Meaning: Mature and/or No Perf Concerns; Immature and/or Limited Perf Concerns; Mixed Concerns; Not Applicable

- 26. Workload → Infrastructure Mapping: Gluster
  - CPU Intensive: Low
  - Memory Intensive: Low
  - Disk I/O Latency: High
  - Disk I/O Throughput: High
  - Network Latency: High
  - Network Throughput: High
  - Security: N/A
  - Uptime (Live Migration): N/A
  - Deployment Speed: Low
  - Alternative OS: N/A
  - Icon Meaning: Mature and/or No Perf Concerns; Immature and/or Limited Perf Concerns; Mixed Concerns; Not Applicable

- 27. Workload → Infrastructure Mapping: Animation
  - CPU Intensive: High
  - Memory Intensive: Medium
  - Disk I/O Latency: Medium
  - Disk I/O Throughput: High
  - Network Latency: Medium
  - Network Throughput: High
  - Security: Low
  - Uptime (Live Migration): Low
  - Deployment Speed: High
  - Alternative OS: N/A
  - Icon Meaning: Mature and/or No Perf Concerns; Immature and/or Limited Perf Concerns; Mixed Concerns; Not Applicable

- 28. It’s all about the workloads.

- 29. THANK YOU
  - plus.google.com/+RedHat
  - linkedin.com/company/red-hat
  - youtube.com/user/RedHatVideos
  - facebook.com/redhatinc
  - twitter.com/RedHatNews
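The per-workload attribute ratings on slides 23 to 27 can be captured as plain data and queried. The following is a minimal sketch, not the speaker's tooling: the ratings are copied from the slides, while the names `ATTRIBUTES`, `PROFILES`, `profile`, `dominant_attributes`, and `workloads_rated_high` are hypothetical, and reading "High" as "this attribute matters most for the workload" is an assumption about what the slides intend.

```python
# Attribute names and ratings are transcribed from slides 23-27.
# All identifiers below are illustrative, not from the talk.
ATTRIBUTES = [
    "CPU Intensive",
    "Memory Intensive",
    "Disk I/O Latency",
    "Disk I/O Throughput",
    "Network Latency",
    "Network Throughput",
    "Security",
    "Uptime (Live Migration)",
    "Deployment Speed",
    "Alternative OS",
]

# One rating per attribute, in the same order as ATTRIBUTES.
PROFILES = {
    "Build Farm": ["High", "High", "Low", "High", "Low", "High", "Low", "N/A", "High", "N/A"],
    "memcached": ["Medium", "Medium", "Low", "Low", "High", "High", "N/A", "N/A", "Low", "N/A"],
    "Stock Trading": ["High", "High", "Low", "Low", "High", "High", "Low", "N/A", "N/A", "N/A"],
    "Gluster": ["Low", "Low", "High", "High", "High", "High", "N/A", "N/A", "Low", "N/A"],
    "Animation": ["High", "Medium", "Medium", "High", "Medium", "High", "Low", "Low", "High", "N/A"],
}


def profile(workload):
    """Return an attribute -> rating mapping for one workload."""
    return dict(zip(ATTRIBUTES, PROFILES[workload]))


def dominant_attributes(workload):
    """List the attributes rated High for a workload, in slide order."""
    return [name for name, rating in profile(workload).items() if rating == "High"]


def workloads_rated_high(attribute):
    """List the workloads whose rating for the given attribute is High."""
    return sorted(w for w in PROFILES if profile(w)[attribute] == "High")


if __name__ == "__main__":
    for name in PROFILES:
        print(name, "->", ", ".join(dominant_attributes(name)))
```

Listing the High-rated attributes first gives a short list of the rows to check in the Workload → Infrastructure Mapping table on slide 22 when comparing bare metal, containers, and virtualization for a given application.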