Linux-HA with Pacemaker

- 1. Linux High Availability Kris Buytaert

- 2. Kris Buytaert @krisbuytaert ● I used to be a Dev, Then Became an Op ● Senior Linux and Open Source Consultant @inuits.be ● „Infrastructure Architect“ ● Building Clouds since before the Cloud ● Surviving the 10th floor test ● Co-Author of some books ● Guest Editor at some sites

- 3. What is HA Clustering ? ● One service goes down => others take over its work ● IP address takeover, service takeover, ● Not designed for high-performance ● Not designed for high troughput (load balancing)

- 4. Does it Matter ? ● Downtime is expensive ● You mis out on $$$ ● Your boss complains ● New users don't return

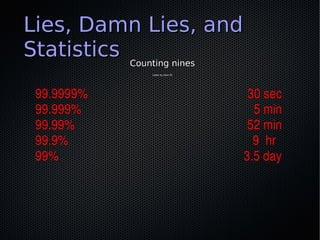

- 5. Lies, Damn Lies, and Statistics Counting nines (slide by Alan R) 99.9999% 30 sec 99.999% 5 min 99.99% 52 min 99.9% 9 hr 99% 3.5 day

- 6. The Rules of HA ● Keep it Simple ● Keep it Simple ● Prepare for Failure ● Complexity is the enemy of reliability ● Test your HA setup

- 7. Myths ● Virtualization will solve your HA Needs ● Live migration is the solution to all your problems ● VM mirroring is the solution to all your problems ● HA will make your platform more stable

- 8. Eliminating the SPOF ● Find out what Will Fail • Disks • Fans • Power (Supplies) ● Find out what Can Fail • Network • Going Out Of Memory

- 9. Split Brain ● Communications failures can lead to separated partitions of the cluster ● If those partitions each try and take control of the cluster, then it's called a split-brain condition ● If this happens, then bad things will happen • http://linux-ha.org/BadThingsWillHappen

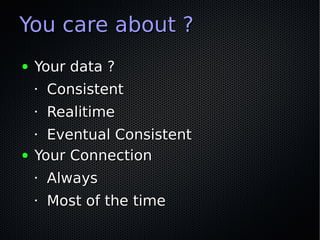

- 10. You care about ? ● Your data ? • Consistent • Realitime • Eventual Consistent ● Your Connection • Always • Most of the time

- 11. Shared Storage ● Shared Storage ● Filesystem • e.g GFS, GpFS ● Replicated ? ● Exported Filesystem ? ● $$$ 1+1 <> 2 ● Storage = SPOF ● Split Brain :( ● Stonith

- 12. (Shared) Data ● Issues : • Who Writes ? • Who Reads ? • What if 2 Active application want to write ? • What if an active server crashes during writing ? • Can we accept delays ? • Can we accept readonly data ? ● Hardware Requirements ● Filesystem Requirements (GFS, GpFS, ...)

- 13. DRBD ● Distributed Replicated Block Device ● In the Linux Kernel (as of very recent) ● Usually only 1 mount • Multi mount as of 8.X • Requires GFS / OCFS2 ● Regular FS ext3 ... ● Only 1 application instance Active accessing data ● Upon Failover application needs to be started on other node

- 14. DRBD(2) ● What happens when you pull the plug of a Physical machine ? • Minimal Timeout • Why did the crash happen ? • Is my data still correct ?

- 15. Alternatives to DRBD ● GlusterFS looked promising • “Friends don't let Friends use Gluster” • Consistency problems • Stability Problems • Maybe later ● MogileFS • Not posix • App needs to implement the API ● Ceph • ?

- 16. HA Projects ● Linux HA Project ● Red Hat Cluster Suite ● LVS/Keepalived ● Application Specific Clustering Software • e.g Terracotta, MySQL NDBD

- 17. Heartbeat ● Heartbeat v1 • Max 2 nodes • No finegrained resources • Monitoring using “mon” ● Heartbeat v2 • XML usage was a consulting opportunity • Stability issues • Forking ?

- 18. Heartbeat v1 /etc/ha.d/ha.cf /etc/ha.d/haresources mdb-a.menos.asbucenter.dz ntc-restart-mysql mon IPaddr2::10.8.0.13/16/bond0 IPaddr2::10.16.0.13/16/bond0.16 mon /etc/ha.d/authkeys

- 19. Heartbeat v2 “A consulting Opportunity” LMB

- 20. Clone Resource Clones in v2 were buggy Resources were started on 2 nodes Stopped again on “1”

- 21. Heartbeat v3 • No more /etc/ha.d/haresources • No more xml • Better integrated monitoring • /etc/ha.d/ha.cf has • crm=yes

- 22. Pacemaker ? ● Not a fork ● Only CRM Code taken out of Heartbeat ● As of Heartbeat 2.1.3 • Support for both OpenAIS / HeartBeat • Different Release Cycles as Heartbeat

- 23. Heartbeat, OpenAis, Corosync ? ● All Messaging Layers ● Initially only Heartbeat ● OpenAIS ● Heartbeat got unmaintained ● OpenAIS had heisenbugs :( ● Corosync ● Heartbeat maintenance taken over by LinBit ● CRM Detects which layer

- 24. Pacemaker Heartbeat or OpenAIS Cluster Glue

- 25. ● Stonithd : The Heartbeat fencing Pacemaker Architecture subsystem. ● Lrmd : Local Resource Management Daemon. Interacts directly with resource agents (scripts). ● pengine Policy Engine. Computes the next state of the cluster based on the current state and the configuration. ● cib Cluster Information Base. Contains definitions of all cluster options, nodes, resources, their relationships to one another and current status. Synchronizes updates to all cluster nodes. ● crmd Cluster Resource Management Daemon. Largely a message broker for the PEngine and LRM, it also elects a leader to co-ordinate the activities of the cluster. ● openais messaging and membership layer. ● heartbeat messaging layer, an alternative to OpenAIS. ● ccm Short for Consensus Cluster Membership. The Heartbeat membership layer.

- 26. Configuring Heartbeat with puppet heartbeat::hacf {"clustername": hosts => ["host-a","host-b"], hb_nic => ["bond0"], hostip1 => ["10.0.128.11"], hostip2 => ["10.0.128.12"], ping => ["10.0.128.4"], } heartbeat::authkeys {"ClusterName": password => “ClusterName ", } http://github.com/jtimberman/puppet/tree/master/heartbeat/

- 27. CRM configure ● Cluster Resource Manager property $id="cibbootstrapoptions" stonithenabled="FALSE" ● Keeps Nodes in Sync noquorumpolicy=ignore startfailureisfatal="FALSE" ● XML Based rsc_defaults $id="rsc_defaultsoptions" migrationthreshold="1" failuretimeout="1" ● cibadm primitive d_mysql ocf:local:mysql op monitor interval="30s" ● Cli manageable params test_user="sure" test_passwd="illtell" test_table="test.table" ● Crm primitive ip_db ocf:heartbeat:IPaddr2 params ip="172.17.4.202" nic="bond0" op monitor interval="10s" group svc_db d_mysql ip_db commit

- 28. Heartbeat Resources ● LSB ● Heartbeat resource (+status) ● OCF (Open Cluster FrameWork) (+monitor) ● Clones (don't use in HAv2) ● Multi State Resources

- 29. LSB Resource Agents ● LSB == Linux Standards Base ● LSB resource agents are standard System V-style init scripts commonly used on Linux and other UNIX-like OSes ● LSB init scripts are stored under /etc/init.d/ ● This enables Linux-HA to immediately support nearly every service that comes with your system, and most packages which come with their own init script ● It's straightforward to change an LSB script to an OCF script

- 30. OCF ● OCF == Open Cluster Framework ● OCF Resource agents are the most powerful type of resource agent we support ● OCF RAs are extended init scripts • They have additional actions: • monitor – for monitoring resource health • meta-data – for providing information about the RA ● OCF RAs are located in /usr/lib/ocf/resource.d/provider-name/

- 31. Monitoring ● Defined in the OCF Resource script ● Configured in the parameters ● You have to support multiple states • Not running • Running • Failed

- 32. Anatomy of a Cluster config • Cluster properties • Resource Defaults • Primitive Definitions • Resource Groups and Constraints

- 33. Cluster Properties property $id="cib-bootstrap-options" stonith-enabled="FALSE" no-quorum-policy="ignore" start-failure-is-fatal="FALSE" No-quorum-policy = We'll ignore the loss of quorum on a 2 node cluster Start-failure : When set to FALSE, the cluster will instead use the resource's failcount and value for resource-failure-stickiness

- 34. Resource Defaults rsc_defaults $id="rsc_defaults-options" migration-threshold="1" failure-timeout="1" resource-stickiness="INFINITY" failure-timeout means that after a failure there will be a 60 second timeout before the resource can come back to the node on which it failed. Migration-treshold=1 means that after 1 failure the resource will try to start on the other node Resource-stickiness=INFINITY means that the resource really wants to stay where it is now.

- 35. Primitive Definitions primitive d_mine ocf:custom:tomcat params instance_name="mine" monitor_urls="health.html" monitor_use_ssl="no" op monitor interval="15s" on-fail="restart" primitive ip_mine_svc ocf:heartbeat:IPaddr2 params ip="10.8.4.131" cidr_netmask="16" nic="bond0" op monitor interval="10s"

- 36. Parsing a config ● Isn't always done correctly ● Even a verify won't find all issues ● Unexpected behaviour might occur

- 37. Where a resource runs • multi state resources • Master – Slave , • e.g mysql master-slave, drbd • Clones • Resources that can run on multiple nodes e.g • Multimaster mysql servers • Mysql slaves • Stateless applications • location • Preferred location to run resource, eg. Based on hostname • colocation • Resources that have to live together • e.g ip address + service • order Define what resource has to start first, or wait for another resource • groups • Colocation + order

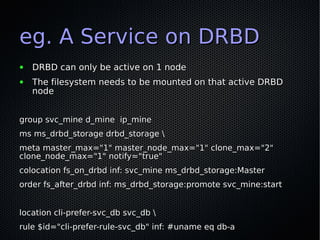

- 38. eg. A Service on DRBD ● DRBD can only be active on 1 node ● The filesystem needs to be mounted on that active DRBD node group svc_mine d_mine ip_mine ms ms_drbd_storage drbd_storage meta master_max="1" master_node_max="1" clone_max="2" clone_node_max="1" notify="true" colocation fs_on_drbd inf: svc_mine ms_drbd_storage:Master order fs_after_drbd inf: ms_drbd_storage:promote svc_mine:start location cli-prefer-svc_db svc_db rule $id="cli-prefer-rule-svc_db" inf: #uname eq db-a

- 39. Crm commands Crm Start the cluster resource manager Crm resource Change in to resource mode Crm configure Change into configure mode Crm configure show Show the current resource config Crm resource show Show the current resource state Cibadm -Q Dump the full Cluster Information Base in XML

- 40. Using crm ● Crm configure ● Edit primitive ● Verify ● Commit

- 41. But We love XML ● Cibadm -Q

- 42. Checking the Cluster State crm_mon -1 ============ Last updated: Wed Nov 4 16:44:26 2009 Stack: Heartbeat Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7 2 Nodes configured, unknown expected votes 2 Resources configured. ============ Online: [ xms-1 xms-2 ] Resource Group: svc_mysql d_mysql (ocf::ntc:mysql): Started xms-1 ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1 Resource Group: svc_XMS d_XMS (ocf::ntc:XMS): Started xms-2 ip_XMS (ocf::heartbeat:IPaddr2): Started xms-2 ip_XMS_public (ocf::heartbeat:IPaddr2): Started xms-2

- 43. Stopping a resource crm resource stop svc_XMS crm_mon -1 ============ Last updated: Wed Nov 4 16:56:05 2009 Stack: Heartbeat Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7 2 Nodes configured, unknown expected votes 2 Resources configured. ============ Online: [ xms-1 xms-2 ] Resource Group: svc_mysql d_mysql (ocf::ntc:mysql): Started xms-1 ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1

- 44. Starting a resource crm resource start svc_XMS crm_mon -1 ============ Last updated: Wed Nov 4 17:04:56 2009 Stack: Heartbeat Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7 2 Nodes configured, unknown expected votes 2 Resources configured. ============ Online: [ xms-1 xms-2 ] Resource Group: svc_mysql d_mysql (ocf::ntc:mysql): Started xms-1 ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1 Resource Group: svc_XMS

- 45. Moving a resource ● Resource migrate ● Is permanent , even upon failure ● Usefull in upgrade scenarios ● Use resource unmigrate to restore

- 46. Moving a resource [xpoll-root@XMS-1 ~]# crm resource migrate svc_XMS xms-1 [xpoll-root@XMS-1 ~]# crm_mon -1 Last updated: Wed Nov 4 17:32:50 2009 Stack: Heartbeat Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7 2 Nodes configured, unknown expected votes 2 Resources configured. Online: [ xms-1 xms-2 ] Resource Group: svc_mysql d_mysql (ocf::ntc:mysql): Started xms-1 ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1 Resource Group: svc_XMS d_XMS (ocf::ntc:XMS): Started xms-1 ip_XMS (ocf::heartbeat:IPaddr2): Started xms-1 ip_XMS_public (ocf::heartbeat:IPaddr2): Started xms-1

- 47. Migrate vs Standby ● Think nrofnodes > 2 clusters ● Migrate : send resource to node X • Only use that available one ● Standby : do not send resources to node X • But use the other available ones

- 48. Debugging ● Check crm_mon -f ● Failcounts ? ● Did the application launch correctly ? ● /var/log/messages/ • Warning: very verbose

- 49. Resource not running [menos-val3-root@mrs-a ~]# crm crm(live)# resource crm(live)resource# show Resource Group: svc-MRS d_MRS (ocf::ntc:tomcat) Stopped ip_MRS_svc (ocf::heartbeat:IPaddr2) Stopped ip_MRS_usr (ocf::heartbeat:IPaddr2) Stopped

- 50. Resource Failcount [menos-val3-root@mrs-a ~]# crm crm(live)# resource crm(live)resource# failcount d_MRS show mrs-a scope=status name=fail-count-d_MRS value=1 crm(live)resource# failcount d_MRS delete mrs-a crm(live)resource# failcount d_MRS show mrs-a scope=status name=fail-count-d_MRS value=0

- 51. Resource Failcount [menos-val3-root@mrs-a ~]# crm crm(live)# resource crm(live)resource# failcount d_MRS show mrs-a scope=status name=fail-count-d_MRS value=1 crm(live)resource# failcount d_MRS delete mrs-a crm(live)resource# failcount d_MRS show mrs-a scope=status name=fail-count-d_MRS value=0

- 52. Resource Failcount [menos-val3-root@mrs-a ~]# crm crm(live)# resource crm(live)resource# failcount d_MRS show mrs-a scope=status name=fail-count-d_MRS value=1 crm(live)resource# failcount d_MRS delete mrs-a crm(live)resource# failcount d_MRS show mrs-a scope=status name=fail-count-d_MRS value=0

- 53. Pacemaker and Puppet ● Plenty of non usable modules around • Hav1 ● https://github.com/rodjek/puppet-pacemaker.git • Strict set of ops / parameters ● ● Make sure your modules don't enable resources ● I've been using templates till to populate ● Cibadm to configure ● Crm is complex , even crm doesn't parse correctly yet ● ● Plenty of work ahead !

- 54. Getting Help ● http://clusterlabs.org ● #linux-ha on irc.freenode.org ● http://www.drbd.org/users-guide/

- 55. Contact : Kris Buytaert [email protected] Further Reading @krisbuytaert http://www.krisbuytaert.be/blog/ http://www.inuits.be/ http://www.virtualizati on.com/ http://www.oreillygmt.com/ Inuits Esquimaux 't Hemeltje Kheops Business Gemeentepark 2 Center 2930 Brasschaat Avenque Georges 891.514.231 Lemaître 54 6041 Gosselies +32 473 441 636 889.780.406

![Configuring Heartbeat with puppet

heartbeat::hacf {"clustername":

hosts => ["host-a","host-b"],

hb_nic => ["bond0"],

hostip1 => ["10.0.128.11"],

hostip2 => ["10.0.128.12"],

ping => ["10.0.128.4"],

}

heartbeat::authkeys {"ClusterName":

password => “ClusterName ",

}

http://github.com/jtimberman/puppet/tree/master/heartbeat/](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-26-320.jpg)

![Checking the Cluster

State

crm_mon -1

============

Last updated: Wed Nov 4 16:44:26 2009

Stack: Heartbeat

Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum

Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7

2 Nodes configured, unknown expected votes

2 Resources configured.

============

Online: [ xms-1 xms-2 ]

Resource Group: svc_mysql

d_mysql (ocf::ntc:mysql): Started xms-1

ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1

Resource Group: svc_XMS

d_XMS (ocf::ntc:XMS): Started xms-2

ip_XMS (ocf::heartbeat:IPaddr2): Started xms-2

ip_XMS_public (ocf::heartbeat:IPaddr2): Started xms-2](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-42-320.jpg)

![Stopping a resource

crm resource stop svc_XMS

crm_mon -1

============

Last updated: Wed Nov 4 16:56:05 2009

Stack: Heartbeat

Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum

Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7

2 Nodes configured, unknown expected votes

2 Resources configured.

============

Online: [ xms-1 xms-2 ]

Resource Group: svc_mysql

d_mysql (ocf::ntc:mysql): Started xms-1

ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-43-320.jpg)

![Starting a resource

crm resource start svc_XMS

crm_mon -1

============

Last updated: Wed Nov 4 17:04:56 2009

Stack: Heartbeat

Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum

Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7

2 Nodes configured, unknown expected votes

2 Resources configured.

============

Online: [ xms-1 xms-2 ]

Resource Group: svc_mysql

d_mysql (ocf::ntc:mysql): Started xms-1

ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1

Resource Group: svc_XMS](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-44-320.jpg)

![Moving a resource

[xpoll-root@XMS-1 ~]# crm resource migrate svc_XMS xms-1

[xpoll-root@XMS-1 ~]# crm_mon -1

Last updated: Wed Nov 4 17:32:50 2009

Stack: Heartbeat

Current DC: xms-1 (c2c581f8-4edc-1de0-a959-91d246ac80f5) - partition with quorum

Version: 1.0.5-462f1569a43740667daf7b0f6b521742e9eb8fa7

2 Nodes configured, unknown expected votes

2 Resources configured.

Online: [ xms-1 xms-2 ]

Resource Group: svc_mysql

d_mysql (ocf::ntc:mysql): Started xms-1

ip_mysql (ocf::heartbeat:IPaddr2): Started xms-1

Resource Group: svc_XMS

d_XMS (ocf::ntc:XMS): Started xms-1

ip_XMS (ocf::heartbeat:IPaddr2): Started xms-1

ip_XMS_public (ocf::heartbeat:IPaddr2): Started xms-1](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-46-320.jpg)

![Resource not running

[menos-val3-root@mrs-a ~]# crm

crm(live)# resource

crm(live)resource# show

Resource Group: svc-MRS

d_MRS (ocf::ntc:tomcat) Stopped

ip_MRS_svc (ocf::heartbeat:IPaddr2) Stopped

ip_MRS_usr (ocf::heartbeat:IPaddr2) Stopped](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-49-320.jpg)

![Resource Failcount

[menos-val3-root@mrs-a ~]# crm

crm(live)# resource

crm(live)resource# failcount d_MRS show mrs-a

scope=status name=fail-count-d_MRS value=1

crm(live)resource# failcount d_MRS delete mrs-a

crm(live)resource# failcount d_MRS show mrs-a

scope=status name=fail-count-d_MRS value=0](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-50-320.jpg)

![Resource Failcount

[menos-val3-root@mrs-a ~]# crm

crm(live)# resource

crm(live)resource# failcount d_MRS show mrs-a

scope=status name=fail-count-d_MRS value=1

crm(live)resource# failcount d_MRS delete mrs-a

crm(live)resource# failcount d_MRS show mrs-a

scope=status name=fail-count-d_MRS value=0](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-51-320.jpg)

![Resource Failcount

[menos-val3-root@mrs-a ~]# crm

crm(live)# resource

crm(live)resource# failcount d_MRS show mrs-a

scope=status name=fail-count-d_MRS value=1

crm(live)resource# failcount d_MRS delete mrs-a

crm(live)resource# failcount d_MRS show mrs-a

scope=status name=fail-count-d_MRS value=0](https://image.slidesharecdn.com/pacemaker-110418162217-phpapp01/85/Linux-HA-with-Pacemaker-52-320.jpg)