Machine Learning Algorithm - KNN

The k-nearest neighbors (kNN) algorithm assumes that similar data points lie close to one another. It computes the distance between data points to find the k nearest neighbors, where k is a user-defined value. To classify a new data point, kNN finds its k nearest neighbors and assigns the most common label among them. Choosing k involves testing several values and selecting the one that minimizes error on unseen data: a k that is too small tends to overfit, while a k that is too large tends to underfit. Although simple to implement, kNN becomes slow on large datasets, because each prediction requires computing distances to the stored training points.
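The summary above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method used in the slides: it assumes Euclidean distance, a brute-force neighbor search, and leave-one-out error as the way to compare candidate k values. The function names (`knn_predict`, `loo_error`, `choose_k`) and the toy data are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_X, train_y, query, k):
    """Return the most common label among the k training points nearest to query."""
    # Brute force: rank every training point by its distance to the query.
    ranked = sorted(zip(train_X, train_y), key=lambda p: euclidean(p[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


def loo_error(X, y, k):
    """Leave-one-out error rate: predict each point from all the other points."""
    errors = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        if knn_predict(rest_X, rest_y, X[i], k) != y[i]:
            errors += 1
    return errors / len(X)


def choose_k(X, y, candidates):
    """Pick the candidate k with the lowest leave-one-out error (first one on ties)."""
    return min(candidates, key=lambda k: loo_error(X, y, k))


# Toy data (assumed for illustration): two well-separated groups.
X = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
y = ["a", "a", "a", "b", "b", "b"]

if __name__ == "__main__":
    print(knn_predict(X, y, (1.1, 1.0), k=3))
    print({k: loo_error(X, y, k) for k in (1, 3, 5)})
    print(choose_k(X, y, [1, 3, 5]))
```

On this toy data, k = 5 is too large: once a point is held out, the other class outnumbers its own among the five remaining neighbors, so every held-out point is misclassified. The sort over all training points on every query is also the cost that makes brute-force kNN slow on large datasets.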

Recommended

KNN Algorithm - How KNN Algorithm Works With Example | Data Science For Begin...

By Simplilearn. This K-Nearest Neighbor Classification Algorithm presentation (KNN Algorithm) will help you understand what KNN is, why we need it, how to choose the factor 'K', when to use KNN, and how the algorithm works. It also includes a use case demo showing how to predict whether a person will have diabetes using KNN. The KNN algorithm can be applied to both classification and regression problems, although within the data science industry it is more widely used for classification. It is a simple algorithm that stores all available cases and classifies any new case by taking a majority vote of its k neighbors. Now let's dive into these slides to understand what the KNN algorithm is and how it actually works.

The following topics are explained in this K-Nearest Neighbor Classification Algorithm (KNN Algorithm) tutorial:

1. Why do we need KNN?

2. What is KNN?

3. How do we choose the factor 'K'?

4. When do we use KNN?

5. How does KNN algorithm work?

6. Use case - Predict whether a person will have diabetes or not

Simplilearn’s Machine Learning course will make you an expert in Machine Learning, a form of Artificial Intelligence that automates data analysis to enable computers to learn and adapt through experience to do specific tasks without explicit programming. You will master Machine Learning concepts and techniques, including supervised and unsupervised learning, mathematical and heuristic aspects, and hands-on modeling to develop algorithms, and prepare for the role of Machine Learning Engineer.

Why learn Machine Learning?

Machine Learning is rapidly being deployed in all kinds of industries, creating a huge demand for skilled professionals. The Machine Learning market size is expected to grow from USD 1.03 billion in 2016 to USD 8.81 billion by 2022, at a Compound Annual Growth Rate (CAGR) of 44.1% during the forecast period.

You can gain in-depth knowledge of Machine Learning by taking our Machine Learning certification training course. With Simplilearn’s Machine Learning course, you will prepare for a career as a Machine Learning engineer as you master concepts and techniques including supervised and unsupervised learning, mathematical and heuristic aspects, and hands-on modeling to develop algorithms. Those who complete the course will be able to:

1. Master the concepts of supervised, unsupervised and reinforcement learning and modeling.

2. Gain practical mastery over principles, algorithms, and applications of Machine Learning through a hands-on approach which includes working on 28 projects and one capstone project.

3. Acquire thorough knowledge of the mathematical and heuristic aspects of Machine Learning.

4. Understand the concepts and operation of support vector machines, kernel SVM, Naive Bayes, decision tree classifier, random forest classifier, logistic regression, K-nearest neighbors, K-means clustering and more.

Learn more at: https://www.simplilearn.com

K-Nearest Neighbor Classifier

By Neha Kulkarni. K-nearest neighbor is one of the most commonly used classifiers based on lazy learning. It is also one of the most commonly used methods in recommendation systems and document similarity measures. It mainly uses Euclidean distance to measure the similarity between two data points.

Knn Algorithm presentation

By RishavSharma112. k-Nearest Neighbors (k-NN) is a simple machine learning algorithm that classifies new data points based on their similarity to existing data points. It stores all available data and classifies new data by using a distance function to find the k nearest neighbors. k-NN is a non-parametric lazy learning algorithm that is widely used for classification and pattern recognition problems. It performs well when there is a large amount of sample data, but it can be slow, and the choice of k can affect performance.

Decision tree

By SEMINARGROOT. The document discusses various decision tree learning methods. It begins by defining decision trees and issues in decision tree learning, such as how to split training records and when to stop splitting. It then covers impurity measures like misclassification error, Gini impurity, information gain, and variance reduction. The document outlines algorithms like ID3, C4.5, C5.0, and CART. It also discusses ensemble methods like bagging, random forests, boosting, AdaBoost, and gradient boosting.

Bayes Belief Networks

By Sai Kumar Kodam. The document discusses Bayesian belief networks (BBNs), which represent probabilistic relationships between variables. A BBN consists of a directed acyclic graph showing the dependencies between nodes (variables) and conditional probability tables quantifying their effects. BBNs can represent the conditional independence of a variable from its non-descendants given its parents. The document provides an example BBN modeling a home alarm system and neighbors calling the police. It then uses the network to calculate the probability of a burglary given that one neighbor called the police. Advantages are handling incomplete data, learning causation, and using prior knowledge, while a disadvantage is more complex graph construction.

PCA (Principal component analysis)

By Learnbay Datascience. Principal Component Analysis, or PCA, is a statistical method that lets you summarize the information contained in large data tables by means of a smaller set of "summary indices" that can be more easily visualized and analyzed.

KNN

By West Virginia University. The document discusses the K-nearest neighbor (K-NN) classifier, a machine learning algorithm where data is classified based on its similarity to its nearest neighbors. K-NN is a lazy learning algorithm that assigns each data point to the most common class among its K nearest neighbors. The value of K impacts the classification, with larger K values reducing noise but possibly oversmoothing boundaries. K-NN is simple, intuitive, and can handle non-linear decision boundaries, but has disadvantages such as computational expense and sensitivity to K value selection.

Hierarchical Clustering

By Carlos Castillo (ChaTo). Part of the course "Algorithmic Methods of Data Science". Sapienza University of Rome, 2015.

http://aris.me/index.php/data-mining-ds-2015

Clustering ppt

By sreedevibalasubraman. Clustering is the process of grouping similar objects together. Hierarchical agglomerative clustering builds a hierarchy by iteratively merging the closest pairs of clusters. It starts with each document in its own cluster and successively merges the closest pairs of clusters until all documents are in one cluster, forming a dendrogram. Different linkage methods, such as single, complete, and average linkage, define how the distance between clusters is calculated during merging. Hierarchical clustering provides a multilevel clustering structure but has computational complexity of O(n^3) in general.

Introduction to Machine Learning

By Knowledge And Skill Forum. This document provides an introduction to machine learning, including definitions, types of machine learning problems, common algorithms, and typical machine learning processes. It defines machine learning as a type of artificial intelligence that enables computers to learn without being explicitly programmed. The three main types of machine learning problems are supervised learning (classification and regression), unsupervised learning (clustering and association), and reinforcement learning. Common machine learning algorithms and examples of their applications are also discussed. The document concludes with an overview of typical machine learning processes such as selecting and preparing data, developing and evaluating models, and interpreting results.

K - Nearest neighbor ( KNN )

By Mohammad Junaid Khan. Machine learning algorithm (KNN) for classification and regression.

Lazy learning, competitive and instance-based learning.

Naive Bayes Classifier

By Yiqun Hu.

1. The Naive Bayes classifier is a simple probabilistic classifier based on Bayes' theorem that assumes independence between features.

2. It has various applications including email spam detection, language detection, and document categorization.

3. The Naive Bayes approach involves computing the class prior probabilities, feature likelihoods, and applying Bayes' theorem to calculate the posterior probabilities to classify new instances. Laplace smoothing is often used to handle cases with insufficient training data.

Machine learning clustering

By CosmoAIMS Bassett. This document discusses machine learning concepts including supervised vs. unsupervised learning, clustering algorithms, and specific methods like k-means and k-nearest neighbors. It provides examples of how clustering can be used for applications such as market segmentation and astronomical data analysis. Key algorithms covered are hierarchical methods, partitioning methods, k-means, which groups data by assigning objects to the closest cluster center, and k-nearest neighbors, which classifies new data based on its closest training examples.

K means Clustering Algorithm

By Kasun Ranga Wijeweera. K-means clustering is an algorithm that groups data points into k clusters based on their similarity. It works by randomly selecting k data points as initial cluster centroids and then assigning each remaining point to the closest centroid. It then recalculates the centroids and reassigns points in an iterative process until the centroids stabilize. While efficient, k-means clustering has weaknesses in that it requires specifying k, can get stuck in local optima, and is not suitable for non-convex clusters or noisy data.

K Nearest Neighbors

By Tilani Gunawardena PhD(UNIBAS), BSc(Pera), FHEA(UK), CEng, MIESL. The document discusses the K-nearest neighbors (KNN) algorithm, a simple machine learning algorithm used for classification problems. KNN works by finding the K training examples that are closest in distance to a new data point, and assigning the most common class among those K examples as the prediction for the new data point. The document covers how KNN calculates distances between data points, how to choose the K value, techniques for handling different data types, and the strengths and weaknesses of the KNN algorithm.

Decision Tree Algorithm With Example | Decision Tree In Machine Learning | Da...

By Simplilearn. The document discusses decision trees and how they work. It begins by explaining what a decision tree is: a tree-shaped diagram used to determine a course of action, with each branch representing a possible decision. It then provides examples of using a decision tree to classify vegetables and animals based on their features. The document also covers key decision tree concepts like entropy, information gain, leaf nodes, decision nodes, and the root node. It demonstrates how a decision tree is built by choosing splits that maximize information gain. Finally, it presents a use case of using a decision tree to predict loan repayment.

Dimensionality Reduction and feature extraction.pptx

By Sivam Chinna. Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension.

Naive Bayes Presentation

By Md. Enamul Haque Chowdhury. This document provides an overview of Naive Bayes classification. It begins with background on classification methods, then covers Bayes' theorem and how it relates to Bayesian and maximum likelihood classification. The document introduces Naive Bayes classification, which makes a strong independence assumption to simplify probability calculations. It discusses algorithms for discrete and continuous features, and addresses common issues like dealing with zero probabilities. The document concludes by outlining some applications of Naive Bayes classification and its advantages of simplicity and effectiveness for many problems.

3.5 model based clustering

By Krish_ver2. The document discusses various model-based clustering techniques for handling high-dimensional data, including expectation-maximization, conceptual clustering using COBWEB, self-organizing maps, subspace clustering with CLIQUE and PROCLUS, and frequent pattern-based clustering. It provides details on the methodology and assumptions of each technique.

Anomaly detection Workshop slides

By QuantUniversity. Anomaly detection (or outlier analysis) is the identification of items, events or observations which do not conform to an expected pattern or to other items in a dataset. It is used in applications such as intrusion detection, fraud detection, fault detection and process monitoring in various domains including energy, healthcare and finance.

In this workshop, we will discuss the core techniques in anomaly detection and discuss advances in Deep Learning in this field.

Through case studies, we will discuss how anomaly detection techniques could be applied to various business problems. We will also demonstrate examples using R, Python, Keras and Tensorflow applications to help reinforce concepts in anomaly detection and best practices in analyzing and reviewing results.

What you will learn:

Anomaly Detection: An introduction

Graphical and Exploratory analysis techniques

Statistical techniques in Anomaly Detection

Machine learning methods for Outlier analysis

Evaluating performance in Anomaly detection techniques

Detecting anomalies in time series data

Case study 1: Anomalies in Freddie Mac mortgage data

Case study 2: Auto-encoder based Anomaly Detection for Credit risk with Keras and Tensorflow

K Means Clustering Algorithm | K Means Example in Python | Machine Learning A...

By Edureka!. The document discusses the K-Means clustering algorithm. It begins by defining clustering as grouping similar data points together. It then describes K-Means clustering, which groups data into K clusters by minimizing distances between points and cluster centers. The K-Means algorithm works by randomly selecting K initial cluster centers, assigning each point to the closest center, and recalculating the centers after each assignment pass until the clusters stabilize. The best number of clusters K is found through trial and error to minimize variation between points and their cluster centers.

Decision tree

By Ami_Surati. The document discusses decision tree algorithms. It begins with an introduction and example, then covers the principles of entropy and information gain used to build decision trees. It provides explanations of key concepts like entropy, information gain, and how decision trees are constructed and evaluated. Examples are given to illustrate these concepts. The document concludes with strengths and weaknesses of decision tree algorithms.

Iris - Most loved dataset

By DrAsmitaTitre. This document summarizes the Iris flower data set, which contains measurements of 150 iris flowers from three species. It describes the four attributes measured (sepal length and width, petal length and width) and explains that one species is linearly separable from the other two. Various visualizations and analyses are proposed to better understand the relationships between attributes and species, including box plots, correlation matrices, and evaluating classification algorithms using different feature combinations. Accuracy results are presented for models trained and tested on the data split in various ways.

KNN

By BhuvneshYadav13. The document discusses the K-nearest neighbors (KNN) algorithm, a supervised machine learning classification method. KNN classifies new data based on the labels of the k nearest training samples in feature space. It can be used for both classification and regression problems, though it is mainly used for classification. The algorithm works by finding the k closest samples in the training data to the new sample and predicting the label based on a majority vote of the k neighbors' labels.

Hierachical clustering

By Tilani Gunawardena PhD(UNIBAS), BSc(Pera), FHEA(UK), CEng, MIESL. K-means clustering is an unsupervised machine learning algorithm that groups unlabeled data points into a specified number of clusters (k) based on their similarity. It works by randomly assigning data points to k clusters and then iteratively updating cluster centroids and reassigning points until cluster membership stabilizes. K-means clustering aims to minimize intra-cluster variation while maximizing inter-cluster variation. There are various applications and variants of the basic k-means algorithm.

K-means clustering algorithm

By Vinit Dantkale. K-means clustering is an algorithm that groups data points into k clusters based on their similarity, with each point assigned to the cluster with the nearest mean. It works by randomly selecting k cluster centroids and then iteratively assigning data points to the closest centroid and recalculating the centroids until convergence. K-means clustering is fast, efficient, and commonly used for vector quantization, image segmentation, and discovering customer groups in marketing. Its runtime complexity is O(t*k*n) where t is the number of iterations, k is the number of clusters, and n is the number of data points.

Introduction to Machine Learning Classifiers

By Functional Imperative. You will learn the basic concepts of machine learning classification and be introduced to several algorithms that can be used. This is a very high-level overview and does not get into the nitty-gritty details.

Decision tree

By ShraddhaPandey45. This is a simple, easy-to-understand presentation. It defines decision trees, information gain, and Gini impurity, gives the steps for building a decision tree, and lists the pros and cons, to help you understand and present the topic.

KNN Classificationwithexplanation and examples.pptx

By ansarinazish958. This document is a complete explanation of the K Nearest Neighbor classification algorithm. It includes both the theory and step-by-step examples of KNN classification.

Statistical Machine Learning unit3 lecture notes

By SureshK256753. Statistical Machine Learning unit3 lecture notes

Machine Learning Algorithm - KNN

- 1. Lesson 18 Classification Techniques – K-Nearest Neighbors Kush Kulshrestha

- 2. kNN Algorithm Summed up

- 3. kNN Algorithm The KNN algorithm assumes that similar things exist in close proximity. In other words, similar things are near to each other. Notice in the image above that most of the time, similar data points are close to each other. The KNN algorithm hinges on this assumption being true enough for the algorithm to be useful.

- 4. Which data points are near to me? KNN captures the idea of similarity (sometimes called distance, proximity, or closeness) by calculating the distance between points on a graph. How could you find the distance between two points? One way is to use the Pythagorean theorem and draw some perpendicular lines.

- 5. Which data points are near to me? By solving the Pythagorean equation for the hypotenuse c, we get a value that can be used as the effective distance between A and B. This distance is called the Euclidean distance. Other distance metrics can also be used to compute a number representing the distance between two points. Check here: https://towardsdatascience.com/importance-of-distance-metrics-in-machine-learning-modelling-e51395ffe60d For now, we will go forward with Euclidean distance.
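As a minimal sketch of the Euclidean distance described above, the function below squares the coordinate differences, sums them, and takes the square root. The function name `euclidean_distance` and the sample points `A` and `B` are illustrative choices, not taken from the slides.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two points with the same number of coordinates."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of coordinates")
    # Pythagorean theorem generalized to any number of dimensions.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical points: the horizontal gap is 3 and the vertical gap is 4.
A = (1.0, 2.0)
B = (4.0, 6.0)
c = euclidean_distance(A, B)
print(c)  # 5.0
```

The same function works for more than two coordinates, which is what KNN needs when each data point has several features.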

- 6. The Algorithm
    1. Load the data.
    2. Initialize K to your chosen number of neighbors.
    3. For each example in the data:
        1. Calculate the distance between the query example (the point to be predicted) and the current example from the data.
        2. Add the distance and the index of the example to an ordered collection.
    4. Sort the ordered collection of distances and indices from smallest to largest (in ascending order) by the distances.
    5. Pick the first K entries from the sorted collection.
    6. Get the labels of the selected K entries.
    7. If regression, return the mean of the K labels.
    8. If classification, return the mode of the K labels.
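The steps above can be sketched in plain Python roughly as follows. This is an illustrative brute-force version, not the author's implementation: the function name `knn_predict`, its parameters, and the toy data are assumptions made for the example, and Euclidean distance is computed with the standard library's `math.dist` (Python 3.8+).

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k, task="classification"):
    """Brute-force KNN prediction for a single query point."""
    # Step 3: distance from the query to every example, stored with its index.
    distances = [(math.dist(point, query), index)
                 for index, point in enumerate(train_points)]
    # Step 4: sort ascending by distance.
    distances.sort()
    # Steps 5 and 6: labels of the K closest examples.
    k_labels = [train_labels[index] for _, index in distances[:k]]
    # Step 7: regression returns the mean of the K labels.
    if task == "regression":
        return sum(k_labels) / len(k_labels)
    # Step 8: classification returns the mode of the K labels.
    return Counter(k_labels).most_common(1)[0][0]

# Hypothetical toy data: two well-separated groups.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
colors = ["red", "red", "red", "blue", "blue", "blue"]
values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]

print(knn_predict(points, colors, (2, 2), k=3))                     # red
print(knn_predict(points, values, (6, 6), k=3, task="regression"))  # 11.0
```

Every prediction scans the whole dataset, which is why this approach gets slow as the number of examples grows.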

- 7. Choosing the right k To select the K that’s right for your data, we run the KNN algorithm several times with different values of K and choose the K that reduces the number of errors we encounter while maintaining the algorithm’s ability to accurately make predictions when it’s given data it hasn’t seen before. Things to care about:
    a. As we decrease the value of K to 1, our predictions become less stable.
    b. Conversely, as we increase the value of K, our predictions become more stable due to majority voting / averaging, and thus more likely to be accurate (up to a certain point). Eventually, we begin to see an increasing number of errors. At this point we know we have pushed the value of K too far.
    c. In cases where we are taking a majority vote (e.g. picking the mode in a classification problem) among labels, we usually make K an odd number so that a vote between two classes cannot end in a tie.
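To illustrate the tiebreaker point, here is a small sketch that checks whether a majority vote is tied. The helper `vote_is_tied` and the list of neighbor labels are hypothetical, made up for this example. Note that an odd K only rules out ties when there are exactly two classes.

```python
from collections import Counter

def vote_is_tied(labels):
    """True if the two most common labels have the same number of votes."""
    counts = Counter(labels).most_common()
    return len(counts) > 1 and counts[0][1] == counts[1][1]

# Hypothetical labels of a query's neighbors, ordered nearest first.
neighbor_labels = ["red", "blue", "blue", "red", "red"]

tied_k4 = vote_is_tied(neighbor_labels[:4])  # 2 red vs 2 blue
tied_k5 = vote_is_tied(neighbor_labels[:5])  # 3 red vs 2 blue
print(tied_k4, tied_k5)  # True False
```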

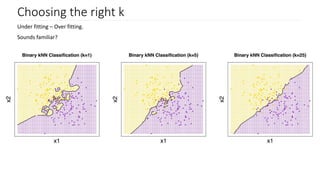

- 8. Choosing the right k Underfitting vs. overfitting. Sounds familiar?

- 9. Choosing the right k Trend in Training error: With k=1, the training error will always be 0, because each training point is its own nearest neighbor and is therefore classified by its own label (assuming no duplicate points with conflicting labels). As k increases, the training error generally increases as well, until k is so big that all of the examples are classified as one class.
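The training-error trend can be checked on a tiny example. The sketch below is illustrative only: the one-dimensional dataset (with one deliberately "noisy" blue point at 3.0) and the helper names `classify` and `training_error` are assumptions made for the demonstration.

```python
from collections import Counter

def classify(train, query, k):
    """Majority vote among the k training examples closest to query (1-D data)."""
    nearest = sorted(train, key=lambda item: abs(item[0] - query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def training_error(train, k):
    """Fraction of training examples misclassified when predicted from the training set itself."""
    wrong = sum(classify(train, x, k) != label for x, label in train)
    return wrong / len(train)

# Hypothetical 1-D training set; the blue point at 3.0 sits inside the red group.
train = [(1.0, "red"), (2.0, "red"), (3.0, "blue"), (4.0, "red"),
         (7.0, "blue"), (8.0, "blue"), (9.0, "blue")]

for k in (1, 3, 7):
    print(k, training_error(train, k))
```

With k=1 every point matches itself, so the error is 0. With k=3 the noisy point is outvoted by its red neighbors (1 error out of 7). With k=7 every point receives the overall majority label, blue, so all 3 red points are misclassified.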

- 10. Choosing the right k Trend in Testing error: At K=1, we are overfitting the boundaries. Hence, the error rate initially decreases as K grows and reaches a minimum. After the minimum, it increases with increasing K. To get the optimal value of K, split the initial dataset into a training set and a validation set. Then plot the validation error curve and pick the K at its minimum. This value of K should be used for all predictions.
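A minimal sketch of this selection procedure, assuming a hand-made one-dimensional dataset that has already been split into training and validation parts. The data, the candidate K values, and the helper names `classify` and `error_rate` are all illustrative. In practice the errors would be plotted against K rather than printed.

```python
from collections import Counter

def classify(train, query, k):
    """Majority vote among the k training examples closest to query (1-D data)."""
    nearest = sorted(train, key=lambda item: abs(item[0] - query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def error_rate(train, data, k):
    """Fraction of examples in data misclassified using train as the reference set."""
    wrong = sum(classify(train, x, k) != label for x, label in data)
    return wrong / len(data)

# Hypothetical split: the blue training point at 3.0 is noise inside the red group.
train = [(1.0, "red"), (2.0, "red"), (3.0, "blue"), (4.0, "red"),
         (7.0, "blue"), (8.0, "blue"), (9.0, "blue")]
validation = [(2.9, "red"), (3.2, "red"), (4.4, "red"),
              (6.6, "blue"), (8.5, "blue")]

# Validation error for each candidate K, then the K with the lowest error.
errors = {k: error_rate(train, validation, k) for k in (1, 3, 7)}
best_k = min(errors, key=errors.get)
print(errors)  # {1: 0.4, 3: 0.0, 7: 0.6}
print(best_k)  # 3
```

Here K=1 follows the noisy point and overfits, K=7 predicts the majority class everywhere and underfits, and K=3 sits at the minimum of the validation error.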

- 11. Pros and Cons Advantages: The algorithm is simple and easy to implement. There’s no need to build a model, tune several parameters, or make additional assumptions. Disadvantages: The algorithm gets significantly slower as the number of examples and/or predictors/independent variables increases, because every prediction compares the query with every stored example. With many predictors, distances between points also become less informative, a problem known as the curse of dimensionality. Reference: https://en.wikipedia.org/wiki/Curse_of_dimensionality
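One common way to build intuition for the curse of dimensionality is the following back-of-the-envelope calculation, which is not from the slides. It assumes data spread uniformly in a unit hypercube and asks how wide a cube-shaped neighborhood must be along each axis to contain a given fraction of the data. The function name `edge_length` is an illustrative choice.

```python
def edge_length(fraction, dims):
    """Side length of a sub-cube holding the given fraction of a unit hypercube's volume."""
    return fraction ** (1.0 / dims)

# Side length needed to capture 10% of uniformly spread data.
for dims in (1, 2, 10, 100):
    print(dims, round(edge_length(0.1, dims), 3))
```

Under this assumption, a neighborhood holding 10% of the data spans 10% of the range in one dimension but about 79% of each axis in ten dimensions, so the "nearest" neighbors are no longer very near.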