Machine Learning using biased data

- 1. Machine Learning using biased data
  - Machine Learning Meetup Sydney, 27/06/2017
  - Arnaud de Myttenaere, Data Scientist @ OCTO Technology

- 2. About me
  - Data Scientist Consultant, OCTO Technology
  - Founder of Uchidata Text-Mining API
  - PhD in Mathematics at Paris 1 University
  - 20+ Machine Learning challenges

- 3. Context
  - 2015: product classification challenge sponsored by Cdiscount (French marketplace).
  - 15M products
  - Text (title, description, brand)
  - Product price
  - 5700 categories
  - 15 000 €

- 4. Cdiscount product classification challenge

  Data (`Categorie` is the target; `Description`, `Titre`, `Marque` and `Prix` are the explanatory variables):

  | Id_Produit | Categorie | Description, Titre, Marque | Prix |
  |---|---|---|---|
  | 107448 | 10170 | DKNY Montre Homme - DKNY Montre Homme - DKNY | 58.68 |
  | 14088553 | 3649 | Mini four SEVERIN 2020 SEVERIN | 56.83 |
  | 14214236 | 1995 | Flower magic 1KG Flower magic 1KG KB | 9.8 |
  | 481700 | 14194 | Voie Express Chinois initiation AUCUNE | 28.3 |
  | 412300 | 14217 | Susumu Shingu Les petits oiseaux AUCUNE | 15.05 |
  | 1010000 | 14349 | Références sciences Calcul différentiel AUCUNE | 28.22 |

  Process:
  - Learn a model
  - Apply the model on a test file
  - Submit predictions on the platform

- 5. Evaluation process
  - The platform provides 2 different datasets:
    - Train data: used to learn a model.
    - Test data: used to compute predictions and evaluate the model's accuracy.
  - Every day, we can submit up to 5 distinct prediction files to get feedback on our model's accuracy.

- 6. Evaluation process
  - The test data is actually divided into 2 parts:
    - 30% to evaluate model accuracy during the challenge (public leaderboard)
    - 70% for the final evaluation (private leaderboard)
  - → Overfitting the public leaderboard leads to poor accuracy in the final evaluation.

- 7. Evaluation process
  - Model calibration:
    1. Split the train data
    2. Learn a model
    3. Validate the model and estimate its accuracy
    4. Compute and submit predictions
    5. Get the public score
  - If the training and test data are not biased, the estimated accuracy is close to the public score.

- 10. Sometimes cross-validation fails...
  - Cross-validation score: 90%
  - Leaderboard score: 58.9%
  - → 2 possibilities: you are overfitting, or the data is biased.

- 13. Biased data
  - In this challenge, the test data was biased. Why? → The organisers selected the same number of products in each category to build the test set.

    | | Phone bumper | Books | Battery |
    |---|---|---|---|
    | % in train data | 13.87% | 6.53% | 3.66% |
    | % in test data | 0.017% | 0.017% | 0.017% |

  - → In practice the data is often biased, due to the collection process, seasonality, ...
  - Bias correction? → Solution: data sampling. Example: randomly select 100 products of each category in the training set to make it similar to the test set.
  - → Problem: data sampling does not allow us to use the whole training set.
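The sampling idea on this slide could be sketched as follows. This is a minimal illustration in plain Python, not the code used in the challenge: the function name `sample_per_category`, the toy labels and the 90% / 10% split are all assumptions made for the example.

```python
import random
from collections import defaultdict


def sample_per_category(labels, n_per_category, seed=0):
    """Return sorted row indices with at most n_per_category rows per label."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)
    selected = []
    for indices in by_label.values():
        # Rare categories with fewer rows than requested are kept entirely.
        k = min(n_per_category, len(indices))
        selected.extend(rng.sample(indices, k))
    return sorted(selected)


# Hypothetical imbalanced training labels (90% / 10%).
labels = ["purple"] * 900 + ["green"] * 100
subset = sample_per_category(labels, n_per_category=100)
kept = [labels[i] for i in subset]
```

Here the balanced subset keeps 200 of the 1,000 rows, so 800 observations are discarded, which is the drawback the slide points out.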

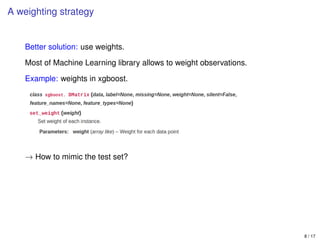

- 15. A weighting strategy
  - Better solution: use weights. Most machine learning libraries allow observations to be weighted. Example: weights in xgboost.
  - → How to mimic the test set? $\text{Weight} = \dfrac{1}{\text{Frequency}}$
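A minimal sketch of the `1 / Frequency` rule, assuming that "Frequency" means the relative frequency of an observation's category in the training data (the slide does not spell this out). The helper name and the toy category labels are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """One weight per observation: 1 / (relative frequency of its label)."""
    counts = Counter(labels)
    total = len(labels)
    return [total / counts[label] for label in labels]


# Hypothetical training labels: 60% / 30% / 10%.
labels = ["phone_bumper"] * 6 + ["books"] * 3 + ["battery"] * 1
weights = inverse_frequency_weights(labels)

# Total weight carried by each category once the weights are applied.
weight_per_category = Counter()
for label, weight in zip(labels, weights):
    weight_per_category[label] += weight
```

After weighting, every category carries the same total weight, which mimics a test set built with the same number of products per category. A list like `weights` is the kind of input that the `weight` argument of `xgboost.DMatrix` or the `sample_weight` argument of scikit-learn's `fit` methods accepts.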

- 16. Results
  - Leaderboard score without weights: 58.9%
  - Leaderboard score with weights: 65.8%
  - My final score (aggregation of 3 weighted models): 66.9%
  - → The weighting strategy works very well... but why? What is the theory behind weights?

- 20. Similar problem, simpler case: 2 categories.
  - [Figure: two scatter plots with axes size and weight, one for Train (90% purple, 10% green) and one for Test (50% purple, 50% green).]
  - Objective: find the category of the red dot.
  - The proportions in the test dataset differ from those in the train dataset → the model calibrated on the train dataset is not optimal on the test dataset.
  - → The red dot is more likely to belong to the green group in the test dataset.

- 22. Similar problem, simpler case: 2 categories.
  - [Figure: two scatter plots with axes size and weight, one for Train (90% purple, 10% green) and one for Test (50% purple, 50% green).]
  - Problem: the relation between the target variable and the observations differs between the training and test sets.
  - Why? The proportions are different in the train and test datasets.
  - But: knowing the category, the distribution of observations is the same in both the training and test datasets.
  - Formally:
    - → $P_{\text{train}}(Y \mid X) \neq P_{\text{test}}(Y \mid X)$
    - → $P_{\text{train}}(Y) \neq P_{\text{test}}(Y)$
    - → $P_{\text{train}}(X \mid Y) = P_{\text{test}}(X \mid Y)$
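Bayes' rule shows why the red dot can change category even though the distribution of observations within each category is unchanged. The sketch below is an illustration only: the likelihood values at the red dot (0.2 for purple, 0.8 for green) are made-up numbers, while the 90/10 and 50/50 proportions come from the slide.

```python
def posterior(priors, likelihoods):
    """P(Y | X = x) from class proportions P(Y) and likelihoods P(X = x | Y)."""
    joint = {label: priors[label] * likelihoods[label] for label in priors}
    evidence = sum(joint.values())
    return {label: value / evidence for label, value in joint.items()}


# Hypothetical values of P(X = red dot | Y), identical in train and test.
likelihoods = {"purple": 0.2, "green": 0.8}

train_posterior = posterior({"purple": 0.9, "green": 0.1}, likelihoods)
test_posterior = posterior({"purple": 0.5, "green": 0.5}, likelihoods)
```

With the training proportions the posterior favours purple (about 0.69), whereas with the test proportions it favours green (0.8): the same observation, with the same likelihoods, is classified differently once the class proportions change.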

- 23. Theoretical justification
  - For some weights, minimizing the average error on the weighted train set is equivalent to minimizing the error on the test set.
  - Formally: if $\ell$ is a loss function, and $Y$ and $X$ are random variables such that $P_{\text{train}}(X \mid Y) = P_{\text{test}}(X \mid Y)$, then, denoting $\omega_i = \dfrac{p_{\text{test}}(Y_i)}{p_{\text{train}}(Y_i)}$, for every model $g$ we have:

    $$\mathbb{E}_{\text{train}}\big[\omega(Y)\,\ell(Y, g(X))\big] = \mathbb{E}_{\text{test}}\big[\ell(Y, g(X))\big]$$

  - Consequence: the best model on the weighted training set is the best model on the test set.
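One way to see why the identity holds is the short derivation below. It is a sketch under two added assumptions that the slide does not state: $Y$ takes finitely many values, and $p_{\text{train}}(y) > 0$ whenever $p_{\text{test}}(y) > 0$. The second line uses the hypothesis $P_{\text{train}}(X \mid Y) = P_{\text{test}}(X \mid Y)$.

```latex
\begin{align*}
\mathbb{E}_{\text{test}}\big[\ell(Y, g(X))\big]
  &= \sum_{y} p_{\text{test}}(y)\,
     \mathbb{E}_{\text{test}}\big[\ell(y, g(X)) \mid Y = y\big] \\
  &= \sum_{y} p_{\text{test}}(y)\,
     \mathbb{E}_{\text{train}}\big[\ell(y, g(X)) \mid Y = y\big] \\
  &= \sum_{y} p_{\text{train}}(y)\,
     \frac{p_{\text{test}}(y)}{p_{\text{train}}(y)}\,
     \mathbb{E}_{\text{train}}\big[\ell(y, g(X)) \mid Y = y\big] \\
  &= \mathbb{E}_{\text{train}}\big[\omega(Y)\,\ell(Y, g(X))\big].
\end{align*}
```

Since the two sides are equal for every model $g$, they share the same minimizer, which is the consequence stated on the slide.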

- 26. Optimal weights
  - Optimal weights: $\omega_i = \dfrac{p_{\text{test}}(Y_i)}{p_{\text{train}}(Y_i)}$
  - → If a label is rare in the test set, the weights are small.
  - → Weights can be used to mimic the test set.
  - Problem: the distribution of the target variable on the test set, $p_{\text{test}}(Y_i)$, is unknown.
  - Suggested solution: estimate the distribution of the target variable iteratively.
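The effect of these weights can be checked with a small exact computation. The sketch below assumes, for illustration only, that the test proportions are known (50/50), that there is a single binary feature whose conditional distribution `p_x1_given_y` is the same in train and test, and a fixed rule `g` scored with the 0-1 loss; none of these values come from the talk.

```python
p_train = {"purple": 0.9, "green": 0.1}
p_test = {"purple": 0.5, "green": 0.5}

# Hypothetical P(X = 1 | Y), identical in the train and test sets.
p_x1_given_y = {"purple": 0.1, "green": 0.6}


def g(x):
    """Toy model: predict green when the binary feature is 1."""
    return "green" if x == 1 else "purple"


def expected_loss(p_y, weights=None):
    """Exact expected 0-1 loss of g under class proportions p_y."""
    total = 0.0
    for y, prior in p_y.items():
        w = 1.0 if weights is None else weights[y]
        for x in (0, 1):
            p_x = p_x1_given_y[y] if x == 1 else 1.0 - p_x1_given_y[y]
            loss = 0.0 if g(x) == y else 1.0
            total += prior * p_x * w * loss
    return total


omega = {y: p_test[y] / p_train[y] for y in p_train}
train_error = expected_loss(p_train)                   # unweighted
weighted_train_error = expected_loss(p_train, omega)   # weighted by omega
test_error = expected_loss(p_test)
```

In this toy setting the unweighted training error is 0.13 while the test error is 0.25, and the weighted training error is also 0.25: the weights make the training criterion agree with the test criterion.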

- 27. An iterative approach
  - [Figure: two scatter plots with axes size and weight, one for Train (90% purple, 10% green) and one for Test as predicted by the model (61% purple, 39% green).]
  - → The model calibrated on the training set gives an estimate of the distribution of the target variable on the test set: 61% purple, 39% green.
  - → Use these predictions to estimate weights, update the model... and iterate!

- 28. Results
  - Predictions obtained by the model after 5 iterations: purple 50.79%, green 49.21%.
  - [Figure: scatter plot with axes size and weight, Test (model: 50.8% purple, 49.2% green).]
  - The final model is very well calibrated on the test set!
  - If the data is not biased, this strategy will converge in 1 iteration.
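The iterative procedure could be sketched as below. This is not the speaker's implementation: the talk does not say which model was used, so the sketch assumes a simple weighted Gaussian classifier on a single synthetic feature, estimates the test proportions from hard predicted labels, and uses made-up class means and standard deviations. It also does not guard against a category receiving no predictions at all.

```python
import math
import random
from collections import Counter


def gaussian_log_pdf(x, mean, std):
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2.0 * math.pi))


def fit_weighted(xs, ys, weights):
    """Weighted Gaussian classifier: weighted class prior, mean and std per label."""
    total_weight = sum(weights)
    model = {}
    for label in sorted(set(ys)):
        members = [(x, w) for x, y, w in zip(xs, ys, weights) if y == label]
        class_weight = sum(w for _, w in members)
        mean = sum(x * w for x, w in members) / class_weight
        var = sum(w * (x - mean) ** 2 for x, w in members) / class_weight
        model[label] = (class_weight / total_weight, mean, math.sqrt(var))
    return model


def predict(model, x):
    """Return the label with the highest log prior + log likelihood."""
    def score(label):
        prior, mean, std = model[label]
        return math.log(prior) + gaussian_log_pdf(x, mean, std)
    return max(model, key=score)


def label_shares(labels):
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


rng = random.Random(0)


def draw(label):
    # Hypothetical class-conditional distributions, shared by train and test.
    return rng.gauss(165.0, 7.0) if label == "purple" else rng.gauss(180.0, 7.0)


train_y = ["purple"] * 900 + ["green"] * 100
test_y = ["purple"] * 500 + ["green"] * 500  # never shown to the procedure
train_x = [draw(y) for y in train_y]
test_x = [draw(y) for y in test_y]

p_train = label_shares(train_y)
weights = [1.0] * len(train_y)
history = []  # estimated share of purple in the test set at each step
for _ in range(6):  # one unweighted fit, then 5 reweighted fits
    model = fit_weighted(train_x, train_y, weights)
    predicted = [predict(model, x) for x in test_x]
    p_test_estimate = label_shares(predicted)
    history.append(p_test_estimate.get("purple", 0.0))
    # Importance weights: estimated test share / training share of each label.
    weights = [p_test_estimate.get(y, 0.0) / p_train[y] for y in train_y]
```

In this setting the first estimate in `history` over-predicts purple, because the unweighted model inherits the 90% training prior; the later estimates move toward the true 50/50 split. With poorly separated categories the iteration is not guaranteed to settle near the true proportions.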

- 29. Conclusion
  - In practice the data is often biased, due to the collection process, seasonality, ...
  - Resampling the data is a way to remove the bias.
  - But weighting observations is a better strategy, since it makes use of the whole dataset.
  - Weights are easy to use in practice (there is a weight option in almost every machine learning library).
  - There is a nice theory behind weights!

- 30. References
  - Shimodaira, "Improving predictive inference under covariate shift by weighting the log-likelihood function", Journal of Statistical Planning and Inference, 2000.
  - Sugiyama et al., "Covariate shift adaptation by importance weighted cross validation", The Journal of Machine Learning Research, 2007.
