Making Netflix Machine Learning Algorithms Reliable

- 1. Making Netflix Machine Learning Algorithms Reliable. Tony Jebara & Justin Basilico. ICML Reliable ML in the Wild Workshop, 2017-08-11.

- 3. 2017: > 100M members, > 190 countries

- 4. Goal: Help members find content to watch and enjoy to maximize member satisfaction and retention

- 5. Algorithm Areas ▪ Personalized Ranking ▪ Top-N Ranking ▪ Trending Now ▪ Continue Watching ▪ Video-Video Similarity ▪ Personalized Page Generation ▪ Search ▪ Personalized Image Selection ▪ ...

- 6. Models & Algorithms ▪ Regression (linear, logistic, ...) ▪ Matrix Factorization ▪ Factorization Machines ▪ Clustering & Topic Models ▪ Bayesian Nonparametrics ▪ Tree Ensembles (RF, GBDTs, …) ▪ Neural Networks (Deep, RBMs, …) ▪ Gaussian Processes ▪ Bandits ▪ …

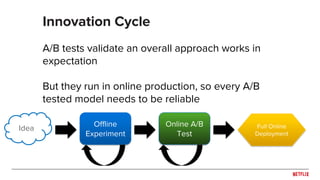

- 7. Innovation Cycle: Idea → Offline Experiment → Online A/B Test → Full Online Deployment. A/B tests validate that an overall approach works in expectation. But they run in online production, so every A/B tested model needs to be reliable.

- 8. Batch Learning: 1) Collect massive data sets. 2) Try billions of hypotheses to find* one(s) with support. (*Find with computational and statistical efficiency.)

- 9. Batch Learning [figure: users vs. time, with stages Collect (Data), Learn (Model), A/B Test (A, B), Roll-out]

- 10. Batch Learning [figure: users vs. time, with stages Collect (Data), Learn (Model), A/B Test (A, B), Roll-out, and a region marked REGRET]

- 11. Bandit Learning ▪ Explore and exploit → Less regret ▪ Helps cold start models in the wild ▪ Maintain some exploration for nonstationarity ▪ Adapt reliably to changing and new data ▪ Epsilon-greedy, UCB, Thompson Sampling, etc. [figure: users vs. time]

- 12. Bandit Learning with Thompson Sampling (TS): 1) Uniform population of hypotheses 2) Choose a random hypothesis h 3) Act according to h and observe outcome 4) Re-weight hypotheses 5) Go to 2 [figure: users vs. time]
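The five steps on slide 12 can be sketched for the simplest case, a Bernoulli bandit with a Beta posterior per arm. This is an illustrative assumption, not the model used in the talk: the function name `thompson_sampling`, the simulated `true_rates`, and the Beta(1, 1) prior are all hypothetical choices made for the example.

```python
import random


def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate Beta-Bernoulli Thompson Sampling; return pulls per arm."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    # 1) Uniform population of hypotheses: a Beta(1, 1) prior for every arm.
    successes = [1] * n_arms
    failures = [1] * n_arms
    pulls = [0] * n_arms
    for _ in range(rounds):
        # 2) Choose a random hypothesis h: one sampled reward rate per arm.
        sampled = [rng.betavariate(successes[a], failures[a])
                   for a in range(n_arms)]
        # 3) Act according to h (play the arm that h says is best)
        #    and observe a simulated outcome.
        arm = max(range(n_arms), key=lambda a: sampled[a])
        reward = rng.random() < true_rates[arm]
        # 4) Re-weight hypotheses: update the posterior of the played arm.
        if reward:
            successes[arm] += 1
        else:
            failures[arm] += 1
        pulls[arm] += 1
        # 5) Go to 2 (next loop iteration).
    return pulls
```

In a simulation such as `thompson_sampling([0.05, 0.6], 2000)`, most pulls should concentrate on the better arm as the posterior sharpens, which is the "less regret" behavior the slides describe.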

- 13. Bandits for selecting images

- 14. Bandits for selecting images

- 15. Contextual bandits to personalize images: different preferences for genre/theme portrayed

- 16. Contextual bandits to personalize images: different preferences for cast members

- 17. Putting Machine Learning In Production

- 18. “Typical” ML Pipeline: A tale of two worlds [diagram with two sides, Training Pipeline (Offline) and Application (Online); boxes: Historical Data, Generate Features, Collect Labels, Train Models, Validate & Select Models, Evaluate Model, Publish Model, Load Model, Live Data, Application Logic, Experimentation]

- 19. What needs to be reliable? Offline: model trains reliably. Online: model performs reliably. … plus all involved systems and data.

- 22. Retraining ● To be reliable, first learning must be repeatable ● Automate retraining of models ● Akin to continuous deployment in software engineering ● How often depends on application; typically daily ● Detect problems and fail fast to avoid using a bad model

- 23. Example Training Pipeline ● Periodic retraining to refresh models ● Workflow system to manage pipeline ● Each step is a Spark or Docker job ● Each step has checks in place ○ Response: Stop workflow and send alerts
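As a rough sketch of the pattern on slide 23, each step can be paired with a check, and the workflow stops and alerts on the first failure. The names `run_workflow` and `send_alert` and the shape of a step are assumptions made for illustration; a real workflow system would launch Spark or Docker jobs rather than call Python functions.

```python
def run_workflow(steps, send_alert):
    """Run (name, run_step, check) triples in order.

    run_step receives the artifacts produced so far and returns this
    step's output. check returns None when the output looks fine, or a
    short problem description. The workflow stops at the first problem.
    """
    artifacts = {}
    for name, run_step, check in steps:
        artifacts[name] = run_step(artifacts)
        problem = check(artifacts[name])
        if problem is not None:
            # Response: stop the workflow and send an alert (fail fast),
            # so later steps such as publishing never run.
            send_alert(f"step {name!r} failed check: {problem}")
            return False, artifacts
    return True, artifacts
```

Returning early means a failed check on feature generation or training prevents any later publish step from seeing a bad model.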

- 24. What to check? ● For both absolute and relative to previous runs... ● Offline metrics on hold-out set ● Data size for train and test ● Feature distributions ● Feature importance ● Large (unexpected) changes in output between models ● Model coverage (e.g. number of items it can predict) ● Model integrity ● Error counters ● ...

- 25. Example 1: number of samples [plot: number of training samples vs. time]

- 26. Example 1: number of samples [plot: number of training samples vs. time]. Alarm fires and model is not published due to anomaly.

- 27. Example 2: offline metrics [plot: lift w.r.t. baseline vs. time]

- 28. Example 2: offline metrics [plot: lift w.r.t. baseline vs. time]. Alarm fires and model is not published due to anomaly. Absolute threshold: if lift < X then alarm also fires.
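The two examples combine an absolute threshold with a comparison against previous runs. The slides do not say how anomalies are detected, so the sketch below assumes a simple z-score rule over the metric's history. `should_publish`, `min_value`, and `max_z` are hypothetical names and parameters.

```python
from statistics import mean, stdev


def should_publish(history, current, min_value=None, max_z=3.0):
    """Return (ok, reason) for a metric such as lift or sample count."""
    # Absolute check: "if lift < X then alarm also fires".
    if min_value is not None and current < min_value:
        return False, "absolute threshold"
    # Relative check (assumed rule): flag values far from previous runs.
    if len(history) >= 2:
        mu = mean(history)
        sigma = stdev(history)
        # With no variation in the history the z-score is undefined,
        # so the relative check is skipped in that case.
        if sigma > 0 and abs(current - mu) / sigma > max_z:
            return False, "anomaly relative to previous runs"
    return True, "ok"
```

The same function applies to the number of training samples in Example 1: pass the sample counts of earlier runs as `history` and a minimum acceptable count as `min_value`.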

- 29. Preventing offline training failures ● Testing: unit testing and code coverage; integration testing ● Improve quality and reliability of upstream data feeds ● Reuse the same data and code offline and online to avoid training/serving skew ● Run from multiple random seeds

- 31. Basic sanity checks ● Check for invariants in inputs and outputs ● Can catch many unexpected changes, bad assumptions and engineering issues ● Examples: feature values are out of range; output is NaN or Infinity; probabilities are < 0 or > 1 or don’t sum to 1
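The example invariants on slide 31 translate almost directly into code. The sketch below is one possible shape for such checks; the function names, the `ranges` dictionary, and the tolerance `tol` are illustrative assumptions.

```python
import math


def check_features(features, ranges):
    """Return a problem message for each feature outside its [lo, hi] range."""
    problems = []
    for name, (lo, hi) in ranges.items():
        value = features.get(name)
        # A missing or non-finite value is reported the same way as an
        # out-of-range one.
        if value is None or not math.isfinite(value) or not lo <= value <= hi:
            problems.append(f"feature {name!r} out of range: {value!r}")
    return problems


def check_probabilities(probs, tol=1e-6):
    """Return problem messages for a predicted probability distribution."""
    if any(not math.isfinite(p) for p in probs):
        return ["output contains NaN or Infinity"]
    if any(p < 0 or p > 1 for p in probs):
        return ["probability outside [0, 1]"]
    if abs(sum(probs) - 1.0) > tol:
        return ["probabilities do not sum to 1"]
    return []
```

An empty list means the invariants hold; any message can feed the same alerting path used for the training checks.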

- 32. Model and input staleness ● Online staleness checks can cover a wide range of failures in training and publishing ● Example checks: How long ago was the most recent model published? How old is the data it was trained on? How old are the inputs it is consuming? [plot: age of model, with a stale model marked]
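The three example staleness questions can be answered by comparing timestamps against per-item age limits. This is a minimal sketch: the key names (`model_published`, `training_data`, `inputs`) and the limits a caller would pass are hypothetical, not values from the talk.

```python
from datetime import datetime, timedelta, timezone


def staleness_alerts(now, timestamps, max_age):
    """Return an alert for each tracked item that is missing or too old.

    timestamps maps an item name to when it was last refreshed;
    max_age maps the same names to the maximum allowed age.
    """
    alerts = []
    for name, limit in max_age.items():
        ts = timestamps.get(name)
        if ts is None:
            alerts.append(f"{name}: timestamp missing")
        elif now - ts > limit:
            alerts.append(f"{name}: stale by {now - ts - limit}")
    return alerts
```

A caller might pass `now=datetime.now(timezone.utc)` together with limits such as `timedelta(hours=24)` for the model when retraining is daily.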

- 33. Online metrics ● Track the quality of the model: compare prediction to actual behavior; online equivalents of offline metrics ● For online learning or bandits, reserve a fraction of data for a simple policy (e.g. epsilon-greedy) as a sanity check
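One way to reserve a fraction of traffic for a simple policy is to hash each user into a stable bucket and route the holdout bucket to epsilon-greedy. The slides do not describe how the split is made, so the hashing scheme, function names, and default `fraction` and `epsilon` values below are assumptions for illustration.

```python
import hashlib
import random


def in_holdout(user_id, fraction):
    """Deterministically assign roughly `fraction` of users to the holdout."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # uniform in [0, 2**64)
    return bucket < fraction * 2**64


def epsilon_greedy(mean_rewards, epsilon, rng):
    """Explore a random arm with probability epsilon, else exploit the best."""
    if rng.random() < epsilon:
        return rng.randrange(len(mean_rewards))
    return max(range(len(mean_rewards)), key=lambda a: mean_rewards[a])


def choose_arm(user_id, main_policy, mean_rewards, rng,
               fraction=0.05, epsilon=0.1):
    """Route holdout users to the simple policy, everyone else to the model."""
    if in_holdout(user_id, fraction):
        return "holdout", epsilon_greedy(mean_rewards, epsilon, rng)
    return "main", main_policy(user_id)
```

Logging the returned label with each outcome lets the online metrics of the main model be compared against the simple policy; if the model falls below it, something is likely wrong.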

- 34. Response: Graceful Degradation ● Your model isn’t working right or your input data is bad: now what? ● Common approaches in personalization space: Use previous model? Use previous output? Use simplified model or heuristic? If calling system is resilient: turn off that subsystem?

- 35. Example: Image Precompute ● Want to choose personalized images per profile ○ Image lookup has O(10M) requests per second (e.g. “House of Cards” -> Image URL) ● Approach: ○ Precompute show-to-image mapping per user in a near-line system ○ Store mapping in fast distributed cache ○ At request time: look up user-specific mapping in cache; fall back to unpersonalized results; store mapping for request; secondary fallback to default image for a missing show ● Fallback chain: Personalized Selection (Precompute) → Unpersonalized → Default Image
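The request-time lookup on slide 35 is a chain of fallbacks. In the sketch below, plain dictionaries stand in for the distributed cache and the unpersonalized results; `select_image` and its parameters are hypothetical names, and the "store mapping for request" step is omitted.

```python
def select_image(profile_id, show_id, personalized_cache,
                 unpersonalized, default_image):
    """Return (image_url, source) using the fallback chain."""
    # First choice: the precomputed, user-specific show-to-image mapping.
    mapping = personalized_cache.get(profile_id)
    if mapping is not None and show_id in mapping:
        return mapping[show_id], "personalized"
    # Fallback: unpersonalized result for the show.
    if show_id in unpersonalized:
        return unpersonalized[show_id], "unpersonalized"
    # Secondary fallback: default image for a missing show.
    return default_image, "default"
```

Returning the source alongside the URL makes it easy to count how often each fallback level is hit, which is itself a useful online health metric.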

- 36. Prevention: Failure Injection ● Netflix runs 100% in AWS cloud ● Needs to be reliable on unreliable infrastructure ● Want a service to operate when an AWS instance fails? Randomly terminate instances (Chaos Monkey) ● Want Netflix to operate when an entire AWS region is having a problem? Disable entire AWS regions (Chaos Kong)

- 37. Failure Injection for ML ● What failure scenarios do you want your model to be robust to? ● Models are very sensitive to their input data, which can be noisy, corrupt, delayed, incomplete, missing, unknown ● Train model to be resilient by injecting these conditions into the training and testing data

- 38. Example: Categorical features ● Want to add a feature for type of row on homepage: Genre, Because you watched, Top picks, ... ● Problem: model may see new types online before they appear in training data ● Solution: add an unknown category and perturb a small fraction of training data to that type ● Rule of thumb: apply to all categorical features unless a new category isn’t possible (days of week -> OK, countries -> not)
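The unknown-category trick on slide 38 can be sketched in a few lines. The token `"<unknown>"`, the function names, and the fraction a caller passes are assumptions made for illustration; the slide only says "a small fraction".

```python
import random

UNKNOWN = "<unknown>"  # hypothetical token for the added category


def perturb_to_unknown(values, fraction, seed=0):
    """Replace roughly `fraction` of training values with UNKNOWN."""
    rng = random.Random(seed)
    return [UNKNOWN if rng.random() < fraction else v for v in values]


def build_vocab(values):
    """Map each category to an integer id; UNKNOWN always gets id 0."""
    vocab = {UNKNOWN: 0}
    for v in values:
        vocab.setdefault(v, len(vocab))
    return vocab


def encode(value, vocab):
    """Encode a category, sending unseen values to the UNKNOWN id."""
    return vocab.get(value, vocab[UNKNOWN])
```

Because the model has seen the unknown id during training, a row type that first appears online maps to an input the model already has parameters for, rather than to an unseen value.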

- 39. Conclusions.

- 40. Takeaways ● Consider online learning and bandits ● Build off best practices from software engineering ● Automate as much as possible ● Adjust your data to cover the conditions you want your model to be resilient to ● Detect problems and degrade gracefully

- 41. Thank you. Tony Jebara & Justin Basilico. Yes, we are hiring!