Managing Container Clusters in OpenStack Native Way

- 1. Managing Container Clusters in OpenStack Native Way

- 2. OTSUKA, Motohiro/Yuanying (NEC Solution Innovators; OpenStack Magnum Core Reviewer); Haiwei Xu (NEC Solution Innovators; OpenStack Senlin Core Reviewer); Qiming Teng (IBM, Research Scientist; OpenStack Senlin PTL, OpenStack Heat Core Reviewer)

- 3. Agenda
  - Why containers if you already have OpenStack
    - What are the use cases?
    - The many roads leading to Roma
  - Container as first-class citizens on OpenStack
    - Deployment and management
    - Technology Gaps
  - Experience Sharing and Outlook
    - What we can do today
    - Things to expect in Newton cycle

- 4. Why Containers, If You Already Have OpenStack

- 5. Photographer: Captain Albert E. Theberge, NOAA Corps (ret.) from http://www.photolib.noaa.gov/coastline/line3174.htm

- 6. X-ray: NASA/CXC/RIKEN/D.Takei et al; Optical: NASA/STScI; Radio: NRAO/VLA from http://www.nasa.gov/sites/default/files/thumbnails/image/gkper.jpg

- 7. Advantages of container technology
  - Virtual Machine stack: Server, Host OS, Hypervisor, then per application: Guest OS, libs / bins, Application
  - Container stack: Server, Host OS, then per application: libs / bins, Application

- 8. Advantages of container technology
  - Container Image: libs / bins, Application
  - Dockerfile, Docker Registry
  - Server A (Host OS) and Server B (Host OS) run the same Container Image
  - Development, Production
  - Version management

- 9. Advantages of container technology

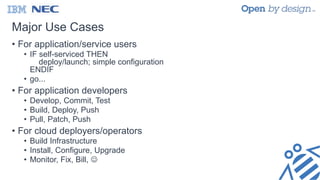

- 10. Major Use Cases
  - For application/service users
    - IF self-serviced THEN deploy/launch; simple configuration ENDIF
    - go...
  - For application developers
    - Develop, Commit, Test
    - Build, Deploy, Push
    - Pull, Patch, Push
  - For cloud deployers/operators
    - Build Infrastructure
    - Install, Configure, Upgrade
    - Monitor, Fix, Bill,

- 11. All Roads Lead to Roma
  - How many roads do we have?
    - nova lxc
    - nova docker
    - heat docker
    - heat deployment
    - magnum bay
    - docker swarm
    - kubernetes
    - mesos
    - marathon, ...
    - openstack ansible
    - kolla
    - kolla-mesos
    - .....

- 12. Nova: Docker / LXC
  - Bare metal: Ironic
  - Virtualization (VMs): Nova drivers for libvirt, VMware, Xen
  - Container (Docker/LXC ???): nova-docker virt driver, LXC (libvirt) driver

- 13. Heat: Docker / Deployment
  - Heat, DockerInc::Docker::Container, tcp://$FLOATING_IP:2375
  - Heat, OS::Heat::StructuredConfig (group: docker-compose), heat-config-docker-compose, unix:///var/run/docker.sock

- 14. Magnum: Kubernetes / Swarm
  - Container Orchestration Engine (COE) as a Service
  - Tenant
    - Bay (Kubernetes Type): Kubernetes Master, Kubernetes Minion 1, Kubernetes Minion 2; Service, Replication Controller, Pods, Containers
    - Bay (Swarm Type): Swarm Master, Docker Node 1, Docker Node 2; Containers
  - Magnum: Kubernetes Type Baymodel
    - image: fedora-atomic
    - keypair: my_public_key
    - external-network: $NIC_ID
    - dns-nameserver: 8.8.8.8
    - flavor: baremetal
    - type-of-bay: kubernetes
  - Magnum: Swarm Type Baymodel
    - image: fedora-atomic
    - keypair: my_public_key
    - external-network: $NIC_ID
    - dns-nameserver: 8.8.8.8
    - flavor: baremetal
    - type-of-bay: swarm

- 15. Kolla: Ansible + Container = OpenStack
  - OpenStack as a Service
  - Diagram components: nova-api, neutron-server, cinder-api, neutron-agent, nova-compute, cinder-volume

- 16. Then the next question is: How?
  - Unified API?
    - Refer to mailing list discussion
    - http://lists.openstack.org/pipermail/openstack-dev/2016-April/091947.html
  - Unified Abstraction?
    - OpenStack Container API ?
    - https://blueprints.launchpad.net/magnum/+spec/unified-containers
    - Kubernetes Driver
    - Docker Swarm Driver

- 17. Containers as First-Class Citizens on OpenStack

- 18. Balancing across the abstraction layer
  - Container as another compute API?
    - maybe pm, vm, lwVM
    - so many backends
  - An abstraction over all existing container management software?
    - it is possible, but many questions remain to be answered, e.g. why?
    - do you really need to switch between these tools frequently?
    - are you willing to develop client software to interact with all of them?
  - So ... container clustering
    - better integration with OpenStack
    - ease of use

- 19. OpenStack Clustering Service
  - Scalable, Load-Balanced, Highly-Available, Manageable ...... of any (OpenStack) objects
  - What is missing from OpenStack? A Clustering Service
  - Auto-scaling? Just one usage scenario of a cluster.
  - Auto-Healing (HA)? Just another usage scenario.
  - We can address the concerns by making policies orthogonal

- 20. Senlin Architecture
  - Components: Senlin Client, Senlin API, Senlin Engine, Senlin Database
  - Interfaces: REST, RPC
  - Plugins: Profiles, Policies

- 21. Senlin Features
  - Profiles: A specification for the objects to be managed
  - Policies: Rules to be checked/enforced before/after actions are performed
  - Profiles as Plugins: Nova, Docker, Heat, Ironic, (others)
  - Policies as Plugins: placement, deletion, scaling, health, load-balance, affinity
  - Cluster/Nodes Managed (by Senlin): BareMetal, VMs, Stacks, Containers

- 22. Senlin Server Architecture
  - senlin-api: WSGI, middleware, apiv1
  - rpc client
  - engine: engine, lock, scheduler, actions, node, cluster, service, registry, receiver, parser
  - policies (extension points facilitating a smarter cluster management): placement, deletion, scaling, health, load-balance, affinity
  - receiver (extension points for external monitoring services): webhook, MsgQueue
  - profiles (extension points to talk to different endpoints for object CRUD operations): os.heat.stack, os.nova.server, (others)
  - drivers (extension points for interfacing with different services or clouds): openstack, dummy, (others)
  - openstacksdk: identity, compute, orchestration, network, ...
  - dbapi

- 23. Senlin Server Architecture (for containers)
  - senlin-api: WSGI, middleware, apiv1
  - rpc client
  - engine: engine, lock, scheduler, actions, node, cluster, service, registry, receiver, parser
  - policies (extension points facilitating a smarter cluster management): placement, deletion, scaling, health, load-balance, affinity
  - receiver (extension points for external monitoring services): webhook, MsgQueue
  - profiles (extension points to talk to different endpoints for object CRUD operations): container.docker, container.lxc, (others)
  - drivers (extension points for interfacing with different services or clouds): docker-py, lxc, dummy
  - dbapi

- 24. Targeted Use Cases: Auto-Scaling
  - Diagram: Senlin manages a VM Cluster (nodes VM1, VM2, VM3, VM4) and three Container Clusters (nodes C1-1, C1-2, C1-3; C2-1, C2-2; C3-1, C3-2, C3-3)

- 25. Targeted Use Cases: Auto-Healing
  - Diagram: Senlin manages a VM Cluster (nodes VM1, VM2, VM3, VM4) and three Container Clusters (nodes C1-1, C1-2, C1-3; C2-1, C2-2; C3-1, C3-2, C3-3); containers C3-2 and C1-3 are called out separately

- 26. Targeted Use Cases: Controller Plane
  - Diagram: VM / Baremetal hosts run key, n-api, and g-api containers, grouped into Container Clusters
  - Monitoring (Consul, Sensu, ... ) reaches Senlin by webhook / message

- 27. What can we do today & How to do it?

- 28. Container type profile
  - type: container.docker
  - version: 1.0
  - properties:
    - name: container1
    - image: hello-world
    - command: '/bin/sleep 30'
    - networks:
      - network: container-network
    - ...

  Nova server type profile spec
  - type: os.nova.server
  - version: 1.0
  - properties:
    - name: cirros_server
    - image: "cirros-0.3.4-x86_64-uec"
    - flavor: m1.small
    - key_name: oskey
    - networks:
      - network: private-network

- 29. Container node and container cluster
  - Profile types: Heat stack, Nova server, Container
  - Templates: Template for Heat, Template for Nova, Template for container
  - Nodes: Heat stack, Nova server, container
  - Clusters: groups of Heat stacks, Nova servers, or containers (container cluster)

- 30. How to create a container cluster?
  - Diagram: cluster1 is made of vm servers; a container profile is used to create containers on those vms; the containers form cluster2

- 31. The scalability of vm cluster and container cluster
  - Diagram: cluster1 (vms hosting containers) and cluster2 (containers), as seen by the user
  - Attached to each cluster: Placement policy, Deletion policy, Scaling policy

- 32. Demo

- 33. Outlook

- 34. Container Backends
  - lxc, lxd, docker, rocket, runC, ...
  - docker
  - Docker-py

  Container scheduling
  - Senlin placement policy
  - Start containers on specified nodes
  - Make the policy more intelligent
  - Dynamic rescheduling?

- 35. Networking support
  - Kuryr
  - others?

  Storage support
  - Kuryr
  - Rexray
  - flocker

- 36. Welcome to join us!
  - IRC: #senlin
  - Weekly meeting from UTC 13:00~14:00, Tuesday

- 37. Thank you
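
Slides 21 and 28 describe profiles as specifications of the objects to manage and policies as rules checked before and after actions. The following is a minimal Python sketch of that idea, not Senlin's implementation: the two spec dictionaries are transcribed from slide 28, while `Policy`, `MaxSizePolicy`, and `Cluster` are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field

# Profile specs transcribed from slide 28 as plain dicts.
CONTAINER_SPEC = {
    "type": "container.docker",
    "version": 1.0,
    "properties": {
        "name": "container1",
        "image": "hello-world",
        "command": "/bin/sleep 30",
        "networks": [{"network": "container-network"}],
    },
}

SERVER_SPEC = {
    "type": "os.nova.server",
    "version": 1.0,
    "properties": {
        "name": "cirros_server",
        "image": "cirros-0.3.4-x86_64-uec",
        "flavor": "m1.small",
        "key_name": "oskey",
        "networks": [{"network": "private-network"}],
    },
}


class Policy:
    """Hypothetical policy plugin: checked before and after each action."""

    def pre_op(self, cluster, action):
        return True  # allow the action by default

    def post_op(self, cluster, action):
        pass  # nothing to enforce afterwards by default


class MaxSizePolicy(Policy):
    """Illustrative rule (not a Senlin policy type): cap the cluster size."""

    def __init__(self, max_size):
        self.max_size = max_size

    def pre_op(self, cluster, action):
        if action == "scale_out":
            return len(cluster.nodes) < self.max_size
        return True


@dataclass
class Cluster:
    """Hypothetical cluster: nodes are created from one profile spec."""

    name: str
    spec: dict
    policies: list = field(default_factory=list)
    nodes: list = field(default_factory=list)

    def scale_out(self):
        # Every attached policy must approve before the action runs.
        if not all(p.pre_op(self, "scale_out") for p in self.policies):
            return False
        base = self.spec["properties"]["name"]
        self.nodes.append(f"{base}-{len(self.nodes) + 1}")
        # Policies get a second look once the action has completed.
        for p in self.policies:
            p.post_op(self, "scale_out")
        return True
```

Because the cluster only reads `spec["properties"]`, the same `Cluster` class works for both the container spec and the Nova server spec, which mirrors the point that the two profile formats are similar.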

Editor's Notes

- #5: The section I will talk about is "Why Containers, If You Already Have OpenStack."

- #6: A container is a type of virtualization technology, and we can use it as a computing resource. But as a computing resource?

- #7: OpenStack already has Nova, which is an abstraction layer for computing resources.

- #8: Basically, Nova handles virtual machines and provides an abstraction layer for managing them. So if a container is a type of virtual machine, why containers, if you already have OpenStack? This diagram shows the difference between the virtual machine model and the container model. The left side is the traditional virtual machine model, and the right side is the container model. A virtual machine requires a hypervisor, which emulates and translates the hardware, and each virtual machine has its own OS. Containers provide isolation for processes sharing compute resources. They are similar to virtual machines but share the host kernel and avoid hardware emulation, so you can use host resources more effectively than with virtual machines. In this case, you can use a container like a virtual machine, which means that Nova can manage containers.

- #9: In addition, Docker provides simple tools and an ecosystem for containers, which has made container technology very popular. You can create a container image easily using a Dockerfile, and you can share container images using a Docker registry. A container image has all the additional dependencies that the application needs, above what is provided by the host, so you can move an application from host to host easily.

- #10: Furthermore, container scalability and elasticity are much better than those of virtual machines, and thanks to management tools like Kubernetes and Docker Swarm, managing containers across different hosts has become much easier. So OpenStack needs this technology to make cloud management easier.

- #11: This slide shows the major use cases of container technology. The first is for application users, who only want the application to start quickly; they don't care how it is started. Application developers care about application lifecycles, version management, and portability. Cloud operators care about how to manage infrastructure effectively, how to upgrade the system, and so on.

- #12: Let's see what container technologies exist in OpenStack. We have nova lxc, docker, and magnum: so many projects supporting container technology.

- #13: For example, Nova has an LXC driver and a Docker driver, which provide the same interface as for virtual machines. Users can start a container like a virtual machine. This model doesn't support all the advantages of container technology, but it can meet the needs of application users who just want to deploy an application quickly.

- #14: Next is Heat. Heat has two ways to manage containers. One is the Docker::Container resource, and the other is the SoftwareConfig or StructuredConfig resource. This can also meet application users' needs, but it is limited when it comes to managing the containers after they are created.

- #15: Next is Magnum. Magnum is a container orchestration engine (COE) as a service, which deploys and manages COEs. Once Magnum has deployed the COE, users can use all of the advantages of container technology through its COE-specific tools, such as kubectl or the docker CLI. This can meet the developer and operator use cases, but you must manage the containers in a way that is not OpenStack native.

- #16: Next is Kolla. Kolla is OpenStack as a Service, which uses container technology to make managing OpenStack easier. This is one of the operator use cases. It just uses containers; it is not a way to manage containers themselves.

- #17: So in order to manage containers well in OpenStack, we need to find a new solution, but there are some problems to solve first. The community has discussed these issues a lot. Should we create a unified API that supports VMs, bare metal, and containers? The use cases of VMs and containers are different, so we can't provide a unified API. The next issue is how to create a unified abstraction API for container orchestration engines. This has the same problem, which is the difference between kuber... But we can't get an agreement on it.

- #29: As introduced previously, Senlin is a project that provides a clustering service; currently it only supports VM clusters. When it supports container clustering, Senlin will do so in a way similar to VM clustering. So first, we need a new profile type: a container profile type. In the profile we define the properties that will be used to create containers. All necessary properties can be defined in this profile. The format is similar to the Nova server profile format.

- #30: With the profile, we can create container nodes and container clusters. To create containers we need host VMs, and the host VMs are also managed by Senlin. So Senlin can manage both the VM layer and the container layer.

- #31: This is the workflow for creating a container cluster. We can create multiple containers in one VM, depending on the VM's resources. Physically the containers run on VMs, but logically the container cluster (cluster2) and the VM cluster (cluster1) are separate clusters, and Senlin can manage them separately. That means end users who just want containers may see only the container cluster.

- #32: Let's see how to manage the resources. Scalability control is an advantage of Senlin. When we have a VM cluster and a container cluster, sometimes the resources are not enough and the cluster needs to scale out. Senlin uses policies to tell the cluster how to scale out and scale in. As we see, the policies are attached to the cluster. When resources run short, we get an alarm from Ceilometer and the policy is triggered. It tells the VM cluster to create a VM; after that, the scaling policy attached to the container cluster is triggered and a new container is created. This is the scale-out model; of course, when resources are idle, some VMs/containers are deleted. This is how Senlin controls cluster scalability.

- #35: About the design for container clusters, we still have some issues to think about. Each backend has its own advantages. When starting a container, we need to determine which cluster and which node to start it on, so do we need a scheduler to do this job? Senlin has a placement policy, in which we can define where to start nodes. It is a kind of scheduler, but a very simple one; it's not smart enough, and we still need to improve it to meet our needs. Still, it is one solution to this issue.

- #36: So we hope we can use Kuryr to create container networks automatically, just as we create VM networks.

- #37: Regarding container cluster support in Senlin, we have had some discussions and reached agreement on some issues. But we still want to hear more voices from the community; we need your ideas, your suggestions, and also new hands, so please join us if you are interested in this work. You can find us on the IRC #senlin channel, and you can also join our weekly meeting. Any ideas are appreciated.
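
The scale-out flow in note #32 and the simple placement policy in note #35 can be sketched in a few lines of Python. This is a toy simulation under stated assumptions, not Senlin code: `VM`, `VMCluster`, `ContainerCluster`, `place`, and the fixed per-VM container capacity are all hypothetical, and the Ceilometer alarm is represented simply by a call to `scale_out()`.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """Hypothetical host VM with a fixed number of container slots."""

    name: str
    capacity: int  # assumed limit on containers per VM
    containers: list = field(default_factory=list)

    @property
    def free(self):
        return self.capacity - len(self.containers)


class VMCluster:
    """Stands in for cluster1 (the VM layer)."""

    def __init__(self, capacity_per_vm):
        self.capacity_per_vm = capacity_per_vm
        self.vms = []

    def scale_out(self):
        vm = VM(f"vm{len(self.vms) + 1}", self.capacity_per_vm)
        self.vms.append(vm)
        return vm


def place(vms):
    """Very simple placement: the VM with the most free slots, else None."""
    candidates = [vm for vm in vms if vm.free > 0]
    return max(candidates, key=lambda vm: vm.free, default=None)


class ContainerCluster:
    """Stands in for cluster2 (the container layer), hosted on a VMCluster."""

    def __init__(self, hosts):
        self.hosts = hosts
        self.containers = []

    def scale_out(self):
        # Called when an alarm reports that resources are running short.
        vm = place(self.hosts.vms)
        if vm is None:
            # No room left: the VM cluster scales out first,
            # then the new container lands on the new VM.
            vm = self.hosts.scale_out()
        name = f"c{len(self.containers) + 1}"
        vm.containers.append(name)
        self.containers.append(name)
        return name, vm.name
```

With two slots per VM, the first two calls to `ContainerCluster.scale_out()` fill one VM and the third forces the VM cluster to grow, which is the cascade the note describes. A smarter placement policy would replace `place` without touching either cluster class.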