Memory Mapping Implementation (mmap) in Linux Kernel

- 1. Memory Mapping Implementation (mmap) in Linux Kernel. Adrian Huang | May, 2022
  - Based on kernel 5.11 (x86_64) – QEMU
  - SMP (4 CPUs) and 8GB memory
  - Kernel parameter: nokaslr norandmaps
  - Userspace: ASLR is disabled
  - Legacy BIOS

- 2. Agenda
  - Three different IO types
  - Process Address Space – mm_struct & VMA
  - Four types of memory mappings
  - mmap system call implementation
  - Demand page: page fault handling for four types of memory mappings
  - fork()
  - COW mapping configuration: set ‘write protect’ when calling fork()
  - COW fault call path

- 3. Three different IO types
  - Buffered IO: leverages the page cache
  - Direct IO: bypasses the page cache
  - Memory-mapped IO (file-based mapping, memory-mapped file): file content can be accessed by operations on the bytes in the corresponding memory region
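The difference between buffered IO and memory-mapped IO can be sketched from userspace. This is a minimal illustration, not part of the original slides: the helper names (`read_buffered`, `read_mapped`, `io_paths_agree`) and the `/tmp` location are assumptions made for the demo. Direct IO is left out because `O_DIRECT` imposes alignment requirements that would obscure the comparison.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Buffered IO: pread() copies bytes out of the page cache into buf. */
static ssize_t read_buffered(int fd, char *buf, size_t len)
{
    return pread(fd, buf, len, 0);
}

/* Memory-mapped IO: the file bytes are accessed as ordinary memory. */
static ssize_t read_mapped(int fd, char *buf, size_t len)
{
    assert(len > 0);        /* mmap() rejects a zero-length mapping */
    char *p = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED)
        return -1;
    memcpy(buf, p, len);    /* plain loads; the first touch may page-fault */
    munmap(p, len);
    return (ssize_t)len;
}

/*
 * Write a small temp file, read it back both ways, and report whether
 * the two paths returned the same bytes.
 * Returns 1 (same), 0 (different) or -1 on error.
 */
int io_paths_agree(void)
{
    static const char msg[] = "hello, mmap";
    char path[] = "/tmp/mmap_io_XXXXXX";   /* arbitrary demo location */
    char a[sizeof msg], b[sizeof msg];
    int result = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);           /* the file lives on until fd is closed */

    if (write(fd, msg, sizeof msg) == (ssize_t)sizeof msg &&
        read_buffered(fd, a, sizeof a) == (ssize_t)sizeof a &&
        read_mapped(fd, b, sizeof b) == (ssize_t)sizeof b)
        result = memcmp(a, b, sizeof msg) == 0;

    close(fd);
    return result;
}
```

Both helpers end up reading the same page cache pages; the mapped variant simply skips the explicit copy performed by the read system call.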

- 4. Process Address Space – mm_struct & VMA (1/2)

- 5. Process Address Space – mm_struct & VMA (2/2)

- 6. Four types of memory mappings (reference: Chapter 49, The Linux Programming Interface)

  | Visibility of modification | Mapping type: File | Mapping type: Anonymous |
  | --- | --- | --- |
  | Private (modification is not visible to other processes) | Initializing memory from contents of file. Example: a process's .text and .data segments (changes are not carried through to the underlying file) | Memory allocation |
  | Shared (modification is visible to other processes) | 1. Memory-mapped IO: changes are carried through to the underlying file. 2. Sharing memory between processes (IPC) | Sharing memory between processes (IPC) |
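Each cell of the table corresponds to one combination of `mmap()` flags. The sketch below shows that translation; the enum and function names (`backing`, `sharing`, `mapping_flags`, `map_region`) are hypothetical helpers invented for this illustration, while the `MAP_*` and `PROT_*` constants are the standard ones from `<sys/mman.h>`.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

enum backing { BACKING_FILE, BACKING_ANON };
enum sharing { SHARING_PRIVATE, SHARING_SHARED };

/* Translate one cell of the table into mmap() flags. */
int mapping_flags(enum backing b, enum sharing s)
{
    int flags = (s == SHARING_SHARED) ? MAP_SHARED : MAP_PRIVATE;

    if (b == BACKING_ANON)
        flags |= MAP_ANONYMOUS;
    return flags;
}

/*
 * Create a read/write mapping of len bytes.
 * fd is ignored for anonymous mappings; for file mappings it must be
 * open for reading (and for writing when the mapping is shared).
 */
void *map_region(enum backing b, enum sharing s, int fd, size_t len)
{
    assert(len > 0);        /* mmap() rejects a zero-length mapping */
    if (b == BACKING_ANON)
        fd = -1;            /* portable convention for MAP_ANONYMOUS */
    return mmap(NULL, len, PROT_READ | PROT_WRITE,
                mapping_flags(b, s), fd, 0);
}
```

For example, `map_region(BACKING_ANON, SHARING_PRIVATE, -1, 4096)` is the "memory allocation" cell: it returns zero-filled memory private to the caller.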

- 7. mmap system call implementation (1/2)

- 8. mmap system call implementation (2/2)
  1. thp_get_unmapped_area() eventually calls arch_get_unmapped_area_topdown()
  2. unmapped_area_topdown() is the key point for allocating a userspace address

- 9. inode_operations & file_operations: file
  - thp_get_unmapped_area(): DAX supported for huge page

- 10. inode_operations & file_operations: directory

- 11. Process address space: doubly linked list (user space addresses 0 to 0x7ffffffff000; GAP marks the unmapped ranges between non-adjacent VMAs)

  | vma | vm_start | vm_end |
  | --- | --- | --- |
  | vma | 0x400000 | 0x401000 |
  | vma | 0x401000 | 0x496000 |
  | vma | 0x496000 | 0x4bd000 |
  | vma | 0x4be000 | 0x4c1000 |
  | vma | 0x4c1000 | 0x4c4000 |
  | vma (heap) | 0x4c4000 | 0x4e8000 |
  | vma (vvar) | 0x7ffff7ffa000 | 0x7ffff7ffe000 |
  | vma (vdso) | 0x7ffff7ffe000 | 0x7ffff7fff000 |
  | vma (stack) | 0x7ffffffde000 | 0x7ffffffff000 |

- 12. Process address space: VMA rbtree

- 13. Process address space: VMA rbtree -> rb_subtree_gap
  - rb_subtree_gap calculation: maximum of the following items
    - gap between this vma and previous one
    - rb_left.subtree_gap
    - rb_right.subtree_gap

- 14. Process address space: VMA rbtree -> rb_subtree_gap
  - rb_subtree_gap calculation: maximum of the following items
    - gap between this vma and previous one
    - rb_left.subtree_gap
    - rb_right.subtree_gap
  - Example (vvar vma, previous vma is the heap): max(0x7ffff7ffa000 – 0x4e8000, 0, 0) = 0x7ffff7b12000

- 15. Process address space: VMA rbtree -> rb_subtree_gap • VM_GROWSDOWN is set in vma->vm_flags • max(0x7ffffffde000 – 0x7ffff7fff000 - stack_guard_gap, 0, 0) = 0x7edf000 • stack_guard_gap = 256UL<<PAGE_SHIFT
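The rb_subtree_gap rule from the last three slides can be written down as a small recursive function. This is a userspace sketch under stated assumptions, not the kernel's code: `struct vma_node`, `own_gap` and `subtree_gap` are names invented here, and the `grows_down` field stands in for the VM_GROWSDOWN bit of `vma->vm_flags`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT      12
#define STACK_GUARD_GAP (UINT64_C(256) << PAGE_SHIFT)   /* 0x100000 */

struct vma_node {
    uint64_t vm_start, vm_end;
    int grows_down;                       /* stands in for VM_GROWSDOWN */
    const struct vma_node *prev;          /* previous VMA in address order */
    const struct vma_node *left, *right;  /* rbtree children */
};

static uint64_t max_u64(uint64_t a, uint64_t b)
{
    return a > b ? a : b;
}

/* Gap between this VMA and the previous one, net of the stack guard gap. */
uint64_t own_gap(const struct vma_node *v)
{
    assert(v->vm_start < v->vm_end);
    uint64_t start = v->vm_start;
    uint64_t prev_end = v->prev ? v->prev->vm_end : 0;

    if (v->grows_down)
        start = start > STACK_GUARD_GAP ? start - STACK_GUARD_GAP : 0;
    return start > prev_end ? start - prev_end : 0;
}

/* Largest gap in the subtree rooted at v (0 for an empty subtree). */
uint64_t subtree_gap(const struct vma_node *v)
{
    if (!v)
        return 0;
    uint64_t gap = own_gap(v);
    gap = max_u64(gap, subtree_gap(v->left));
    gap = max_u64(gap, subtree_gap(v->right));
    return gap;
}
```

With the addresses from slide 11, a leaf node for the vvar vma (previous vma: heap) gives 0x7ffff7ffa000 - 0x4e8000 = 0x7ffff7b12000, and a leaf node for the stack vma (previous vma: vdso) gives 0x7ffffffde000 - 0x7ffff7fff000 - 0x100000 = 0x7edf000.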

- 17. unmapped_area_topdown() - Principle: Preparation
  - vm_unmapped_area_info: length = 0x1000, low_limit = PAGE_SIZE, high_limit = mm->mmap_base = 0x7ffff7fff000
  - gap_end = info->high_limit (0x7ffff7fff000)
  - high_limit = gap_end – info->length = 0x7ffff7ffe000
  - gap_start = mm->highest_vm_end = 0x7ffffffff000
  - [Efficiency] Traverse the vma rbtree to find gap space instead of traversing the vma linked list

- 22. unmapped_area_topdown(): Stack guard gap
  - gap_end = 0x7ffffffde000 – 0x100000 = 0x7fffffede000
  - stack_guard_gap = 256UL<<PAGE_SHIFT = 0x100000

- 26. unmapped_area_topdown(): Found
  - return 0x7ffff7ffa000 – 0x1000 = 0x7ffff7ff9000
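The top-down search can be approximated in userspace to check the arithmetic on these slides. The sketch below is a deliberate simplification: it scans a sorted array from the highest VMA downward, whereas the kernel walks the rbtree and prunes subtrees with rb_subtree_gap. `struct vma`, `start_gap` and `find_gap_topdown` are names assumed for this illustration only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_GUARD_GAP (UINT64_C(256) << 12)   /* 256 pages = 0x100000 */

struct vma {
    uint64_t vm_start, vm_end;
    int grows_down;         /* stands in for VM_GROWSDOWN */
};

/* Start of the VMA, lowered by the guard gap for stack-like VMAs. */
static uint64_t start_gap(const struct vma *v)
{
    uint64_t start = v->vm_start;

    if (v->grows_down)
        start = start > STACK_GUARD_GAP ? start - STACK_GUARD_GAP : 0;
    return start;
}

/*
 * v[0..n) is sorted by address. Returns the highest start address of a
 * free range of `length` bytes inside [low_limit, high_limit), or 0 if
 * no gap is large enough.
 */
uint64_t find_gap_topdown(const struct vma *v, size_t n, uint64_t length,
                          uint64_t low_limit, uint64_t high_limit)
{
    assert(length > 0);
    for (size_t i = n + 1; i-- > 0; ) {
        /* Candidate gap: above v[i - 1] and below v[i]. */
        uint64_t gap_end = (i == n) ? high_limit : start_gap(&v[i]);
        uint64_t gap_start = (i == 0) ? low_limit : v[i - 1].vm_end;

        if (gap_end > high_limit)
            gap_end = high_limit;
        if (gap_start < low_limit)
            gap_start = low_limit;
        if (gap_end > gap_start && gap_end - gap_start >= length)
            return gap_end - length;    /* allocate at the top of the gap */
    }
    return 0;
}
```

Fed with the heap, vvar, vdso and stack VMAs from slide 11, length = 0x1000, low_limit = 0x1000 and high_limit = 0x7ffff7fff000, the scan rejects the empty ranges around the vdso and lands in the gap below vvar, returning 0x7ffff7ffa000 - 0x1000 = 0x7ffff7ff9000.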

- 27. vma_merge() – Possible ways to merge Note: VMAs must have the same attributes

- 28. mmap_region()->munmap_vma_range()->find_vma_links: find_vma_links(…, 0x7ffff7ff9000, …)
  - Iterate the rbtree to find an empty VMA link (rb_link)
  - rb_parent: the parent of rb_link
  - rb_prev: previous vma of rb_parent
  - Note
    - rb_prev is used in vma_merge()
    - rb_link, rb_parent and rb_prev are used in vma_link()
    - rb_prev is the previous VMA after the new VMA is inserted

- 30. __vma_link_file(): i_mmap for recording all memory mappings (vma) of the memory-mapped file. [Figure: task_struct (mm, files) -> files_struct (fd_array[]) -> file (f_inode, f_pos, f_mapping, f_path: mnt, dentry) -> inode (i_mapping, i_data, i_atime, i_mtime, i_ctime) -> address_space (host, page_tree, i_mmap); mm_struct (mmap, get_unmapped_area, mmap_base, pgd) -> vm_area_struct (vm_mm, vm_ops, vm_file). page_tree is the page cache: Radix Tree (v4.19 or earlier), Xarray (v4.20 or later); the example tree has height = 2 and holds pages with index = 1, 3 and 194. i_mmap is an interval tree implemented via a red-black tree.]
  - RMAP
    1. i_mmap: Reverse mapping (RMAP) for the memory-mapped file (page cache) -> check __vma_link_file()
    2. anon_vma: Reverse mapping for anonymous pages -> anon_vma_prepare() invoked during page fault

- 31. mm_populate()

- 32. Anonymous Page: Page Fault – Discussion List
  - Private (MAP_PRIVATE)
    - Write
    - Read before a write
  - Shared (MAP_SHARED)

- 33. Demand Paging: page fault – Anonymous page (MAP_PRIVATE)

- 34. Demand Paging: page fault → Page table configuration - Anonymous page (MAP_PRIVATE). [Figure: x86_64 4-level page-table walk for linear address 0x7ffff7ff9000. Address fields: bits 63-48 sign-extend, 47-39 Page Map Level-4 offset, 38-30 page directory pointer offset, 29-21 page directory offset, 20-12 page table offset, 11-0 page offset. task_struct -> mm -> mm_struct -> pgd selects PML4E #255 -> PDPTE #511 -> PDE #447 -> PTE #505 (mmap); PDE #511 holds PTE #478 … #510 (stack); PML4E #0 -> PDPTE #0 -> PDE #2 holds PTE #0, #188 … #190, #199 … (.text, .data, …, heap); a separate PML4E covers the kernel. Legend: allocated pages or page table entries; entries that will be allocated if a page fault occurs.]
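The table indices on this slide follow directly from the bit fields of the linear address. A small sketch, assuming standard x86_64 4-level paging with 4 KiB pages; `struct pt_index` and `decode_linear_address` are names made up for this example.

```c
#include <assert.h>
#include <stdint.h>

struct pt_index {
    unsigned pml4;      /* bits 47-39: Page Map Level-4 offset */
    unsigned pdpt;      /* bits 38-30: page directory pointer offset */
    unsigned pd;        /* bits 29-21: page directory offset */
    unsigned pt;        /* bits 20-12: page table offset */
    unsigned offset;    /* bits 11-0 : byte offset within the page */
};

/* Split a userspace linear address into its four table indices. */
struct pt_index decode_linear_address(uint64_t addr)
{
    /* Canonical userspace addresses have bits 63-47 clear. */
    assert((addr >> 47) == 0);

    struct pt_index ix;
    ix.pml4   = (unsigned)((addr >> 39) & 0x1ff);
    ix.pdpt   = (unsigned)((addr >> 30) & 0x1ff);
    ix.pd     = (unsigned)((addr >> 21) & 0x1ff);
    ix.pt     = (unsigned)((addr >> 12) & 0x1ff);
    ix.offset = (unsigned)(addr & 0xfff);
    return ix;
}
```

For 0x7ffff7ff9000 this yields PML4E #255, PDPTE #511, PDE #447 and PTE #505, matching the figure. The stack boundaries decode the same way: 0x7ffffffde000 gives PDE #511, PTE #478, and the last stack page 0x7fffffffe000 gives PTE #510.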

- 35. Demand Paging: page fault → Page table configuration [anonymous page: MAP_PRIVATE] page fault flow

- 36. Demand Paging: page fault → Page table configuration - Anonymous page (MAP_PRIVATE). [anonymous page: MAP_PRIVATE] page fault flow, with a `man mmap` excerpt and a [gdb] backtrace.

- 37. Demand Paging: page fault → Page table configuration - Anonymous page (MAP_PRIVATE). [Figure: same page-table walk for linear address 0x7ffff7ff9000 as on slide 34 (PML4E #255 -> PDPTE #511 -> PDE #447 -> PTE #505, mmap).]

- 38. Demand Paging: page fault → call trace - Anonymous page (MAP_PRIVATE)

- 39. Demand Paging: page fault → VMAs – Anonymous page (MAP_PRIVATE). [Figure: same page-table walk for linear address 0x7ffff7ff9000 as on slide 34 (PML4E #255 -> PDPTE #511 -> PDE #447 -> PTE #505, mmap).]

- 40. Demand Paging: page fault → VMAs – Anonymous page (MAP_PRIVATE) – Read before writing data (read fault). [Figure: page-table walk for linear address 0x7ffff7ff9000 as on slide 34; the read fault targets PTE #505 and the pre-allocated zero page in physical memory.]
  1. A pre-allocated zero page is initialized during system init -> check init_zero_pfn()
  2. Anonymous private page + read fault -> link to the pre-allocated zero page

- 41. Demand Paging: page fault → VMAs – Anonymous page (MAP_PRIVATE) – Read before writing data (read fault). [Figure: same as slide 40, with PTE #505 now linked to the pre-allocated zero page.]
  1. A pre-allocated zero page is initialized during system init -> check init_zero_pfn()
  2. Anonymous private page + read fault -> link to the pre-allocated zero page

- 42. Demand Paging: page fault → VMAs – Anonymous page (MAP_PRIVATE) – Read before writing data (read fault) -> Write fault. [Figure: page-table walk for linear address 0x7ffff7ff9000 as on slide 34; PTE #505 (mmap, anonymous) initially points to the dedicated zero page.]
  - On the write fault:
    1. Allocate a zeroed page
    2. Link to the newly allocated page
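The read-then-write sequence is easy to trigger from userspace. The sketch below only shows the observable behaviour (a zero on the first read, the written value afterwards); which physical page backs each access is the kernel's business and cannot be seen from this code. The function name `anon_private_read_then_write` is an assumption of this example.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Map one anonymous private page, read it before writing, then write.
 * *before receives the value seen by the first read, *after the value
 * seen once the write has completed. Returns 0 on success, -1 on error.
 */
int anon_private_read_then_write(unsigned char *before, unsigned char *after)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);

    volatile unsigned char *p = mmap(NULL, (size_t)page,
                                     PROT_READ | PROT_WRITE,
                                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    *before = p[0];     /* first access is a read: read fault */
    p[0] = 0x5a;        /* first write: write fault on the same address */
    *after = p[0];

    munmap((void *)p, (size_t)page);
    return 0;
}
```

The pointer is declared `volatile` so that the compiler keeps both memory accesses in program order instead of folding them away.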

- 43. Page fault handler for userspace address

- 44. Demand Paging: page fault → VMAs – Anonymous page (MAP_PRIVATE & MAP_SHARED): first-time access
  - Cases marked in the call path: Anonymous page + MAP_PRIVATE; Anonymous page + MAP_SHARED + WRITE; Anonymous page + MAP_SHARED + READ
  - [do_anonymous_page] Read fault: apply the pre-allocated zero page

- 45. Demand Paging: page fault → VMAs – Anonymous page (MAP_SHARED): first-time write

- 46. Demand Paging: page fault → VMAs – Anonymous page (MAP_SHARED): first-time write Anonymous page + MAP_SHARED

- 47. Types of memory mappings: update (reference: Chapter 49, The Linux Programming Interface)

  | Visibility of modification | File | Anonymous: description | Anonymous: backing file |
  | --- | --- | --- | --- |
  | Private (modification is not visible to other processes) | Initializing memory from contents of file. Example: a process's .text and .data segments (changes are not carried through to the underlying file) | Memory allocation | No |
  | Shared (modification is visible to other processes) | 1. Memory-mapped IO: changes are carried through to the underlying file. 2. Sharing memory between processes (IPC) | Sharing memory between processes (IPC) | /dev/zero |

- 48. Memory-mapped file: vma->vm_ops (the page fault handler invokes the vm_ops callbacks)
  - struct vm_operations_struct
    - fault: invoked by the page fault handler to read the corresponding data into a physical page.
    - map_pages: map the page if it is in the page cache (check function ‘do_fault_around’: warm/cold page cache).
    - page_mkwrite: notification that a previously read-only page is about to become writable.

- 49. Memory-mapped file: related data structures NULL: anonymous page

- 50. Page fault handler for userspace address: Memory-mapped file (look at this first)

- 51. Memory-mapped file: Demand Paging: page fault → do_read_fault(): warm page cache (read page fault). [Figure: page-table walk for linear address 0x7ffff7ff9000 as on slide 34; PTE #505 maps the mmap region (memory-mapped file = page cache); the page is already in the page cache (file->f_mapping), with steps 1 and 2 marked between Disk, physical memory and the page table.]

- 52. Memory-mapped file: Demand Paging: page fault → do_read_fault(): warm page cache (read page fault). [Figure: same as slide 51.]

- 53. Memory-mapped file: Demand Paging: page fault → filemap_map_pages(): warm page cache (read page fault, steps 1 and 2). map_pages callback: map a page that is already available in the page cache into the process address space (process page table) → warm page cache.

- 54. Demand Paging: page fault → call path for warm/cold page cache
  - do_read_fault
    - do_fault_around (warm page cache)
      - vmf->vma->vm_ops->map_pages
        - filemap_map_pages: find the page cache from address_space (page cache pool)
          - alloc_set_pte
    - __do_fault (cold page cache)
      - vmf->vma->vm_ops->fault
        - ext4_filemap_fault
          - filemap_fault
            - find_get_page
            - page in page cache: do_async_mmap_readahead
            - No page in page cache: do_sync_mmap_readahead
    - finish_fault
      - alloc_set_pte
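The warm/cold decision can be modelled with a toy page cache in plain C. Everything prefixed `toy_` is hypothetical and exists only in this sketch; it mirrors the order of the call path above (try the map_pages route first, fall back to the fault route plus a finish step) and is in no way the kernel's implementation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_NPAGES 8

enum toy_path { TOY_WARM, TOY_COLD };

struct toy_file {
    bool cached[TOY_NPAGES];    /* stands in for the page cache */
    bool mapped[TOY_NPAGES];    /* stands in for the process PTEs */
    int disk_reads;             /* how often "disk" had to be read */
};

/* map_pages analogue: only maps pages that are already cached. */
static bool toy_map_pages(struct toy_file *f, size_t pgoff)
{
    if (!f->cached[pgoff])
        return false;
    f->mapped[pgoff] = true;
    return true;
}

/* fault analogue: bring the page into the cache if it is missing. */
static void toy_fault(struct toy_file *f, size_t pgoff)
{
    if (!f->cached[pgoff]) {
        f->disk_reads++;
        f->cached[pgoff] = true;
    }
}

/* Read-fault analogue: warm path first, cold path as the fallback. */
enum toy_path toy_read_fault(struct toy_file *f, size_t pgoff)
{
    assert(pgoff < TOY_NPAGES);
    if (toy_map_pages(f, pgoff))
        return TOY_WARM;
    toy_fault(f, pgoff);
    f->mapped[pgoff] = true;    /* finish step: install the mapping */
    return TOY_COLD;
}
```

A fault on a page that is already cached returns `TOY_WARM` without touching `disk_reads`; a fault on an uncached page returns `TOY_COLD` and increments it once.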

- 55. warm page cache: make sure “page cache = mmap physical page” (1/3). [Figure: page-table walk for linear address 0x7ffff7ff9000 as on slide 34; PTE #505 maps the mmap region (memory-mapped file = page cache); the page is already in the page cache: file->f_mapping.]
  - Page Cache - Verification: page->mapping
    - [bit 0] = 1: anonymous page → mapping field = anon_vma descriptor
    - [bit 0] = 0: page cache → mapping field = address_space descriptor
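The bit 0 check works because both descriptors are aligned, so the lowest pointer bit is free to act as a tag. A userspace sketch of that tagging scheme follows; all `toy_` types and functions are stand-ins invented here, and only the idea (bit 0 set means anonymous) is taken from the slide.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_PAGE_MAPPING_ANON ((uintptr_t)0x1)

struct toy_anon_vma      { int refcount; };
struct toy_address_space { int nrpages; };
struct toy_page          { uintptr_t mapping; };

/* Anonymous page: store the anon_vma pointer with bit 0 set. */
void toy_set_anon(struct toy_page *pg, struct toy_anon_vma *av)
{
    /* Alignment guarantees that bit 0 of the pointer is unused. */
    assert(((uintptr_t)av & TOY_PAGE_MAPPING_ANON) == 0);
    pg->mapping = (uintptr_t)av | TOY_PAGE_MAPPING_ANON;
}

/* Page cache page: store the address_space pointer unmodified. */
void toy_set_file(struct toy_page *pg, struct toy_address_space *as)
{
    assert(((uintptr_t)as & TOY_PAGE_MAPPING_ANON) == 0);
    pg->mapping = (uintptr_t)as;
}

bool toy_page_is_anon(const struct toy_page *pg)
{
    return (pg->mapping & TOY_PAGE_MAPPING_ANON) != 0;
}

/* Strip the tag bit to recover the original descriptor pointer. */
void *toy_page_descriptor(const struct toy_page *pg)
{
    return (void *)(pg->mapping & ~TOY_PAGE_MAPPING_ANON);
}
```

In a debugger session this corresponds to printing `page->mapping` and looking at the lowest bit before casting the value to either descriptor type.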

- 56. warm page cache: make sure “page cache = mmap physical page” (2/3). [Figure: same page-table walk as slide 55, annotated “After alloc_set_pte()” and “<< PAGE_SHIFT”.]

- 57. warm page cache: make sure “page cache = mmap physical page” (3/3). [Figure: same page-table walk as slide 55, annotated “After alloc_set_pte()”; the mapping will generate a write fault for the next write.]

- 58. Memory-mapped file: write fault (write-protected fault)

- 59. Memory-mapped file: write fault (write-protected fault): do_wp_page
  - do_wp_page
    - wp_page_copy: [MAP_PRIVATE] COW: Copy On Write (with a quote from `man mmap`)
      - new_page = alloc_page_vma(…)
      - cow_user_page
        - copy_user_highpage
      - maybe_mkwrite
    - wp_page_shared: [MAP_SHARED] vma is (VM_WRITE|VM_SHARED)
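The two branches have a visible effect on the underlying file, which a short userspace test can confirm. This is a sketch under assumptions: the function name and the `/tmp` location are made up for the demo, and the claim that reading before writing leads into the write-protect path is an expectation based on the slides, not something the code can observe.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Create a temp file whose first byte is 'A', map it with map_flags
 * (MAP_PRIVATE or MAP_SHARED), read the byte, then overwrite it with 'B'
 * through the mapping. Returns the byte the file itself holds afterwards
 * (read back with pread()), or -1 on error.
 */
int file_byte_after_mapped_write(int map_flags)
{
    assert(map_flags == MAP_PRIVATE || map_flags == MAP_SHARED);

    char path[] = "/tmp/mmap_cow_XXXXXX";   /* arbitrary demo location */
    int result = -1;
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);

    if (pwrite(fd, "A", 1, 0) == 1) {
        volatile char *p = mmap(NULL, 1, PROT_READ | PROT_WRITE,
                                map_flags, fd, 0);
        if (p != MAP_FAILED) {
            char seen = p[0];   /* read first: maps the page cache page */
            (void)seen;
            p[0] = 'B';         /* write to the already-mapped page */
            if (map_flags == MAP_SHARED)
                msync((void *)p, 1, MS_SYNC);
            munmap((void *)p, 1);

            char in_file = 0;
            if (pread(fd, &in_file, 1, 0) == 1)
                result = in_file;
        }
    }
    close(fd);
    return result;
}
```

With `MAP_PRIVATE` the file still holds 'A' because the write went to a private copy; with `MAP_SHARED` the file holds 'B'.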

- 60. write fault (write-protected fault) – call path

- 61. Memory-mapped file: write fault without previously reading (MAP_SHARED): do_shared_fault

- 62. write fault without previously reading (MAP_SHARED): do_shared_fault
  - do_shared_fault
    - __do_fault
      - vmf->vma->vm_ops->fault
        - ext4_filemap_fault
          - filemap_fault
            - find_get_page
            - page in page cache: do_async_mmap_readahead
            - No page in page cache: do_sync_mmap_readahead
    - do_page_mkwrite
    - finish_fault
      - alloc_set_pte
    - fault_dirty_shared_page

- 63. write fault without previously reading (MAP_SHARED): do_shared_fault. [Same call path as slide 62, with the “page in page cache” branch labelled “COW”.]

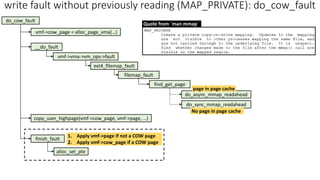

- 64. Memory-mapped file: write fault without previously reading (MAP_PRIVATE): do_cow_fault

- 65. write fault without previously reading (MAP_PRIVATE): do_cow_fault (with a quote from `man mmap`)
  - do_cow_fault
    - vmf->cow_page = alloc_page_vma(…)
    - __do_fault
      - vmf->vma->vm_ops->fault
        - ext4_filemap_fault
          - filemap_fault
            - find_get_page
            - page in page cache: do_async_mmap_readahead
            - No page in page cache: do_sync_mmap_readahead
    - copy_user_highpage(vmf->cow_page, vmf->page, …)
    - finish_fault
      - alloc_set_pte
        1. Apply vmf->page if not a COW page
        2. Apply vmf->cow_page if a COW page

- 66. write fault without previously reading (MAP_PRIVATE): do_cow_fault. [Same call path as slide 65, with the “page in page cache” branch labelled “COW” and breakpoints 1 to 4 marked.]

- 67. write fault without previously reading (MAP_PRIVATE): do_cow_fault. [Same call path as slide 66; breakpoint 2.]

- 68. write fault without previously reading (MAP_PRIVATE): do_cow_fault. [Same call path as slide 66, with breakpoints 1 to 4 marked.]

- 69. [Recap] Think about this: #1. Memory-mapped file: read fault (steps 1, 2) and write fault (steps 1, 2).

- 70. Think about this: #2. fork(): which function, do_cow_fault or do_wp_page, is called when COW is triggered?

- 71. fork(): COW mapping – set ‘write protect’ for src/dst PTEs

- 72. fork(): COW mapping – set ‘write protect’ for src/dst PTEs

- 73. fork(): COW Fault Call Path
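The effect of the write-protected PTEs set up by fork() can be observed from userspace. In the sketch below the parent touches the page before forking, so both processes start out sharing one physical page; the child's write then has to be resolved by the kernel. The function name is an assumption of this example, and the code shows only the visible outcome, not which kernel function handled the fault.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * The parent stores 1 in an anonymous mapping, forks, the child stores 2,
 * and the parent reports the value it sees once the child has exited.
 * sharing_flag is MAP_PRIVATE or MAP_SHARED. Returns -1 on error.
 */
int parent_view_after_child_write(int sharing_flag)
{
    assert(sharing_flag == MAP_PRIVATE || sharing_flag == MAP_SHARED);

    volatile int *p = mmap(NULL, sizeof *p, PROT_READ | PROT_WRITE,
                           sharing_flag | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    *p = 1;                 /* populate the page before fork() */

    int seen = -1;
    pid_t pid = fork();
    if (pid == 0) {
        *p = 2;             /* child write: COW for MAP_PRIVATE */
        _exit(0);
    }
    if (pid > 0 && waitpid(pid, NULL, 0) == pid)
        seen = *p;

    munmap((void *)p, sizeof *p);
    return seen;
}
```

With `MAP_PRIVATE` the parent still sees 1, because the child's write was redirected to a private copy; with `MAP_SHARED` the parent sees 2.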

- 74. Backup

- 75. vdso & vvar
  - vsyscall (Virtual System Call)
    - The context switch overhead (user <-> kernel) of some system calls (gettimeofday, time, getcpu) is greater than the execution time of those functions; vsyscall is built on top of the fixed-mapped address
    - Machine code format
    - Core dump: the debugger cannot provide debugging info because symbols of this area are unavailable
  - vDSO (Virtual Dynamic Shared Object)
    - ELF format
    - A small shared library that the kernel automatically maps into the address space of all userspace applications
  - VVAR (vDSO Variable)
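That the vDSO is an ELF image mapped into every process can be checked with a few lines of C. This sketch relies on glibc's `getauxval()` and the `AT_SYSINFO_EHDR` auxiliary vector entry, which holds the address of the vDSO's ELF header on Linux; the function name `vdso_is_elf` is invented for the example.

```c
#include <assert.h>
#include <elf.h>
#include <string.h>
#include <sys/auxv.h>

/*
 * Returns 1 if the vDSO is mapped and starts with the ELF magic,
 * 0 if it is mapped but has no ELF magic, -1 if no vDSO was reported.
 */
int vdso_is_elf(void)
{
    unsigned long base = getauxval(AT_SYSINFO_EHDR);

    /* The cast below assumes unsigned long is pointer-sized (LP64). */
    assert(sizeof(base) == sizeof(void *));
    if (base == 0)
        return -1;
    return memcmp((const void *)base, ELFMAG, SELFMAG) == 0 ? 1 : 0;
}
```

On a system with the vDSO enabled the function returns 1; the same address range shows up as `[vdso]` in `/proc/self/maps`.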
