ML_Lec4 introduction to linear regression.pdf

- 1. Regression. Dr. Marwa M. Emam, Faculty of Computers and Information

- 3. Quiz : Question 2

- 4. Solutions (Q1)
  - A recommendation system on a social network that recommends potential friends to a user: This is an example of unsupervised learning. The system doesn't have labeled data (e.g., "This is a good friend suggestion"). It uses clustering or collaborative filtering techniques to group users with similar interests or behavior patterns and suggests potential friends based on those patterns. It doesn't rely on explicit feedback or a target variable, making it unsupervised.
  - A system in a news site that divides the news into topics: This is an example of unsupervised learning. The system likely uses techniques like topic modeling or clustering to automatically group news articles into topics without having prior labels for each topic. It doesn't require labeled data or a target variable for classification.

- 5. Solutions (Q1) (continued)
  - The Google autocomplete feature for sentences: This is an example of unsupervised learning. The autocomplete feature doesn't rely on human-labeled data but uses algorithms that analyze patterns in a large corpus of text data to predict the next word or phrase in a sentence. It doesn't require explicit supervision. (Next-word prediction is often described more precisely as self-supervised: the training targets come from the text itself rather than from human labels.)
  - A recommendation system on an online retailer that recommends to users what to buy based on their past purchasing history: This is an example of supervised learning. The system uses a user's past purchasing history as labeled data to make recommendations. Each user's purchase history provides labeled examples of items they bought, and the goal is to predict what other items they might be interested in based on those past purchases.

- 6. Solutions (Q1)… A system in a credit card company that captures fraudulent transactions: This is an example of supervised learning. The system uses historical data that includes labeled examples of fraudulent and non-fraudulent transactions to train a model. The model learns from these labeled examples to identify potentially fraudulent transactions in real-time. It's supervised because it uses labeled data to make predictions.

- 7. Solutions (Q2)
  - An online store predicting how much money a user will spend on their site: In this scenario, you would use regression. The goal is to predict a continuous value, which is the amount of money a user will spend.
  - A voice assistant decoding voice and turning it into text: This is typically a sequence-to-sequence problem and doesn't directly fit into either regression or classification. It involves taking an audio signal (sequence) and producing text (sequence) as the output. While regression is not well-suited for this task, classification is not directly applicable either. Instead, you would use techniques like speech recognition or natural language processing (NLP), which involve complex models such as recurrent neural networks (RNNs) or transformers to handle sequence data.

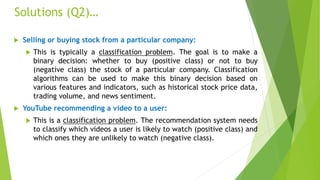

- 8. Solutions (Q2) (continued)
  - Selling or buying stock from a particular company: This is typically a classification problem. The goal is to make a binary decision: whether to buy (positive class) or not to buy (negative class) the stock of a particular company. Classification algorithms can be used to make this binary decision based on various features and indicators, such as historical stock price data, trading volume, and news sentiment.
  - YouTube recommending a video to a user: This is a classification problem. The recommendation system needs to classify which videos a user is likely to watch (positive class) and which ones they are unlikely to watch (negative class).

- 9. Multiple linear regression Multiple linear regression is an extension of simple linear regression that allows you to model the relationship between a dependent variable and two or more independent variables. It assumes that the relationship between the dependent variable and the independent variables is linear. Here's a detailed explanation of multiple linear regression:

- 10. Multiple linear regression representation: 1. Variables:
  - Dependent Variable (Y): This is the variable you want to predict. It's also called the target variable. In multiple linear regression, Y is a continuous numeric variable.
  - Independent Variables (X1, X2, ..., Xn): These are the variables that you believe influence the dependent variable.

- 11. Multiple linear regression representation: 2. Model: The multiple linear regression model can be expressed as follows: Y = β0 + β1*X1 + β2*X2 + ... + βn*Xn + ε
  - β0 is the intercept, representing the value of Y when all independent variables are zero.
  - β1, β2, ..., βn are the coefficients, representing the change in Y for a one-unit change in the corresponding independent variable, assuming all other variables remain constant.
  - ε represents the error term, which accounts for the variability in Y that cannot be explained by the model.
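
As a minimal sketch of the model equation, the snippet below evaluates the prediction (the equation without the error term ε) for one observation. The function name `predict` and the coefficient and input values are assumptions chosen only for illustration; they do not come from the lecture.

```python
def predict(beta, x):
    """Return beta[0] + beta[1]*x[0] + ... + beta[n]*x[n-1]."""
    if len(beta) != len(x) + 1:
        raise ValueError("beta needs one more entry than x (the intercept)")
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))

# Hypothetical coefficients and one observation, for illustration only.
beta = [1.0, 2.0, -0.5]   # beta0 (intercept), beta1, beta2
x = [3.0, 4.0]            # X1, X2
y_hat = predict(beta, x)  # 1.0 + 2.0*3.0 + (-0.5)*4.0
print(y_hat)

# Raising X1 by one unit while holding X2 fixed changes the prediction by beta1.
print(predict(beta, [4.0, 4.0]) - y_hat)
```

The second print illustrates the interpretation of a coefficient given above: the change in Y for a one-unit change in one variable, with the others held constant.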

- 12. Multiple LR vs. Simple LR
  - Simple linear regression: $y^{(i)} = \theta_0 + \theta_1 x^{(i)}$
  - Multiple linear regression: $y^{(i)} = \theta_0 + \theta_1 x_1^{(i)} + \theta_2 x_2^{(i)} + \cdots + \theta_n x_n^{(i)}$

- 13. Example

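- 14. The Problem Formulation (Multiple LR): $h_\theta(x) = 80 + 0.1x_1 + 3x_2 + 0.01x_3 - 2x_4$, where 80 is the base price, $x_1$ is the size, $x_2$ is the number of floors, $x_3$ is the number of bedrooms, and $x_4$ is the age of the house.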

- 15. Gradient descent for multiple variables Gradient descent is an optimization algorithm used to find the minimum of a function, typically a cost or loss function, by iteratively adjusting the model's parameters in the direction of the steepest descent (negative gradient). It's a fundamental technique for training machine learning models, including multiple linear regression. Here's a step-by-step explanation of how gradient descent can be used to solve multiple linear regression:

- 16. Gradient descent steps:
  - Step 1: Define the Cost Function: In the context of multiple linear regression, you usually use the mean squared error (MSE) as the cost function. The MSE quantifies the difference between the predicted values and the actual values. It is defined as: MSE = (1/m) * Σ(yi - ŷi)^2, where m is the number of training examples, yi is the actual value, and ŷi is the predicted value for example i.
  - Step 2: Initialize Model Parameters: Initialize the coefficients (Ɵ0, Ɵ1, Ɵ2, ..., Ɵn) with some arbitrary values or set them to zero.
  - Step 3: Update Parameters with Gradient Descent: Iteratively update the coefficients to minimize the cost function. The updates are performed by computing the gradient of the cost function with respect to each parameter and taking steps in the opposite direction of the gradient.

- 17. For the j-th coefficient (Ɵj), the update rule is as follows: Ɵj := Ɵj - α * (∂J/∂Ɵj), where J(Ɵ0, Ɵ1, ..., Ɵn) is the cost function from Step 1. All coefficients are updated simultaneously, using gradients computed from the current values.
  - α is the learning rate, a hyperparameter that determines the step size in each iteration.
  - ∂J/∂Ɵj is the partial derivative of the cost (the MSE) with respect to Ɵj. This represents how much the cost function changes as Ɵj changes.
  - Some formulations write the cost as J = (1/(2m)) * Σ(ŷi - yi)^2. The factor 1/2 only simplifies the derivative and does not change where the minimum is.
  - Step 4: Repeat Step 3: Repeat the parameter update process for a specified number of iterations or until the change in the cost function is below a predefined threshold.
  - Step 5: Use the Trained Model: Once the gradient descent algorithm converges (or after a specified number of iterations), you have the optimized coefficients. You can now use the trained model with these coefficients to make predictions on new data.
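
The five steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the lecture's own implementation: the function names, the toy data (generated from y = 1 + 2*x1 + 3*x2 without noise), the learning rate 0.05, the iteration cap, and the stopping tolerance are all hypothetical choices.

```python
def predict(theta, x):
    """Hypothesis: theta[0] + theta[1]*x[0] + ... + theta[n]*x[n-1]."""
    return theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))

def cost(theta, X, y):
    """Step 1: mean squared error, (1/m) * sum of squared differences."""
    m = len(y)
    return sum((predict(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / m

def gradient(theta, X, y):
    """Partial derivatives of the MSE with respect to each coefficient."""
    m = len(y)
    grad = [0.0] * len(theta)
    for xi, yi in zip(X, y):
        err = predict(theta, xi) - yi
        grad[0] += 2.0 * err / m                  # intercept: its feature is 1
        for j, xij in enumerate(xi, start=1):
            grad[j] += 2.0 * err * xij / m
    return grad

def gradient_descent(X, y, alpha=0.05, max_iters=5000, tol=1e-12):
    theta = [0.0] * (len(X[0]) + 1)               # Step 2: initialise at zero
    prev = cost(theta, X, y)
    for _ in range(max_iters):                    # Step 4: repeat
        g = gradient(theta, X, y)                 # Step 3: simultaneous update
        theta = [t - alpha * gj for t, gj in zip(theta, g)]
        cur = cost(theta, X, y)
        if abs(prev - cur) < tol:                 # stop when the cost stalls
            break
        prev = cur
    return theta                                  # Step 5: trained coefficients

# Toy data generated from y = 1 + 2*x1 + 3*x2 (no noise), for illustration.
X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
y = [1.0, 3.0, 4.0, 6.0, 8.0]
theta = gradient_descent(X, y)
print(theta)
```

Because the toy data contains no noise, the learned coefficients should approach the generating values; with real data they would only approximate the best-fitting line.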

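- 19. Convention: x0 = 1 for every example, so the intercept Ɵ0 can be treated like any other coefficient (Ɵ0 * x0 = Ɵ0).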

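- 21. Partial derivatives of the cost for simple linear regression, with $h_\theta(x) = \theta_0 + \theta_1 x$:

  $$\frac{\partial}{\partial\theta_j} J(\theta_0, \theta_1) = \frac{\partial}{\partial\theta_j}\,\frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 = \frac{\partial}{\partial\theta_j}\,\frac{1}{2m}\sum_{i=1}^{m}\left(\theta_0 + \theta_1 x^{(i)} - y^{(i)}\right)^2$$

  $$j = 0:\quad \frac{\partial}{\partial\theta_0} J(\theta_0, \theta_1) = \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)$$

  $$j = 1:\quad \frac{\partial}{\partial\theta_1} J(\theta_0, \theta_1) = \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x^{(i)}$$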
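
For the halved squared-error cost J(θ0, θ1) = (1/(2m)) Σ (h(x_i) - y_i)^2 with h(x) = θ0 + θ1*x, the partial derivatives are (1/m) Σ (h(x_i) - y_i) for θ0 and (1/m) Σ (h(x_i) - y_i) * x_i for θ1. One way to gain confidence in such a derivation is to compare it with a finite-difference approximation. The sketch below does that; the data points, the evaluation point (0.5, 0.5), and the step size `eps` are assumptions made up for the check.

```python
def h(theta0, theta1, x):
    return theta0 + theta1 * x

def J(theta0, theta1, xs, ys):
    """Halved mean squared error for simple linear regression."""
    m = len(xs)
    return sum((h(theta0, theta1, x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)

def analytic_gradient(theta0, theta1, xs, ys):
    """The two partial derivatives obtained from the derivation."""
    m = len(xs)
    d0 = sum(h(theta0, theta1, x) - y for x, y in zip(xs, ys)) / m
    d1 = sum((h(theta0, theta1, x) - y) * x for x, y in zip(xs, ys)) / m
    return d0, d1

def numeric_gradient(theta0, theta1, xs, ys, eps=1e-6):
    """Central finite differences, used only as an independent check."""
    d0 = (J(theta0 + eps, theta1, xs, ys) - J(theta0 - eps, theta1, xs, ys)) / (2 * eps)
    d1 = (J(theta0, theta1 + eps, xs, ys) - J(theta0, theta1 - eps, xs, ys)) / (2 * eps)
    return d0, d1

# Made-up data points for the check.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 2.5, 3.5, 5.0]
analytic = analytic_gradient(0.5, 0.5, xs, ys)
numeric = numeric_gradient(0.5, 0.5, xs, ys)
print("analytic:", analytic)
print("numeric: ", numeric)
```

The two results should agree up to rounding error, since the cost is quadratic in the parameters.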

- 24. Learning Rate: The learning rate is a hyperparameter used in various optimization algorithms, including gradient descent, to determine the step size at each iteration when updating the model's parameters. It controls the rate at which the model learns and how quickly it converges to the optimal solution. The learning rate is a crucial parameter to tune because choosing an inappropriate learning rate can lead to slow convergence, divergence, or other training issues.

- 25. Learning Rate: Choosing the right learning rate is essential for training machine learning models effectively. One common approach is to perform a grid search over a range of predefined learning rates. You can experiment with a logarithmic scale, trying values like 0.1, 0.01, 0.001, 0.0001, etc. Train the model with each learning rate and observe the convergence and performance on a validation set. You can then select the learning rate that yields the best results.
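
The grid search described above might look like the following sketch. It assumes a one-feature linear model trained by batch gradient descent, a fixed budget of 500 iterations, and a small made-up data set split by hand into training and validation parts; none of these specifics come from the lecture.

```python
def train(xs, ys, alpha, iters=500):
    """Batch gradient descent for y ~ t0 + t1*x using the MSE cost."""
    t0 = t1 = 0.0
    m = len(xs)
    for _ in range(iters):
        errs = [t0 + t1 * x - y for x, y in zip(xs, ys)]
        g0 = 2.0 * sum(errs) / m
        g1 = 2.0 * sum(e * x for e, x in zip(errs, xs)) / m
        t0, t1 = t0 - alpha * g0, t1 - alpha * g1
    return t0, t1

def mse(params, xs, ys):
    t0, t1 = params
    return sum((t0 + t1 * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Made-up data lying near y = 2x + 1, split by hand into train and validation.
train_x, train_y = [0.0, 1.0, 2.0, 3.0, 4.0], [1.1, 2.9, 5.2, 6.8, 9.1]
val_x, val_y = [0.5, 2.5, 3.5], [2.0, 6.1, 7.9]

# Try learning rates on a logarithmic scale and score each on validation data.
results = {}
for alpha in [0.1, 0.01, 0.001, 0.0001]:
    results[alpha] = mse(train(train_x, train_y, alpha), val_x, val_y)

best_alpha = min(results, key=results.get)
for alpha, err in results.items():
    print(f"alpha={alpha:<7} validation MSE={err:.4f}")
print("best:", best_alpha)
```

With a fixed iteration budget, very small learning rates tend to score poorly simply because training has not converged yet, which is one reason the budget and the learning rate are usually tuned together.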

- 27. If gradient descent is working properly, then J(θ) should decrease after every iteration. [Figure: successive iterates θ1, θ2, θ3 marked with their costs J(θ1), J(θ2), J(θ3).]

- 29. Effect of the learning rate α on the cost curve:
  - The orange plot shows the algorithm diverging when the learning rate is very high, so that the update steps overshoot the minimum.
  - The green plot shows a learning rate that is smaller than in the orange case but still high enough that the steps keep oscillating around a point that is not the minimum.
  - The blue plot uses the smallest value of α and converges very slowly because each update step is very small.
  - The red plot is the ideal cost curve: it drops steeply at first and then levels off very close to the optimum value.
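
These behaviours can be reproduced numerically. The sketch below runs gradient descent on a one-parameter model y = θ*x with three learning rates and records the cost at every iteration. The data and the three α values are assumptions picked so that one run is very slow, one converges quickly, and one diverges; they are not the values behind the plots in the slides.

```python
def cost_history(alpha, iters=20):
    """Gradient descent on J(theta) = (1/(2m)) * sum((theta*x - y)^2)."""
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]   # toy data on the line y = 2x
    m = len(xs)
    theta, history = 0.0, []
    for _ in range(iters):
        history.append(sum((theta * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m))
        grad = sum((theta * x - y) * x for x, y in zip(xs, ys)) / m
        theta -= alpha * grad
    return history

for alpha in (0.001, 0.1, 0.5):
    hist = cost_history(alpha)
    decreasing = all(b <= a for a, b in zip(hist, hist[1:]))
    print(f"alpha={alpha}: first J={hist[0]:.3f}, last J={hist[-1]:.3g}, "
          f"decreasing every step={decreasing}")
```

Checking that the recorded cost decreases at every step is a simple diagnostic: a rising cost is a strong hint that the learning rate is too large.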

- 31. Polynomial Regression: Polynomial regression is a type of regression analysis in which the relationship between the independent variable (or variables) and the dependent variable is modeled as an nth-degree polynomial. Unlike simple linear regression, which assumes a linear relationship between the variables, polynomial regression can capture more complex, nonlinear relationships. The model is still linear in the coefficients, so it can be fitted with the same methods as multiple linear regression. Y = Ɵ0 + Ɵ1*X + Ɵ2*X^2 + ... + Ɵn*X^n

- 32. Polynomial Regression (continued): The sign of the coefficient for the highest-order regressor determines the direction of the curvature.

  | Highest-order coefficient | Linear | Quadratic | Cubic |
  |---|---|---|---|
  | Positive | Y' = 0 + 1X | Y' = 0 + 1X + 1X^2 | Y' = 0 + 1X + 1X^2 + 1X^3 |
  | Negative | Y' = 0 + (-1)X | Y' = 0 + 1X + (-1)X^2 | Y' = 0 + 1X + 1X^2 + (-1)X^3 |

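- 33. Polynomial features built from a single input $x_1$ (size):
  - Quadratic: $h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_1^2$
  - Cubic: $h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_1^2 + \theta_3 x_1^3$

  | Feature | Range |
  |---|---|
  | size | 1 to 1,000 |
  | size^2 | 1 to 1,000,000 |
  | size^3 | 1 to 1,000,000,000 |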

- 34. Example 1: Polynomial Regression. Predicting Ice Cream Sales: Let's say you work for an ice cream shop and want to predict daily ice cream sales based on the daily temperature. In a simple linear regression model, you might start with a linear relationship: Sales = Ɵ0 + Ɵ1*Temperature. However, you notice that sales seem to increase more rapidly with temperature than a linear model can capture. This is a situation where polynomial regression can be more suitable. You can use a polynomial model to capture the nonlinearity: Sales = Ɵ0 + Ɵ1*Temperature + Ɵ2*Temperature^2

- 35. Example 1: Polynomial Regression (continued). In this case, you're fitting a quadratic (second-degree) polynomial to the data, allowing the relationship between temperature and sales to be nonlinear. Choosing the Degree of the Polynomial: The degree of the polynomial (n) should be chosen carefully. A higher-degree polynomial can fit the data more closely but might overfit, leading to poor generalization on new data. Conversely, a lower-degree polynomial might underfit and not capture the underlying trends.

- 36. Example 1: Polynomial Regression (continued). You typically experiment with different polynomial degrees and use techniques like cross-validation to determine which degree provides the best trade-off between fitting the data and avoiding overfitting.
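
One way to run such an experiment is leave-one-out cross-validation: fit each candidate degree on all points but one, measure the error on the held-out point, and average over all points. The sketch below is a plain-Python illustration under stated assumptions. The data is synthetic (a quadratic curve with small made-up perturbations), the helper names are hypothetical, and the fit uses the normal equations as a stand-in for whatever fitting method is actually used.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def fit_poly(xs, ys, degree):
    """Least-squares coefficients [theta0, ..., theta_degree] via normal equations."""
    rows = [[x ** p for p in range(degree + 1)] for x in xs]
    k = degree + 1
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    b = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(A, b)

def predict(theta, x):
    return sum(t * x ** p for p, t in enumerate(theta))

def loo_cv_mse(xs, ys, degree):
    """Leave-one-out cross-validation error for one polynomial degree."""
    total = 0.0
    for i in range(len(xs)):
        theta = fit_poly(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:], degree)
        total += (predict(theta, xs[i]) - ys[i]) ** 2
    return total / len(xs)

# Synthetic data: y = 1 + 2x - 3x^2 plus small fixed perturbations (made up).
xs = [-1.0, -0.75, -0.5, -0.25, 0.0, 0.25, 0.5, 0.75, 1.0]
noise = [0.05, -0.04, 0.03, -0.02, 0.04, -0.05, 0.02, -0.03, 0.01]
ys = [1 + 2 * x - 3 * x * x + e for x, e in zip(xs, noise)]

scores = {d: loo_cv_mse(xs, ys, d) for d in range(1, 6)}
best_degree = min(scores, key=scores.get)
for d, s in scores.items():
    print(f"degree {d}: LOO MSE = {s:.5f}")
print("selected degree:", best_degree)
```

The degree with the lowest held-out error is the one selected. On larger data sets, k-fold cross-validation is a common, cheaper alternative to leaving out one point at a time.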

- 37. Example 2: Polynomial Regression Predicting a Car's Fuel Efficiency: Suppose you want to demonstrate polynomial regression with a real-world problem, such as predicting a car's fuel efficiency (miles per gallon or MPG) based on its speed. The idea is to show how the relationship between speed and fuel efficiency might not be linear.

- 38. Example 2: Polynomial Regression (continued). Data: You have collected data on various cars, recording their speeds (in miles per hour) and their corresponding fuel efficiency (in miles per gallon). The data points look like this:

  | Speed (mph) | Fuel Efficiency (MPG) |
  |---|---|
  | 20 | 30 |
  | 30 | 28 |
  | 40 | 25 |
  | 50 | 22 |
  | 60 | 20 |
  | 70 | 18 |

- 39. Example 2: Polynomial Regression (continued). Simple Linear Regression: Suppose you start with a simple linear regression model, MPG = Ɵ0 + Ɵ1 * Speed. Plotting the fitted line against the data points shows whether a straight line captures the relationship well. Fuel efficiency clearly tends to decrease as speed increases, but the decrease may not be linear.

- 40. Example 2: Polynomial Regression (continued). Polynomial Regression: To account for the nonlinear relationship, you can apply polynomial regression. Let's consider a cubic (third-degree) polynomial: MPG = Ɵ0 + Ɵ1 * Speed + Ɵ2 * Speed^2 + Ɵ3 * Speed^3. Visualization: We created a graph to visualize the cubic polynomial regression curve alongside the data points. This allowed us to see how well the polynomial model captured the nonlinear relationship. The curve showed a clear downward trend as speed increased, illustrating that as cars go faster, their fuel efficiency tends to decrease.
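
To compare the two models on the six speed/MPG points of this example, the sketch below fits a straight line and a cubic by least squares and prints the training error of each. The helper functions and the choice to rescale speed to hundreds of mph (so that the powers of speed stay in a similar numeric range) are assumptions made for this illustration, not part of the lecture.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def fit_poly(xs, ys, degree):
    """Least-squares polynomial coefficients, lowest power first."""
    rows = [[x ** p for p in range(degree + 1)] for x in xs]
    k = degree + 1
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    b = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(A, b)

def predict(theta, x):
    return sum(t * x ** p for p, t in enumerate(theta))

def mse(theta, xs, ys):
    return sum((predict(theta, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

speed = [20, 30, 40, 50, 60, 70]        # mph, from the example's data
mpg = [30, 28, 25, 22, 20, 18]          # miles per gallon
u = [s / 100 for s in speed]            # rescaled speed (assumption, see above)

linear = fit_poly(u, mpg, 1)
cubic = fit_poly(u, mpg, 3)
mse_linear = mse(linear, u, mpg)
mse_cubic = mse(cubic, u, mpg)
print(f"linear training MSE: {mse_linear:.4f}")
print(f"cubic training MSE:  {mse_cubic:.4f}")
print(f"linear slope per mph: {linear[1] / 100:.4f}")
```

The cubic can never have a higher training error than the line, because the line is a special case of the cubic. How much lower it is on such a small sample says little about generalization, which is why the degree is better chosen with held-out data.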

- 41. Next Lecture: The next lecture is about logistic regression. To prepare, I'd like each of you to take the following steps:
  - Review the basics of linear regression, as we'll be building upon that concept.
  - Understand the sigmoid function.
  - Familiarize yourself with the concept of classification problems in machine learning, as logistic regression is primarily used for classification tasks.
  - Try to find real-world examples or use cases where logistic regression is applied.
